A Multi-Stage Feature Aggregation and Structure Awareness Network for Concrete Bridge Crack Detection

Abstract

1. Introduction

- (1)

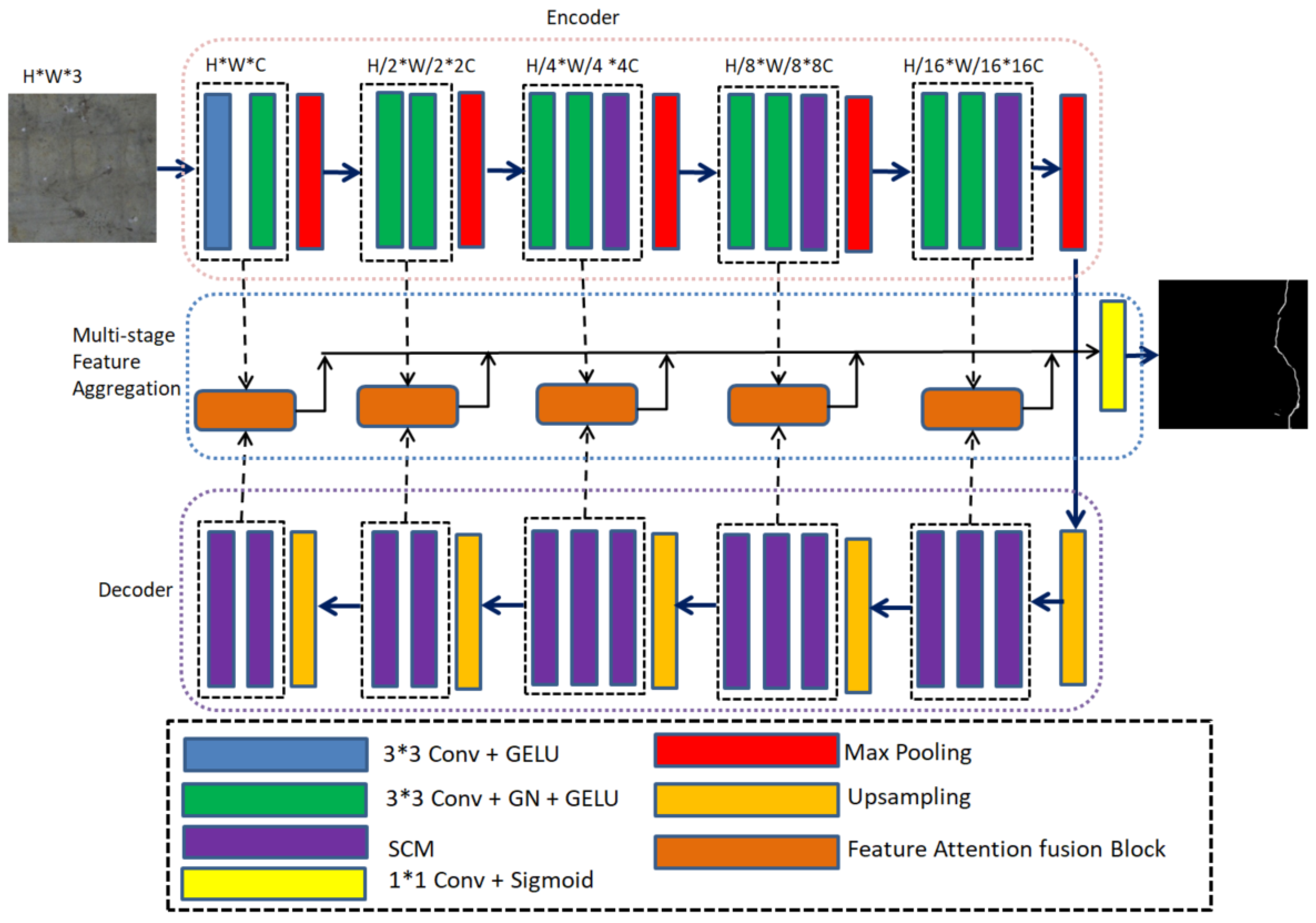

- A multi-stage feature aggregation and structure awareness network is proposed for bridge crack detection. The proposed MFSA-Net can effectively perceive the elongated structure of bridge cracks and obtain fine-grained segmentation results in a multi-stage aggregation manner.

- (2)

- A structure-aware convolution block (SAB) is proposed, where the square convolution can extract local detailed information and the strip convolution is employed to refine the thin and long features of cracks for establishing long-range dependencies between discrete regions of cracks.

- (3)

- A feature attention fusion block (FAB) is designed for fusing local context information and global context information with the attention mask, which can suppress interference from irrelevant background regions and sharpen the edges of bridge cracks.
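To illustrate the idea behind contribution (2), the following minimal sketch compares a square kernel with strip kernels on a toy crack map. It is not the SAB itself: the kernel sizes (3×3, 1×9, 9×1), the fixed averaging weights, and the helper name `conv2d_same` are assumptions chosen for illustration, whereas the block described above would use learned weights.

```python
def conv2d_same(image, kernel):
    """Zero-padded 'same' 2D cross-correlation on nested lists (odd kernel sizes)."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    y, x = i + a - ph, j + b - pw
                    if 0 <= y < h and 0 <= x < w:
                        acc += image[y][x] * kernel[a][b]
            out[i][j] = acc
    return out


# Toy 5x9 binary map: a horizontal crack in row 2, broken at column 4.
crack = [[0] * 9 for _ in range(5)]
for x in (0, 1, 2, 3, 5, 6, 7, 8):
    crack[2][x] = 1

# Three kernels with the same number of weights (9 each).
square = [[1 / 9] * 3 for _ in range(3)]   # 3x3: local neighbourhood
h_strip = [[1 / 9] * 9]                    # 1x9: long horizontal reach
v_strip = [[1 / 9] for _ in range(9)]      # 9x1: long vertical reach

sq = conv2d_same(crack, square)
hs = conv2d_same(crack, h_strip)
vs = conv2d_same(crack, v_strip)

# Response at the gap pixel (row 2, column 4).
print(round(sq[2][4], 3), round(hs[2][4], 3), round(vs[2][4], 3))
# prints: 0.222 0.889 0.0
```

With the same parameter budget, the horizontal strip kernel gathers evidence from both crack fragments and responds strongly at the gap, while the square kernel sees only the two adjacent crack pixels. The vertical strip does not respond here, which is why strip kernels of several orientations are usually combined.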

2. Related Works

2.1. Crack Segmentation

2.2. Attention Mechanisms

2.3. Strip Convolution

3. The Proposed Method

3.1. Network Architecture

3.2. Encoder

3.3. Decoder

3.4. Multi-Stage Feature Aggregation

3.5. Loss Function

4. Experiments

4.1. Experimental Setup

4.2. Datasets

4.3. Evaluation Metrics

4.4. Comparison with the State-of-the-Art (SOTA) Methods

4.4.1. The Results on BlurredCrack

4.4.2. The Results on CrackLS315

4.4.3. The Results on CFD

4.5. Ablation Study

4.5.1. Verifying the Validity of the Strip Decoder

4.5.2. Impact of the SCM’s Position in the Encoder on the Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Glossary

| Symbols | Description |
|---|---|
|  | real number space |
|  | input tensor |
|  | attention mask map of the kth stage |
|  | side output of the kth stage |
|  | tensor concatenation operation |
|  | Sigmoid activation function |
|  | upsampling to the input image size |
|  | Output prediction map after multi-stage fusion, i.e., the final prediction result map |
|  | convolution operation |
|  | element-wise addition operation |
|  | element-wise multiplication operation |
| P | the predicted segmentation result |
| G | the ground truth binary label |
| N | the total number of pixels in a crack image |
|  | predicting the error weights |
|  | predicting the correct weights |
|  | the number of positive samples in a crack image |
|  | the number of negative samples in a crack image |
|  | the loss ratio to balance the positive and negative samples |
|  | the balanced weighted cross-entropy loss |
|  | false positive weights |
|  | false negative weights |
|  | Tversky loss |
|  | and weighted losses |
|  | the weight of loss |
|  | The final total loss function |
|  | the loss weight of the kth stage |
|  | the loss weight of the final fusion stage |
|  | Summation |
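The loss-related glossary entries (balanced weighted cross-entropy, Tversky loss with false positive and false negative weights, and per-stage loss weights) can be sketched as follows. This is a minimal illustration under stated assumptions, not the formulation used in the paper: the class balancing follows the common HED-style ratio of negative pixels, the Tversky term follows the standard definition of Salehi et al., and all function names, the default `alpha`/`beta` values, and the mixing weight `lam` are hypothetical.

```python
import math

EPS = 1e-7  # numerical guard, an assumption of this sketch


def tversky_loss(pred, target, alpha=0.5, beta=0.5):
    """1 - TP / (TP + alpha*FP + beta*FN) on flattened probability maps."""
    tp = sum(p * g for p, g in zip(pred, target))
    fp = sum(p * (1 - g) for p, g in zip(pred, target))
    fn = sum((1 - p) * g for p, g in zip(pred, target))
    return 1.0 - (tp + EPS) / (tp + alpha * fp + beta * fn + EPS)


def balanced_bce(pred, target):
    """Class-balanced cross-entropy: positives weighted by the share of negatives."""
    n = len(target)
    ratio = (n - sum(target)) / n  # fraction of negative (background) pixels
    total = 0.0
    for p, g in zip(pred, target):
        p = min(max(p, EPS), 1 - EPS)  # avoid log(0)
        total -= ratio * g * math.log(p) + (1 - ratio) * (1 - g) * math.log(1 - p)
    return total / n


def total_loss(side_preds, fused_pred, target, side_weights,
               fused_weight=1.0, lam=1.0):
    """Weighted sum of per-stage losses plus the loss on the fused prediction."""
    def per_map(pred):
        return balanced_bce(pred, target) + lam * tversky_loss(pred, target)

    loss = sum(w * per_map(p) for w, p in zip(side_weights, side_preds))
    return loss + fused_weight * per_map(fused_pred)


# Toy example: one crack pixel among four, two side outputs and one fused map.
target = [1, 0, 0, 0]
side_preds = [[0.6, 0.4, 0.3, 0.2], [0.8, 0.2, 0.1, 0.1]]
fused_pred = [0.9, 0.1, 0.1, 0.1]
print(total_loss(side_preds, fused_pred, target, side_weights=[0.5, 0.5]))
```

Setting `alpha` above `beta` penalizes false positives more heavily, and the reverse penalizes missed crack pixels more heavily. With `alpha = beta = 0.5` the Tversky term reduces to the Dice loss.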

References

1. Zinno, R.; Haghshenas, S.S.; Guido, G.; Vitale, A. Artificial Intelligence and Structural Health Monitoring of Bridges: A Review of the State-of-the-Art. IEEE Access 2022, 10, 88058–88078.
2. Jiang, W.; Liu, M.; Peng, Y.; Wu, L.; Wang, Y. HDCB-Net: A Neural Network with the Hybrid Dilated Convolution for Pixel-Level Crack Detection on Concrete Bridges. IEEE Trans. Ind. Inform. 2021, 17, 5485–5494.
3. Zhao, H.; Qin, G.; Wang, X. Improvement of canny algorithm based on pavement edge detection. In Proceedings of the 3rd International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010; Volume 2, pp. 964–967.
4. Xie, S.; Tu, Z. Holistically-nested edge detection. Int. J. Comput. Vis. 2017, 125, 3–18.
5. Kamaliardakani, M.; Sun, L.; Ardakani, M.K. Sealed-crack detection algorithm using heuristic thresholding approach. J. Comput. Civil Eng. 2014, 30, 04014110.
6. Win, M.; Bushroa, A.R.; Hassan, M.A.; Hilman, N.M.; Ide-Ektessabi, A. A contrast adjustment thresholding method for surface defect detection based on mesoscopy. IEEE Trans. Ind. Inform. 2015, 11, 642–649.
7. Oliveira, H.; Correia, P.L. CrackIT - An image processing toolbox for crack detection and characterization. In Proceedings of the IEEE International Conference on Image Processing, Paris, France, 27–30 October 2014.
8. Zou, Q.; Cao, Y.; Li, Q.Q.; Mao, Q.Z.; Wang, S. CrackTree: Automatic crack detection from pavement images. Pattern Recognit. Lett. 2012, 33, 227–238.
9. Zhang, Z.; Mansfield, E.; Li, J.; Russell, J.; Young, G.; Adams, C.; Wang, J.Z. A Machine Learning Paradigm for Studying Pictorial Realism: Are Constable’s Clouds More Real than His Contemporaries? IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 33–42.
10. Jiang, Y.; Palaoag, T.D.; Zhang, H.; Yang, Z. A Road Crack Detection Algorithm Based on SIFT Feature and BP Neural Network. In Proceedings of the 2022 International Conference on Machine Learning and Intelligent Systems Engineering (MLISE), Guangzhou, China, 5–7 August 2022; pp. 177–181.
11. Meng, L.; Wang, Z.; Fujikawa, Y.; Oyanagi, S. Detecting cracks on a concrete surface using histogram of oriented gradients. In Proceedings of the 2015 International Conference on Advanced Mechatronic Systems (ICAMechS), Beijing, China, 22–24 August 2015; pp. 103–107.
12. Shi, Y.; Cui, L.; Qi, Z.; Meng, F.; Chen, Z. Automatic Road Crack Detection Using Random Structured Forests. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3434–3445.
13. Zhang, Z.; Davaasuren, D.; Wu, C.; Goldstein, J.A.; Gernand, A.D.; Wang, J.Z. Multi-region saliency-aware learning for cross-domain placenta image segmentation. Pattern Recognit. Lett. 2020, 140, 165–171.
14. Ali, R.; Chuah, J.H.; Talip, M.S.A.; Mokhtar, N.; Shoaib, M.A. Automatic pixel-level crack segmentation in images using fully convolutional neural network based on residual blocks and pixel local weights. Eng. Appl. Artif. Intell. 2021, 104, 104391.
15. Yang, X.; Li, H.; Yu, Y.; Luo, X.; Huang, T.; Yang, X. Automatic Pixel-Level Crack Detection and Measurement Using Fully Convolutional Network. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 1090–1109.
16. Cheng, J.; Xiong, W.; Chen, W.; Gu, Y.; Li, Y. Pixel-level Crack Detection using U-Net. In Proceedings of the IEEE Region 10 Conference, Jeju, Republic of Korea, 28–31 October 2018; pp. 0462–0466.
17. Song, C.; Wu, L.; Chen, Z.; Zhou, H.; Lin, P.; Cheng, S.; Wu, Z. Pixel-Level Crack Detection in Images Using SegNet. Lect. Notes Comput. Sci. 2019, 11909, 247–254.
18. Sun, X.; Xie, Y.; Jiang, L.; Cao, Y.; Liu, B. DMA-Net: DeepLab with Multi-Scale Attention for Pavement Crack Segmentation. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18392–18403.
19. Chen, H.; Lin, H. An Effective Hybrid Atrous Convolutional Network for Pixel-Level Crack Detection. IEEE Trans. Instrum. Meas. 2021, 70, 1–12.
20. Zou, Q.; Zhang, Z.; Li, Q.; Qi, X.; Wang, Q.; Wang, S. DeepCrack: Learning Hierarchical Convolutional Features for Crack Detection. IEEE Trans. Image Process. 2019, 28, 1498–1512.
21. Liu, W.; Huang, Y.; Li, Y.; Chen, Q. FPCNet: Fast Pavement Crack Detection Network Based on Encoder-Decoder Architecture. arXiv 2019, arXiv:1907.02248.
22. Qu, Z.; Chen, W.; Wang, S.; Yi, T.; Liu, L. A crack detection algorithm for concrete pavement based on attention mechanism and multi-features fusion. IEEE Trans. Intell. Transp. Syst. 2022, 23, 11710–11719.
23. Liu, H.; Miao, X.; Mertz, C.; Xu, C.; Kong, H. CrackFormer: Transformer Network for Fine-Grained Crack Detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3763–3772.
24. Zhang, E.; Shao, L.; Wang, Y. Unifying transformer and convolution for dam crack detection. Autom. Constr. 2023, 147, 104712.
25. Zhu, Q.; Phung, M.D.; Ha, Q. Crack Detection Using Enhanced Hierarchical Convolutional Neural Networks. arXiv 2019, arXiv:1912.12139.
26. Yang, F.; Zhang, L.; Yu, S.; Prokhorov, D.; Mei, X.; Ling, H. Feature Pyramid and Hierarchical Boosting Network for Pavement Crack Detection. IEEE Trans. Intell. Transp. Syst. 2020, 21, 1525–1535.
27. Fan, Z.; Li, C.; Chen, Y.; Wei, J.; Loprencipe, G.; Chen, X.; Di Mascio, P. Automatic Crack Detection on Road Pavements Using Encoder-Decoder Architecture. Materials 2020, 13, 2960.
28. Ju, H.; Li, W.; Tighe, S.; Xu, Z.; Zhai, J. CrackU-net: A novel deep convolutional neural network for pixelwise pavement crack detection. Struct. Control. Health Monit. 2020, 27, e2551.
29. Wu, Y.; He, K. Group Normalization. Int. J. Comput. Vis. 2020, 128, 742–755.
30. Han, C.; Ma, T.; Huyan, J.; Huang, X.; Zhang, Y. CrackW-Net: A Novel Pavement Crack Image Segmentation Convolutional Neural Network. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22135–22144.
31. Lin, F.; Yang, J.; Shu, J.; Scherer, R.J. Crack Semantic Segmentation using the U-Net with Full Attention Strategy. arXiv 2021, arXiv:2104.14586.
32. Li, K.; Wang, B.; Tian, Y.; Qi, Z. Fast and Accurate Road Crack Detection Based on Adaptive Cost-Sensitive Loss Function. IEEE Trans. Cybern. 2023, 53, 1051–1062.
33. Choi, W.; Cha, Y.J. SDDNet: Real-Time Crack Segmentation. IEEE Trans. Ind. Electron. 2020, 67, 8016–8025.
34. Ji, W.; Zhang, Y.; Huang, P.; Yan, Y.; Yang, Q. A Neural Network with Spatial Attention for Pixel-Level Crack Detection on Concrete Bridges. In Proceedings of the 2022 IEEE 11th Data Driven Control and Learning Systems Conference (DDCLS), Chengdu, China, 3–5 August 2022; pp. 481–486.
35. Chen, F.C.; Jahanshahi, M.R. ARF-Crack: Rotation invariant deep fully convolutional network for pixel-level crack detection. Mach. Vis. Appl. 2020, 31, 47.
36. Zhou, Y.; Ye, Q.; Qiu, Q.; Jiao, J. Oriented Response Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4961–4970.
37. Sharma, H.; Pradhan, P.; Balamuralidhar, P. SCNet: A Generalized Attention-based Model for Crack Fault Segmentation. arXiv 2021, arXiv:2112.01426.
38. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.
39. Xu, Z.; Guan, H.; Kang, J.; Lei, X.; Ma, L.; Yu, Y.; Chen, Y.; Li, J. Pavement crack detection from CCD images with a locally enhanced transformer network. Int. J. Appl. Earth Obs. Geoinf. 2022, 110, 102825.
40. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.
41. Guo, M.H.; Lu, C.Z.; Liu, Z.N.; Cheng, M.M.; Hu, S.M. Visual attention network. arXiv 2022, arXiv:2202.09741.
42. Hou, Q.; Zhang, L.; Cheng, M.M.; Feng, J. Strip Pooling: Rethinking Spatial Pooling for Scene Parsing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4002–4011.
43. Guo, M.H.; Lu, C.Z.; Hou, Q.; Liu, Z.; Cheng, M.M.; Hu, S.M. SegNeXt: Rethinking Convolutional Attention Design for Semantic Segmentation. arXiv 2022, arXiv:2209.08575.
44. Sun, T.; Di, Z.; Che, P.; Liu, C.; Wang, Y. Leveraging Crowdsourced GPS Data for Road Extraction from Aerial Imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7501–7510.
45. Mei, J.; Li, R.J.; Gao, W.; Cheng, M.M. CoANet: Connectivity Attention Network for Road Extraction from Satellite Imagery. IEEE Trans. Image Process. 2021, 30, 8540–8552.
46. Fan, R.; Wang, X.; Hou, Q.; Liu, H.; Mu, T.J. SpinNet: Spinning convolutional network for lane boundary detection. Comput. Vis. Media 2019, 5, 417–428.
47. Liu, C.; Lai, J. Pattern Matters: Hierarchical Correlated Strip Convolutional Network for Scene Text Recognition. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; pp. 1–6.
48. Li, Y.; Zhang, Z.; Cheng, M.M.; Hu, X.; Wang, K.; Bai, X. Richer Convolutional Features for Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1939–1946.
49. Salehi, S.S.M.; Erdogmus, D.; Gholipour, A. Tversky Loss Function for Image Segmentation Using 3D Fully Convolutional Deep Networks. In 8th International Workshop on Machine Learning in Medical Imaging; Springer: Cham, Switzerland, 2017; Volume 10541, pp. 379–387.

| Methods | Bridge88 Pr (%) | Bridge88 Re (%) | Bridge88 F1 (%) | Bridge88 IoU (%) | BridgeTL58 Pr (%) | BridgeTL58 Re (%) | BridgeTL58 F1 (%) | BridgeTL58 IoU (%) | BridgeDB288 Pr (%) | BridgeDB288 Re (%) | BridgeDB288 F1 (%) | BridgeDB288 IoU (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| U-Net [16] | 58.01 | 77.80 | 66.46 | 49.77 | 71.43 | 66.71 | 68.99 | 52.66 | 66.30 | 61.46 | 63.79 | 46.83 |
| RCF [48] | 60.38 | 69.50 | 64.62 | 47.74 | 62.87 | 62.79 | 62.83 | 45.80 | 64.54 | 58.48 | 61.36 | 47.13 |
| DeepCrack [20] | 57.69 | 72.67 | 64.32 | 47.40 | 61.10 | 62.68 | 61.88 | 48.66 | 61.03 | 56.78 | 58.83 | 47.47 |
| CrackFormer [23] | 71.07 | 86.78 | 78.14 | 64.13 | 63.10 | 71.81 | 67.17 | 50.57 | 73.68 | 71.09 | 72.36 | 56.69 |
| HDCBNet [2] | 72.47 | 64.58 | 68.30 | 51.86 | 66.81 | 60.25 | 63.36 | 46.37 | 60.42 | 63.54 | 61.94 | 50.40 |
| MFSA-Net | 76.21 | 76.35 | 76.28 | 61.65 | 67.17 | 75.81 | 71.23 | 55.32 | 77.83 | 78.97 | 78.40 | 64.47 |

| Methods | Pr (%) | Re (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|
| U-Net [16] | 65.33 | 68.05 | 66.67 | 49.99 |
| RCF [48] | 69.52 | 87.02 | 77.29 | 62.99 |
| DeepCrack [20] | 74.66 | 98.00 | 84.76 | 73.54 |
| CrackFormer [23] | 77.39 | 97.51 | 86.29 | 75.89 |
| HDCBNet [2] | 76.79 | 98.34 | 86.24 | 75.81 |
| MFSA-Net | 82.37 | 99.10 | 89.97 | 81.76 |

| Methods | Pr (%) | Re (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|
| U-Net [16] | 71.82 | 75.97 | 73.83 | 58.52 |
| RCF [48] | 67.00 | 70.86 | 68.88 | 52.53 |
| DeepCrack [20] | 60.42 | 66.52 | 63.32 | 46.33 |
| CrackFormer [23] | 83.57 | 83.93 | 83.75 | 72.04 |
| HDCBNet [2] | 66.70 | 61.29 | 63.88 | 46.93 |
| MFSA-Net | 88.60 | 85.61 | 87.08 | 77.12 |

| Decoder | Pr (%) | Re (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|
| Square convolution | 67.39 | 73.54 | 70.62 | 54.23 |
| Self-attention | 76.62 | 72.08 | 74.28 | 58.66 |
| SCM | 76.21 | 76.35 | 76.28 | 61.65 |

| Encoder | SCM Position | Pr (%) | Re (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|---|
| Base SegNet | - | 66.89 | 70.44 | 68.62 | 52.07 |
| Base SegNet + SCM | L | 72.04 | 74.75 | 73.37 | 56.78 |
| Base SegNet + SCM | A | 71.96 | 74.32 | 73.12 | 56.14 |
| Base SegNet + SCM | LLT | 76.21 | 76.35 | 76.28 | 61.65 |
| Base SegNet + SCM | A + L | 66.92 | 70.70 | 68.76 | 52.41 |
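The Pr, Re, F1, and IoU columns reported in the tables are linked by standard identities, which gives a quick way to sanity-check a row. The sketch below assumes the usual definitions for binary segmentation, F1 = 2·Pr·Re / (Pr + Re) and IoU = F1 / (2 − F1), with all four values derived from the same pixel counts. The function names are hypothetical, and the aggregation actually used for the tables (for example, per-image averaging, under which the second identity need not hold exactly) is not stated in this section.

```python
def f1_from_pr_re(pr, re):
    """Harmonic mean of precision and recall; inputs and output in percent."""
    return 2 * pr * re / (pr + re)


def iou_from_f1(f1):
    """IoU implied by an F1 (Dice) score; input and output in percent."""
    f = f1 / 100.0
    return 100.0 * f / (2.0 - f)


# (Pr, Re, F1, IoU) for selected MFSA-Net rows copied from the tables.
rows = {
    "Bridge88": (76.21, 76.35, 76.28, 61.65),
    "single-dataset table (88.60 / 85.61)": (88.60, 85.61, 87.08, 77.12),
}

for name, (pr, re, f1, iou) in rows.items():
    print(f"{name}: F1 reported {f1:.2f}, recomputed {f1_from_pr_re(pr, re):.2f}; "
          f"IoU reported {iou:.2f}, implied by F1 {iou_from_f1(f1):.2f}")
```

Differences of about 0.01 are explained by rounding of the reported values. Larger gaps between a reported IoU and the value implied by F1 would suggest that the metrics were averaged over images rather than computed from pooled pixel counts.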


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, E.; Jiang, T.; Duan, J. A Multi-Stage Feature Aggregation and Structure Awareness Network for Concrete Bridge Crack Detection. Sensors 2024, 24, 1542. https://doi.org/10.3390/s24051542
