Complying with ISO 26262 and ISO/SAE 21434: A Safety and Security Co-Analysis Method for Intelligent Connected Vehicle

Abstract

1. Introduction

- Lack of information sharing [20]: If there is not enough information sharing between the two teams, it can lead to duplication of effort or conflicting information, which can affect the quality of the overall analysis process.

- Differences in analysis methods [21]: There may be differences in the analytical methods used by the HARA and TARA teams. If there is no consensus between the two teams, this may make it difficult to harmonize the results of the analysis.

- Lack of comprehensive analysis [22]: If the two teams do not conduct an effective integrated analysis, the correlations and interactions between safety and security may not be adequately considered, which may affect the assessment and management of the overall risk to the system.

- We improved STPA by adding a physical component diagram to describe the component interactions of ICVs while highlighting the ICV’s connections to the external world. In addition, we proposed loss scenario trees for describing fault propagation paths and attack paths, which are used to obtain more detailed safety and security requirements.

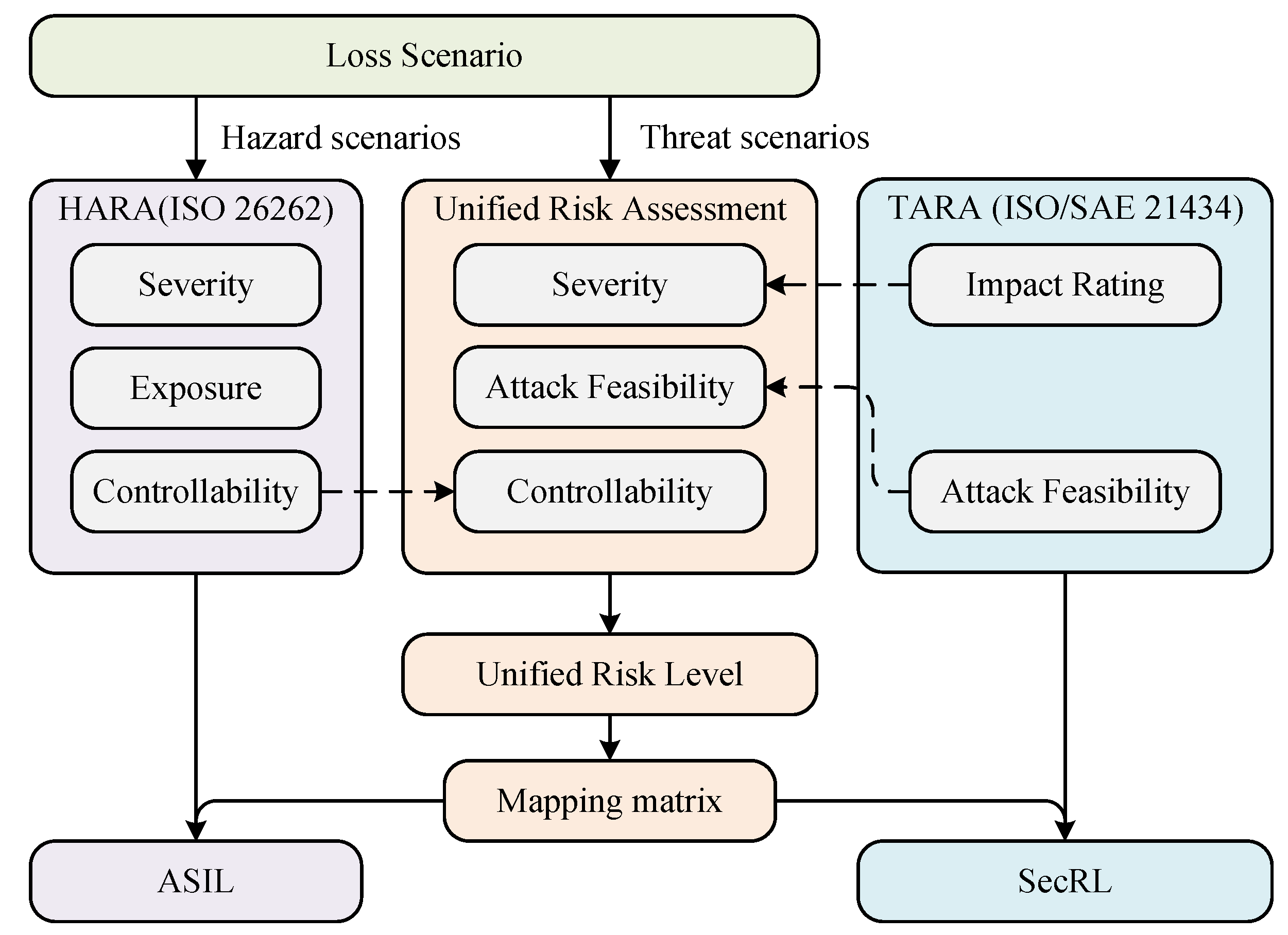

- Our approach combines HARA and TARA in the same process, which compensates for the lack of information sharing and analytical synthesis. A unified risk assessment process has been added to our method. The risk level of events at the root of the loss scenario tree is evaluated using a unified risk matrix and the results are mapped to the automotive safety integrity level (ASIL) of ISO 26262 and the security risk level (SecRL) of ISO/SAE 21434.

- A case study of the autonomous emergency braking (AEB) system on our experimental vehicle platform shows that our method can effectively support the concept phase of the vehicle development process.

2. Related Work

2.1. Safety Analysis Methods

2.2. Security Analysis Methods

2.3. S & S Co-Analysis Methods

3. The Proposed Method

3.1. Overview of the Method

- Step 1: Hazard/Threat Analysis (enhanced STPA process)

- Step 1.1: Define the purpose of the analysis: The analysis begins by defining the scope of the target system, which can be obtained from the item definition of HARA (ISO 26262) and the asset identification of TARA (ISO/SAE 21434). This step yields initial system-level constraints, which provide the basis for generating the functional safety requirements required by ISO 26262 and the cybersecurity requirements required by ISO/SAE 21434.

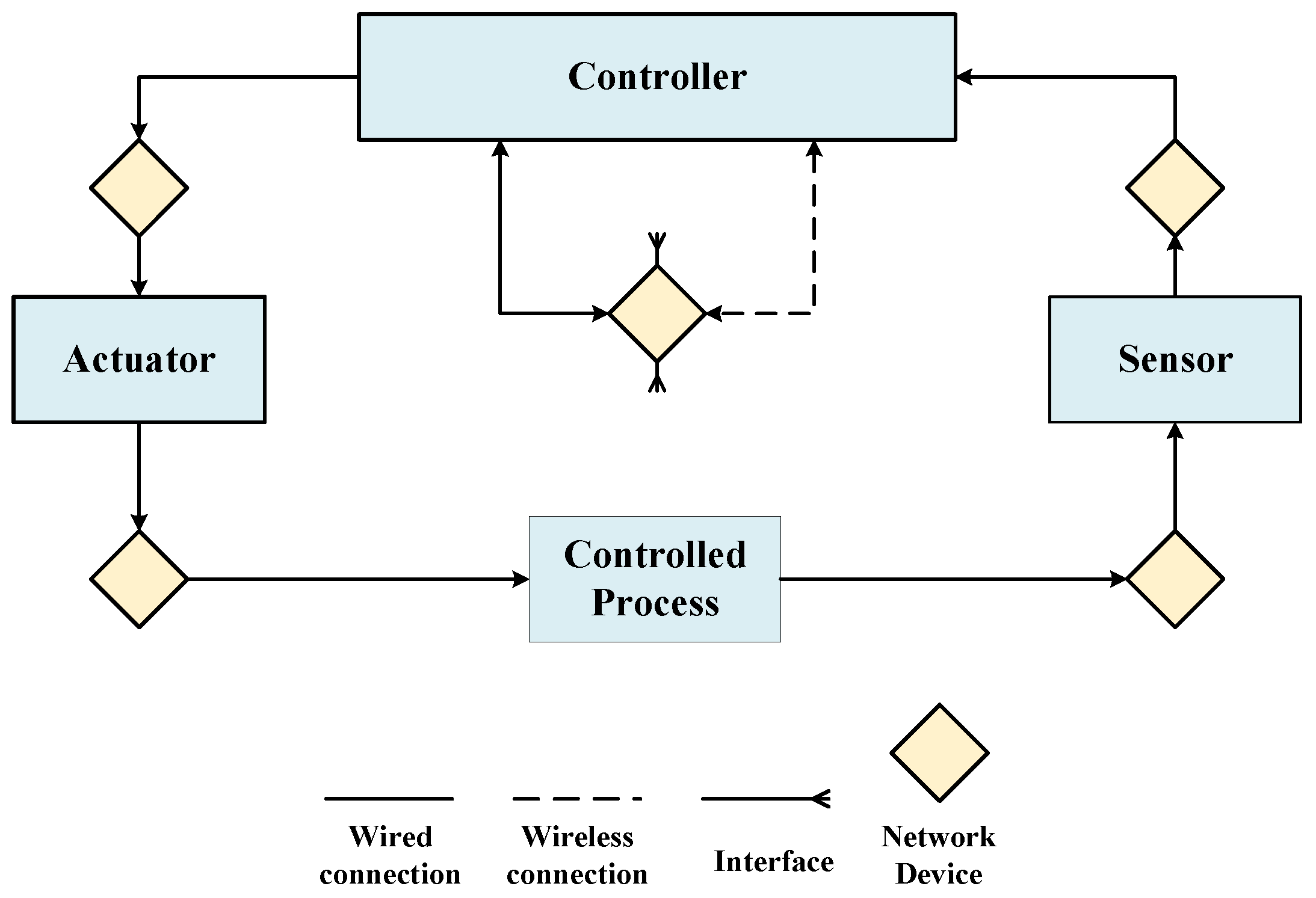

- Step 1.2: Model the control structure: Establish the control structure of the target system, then map it to a physical component diagram of the target system.

- Step 1.3: Identify unsafe/unsecure control actions: Using the control structure of the target system, identify the possible unsafe/unsecure control actions (UCAs).

- Step 1.4: Identify loss scenarios: Based on the physical component diagram of the system, the possible loss scenarios are derived using loss scenario trees. This step corresponds to HARA’s hazard analysis and to TARA’s threat scenario identification and attack path analysis. Threat scenarios are the reasons why threats arise, and some threats may have the same consequences as hazards.

- Step 2: Risk assessment: The risk values of the identified loss scenarios are obtained through a unified risk matrix and mapped to the Automotive Safety Integrity Level (ASIL, defined in ISO 26262) and the Security Risk Level (SecRL, defined in ISO/SAE 21434), respectively.

3.2. Foundations of ISO 26262, ISO/SAE 21434 and the Proposed Method

3.3. Hazard/Threat Analysis

3.3.1. Defining the Purpose of the Analysis

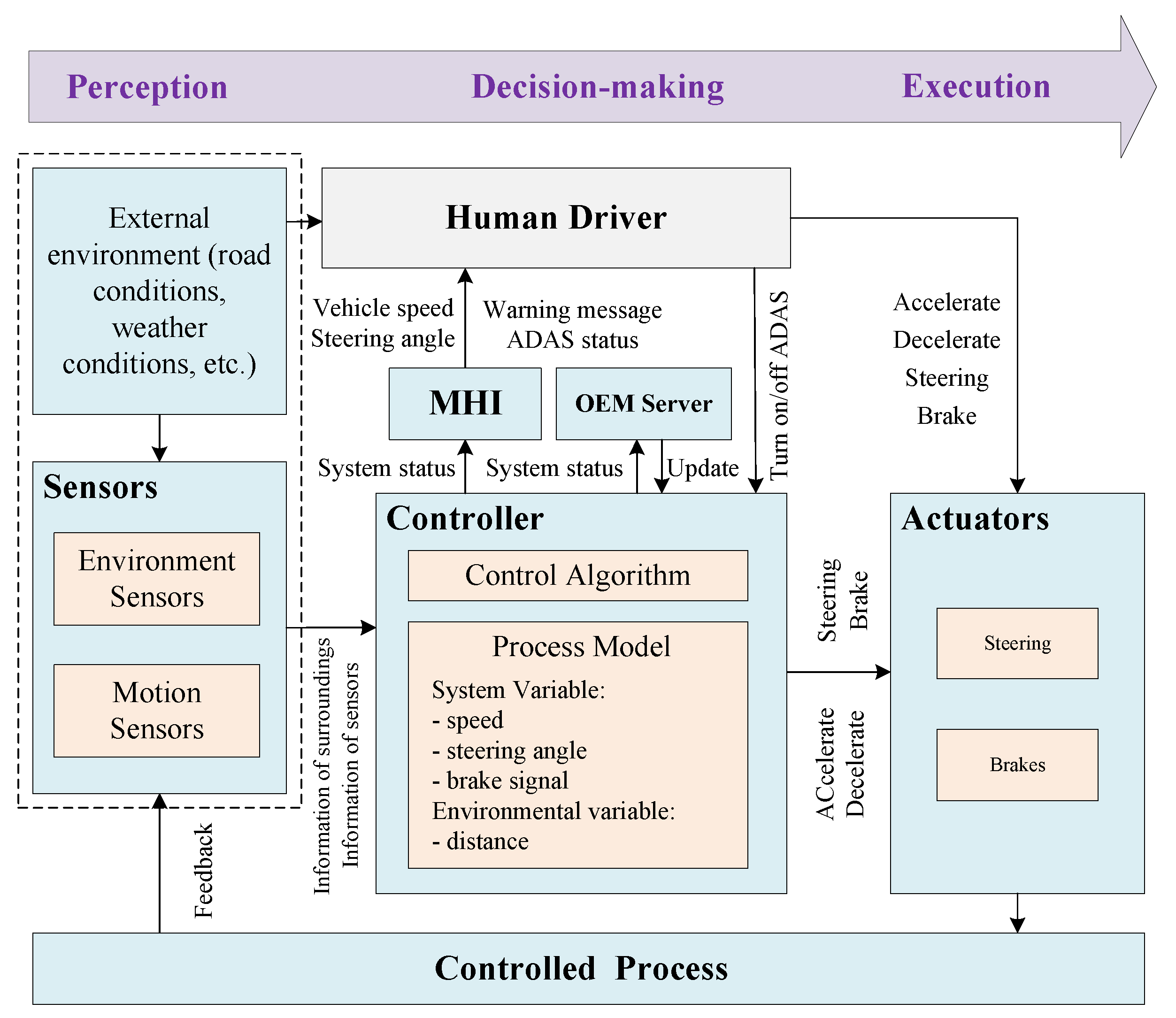

3.3.2. Modeling the Control Structure

3.3.3. Identifying Unsafe/Unsecure Control Actions

- Not Providing;

- Providing;

- Providing too early, too late, or in the wrong order;

- Lasting too long or stopping too early.

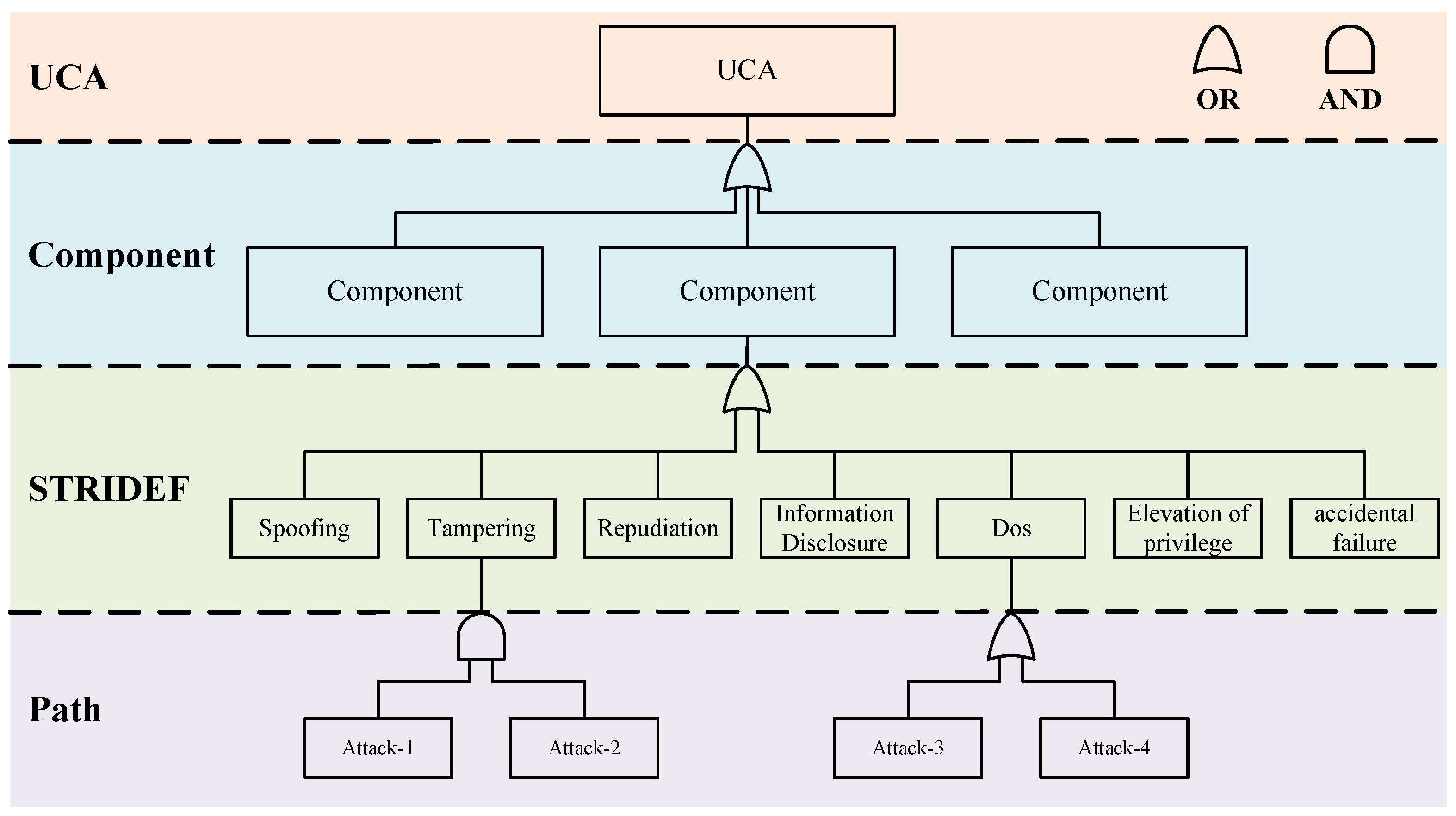

3.3.4. Identifying Loss Scenarios

- UCA layer: The UCA at the root of the loss scenario tree is produced by the previous steps. We construct the entire loss scenario tree by analyzing the causes of this UCA.

- Component layer: At the component layer, we focus on critical components that may experience accidental failures or malicious attacks. The malfunction of a single component, or of a combination of components, can lead to a UCA. A component may also consist of subcomponents, which are represented as subtrees of that component.

- STRIDEF layer: A malfunction of a component identified at the component layer may be caused by a malicious attack or by an accidental failure. At the STRIDEF (STRIDE + Failure) layer, we classify threats into the six categories of the STRIDE threat model proposed by Microsoft: spoofing, tampering, repudiation, information disclosure, denial of service, and elevation of privilege. This classification gives a clear picture of potential threats so that appropriate security countermeasures can be taken. At this layer, we also consider accidental failures of component functions (usually triggered by hardware aging, design flaws, etc., and covering the random hardware failures and systematic failures of ISO 26262), so that the analysis covers safety as well as security.

- Path layer: At the path layer, we describe how a malicious attack or accidental failure occurs, detailing the attack paths that an attacker could take and the fault propagation paths of the components. Logic gates (OR/AND) make the relationships between the basic events explicit. Traditional component-based analysis has difficulty representing the interactions between components. To address this, for complex systems in which multiple components interact, the component layer of a low-level component can be added to the path layer of a high-level component, or existing analysis results can be reused.
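The four layers above can be pictured as a tree whose inner nodes carry OR/AND gates. The following is a minimal sketch of such a structure, not the authors' tooling: the class names `Gate` and `Node`, the helper `cut_sets`, and the example labels are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Gate(Enum):
    OR = "OR"    # any one child event is sufficient
    AND = "AND"  # all child events are required


@dataclass
class Node:
    label: str
    layer: str  # "UCA", "Component", "STRIDEF", or "Path"
    gate: Gate = Gate.OR
    children: List["Node"] = field(default_factory=list)


def cut_sets(node: Node) -> List[List[str]]:
    """Return every combination of leaf events that triggers `node`."""
    if not node.children:
        return [[node.label]]
    child_sets = [cut_sets(child) for child in node.children]
    if node.gate is Gate.OR:
        # OR gate: each alternative of each child is an alternative here
        return [s for sets in child_sets for s in sets]
    # AND gate: combine one alternative from every child
    combined: List[List[str]] = [[]]
    for sets in child_sets:
        combined = [prefix + s for prefix in combined for s in sets]
    return combined


# Hypothetical example tree (labels are illustrative only).
spoofing = Node("Spoofing", "STRIDEF", Gate.AND, [
    Node("Gain root access to T-BOX", "Path"),
    Node("Forge T-BOX commands", "Path"),
])
failure = Node("Failure", "STRIDEF", Gate.OR, [
    Node("Random hardware failure", "Path"),
])
bcm = Node("BCM", "Component", Gate.OR, [spoofing, failure])
root = Node("UCA-Safe-2", "UCA", Gate.OR, [bcm])

for events in cut_sets(root):
    print(" AND ".join(events))
```

Enumerating the leaf combinations in this way lists each attack path or fault propagation path that can lead to the root UCA, which is the information the path layer is meant to capture.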

3.4. Risk Assessment

3.4.1. HARA Process

3.4.2. TARA Process

- Elapsed time (ET): Indicates the time it takes for an attacker to recognize and exploit a vulnerability to successfully execute an attack.

- Knowledge of system (K): Indicates the difficulty of obtaining information about the target system.

- Expertise (Ex): Attacker’s expertise and experience.

- Window of opportunity (W): Indicates whether a special window of opportunity is required to perform the attack, including the type of access control (such as remote, physical) and the duration of access (such as restricted, unrestricted).

- Equipment (Eq): Indicates the hardware, software, or other related equipment required by the attacker to realize the attack.
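As a rough sketch of how these five factors combine, the snippet below sums the factor values listed in the attack potential table of this article and maps the sum to an attack feasibility value using the ranges in the attack feasibility table. Summing the factors is an assumption here, although it agrees with the case-study row for the BCM spoofing attack (1 + 7 + 6 + 1 + 0 = 15, rated 3). The dictionary and function names are hypothetical.

```python
import math

# Factor values as listed in the attack potential table of this article.
ELAPSED_TIME = {"<=1 day": 0, "<=1 week": 1, "<=1 month": 4, "<=3 months": 10,
                "<=6 months": 17, ">6 months": 19, "not practical": math.inf}
EXPERTISE = {"layman": 0, "proficient": 3, "expert": 6, "multiple experts": 8}
KNOWLEDGE = {"public": 0, "restricted": 3, "sensitive": 7, "critical": 11}
WINDOW = {"unnecessary/unlimited": 0, "easy": 1, "moderate": 4,
          "difficult": 10, "none": math.inf}
EQUIPMENT = {"standard": 0, "specialized": 4, "bespoke": 7, "multiple bespoke": 9}


def attack_potential(et, k, ex, w, eq):
    """Sum the five factor values (assumed aggregation rule)."""
    return (ELAPSED_TIME[et] + KNOWLEDGE[k] + EXPERTISE[ex]
            + WINDOW[w] + EQUIPMENT[eq])


def attack_feasibility(potential):
    """Map an attack potential to (feasibility value, feasibility level)."""
    if potential <= 9:
        return 5, "High"
    if potential <= 13:
        return 4, "High"
    if potential <= 19:
        return 3, "Medium"
    if potential <= 24:
        return 2, "Low"
    return 1, "Very low"


# Case-study row "Spoofing attack of BCM": ET = 1, K = 7, Ex = 6, W = 1, Eq = 0.
bcm_spoofing = attack_potential("<=1 week", "sensitive", "expert", "easy", "standard")
print(bcm_spoofing, attack_feasibility(bcm_spoofing))  # 15 (3, 'Medium')
```

An infinite factor value ("not practical" or "None") propagates through the sum and lands in the lowest feasibility class.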

3.4.3. Unified Risk Assessment

- Controllability: We use controllability to measure the likelihood that the driver or other persons at potential risk can avoid harm. Bolovinou et al. [52] demonstrated the incorporation of controllability into the TARA process. To maximize compliance with international standards and reduce the complexity of the assessment, we follow the definition of controllability in HARA (ISO 26262), as shown in Table 5. Taking the influence of human factors on the risk level into account yields a more comprehensive risk assessment.

- Severity: As shown in Figure 6, the severity parameter in the unified risk assessment is derived from TARA’s impact rating. We evaluate the severity of loss scenarios through four factors: Safety (S), Financial (F), Operational (O), and Privacy (P), which are categorized as shown in Table 6. Of these four factors, the Safety factor is the same as the severity parameter of ISO 26262 (see Section 3.4.1). To simplify the calculation, we use the maximum value in the severity vector (S, F, O, P) as the overall severity of the loss scenario. For example, if a loss scenario has the severity vector (2, 2, 1, 1), then its severity is 2.

- Attack Feasibility: The attack feasibility in the unified risk assessment process is derived from the attack potential-based approach recommended by ISO/SAE 21434, which we have described in detail in Section 3.4.2. We first calculate the attack potential of the loss scenario based on Table 3, and then map the attack potential to the attack feasibility level based on Table 4.
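The three parameters above can be combined as in the following sketch. The closed form `C + S + AF - 2`, clamped at 0 and reported as "7+" above 7, is an observation that reproduces every cell of the unified risk matrix printed in this article; it is not a formula stated by the authors. The function names are hypothetical, and levels 0 and "7+" return `None` because the mapping table assigns them no ASIL or security risk level.

```python
def overall_severity(safety, financial, operational, privacy):
    """Overall severity is the maximum entry of the severity vector."""
    return max(safety, financial, operational, privacy)


def unified_risk_level(c, s, af):
    """Look up the unified risk level; returns 0-7 or the string '7+'."""
    if not (0 <= c <= 3 and 0 <= s <= 3 and 1 <= af <= 5):
        raise ValueError("expected C in 0..3, S in 0..3, AF in 1..5")
    raw = c + s + af - 2  # observed pattern of the printed matrix
    if raw > 7:
        return "7+"
    return max(raw, 0)


# Unified risk level -> (ASIL, security risk level), from the mapping table.
RISK_TO_LEVELS = {
    1: ("QM", 1), 2: ("A", 2), 3: ("A", 2), 4: ("B", 3),
    5: ("B", 3), 6: ("C", 4), 7: ("D", 5),
}


def map_levels(risk_level):
    """Return (ASIL, SecRL), or None for levels outside the mapping table."""
    return RISK_TO_LEVELS.get(risk_level)


# Illustrative inputs: severity vector (2, 2, 1, 1), C = 3, AF = 3.
s = overall_severity(2, 2, 1, 1)
level = unified_risk_level(3, s, 3)
print(s, level, map_levels(level))  # 2 6 ('C', 4)
```

Keeping the lookup separate from the mapping makes it easy to swap the closed form for an explicit table if the matrix is ever revised.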

4. Case Study

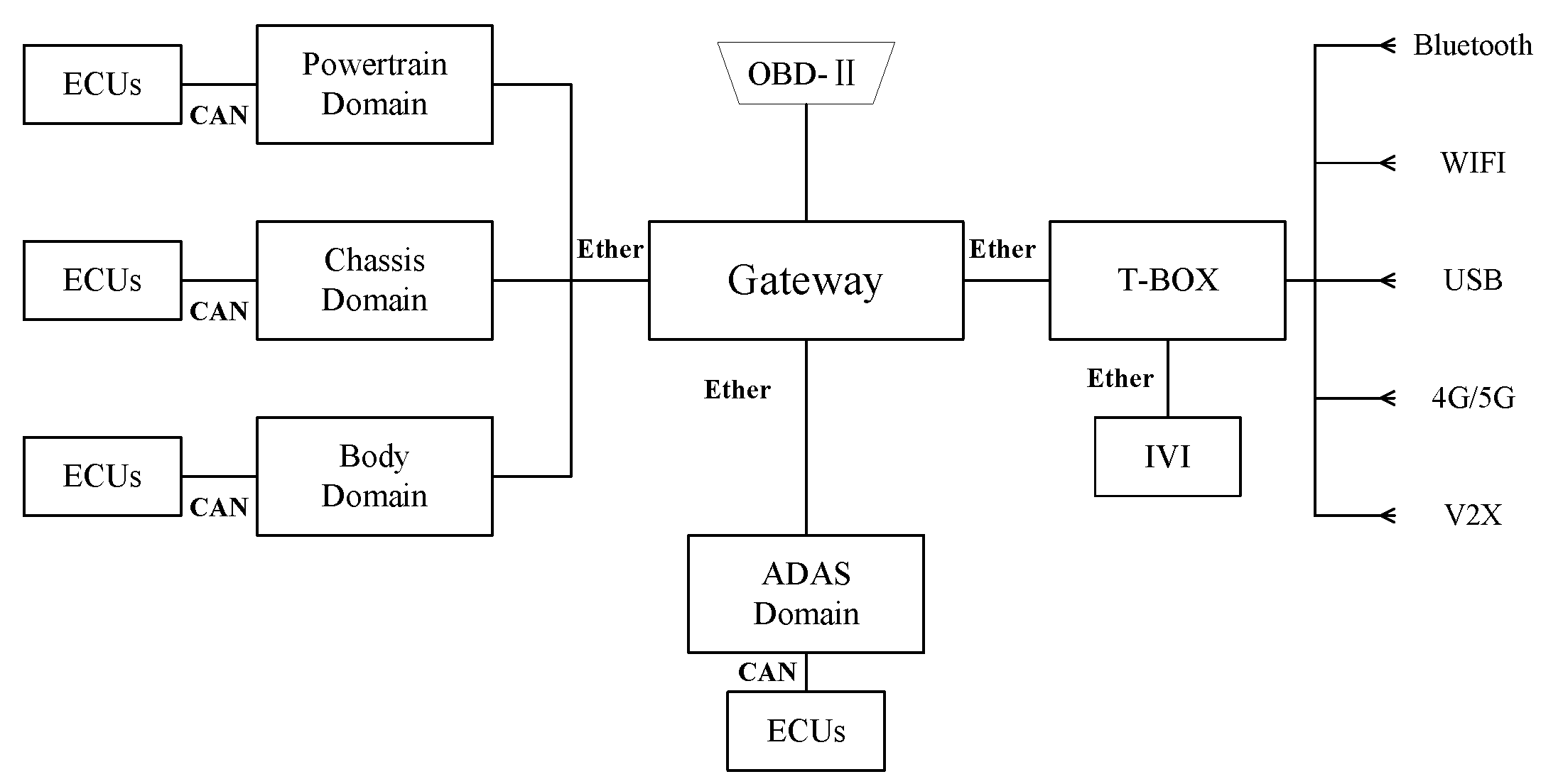

4.1. System Description

4.2. Hazard/Threat Analysis

4.2.1. Define Purpose of the Analysis

Identifying Losses

Identifying System-Level Hazards/Threats and Constraints

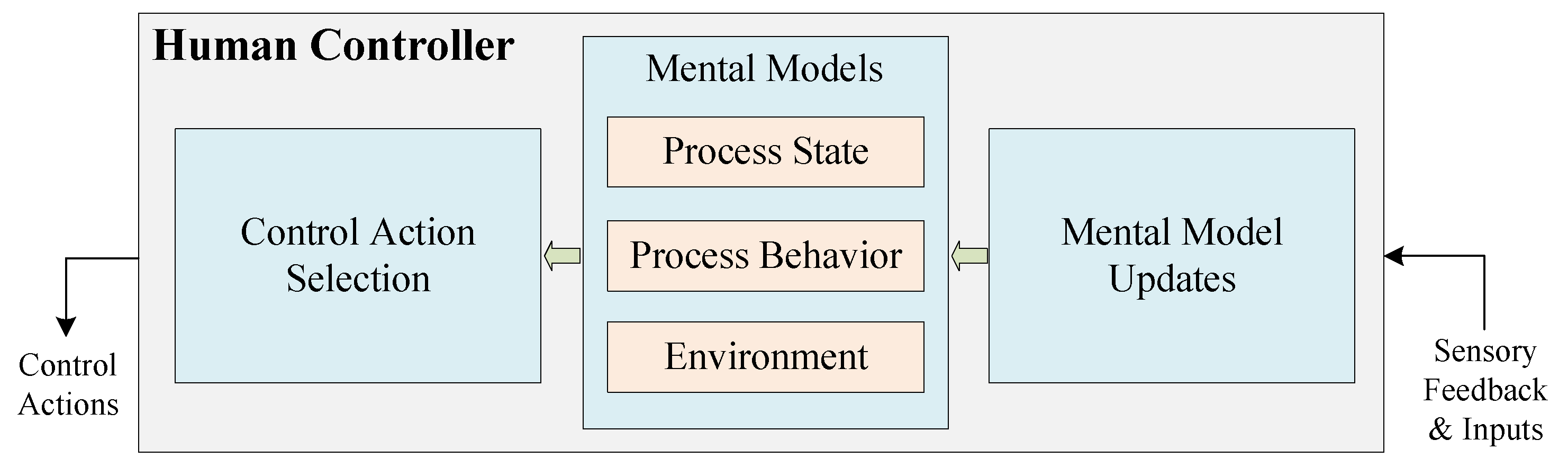

4.2.2. Modeling the Control Structure

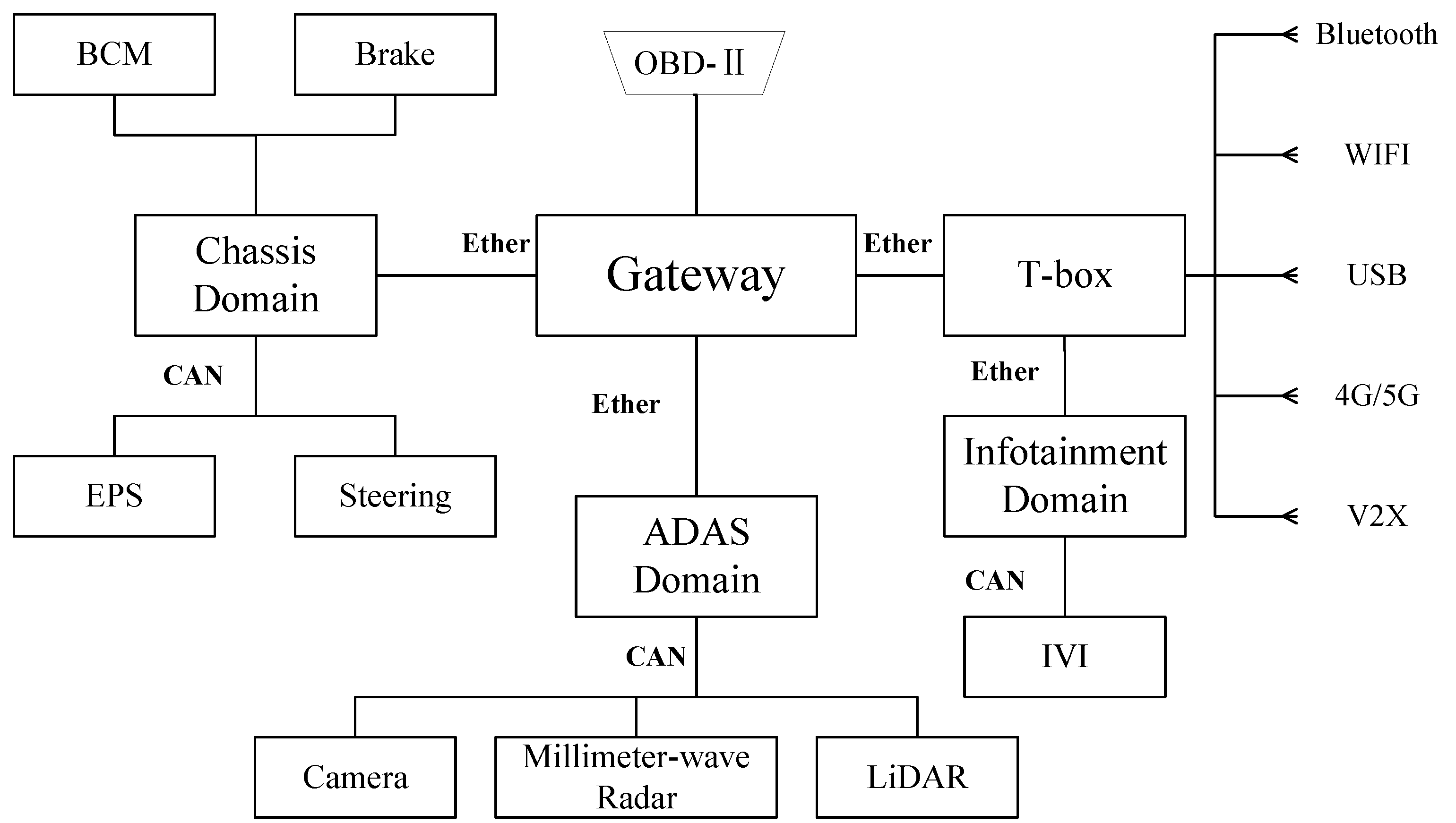

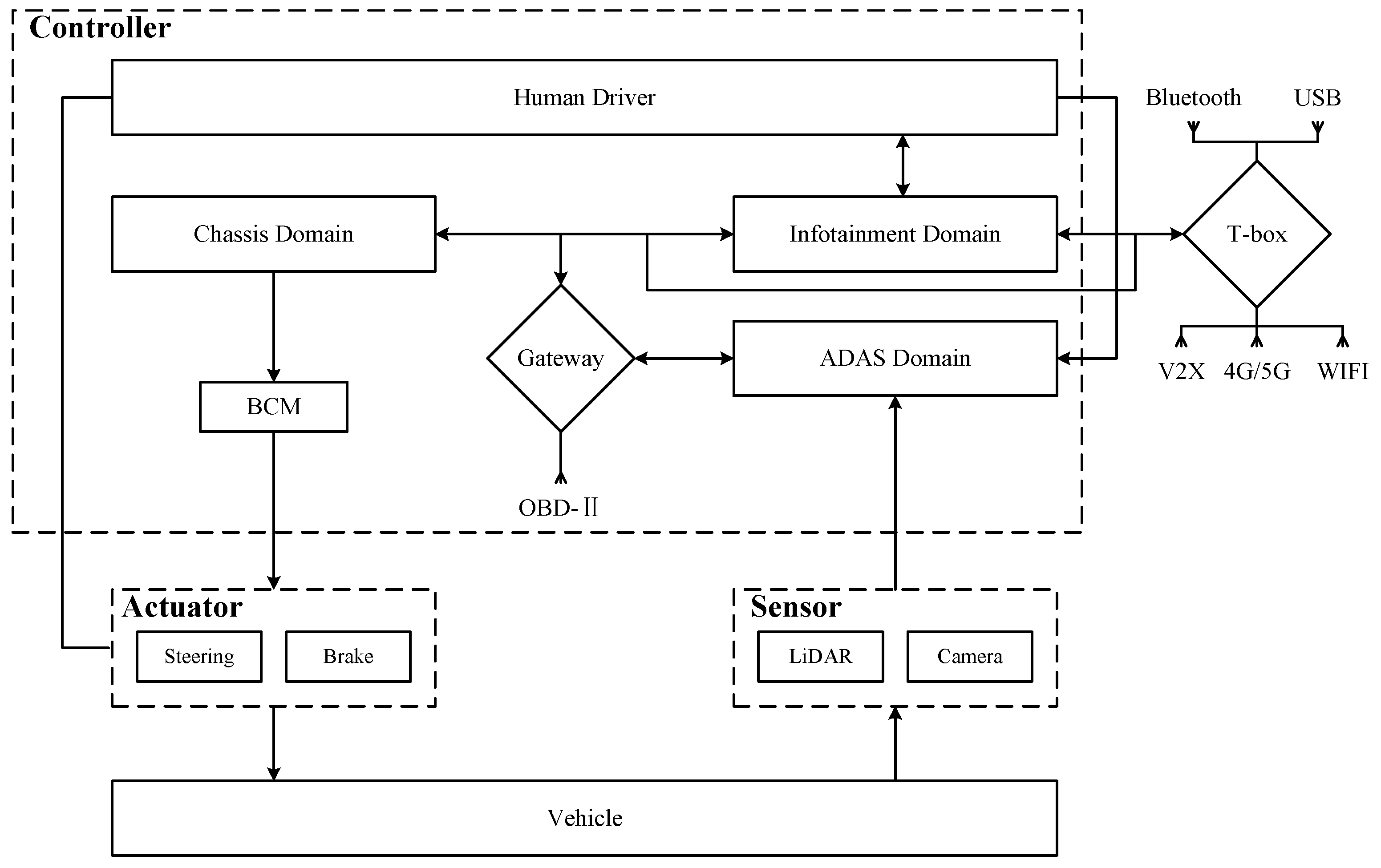

Mapping Control Structures to Physical Component Diagrams

- Collision warning: The sensors continuously monitor the distance to the vehicle in front, and warning signals are sent to the IVI according to that distance.

- Collision mitigation: ADAS sends a command to the Brake Control Module (BCM) when the sensors detect that the vehicle in front is too close. If the driver reacts urgently but braking force is insufficient, additional braking force is provided.

- Emergency braking: When the sensors detect that the vehicle in front is too close, if the driver does not respond to the warning, the ADAS will send a deceleration command to the BCM and send a lock command to the Electric Power Steering (EPS).

4.2.3. Identifying Unsafe/Unsecure Control Actions

4.2.4. Identifying Loss Scenarios

Identifying Loss Scenarios Related to Human Driver

Modeling Loss Scenario Trees

4.2.5. Refining Safety/Security Constraints

4.3. Risk Assessment

4.4. Methods Comparison Analysis

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| ICV | Intelligent Connected Vehicle |

| CPS | Cyber-Physical System |

| ECU | Electronic Control Unit |

| CAN | Controller Area Network |

| HARA | Hazard Analysis and Risk Assessment |

| TARA | Threat Analysis and Risk Assessment |

| ASIL | Automotive Safety Integrity Level |

| SecRL | Security Risk Level |

| AEB | Autonomous Emergency Braking |

| STPA | System-Theoretic Process Analysis |

References

1. Carreras Guzman, N.H.; Wied, M.; Kozine, I.; Lundteigen, M.A. Conceptualizing the key features of cyber-physical systems in a multi-layered representation for safety and security analysis. Syst. Eng. 2020, 23, 189–210.

2. Pan, L.; Zheng, X.; Chen, H.; Luan, T.; Bootwala, H.; Batten, L. Cyber security attacks to modern vehicular systems. J. Inf. Secur. Appl. 2017, 36, 90–100.

3. Kim, K.; Kim, J.S.; Jeong, S.; Park, J.H.; Kim, H.K. Cybersecurity for autonomous vehicles: Review of attacks and defense. Comput. Secur. 2021, 103, 102150.

4. Zelle, D.; Plappert, C.; Rieke, R.; Scheuermann, D.; Krauß, C. ThreatSurf: A method for automated threat surface assessment in automotive cybersecurity engineering. Microprocess. Microsys. 2022, 90, 104461.

5. Auto, U. Upstream Security’s 2023 Global Automotive Cybersecurity Report. 2023. Available online: https://upstream.auto/reports/2023report/ (accessed on 23 March 2023).

6. Cai, Z.; Wang, A.; Zhang, W.; Gruffke, M.; Schweppe, H. 0-days & mitigations: Roadways to exploit and secure connected BMW cars. Black Hat USA 2019, 2019, 6.

7. Bohara, R.; Ross, M.; Rahlfs, S.; Ghatta, S. Cyber Security and Software Update management system for connected vehicles in compliance with UNECE WP. 29, R155 and R156. In Proceedings of the Software Engineering 2023 Workshops; Gesellschaft für Informatik: Bonn, Germany, 2023.

8. Mader, R.; Winkler, G.; Reindl, T.; Pandya, N. The Car’s Electronic Architecture in Motion: The Coming Transformation. In Proceedings of the 42nd International Vienna Motor Symposium, Vienna, Austria, 29–30 April 2021; pp. 28–30.

9. Nie, S.; Liu, L.; Du, Y. Free-fall: Hacking tesla from wireless to can bus. Black Hat USA 2017, 25, 16.

10. Yu, J.; Luo, F. A systematic approach for cybersecurity design of in-vehicle network systems with trade-off considerations. Secur. Commun. Netw. 2020, 2020, 7169720.

11. Qureshi, A.; Marvi, M.; Shamsi, J.A.; Aijaz, A. eUF: A framework for detecting over-the-air malicious updates in autonomous vehicles. J. King Saud-Univ. Comput. Inf. Sci. 2022, 34, 5456–5467.

12. Kumar, R.; Stoelinga, M. Quantitative security and safety analysis with attack-fault trees. In Proceedings of the 2017 IEEE 18th International Symposium on High Assurance Systems Engineering (HASE), Singapore, 12–14 January 2017; pp. 25–32.

13. Macher, G.; Sporer, H.; Berlach, R.; Armengaud, E.; Kreiner, C. SAHARA: A security-aware hazard and risk analysis method. In Proceedings of the 2015 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 9–13 March 2015; pp. 621–624.

14. Friedberg, I.; McLaughlin, K.; Smith, P.; Laverty, D.; Sezer, S. STPA-SafeSec: Safety and security analysis for cyber-physical systems. J. Inf. Secur. Appl. 2017, 34, 183–196.

15. Leveson, N.G.; Thomas, J.P. STPA Handbook; McMaster University: Hamilton, ON, Canada, 2018.

16. Young, W.; Leveson, N. Systems thinking for safety and security. In Proceedings of the 29th Annual Computer Security Applications Conference, New Orleans, LA, USA, 9–13 December 2013; pp. 1–8.

17. Schmittner, C.; Macher, G. Automotive cybersecurity standards-relation and overview. In Proceedings of the Computer Safety, Reliability, and Security: SAFECOMP 2019 Workshops, ASSURE, DECSoS, SASSUR, STRIVE, and WAISE, Turku, Finland, 10 September 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 153–165.

18. Kelechava, B. ISO 26262:2018; Road Vehicles Functional Safety Standards. International Organization for Standardization: Geneva, Switzerland, 2019.

19. ISO/SAE 21434; Road Vehicles: Cybersecurity Engineering. International Organization for Standardization: Geneva, Switzerland, 2021.

20. Kaneko, T.; Yamashita, S.; Takada, A.; Imai, M. Triad concurrent approach among functional safety, cybersecurity and SOTIF. J. Space Saf. Eng. 2023, 10, 505–508.

21. Dürrwang, J.; Braun, J.; Rumez, M.; Kriesten, R.; Pretschner, A. Enhancement of automotive penetration testing with threat analyses results. SAE Int. J. Transp. Cybersecur. Priv. 2018, 1, 91–112.

22. Agrawal, V.; Achuthan, B.; Ansari, A.; Tiwari, V.; Pandey, V. Threat/Hazard Analysis and Risk Assessment: A Framework to Align the Functional Safety and Security Process in Automotive Domain. SAE Int. J. Transp. Cybersecur. Priv. 2021, 4, 83–96.

23. United Nations Economic Commission for Europe. Uniform Provisions Concerning the Approval of Vehicles with Regards to Cyber Security and Cyber Security Management System. Regulation Addendum 154-UN Regulation No. 155. 2021. Available online: https://unece.org/sites/default/files/2021-03/R155e.pdf (accessed on 30 January 2022).

24. United Nations Economic Commission for Europe. Uniform Provisions Concerning the Approval of Vehicles with Regards to Software Update and Software Updates Management System. Regulation Addendum 155-UN Regulation No. 156. 2021. Available online: https://unece.org/sites/default/files/2021-03/R156e.pdf (accessed on 30 January 2022).

25. Benyahya, M.; Collen, A.; Nijdam, N.A. Analyses on standards and regulations for connected and automated vehicles: Identifying the certifications roadmap. Transp. Eng. 2023, 14, 100205.

26. Chen, L.; Jiao, J.; Zhao, T. A novel hazard analysis and risk assessment approach for road vehicle functional safety through integrating STPA with FMEA. Appl. Sci. 2020, 10, 7400.

27. Cui, J.; Zhang, B. VeRA: A simplified security risk analysis method for autonomous vehicles. IEEE Trans. Veh. Technol. 2020, 69, 10494–10505.

28. Cui, J.; Sabaliauskaite, G. US 2: An unified safety and security analysis method for autonomous vehicles. In Proceedings of the 2018 Future of Information and Communication Conference, Vancouver, Canada, 13–14 November 2018; Springer: Cham, Switzerland, 2018; pp. 600–611.

29. Sabaliauskaite, G.; Liew, L.S.; Cui, J. Integrating autonomous vehicle safety and security analysis using STPA method and the six-step model. Int. J. Adv. Secur. 2018, 11, 160–169.

30. Triginer, J.C.; Martin, H.; Winkler, B.; Marko, N. Integration of safety and cybersecurity analysis through combination of systems and reliability theory methods. In Proceedings of the Embedded Real-Time Systems, Toulouse, France, 29–31 January 2020.

31. Teng, S.H.; Ho, S.Y. Failure mode and effects analysis: An integrated approach for product design and process control. Int. J. Qual. Reliab. Manag. 1996, 13, 8–26.

32. Lee, W.S.; Grosh, D.L.; Tillman, F.A.; Lie, C.H. Fault tree analysis, methods, and applications—A review. IEEE Trans. Reliab. 1985, 34, 194–203.

33. Marhavilas, P.K.; Filippidis, M.; Koulinas, G.K.; Koulouriotis, D.E. The integration of HAZOP study with risk-matrix and the analytical-hierarchy process for identifying critical control-points and prioritizing risks in industry—A case study. J. Loss Prev. Process Ind. 2019, 62, 103981.

34. Bolbot, V.; Theotokatos, G.; Bujorianu, L.M.; Boulougouris, E.; Vassalos, D. Vulnerabilities and safety assurance methods in Cyber-Physical Systems: A comprehensive review. Reliab. Eng. Syst. Saf. 2019, 182, 179–193.

35. Mahajan, H.S.; Bradley, T.; Pasricha, S. Application of systems theoretic process analysis to a lane keeping assist system. Reliab. Eng. Syst. Saf. 2017, 167, 177–183.

36. Abdulkhaleq, A.; Wagner, S. Experiences with applying STPA to software-intensive systems in the automotive domain. In Proceedings of the 2013 STAMP Conference at MIT, Boston, MA, USA, 26–28 March 2013.

37. Sharma, S.; Flores, A.; Hobbs, C.; Stafford, J.; Fischmeister, S. Safety and security analysis of AEB for L4 autonomous vehicle using STPA. In Proceedings of the Workshop on Autonomous Systems Design (ASD 2019), Florence, Italy, 29 March 2019.

38. Ten, C.W.; Liu, C.C.; Govindarasu, M. Vulnerability assessment of cybersecurity for SCADA systems using attack trees. In Proceedings of the 2007 IEEE Power Engineering Society General Meeting, Tampa, FL, USA, 24–28 June 2007; pp. 1–8.

39. Karray, K.; Danger, J.L.; Guilley, S.; Abdelaziz Elaabid, M. Attack tree construction and its application to the connected vehicle. In Cyber-Physical Systems Security; Springer: Berlin/Heidelberg, Germany, 2018; pp. 175–190.

40. Henniger, O.; Apvrille, L.; Fuchs, A.; Roudier, Y.; Ruddle, A.; Weyl, B. Security requirements for automotive on-board networks. In Proceedings of the 2009 9th International Conference on Intelligent Transport Systems Telecommunications, Lille, France, 20–22 October 2009; pp. 641–646.

41. Boudguiga, A.; Boulanger, A.; Chiron, P.; Klaudel, W.; Labiod, H.; Seguy, J.C. RACE: Risk analysis for cooperative engines. In Proceedings of the 2015 7th International Conference on New Technologies, Mobility and Security (NTMS), Paris, France, 27–29 July 2015; pp. 1–5.

42. Monteuuis, J.P.; Boudguiga, A.; Zhang, J.; Labiod, H.; Servel, A.; Urien, P. Sara: Security automotive risk analysis method. In Proceedings of the 4th ACM Workshop on Cyber-Physical System Security, Incheon, Republic of Korea, 4–8 June 2018; pp. 3–14.

43. Sheik, A.T.; Maple, C.; Epiphaniou, G.; Dianati, M. Securing Cloud-Assisted Connected and Autonomous Vehicles: An In-Depth Threat Analysis and Risk Assessment. Sensors 2023, 24, 241.

44. Ghosh, S.; Zaboli, A.; Hong, J.; Kwon, J. An Integrated Approach of Threat Analysis for Autonomous Vehicles Perception System. IEEE Access 2023, 11, 14752–14777.

45. Sahay, R.; Estay, D.S.; Meng, W.; Jensen, C.D.; Barfod, M.B. A comparative risk analysis on CyberShip system with STPA-Sec, STRIDE and CORAS. Comput. Secur. 2023, 128, 103179.

46. Li, Y.; Liu, Q.; Zhuang, W.; Zhou, Y.; Cao, C.; Wu, J. Dynamic Heterogeneous Redundancy-Based Joint Safety and Security for Connected Automated Vehicles: Preliminary Simulation and Field Test Results. IEEE Veh. Technol. Mag. 2023, 18, 89–97.

47. Cui, J.; Sabaliauskaite, G.; Liew, L.S.; Zhou, F.; Zhang, B. Collaborative analysis framework of safety and security for autonomous vehicles. IEEE Access 2019, 7, 148672–148683.

48. De Souza, N.P.; César, C.d.A.C.; de Melo Bezerra, J.; Hirata, C.M. Extending STPA with STRIDE to identify cybersecurity loss scenarios. J. Inf. Secur. Appl. 2020, 55, 102620.

49. SAE International. 3061: Cybersecurity Guidebook for Cyber-Physical Vehicle Systems; Society for Automotive Engineers: Warrendale, PA, USA, 2016.

50. Cui, J.; Sabaliauskaite, G. On the Alignment of Safety and Security for Autonomous Vehicles; IARIA CYBER: Barcelona, Spain, 2017.

51. Sun, L.; Li, Y.F.; Zio, E. Comparison of the HAZOP, FMEA, FRAM, and STPA methods for the hazard analysis of automatic emergency brake systems. ASCE-ASME J. Risk Uncertain. Eng. Syst. Part Mech. Eng. 2022, 8, 031104.

52. Bolovinou, A.; Atmaca, U.I.; Ur-Rehman, O.; Wallraf, G.; Amditis, A. Tara+: Controllability-aware threat analysis and risk assessment for l3 automated driving systems. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 8–13.

53. France, M.E. Engineering for Humans: A New Extension to STPA. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2017.

54. Petit, J.; Stottelaar, B.; Feiri, M.; Kargl, F. Remote attacks on automated vehicles sensors: Experiments on camera and lidar. Black Hat Eur. 2015, 11, 995.

| Method | Type | Analysis Results (Qualitative/Quantitative) | Causal Factors for Loss Scenarios | Level of Analysis (Abstract/Specific) | Comply with Standard (Y/N) |

|---|---|---|---|---|---|

| STPAFT [26] | Safety | Qualitative and quantitative | Component failure (including unsafe and unexpected interactions between system components) | Abstract and Specific | N |

| VeRA [27] | Security | Qualitative and quantitative | Malicious attacks | Specific | Y |

| STPA-Sec [16] | Security | Qualitative | Component failure (including unsecure and unexpected interactions between system components) | Abstract | N |

| [28] | S&S | Qualitative and quantitative | Component failure and malicious attacks | Specific | Y |

| STPA with Six-Step Model [29] | S&S | Qualitative | Component failure and malicious attacks (including unsafe/unsecure and unexpected interactions between system components) | Specific | Y |

| STPA-SafeSec [14] | S&S | Qualitative | Component failure and malicious attacks (including unsafe/unsecure and unexpected interactions between system components) | Abstract and Specific | N |

| Unified safety and cybersecurity analysis method [30] | S&S | Qualitative and quantitative | Component failure and malicious attacks (including unsafe/unsecure and unexpected interactions between system components) | Abstract | Y |

| | ISO 26262 (HARA) | ISO/SAE 21434 (TARA) | Our Method |
|---|---|---|---|
| Phase | Concept phase | Concept phase | Apply at any phase of system design, including the concept phase |
| Characteristics | Develop clear safety goals and corresponding safety requirements; continuous refinement of safety requirements during the vehicle development cycle | Develop clear security goals and corresponding security requirements; continuous refinement of security requirements during the vehicle development cycle | Continuously refine safety/security constraints in subsequent steps after determining them |
| Risk assessment | Determine ASIL based on severity, exposure, and controllability | Determine security level based on impact rating and attack feasibility | Risks are assessed and mapped to ASIL level and security level using the same risk matrix |
| Results | Categorize hazard events according to ASIL levels while identifying safety constraints and safety requirements to avoid unreasonable risks | Identify potential threats to vehicles and their risk levels to clarify cybersecurity objectives and generate cybersecurity requirements | Identify system-related hazards or threats, determine their causal scenarios, and develop safety and security constraints to eliminate, mitigate, or control them |

| Factor | Level | Value |
|---|---|---|
| Elapsed time | ≤1 day | 0 |
| | ≤1 week | 1 |
| | ≤1 month | 4 |
| | ≤3 months | 10 |
| | ≤6 months | 17 |
| | >6 months | 19 |
| | not practical | ∞ |
| Expertise | Layman | 0 |
| | Proficient | 3 |
| | Expert | 6 |
| | Multiple experts | 8 |
| Knowledge of system | Public | 0 |
| | Restricted | 3 |
| | Sensitive | 7 |
| | Critical | 11 |
| Window of opportunity | Unnecessary/unlimited | 0 |
| | Easy | 1 |
| | Moderate | 4 |
| | Difficult | 10 |
| | None | ∞ |
| Equipment | Standard | 0 |
| | Specialized | 4 |
| | Bespoke | 7 |
| | Multiple bespoke | 9 |

| Values | Attack Potential Required to Identify and Exploit Attack Path | Attack Feasibility (Value) | Attack Feasibility (Level) |
|---|---|---|---|
| 0–9 | Basic | 5 | High |
| 10–13 | Enhanced-Basic | 4 | High |
| 14–19 | Moderate | 3 | Medium |
| 20–24 | High | 2 | Low |
| ≥25 | Beyond High | 1 | Very low |

| C0 | C1 | C2 | C3 |

|---|---|---|---|

| Controllable in general | Simply controllable | Normally controllable | Difficult to control or uncontrollable |

| Safety (S) | Financial (F) | Operational (O) | Privacy (P) |
|---|---|---|---|
| 0 | 0 | 0 | 0 |
| 1 | 1 | 1 | 1 |
| 2 | 2 | 2 | 2 |
| 3 | 3 | 3 | 3 |

| Controllability | Severity | Attack Feasibility (AF) = 1 | AF = 2 | AF = 3 | AF = 4 | AF = 5 |
|---|---|---|---|---|---|---|
| C = 0 | S = 0 | 0 | 0 | 1 | 2 | 3 |
| | S = 1 | 0 | 1 | 2 | 3 | 4 |
| | S = 2 | 1 | 2 | 3 | 4 | 5 |
| | S = 3 | 2 | 3 | 4 | 5 | 6 |
| C = 1 | S = 0 | 0 | 1 | 2 | 3 | 4 |
| | S = 1 | 1 | 2 | 3 | 4 | 5 |
| | S = 2 | 2 | 3 | 4 | 5 | 6 |
| | S = 3 | 3 | 4 | 5 | 6 | 7 |
| C = 2 | S = 0 | 1 | 2 | 3 | 4 | 5 |
| | S = 1 | 2 | 3 | 4 | 5 | 6 |
| | S = 2 | 3 | 4 | 5 | 6 | 7 |
| | S = 3 | 4 | 5 | 6 | 7 | 7+ |
| C = 3 | S = 0 | 2 | 3 | 4 | 5 | 6 |
| | S = 1 | 3 | 4 | 5 | 6 | 7 |
| | S = 2 | 4 | 5 | 6 | 7 | 7+ |
| | S = 3 | 5 | 6 | 7 | 7+ | 7+ |

| Unified Risk Level | ASIL | Security Risk Level |

|---|---|---|

| 1 | QM | 1 |

| 2 | A | 2 |

| 3 | A | 2 |

| 4 | B | 3 |

| 5 | B | 3 |

| 6 | C | 4 |

| 7 | D | 5 |

| 7+ | Risk deemed beyond normally acceptable levels | |

| Loss | Description |

|---|---|

| L-1 | Damage to the safety of persons |

| L-2 | Vehicle damage |

| L-3 | Sensitive data leakage |

| Hazard | Description | Constraints |
|---|---|---|
| H-1 | Vehicle did not maintain a safe distance from surrounding vehicles or obstacles during driving [L-1, L-2] | SC-1: Vehicle must maintain a safe distance from surrounding vehicles or obstacles during the driving process |
| H-2 | Damage to the physical integrity of the vehicle [L-1, L-2] | SC-2: Vehicle must maintain physical integrity |
| H-3 | Vehicle off the planned route [L-1, L-2] | SC-3: Vehicle must keep the planned route |
| H-4 | Vehicle unable to perform its functions [L-1, L-2] | SC-4: Vehicle must perform its functions properly |
| T-1 | Vehicle is subject to unauthorized remote access [L-1, L-2, L-3] | SC-5: Remote access to vehicles must be authorized |

| Control Action | Unsafe Control Action: Not Providing | Providing | Provided but wrong timing | Provided but incorrect duration |
|---|---|---|---|---|
| Brake | UCA-Safe-1: The vehicle did not provide braking command when the distance to the obstacle is less than the safe distance [H-1, H-2] | UCA-Safe-2: The vehicle provides braking commands when the distance to the obstacle is greater than the safe distance [H-1, H-2] | UCA-Safe-3: The vehicle provides braking command, but too early [H-1, H-2]; UCA-Safe-4: The vehicle provides braking command, but too late [H-1, H-2] | UCA-Safe-5: The vehicle provides braking command, but the duration is too long [H-1, H-2]; UCA-Safe-6: The vehicle provides braking command, but stops too early [H-1, H-2] |

| Control Action | Unsecure Control Action: Not Providing | Providing | Provided but wrong timing | Provided but incorrect duration |
|---|---|---|---|---|
| Update | UCA-Sec-1: OEM Server does not update firmware for vulnerable ECUs [H-4, T-1] | UCA-Sec-2: OEM Server updates ECU firmware, leading to new vulnerability [H-4, T-1] | - | UCA-Sec-3: OEM Server firmware update process stopped too soon [H-4, T-1] |

| Control Action | Unsafe Control Action: Not Providing | Providing | Provided but wrong timing | Provided but incorrect duration |
|---|---|---|---|---|
| Brake | UCA-Safe-7: The human driver does not provide braking action when the vehicle is less than a safe distance from an obstacle [H-1, H-2] | UCA-Safe-8: The human driver provides braking action, but not enough [H-1, H-2] | UCA-Safe-9: The human driver provides braking action too late, when the distance to the obstacle is less than the safe distance [H-1, H-2] | UCA-Safe-10: The human driver stops the braking action sooner than necessary when the current speed is still higher than the threshold value [H-1, H-2] |

| UCA | Q1 | Q2 | Q3 |
|---|---|---|---|
| UCA-Safe-7: The human driver does not provide braking action when the vehicle is less than a safe distance from an obstacle [H-1, H-2] | 1. The human driver believes that, with ADAS activated, he or she does not need to perform emergency braking in most situations. | 2a. The human driver incorrectly believes that the ADAS system is operating normally and does not require manual braking. | 3. The HMI displays that the ADAS is operating normally, but in reality, the HMI display is incorrect or experiencing delayed status updates. |
| | | 2b. The human driver knows that the AEB subsystem of ADAS will provide an emergency braking command. | |
| | | 2c. The human driver fails to notice an obstacle in front of the vehicle. | |

| UCA | System-Level Constraints | Safety/Security Constraints |
|---|---|---|
| UCA-Safe-2: The vehicle provides a braking command when the distance to the obstacle is greater than the safe distance [H-1, H-2] | SC-1: Vehicle must maintain a safe distance from surrounding vehicles or obstacles during the driving process [H-1] | SafeC-1.1: The vehicle’s sensors (e.g., LiDAR, camera) need to be able to accurately detect and measure the position and speed of surrounding vehicles and obstacles |
| | | SafeC-1.2: Redundant design of sensors |
| | | SafeC-1.3: Use high-performance information fusion |
| | | SafeC-1.4: Reduce the transmission delay of data between nodes |
| | | SafeC-1.5: Reduce the computational latency of information received by the T-BOX |
| | | SafeC-1.6: Reduce latency in V2V communications |
| | | SecC-1.1: Ensure the integrity of sensor (e.g., LiDAR, camera) data to prevent data tampering or falsification |
| | | SecC-1.2: Encryption and authentication of communications between vehicles to ensure their protection from malicious attacks |
| | | SecC-1.3: Equipped with intrusion detection systems to defend and protect against malicious attacks |
| | | SecC-1.4: Force authentication on each node (ECU) |
| | SC-2: Vehicle must maintain physical integrity [H-2] | SafeC-2.1: Regular inspection and maintenance of vehicles to ensure that they are not structurally damaged or corroded |
| | | SafeC-2.2: Restrictions on the operation of vehicles in specific situations |

| | SafeC-1.4 | SafeC-1.5 | SafeC-1.6 |

|---|---|---|---|

| SecC-1.1 | ✓ | ||

| SecC-1.2 | ✓ | ✓ | |

| SecC-1.3 | ✓ | ✓ | |

| SecC-1.4 | ✓ |

| Threat Description | Attack Path | ET | K | Ex | W | Eq | Attack Feasibility Rating |
|---|---|---|---|---|---|---|---|
| Spoofing attack of BCM | 1. Connect to the vehicle’s Wi-Fi or Bluetooth via cell phone | 1 | 7 | 6 | 1 | 0 | 3 |
| | 2. Gain root access to the T-BOX by cracking weak passwords or exploiting kernel vulnerabilities | | | | | | |
| | 3. Reverse engineer the T-BOX firmware | | | | | | |
| | 4. The attacker forges T-BOX commands and forwards malicious instructions to other DCUs through the gateway | | | | | | |
| | 5. DCU sends malicious commands to the BCM | | | | | | |
| DoS attack of BCM | 1. The attacker gains access to the in-vehicle network by connecting to the IVI through professional tools | 1 | 7 | 6 | 1 | 0 | 3 |
| | 2. The attacker transmits malicious control signals through the compromised IVI | | | | | | |
| | 3. The attacker compromises the central gateway | | | | | | |
| | 4. The gateway forwards malicious signals to DCUs | | | | | | |
| | 5. The attacker floods the bus connecting the DCU to the BCM with a large number of malicious signals | | | | | | |
| Spoofing attack of LiDAR | 1. Attacker receives optical signals using transceiver A | 1 | 3 | 2 | 4 | 4 | 3 |
| | 2. Transceiver B receives the voltage signal from A and sends an optical signal to the LiDAR | | | | | | |
| DoS attack of LiDAR | 1. Attackers use oversaturation attacks against LiDAR | 1 | 3 | 6 | 4 | 4 | 3 |
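The attack feasibility ratings in this table can be reproduced from the five factor scores with a short calculation. The following is a minimal sketch, not the authors' implementation. It assumes that ET, K, Ex, W, and Eq stand for elapsed time, knowledge of the item, specialist expertise, window of opportunity, and equipment; that the scores are summed and banded with the attack-potential thresholds commonly cited from ISO/SAE 21434 Annex G (0 to 13 High, 14 to 19 Medium, 20 to 24 Low, 25 and above Very Low); and that the numeric scale runs from 4 (High) down to 1 (Very Low). The names `AttackPotential`, `BANDS`, and `feasibility_rating` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttackPotential:
    """Five attack-potential factors, named after the table's column headers."""
    et: int  # ET: assumed to mean elapsed time
    k: int   # K: assumed to mean knowledge of the item
    ex: int  # Ex: assumed to mean specialist expertise
    w: int   # W: assumed to mean window of opportunity
    eq: int  # Eq: assumed to mean equipment

    def total(self) -> int:
        """Sum of the five factor scores."""
        return self.et + self.k + self.ex + self.w + self.eq


# (upper bound on the summed score, label, numeric rating).
# The bounds are assumed from ISO/SAE 21434 Annex G; the numeric scale is an
# assumption chosen to agree with the ratings listed in the table.
BANDS = [(13, "High", 4), (19, "Medium", 3), (24, "Low", 2)]


def feasibility_rating(ap: AttackPotential) -> tuple[str, int]:
    """Map a summed attack potential to a (label, numeric rating) pair."""
    total = ap.total()
    for upper, label, rating in BANDS:
        if total <= upper:
            return label, rating
    return "Very Low", 1


# Factor scores copied from the table rows.
threats = {
    "Spoofing attack of BCM": AttackPotential(1, 7, 6, 1, 0),
    "DoS attack of BCM": AttackPotential(1, 7, 6, 1, 0),
    "Spoofing attack of LiDAR": AttackPotential(1, 3, 2, 4, 4),
    "DoS attack of LiDAR": AttackPotential(1, 3, 6, 4, 4),
}

for name, ap in threats.items():
    label, rating = feasibility_rating(ap)
    print(f"{name}: total={ap.total()}, feasibility={label} ({rating})")
```

Under these assumptions the four threats sum to 15, 15, 14, and 18, which all fall in the Medium band and match the rating of 3 listed for every row.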

| UCA | Impact Rating | Controllability | Component | RL | ASIL | SecRL |
|---|---|---|---|---|---|---|
| UCA-Safe-2: The vehicle provides a braking command when the distance to the obstacle is greater than the safe distance [H-1, H-2] | [2, 2, 2, 0] | 3 | BCM | 6 | C | 4 |
| | | | LiDAR | 6 | C | 4 |

| Attribute | FMEA | Attack Tree | STPA with Six-Step Model | Our Method | |

|---|---|---|---|---|---|

| Integrates human interaction | N | N | N | Y | Y |

| Identify hazards and threats | Hazards | Threats | Hazards and threats | Hazards and threats | Hazards and threats |

| Qualitative or quantitative | Qualitative and quantitative | Qualitative and quantitative | Qualitative and quantitative | Qualitative | Qualitative and quantitative |

| Hazard (threat) causal factors | Component failure | Malicious attack | Component failure | Component failure, unsafe/unsecure interaction between components | Component failure, unsafe/unsecure interaction between components |

| Perspective of analysis | Function | Component (function) | Component | Control action | Control action |

| Threat model | N | N | N | N | STRIDE |

| Complexity | Low | Low | Low | High | High |

| Model of failure (attack) path | N | Attack tree | N | Failure tree and attack tree | Loss Scenario Tree (failure and attack path) |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Y.; Liu, W.; Liu, Q.; Zheng, X.; Sun, K.; Huang, C. Complying with ISO 26262 and ISO/SAE 21434: A Safety and Security Co-Analysis Method for Intelligent Connected Vehicle. Sensors 2024, 24, 1848. https://doi.org/10.3390/s24061848
