New Scheme of MEMS-Based LiDAR by Synchronized Dual-Laser Beams for Detection Range Enhancement

Abstract

1. Introduction

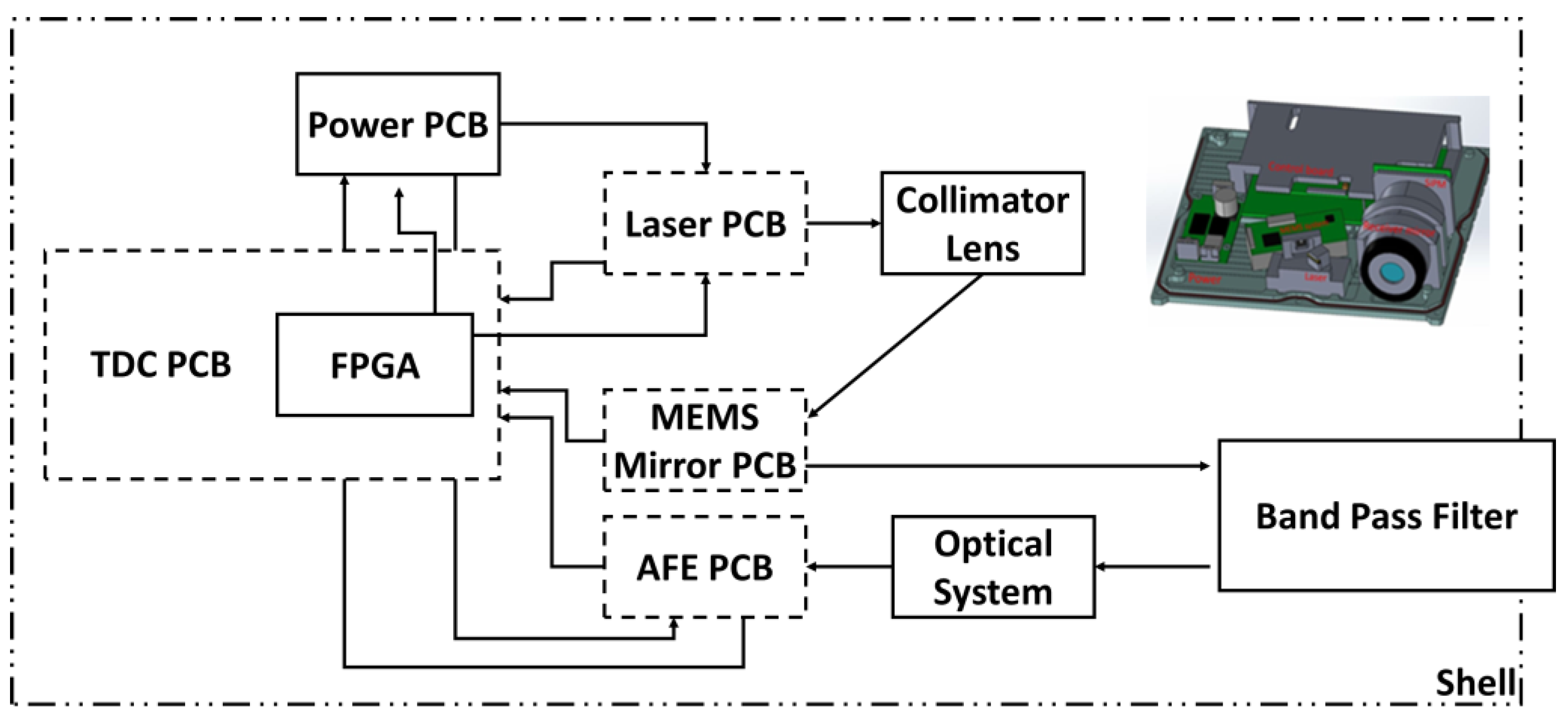

2. Structure of MEMS-Based LiDAR

2.1. Scheme of MEMS-Based LiDAR

2.2. Theory of Dual-Laser Sources to Enhance the Detection Distance

3. Measurements and Results

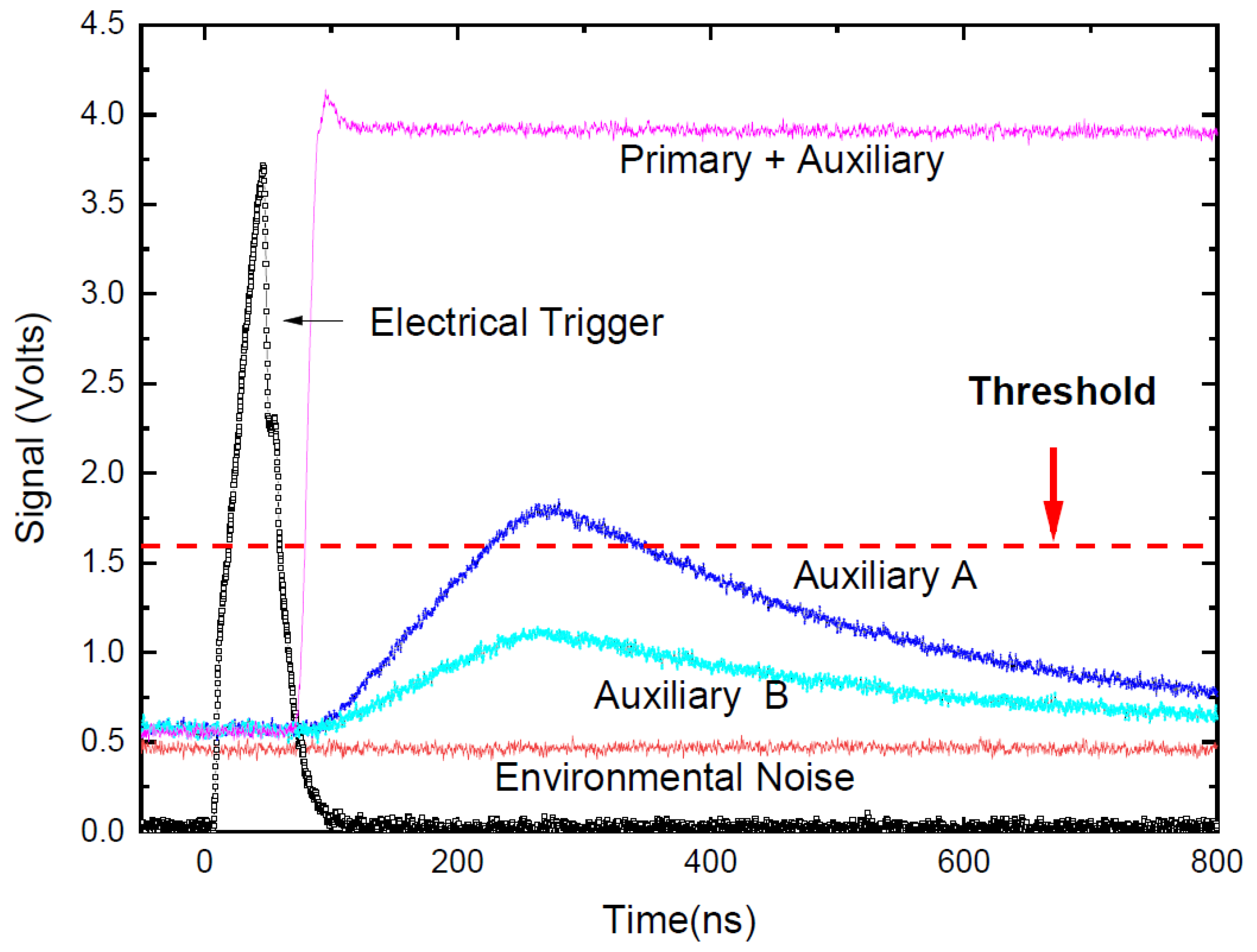

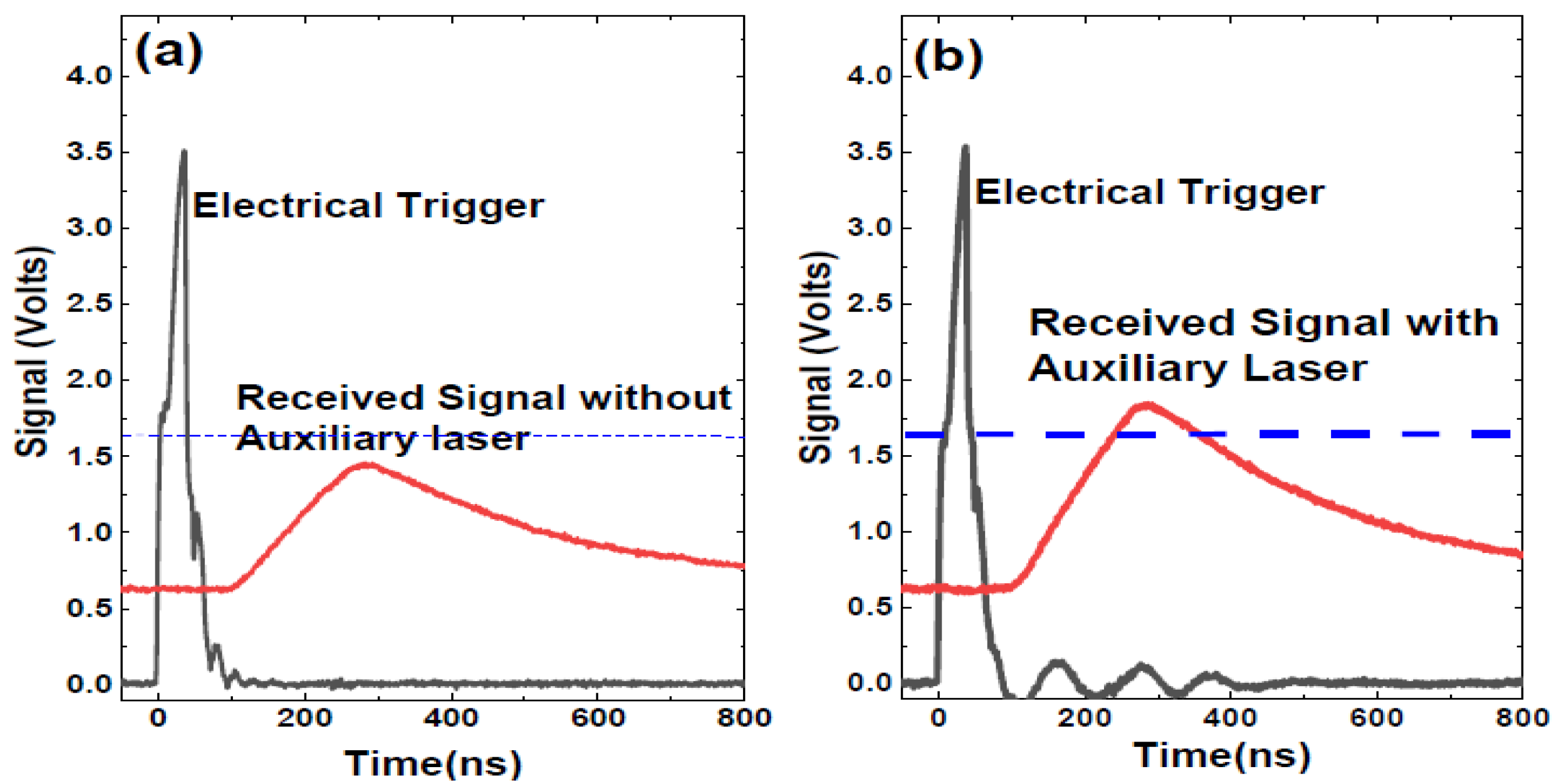

3.1. Measurement of the Verification Architecture for the Synchronized Dual-Laser Beams

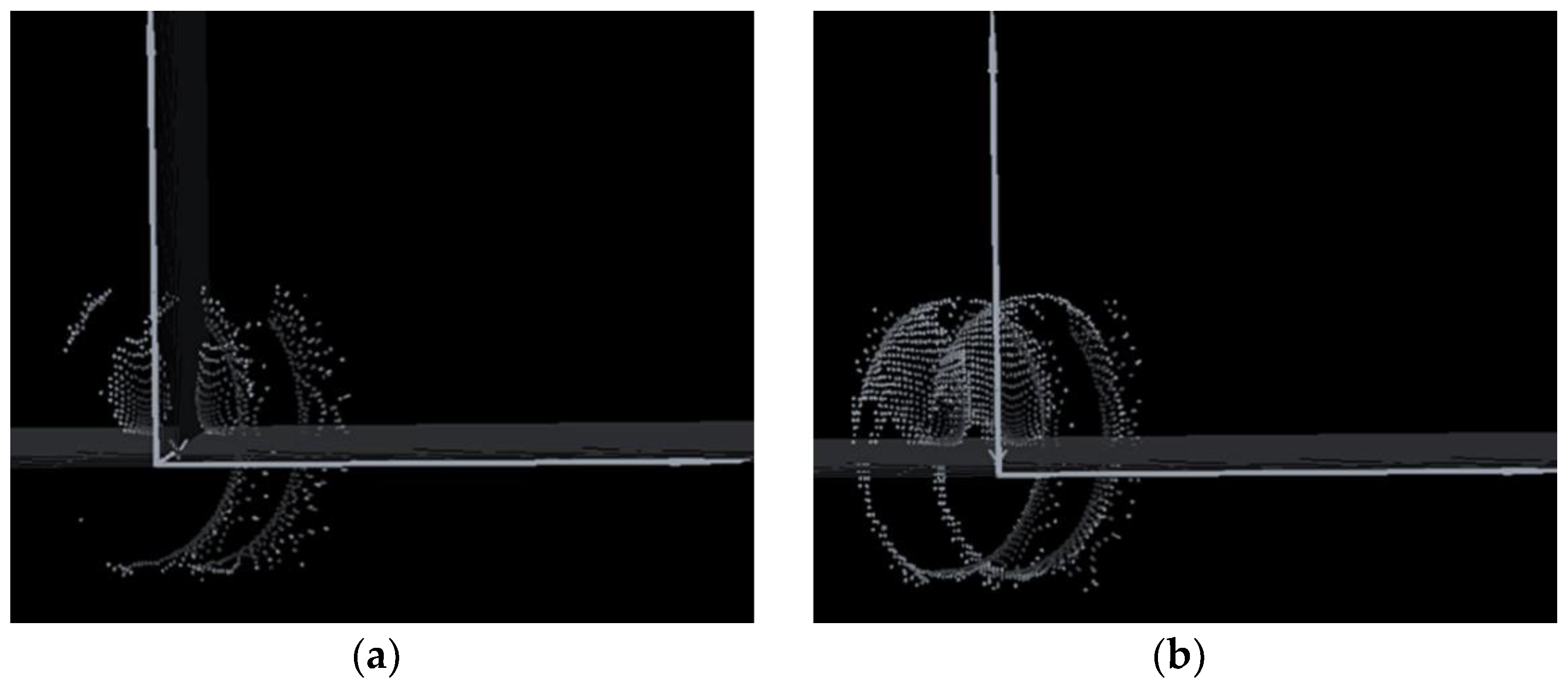

3.2. Measurement of MEMS-Based LiDAR

3.3. Measurement of FOV, Angular Resolution, and Maximum Detected Distance

3.4. Results and Discussions

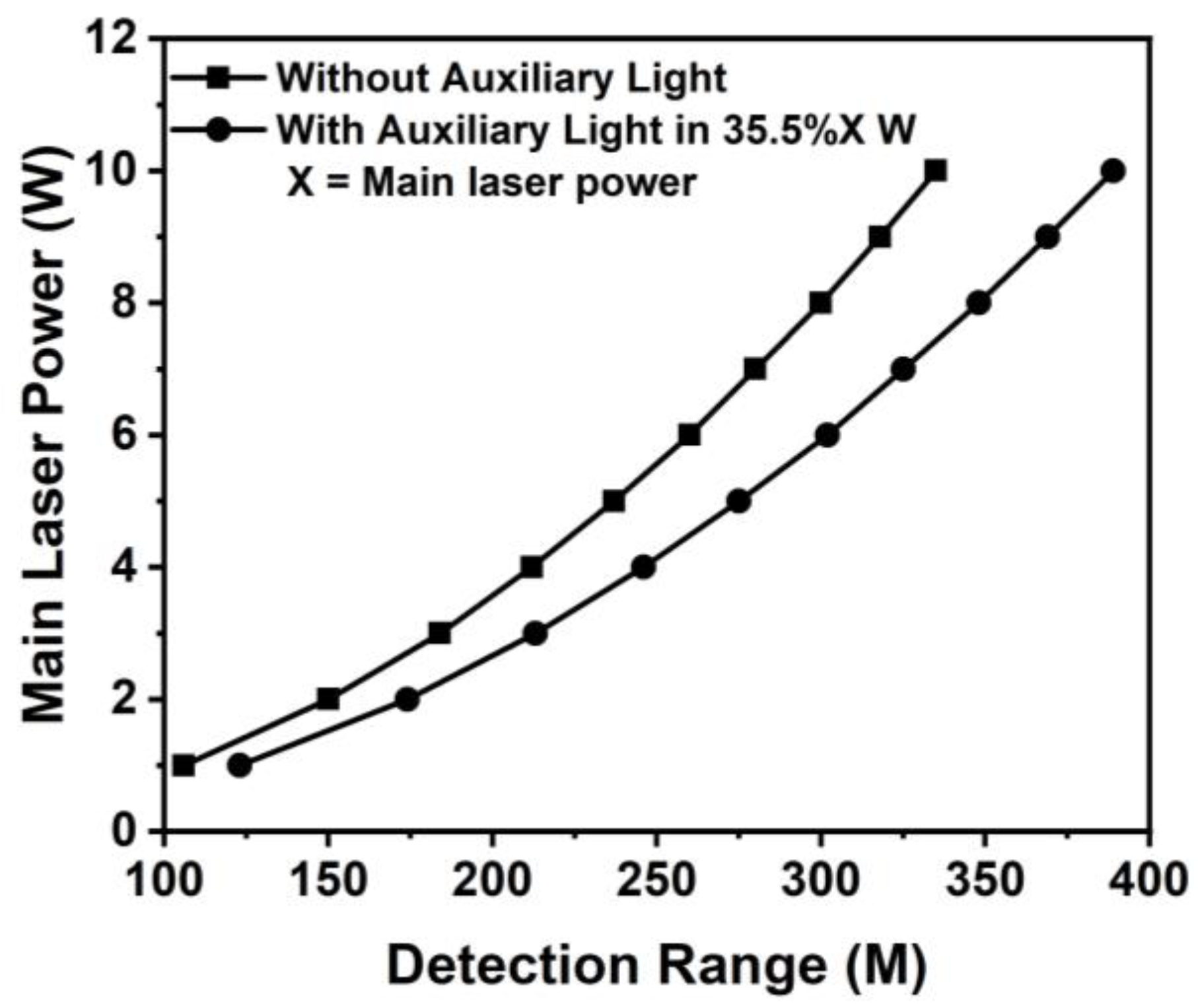

3.5. Simulation Results

- Price: A high-power 1550 nm laser chip with an output power above 4 W is not readily available commercially, and such a chip costs more than the standard model.

- Power Consumption: With the auxiliary laser, the LiDAR's overall output power is lower, and the main laser in general consumes less power. However, because the auxiliary laser is pumped directly into the APD at very close range, very little of this advantage is lost.

- Dimension: A high-power laser module is larger than a low-power one, which affects the module structure and the parameters of the other electrical components, so the volume is larger than that of a low-power LiDAR. The auxiliary laser system, in turn, is an additional light source attached to the existing structure, with its light directed from the outside to the inside, so it also requires extra room. In general, there is little difference between the two volumes.

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, D.; Watkins, C.; Xie, H. MEMS Mirrors for LiDAR: A Review. Micromachines 2020, 11, 456.

- Yoo, H.W.; Druml, N.; Brunner, D.; Schwarzl, C.; Thurner, T.; Hennecke, M.; Schitter, G. MEMS-based lidar for autonomous driving. Elektrotech Informat. 2018, 135, 408–415.

- Donati, S.; Martini, G.; Pei, Z.; Cheng, W.H. Analysis of Timing Errors in Time-of-Flight LiDAR Using APDs and SPADs Receivers. IEEE J. Quantum Electron. 2021, 57, 1–8.

- Ito, K.; Niclass, C.; Aoyagi, I.; Matsubara, H.; Soga, M.; Kato, S.; Maeda, M.; Kagami, M. System Design and Performance Characterization of a MEMS-Based Laser Scanning Time-of-Flight Sensor Based on a 256 x 64-pixel Single-Photon Imager. IEEE Photon. J. 2013, 5, 6800114.

- Twiss, R.Q.; Little, A.G. The Detection of Time-correlated Photons by a Coincidence Counter. Aust. J. Phys. 1959, 12, 77–93.

- Unternährer, M.; Bessire, B.; Gasparini, L.; Stoppa, D.; Stefanov, A. Coincidence detection of spatially correlated photon pairs with a monolithic time-resolving detector array. Opt. Express 2016, 24, 28829–28841.

- Benzi, R.; Sutera, A.; Vulpiani, A. The mechanism of stochastic resonance. J. Phys. A Math. Theor. 1981, 14, L453–L457.

- Hibbs, A.D.; Singsaas, A.L.; Jacobs, E.W.; Bulsara, A.R.; Bekkedahl, J.J.; Moss, F. Stochastic resonance in a superconducting loop with a Josephson junction. J. Appl. Phys. 1995, 77, 2582–2590.

- Collins, J.J.; Imhoff, T.T.; Grigg, P. Noise-enhanced tactile sensation. Nature 1996, 383, 770.

- Dylov, D.V.; Fleischer, J.W. Nonlinear self-filtering of noisy images via dynamical stochastic resonance. Nat. Photonics 2010, 4, 323–328.

- Lu, G.N.; Pittet, P.; Carrillo, G.; El Mourabit, A. On-chip synchronous detection for CMOS photodetector. In Proceedings of the 9th International Conference on Electronics, Circuits and Systems, Dubrovnik, Croatia, 15 September 2002.

- Dodda, A.; Oberoi, A.; Sebastian, A.; Choudhury, T.H.; Redwing, J.M.; Das, S. Stochastic resonance in MoS2 photodetector. Nat. Commun. 2020, 11, 4406.

- Warren, M.E.; Podva, D.; Dacha, P.; Block, M.K.; Helms, C.J.; Maynard, J.; Carson, R.F. Low-divergence high-power VCSEL arrays for lidar application. In Proceedings of the SPIE Optics + Photonics 2018, San Francisco, CA, USA, 19 August 2018.

- Artilux Research Laboratory. Artilux Phoenix GeSi APD Technology; White Paper; Artilux Inc.: Hsinchu County, Taiwan, 2022.

- Lee, X.; Wang, C.; Luo, Z.; Li, S. Optical design of a new folding scanning system in MEMS-based lidar. Opt. Laser. Technol. 2020, 125, 106013.

- Tian, Y.; Ding, W.; Feng, X.; Lin, Z.; Zhao, Y. High signal-noise ratio avalanche photodiodes with dynamic biasing technology for laser radar applications. Opt. Express 2022, 30, 26484–26491.

- Wagner, W.; Ullrich, A.; Ducic, V.; Melzer, T.; Studnicka, N. Gaussian decomposition and calibration of a novel small-footprint full-waveform digitising airborne laser scanner. ISPRS J. Photogramm. 2006, 60, 100–112.

| Item | Model | Advantage | Shortcoming |
|---|---|---|---|
| 1 [11] | The dual-light source effect causes a stochastic resonance system. | A single light source splits into two and adjusts itself to enhance the intensity of the light signal. | A larger volume reduces the detection distance. |
| 2 [12] | A circuit module boosts the signal that is received. | Able to boost weak signals to the sensor’s lowest possible reception level. | Synchronous control will result in a momentary black space. |
| 3 [13] | Increase the power of vertical-cavity surface-emitting laser (VCSEL). | Using light source array mode to boost optical power. | The cost is too high; light source module temperature is too high. |
| 4 [14] | Using high-sensitivity avalanche photodiode (APD). | Developing low excess noise APDs with new material (GeSi). | In development. |
| 5 [15] | Improve the optical lens modules’ matching. | Less of an effect on the LiDAR’s general structure. | Extremely exacting in terms of lens design and specs. |

| Parameter | Measurement Data (1) | Measurement Data (2) | Measurement Data (3) | Specification of MEMS |
|---|---|---|---|---|
| Measurement distance (cm) | 280 | 380 | 480 | 380 |
| Field length (cm) | 240 | 320 | 400 | NA |
| Field width (cm) | 132 | 163 | 218 | NA |
| Horizontal FOV (°) | 46.2 | 45.6 | 45.2 | 45 |
| Vertical FOV (°) | 26.5 | 24.2 | 24.4 | 25 |
| Resolution for object | 36 × 76 | 27 × 61 | 22 × 47 | NA |
| Resolution | 392 × 218 | 393 × 216 | 400 × 223 | NA |
| Angular resolution (H °) × (V °) | 0.1 × 0.1 | 0.1 × 0.1 | 0.1 × 0.1 | NA |
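
The relation between the measured field size, the measurement distance, and the FOV in the table above can be sketched as follows. This is a minimal illustration that assumes a flat target centered on the optical axis, so that the full angle is 2·atan((extent/2)/distance); the source does not state that the authors used this formula, and the function names `fov_deg` and `angular_resolution_deg` are hypothetical. Under this assumption the computed angles land close to most of the tabulated values.

```python
import math


def fov_deg(field_extent_cm: float, distance_cm: float) -> float:
    """Full angle (degrees) subtended by a flat, centered field at a given distance."""
    half_angle = math.atan((field_extent_cm / 2.0) / distance_cm)
    return math.degrees(2.0 * half_angle)


def angular_resolution_deg(fov: float, pixels: int) -> float:
    """Average angle per pixel along one axis (assumes uniform sampling)."""
    return fov / pixels


# (distance, field length, field width) in cm, copied from the table above.
MEASUREMENTS = [(280, 240, 132), (380, 320, 163), (480, 400, 218)]

for distance, length, width in MEASUREMENTS:
    h = fov_deg(length, distance)
    v = fov_deg(width, distance)
    print(f"{distance} cm: horizontal {h:.1f} deg, vertical {v:.1f} deg")
```

Dividing a FOV by the pixel count along the same axis, for example `angular_resolution_deg(45.6, 393)`, gives the per-pixel angle that the table rounds to 0.1°.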

| Output Power of the Auxiliary Laser (mW) | Maximum Detection Range (m) | Enhancement Rate (%) |
|---|---|---|
| 0 | 107 | X |
| 0.1 | 113 | 6.7 |
| 53.6 | 115 | 8.5 |
| 204 | 116 | 9.5 |
| 355 | 124 | 16 |
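
A relative range gain such as the enhancement rate in the table above is conventionally computed against the baseline without the auxiliary laser. The sketch below assumes the definition (R − R0)/R0 × 100, with R0 the 0 mW range; the source does not give the formula, and the name `enhancement_rate_percent` is hypothetical. Values computed this way from the rounded ranges need not match the tabulated rates exactly.

```python
def enhancement_rate_percent(range_m: float, baseline_m: float) -> float:
    """Relative gain in detection range over the baseline, in percent."""
    return (range_m - baseline_m) / baseline_m * 100.0


# Baseline: maximum detection range with the auxiliary laser off (0 mW).
BASELINE_M = 107.0

# Auxiliary output power (mW) -> maximum detection range (m), from the table above.
RANGES_BY_POWER_MW = {0.1: 113.0, 53.6: 115.0, 204.0: 116.0, 355.0: 124.0}

for power_mw, range_m in RANGES_BY_POWER_MW.items():
    rate = enhancement_rate_percent(range_m, BASELINE_M)
    print(f"{power_mw} mW: {range_m:.0f} m, gain {rate:.1f} %")
```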

| | Price | Power Consumption | Dimension |
|---|---|---|---|
| Without Auxiliary Laser | High | High | Smaller |
| With Auxiliary Laser | Low | Low | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, C.-W.; Liu, C.-N.; Mao, S.-C.; Tsai, W.-S.; Pei, Z.; Tu, C.W.; Cheng, W.-H. New Scheme of MEMS-Based LiDAR by Synchronized Dual-Laser Beams for Detection Range Enhancement. Sensors 2024, 24, 1897. https://doi.org/10.3390/s24061897
