Towards a Human-Centric Digital Twin for Human–Machine Collaboration: A Review on Enabling Technologies and Methods

Abstract

1. Introduction

2. Background

2.1. Industry 5.0

2.2. Human–Machine Collaboration

2.3. Digital Twin

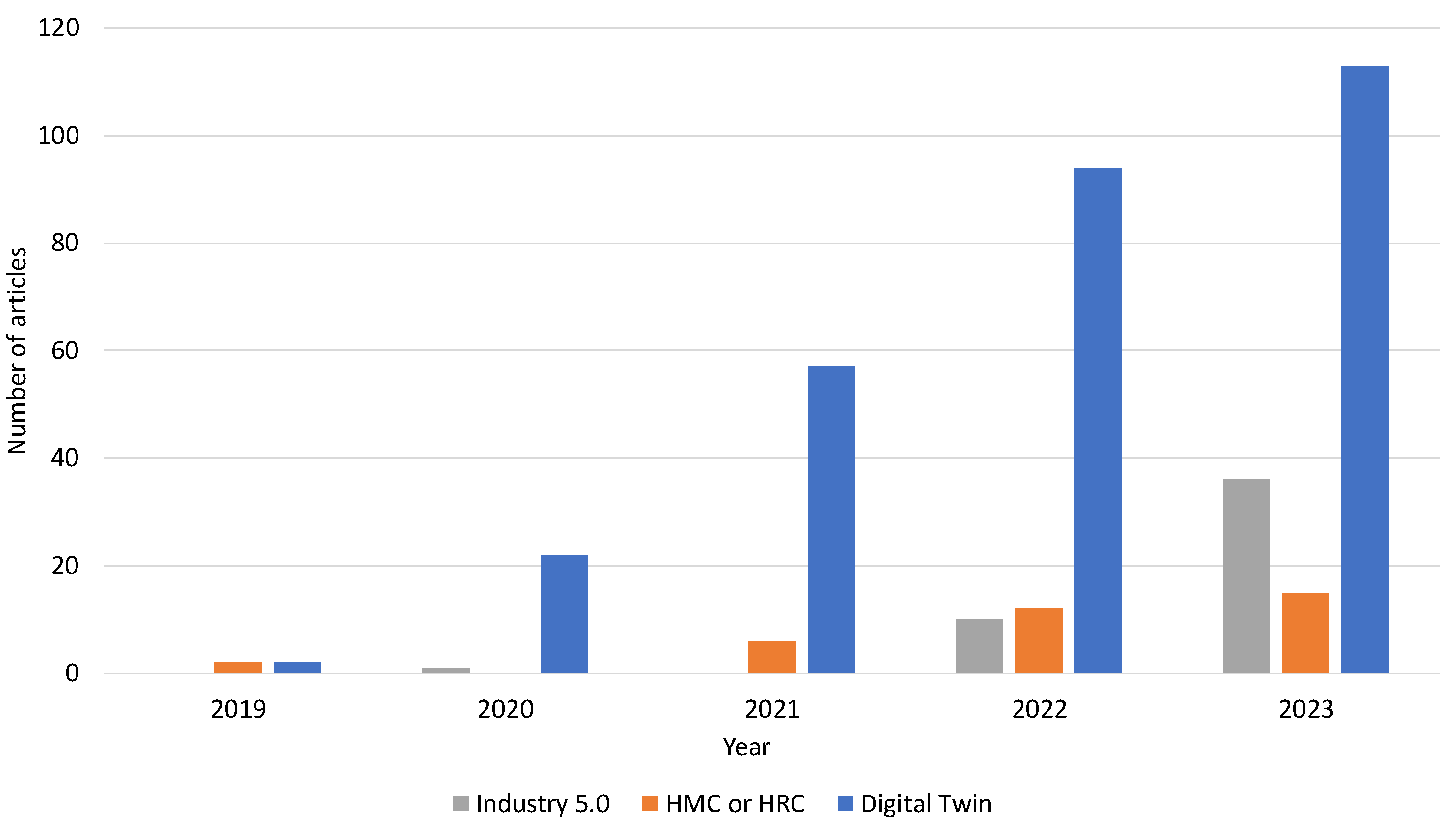

3. Review Methodology

4. Enabling Technologies and Methods

4.1. Digital Twins and Simulations

4.2. Artificial Intelligence

| AI Method | Specific Technique | Task | Application Problem |
|---|---|---|---|
| Traditional methods | SVM [50,72] | Classification [50]; Object recognition [72] | Human skill level analysis [50]; Soft-robot tactile sensor feedback [72] |
| Heuristic methods | Search algorithms [59] | Decision making [59] | Assembly line reconfiguration and planning [59] |
| Neural networks | FFN [57]; RNN [73] | Object recognition [57]; Sequential data handling [73] | Human safety [57]; Dynamic changes’ prediction [73] |
| Deep learning | 1D-CNN [74]; Mask R-CNN [75,76]; CNN [53,58,60,77,78]; PVNet Parallel network [79]; PointNet [79]; SAE [80] | Detection or recognition [53,60,74,75,76,79]; Classification [77]; Human action and motion recognition [78]; Pose estimation [58,79]; Feature extraction [73]; Anomaly detection [80] | Human safety [60,75,76,78,79,80]; Ergonomics [60]; Human action and intention understanding [60,77]; Efficiency [78,79]; Position estimation [53]; Decision making [73]; Object manipulation [74] |
| Reinforcement learning | Model-free RL [51]; TRPO [61]; PPO [61]; DDPG [61]; Q-learning [63] | Robot motion planning [51]; Robot learning [61]; Dynamic programming [63] | Training [51]; Teleoperation [51]; Robot skill learning [61]; Assembly planning optimisation [63] |
| Deep reinforcement learning | Deep Q-learning [81]; PPO [52]; SAC [52]; DDPG [64]; D-DDPG [73] | Task scheduling [81]; Decision making [81]; Training [52]; Humanoid robot arm control and motion planning Optimisation [64,73] | Smart manufacturing [81]; Optimisation [52]; Robot learning [64]; Enhancing efficiency and adaptability [73] |
| Generative AI | motion GAN [82] | Human motion prediction [82] | Human action prediction [82] |
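
The table above lists Q-learning [63] among the reinforcement learning techniques. For readers unfamiliar with it, the following is a minimal sketch of the tabular Q-learning update on a hypothetical toy problem: a five-state chain in which the agent must advance to the last state. The environment, the constants `ALPHA`, `GAMMA`, `EPSILON`, and the helper names `step`, `train`, and `greedy_policy` are illustrative assumptions; this is not the formulation used in [63] or in any other reviewed work.

```python
import random

# Hypothetical toy problem: states 0..4 on a chain, state 4 is the goal.
N_STATES = 5
ACTIONS = (0, 1)                      # 0 = step back, 1 = step forward
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration


def step(state, action):
    """Deterministic toy dynamics; reward 1.0 only when the goal is reached."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=500, seed=0):
    """Run tabular Q-learning and return the learned Q-table."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy selection, breaking ties between equal values at random.
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[state])
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            nxt, reward, done = step(state, action)
            # Q-learning target: bootstrap from the best action in the next state.
            target = reward + (0.0 if done else GAMMA * max(q[nxt]))
            q[state][action] += ALPHA * (target - q[state][action])
            state = nxt
    return q


def greedy_policy(q):
    """Best action for every non-terminal state."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    q_table = train()
    print(greedy_policy(q_table))
```

In this toy setting the learned greedy policy should select the forward action in every non-terminal state. A real assembly-planning problem would need a much richer state and reward design, typically evaluated inside the digital twin rather than on the physical cell.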

4.3. Human–Machine Interaction

| HMI Technology | | Specific Technique | Task | Application Problem |
|---|---|---|---|---|
| Touch interfaces | | Tablet [55]; Phone [83] | Visual augmentation [55,83] | Safety [55,83]; HM cooperation [55] |
| Web interfaces | | BLE tags [80] | Indoor positioning [80] | Occupational safety monitoring [80] |
| Extended reality | VR | HTC Vive [50,56,65]; HTC Vive Pro Eye [85]; Facebook Oculus [50]; Sony PlayStation VR [50]; Handheld sensors [65,85] | Training [65]; Validation [65]; Safe development [56]; Data generation [85]; Auto-labelling [85]; Interaction with virtual environment [74]; Robot operation demonstration [50] | Online shopping [74]; Human productivity and comfort [50]; Human action recognition [85] |
| Extended reality | AR | HoloLens 2 [51,79,90]; Tablet [55]; Phone [83] | Robot teleoperation [51]; Visual augmentation [55,83,90]; Real-time interaction [79] | Human safety [55,79,83,90]; Intuitive human–robot interaction [51]; Productivity [79] |
| Extended reality | MR | HoloLens 2 [53,75] | Visual augmentation [75]; Object manipulation [53] | Human safety [75] |
| Natural user interfaces | Gestures | HoloLens 2 [53]; Kinect [91] | Head gestures [53] | 3D object robot manipulation [53,91] |
| Natural user interfaces | Motion | Perception Neuron Pro [85]; Manus VR Prime II [54]; Xsens Awinda [54] | Motion capture [85]; Finger tracking [54]; Body joint tracking [54] | Human motion recognition [54,85] |
| Natural user interfaces | Gaze | Pupil Invisible [54]; HoloLens 2 [53] | Object focusing [54]; Target tracking [53] | Assembly task precision [54]; Interface adaptation [53] |
| Natural user interfaces | Voice | HoloLens 2 [53] | MR image capture [53] | 3D manipulation [53] |

4.4. Data Transmission, Storage, and Analysis Technologies

| Category | Technology | Task | Application Problem |
|---|---|---|---|
| Storage | MySQL [55]; MongoDB [60]; Cloud database [80,104] | CAD, audio, and 3D model files [55]; Assembly step executions [60]; Robotic arm motion list [104]; General system data storage [80] | Human safety [60,80,104]; Productivity [60,104] |
| Data Transmission | TCP/IP [50,55,73,75]; Ethernet [55]; MQTT [97]; Cellular [80]; WiFi [55,80]; Bluetooth [80] | Physical and digital world communication [50,75]; Human–robot Android AR application [55]; Robot control movement [73]; Occupational safety system [80]; Edge intelligence anomaly detection [97] | Human safety [55,75,80]; Productivity [55]; Human skill level analysis [50]; Dynamic changes’ prediction [73]; Maintenance [97] |
| Analysis | Principal component analysis [50]; Parameter sensitivity analysis [105] | Dimension reduction [50]; Model adaptability enhancement [105] | Human safety [80]; Maintenance [105] |
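
The table above lists principal component analysis [50] as a dimension-reduction technique. As a rough illustration of the idea, the sketch below extracts only the first principal component of a small made-up dataset, using power iteration on the sample covariance matrix instead of a full eigendecomposition. The function name `first_principal_component` and the sample data are hypothetical and chosen for illustration; the sketch uses only the Python standard library and does not reproduce the analysis in [50].

```python
import math


def first_principal_component(rows, iterations=200):
    """Return (unit direction, 1-D scores) of the dominant principal component."""
    n, d = len(rows), len(rows[0])
    # Centre each feature on its mean.
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centred = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[i] * c[j] for c in centred) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeatedly apply the covariance matrix and renormalise.
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Project the centred data onto the component: d features become 1 score.
    scores = [sum(c[j] * v[j] for j in range(d)) for c in centred]
    return v, scores


if __name__ == "__main__":
    # Made-up 2-D measurements lying exactly on the line y = 2x.
    data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
    direction, reduced = first_principal_component(data)
    print(direction, reduced)
```

For this toy data the recovered direction is proportional to (1, 2), so the two features collapse onto a single score per sample. Power iteration assumes the starting vector is not orthogonal to the dominant eigenvector and that the data have non-zero variance; a production pipeline would normally rely on a numerical library instead.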

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| AMR | Autonomous Mobile Robot |
| AR | augmented reality |
| CDT | Cognitive Digital Twin |
| DT | digital twin |
| EC | edge computing |
| HMC | human–machine collaboration |
| HRC | human–robot collaboration |
| HMI | human–machine interaction |
| HDT | human digital twin |
| IoT | Internet of Things |
| MR | mixed reality |
| NUI | natural user interface |
| ROS | Robot Operating System |
| VR | virtual reality |
| VQA | Visual Question Answering |
| WoS | Web of Science |
| XR | extended reality |

References

- Semeraro, C.; Lezoche, M.; Panetto, H.; Dassisti, M. Digital twin paradigm: A systematic literature review. Comput. Ind. 2021, 130, 103469.

- Directorate-General for Research and Innovation (European Commission); Breque, M.; De Nul, L.; Petridis, A. Industry 5.0—Towards a Sustainable, Human-Centric and Resilient European Industry; Publications Office of the European Union: Luxembourg, 2021.

- Lu, Y.; Zheng, H.; Chand, S.; Xia, W.; Liu, Z.; Xu, X.; Wang, L.; Qin, Z.; Bao, J. Outlook on human-centric manufacturing towards Industry 5.0. J. Manuf. Syst. 2022, 62, 612–627.

- Directorate-General for Research and Innovation (European Commission); Müller, J. Enabling Technologies for Industry 5.0—Results of a Workshop with Europe’s Technology Leaders; Publications Office of the European Union: Luxembourg, 2020.

- Maddikunta, P.K.R.; Pham, Q.V.; Prabadevi, B.; Deepa, N.; Dev, K.; Gadekallu, T.R.; Ruby, R.; Liyanage, M. Industry 5.0: A survey on enabling technologies and potential applications. J. Ind. Inf. Integr. 2022, 26, 100257.

- Adel, A. Future of industry 5.0 in society: Human-centric solutions, challenges and prospective research areas. J. Cloud Comput. 2022, 11, 40.

- Akundi, A.; Euresti, D.; Luna, S.; Ankobiah, W.; Lopes, A.; Edinbarough, I. State of Industry 5.0—Analysis and identification of current research trends. Appl. Syst. Innov. 2022, 5, 27.

- Boston Consulting Group. Industry 4.0. 2023. Available online: https://www.bcg.com/capabilities/manufacturing/industry-4.0 (accessed on 15 February 2024).

- Xu, X.; Lu, Y.; Vogel-Heuser, B.; Wang, L. Industry 4.0 and Industry 5.0—Inception, conception and perception. J. Manuf. Syst. 2021, 61, 530–535.

- Leng, J.; Sha, W.; Wang, B.; Zheng, P.; Zhuang, C.; Liu, Q.; Wuest, T.; Mourtzis, D.; Wang, L. Industry 5.0: Prospect and retrospect. J. Manuf. Syst. 2022, 65, 279–295.

- Romero, D.; Bernus, P.; Noran, O.; Stahre, J.; Fast-Berglund, Å. The operator 4.0: Human cyber-physical systems & adaptive automation towards human-automation symbiosis work systems. In Proceedings of the Advances in Production Management Systems. Initiatives for a Sustainable World: IFIP WG 5.7 International Conference, APMS 2016, Iguassu Falls, Brazil, 3–7 September 2016; Revised Selected Papers. Springer: Cham, Switzerland, 2016; pp. 677–686.

- Gladysz, B.; Tran, T.a.; Romero, D.; van Erp, T.; Abonyi, J.; Ruppert, T. Current development on the Operator 4.0 and transition towards the Operator 5.0: A systematic literature review in light of Industry 5.0. J. Manuf. Syst. 2023, 70, 160–185.

- Wang, B.; Zheng, P.; Yin, Y.; Shih, A.; Wang, L. Toward human-centric smart manufacturing: A human-cyber-physical systems (HCPS) perspective. J. Manuf. Syst. 2022, 63, 471–490.

- Wang, L.; Gao, R.; Váncza, J.; Krüger, J.; Wang, X.V.; Makris, S.; Chryssolouris, G. Symbiotic human-robot collaborative assembly. CIRP Ann. 2019, 68, 701–726.

- Villani, V.; Pini, F.; Leali, F.; Secchi, C. Survey on human–robot collaboration in industrial settings: Safety, intuitive interfaces and applications. Mechatronics 2018, 55, 248–266.

- Kolbeinsson, A.; Lagerstedt, E.; Lindblom, J. Foundation for a classification of collaboration levels for human-robot cooperation in manufacturing. Prod. Manuf. Res. 2019, 7, 448–471.

- Magrini, E.; Ferraguti, F.; Ronga, A.J.; Pini, F.; De Luca, A.; Leali, F. Human-robot coexistence and interaction in open industrial cells. Robot. Comput.-Integr. Manuf. 2020, 61, 101846.

- Nahavandi, S. Industry 5.0—A human-centric solution. Sustainability 2019, 11, 4371.

- Simões, A.C.; Pinto, A.; Santos, J.; Pinheiro, S.; Romero, D. Designing human-robot collaboration (HRC) workspaces in industrial settings: A systematic literature review. J. Manuf. Syst. 2022, 62, 28–43.

- Xiong, W.; Fan, H.; Ma, L.; Wang, C. Challenges of human—machine collaboration in risky decision-making. Front. Eng. Manag. 2022, 9, 89–103.

- Othman, U.; Yang, E. Human–robot collaborations in smart manufacturing environments: Review and outlook. Sensors 2023, 23, 5663.

- Malik, A.A.; Bilberg, A. Digital twins of human robot collaboration in a production setting. Procedia Manuf. 2018, 17, 278–285.

- Asad, U.; Khan, M.; Khalid, A.; Lughmani, W.A. Human-Centric Digital Twins in Industry: A Comprehensive Review of Enabling Technologies and Implementation Strategies. Sensors 2023, 23, 3938.

- Zheng, X.; Lu, J.; Kiritsis, D. The emergence of cognitive digital twin: Vision, challenges and opportunities. Int. J. Prod. Res. 2022, 60, 7610–7632.

- Zhang, N.; Bahsoon, R.; Theodoropoulos, G. Towards engineering cognitive digital twins with self-awareness. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; IEEE: Piscataway, NJ, USA, 2020; p. 3891.

- Al Faruque, M.A.; Muthirayan, D.; Yu, S.Y.; Khargonekar, P.P. Cognitive digital twin for manufacturing systems. In Proceedings of the 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE), Virtual, 1–5 February 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 440–445.

- Shi, Y.; Shen, W.; Wang, L.; Longo, F.; Nicoletti, L.; Padovano, A. A cognitive digital twins framework for human-robot collaboration. Procedia Comput. Sci. 2022, 200, 1867–1874.

- Umeda, Y.; Ota, J.; Kojima, F.; Saito, M.; Matsuzawa, H.; Sukekawa, T.; Takeuchi, A.; Makida, K.; Shirafuji, S. Development of an education program for digital manufacturing system engineers based on ‘Digital Triplet’ concept. Procedia Manuf. 2019, 31, 363–369.

- Lu, Y.; Liu, C.; Wang, K.I.K.; Huang, H.; Xu, X. Digital Twin-driven smart manufacturing: Connotation, reference model, applications and research issues. Robot. Comput.-Integr. Manuf. 2020, 61, 101837.

- Barricelli, B.R.; Casiraghi, E.; Fogli, D. A survey on digital twin: Definitions, characteristics, applications, and design implications. IEEE Access 2019, 7, 167653–167671.

- Yin, Y.; Zheng, P.; Li, C.; Wang, L. A state-of-the-art survey on Augmented Reality-assisted Digital Twin for futuristic human-centric industry transformation. Robot. Comput.-Integr. Manuf. 2023, 81, 102515.

- Mazumder, A.; Sahed, M.; Tasneem, Z.; Das, P.; Badal, F.; Ali, M.; Ahamed, M.; Abhi, S.; Sarker, S.; Das, S.; et al. Towards next generation digital twin in robotics: Trends, scopes, challenges, and future. Heliyon 2023, 9, e13359.

- Zhang, C.; Wang, Z.; Zhou, G.; Chang, F.; Ma, D.; Jing, Y.; Cheng, W.; Ding, K.; Zhao, D. Towards new-generation human-centric smart manufacturing in Industry 5.0: A systematic review. Adv. Eng. Inform. 2023, 57, 102121.

- Wang, B.; Zhou, H.; Li, X.; Yang, G.; Zheng, P.; Song, C.; Yuan, Y.; Wuest, T.; Yang, H.; Wang, L. Human Digital Twin in the context of Industry 5.0. Robot. Comput.-Integr. Manuf. 2024, 85, 102626.

- Hu, Z.; Lou, S.; Xing, Y.; Wang, X.; Cao, D.; Lv, C. Review and perspectives on driver digital twin and its enabling technologies for intelligent vehicles. IEEE Trans. Intell. Veh. 2022, 7, 417–440.

- Park, J.S.; Lee, D.G.; Jimenez, J.A.; Lee, S.J.; Kim, J.W. Human-Focused Digital Twin Applications for Occupational Safety and Health in Workplaces: A Brief Survey and Research Directions. Appl. Sci. 2023, 13, 4598.

- Elbasheer, M.; Longo, F.; Mirabelli, G.; Nicoletti, L.; Padovano, A.; Solina, V. Shaping the role of the digital twins for human-robot dyad: Connotations, scenarios, and future perspectives. IET Collab. Intell. Manuf. 2023, 5, e12066.

- Guruswamy, S.; Pojić, M.; Subramanian, J.; Mastilović, J.; Sarang, S.; Subbanagounder, A.; Stojanović, G.; Jeoti, V. Toward better food security using concepts from industry 5.0. Sensors 2022, 22, 8377.

- Kaur, D.P.; Singh, N.P.; Banerjee, B. A review of platforms for simulating embodied agents in 3D virtual environments. Artif. Intell. Rev. 2023, 56, 3711–3753.

- Inamura, T. Digital Twin of Experience for Human–Robot Collaboration through Virtual Reality. Int. J. Autom. Technol. 2023, 17, 284–291.

- Lehtola, V.V.; Koeva, M.; Elberink, S.O.; Raposo, P.; Virtanen, J.P.; Vahdatikhaki, F.; Borsci, S. Digital twin of a city: Review of technology serving city needs. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 102915.

- Feddoul, Y.; Ragot, N.; Duval, F.; Havard, V.; Baudry, D.; Assila, A. Exploring human-machine collaboration in industry: A systematic literature review of digital twin and robotics interfaced with extended reality technologies. Int. J. Adv. Manuf. Technol. 2023, 129, 1917–1932.

- Falkowski, P.; Osiak, T.; Wilk, J.; Prokopiuk, N.; Leczkowski, B.; Pilat, Z.; Rzymkowski, C. Study on the Applicability of Digital Twins for Home Remote Motor Rehabilitation. Sensors 2023, 23, 911.

- Ramasubramanian, A.K.; Mathew, R.; Kelly, M.; Hargaden, V.; Papakostas, N. Digital twin for human–robot collaboration in manufacturing: Review and outlook. Appl. Sci. 2022, 12, 4811.

- Wilhelm, J.; Petzoldt, C.; Beinke, T.; Freitag, M. Review of digital twin-based interaction in smart manufacturing: Enabling cyber-physical systems for human-machine interaction. Int. J. Comput. Integr. Manuf. 2021, 34, 1031–1048.

- Lv, Z. Digital Twins in Industry 5.0. Research 2023, 6, 0071.

- Agnusdei, G.P.; Elia, V.; Gnoni, M.G. Is digital twin technology supporting safety management? A bibliometric and systematic review. Appl. Sci. 2021, 11, 2767.

- Bhattacharya, M.; Penica, M.; O’Connell, E.; Southern, M.; Hayes, M. Human-in-Loop: A Review of Smart Manufacturing Deployments. Systems 2023, 11, 35.

- Qi, Q.; Tao, F.; Hu, T.; Anwer, N.; Liu, A.; Wei, Y.; Wang, L.; Nee, A. Enabling technologies and tools for digital twin. J. Manuf. Syst. 2021, 58, 3–21.

- Wang, Q.; Jiao, W.; Wang, P.; Zhang, Y. Digital twin for human-robot interactive welding and welder behavior analysis. IEEE/CAA J. Autom. Sin. 2020, 8, 334–343.

- Li, C.; Zheng, P.; Li, S.; Pang, Y.; Lee, C.K. AR-assisted digital twin-enabled robot collaborative manufacturing system with human-in-the-loop. Robot. Comput.-Integr. Manuf. 2022, 76, 102321.

- Matulis, M.; Harvey, C. A robot arm digital twin utilising reinforcement learning. Comput. Graph. 2021, 95, 106–114.

- Park, K.B.; Choi, S.H.; Lee, J.Y.; Ghasemi, Y.; Mohammed, M.; Jeong, H. Hands-free human–robot interaction using multimodal gestures and deep learning in wearable mixed reality. IEEE Access 2021, 9, 55448–55464.

- Tuli, T.B.; Kohl, L.; Chala, S.A.; Manns, M.; Ansari, F. Knowledge-based digital twin for predicting interactions in human-robot collaboration. In Proceedings of the 2021 26th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vasteras, Sweden, 7–10 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–8.

- Michalos, G.; Karagiannis, P.; Makris, S.; Tokçalar, Ö.; Chryssolouris, G. Augmented reality (AR) applications for supporting human-robot interactive cooperation. Procedia CIRP 2016, 41, 370–375.

- Oyekan, J.O.; Hutabarat, W.; Tiwari, A.; Grech, R.; Aung, M.H.; Mariani, M.P.; López-Dávalos, L.; Ricaud, T.; Singh, S.; Dupuis, C. The effectiveness of virtual environments in developing collaborative strategies between industrial robots and humans. Robot. Comput.-Integr. Manuf. 2019, 55, 41–54.

- Dröder, K.; Bobka, P.; Germann, T.; Gabriel, F.; Dietrich, F. A machine learning-enhanced digital twin approach for human-robot-collaboration. Procedia CIRP 2018, 76, 187–192.

- Lee, H.; Kim, S.D.; Al Amin, M.A.U. Control framework for collaborative robot using imitation learning-based teleoperation from human digital twin to robot digital twin. Mechatronics 2022, 85, 102833.

- Kousi, N.; Gkournelos, C.; Aivaliotis, S.; Lotsaris, K.; Bavelos, A.C.; Baris, P.; Michalos, G.; Makris, S. Digital twin for designing and reconfiguring human–robot collaborative assembly lines. Appl. Sci. 2021, 11, 4620.

- Dimitropoulos, N.; Togias, T.; Zacharaki, N.; Michalos, G.; Makris, S. Seamless human–robot collaborative assembly using artificial intelligence and wearable devices. Appl. Sci. 2021, 11, 5699.

- Liang, C.J.; Kamat, V.R.; Menassa, C.C. Teaching robots to perform quasi-repetitive construction tasks through human demonstration. Autom. Constr. 2020, 120, 103370.

- Kousi, N.; Gkournelos, C.; Aivaliotis, S.; Giannoulis, C.; Michalos, G.; Makris, S. Digital twin for adaptation of robots’ behavior in flexible robotic assembly lines. Procedia Manuf. 2019, 28, 121–126.

- De Winter, J.; El Makrini, I.; Van de Perre, G.; Nowé, A.; Verstraten, T.; Vanderborght, B. Autonomous assembly planning of demonstrated skills with reinforcement learning in simulation. Auton. Robot. 2021, 45, 1097–1110.

- Liu, C.; Gao, J.; Bi, Y.; Shi, X.; Tian, D. A multitasking-oriented robot arm motion planning scheme based on deep reinforcement learning and twin synchro-control. Sensors 2020, 20, 3515.

- Malik, A.A.; Masood, T.; Bilberg, A. Virtual reality in manufacturing: Immersive and collaborative artificial-reality in design of human-robot workspace. Int. J. Comput. Integr. Manuf. 2020, 33, 22–37.

- Bilberg, A.; Malik, A.A. Digital twin driven human–robot collaborative assembly. CIRP Ann. 2019, 68, 499–502.

- Malik, A.A.; Brem, A. Digital twins for collaborative robots: A case study in human-robot interaction. Robot. Comput.-Integr. Manuf. 2021, 68, 102092.

- Fuller, A.; Fan, Z.; Day, C.; Barlow, C. Digital twin: Enabling technologies, challenges and open research. IEEE Access 2020, 8, 108952–108971.

- Wang, T.; Li, J.; Kong, Z.; Liu, X.; Snoussi, H.; Lv, H. Digital twin improved via visual question answering for vision-language interactive mode in human–machine collaboration. J. Manuf. Syst. 2021, 58, 261–269.

- Baranyi, G.; Dos Santos Melício, B.C.; Gaál, Z.; Hajder, L.; Simonyi, A.; Sindely, D.; Skaf, J.; Dušek, O.; Nekvinda, T.; Lőrincz, A. AI Technologies for Machine Supervision and Help in a Rehabilitation Scenario. Multimodal Technol. Interact. 2022, 6, 48.

- Huang, Z.; Shen, Y.; Li, J.; Fey, M.; Brecher, C. A survey on AI-driven digital twins in industry 4.0: Smart manufacturing and advanced robotics. Sensors 2021, 21, 6340.

- Jin, T.; Sun, Z.; Li, L.; Zhang, Q.; Zhu, M.; Zhang, Z.; Yuan, G.; Chen, T.; Tian, Y.; Hou, X.; et al. Triboelectric nanogenerator sensors for soft robotics aiming at digital twin applications. Nat. Commun. 2020, 11, 5381.

- Lv, Q.; Zhang, R.; Sun, X.; Lu, Y.; Bao, J. A digital twin-driven human-robot collaborative assembly approach in the wake of COVID-19. J. Manuf. Syst. 2021, 60, 837–851.

- Sun, Z.; Zhu, M.; Zhang, Z.; Chen, Z.; Shi, Q.; Shan, X.; Yeow, R.C.H.; Lee, C. Artificial Intelligence of Things (AIoT) enabled virtual shop applications using self-powered sensor enhanced soft robotic manipulator. Adv. Sci. 2021, 8, 2100230.

- Choi, S.H.; Park, K.B.; Roh, D.H.; Lee, J.Y.; Mohammed, M.; Ghasemi, Y.; Jeong, H. An integrated mixed reality system for safety-aware human-robot collaboration using deep learning and digital twin generation. Robot. Comput.-Integr. Manuf. 2022, 73, 102258.

- Hata, A.; Inam, R.; Raizer, K.; Wang, S.; Cao, E. AI-based safety analysis for collaborative mobile robots. In Proceedings of the 2019 24th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Zaragoza, Spain, 10–13 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1722–1729.

- Laamarti, F.; Badawi, H.F.; Ding, Y.; Arafsha, F.; Hafidh, B.; El Saddik, A. An ISO/IEEE 11073 standardized digital twin framework for health and well-being in smart cities. IEEE Access 2020, 8, 105950–105961.

- Wang, T.; Li, J.; Deng, Y.; Wang, C.; Snoussi, H.; Tao, F. Digital twin for human-machine interaction with convolutional neural network. Int. J. Comput. Integr. Manuf. 2021, 34, 888–897.

- Zhang, C.; Zhou, G.; Ma, D.; Wang, R.; Xiao, J.; Zhao, D. A deep learning-enabled human-cyber-physical fusion method towards human-robot collaborative assembly. Robot. Comput.-Integr. Manuf. 2023, 83, 102571.

- Zhan, X.; Wu, W.; Shen, L.; Liao, W.; Zhao, Z.; Xia, J. Industrial internet of things and unsupervised deep learning enabled real-time occupational safety monitoring in cold storage warehouse. Saf. Sci. 2022, 152, 105766.

- Xia, K.; Sacco, C.; Kirkpatrick, M.; Saidy, C.; Nguyen, L.; Kircaliali, A.; Harik, R. A digital twin to train deep reinforcement learning agent for smart manufacturing plants: Environment, interfaces and intelligence. J. Manuf. Syst. 2021, 58, 210–230.

- Gui, L.Y.; Zhang, K.; Wang, Y.X.; Liang, X.; Moura, J.M.; Veloso, M. Teaching robots to predict human motion. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 562–567.

- Papcun, P.; Cabadaj, J.; Kajati, E.; Romero, D.; Landryova, L.; Vascak, J.; Zolotova, I. Augmented reality for humans-robots interaction in dynamic slotting “chaotic storage” smart warehouses. In Proceedings of the Advances in Production Management Systems. Production Management for the Factory of the Future: IFIP WG 5.7 International Conference, APMS 2019, Austin, TX, USA, 1–5 September 2019; Proceedings, Part I. Springer: Cham, Switzerland, 2019; pp. 633–641.

- Zhang, Z.; Wen, F.; Sun, Z.; Guo, X.; He, T.; Lee, C. Artificial intelligence-enabled sensing technologies in the 5G/internet of things era: From virtual reality/augmented reality to the digital twin. Adv. Intell. Syst. 2022, 4, 2100228.

- Dallel, M.; Havard, V.; Dupuis, Y.; Baudry, D. Digital twin of an industrial workstation: A novel method of an auto-labeled data generator using virtual reality for human action recognition in the context of human–robot collaboration. Eng. Appl. Artif. Intell. 2023, 118, 105655.

- Zafari, F.; Gkelias, A.; Leung, K.K. A survey of indoor localization systems and technologies. IEEE Commun. Surv. Tutor. 2019, 21, 2568–2599.

- Steinberg, G. Natural user interfaces. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012.

- Karpov, A.; Yusupov, R. Multimodal interfaces of human–computer interaction. Her. Russ. Acad. Sci. 2018, 88, 67–74.

- Liu, H.; Fang, T.; Zhou, T.; Wang, Y.; Wang, L. Deep learning-based multimodal control interface for human-robot collaboration. Procedia CIRP 2018, 72, 3–8.

- Li, C.; Zheng, P.; Yin, Y.; Pang, Y.M.; Huo, S. An AR-assisted Deep Reinforcement Learning-based approach towards mutual-cognitive safe human-robot interaction. Robot. Comput.-Integr. Manuf. 2023, 80, 102471.

- Horváth, G.; Erdős, G. Gesture control of cyber physical systems. Procedia CIRP 2017, 63, 184–188.

- Qi, Q.; Zhao, D.; Liao, T.W.; Tao, F. Modeling of cyber-physical systems and digital twin based on edge computing, fog computing and cloud computing towards smart manufacturing. In Proceedings of the International Manufacturing Science and Engineering Conference. American Society of Mechanical Engineers, College Station, TX, USA, 18–22 June 2018; Volume 51357, p. V001T05A018.

- Urbaniak, D.; Rosell, J.; Suárez, R. Edge Computing in Autonomous and Collaborative Assembly Lines. In Proceedings of the 2022 IEEE 27th International Conference on Emerging Technologies and Factory Automation (ETFA), Stuttgart, Germany, 6–9 September 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–4.

- Wan, S.; Gu, Z.; Ni, Q. Cognitive computing and wireless communications on the edge for healthcare service robots. Comput. Commun. 2020, 149, 99–106.

- Ruggeri, F.; Terra, A.; Hata, A.; Inam, R.; Leite, I. Safety-based Dynamic Task Offloading for Human-Robot Collaboration using Deep Reinforcement Learning. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 2119–2126.

- Fraga-Lamas, P.; Barros, D.; Lopes, S.I.; Fernández-Caramés, T.M. Mist and Edge Computing Cyber-Physical Human-Centered Systems for Industry 5.0: A Cost-Effective IoT Thermal Imaging Safety System. Sensors 2022, 22, 8500.

- Huang, H.; Yang, L.; Wang, Y.; Xu, X.; Lu, Y. Digital twin-driven online anomaly detection for an automation system based on edge intelligence. J. Manuf. Syst. 2021, 59, 138–150.

- Khan, L.U.; Saad, W.; Niyato, D.; Han, Z.; Hong, C.S. Digital-twin-enabled 6G: Vision, architectural trends, and future directions. IEEE Commun. Mag. 2022, 60, 74–80.

- Casalicchio, E. Container orchestration: A survey. In Systems Modeling: Methodologies and Tools; Springer: Cham, Switzerland, 2019; pp. 221–235.

- De Lauretis, L. From monolithic architecture to microservices architecture. In Proceedings of the 2019 IEEE International Symposium on Software Reliability Engineering Workshops (ISSREW), Berlin, Germany, 27–30 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 93–96.

- Costa, J.; Matos, R.; Araujo, J.; Li, J.; Choi, E.; Nguyen, T.A.; Lee, J.W.; Min, D. Software aging effects on Kubernetes in container orchestration systems for digital twin cloud infrastructures of urban air mobility. Drones 2023, 7, 35.

- Costantini, A.; Di Modica, G.; Ahouangonou, J.C.; Duma, D.C.; Martelli, B.; Galletti, M.; Antonacci, M.; Nehls, D.; Bellavista, P.; Delamarre, C.; et al. IoTwins: Toward implementation of distributed digital twins in industry 4.0 settings. Computers 2022, 11, 67.

- Qi, Q.; Tao, F. Digital twin and big data towards smart manufacturing and industry 4.0: 360 degree comparison. IEEE Access 2018, 6, 3585–3593.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. Closed-Loop Robotic Arm Manipulation Based on Mixed Reality. Appl. Sci. 2022, 12, 2972.

- Wang, J.; Ye, L.; Gao, R.X.; Li, C.; Zhang, L. Digital Twin for rotating machinery fault diagnosis in smart manufacturing. Int. J. Prod. Res. 2019, 57, 3920–3934.

- Human, S.; Alt, R.; Habibnia, H.; Neumann, G. Human-centric personal data protection and consenting assistant systems: Towards a sustainable Digital Economy. In Proceedings of the 55th Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2022.

- Lutz, R.R. Safe-AR: Reducing risk while augmenting reality. In Proceedings of the 2018 IEEE 29th International Symposium on Software Reliability Engineering (ISSRE), Memphis, TN, USA, 15–18 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 70–75.

- Robert, L.; Bansal, G.; Lutge, C. ICIS 2019 SIGHCI workshop panel report: Human computer interaction challenges and opportunities for fair, trustworthy and ethical artificial intelligence. AIS Trans. Hum.-Comput. Interact. 2020, 12, 96–108.

- Gartner. What’s New in Artificial Intelligence from the 2023 Gartner Hype Cycle. Available online: https://www.gartner.com/en/articles/what-s-new-in-artificial-intelligence-from-the-2023-gartner-hype-cycle (accessed on 15 February 2024).

- Stoica, I.; Song, D.; Popa, R.A.; Patterson, D.; Mahoney, M.W.; Katz, R.; Joseph, A.D.; Jordan, M.; Hellerstein, J.M.; Gonzalez, J.E.; et al. A Berkeley view of systems challenges for AI. arXiv 2017, arXiv:1712.05855.

- Tuli, S.; Casale, G.; Jennings, N.R. Pregan: Preemptive migration prediction network for proactive fault-tolerant edge computing. In Proceedings of the IEEE INFOCOM 2022-IEEE Conference on Computer Communications, Virtual, 2–5 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 670–679. [Google Scholar]

- Zheng, H.; Lee, R.; Lu, Y. HA-ViD: A Human Assembly Video Dataset for Comprehensive Assembly Knowledge Understanding. arXiv 2023, arXiv:2307.05721. [Google Scholar]

- Brecko, A.; Kajati, E.; Koziorek, J.; Zolotova, I. Federated learning for edge computing: A survey. Appl. Sci. 2022, 12, 9124. [Google Scholar] [CrossRef]

- Fernandez, R.A.S.; Sanchez-Lopez, J.L.; Sampedro, C.; Bavle, H.; Molina, M.; Campoy, P. Natural user interfaces for human-drone multi-modal interaction. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1013–1022. [Google Scholar]

- Danys, L.; Zolotova, I.; Romero, D.; Papcun, P.; Kajati, E.; Jaros, R.; Koudelka, P.; Koziorek, J.; Martinek, R. Visible Light Communication and localization: A study on tracking solutions for Industry 4.0 and the Operator 4.0. J. Manuf. Syst. 2022, 64, 535–545.

| Ref. | Year | Description | ET | EM | UC | HM |
|---|---|---|---|---|---|---|
| [23] | 2023 | State-of-the-art literature review on human-centric digital twins (HCDTs) and their enabling technologies. | H | H | H | H |
| [31] | 2023 | State-of-the-art studies of AR-assisted DTs across different sectors of the industrial field in the design, production, distribution, maintenance, and end-of-life stages. | H | H | H | H |
| [32] | 2023 | Recent trends for DT-incorporated robotics. | H | H | H | H |
| [33] | 2023 | Literature review on human-centric smart manufacturing to identify promising research topics with high potential for further investigations. | H | H | H | H |
| [34] | 2024 | Focus on human centricity as core value of Industry 5.0 and on the concept of human digital twins (HDTs) and their representative applications and technologies. | H | H | H | M |
| [35] | 2022 | A driver digital twin was introduced to create a more comprehensive model of the human driver. | H | H | H | M |
| [21] | 2023 | A systemic review and an in-depth discussion of the key technologies currently being employed in smart manufacturing with HRC systems. | H | M | H | H |
| [36] | 2023 | Review on technological aspects of relevant applications dealing with occupational safety and health program issues that can be solved with human-focused DT. | H | M | H | H |
| [37] | 2023 | Provides a comprehensive perspective of DTs’ critical design aspects in the broad application areas of human–robot interaction systems. | M | M | M | H |
| [38] | 2022 | Research on utilisation of information and communication technologies toward better food sustainability, where humans collaborating with intelligent machines find their place. | M | M | M | M |
| [39] | 2022 | Review on simulation platforms and their comparison based on their properties and functionalities from a user’s perspective. | M | H | H | L |
| [40] | 2023 | The author examined current DT technology from the viewpoint of human–robot interaction systems. | M | M | M | M |
| [41] | 2022 | The integration of human factors into a DT of a city and a human interacting with a DT of objects in the city. | M | M | M | L |
| [42] | 2023 | The analysis of the progress of DTs and robotics interfaced with extended reality. | L | L | H | H |
| [43] | 2023 | Overview of DT applications within the fields of industry and health. The concept of controlling a rehabilitation exoskeleton via its DT in the VR is presented. | M | M | H | L |
| [44] | 2022 | Focus on DT technologies in the manufacturing domain and human–robot collaboration scenarios. | L | L | M | H |
| [45] | 2021 | Integration and interaction of human and DT in smart manufacturing systems and current state of the art of DT-based HMI. | L | L | M | L |
| [46] | 2023 | The impact of DT technology on industrial manufacturing in the context of Industry 5.0’s potential applications and key modelling technologies is discussed. | H | L | L | L |
| [47] | 2021 | Analysis of existing fields of application of DTs for supporting safety management processes and the relation between DTs and safety issues. | L | L | M | L |
| [48] | 2023 | Use case review of how human operators affect the performance of cyber–physical systems within a “smart” or “cognitive” setting. | L | L | L | L |

| Id | Keyword | Occurrences | Total Link Strength |
|---|---|---|---|
| 1 | digital twin | 229 | 316 |
| 2 | human–robot collaboration | 58 | 71 |
| 3 | virtual reality | 36 | 62 |
| 4 | artificial intelligence | 27 | 69 |
| 5 | Industry 4.0 | 21 | 40 |
| 6 | simulation | 17 | 35 |
| 7 | human digital twin | 17 | 27 |
| 8 | augmented reality | 16 | 42 |
| 9 | machine learning | 16 | 36 |
| 10 | human–robot interaction | 16 | 31 |
| 11 | Industry 5.0 | 16 | 28 |
| 12 | smart manufacturing | 13 | 38 |
| 13 | human–computer interaction | 11 | 25 |
| 14 | Internet of Things | 11 | 25 |
| 15 | cyber–physical system | 10 | 26 |
| 16 | safety | 10 | 24 |
| 17 | human–machine interaction | 10 | 20 |
| 18 | robotics | 9 | 27 |
| 19 | human factors | 9 | 19 |
| 20 | smart city | 9 | 19 |
| 21 | Metaverse | 9 | 14 |
| 22 | extended reality | 8 | 21 |
| 23 | deep learning | 8 | 18 |
| 24 | computer vision | 8 | 15 |
| 25 | mixed reality | 7 | 19 |
| 26 | task analysis | 7 | 17 |
| 27 | training | 6 | 12 |
| 28 | Operator 4.0 | 6 | 9 |
| 29 | edge computing | 5 | 17 |
| 30 | teleoperation | 5 | 17 |
| 31 | collaborative robotics | 5 | 16 |
| 32 | sustainability | 5 | 16 |
| 33 | assembly | 5 | 13 |
| 34 | ergonomics | 5 | 12 |
| 35 | blockchain | 5 | 10 |
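
The Occurrences and Total Link Strength columns can be read through the following minimal sketch. It assumes the common bibliometric convention (as used by tools such as VOSviewer) in which the link strength between two keywords is the number of papers in which both appear, and a keyword's total link strength is the sum of its link strengths to all other keywords. The paper list and the function name `keyword_stats` are hypothetical and serve only as an illustration; they are not the data or tooling behind the table.

```python
from collections import Counter
from itertools import combinations

# Hypothetical author-keyword lists, one per paper (illustrative data only).
papers = [
    ["digital twin", "human-robot collaboration", "virtual reality"],
    ["digital twin", "artificial intelligence"],
    ["digital twin", "human-robot collaboration"],
]


def keyword_stats(papers):
    """Return (occurrences, total_link_strength) counters for a keyword corpus."""
    occurrences = Counter()
    pair_strength = Counter()  # keyed by an unordered keyword pair
    for keywords in papers:
        unique = sorted(set(keywords))  # count each keyword once per paper
        occurrences.update(unique)
        # Every pair of keywords sharing a paper gains one unit of link strength.
        pair_strength.update(combinations(unique, 2))

    total_link_strength = Counter()
    for (first, second), strength in pair_strength.items():
        total_link_strength[first] += strength
        total_link_strength[second] += strength
    return occurrences, total_link_strength


occurrences, total_link_strength = keyword_stats(papers)
for keyword, count in occurrences.most_common():
    print(keyword, count, total_link_strength[keyword])
```

Under this assumed convention, a keyword with few occurrences can still have a comparatively high total link strength if it tends to appear alongside many other keywords.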

| Digital Twin Tool | Description | Application Areas | Literature Review |
|---|---|---|---|
| Unity [50,51,52,53,54,55,56] | Real-time 3D development platform | Gaming, AR/VR, Automotive | Virtual reality support [50] DT of physical robot [51] DT of virtual space [52] MR system development [53] Human action prediction [54] Safety and productivity [55] Human reaction analysis [56] |
| Matlab [57,58] | High-level technical computing language | Engineering, Research | Obstacle detection and 3D localisation [57] Human digital twin [58] |
| ROS [55,59,60,61,62] | Middleware for robotics software development | Robotics, automation | Communication [55] Decision making [59] Safety and ergonomics [60] Robot learning [61] Human behaviour [62] Flexible assembly [62] |
| Gazebo [59,60,61] | Advanced robotics simulation | Robotics, educational research | Decision making [59] Safety and ergonomics [60] Robot learning [61] |
| Klampt [63] | Versatile motion planning and simulation tool | Robotics, education | Assembly planning [63] |
| V-REP [64] | Robot dynamics simulator with a rich set of features | Robotics, educational research | Robot control [64] |
| Siemens NX [65] | Advanced solution for engineering design and simulation | Engineering, manufacturing | Robot programming [65] |
| Tecnomatix Process Simulate [22,66,67] | 3D simulation of manufacturing processes | Manufacturing, automation | Flexible assembly [66] Design, development, and operation [22,67] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Krupas, M.; Kajati, E.; Liu, C.; Zolotova, I. Towards a Human-Centric Digital Twin for Human–Machine Collaboration: A Review on Enabling Technologies and Methods. Sensors 2024, 24, 2232. https://doi.org/10.3390/s24072232
