Improving Eye-Tracking Data Quality: A Framework for Reproducible Evaluation of Detection Algorithms

Abstract

1. Introduction

1.1. Navigating the Landscape of Eye-Tracking Algorithms

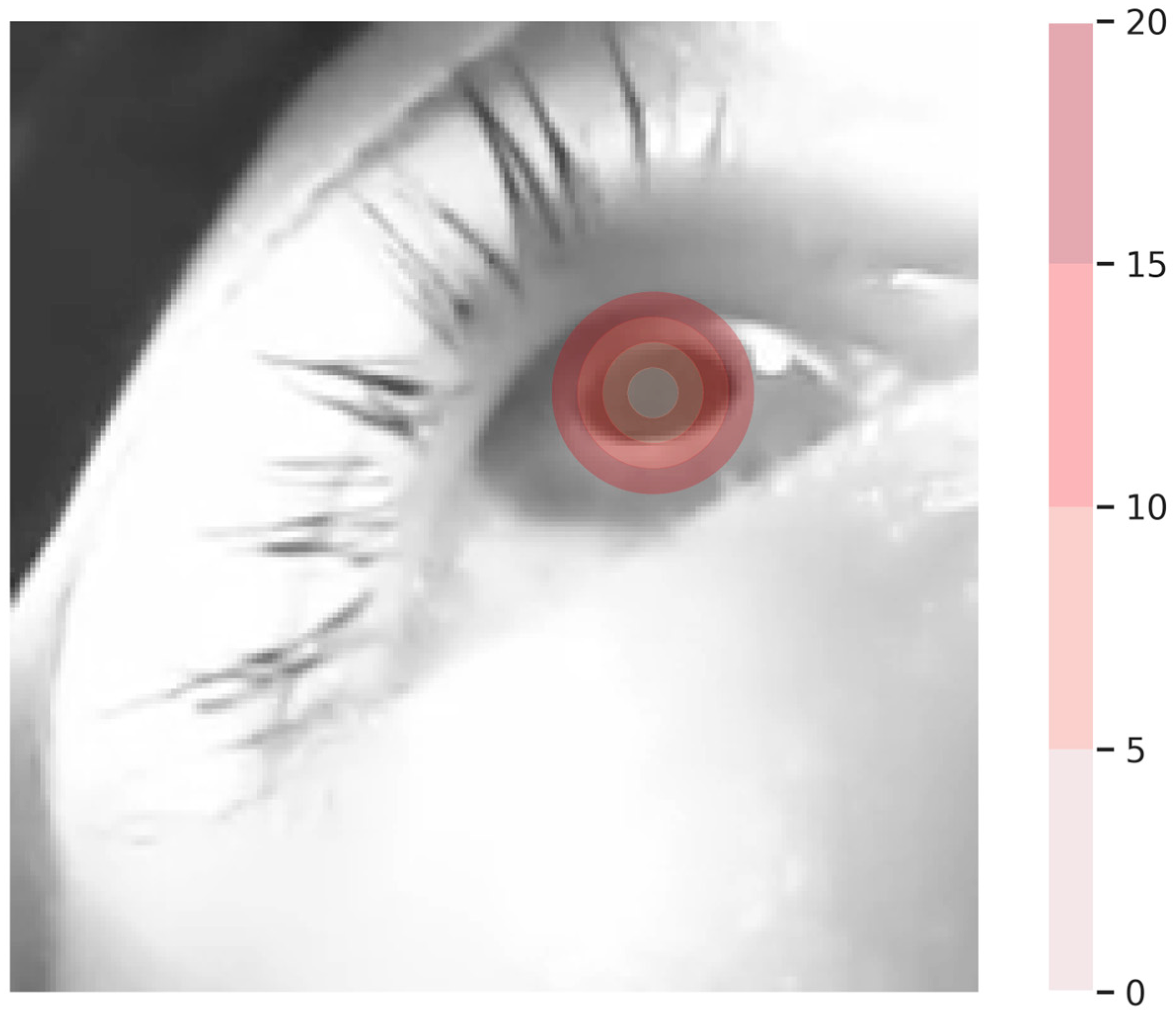

- In scenarios where pupil size is of interest, such as pupillography, algorithms typically yield the best-fitting ellipse enclosing the pupil [17]. The output provides information on the size of the major and minor axes along with their rotation and two-dimensional position.

- A more versatile representation utilizes a segmentation map covering the entire sample [28]. This segmentation map is a binary mask in which only the pupil pixels are marked. Some of these algorithms may also provide data on other eye components, such as the iris and sclera. In theory, this encoding allows pupils that are partially hidden by eyelids or blinks to be represented and used.
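
The two encodings described above can be related to each other in a few lines. The following is a minimal sketch and not taken from any of the cited algorithms: the class `PupilEllipse`, its field names, and the helpers `ellipse_to_mask` and `mask_centroid` are hypothetical names chosen for illustration, and the axes are assumed to be given as full lengths in pixels.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class PupilEllipse:
    """Ellipse encoding of a pupil (hypothetical field names)."""
    cx: float         # center x in pixels
    cy: float         # center y in pixels
    major: float      # full length of the major axis in pixels (assumption)
    minor: float      # full length of the minor axis in pixels (assumption)
    angle_deg: float  # rotation of the major axis in the pixel frame

    def area(self) -> float:
        # Area of an ellipse computed from its two semi-axes.
        return math.pi * (self.major / 2.0) * (self.minor / 2.0)

    def contains(self, x: float, y: float) -> bool:
        # Rotate the point into the ellipse's own coordinate frame,
        # then apply the canonical ellipse inequality.
        t = math.radians(self.angle_deg)
        dx, dy = x - self.cx, y - self.cy
        u = dx * math.cos(t) + dy * math.sin(t)
        v = -dx * math.sin(t) + dy * math.cos(t)
        return (u / (self.major / 2.0)) ** 2 + (v / (self.minor / 2.0)) ** 2 <= 1.0


def ellipse_to_mask(ellipse: PupilEllipse, width: int, height: int) -> List[List[int]]:
    """Rasterize the ellipse into a binary mask (1 = pupil, 0 = background)."""
    return [
        [1 if ellipse.contains(x, y) else 0 for x in range(width)]
        for y in range(height)
    ]


def mask_centroid(mask: List[List[int]]) -> Tuple[float, float]:
    """Estimate the pupil center as the centroid of all pupil pixels."""
    xs = [x for row in mask for x, v in enumerate(row) if v]
    ys = [y for y, row in enumerate(mask) for v in row if v]
    if not xs:
        raise ValueError("mask contains no pupil pixels")
    return sum(xs) / len(xs), sum(ys) / len(ys)
```

The sketch also shows why the mask is the more general encoding: an ellipse can always be rasterized into a mask, whereas a mask of a partially occluded pupil need not correspond to any single ellipse.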

1.2. Choosing an Algorithm for One's Own Research

- Recorded samples vary significantly based on the camera position, resolution, and distance [31]. As a result, samples from different recording setups are not directly comparable. The non-linear transformation of the pupil when viewed from larger eye angles can present additional challenges to the algorithms [32].

- Algorithms often require the setting of hyperparameters that are dependent on the specific samples. Many of these hyperparameters have semantic meanings and are tailored to the camera’s position. While reusing published values may suffice if the setups are similar enough, obtaining more suitable detections will likely depend on fine-tuning these parameters.

- The population of subjects may differ considerably due to the context of the measurement and external factors. In medical contexts, specific correlated phenotypes may seriously hinder detection rates. There is a scarcity of published work, such as Kulkarni et al. [33], that systematically evaluates induced bias in pupil detection. Furthermore, challenges exist even within the general population, as documented by Fuhl et al. [27]. For instance, detecting pupils in participants wearing contact lenses requires detectors to perform well under this specific condition without introducing bias.

- Metrics used for performance evaluation can vary significantly between studies. Often, metrics are chosen to optimally assess a specific dataset or use case. For instance, the evaluation paper by Fuhl et al. [27] used a threshold of five pixels to classify the detection of the pupil center inside a sample as correct. While this choice is sound for the tested datasets, samples with significantly different resolutions due to another camera setup necessitate adopting alternative concepts.
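
The fixed pixel threshold mentioned in the last point can be sketched as follows. This is an illustrative example only: the function names are hypothetical, the inclusive comparison (`<=`) is an assumption, and the resolution-normalized variant is one possible alternative concept, not the metric used in the cited evaluation or in this paper.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def pixel_error(pred: Point, truth: Point) -> float:
    """Euclidean distance between predicted and annotated pupil center."""
    return math.hypot(pred[0] - truth[0], pred[1] - truth[1])


def detection_rate(preds: Sequence[Point], truths: Sequence[Point],
                   threshold_px: float = 5.0) -> float:
    """Share of samples whose center error is within `threshold_px` pixels."""
    pairs = list(zip(preds, truths))
    if not pairs:
        raise ValueError("no samples to evaluate")
    hits = sum(1 for p, t in pairs if pixel_error(p, t) <= threshold_px)
    return hits / len(pairs)


def normalized_detection_rate(preds: Sequence[Point], truths: Sequence[Point],
                              image_diagonal_px: float,
                              threshold_fraction: float) -> float:
    """Assumed alternative: express the threshold as a fraction of the image
    diagonal so that setups with different resolutions become comparable."""
    return detection_rate(preds, truths,
                          threshold_px=threshold_fraction * image_diagonal_px)
```

With a fixed five-pixel threshold, the same physical error counts as a miss in a high-resolution recording and as a hit in a low-resolution one. Scaling the threshold with the image size is one way to reduce that dependence.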

2. Methods

2.1. Defining Criteria for the Framework

- Flexibility: The proposed framework must exhibit maximum flexibility in its hardware and software environment for seamless execution. It should operate offline without reliance on remote servers, enabling widespread use in all countries.

- Accessibility: Additionally, the framework should not be tied to specific commercial systems that may pose accessibility issues due to license regulations or fees. Applied researchers should have the freedom to use the framework directly on their existing experimental systems, avoiding data duplication, preserving privacy, and simplifying knowledge management. As such, the framework should be compatible with a wide range of platforms, including UNIX-based operating systems commonly licensed as open-source software, as well as the popular yet proprietary Microsoft Windows.

- Ease of setup: Once the system is available, setting up the framework should be straightforward and not require advanced technical knowledge. This may appear trivial but is complicated by the diversity of pupil detection algorithms. Existing implementations often depend on various programming languages and require multiple libraries and build tools, making the installation process challenging and time-consuming. To overcome this issue, the framework should not demand manual setup, so that assessment results can be obtained faster and more easily.

- Scalability: The proposed framework must be scalable to handle the large volumes of samples in modern datasets, the diversity of algorithms, and the numerous tunable hyperparameters. Fortunately, the independence of algorithms and samples allows for easy parallelization of detections, enabling the efficient utilization of computational resources. The framework should be capable of benefiting from a single machine, multiple virtual machines, or even a cluster of physical devices, ensuring efficient exploration of the vast search space.

- Modularity and standardization: The framework should be designed with a modular approach and adhere to established standards and best practices. Embracing existing standards simplifies support and ensures sustainable development. Moreover, adhering to these standards allows for the re-use of individual components within the system, facilitating the integration of selected pupil detection algorithms into the final experiment seamlessly.

- Adaptability for researchers and developers: The framework should not only cater to researchers employing pupil detection algorithms but also be accessible to developers creating new detectors. By simplifying the evaluation process, developers may enhance their algorithms.
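
The scalability criterion rests on the observation that every combination of algorithm, hyperparameter setting, and sample is an independent job. A minimal sketch of that idea is shown below. It is not the framework's implementation: `threshold_centroid_detector` is a toy stand-in detector, `hyperparameter_grid` and `run_all` are hypothetical helpers, and a thread pool from the standard library is used only to keep the example self-contained. A process pool or a cluster scheduler could be substituted for CPU-bound detectors.

```python
import itertools
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List, Optional, Sequence, Tuple

Sample = Sequence[Sequence[int]]  # grayscale image as rows of pixel values
Center = Optional[Tuple[float, float]]


def threshold_centroid_detector(sample: Sample, threshold: int = 50) -> Center:
    """Toy detector: centroid of all pixels at least as dark as `threshold`."""
    points = [(x, y) for y, row in enumerate(sample)
              for x, value in enumerate(row) if value <= threshold]
    if not points:
        return None
    n = len(points)
    return sum(x for x, _ in points) / n, sum(y for _, y in points) / n


def hyperparameter_grid(space: Dict[str, Sequence[Any]]) -> List[Dict[str, Any]]:
    """Expand {"name": [values...]} into every combination of settings."""
    keys = sorted(space)
    return [dict(zip(keys, values))
            for values in itertools.product(*(space[k] for k in keys))]


def run_all(detectors: Dict[str, Callable[..., Center]],
            spaces: Dict[str, Dict[str, Sequence[Any]]],
            samples: Sequence[Sample],
            max_workers: int = 4) -> List[Tuple[str, Dict[str, Any], int, Center]]:
    """Run every detector with every setting on every sample, in parallel."""
    jobs = [(name, params, index)
            for name in sorted(detectors)
            for params in hyperparameter_grid(spaces.get(name, {}))
            for index in range(len(samples))]

    def run(job: Tuple[str, Dict[str, Any], int]):
        name, params, index = job
        return name, params, index, detectors[name](samples[index], **params)

    # Jobs share no state, so they can be distributed freely;
    # map() returns the results in the order of `jobs`.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run, jobs))
```

Because the job list is a plain Cartesian product, the search space grows multiplicatively with each added hyperparameter, which is why distributing the jobs over several machines becomes attractive.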

2.2. Inclusion Criteria of the Pupil Detection Algorithms

- Availability of implementations: To ensure reproducibility, the published algorithms had to be accompanied by associated implementations. Although textual descriptions may exist, replicating an algorithm without access to its original implementation can introduce unintended variations, leading to inconsistent results. Therefore, only algorithms with readily available and accurate implementations as intended by the original authors were included.

- Independence of dependencies and programming languages: While no strict enforcement of specific dependencies or programming languages was imposed, a preference was given to algorithms that could be executed on UNIX-based systems. This choice was driven by the desire to avoid proprietary components and promote open-source software in science. As a result, algorithms solely available as compiled Microsoft Windows libraries without accompanying source code were excluded. Similarly, algorithms implemented in scripting languages requiring a paid license, such as MATLAB, were not included.

2.3. Architecture and Design of the Framework

2.4. Validation Data and Procedure

3. Results

3.1. Defining our Evaluation Criteria

3.2. Generating the Predictions of all Pupil Detection Algorithms

3.3. Evaluation of the Pupil Detection Algorithms

3.4. Testing for Statistically Significant Performance Differences in Pupil Detectors

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Punde, P.A.; Jadhav, M.E.; Manza, R.R. A Study of Eye Tracking Technology and Its Applications. In Proceedings of the 2017 1st International Conference on Intelligent Systems and Information Management (ICISIM), Aurangabad, India, 5–6 October 2017; pp. 86–90.

2. Larsen, R.S.; Waters, J. Neuromodulatory Correlates of Pupil Dilation. Front. Neural Circuits 2018, 12, 21.

3. McGinley, M.J.; Vinck, M.; Reimer, J.; Batista-Brito, R.; Zagha, E.; Cadwell, C.R.; Tolias, A.S.; Cardin, J.A.; McCormick, D.A. Waking State: Rapid Variations Modulate Neural and Behavioral Responses. Neuron 2015, 87, 1143–1161.

4. Iriki, A.; Tanaka, M.; Iwamura, Y. Attention-Induced Neuronal Activity in the Monkey Somatosensory Cortex Revealed by Pupillometrics. Neurosci. Res. 1996, 25, 173–181.

5. Alnæs, D.; Sneve, M.H.; Espeseth, T.; Endestad, T.; van de Pavert, S.H.P.; Laeng, B. Pupil Size Signals Mental Effort Deployed during Multiple Object Tracking and Predicts Brain Activity in the Dorsal Attention Network and the Locus Coeruleus. J. Vis. 2014, 14, 1.

6. McGinley, M.J.; David, S.V.; McCormick, D.A. Cortical Membrane Potential Signature of Optimal States for Sensory Signal Detection. Neuron 2015, 87, 179–192.

7. Murphy, P.R.; Robertson, I.H.; Balsters, J.H.; O’Connell, R.G. Pupillometry and P3 Index the Locus Coeruleus–Noradrenergic Arousal Function in Humans. Psychophysiology 2011, 48, 1532–1543.

8. Muhafiz, E.; Bozkurt, E.; Erdoğan, C.E.; Nizamoğulları, Ş.; Demir, M.S. Static and Dynamic Pupillary Characteristics in Multiple Sclerosis. Eur. J. Ophthalmol. 2022, 32, 2173–2180.

9. Guillemin, C.; Hammad, G.; Read, J.; Requier, F.; Charonitis, M.; Delrue, G.; Vandeleene, N.; Lommers, E.; Maquet, P.; Collette, F. Pupil Response Speed as a Marker of Cognitive Fatigue in Early Multiple Sclerosis. Mult. Scler. Relat. Disord. 2022, 65, 104001.

10. Prescott, B.R.; Saglam, H.; Duskin, J.A.; Miller, M.I.; Thakur, A.S.; Gholap, E.A.; Hutch, M.R.; Smirnakis, S.M.; Zafar, S.F.; Dupuis, J.; et al. Anisocoria and Poor Pupil Reactivity by Quantitative Pupillometry in Patients with Intracranial Pathology. Crit. Care Med. 2022, 50, e143–e153.

11. Chougule, P.S.; Najjar, R.P.; Finkelstein, M.T.; Kandiah, N.; Milea, D. Light-Induced Pupillary Responses in Alzheimer’s Disease. Front. Neurol. 2019, 10, 360.

12. La Morgia, C.; Romagnoli, M.; Pizza, F.; Biscarini, F.; Filardi, M.; Donadio, V.; Carbonelli, M.; Amore, G.; Park, J.C.; Tinazzi, M.; et al. Chromatic Pupillometry in Isolated Rapid Eye Movement Sleep Behavior Disorder. Mov. Disord. 2022, 37, 205–210.

13. You, S.; Hong, J.-H.; Yoo, J. Analysis of Pupillometer Results According to Disease Stage in Patients with Parkinson’s Disease. Sci. Rep. 2021, 11, 17880.

14. Stein, N.; Niehorster, D.C.; Watson, T.; Steinicke, F.; Rifai, K.; Wahl, S.; Lappe, M. A Comparison of Eye Tracking Latencies Among Several Commercial Head-Mounted Displays. i-Perception 2021, 12, 2041669520983338.

15. Cognolato, M.; Atzori, M.; Müller, H. Head-Mounted Eye Gaze Tracking Devices: An Overview of Modern Devices and Recent Advances. J. Rehabil. Assist. Technol. Eng. 2018, 5, 2055668318773991.

16. Cazzato, D.; Leo, M.; Distante, C.; Voos, H. When I Look into Your Eyes: A Survey on Computer Vision Contributions for Human Gaze Estimation and Tracking. Sensors 2020, 20, 3739.

17. Zandi, B.; Lode, M.; Herzog, A.; Sakas, G.; Khanh, T.Q. PupilEXT: Flexible Open-Source Platform for High-Resolution Pupillometry in Vision Research. Front. Neurosci. 2021, 15, 676220.

18. Stengel, M.; Grogorick, S.; Eisemann, M.; Eisemann, E.; Magnor, M.A. An Affordable Solution for Binocular Eye Tracking and Calibration in Head-Mounted Displays. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 15–24.

19. Vicente-Saez, R.; Martinez-Fuentes, C. Open Science Now: A Systematic Literature Review for an Integrated Definition. J. Bus. Res. 2018, 88, 428–436.

20. Tonsen, M.; Zhang, X.; Sugano, Y.; Bulling, A. Labelled Pupils in the Wild: A Dataset for Studying Pupil Detection in Unconstrained Environments. In Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, SC, USA, 14–17 March 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 139–142.

21. Chen, Y.; Ning, Y.; Kao, S.L.; Støer, N.C.; Müller-Riemenschneider, F.; Venkataraman, K.; Khoo, E.Y.H.; Tai, E.-S.; Tan, C.S. Using Marginal Standardisation to Estimate Relative Risk without Dichotomising Continuous Outcomes. BMC Med. Res. Methodol. 2019, 19, 165.

22. Orquin, J.L.; Holmqvist, K. Threats to the Validity of Eye-Movement Research in Psychology. Behav. Res. 2018, 50, 1645–1656.

23. Steinhauer, S.R.; Bradley, M.M.; Siegle, G.J.; Roecklein, K.A.; Dix, A. Publication Guidelines and Recommendations for Pupillary Measurement in Psychophysiological Studies. Psychophysiology 2022, 59, e14035.

24. Godfroid, A.; Hui, B. Five Common Pitfalls in Eye-Tracking Research. Second. Lang. Res. 2020, 36, 277–305.

25. Kret, M.E.; Sjak-Shie, E.E. Preprocessing Pupil Size Data: Guidelines and Code. Behav. Res. 2019, 51, 1336–1342.

26. van Rij, J.; Hendriks, P.; van Rijn, H.; Baayen, R.H.; Wood, S.N. Analyzing the Time Course of Pupillometric Data. Trends Hear. 2019, 23, 233121651983248.

27. Fuhl, W.; Santini, T.C.; Kübler, T.; Kasneci, E. ElSe: Ellipse Selection for Robust Pupil Detection in Real-World Environments. In Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, SC, USA, 14–17 March 2016; ACM: New York, NY, USA, 2016; pp. 123–130.

28. Fuhl, W.; Schneider, J.; Kasneci, E. 1000 Pupil Segmentations in a Second Using Haar Like Features and Statistical Learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Virtual, 11–17 October 2021; pp. 3466–3476.

29. Santini, T. Towards Ubiquitous Wearable Eye Tracking. Ph.D. Thesis, Universität Tübingen, Tübingen, Germany, 2019.

30. Kothari, R.S.; Bailey, R.J.; Kanan, C.; Pelz, J.B.; Diaz, G.J. EllSeg-Gen, towards Domain Generalization for Head-Mounted Eyetracking. Proc. ACM Hum.-Comput. Interact. 2022, 6, 1–17.

31. Niehorster, D.C.; Santini, T.; Hessels, R.S.; Hooge, I.T.C.; Kasneci, E.; Nyström, M. The Impact of Slippage on the Data Quality of Head-Worn Eye Trackers. Behav. Res. 2020, 52, 1140–1160.

32. Petersch, B.; Dierkes, K. Gaze-Angle Dependency of Pupil-Size Measurements in Head-Mounted Eye Tracking. Behav. Res. 2021, 54, 763–779.

33. Kulkarni, O.N.; Patil, V.; Singh, V.K.; Atrey, P.K. Accuracy and Fairness in Pupil Detection Algorithm. In Proceedings of the 2021 IEEE Seventh International Conference on Multimedia Big Data (BigMM), Taichung, Taiwan, 14–17 November 2021; pp. 17–24.

34. Akinlar, C.; Kucukkartal, H.K.; Topal, C. Accurate CNN-Based Pupil Segmentation with an Ellipse Fit Error Regularization Term. Expert. Syst. Appl. 2022, 188, 116004.

35. Xiang, Z.; Zhao, X.; Fang, A. Pupil Center Detection Inspired by Multi-Task Auxiliary Learning Characteristic. Multimed. Tools Appl. 2022, 81, 40067–40088.

36. Bonteanu, P.; Bozomitu, R.G.; Cracan, A.; Bonteanu, G. A New Pupil Detection Algorithm Based on Multiple Angular Integral Projection Functions. In Proceedings of the 2021 International Conference on e-Health and Bioengineering (EHB), Iasi, Romania, 18–19 November 2021; pp. 1–4.

37. Cai, X.; Zeng, J.; Shan, S. Landmark-Aware Self-Supervised Eye Semantic Segmentation. In Proceedings of the 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), Jodhpur, India, 15–18 December 2021; pp. 1–8.

38. Kothari, R.S.; Chaudhary, A.K.; Bailey, R.J.; Pelz, J.B.; Diaz, G.J. EllSeg: An Ellipse Segmentation Framework for Robust Gaze Tracking. IEEE Trans. Vis. Comput. Graph. 2021, 27, 2757–2767.

39. Larumbe-Bergera, A.; Garde, G.; Porta, S.; Cabeza, R.; Villanueva, A. Accurate Pupil Center Detection in Off-the-Shelf Eye Tracking Systems Using Convolutional Neural Networks. Sensors 2021, 21, 6847.

40. Shi, L.; Wang, C.; Jia, H.; Hu, X. EPS: Robust Pupil Edge Points Selection with Haar Feature and Morphological Pixel Patterns. Int. J. Patt. Recogn. Artif. Intell. 2021, 35, 2156002.

41. Wan, Z.-H.; Xiong, C.-H.; Chen, W.-B.; Zhang, H.-Y. Robust and Accurate Pupil Detection for Head-Mounted Eye Tracking. Comp. Electr. Eng. 2021, 93, 107193.

42. Wang, Z.; Zhao, Y.; Liu, Y.; Lu, F. Edge-Guided Near-Eye Image Analysis for Head Mounted Displays. In Proceedings of the 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Bari, Italy, 4–8 October 2021; pp. 11–20.

43. Bonteanu, P.; Bozomitu, R.G.; Cracan, A.; Bonteanu, G. A Pupil Detection Algorithm Based on Contour Fourier Descriptors Analysis. In Proceedings of the 2020 IEEE 26th International Symposium for Design and Technology in Electronic Packaging (SIITME), Pitesti, Romania, 21–24 October 2020; pp. 98–101.

44. Fuhl, W.; Gao, H.; Kasneci, E. Tiny Convolution, Decision Tree, and Binary Neuronal Networks for Robust and Real Time Pupil Outline Estimation. In Proceedings of the ACM Symposium on Eye Tracking Research and Applications, Stuttgart, Germany, 2–5 June 2020; ACM: New York, NY, USA, 2020; pp. 1–5.

45. Han, S.Y.; Kwon, H.J.; Kim, Y.; Cho, N.I. Noise-Robust Pupil Center Detection Through CNN-Based Segmentation with Shape-Prior Loss. IEEE Access 2020, 8, 64739–64749.

46. Manuri, F.; Sanna, A.; Petrucci, C.P. PDIF: Pupil Detection After Isolation and Fitting. IEEE Access 2020, 8, 30826–30837.

47. Bonteanu, P.; Bozomitu, R.G.; Cracan, A.; Bonteanu, G. A New Pupil Detection Algorithm Based on Circular Hough Transform Approaches. In Proceedings of the 2019 IEEE 25th International Symposium for Design and Technology in Electronic Packaging (SIITME), Cluj-Napoca, Romania, 23–26 October 2019; pp. 260–263.

48. Bonteanu, P.; Bozomitu, R.G.; Cracan, A.; Bonteanu, G. A High Detection Rate Pupil Detection Algorithm Based on Contour Circularity Evaluation. In Proceedings of the 2019 IEEE 25th International Symposium for Design and Technology in Electronic Packaging (SIITME), Cluj-Napoca, Romania, 23–26 October 2019; pp. 264–267.

49. Bonteanu, P.; Cracan, A.; Bozomitu, R.G.; Bonteanu, G. A Robust Pupil Detection Algorithm Based on a New Adaptive Thresholding Procedure. In Proceedings of the 2019 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 21–23 November 2019; pp. 1–4.

50. Bozomitu, R.G.; Păsărică, A.; Tărniceriu, D.; Rotariu, C. Development of an Eye Tracking-Based Human-Computer Interface for Real-Time Applications. Sensors 2019, 19, 3630.

51. Eivazi, S.; Santini, T.; Keshavarzi, A.; Kübler, T.; Mazzei, A. Improving Real-Time CNN-Based Pupil Detection through Domain-Specific Data Augmentation. In Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications, Denver, CO, USA, 25–28 June 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–6.

52. Han, S.Y.; Kim, Y.; Lee, S.H.; Cho, N.I. Pupil Center Detection Based on the UNet for the User Interaction in VR and AR Environments. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 958–959.

53. Krause, A.F.; Essig, K. Boosting Speed- and Accuracy of Gradient Based Dark Pupil Tracking Using Vectorization and Differential Evolution. In Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications, Denver, CO, USA, 25–28 June 2019; ACM: New York, NY, USA, 2019; pp. 1–5.

54. Miron, C.; Pasarica, A.; Bozomitu, R.G.; Manta, V.; Timofte, R.; Ciucu, R. Efficient Pupil Detection with a Convolutional Neural Network. In Proceedings of the 2019 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 21–23 November 2019; pp. 1–4.

55. Yiu, Y.-H.; Aboulatta, M.; Raiser, T.; Ophey, L.; Flanagin, V.L.; zu Eulenburg, P.; Ahmadi, S.-A. DeepVOG: Open-Source Pupil Segmentation and Gaze Estimation in Neuroscience Using Deep Learning. J. Neurosci. Methods 2019, 324, 108307.

56. Fuhl, W.; Geisler, D.; Santini, T.; Appel, T.; Rosenstiel, W.; Kasneci, E. CBF: Circular Binary Features for Robust and Real-Time Pupil Center Detection. In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications, Warsaw, Poland, 14–17 June 2018; ACM: New York, NY, USA, 2018; pp. 1–6.

57. Fuhl, W.; Eivazi, S.; Hosp, B.; Eivazi, A.; Rosenstiel, W.; Kasneci, E. BORE: Boosted-Oriented Edge Optimization for Robust, Real Time Remote Pupil Center Detection. In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications, Warsaw, Poland, 14–17 June 2018; ACM: New York, NY, USA, 2018; pp. 1–5.

58. George, A.; Routray, A. ESCaF: Pupil Centre Localization Algorithm with Candidate Filtering. arXiv 2018, arXiv:1807.10520.

59. Li, J.; Li, S.; Chen, T.; Liu, Y. A Geometry-Appearance-Based Pupil Detection Method for Near-Infrared Head-Mounted Cameras. IEEE Access 2018, 6, 23242–23252.

60. Martinikorena, I.; Cabeza, R.; Villanueva, A.; Urtasun, I.; Larumbe, A. Fast and Robust Ellipse Detection Algorithm for Head-Mounted Eye Tracking Systems. Mach. Vis. Appl. 2018, 29, 845–860.

61. Santini, T.; Fuhl, W.; Kasneci, E. PuReST: Robust Pupil Tracking for Real-Time Pervasive Eye Tracking. In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications, Warsaw, Poland, 14–17 June 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–5.

62. Santini, T.; Fuhl, W.; Kasneci, E. PuRe: Robust Pupil Detection for Real-Time Pervasive Eye Tracking. Comput. Vis. Image Underst. 2018, 170, 40–50.

63. Vera-Olmos, F.J.; Pardo, E.; Melero, H.; Malpica, N. DeepEye: Deep Convolutional Network for Pupil Detection in Real Environments. Integr. Comput.-Aided Eng. 2018, 26, 85–95.

64. Fuhl, W.; Santini, T.; Kasneci, G.; Rosenstiel, W.; Kasneci, E. PupilNet v2.0: Convolutional Neural Networks for CPU Based Real Time Robust Pupil Detection. arXiv 2017, arXiv:1711.00112.

65. Topal, C.; Cakir, H.I.; Akinlar, C. APPD: Adaptive and Precise Pupil Boundary Detection Using Entropy of Contour Gradients. arXiv 2018, arXiv:1709.06366.

66. Vera-Olmos, F.J.; Malpica, N. Deconvolutional Neural Network for Pupil Detection in Real-World Environments. In Proceedings of the Biomedical Applications Based on Natural and Artificial Computing, Corunna, Spain, 19–23 June 2017; Ferrández Vicente, J.M., Álvarez-Sánchez, J.R., de la Paz López, F., Toledo Moreo, J., Adeli, H., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 223–231.

67. Fuhl, W.; Santini, T.; Kasneci, G.; Kasneci, E. PupilNet: Convolutional Neural Networks for Robust Pupil Detection. arXiv 2016, arXiv:1601.04902.

68. Fuhl, W.; Kübler, T.; Sippel, K.; Rosenstiel, W.; Kasneci, E. ExCuSe: Robust Pupil Detection in Real-World Scenarios. In Computer Analysis of Images and Patterns; Azzopardi, G., Petkov, N., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; Volume 9256, pp. 39–51. ISBN 978-3-319-23191-4.

69. Javadi, A.-H.; Hakimi, Z.; Barati, M.; Walsh, V.; Tcheang, L. SET: A Pupil Detection Method Using Sinusoidal Approximation. Front. Neuroeng. 2015, 8, 4.

70. Świrski, L.; Bulling, A.; Dodgson, N. Robust Real-Time Pupil Tracking in Highly off-Axis Images. In Proceedings of the Symposium on Eye Tracking Research and Applications—ETRA’12, Santa Barbara, CA, USA, 28–30 March 2012; ACM Press: New York, NY, USA, 2012; p. 173.

71. Kassner, M.; Patera, W.; Bulling, A. Pupil: An Open Source Platform for Pervasive Eye Tracking and Mobile Gaze-Based Interaction. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication, Seattle, WA, USA, 13–17 September 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 1151–1160.

72. Li, D.; Winfield, D.; Parkhurst, D.J. Starburst: A Hybrid Algorithm for Video-Based Eye Tracking Combining Feature-Based and Model-Based Approaches. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05)—Workshops, San Diego, CA, USA, 21–23 September 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 3, p. 79.

73. Hammerla, N.Y.; Kirkham, R.; Andras, P.; Ploetz, T. On Preserving Statistical Characteristics of Accelerometry Data Using Their Empirical Cumulative Distribution. In Proceedings of the 2013 International Symposium on Wearable Computers, Zurich, Switzerland, 8–12 September 2013; ACM: New York, NY, USA, 2013; pp. 65–68.

| Publication | Code Available? | Programming Language | Included? |
|---|---|---|---|
| Akinlar et al. [34] | ✓ | Python | ✓ |
| Xiang et al. [35] | - | | Excluded: Code not available |
| Bonteanu et al. [36] | - | | Excluded: Code not available |
| Cai et al. [37] | - | | Excluded: Code not available |
| Fuhl et al. [28] | - | | Excluded: Code not available |
| Kothari et al. [38] | ✓ | Python | ✓ |
| Larumbe-Bergera et al. [39] | - | | Excluded: Code not available |
| Shi et al. [40] | - | | Excluded: Code not available |
| Wan et al. [41] | ✓ | Python | ✓ |
| Wang et al. [42] | ✓ | Python | ✓ |
| Bonteanu et al. [43] | - | | Excluded: Code not available |
| Fuhl et al. [44] | - | | Excluded: Code not available |
| Han et al. [45] | ✓ | Python | Excluded: No weights for the neural network |
| Manuri et al. [46] | - | | Excluded: Code not available |
| Bonteanu et al. [47] | - | | Excluded: Code not available |
| Bonteanu et al. [48] | - | | Excluded: Code not available |
| Bonteanu et al. [49] | - | | Excluded: Code not available |
| Bozomitu et al. [50] | - | | Excluded: Code not available |
| Eivazi et al. [51] | ✓ | Python | ✓ |
| Han et al. [52] | - | | Excluded: Code not available |
| Krause et al. [53] | ✓ | C++ | ✓ |
| Miron et al. [54] | - | | Excluded: Code not available |
| Yiu et al. [55] | ✓ | Python | Excluded: Unable to specify the container |
| Fuhl et al. [56] | (✓) | C++ | Excluded: Binary library not available for Linux |
| Fuhl et al. [57] | (✓) | C++ | ✓ |
| George et al. [58] | - | | Excluded: Code not available |
| Li et al. [59] | - | | Excluded: Code not available |
| Martinikorena et al. [60] | ✓ | MATLAB | Excluded: Requires proprietary interpreter |
| Santini et al. [61] | ✓ | C++ | Excluded: Temporal extension of another algorithm |
| Santini et al. [62] | ✓ | C++ | ✓ |
| Vera-Olmos et al. [63] | ✓ | Python | Excluded: Unable to specify the container |
| Fuhl et al. [64] | - | | Excluded: Code not available |
| Topal et al. [65] | - | | Excluded: Code not available |
| Vera-Olmos et al. [66] | - | | Excluded: Code not available |
| Fuhl et al. [67] | - | | Excluded: Code not available |
| Fuhl et al. [27] | ✓ | C++ | ✓ |
| Fuhl et al. [68] | ✓ | C++ | ✓ |
| Javadi et al. [69] | ✓ | .NET | Excluded: Not available for Linux |
| Świrski et al. [70] | ✓ | C++ | ✓ |
| Kassner et al. [71] | ✓ | Python | ✓ |
| Li et al. [72] | ✓ | C++ | ✓ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gundler, C.; Temmen, M.; Gulberti, A.; Pötter-Nerger, M.; Ückert, F. Improving Eye-Tracking Data Quality: A Framework for Reproducible Evaluation of Detection Algorithms. Sensors 2024, 24, 2688. https://doi.org/10.3390/s24092688
