PoseNeRF: In Situ 3D Reconstruction Method Based on Joint Optimization of Pose and Neural Radiation Field for Smooth and Weakly Textured Aeroengine Blade

Abstract

1. Introduction

- (1) This paper proposes PoseNeRF, an in situ, high-fidelity 3D reconstruction method for aeroengine blades based on the joint optimization of camera pose and a neural radiance field (NeRF). The method is applicable to aeroengine out-field inspection, reverse reconstruction, digital twinning, and life assessment.

- (2) A deep-learning background-filtering network based on complex network theory, ComBFNet, is designed. It improves background filtering by strengthening the feature-extraction ability of its backbone, and removing the background in turn improves the fidelity of the blade's 3D reconstruction.

- (3) An implicit 3D reconstruction method based on cone sampling and joint "pose–NeRF" optimization is designed. It targets two sources of error: the excessive blur and aliasing artifacts caused by the smooth, weakly textured blade surface, and the cumulative error introduced by pre-estimating camera poses.
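Contribution (3) relies on cone sampling. One common way to realize cone sampling, assumed here, is Mip-NeRF's integrated positional encoding (IPE); PoseNeRF's exact formulation may differ. Mip-NeRF is one of the baselines compared in this paper. In IPE, each pixel casts a cone rather than a ray, and each sampling interval becomes a conical frustum approximated by a Gaussian. The encoding then takes the expected value of the sin/cos features under that Gaussian. The pure-Python sketch below illustrates the idea. The function names and the `radius` and `num_freqs` parameters are hypothetical, not taken from PoseNeRF's code.

```python
import math

def conical_frustum_gaussian(t0, t1, radius):
    """Mean and variances of a conical frustum between ray distances t0 and t1.

    Uses the Mip-NeRF closed-form approximation; `radius` (hypothetical
    parameter) is the cone radius at t = 1 along the ray.
    """
    t_mu = (t0 + t1) / 2.0
    t_delta = (t1 - t0) / 2.0
    denom = 3.0 * t_mu ** 2 + t_delta ** 2
    mean_t = t_mu + (2.0 * t_mu * t_delta ** 2) / denom
    var_t = t_delta ** 2 / 3.0 - (4.0 / 15.0) * (
        t_delta ** 4 * (12.0 * t_mu ** 2 - t_delta ** 2)) / denom ** 2
    var_r = radius ** 2 * (t_mu ** 2 / 4.0 + (5.0 / 12.0) * t_delta ** 2
                           - (4.0 / 15.0) * t_delta ** 4 / denom)
    return mean_t, var_t, var_r

def integrated_pos_enc(origin, direction, t0, t1, radius, num_freqs=4):
    """Integrated positional encoding of one frustum (illustrative sketch)."""
    mean_t, var_t, var_r = conical_frustum_gaussian(t0, t1, radius)
    d_norm_sq = sum(d * d for d in direction)
    mean = [o + mean_t * d for o, d in zip(origin, direction)]
    # Diagonal of the 3D covariance: spread along the ray plus across it.
    diag_cov = [var_t * d * d + var_r * (1.0 - d * d / d_norm_sq)
                for d in direction]
    sin_feats, cos_feats = [], []
    for level in range(num_freqs):
        scale = 2.0 ** level
        for m, v in zip(mean, diag_cov):
            # For x ~ N(m, v): E[sin(s*x)] = sin(s*m) * exp(-s^2 * v / 2).
            damp = math.exp(-0.5 * scale ** 2 * v)
            sin_feats.append(math.sin(scale * m) * damp)
            cos_feats.append(math.cos(scale * m) * damp)
    return sin_feats + cos_feats
```

A wider cone (larger `radius`) produces larger variances, so high-frequency features are damped more strongly. This is the mechanism by which cone sampling suppresses aliasing compared with sampling single points along a ray.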

2. Related Works

2.1. Image-Based 3D Reconstruction

2.2. Three-Dimensional Reconstruction of Aeroengine Blades

3. Method

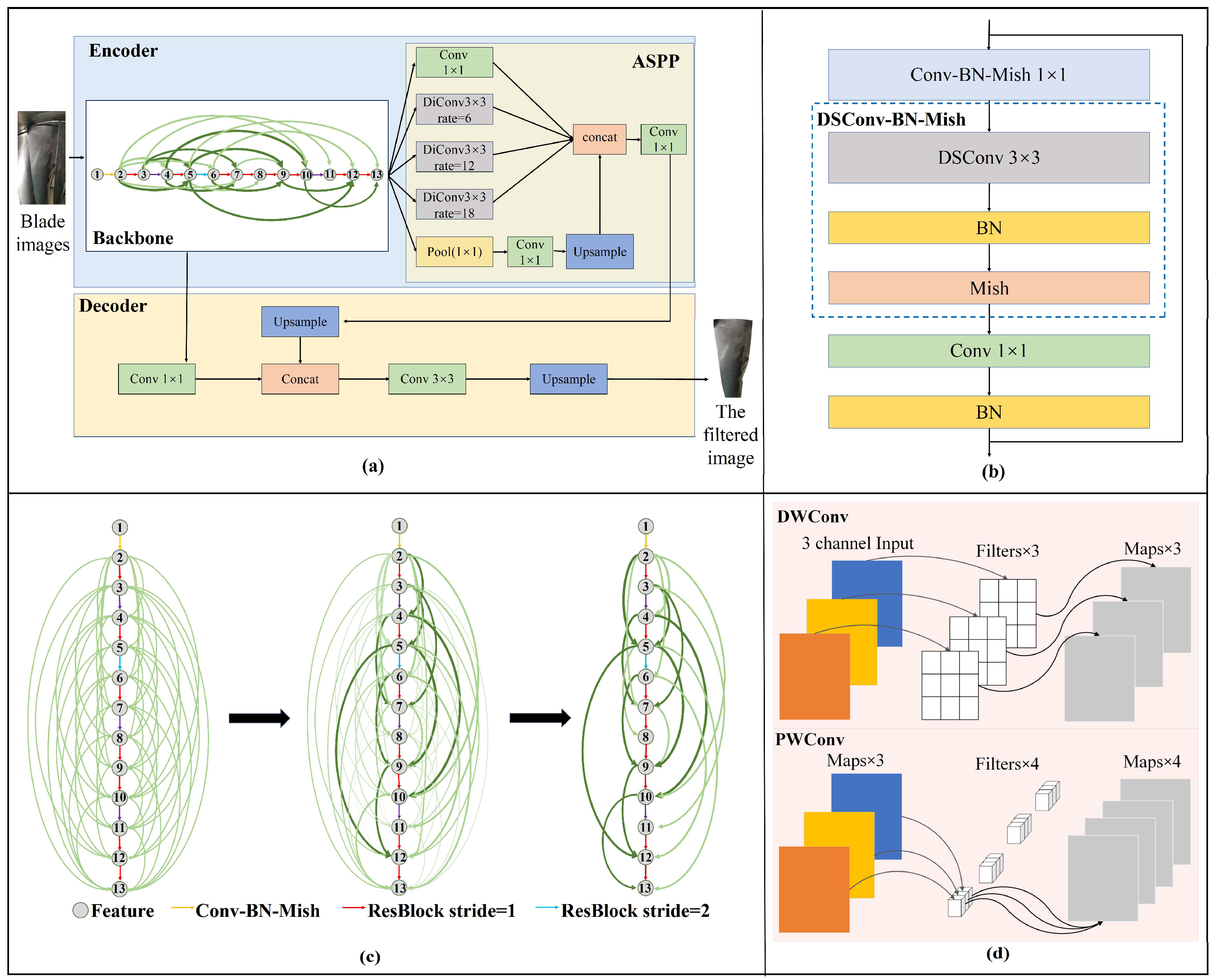

3.1. Background Filtering

3.1.1. Backbone

3.1.2. ASPP and Decoder

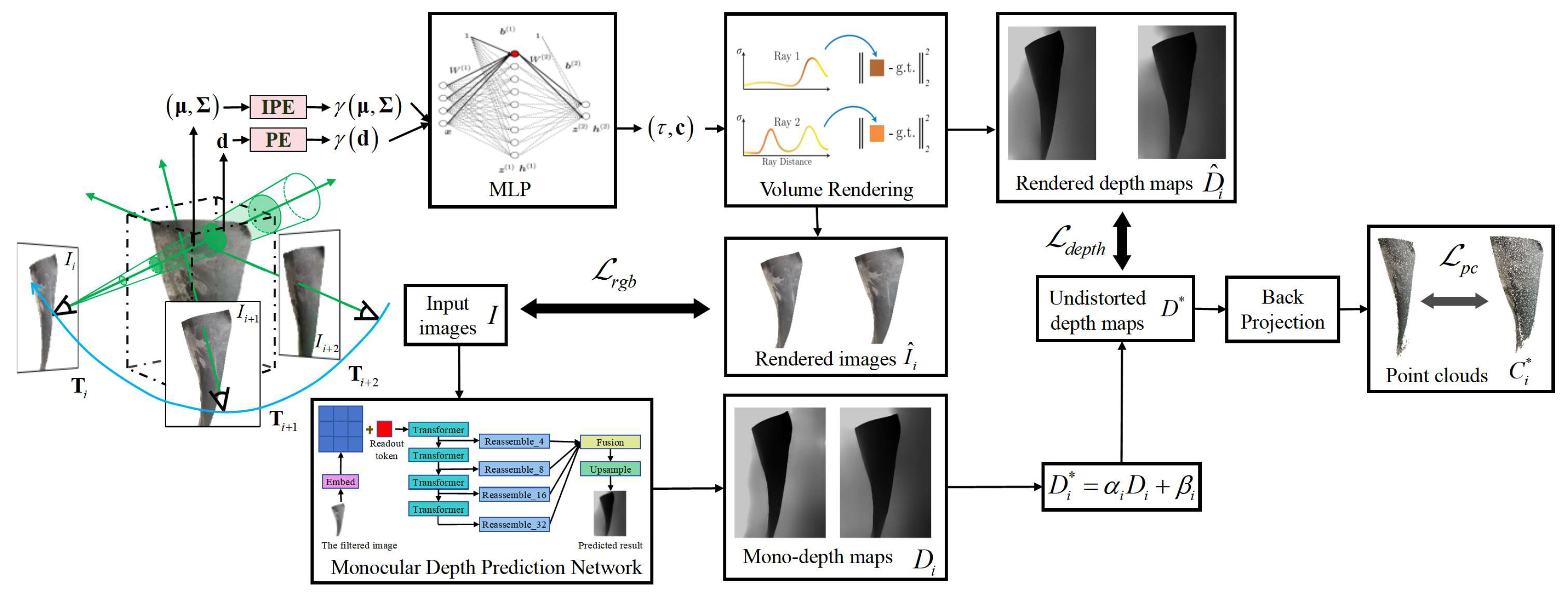

3.2. Three-Dimensional Reconstruction

3.2.1. Monocular Depth Prediction Network

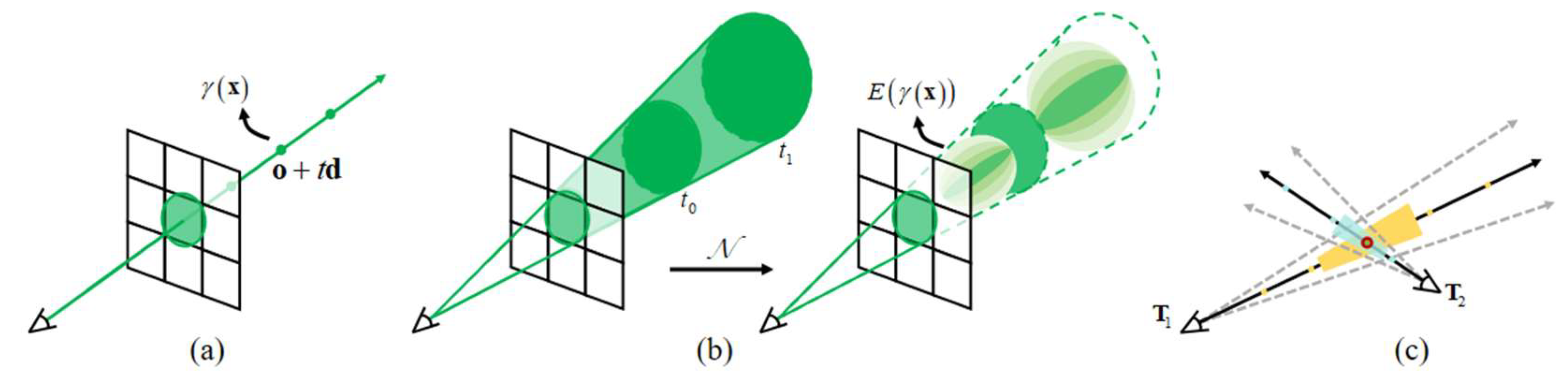

3.2.2. Cone-Sampling-Based NeRF

3.2.3. Joint Optimization Loss Function

4. Experiments and Results

4.1. Implementation Details

4.1.1. Experimental Conditions

4.1.2. Dataset

4.1.3. Metrics

4.2. Background Filtering Experiments

4.3. Three-Dimensional Reconstruction Experiments

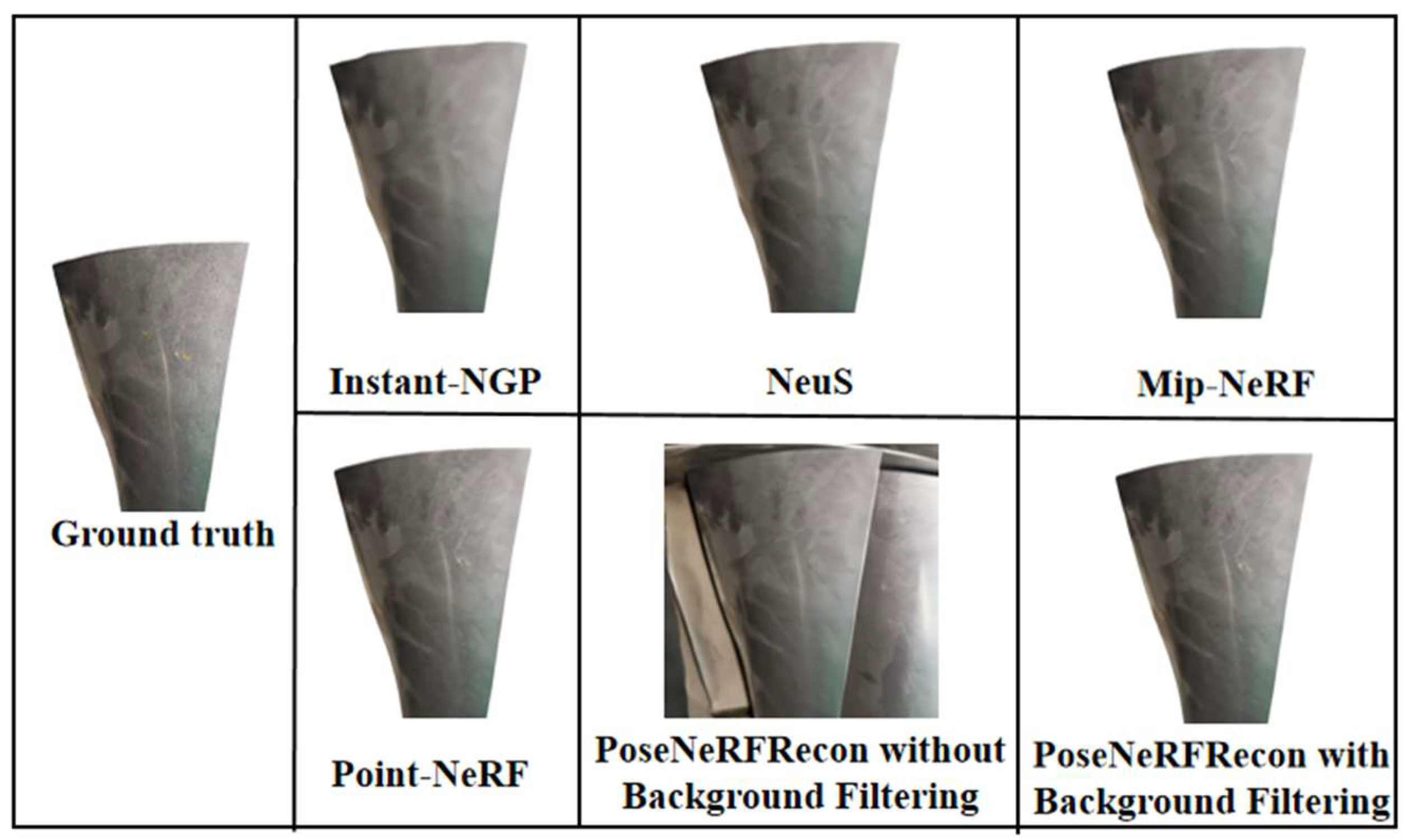

4.3.1. Comparison Experiments

4.3.2. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Experimental Condition | Background Filtering | 3D Reconstruction |
|---|---|---|
| Operating system | Windows 10 | Ubuntu 20.04 |
| Graphics card | GeForce RTX 3080 | GeForce RTX 3080 |
| PyTorch version | 1.7.0 | 1.8.0 |
| Optimizer | Adam | SGD |
| Batch size | 8 | 8 |

| Model | Backbone | mIoU (%) |
|---|---|---|
| U-Net | ResNet50 | 85.9 |
| U-Net | ComNet | 88.8 |
| PSPNet | ResNet50 | 87.1 |
| PSPNet | ComNet | 90.2 |
| YOLOv8n-seg | Xception | 91.8 |
| YOLOv8n-seg | ComNet | 93.1 |
| DeepLabv3 | ResNet50 | 93.4 |
| DeepLabv3 | ComNet | 94.5 |
| ComBFNet (ours) | ComNet | 95.5 |
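The mIoU values above follow the standard segmentation definition. For each class, IoU = TP / (TP + FP + FN), and these per-class values are then averaged. The sketch below assumes two classes (0 = background, 1 = blade) and flattened label maps. Whether the reported figures average over both classes, and whether counts are accumulated over the whole test set or averaged per image, is not stated here. The function `mean_iou` is a hypothetical helper.

```python
def mean_iou(pred, target, num_classes=2):
    """Mean intersection-over-union between two flattened label maps."""
    # Confusion matrix: rows = ground-truth class, columns = predicted class.
    conf = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(pred, target):
        conf[t][p] += 1
    ious = []
    for c in range(num_classes):
        tp = conf[c][c]
        fp = sum(conf[r][c] for r in range(num_classes)) - tp
        fn = sum(conf[c]) - tp
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both prediction and target
            ious.append(tp / denom)
    return sum(ious) / len(ious)
```

For example, with `pred = [0, 1, 1, 1]` and `target = [0, 0, 1, 1]`, the background IoU is 1/2 and the blade IoU is 2/3, giving an mIoU of 7/12 ≈ 0.583.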

| Method | PSNR (dB) ↑ | SSIM ↑ | LPIPS ↓ |
|---|---|---|---|
| Instant-NGP | 21.94 | 0.618 | 0.367 |
| NeuS | 22.92 | 0.655 | 0.357 |
| Mip-NeRF | 23.57 | 0.672 | 0.296 |
| Point-NeRF | 24.68 | 0.689 | 0.275 |
| PoseNeRF woBF | 24.53 | 0.687 | 0.278 |
| PoseNeRF wBF | 25.59 | 0.719 | 0.239 |
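Of the three image metrics, PSNR is the simplest to compute: PSNR = 10 log10(MAX² / MSE), with higher values indicating better quality. SSIM requires windowed local statistics and LPIPS requires a pretrained network, so both are normally taken from reference implementations and are not sketched here. The sketch below assumes rendered and ground-truth images flattened to equal-length lists with values in [0, 1]. The function `psnr` is a hypothetical helper.

```python
import math

def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    if len(pred) != len(target):
        raise ValueError("images must have the same number of values")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)
```

Because PSNR is logarithmic in MSE, the gain from 24.53 dB (woBF) to 25.59 dB (wBF) corresponds to an MSE that is lower by a factor of about 10^(1.06/10) ≈ 1.28.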

| Method | STD ↓ | RMSE ↓ |
|---|---|---|
| Instant-NGP | 0.57 | 0.78 |
| NeuS | 1.95 | 2.16 |
| Mip-NeRF | 1.46 | 1.24 |
| Point-NeRF | 1.72 | 1.65 |
| PoseNeRF woBF | 0.61 | 0.83 |
| PoseNeRF wBF | 0.47 | 0.69 |
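The table does not state which deviations the STD and RMSE summarize, nor their unit. The sketch below assumes they are signed per-point deviations between the reconstructed blade and a reference model, and it uses population statistics (divide by n); some tools divide by n − 1 for the standard deviation instead. The function `rmse_and_std` is a hypothetical helper.

```python
import math

def rmse_and_std(errors):
    """RMSE and population standard deviation of signed deviations."""
    n = len(errors)
    mean = sum(errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    std = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)
    return rmse, std
```

Under these definitions, RMSE also absorbs any systematic offset (the mean deviation), while STD measures only the spread around that mean.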

| Cone | Ray | wBF | woBF | y1 | y2 | PSNR (dB) ↑ | SSIM ↑ | LPIPS ↓ | RPEr ↓ | RPEt ↓ |
|---|---|---|---|---|---|---|---|---|---|---|
| | √ | | √ | 0.2 | 0.2 | 20.08 | 0.549 | 0.445 | 0.329 | 0.087 |
| √ | | | √ | 0.2 | 0.2 | 22.38 | 0.619 | 0.279 | 0.229 | 0.083 |
| | √ | √ | | 0.2 | 0.2 | 20.59 | 0.580 | 0.391 | 0.339 | 0.094 |
| | √ | | √ | 0.6 | 0.6 | 22.01 | 0.619 | 0.331 | 0.231 | 0.078 |
| | √ | | √ | 1.0 | 1.0 | 22.34 | 0.629 | 0.299 | 0.174 | 0.069 |
| | √ | √ | | 1.0 | 1.0 | 23.29 | 0.631 | 0.319 | 0.175 | 0.071 |
| √ | | | √ | 1.0 | 1.0 | 24.13 | 0.687 | 0.268 | 0.148 | 0.064 |
| √ | | √ | | 0.2 | 0.2 | 23.45 | 0.659 | 0.321 | 0.314 | 0.085 |
| √ | | √ | | 0.6 | 0.6 | 24.48 | 0.702 | 0.251 | 0.212 | 0.075 |
| √ | | √ | | 1.0 | 1.0 | 25.59 | 0.719 | 0.239 | 0.147 | 0.068 |
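RPEr and RPEt are read here as the rotational and translational relative pose error, as defined for the TUM RGB-D benchmark by Sturm et al. For frame pairs (i, i + Δ), the error is E_i = (Q_i⁻¹ Q_{i+Δ})⁻¹ (P_i⁻¹ P_{i+Δ}), where Q are ground-truth poses and P are estimated poses. The frame spacing, the rotation unit, and whether the table reports RMSE or mean values are not given. The sketch therefore assumes Δ = 1, degrees, RMSE, and estimated poses already aligned to the ground truth's frame and scale. Poses are (R, t) pairs with R a 3 × 3 nested list. All function names are hypothetical.

```python
import math

def _transpose(m):
    return [list(row) for row in zip(*m)]

def _matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def _matvec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

def relative(pose_a, pose_b):
    """Return pose_a^-1 * pose_b for rigid poses given as (R, t)."""
    r_a, t_a = pose_a
    r_b, t_b = pose_b
    r_a_t = _transpose(r_a)  # the inverse of a rotation matrix is its transpose
    return (_matmul(r_a_t, r_b),
            _matvec(r_a_t, [t_b[k] - t_a[k] for k in range(3)]))

def relative_pose_error(gt, est, delta=1):
    """RMSE of rotational (degrees) and translational RPE over frame pairs."""
    rot_errs, trans_errs = [], []
    for i in range(len(gt) - delta):
        gt_motion = relative(gt[i], gt[i + delta])
        est_motion = relative(est[i], est[i + delta])
        r_err, t_err = relative(gt_motion, est_motion)  # E_i = Q_rel^-1 P_rel
        trans_errs.append(math.sqrt(sum(x * x for x in t_err)))
        cos_angle = (r_err[0][0] + r_err[1][1] + r_err[2][2] - 1.0) / 2.0
        cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
        rot_errs.append(math.degrees(math.acos(cos_angle)))

    def rms(xs):
        return math.sqrt(sum(x * x for x in xs) / len(xs))

    return rms(rot_errs), rms(trans_errs)
```

Because RPE compares motions between nearby frames rather than absolute positions, it measures the local drift that accumulates when camera poses are pre-estimated. Joint pose–NeRF optimization is intended to reduce this drift.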

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xiao, Y.; Wu, X.; Yin, Y.; Cai, Y.; Hou, Y. PoseNeRF: In Situ 3D Reconstruction Method Based on Joint Optimization of Pose and Neural Radiation Field for Smooth and Weakly Textured Aeroengine Blade. Sensors 2025, 25, 6145. https://doi.org/10.3390/s25196145
