Wireless Inertial Measurement Units in Performing Arts

Abstract

1. Introduction

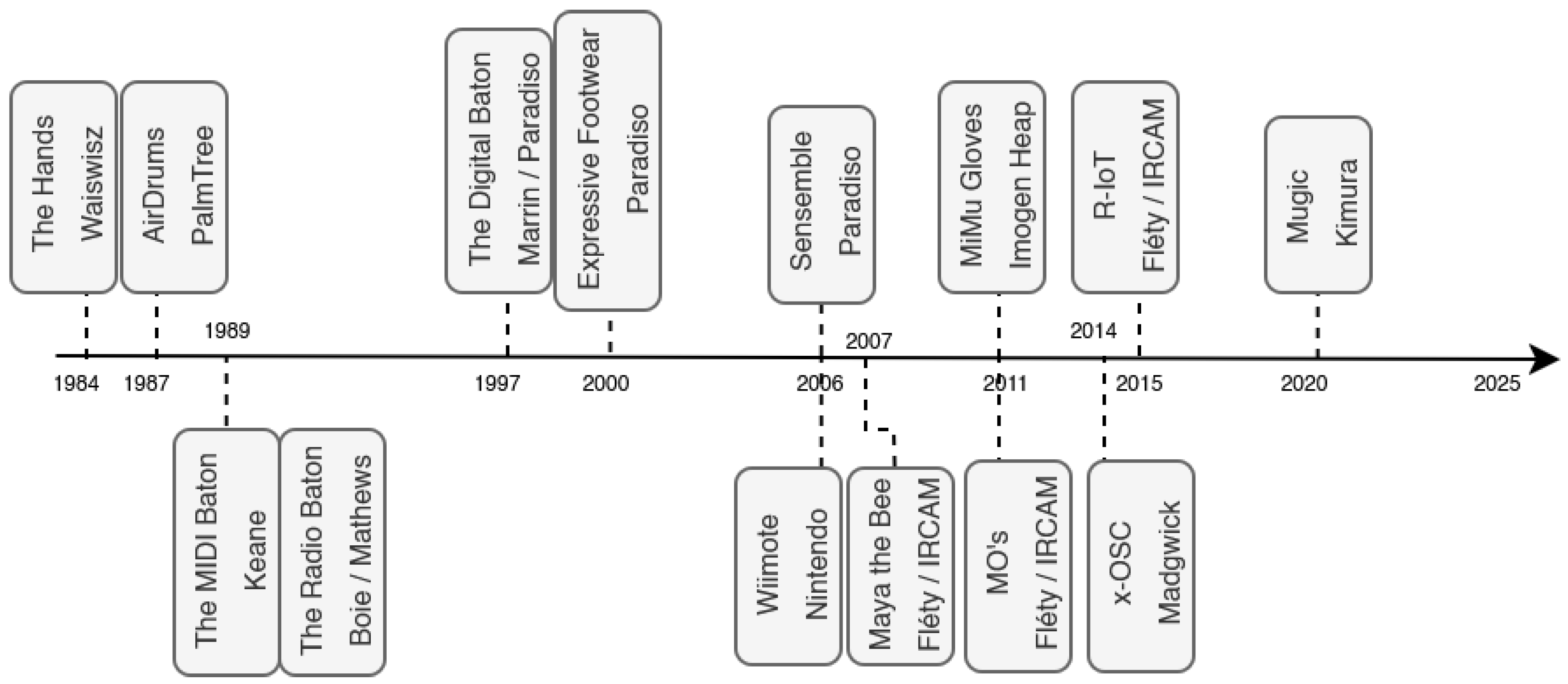

2. Movement Sensing in Performing Arts: Historical Perspectives

2.1. Capacitive Sensing and Sonar Systems

2.2. Inertial Measurement Units

- compact form factor (a few cm) and lightweight design (<20 g), suitable for attachment to a musical instrument or a performer’s body,

- robustness over time, making them compatible with touring conditions (bending sensors, by contrast, wear out quickly),

- low latency (<10 ms) and low jitter, both essential for musical controllers.

3. Inertial Sensing and Wireless Transmission: Hardware Principles

3.1. Inertial Measurement Unit (IMU)

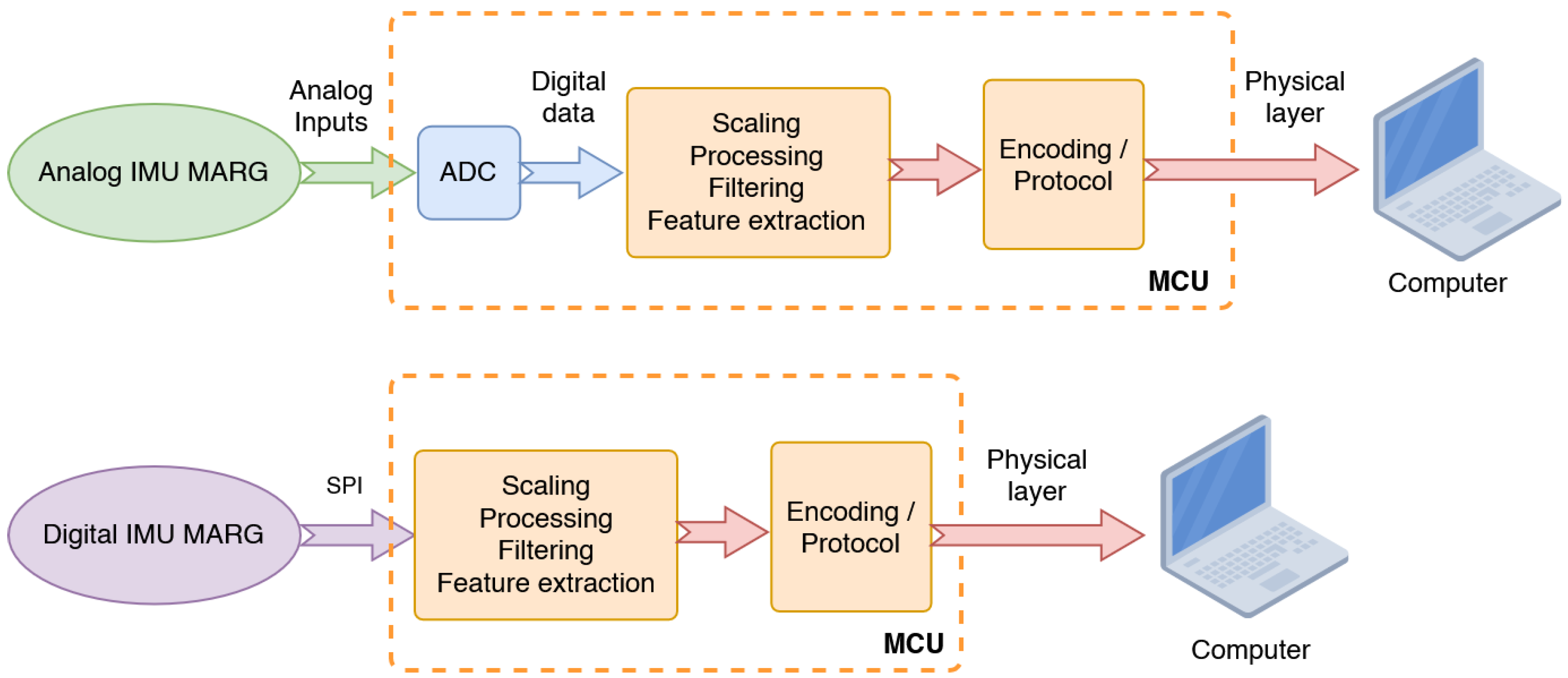

3.2. Data Acquisition and Transmission

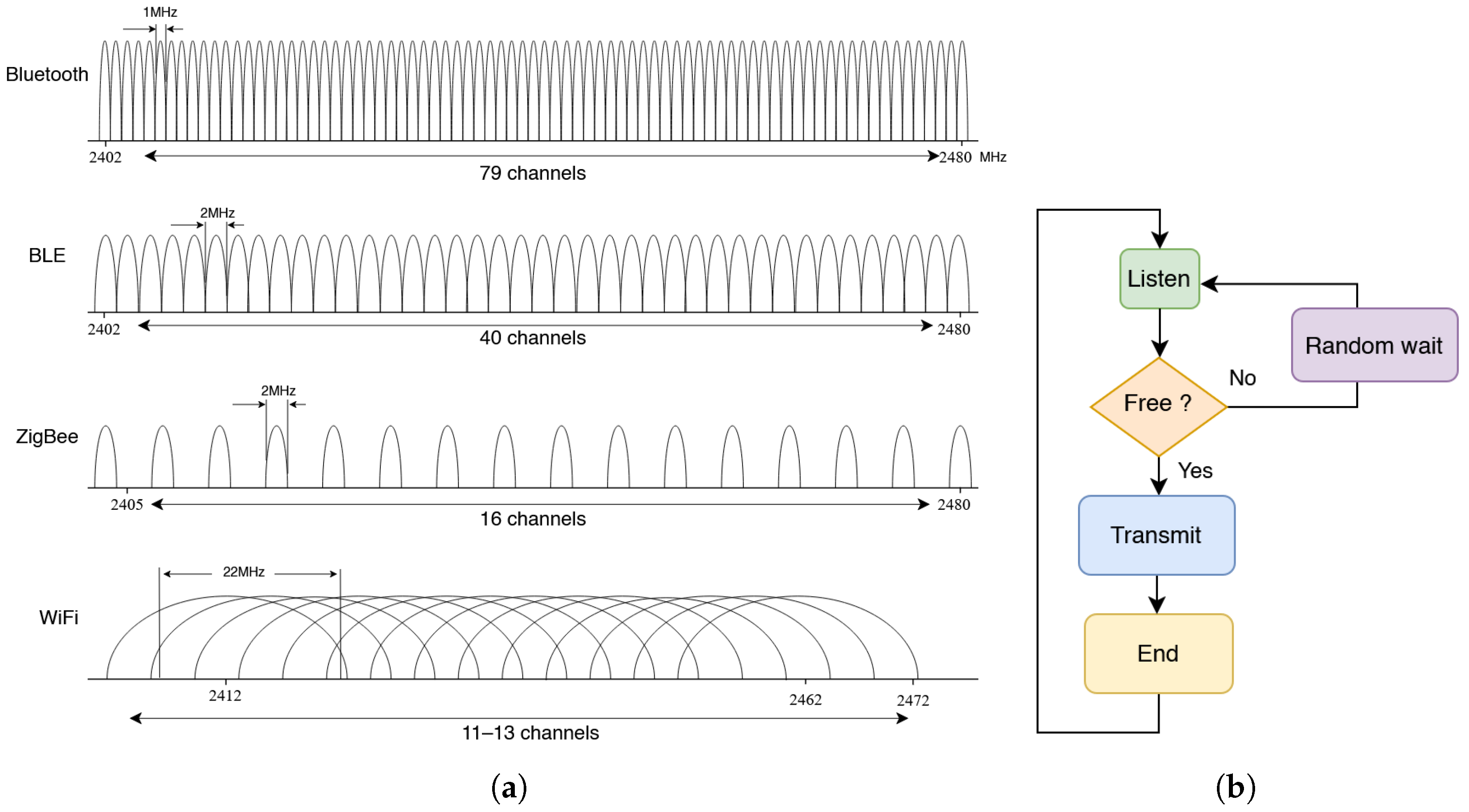

3.3. WiFi Versus Bluetooth

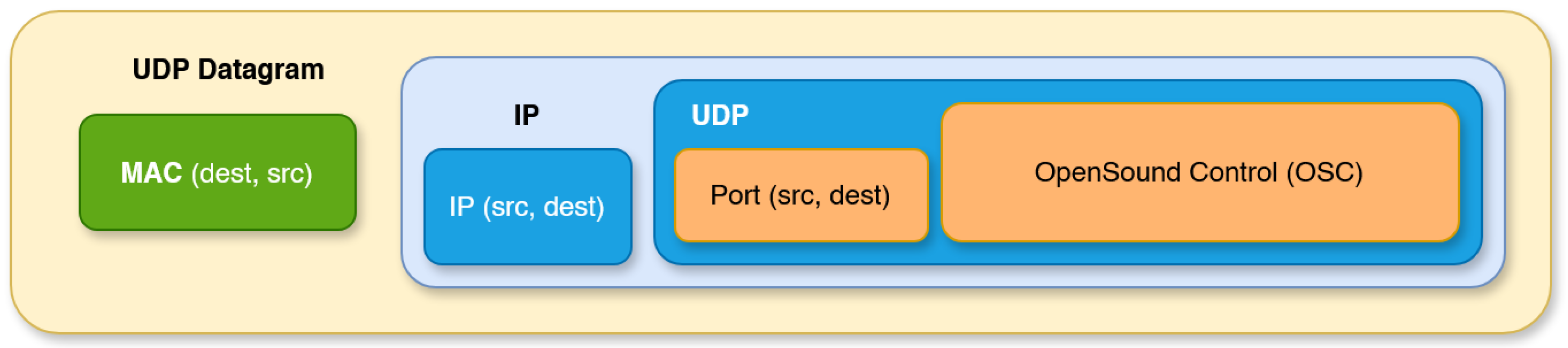

3.4. Protocol and Data Transmission

3.4.1. Sample Rate and Resolution Considerations

3.4.2. Efficiency

4. IMU Processing for Performing Arts

- Triggering events such as sound samples or MIDI events. This typically requires computing filtered acceleration intensities (see Section 4.2.1) combined with onset detection (see Section 4.2.3), or detecting zero crossings in the gyroscope signals.

- Continuous “direct” mapping using the orientation (see Section 4.1.4), angular velocities from gyroscopes, or filtered acceleration intensities.

- So-called “indirect” mapping strategies using machine learning (software examples in Section 4.3). This often involves concatenating several of the processed parameters mentioned above (e.g., filtered acceleration intensities, orientation, and angular velocities).
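The triggering strategy in the first item can be sketched in Python as follows. This is only an illustration under stated assumptions, not the method of Sections 4.2.1 and 4.2.3: it assumes that the intensity is obtained by differentiating each acceleration axis, summing the squares, and smoothing with a one-pole low-pass filter, and that onsets are detected with a threshold plus hysteresis. The function names, the `smoothing` coefficient, the `threshold` and the `release_ratio` are hypothetical.

```python
def movement_intensity(samples, dt, smoothing=0.9):
    """Return one smoothed intensity value per accelerometer sample.

    samples: sequence of (ax, ay, az) tuples; dt: sample period in seconds.
    Assumed recipe: per-axis derivative, sum of squares, one-pole low-pass.
    """
    intensities = []
    previous = None
    state = 0.0
    for sample in samples:
        if previous is None:
            energy = 0.0  # no derivative available for the first sample
        else:
            energy = sum(((c - p) / dt) ** 2 for c, p in zip(sample, previous))
        # One-pole low-pass filter: smoothing close to 1 means slower response.
        state = smoothing * state + (1.0 - smoothing) * energy
        intensities.append(state)
        previous = sample
    return intensities


def detect_onsets(intensities, threshold, release_ratio=0.5):
    """Return the indices where the intensity rises above `threshold`.

    Hysteresis: after an onset, the detector re-arms only once the
    intensity has fallen below threshold * release_ratio.
    """
    onsets = []
    armed = True
    for index, value in enumerate(intensities):
        if armed and value > threshold:
            onsets.append(index)
            armed = False
        elif not armed and value < threshold * release_ratio:
            armed = True
    return onsets


# Usage: ten still samples, one jolt on the x axis, then stillness again.
still = (0.0, 0.0, 1.0)
samples = [still] * 10 + [(1.0, 0.0, 1.0)] + [still] * 30
intensities = movement_intensity(samples, dt=0.01, smoothing=0.5)
onsets = detect_onsets(intensities, threshold=1000.0)
```

In a real-time setting each detected onset would fire a sound sample or a MIDI event. The hysteresis keeps a single gesture from triggering several events while the intensity decays.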

4.1. Raw Data Processing

4.1.1. Sensitivity and Resolution Adjustments

4.1.2. Sampling Rate Conversion

4.1.3. IMU Calibration

- Voltage offset in the signal conditioner or the sensor’s internal ADC, as is also typically found in gyroscopes.

- MEMS engraving offset on the silicon.

- Misalignment of the sensor’s IC casing on the PCB.

- Geometric offset or mechanical misalignment of the sensor within its housing.
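A constant additive offset such as the first item above can be estimated with a simple procedure, sketched here in Python. The sketch assumes a gyroscope, whose expected output at rest is zero on every axis, and a hypothetical recording taken while the sensor is kept still. It does not address misalignment, which would require a rotation rather than a subtraction. The function names are illustrative and do not come from any particular library.

```python
def estimate_bias(stationary_samples):
    """Average the per-axis readings recorded while the sensor is at rest."""
    count = len(stationary_samples)
    if count == 0:
        raise ValueError("need at least one stationary sample")
    # zip(*samples) regroups the readings axis by axis.
    return tuple(sum(axis) / count for axis in zip(*stationary_samples))


def remove_bias(sample, bias):
    """Subtract the estimated bias from one (x, y, z) reading."""
    return tuple(value - offset for value, offset in zip(sample, bias))


# Usage: two readings at rest (deg/s), then one corrected reading.
rest = [(0.5, -0.2, 0.1), (0.7, -0.4, 0.3)]
bias = estimate_bias(rest)
corrected = remove_bias((1.6, 0.7, 0.2), bias)
```

Averaging over a longer rest period reduces the influence of sensor noise on the estimate. Since offsets can drift, for instance with temperature, repeating the measurement before a performance is a reasonable precaution.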

4.1.4. Orientation Algorithms

4.1.5. Standard Filtering

4.2. IMU Analysis and Feature Extraction

4.2.1. Movement Intensities Derived from Acceleration Computation

4.2.2. Stillness Computation

4.2.3. Onset Detection

4.2.4. High-Level Motion Descriptors

4.3. Software Toolbox of Interactive Use Cases

- The Digital Orchestra Toolbox is an open-source collection of Max modules for the development of Digital Musical Instruments, including useful resources for IMU processing (https://github.com/malloch/digital-orchestra-toolbox, accessed on 17 May 2025).

- EyesWeb, by Antonio Camurri and colleagues [22], is a patch-based programming environment for body movement–computer interaction and visual arts (http://www.infomus.org/eyesweb_eng.php, accessed on 17 May 2025).

- The Gesture Recognition Toolkit (GRT) is a cross-platform, open-source C++ machine-learning library specifically designed for real-time gesture recognition (https://github.com/nickgillian/grt, accessed on 17 May 2025). In addition to a comprehensive C++ API, the GRT also includes an easy-to-use graphical user interface.

- The Gestural Sound Toolkit, using MuBu (see below), is a package that provides objects to facilitate sensor processing, triggering, and gesture recognition [73]. It contains examples of gesture-to-sound mapping, using direct or indirect methods with machine learning. The toolkit accepts diverse data inputs, including wireless IMU data from the R-IoT module (Section 5.3) or from smartphone inertial sensors using the Comote app (Section 5) (https://github.com/ircam-ismm/Gestural-Sound-Toolkit, accessed on 17 May 2025).

- The Libmapper library is a system for representing input and output signals on a network and for allowing arbitrary “mappings” to be dynamically created between them (http://idmil.org/software/libmapper, accessed on 17 May 2025).

- MuBu is a package for Max (Cycling’74) that contains several objects for real-time sensor analysis, including filtering (such as the accelerometer intensities described in Section 4.2.1) and gesture recognition algorithms that can be trained with one or a few examples provided by users [51] (https://forum.ircam.fr/projects/detail/mubu/, accessed on 17 May 2025).

- Soundcool is an interactive system for collaborative sound and visual creation using smartphones, sensors and other devices (http://soundcool.org). See Dannenberg et al. [74] for examples in music education.

- The Wekinator is a stand-alone application for using machine learning to build real-time interactive systems (http://www.wekinator.org/, accessed on 17 May 2025).

5. Recent Systems and Devices Examples

- Comote (https://apps.ismm.ircam.fr/comote)

- MediaPipe (https://chuoling.github.io/mediapipe/)

- MotionSender (http://louismccallum.com/portfolio/wekinator-motion-app)

- touchOSC (https://hexler.net/touchosc)

5.1. Mi.Mu Gloves

5.2. MUGIC

5.3. R-IoT

5.4. SOMI-1

5.5. Wave

5.6. WiDig + Orient4D

5.7. x-IMU3

5.8. Summary

6. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADC | Analog to Digital Converter |
| API | Application Programming Interface |
| AM | Amplitude Modulation |
| ASK | Amplitude Shift Keying |
| BLE | Bluetooth Low Energy |
| CPU | Central Processing Unit |
| CSMA/CA | Carrier Sense Multiple Access with Collision Avoidance |
| CSMA/CD | Carrier Sense Multiple Access with Collision Detection |
| DAW | Digital Audio Workstation |
| DMA | Direct Memory Access |
| DMI | Digital Musical Instrument |
| DoF | Degree of Freedom |
| FM | Frequency Modulation |
| FSK | Frequency Shift Keying |
| GATT | Generic Attribute Profile |
| GRT | Gesture Recognition Toolkit |
| I2C | Inter-Integrated Circuit |
| IMU | Inertial Measurement Unit |
| MARG | Magnetic, Angular Rate and Gravity |
| MIDI | Musical Instrument Digital Interface |
| MCU | MicroController Unit |
| MEMS | Micro-Electro-Mechanical Systems |
| ML | Machine Learning |
| NIME | New Interfaces for Musical Expression |
| NTP | Network Time Protocol |
| ODR | Output Data Rate |
| OSC | Open Sound Control |
| OSI | Open Systems Interconnection |
| SPI | Serial Peripheral Interface |
| SSP | Secure Simple Pairing |
| TDMA | Time Division Multiple Access |
| UART | Universal Asynchronous Receiver Transmitter |
| W3C | World Wide Web Consortium |

Appendix A

References

1. Rosa, J. Theremin in the Press: Instrument remediation and code-instrument transduction. Organ. Sound 2018, 23, 256–269.

2. Cook, P.R. Principles for Designing Computer Music Controllers. In Proceedings of the Conference on New Interfaces for Musical Expression, Seattle, WA, USA, 1–2 April 2001; pp. 1–4.

3. Bevilacqua, F.; Boyer, E.O.; Françoise, J.; Houix, O.; Susini, P.; Roby-Brami, A.; Hanneton, S. Sensori-Motor Learning with Movement Sonification: Perspectives from Recent Interdisciplinary Studies. Front. Neurosci. 2016, 10, 385.

4. Peyre, I.; Roby-Brami, A.; Segalen, M.; Giron, A.; Caramiaux, B.; Marchand-Pauvert, V.; Pradat-Diehl, P.; Bevilacqua, F. Effect of sonification types in upper-limb movement: A quantitative and qualitative study in hemiparetic and healthy participants. J. Neuroeng. Rehabil. 2023, 20, 136.

5. Visi, F.; Faasch, F. Motion Controllers, Sound, and Music in Video Games: State of the Art and Research Perspectives. In Emotion in Video Game Soundtracking; Springer International Publishing: Cham, Switzerland, 2018; pp. 85–103.

6. Medeiros, C.B.; Wanderley, M.M. A Comprehensive Review of Sensors and Instrumentation Methods in Devices for Musical Expression. Sensors 2014, 14, 13556–13591.

7. Volta, E.; Di Stefano, N. Using Wearable Sensors to Study Musical Experience: A Systematic Review. Sensors 2024, 24, 5783.

8. Cavdir, D.; Wang, G. Designing felt experiences with movement-based, wearable musical instruments: From inclusive practices toward participatory design. Wearable Technol. 2022, 3, e19.

9. Minaoglou, P.; Efkolidis, N.; Manavis, A.; Kyratsis, P. A Review on Wearable Product Design and Applications. Machines 2024, 12, 62.

10. Bisht, R.S.; Jain, S.; Tewari, N. Study of Wearable IoT devices in 2021: Analysis & Future Prospects. In Proceedings of the 2021 2nd International Conference on Intelligent Engineering and Management (ICIEM), London, UK, 28–30 April 2021; pp. 577–581.

11. Miller, L.E. Cage, Cunningham, and Collaborators: The Odyssey of “Variations V”. Music Q. 2001, 85, 545–567.

12. Wanderley, M. Prehistoric NIME: Revisiting Research on New Musical Interfaces in the Computer Music Community before NIME. In Proceedings of the International Conference on New Interfaces for Musical Expression, Mexico City, Mexico, 31 May–3 June 2023; Ortiz, M., Marquez-Borbon, A., Eds.; pp. 60–69, ISSN 2220-4806.

13. Torre, G.; Andersen, K.; Baldé, F. The Hands: The Making of a Digital Musical Instrument. Comput. Music J. 2016, 40, 22–34.

14. Paradiso, J.A. The Brain Opera Technology: New Instruments and Gestural Sensors for Musical Interaction and Performance. J. New Music Res. 1999, 28, 130–149.

15. Boulanger, R.; Mathews, M.V. The 1997 Mathews Radio-Baton and Improvisation Modes. In Proceedings of the 1997 International Computer Music Conference, ICMC 1997, Thessaloniki, Greece, 25–30 September 1997; Michigan Publishing: Ann Arbor, MI, USA, 1997. Available online: http://hdl.handle.net/2027/spo.bbp2372.1997.105 (accessed on 20 August 2025).

16. Boie, R.A. The Radio Drum as a Synthesizer Controller. In Proceedings of the 1989 International Computer Music Conference, ICMC 1989, Columbus, OH, USA, 2–5 November 1989; Michigan Publishing: Ann Arbor, MI, USA, 1989. Available online: http://hdl.handle.net/2027/spo.bbp2372.1989.010 (accessed on 20 August 2025).

17. Broadhurst, S.B. Troika Ranch: Making New Connections A Deleuzian Approach to Performance and Technology. Perform. Res. 2008, 13, 109–117.

18. Mulder, A. The I-Cube system: Moving towards sensor technology for artists. In Proceedings of the Sixth Symposium on Electronic Arts (ISEA 95), Montreal, QC, Canada, 17–24 September 1995.

19. Simanowski, R. Very Nervous System and the Benefit of Inexact Control. Interview with David Rokeby. Dicht. Digit. J. für Kunst und Kult. Digit. Medien. 2003, 5, 1–9.

20. Winkler, T. Creating Interactive Dance with the Very Nervous System. In Proceedings of the Connecticut College Symposium on Arts and Technology, New London, CT, USA, 27 February–2 March 1997.

21. Povall, R. Realtime control of audio and video through physical motion: STEIM’s BigEye. In Proceedings of the Journées d’Informatique Musicale, île de Tatihou, France, 16–18 May 1996.

22. Camurri, A.; Hashimoto, S.; Ricchetti, M.; Ricci, A.; Suzuki, K.; Trocca, R.; Volpe, G. EyesWeb: Toward Gesture and Affect Recognition in Interactive Dance and Music Systems. Comput. Music J. 2000, 24, 57–69.

23. Chung, J.L.; Ong, L.Y.; Leow, M.C. Comparative analysis of skeleton-based human pose estimation. Future Internet 2022, 14, 380.

24. Downes, P. Motion Sensing in Music and Dance Performance. In Proceedings of the Audio Engineering Society Conference: 5th International Conference: Music and Digital Technology, Los Angeles, CA, USA, 1–3 May 1987; Audio Engineering Society: New York, NY, USA, 1987.

25. Keane, D.; Gross, P. The MIDI Baton. In Proceedings of the 1989 International Computer Music Conference, ICMC 1989, Columbus, OH, USA, 2–5 November 1989; Michigan Publishing: Ann Arbor, MI, USA, 1989.

26. Putnam, W.; Knapp, R.B. Input/data acquisition system design for human computer interfacing. Unpubl. Lect. Notes Oct. 1996, 17. section 3.3.

27. Verplaetse, C. Inertial proprioceptive devices: Self-motion-sensing toys and tools. IBM Syst. J. 1996, 35, 639–650.

28. Marrin, T.; Paradiso, J.A. The Digital Baton: A Versatile Performance Instrument. In Proceedings of the International Computer Music Conference Proceedings: Vol. 1997, Thessaloniki, Greece, 25–30 September 1997.

29. Ronchetti, L. Eluvion-Etude - For Viola, Real-Time Electronic Device and Interactive Shots. 1997. Available online: https://medias.ircam.fr/en/work/eluvion-etude (accessed on 17 May 2025).

30. Paradiso, J.A.; Gershenfeld, N. Musical Applications of Electric Field Sensing. Comput. Music J. 1997, 21, 69–89.

31. Machover, T. Hyperinstruments: A Progress Report, 1987–1991; MIT Media Laboratory: Cambridge, MA, USA, 1992.

32. Bevilacqua, F.; Fléty, E.; Lemouton, S.; Baschet, F. The augmented violin project: Research, composition and performance report. In Proceedings of the 6th Conference on New Interfaces for Musical Expression, NIME-06, Paris, France, 4–8 June 2006.

33. Lambert, J.P.; Robaszkiewicz, S.; Schnell, N. Synchronisation for Distributed Audio Rendering over Heterogeneous Devices, in HTML5. In Proceedings of the 2nd Web Audio Conference (WAC-2016), Atlanta, GA, USA, 4–6 April 2016; Available online: https://hal.science/hal-01304889v1/ (accessed on 17 May 2025).

34. Coduys, T.; Henry, C.; Cont, A. TOASTER and KROONDE: High-Resolution and High-Speed Real-time Sensor Interfaces. In Proceedings of the Conference on New Interfaces for Musical Expression, Hamamatsu, Japan, 3–5 June 2004; pp. 205–206.

35. Simon, E. The Impressive Electronic Arm of Emilie Simon. 2023. Available online: https://www.youtube.com/watch?v=xIEZtr_FYxA (accessed on 17 May 2025).

36. Dimitrov, S.; Serafin, S. A Simple Practical Approach to a Wireless Data Acquisition Board. In Proceedings of the International Conference on New Interfaces for Musical Expression, Paris, France, 4–8 June 2006; pp. 184–187.

37. Aylward, R.; Paradiso, J.A. Sensemble: A Wireless, Compact, Multi-User Sensor System for Interactive Dance. In Proceedings of the International Conference on New Interfaces for Musical Expression, Paris, France, 4–8 June 2006; pp. 134–139.

38. Nikoukar, A.; Raza, S.; Poole, A.; Güneş, M.; Dezfouli, B. Low-Power Wireless for the Internet of Things: Standards and Applications. IEEE Access 2018, 6, 67893–67926.

39. Fléty, E. The Wise Box: A multi-performer wireless sensor interface using WiFi and OSC. In Proceedings of the 5th Conference on New Interfaces for Musical Expression, NIME-05, Vancouver, BC, Canada, 26–28 May 2005; pp. 266–267.

40. Fléty, E.; Maestracci, C. Latency improvement in sensor wireless transmission using IEEE 802.15.4. In Proceedings of the International Conference on New Interfaces for Musical Expression, Oslo, Norway, 30 May–1 June 2011; Jensenius, A.R., Tveit, A., Godøy, R.I., Overholt, D., Eds.; 2011; pp. 409–412, ISSN 2220-4806.

41. Wanderley, M.; Battier, M. Trends in Gestural Control of Music [CD-ROM]; IRCAM-Centre Pompidou: Paris, France, 2000.

42. Fléty, E. AtoMIC Pro: A multiple sensor acquisition device. In Proceedings of the 2002 Conference on New Interfaces for Musical Expression, Dublin, Ireland, 24–26 May 2002; pp. 1–6.

43. Miranda, E.; Wanderley, M. New Digital Musical Instruments: Control and Interaction beyond the Keyboard; A-R Editions: Middleton, WI, USA, 2006.

44. Wang, J.; Mulder, A.; Wanderley, M.M. Practical Considerations for MIDI over Bluetooth Low Energy as a Wireless Interface. In Proceedings of the Conference on New Interfaces for Musical Expression, Porto Alegre, Brazil, 3–6 June 2019.

45. Mitchell, T.; Madgwick, S.; Rankine, S.; Hilton, G.; Freed, A.; Nix, A. Making the Most of Wi-Fi: Optimisations for Robust Wireless Live Music Performance. In Proceedings of the Conference on New Interfaces for Musical Expression, London, UK, 30 June–4 July 2014.

46. Wang, J. Analysis of Wireless Interface Latency and Usability for Digital Musical Instruments. Ph.D. Thesis, McGill University - Input Devices and Music Interaction Laboratory, Montreal, QC, Canada, 2021.

47. Freed, A. Open Sound Control: A New Protocol for Communicating with Sound Synthesizers. In Proceedings of the International Computer Music Conference, Thessaloniki, Greece, 25–30 September 1997.

48. Vaessen, M.J.; Abassi, E.; Mancini, M.; Camurri, A.; de Gelder, B. Computational Feature Analysis of Body Movements Reveals Hierarchical Brain Organization. Cereb. Cortex 2018, 29, 3551–3560.

49. Bevilacqua, F.; Zamborlin, B.; Sypniewski, A.; Schnell, N.; Guédy, F.; Rasamimanana, N. Continuous Realtime Gesture Following and Recognition. In Proceedings of the Gesture in Embodied Communication and Human-Computer Interaction, Berlin/Heidelberg, Germany, 25–27 February 2009; Kopp, S., Wachsmuth, I., Eds.; 2019; pp. 73–84.

50. Bevilacqua, F.; Schnell, N.; Rasamimanana, N.; Bloit, J.; Fléty, E.; Caramiaux, B.; Françoise, J.; Boyer, E. De-MO: Designing Action-sound Relationships with the M0 Interfaces. In Proceedings of the CHI ’13 Extended Abstracts on Human Factors in Computing Systems, CHI EA ’13, Paris, France, 27 April–2 May 2013; pp. 2907–2910.

51. Françoise, J.; Bevilacqua, F. Motion-sound mapping through interaction: An approach to user-centered design of auditory feedback using machine learning. ACM Trans. Interact. Intell. Syst. (TiiS) 2018, 8, 1–30.

52. Visi, F.G.; Tanaka, A. Interactive machine learning of musical gesture. In Handbook of Artificial Intelligence for Music: Foundations, Advanced Approaches, and Developments for Creativity; Springer: Berlin/Heidelberg, Germany, 2021; pp. 771–798.

53. Alonso Trillo, R.; Nelson, P.A.; Michailidis, T. Rethinking Instrumental Interface Design: The MetaBow. Comput. Music J. 2023, 47, 5–20.

54. Spielvogel, A.R.; Shah, A.S.; Whitcomb, L.L. Online 3-Axis Magnetometer Hard-Iron and Soft-Iron Bias and Angular Velocity Sensor Bias Estimation Using Angular Velocity Sensors for Improved Dynamic Heading Accuracy. arXiv 2022, arXiv:2201.02449.

55. Song, S.Y.; Pei, Y.; Hsiao-Wecksler, E.T. Estimating Relative Angles Using Two Inertial Measurement Units Without Magnetometers. IEEE Sens. J. 2022, 22, 19688–19699.

56. Wang, H. Research on the application of wireless wearable sensing devices in interactive music. J. Sens. 2021, 2021, 7608867.

57. Mahony, R.; Hamel, T.; Pflimlin, J.M. Nonlinear Complementary Filters on the Special Orthogonal Group. IEEE Trans. Autom. Control 2008, 53, 1203–1218.

58. Madgwick, S. An Efficient Orientation Filter for Inertial and Inertial/Magnetic Sensor Arrays; Technical Report; University of Bristol: Bristol, UK, 2010.

59. Bosch Sensortec. Smart Sensor: BNO055, 2025. Available online: https://www.bosch-sensortec.com/products/smart-sensor-systems/bno055/ (accessed on 17 May 2025).

60. García-de Villa, S.; Casillas-Pérez, D.; Jiménez-Martín, A.; García-Domínguez, J.J. Inertial Sensors for Human Motion Analysis: A Comprehensive Review. IEEE Trans. Instrum. Meas. 2023, 72, 1–39.

61. Freire, S.; Santos, G.; Armondes, A.; Meneses, E.A.; Wanderley, M.M. Evaluation of inertial sensor data by a comparison with optical motion capture data of guitar strumming gestures. Sensors 2020, 20, 5722.

62. Schoeller, F.; Ashur, P.; Larralde, J.; Le Couedic, C.; Mylapalli, R.; Krishnanandan, K.; Ciaunica, A.; Linson, A.; Miller, M.; Reggente, N.; et al. Gesture sonification for enhancing agency: An exploratory study on healthy participants. Front. Psychol. 2025, 15, 1450365.

63. Flash, T.; Hogan, N. The coordination of arm movements: An experimentally confirmed mathematical model. J. Neurosci. 1985, 5, 1688–1703.

64. Sharkawy, A. Minimum Jerk Trajectory Generation for Straight and Curved Movements: Mathematical Analysis. In Advances in Robotics: Review; Yurish, S.Y., Ed.; IFSA Publishing: Barcelona, Spain, 2021; Volume 2, pp. 187–201, arXiv:2102.07459.

65. Camurri, A.; Mazzarino, B.; Ricchetti, M.; Timmers, R.; Volpe, G. Multimodal analysis of expressive gesture in music and dance performances. In Proceedings of the Gesture-Based Communication in Human-Computer Interaction: 5th International Gesture Workshop, GW 2003, Genova, Italy, 15–17 April 2003; Selected Revised Papers 5. Springer: Cham, Switzerland, 2004; pp. 20–39.

66. Niewiadomski, R.; Kolykhalova, K.; Piana, S.; Alborno, P.; Volpe, G.; Camurri, A. Analysis of Movement Quality in Full-Body Physical Activities. ACM Trans. Interact. Intell. Syst. 2019, 9, 1–20.

67. Caramiaux, B.; Françoise, J.; Schnell, N.; Bevilacqua, F. Mapping Through Listening. Comput. Music J. 2014, 38, 34–48.

68. Françoise, J.; Schnell, N.; Bevilacqua, F. A multimodal probabilistic model for gesture–based control of sound synthesis. In Proceedings of the 21st ACM International Conference on Multimedia, MM ’13, New York, NY, USA, 21–25 October 2013; pp. 705–708.

69. Rasamimanana, N.; Bevilacqua, F.; Schnell, N.; Guedy, F.; Flety, E.; Maestracci, C.; Zamborlin, B.; Frechin, J.L.; Petrevski, U. Modular musical objects towards embodied control of digital music. In Proceedings of the Fifth International Conference on Tangible, Embedded, and Embodied Interaction, TEI ’11, New York, NY, USA, 22–26 January 2010; pp. 9–12.

70. Bevilacqua, F.; Schnell, N.; Françoise, J.; Boyer, É.O.; Schwarz, D.; Caramiaux, B. Designing action–sound metaphors using motion sensing and descriptor-based synthesis of recorded sound materials. In The Routledge Companion to Embodied Music Interaction; Routledge: London, UK, 2017; pp. 391–401.

71. Larboulette, C.; Gibet, S. A Review of Computable Expressive Descriptors of Human Motion. In Proceedings of the International Workshop on Movement and Computing, Vancouver, BC, Canada, 14–15 August 2015; pp. 21–28.

72. Niewiadomski, R.; Mancini, M.; Piana, S.; Alborno, P.; Volpe, G.; Camurri, A. Low-intrusive recognition of expressive movement qualities. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, ICMI ’17, Glasgow, UK, 13–17 November 2017; pp. 230–237.

73. Caramiaux, B.; Altavilla, A.; Françoise, J.; Bevilacqua, F. Gestural sound toolkit: Reflections on an interactive design project. In Proceedings of the International Conference on New Interfaces for Musical Expression, Auckland, New Zealand, 28 June–1 July 2022; Available online: https://ircam-ismm.github.io/max-msp/gst.html (accessed on 17 May 2025).

74. Dannenberg, R.B.; Sastre, J.; Scarani, S.; Lloret, N.; Carrascosa, E. Mobile Devices and Sensors for an Educational Multimedia Opera Project. Sensors 2023, 23, 4378.

75. Brown, D. End-User Action-Sound Mapping Design for Mid-Air Music Performance. Ph.D. Thesis, University of the West of England, Bristol, UK, 2020.

76. Mitchell, T.J.; Madgwick, S.; Heap, I. Musical interaction with hand posture and orientation: A toolbox of gestural control mechanisms. In Proceedings of the International Conference on New Interfaces for Musical Expression, Ann Arbor, MI, USA, 21–23 May 2012.

77. Kimura, M. Rossby Waving for Violin, MUGIC® sensor, and video. In Proceedings of the NIME 2021, Shanghai, China, 14–18 June 2021.

78. Lough, A.; Micchelli, M.; Kimura, M. Gestural envelopes: Aesthetic considerations for mapping physical gestures using wireless motion sensors. In Proceedings of the ICMC, Daegu, Republic of Korea, 5–10 August 2018.

79. Fernandez, J.M.; Köppel, T.; Verstraete, N.; Lorieux, G.; Vert, A.; Spiesser, P. Gekipe, a gesture-based interface for audiovisual performance. In Proceedings of the NIME, Copenhagen, Denmark, 15–18 May 2017; pp. 450–455.

80. Lemouton, S.; Borghesi, R.; Haapamäki, S.; Bevilacqua, F.; Fléty, E. Following orchestra conductors: The IDEA open movement dataset. In Proceedings of the 6th International Conference on Movement and Computing, Tempe, AZ, USA, 10–12 October 2019; pp. 1–6.

81. Dalmazzo, D.; Ramirez, R. Air violin: A machine learning approach to fingering gesture recognition. In Proceedings of the 1st ACM SIGCHI International Workshop on Multimodal Interaction for Education, Glasgow, UK, 13 November 2017; pp. 63–66.

82. Buchberger, S. Investigating Creativity Support Opportunities Through Digital Tools in Dance. Ph.D. Thesis, Technische Universität Wien, Vienna, Austria, 2024.

83. Daoudagh, S.; Ignesti, G.; Moroni, D.; Sebastiani, L.; Paradisi, P. Assessment of Dance Movement Therapy Outcomes: A Preliminary Proposal. In Proceedings of the International Conference on Computer-Human Interaction Research and Applications; Springer: Cham, Switzerland, 2024; pp. 382–395.

84. Norderval, K. Electrifying Opera, Amplifying Agency: Designing a Performer-Controlled Interactive Audio System for Opera Singers. Ph.D. Thesis, Oslo National Academy of the Arts, Academy of Opera, Oslo, Norway, 2023.

85. Bilodeau, M.E.; Gagnon, G.; Breuleux, Y. NUAGE: A Digital Live Audiovisual Arts Tangible Interface. In Proceedings of the 27th International Symposium on Electronic Arts, Barcelona, Spain, 10–16 June 2022.

86. Hilton, C.; Hawkins, K.; Tew, P.; Collins, F.; Madgwick, S.; Potts, D.; Mitchell, T. EqualMotion: Accessible Motion Capture for the Creative Industries. arXiv 2025, arXiv:2507.08744.

87. Madgwick, S.; Mitchell, T. x-OSC: A versatile wireless I/O device for creative/music applications. In Proceedings of the SMC 2013, Manchester, UK, 13–16 October 2013; Available online: https://www.x-io.co.uk/downloads/x-OSC-SMC-2013-Paper.pdf (accessed on 17 May 2025).

88. Machhi, V.S.; Shah, A.M. A Review of Wearable Devices for Affective Computing. In Proceedings of the 2024 International Conference on Advancements in Smart, Secure and Intelligent Computing (ASSIC), Bhubaneswar, India, 27–29 January 2024; pp. 1–6.

89. Lu, J.; Fang, D.; Qin, Y.; Tang, J. Wireless Interactive Sensor Platform for Real-Time Audio-Visual Experience. In Proceedings of the 12th International Conference on New Interfaces for Musical Expression, NIME 2012, nime.org, Ann Arbor, MI, USA, 21–23 May 2012; Essl, G., Gillespie, R.B., Gurevich, M., O’Modhrain, S., Eds.; Zenodo: Geneva, Switzerland, 2012.

90. Torre, G.; Fernström, M.; O’Flynn, B.; Angove, P. Celeritas: Wearable Wireless System. In Proceedings of the International Conference on New Interfaces for Musical Expression, New York City, NY, USA, 3–6 June 2007; pp. 205–208.

91. Peyre, I. Sonification du Mouvement pour la Rééducation Après une lésion Cérébrale Acquise: Conception et Évaluations de Dispositifs. Ph.D. Thesis, Sorbonne Université, Paris, France, 2022.

| Device | Sensors | Sample rate (Hz) | Resolution (bits) | Protocol | Wireless | Programmable | API |
|---|---|---|---|---|---|---|---|
| Mi.Mu | MARG | >100 | unknown | OSC | Wi-Fi | N | N |
| MUGIC | MARG | 40–100 | 16 | OSC | Wi-Fi | N | N |
| R-IoT 3 | MARG + Baro | 200 | 16 | OSC, MIDI | Wi-Fi, BLE | Y | Y |
| SOMI-1 | MARG | 200 | 7–14 | MIDI | BLE | N | N |
| Wave | 6D IMU | 100 | 7 | MIDI | BLE | N | N |
| WiDig | MARG | >100 | 16 | OSC, MIDI | Wi-Fi, BLE | N | Y |
| x-IMU 3 | MARG | 400 | 16 | UDP, TCP | Wi-Fi, BLE | N | Y |
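
To put the sample rate and resolution figures of this table in perspective, the short Python sketch below converts a sample rate into the time between two samples and a bit depth into a number of quantization steps. It also shows one possible way of reducing a 16-bit value to a 7-bit MIDI value. The helper names are hypothetical, and the conversion assumes unsigned 16-bit input; signed sensor data would first need an offset.

```python
def sample_period_ms(rate_hz):
    """Time between two consecutive samples, in milliseconds."""
    return 1000.0 / rate_hz


def quantization_levels(bits):
    """Number of distinct values that a given resolution can represent."""
    return 2 ** bits


def to_midi_7bit(value_16bit):
    """Keep the 7 most significant bits of an unsigned 16-bit value."""
    if not 0 <= value_16bit <= 0xFFFF:
        raise ValueError("expected an unsigned 16-bit value")
    return value_16bit >> 9


# Usage with figures taken from the table.
period_200hz = sample_period_ms(200)      # 5.0 ms between samples
levels_7bit = quantization_levels(7)      # 128 steps
levels_16bit = quantization_levels(16)    # 65536 steps
midi_full_scale = to_midi_7bit(0xFFFF)    # 127
```

At 100 Hz the sampling period alone is 10 ms, and a 7-bit value offers 128 steps where a 16-bit value offers 65536, which is worth keeping in mind when mapping sensor data to continuous sound parameters.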

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fléty, E.; Bevilacqua, F. Wireless Inertial Measurement Units in Performing Arts. Sensors 2025, 25, 6188. https://doi.org/10.3390/s25196188
