Images Versus Videos in Contrast-Enhanced Ultrasound for Computer-Aided Diagnosis

Abstract

1. Introduction

Motivation and Novelty

2. State of the Art

2.1. Classical CNN-Based Approaches

2.2. Vision Transformers (ViTs)

2.3. Hybrid CNN–Transformer Models

2.4. Video-Based Approaches

3. Materials and Methods

3.1. Materials

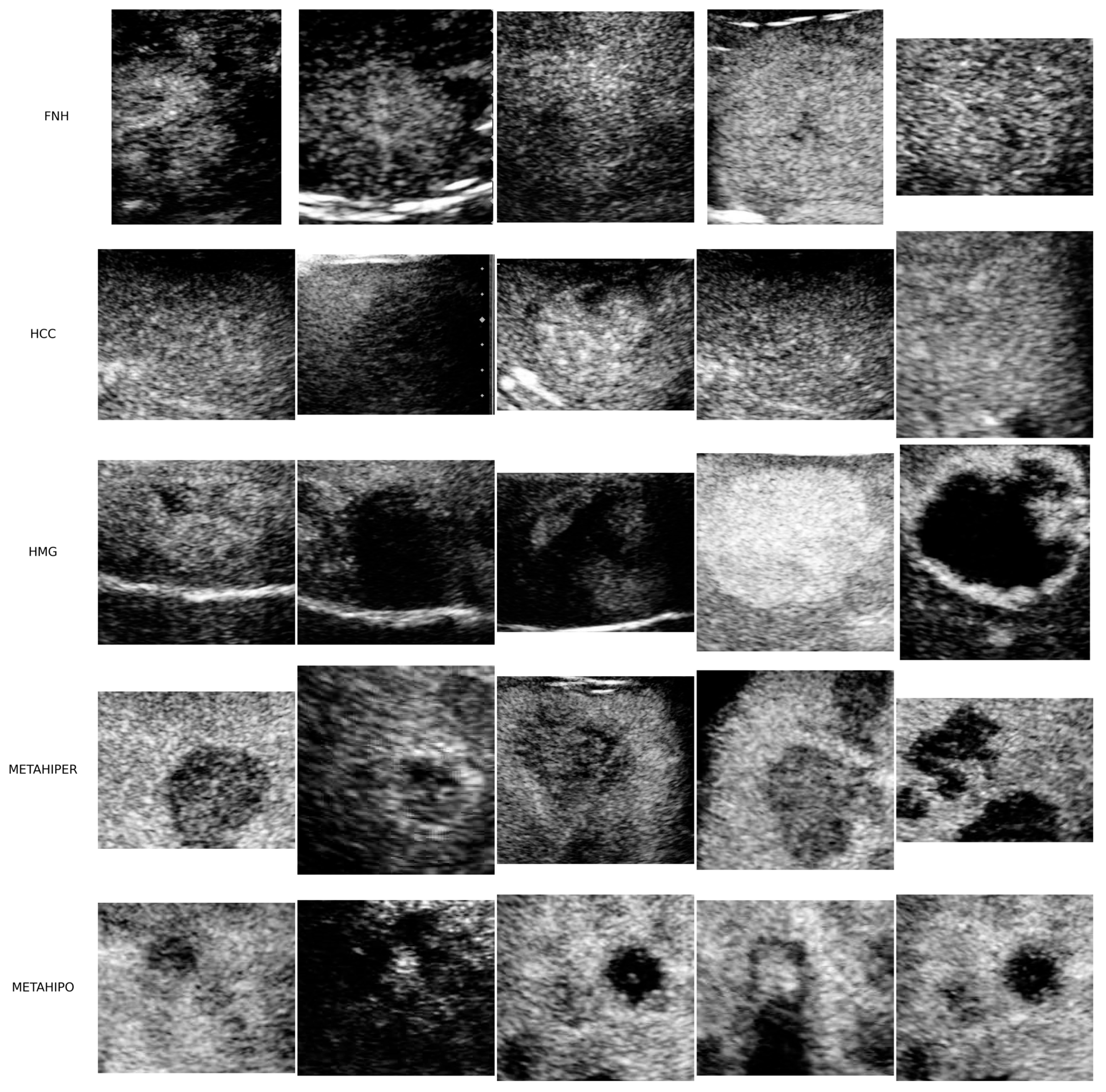

3.1.1. Image Data

3.1.2. Video Data

3.2. Methods

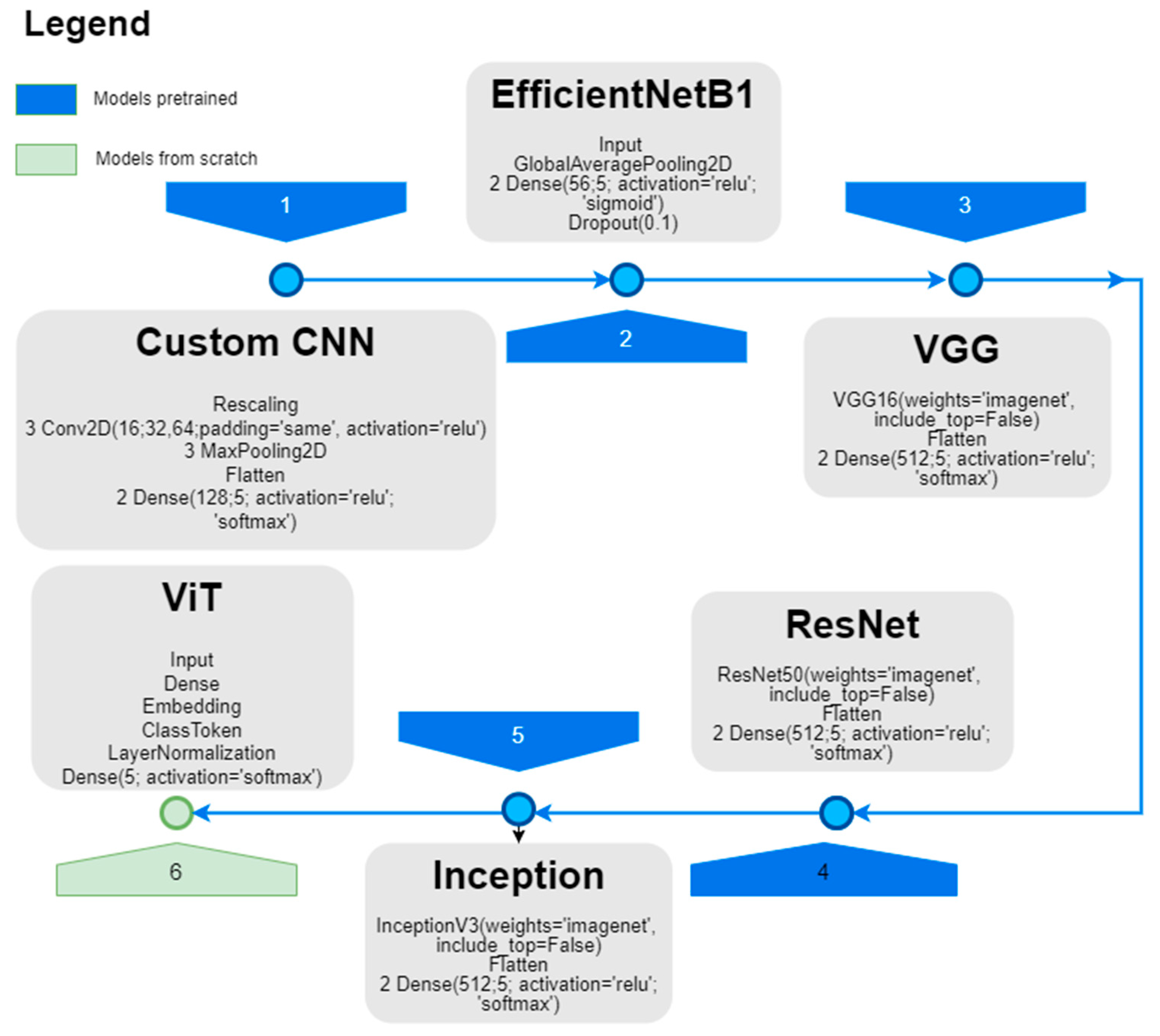

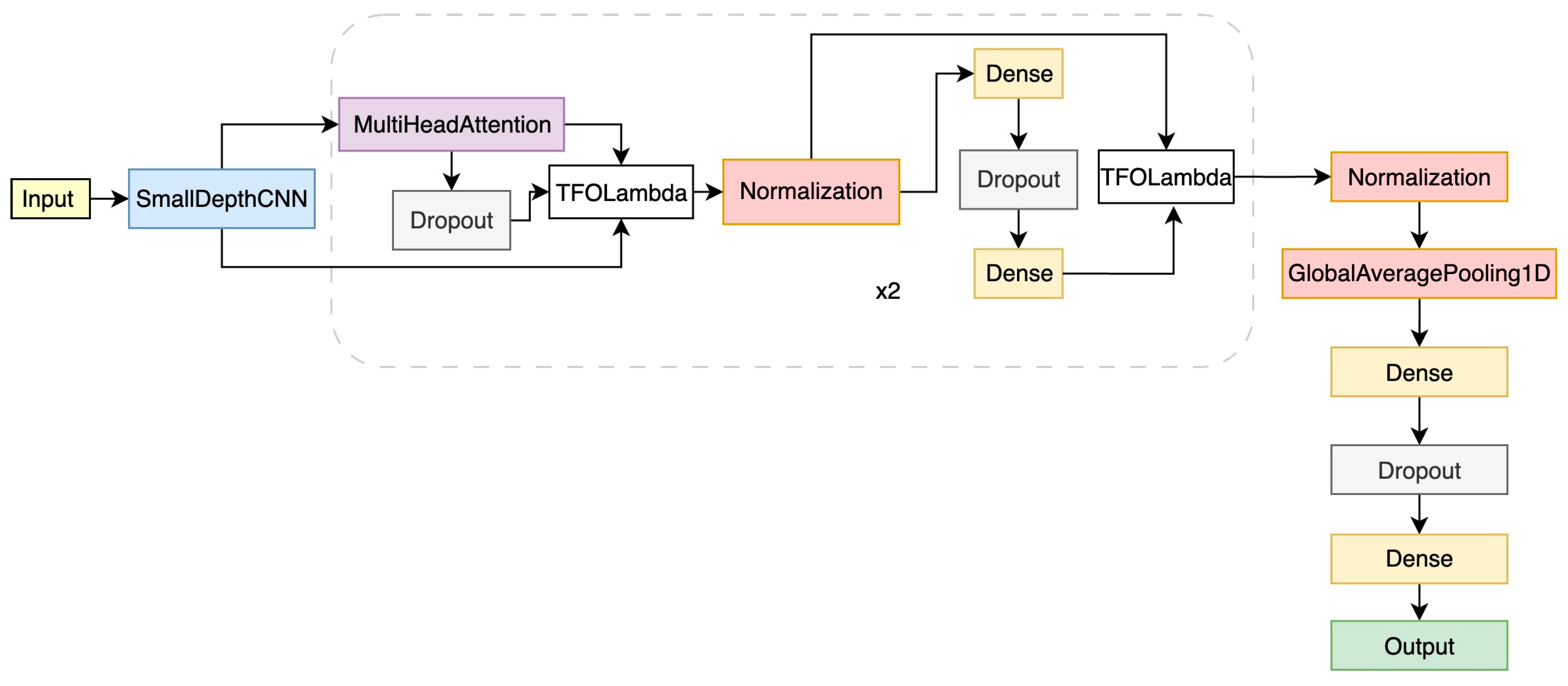

3.2.1. DNN Architecture for CEUS Image Analysis

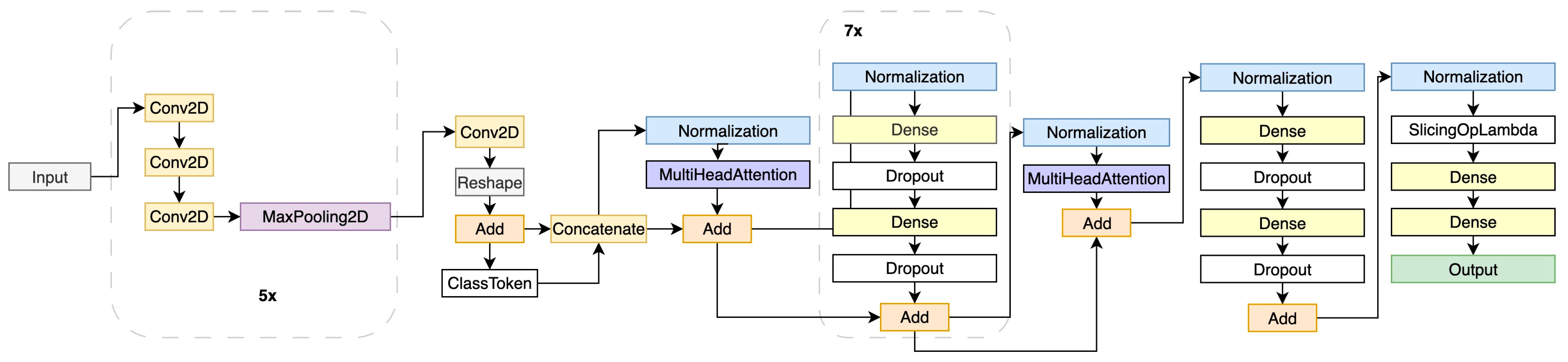

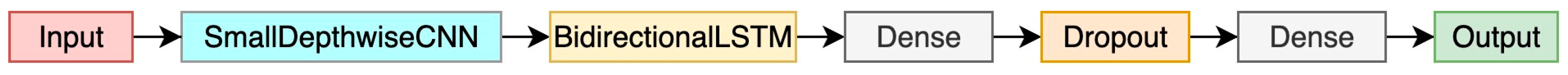

3.2.2. DNN Architecture for CEUS Video Analysis

4. Experimental Results

4.1. Image-Based Evaluation

- Hardware architecture: CPU: AMD Ryzen 7 5800X; RAM: 64 GB; GPU: NVIDIA GeForce RTX 3090 (24 GB memory).

- Software framework: TensorFlow 2.11.1, Python 3.8.0, Ubuntu 20.04 LTS 64-bit.
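To make the reported setup easier to reproduce, the software versions listed above can be checked programmatically. The following is a minimal sketch, not the authors' tooling: the helper names `describe_environment` and `version_mismatches` are hypothetical, and TensorFlow is treated as optional so the script still runs on a machine where it is not installed.

```python
import platform

# Versions taken from the software framework listed above.
EXPECTED = {"python": "3.8.0", "tensorflow": "2.11.1"}


def describe_environment():
    """Collect interpreter, OS and (if installed) TensorFlow/GPU details."""
    info = {
        "python": platform.python_version(),
        "os": platform.platform(),
        "tensorflow": None,
        "gpus": [],
    }
    try:
        import tensorflow as tf  # optional dependency for this check
    except ImportError:
        return info
    info["tensorflow"] = tf.__version__
    info["gpus"] = [d.name for d in tf.config.list_physical_devices("GPU")]
    return info


def version_mismatches(info, expected=EXPECTED):
    """Return {component: (found, expected)} for every version that differs."""
    return {
        name: (info.get(name), wanted)
        for name, wanted in expected.items()
        if info.get(name) != wanted
    }


if __name__ == "__main__":
    env = describe_environment()
    print(env)
    print("mismatches:", version_mismatches(env))
```

An empty mismatch dictionary indicates that the local Python and TensorFlow versions match the ones reported; the hardware itself is only listed, not verified.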

4.2. Video-Based Evaluation

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gerstenmaier, J.F.; Gibson, R.N. Ultrasound in Chronic Liver Disease. Insights Imaging 2014, 5, 441–455.

- Joyner, C.R.; Reid, J.M. Applications of Ultrasound in Cardiology and Cardiovascular Physiology. Prog. Cardiovasc. Dis. 1963, 5, 482–497.

- Kossoff, G. Diagnostic Applications of Ultrasound in Cardiology. Australas. Radiol. 1966, 10, 101–106.

- Gramiak, R.; Shah, P.M. Cardiac ultrasonography. Radiol. Clin. N. Am. 1971, 9, 469–490.

- Turkgeldi, E.; Urman, B.; Ata, B. Role of Three-Dimensional Ultrasound in Gynecology. J. Obstet. Gynecol. India 2015, 65, 146–154.

- Derchi, L.E.; Serafini, G.; Gandolfo, N.; Gandolfo, N.G.; Martinoli, C. Ultrasound in Gynecology. Eur. Radiol. 2001, 11, 2137–2155.

- Boostani, M.; Pellacani, G.; Wortsman, X.; Suppa, M.; Goldust, M.; Cantisani, C.; Pietkiewicz, P.; Lőrincz, K.; Bánvölgyi, A.; Wikonkál, N.M.; et al. FDA and EMA-Approved Noninvasive Imaging Techniques for Basal Cell Carcinoma Subtyping: A Systematic Review. JAAD Int. 2025, 21, 73–86.

- Ying, X.; Dong, S.; Zhao, Y.; Chen, Z.; Jiang, J.; Shi, H. Research Progress on Contrast-Enhanced Ultrasound (CEUS) Assisted Diagnosis and Treatment in Liver-Related Diseases. Int. J. Med. Sci. 2025, 22, 1092–1108.

- Yeasmin, M.N.; Al Amin, M.; Joti, T.J.; Aung, Z.; Azim, M.A. Advances of AI in Image-Based Computer-Aided Diagnosis: A Review. Array 2024, 23, 100357.

- Sporea, I.; Badea, R.; Martie, A.; Sirli, R.; Socaciu, M.; Popescu, A.; Dănilă, M. Contrast Enhanced Ultrasound for the Characterization of Focal Liver Lesions. Med. Ultrason. 2011, 13, 38–44.

- Moga, T.V.; Popescu, A.; Sporea, I.; Danila, M.; David, C.; Gui, V.; Iacob, N.; Miclaus, G.; Sirli, R. Is Contrast Enhanced Ultrasonography a Useful Tool in a Beginner’s Hand? How Much Can a Computer Assisted Diagnosis Prototype Help in Characterizing the Malignancy of Focal Liver Lesions? Med. Ultrason. 2017, 19, 252.

- Chammas, M.C.; Bordini, A.L. Contrast-Enhanced Ultrasonography for the Evaluation of Malignant Focal Liver Lesions. Ultrasonography 2022, 41, 4–24.

- Khalifa, M.; Albadawy, M. AI in Diagnostic Imaging: Revolutionising Accuracy and Efficiency. Comput. Methods Programs Biomed. Update 2024, 5, 100146.

- Srivastav, S.; Chandrakar, R.; Gupta, S.; Babhulkar, V.; Agrawal, S.; Jaiswal, A.; Prasad, R.; Wanjari, M.B. ChatGPT in Radiology: The Advantages and Limitations of Artificial Intelligence for Medical Imaging Diagnosis. Cureus 2023, 15, e41435.

- Hameed, B.M.Z.; Prerepa, G.; Patil, V.; Shekhar, P.; Zahid Raza, S.; Karimi, H.; Paul, R.; Naik, N.; Modi, S.; Vigneswaran, G.; et al. Engineering and Clinical Use of Artificial Intelligence (AI) with Machine Learning and Data Science Advancements: Radiology Leading the Way for Future. Ther. Adv. Urol. 2021, 13, 17562872211044880.

- Anwar, S.M.; Majid, M.; Qayyum, A.; Awais, M.; Alnowami, M.; Khan, M.K. Medical Image Analysis Using Convolutional Neural Networks: A Review. J. Med. Syst. 2018, 42, 226.

- Supriyono; Wibawa, A.P.; Suyono; Kurniawan, F. Advancements in Natural Language Processing: Implications, Challenges, and Future Directions. Telemat. Inform. Rep. 2024, 16, 100173.

- Islam, S.; Elmekki, H.; Elsebai, A.; Bentahar, J.; Drawel, N.; Rjoub, G.; Pedrycz, W. A Comprehensive Survey on Applications of Transformers for Deep Learning Tasks. Expert Syst. Appl. 2024, 241, 122666.

- Shamshad, F.; Khan, S.; Zamir, S.W.; Khan, M.H.; Hayat, M.; Khan, F.S.; Fu, H. Transformers in Medical Imaging: A Survey. Med. Image Anal. 2023, 88, 102802.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Li, J.; Chen, J.; Tang, Y.; Wang, C.; Landman, B.A.; Zhou, S.K. Transforming Medical Imaging with Transformers? A Comparative Review of Key Properties, Current Progresses, and Future Perspectives. Med. Image Anal. 2023, 85, 102762.

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-Art Natural Language Processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; Association for Computational Linguistics: Kerrville, TX, USA; pp. 38–45.

- Chen, Y.; Song, X.; Lee, C.; Wang, Z.; Zhang, Q.; Dohan, D.; Kawakami, K.; Kochanski, G.; Doucet, A.; Ranzato, M.A.; et al. Towards learning universal hyperparameter optimizers with transformers. In Proceedings of the 36th International Conference on Neural Information Processing Systems (NIPS ’22), New Orleans, LA, USA, 28 November–9 December 2022; Curran Associates Inc.: Red Hook, NY, USA, 2022; pp. 32053–32068.

- Wang, W.; Chen, C.; Ding, M.; Yu, H.; Zha, S.; Li, J. TransBTS: Multimodal Brain Tumor Segmentation Using Transformer. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2021; De Bruijne, M., Cattin, P.C., Cotin, S., Padoy, N., Speidel, S., Zheng, Y., Essert, C., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2021; Volume 12901, pp. 109–119. ISBN 978-3-030-87192-5.

- Khan, A.; Rauf, Z.; Sohail, A.; Khan, A.R.; Asif, H.; Asif, A.; Farooq, U. A Survey of the Vision Transformers and Their CNN-Transformer Based Variants. Artif. Intell. Rev. 2023, 56, 2917–2970.

- Gao, Y.; Zhou, M.; Metaxas, D.N. UTNet: A Hybrid Transformer Architecture for Medical Image Segmentation. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2021; De Bruijne, M., Cattin, P.C., Cotin, S., Padoy, N., Speidel, S., Zheng, Y., Essert, C., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2021; Volume 12903, pp. 61–71. ISBN 978-3-030-87198-7.

- Shin, H.-C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298.

- Suzuki, K. Overview of Deep Learning in Medical Imaging. Radiol. Phys. Technol. 2017, 10, 257–273.

- Murphy, Z.R.; Venkatesh, K.; Sulam, J.; Yi, P.H. Visual Transformers and Convolutional Neural Networks for Disease Classification on Radiographs: A Comparison of Performance, Sample Efficiency, and Hidden Stratification. Radiol. Artif. Intell. 2022, 4, e220012.

- Li, C.; Zhang, C. Toward a Deeper Understanding: RetNet Viewed through Convolution. Pattern Recognit. 2024, 155, 110625.

- El-Serag, H.B. Epidemiology of Hepatocellular Carcinoma. In The Liver; Arias, I.M., Alter, H.J., Boyer, J.L., Cohen, D.E., Shafritz, D.A., Thorgeirsson, S.S., Wolkoff, A.W., Eds.; Wiley: Hoboken, NJ, USA, 2020; pp. 758–772. ISBN 978-1-119-43682-9.

- Country Cancer Profile 2025. Available online: https://www.oecd.org/content/dam/oecd/en/publications/reports/2025/02/eu-country-cancer-profile-romania-2025_ef833241/8474a271-en.pdf (accessed on 29 September 2025).

- National Cancer Institute. Available online: https://www.cancer.gov/types/liver/research (accessed on 29 September 2025).

- Gul, S.; Khan, M.S.; Hossain, M.S.A.; Chowdhury, M.E.H.; Sumon, M.S.I. A Comparative Study of Decoders for Liver and Tumor Segmentation Using a Self-ONN-Based Cascaded Framework. Diagnostics 2024, 14, 2761.

- Kohli, M.; Prevedello, L.M.; Filice, R.W.; Geis, J.R. Implementing Machine Learning in Radiology Practice and Research. Am. J. Roentgenol. 2017, 208, 754–760.

- Turco, S.; Tiyarattanachai, T.; Ebrahimkheil, K.; Eisenbrey, J.; Kamaya, A.; Mischi, M.; Lyshchik, A.; Kaffas, A.E. Interpretable Machine Learning for Characterization of Focal Liver Lesions by Contrast-Enhanced Ultrasound. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2022, 69, 1670–1681.

- Zhang, X.-Y.; Wei, Q.; Wu, G.-G.; Tang, Q.; Pan, X.-F.; Chen, G.-Q.; Zhang, D.; Dietrich, C.F.; Cui, X.-W. Artificial Intelligence-Based Ultrasound Elastography for Disease Evaluation - A Narrative Review. Front. Oncol. 2023, 13, 1197447.

- Mercioni, M.A.; Căleanu, C.D. Computer Aided Diagnosis for Contrast-Enhanced Ultrasound Using a Small Hybrid Transformer Neural Network. In Proceedings of the 2024 International Symposium on Electronics and Telecommunications (ISETC), Timisoara, Romania, 7–8 November 2024; pp. 1–4.

- Zakaria, R.; Abdelmajid, H.; Zitouni, D. Deep Learning in Medical Imaging: A Review. In Applications of Machine Intelligence in Engineering; CRC Press: New York, NY, USA, 2022; pp. 131–144. ISBN 978-1-00-326979-3.

- Timofte, E.M.; Balan, A.L.; Iftime, T. AI Driven Adaptive Security Mesh: Cloud Container Protection for Dynamic Threat Landscapes. In Proceedings of the 2024 International Conference on Development and Application Systems (DAS), Suceava, Romania, 23–25 May 2024; pp. 71–77.

- Albahar, M. A Survey on Deep Learning and Its Impact on Agriculture: Challenges and Opportunities. Agriculture 2023, 13, 540.

- Taye, M.M. Understanding of Machine Learning with Deep Learning: Architectures, Workflow, Applications and Future Directions. Computers 2023, 12, 91.

- Eugen, I.; Laurențiu, M.D. Monitoring Energy Losses of a Residential Building through Thermographic Assessments. In Proceedings of the 2024 International Conference on Development and Application Systems (DAS), Suceava, Romania, 23–25 May 2024; pp. 1–7.

- Timofte, E.M.; Balan, A.L.; Iftime, T. Designing an Authentication Methodology in IoT Using Energy Consumption Patterns. In Proceedings of the 2024 International Conference on Development and Application Systems (DAS), Suceava, Romania, 23–25 May 2024; pp. 64–70.

- Mitrea, D.; Badea, R.; Mitrea, P.; Brad, S.; Nedevschi, S. Hepatocellular Carcinoma Automatic Diagnosis within CEUS and B-Mode Ultrasound Images Using Advanced Machine Learning Methods. Sensors 2021, 21, 2202.

- Sirbu, C.L.; Seiculescu, C.; Burdan, G.A.; Moga, T.; Caleanu, C.D. Evaluation of Tracking Algorithms for Contrast Enhanced Ultrasound Imaging Exploration. In Proceedings of the Australasian Computer Science Week 2022, Brisbane, Australia, 14–18 February 2022; pp. 161–167.

- Căleanu, C.D.; Sîrbu, C.L.; Simion, G. Deep Neural Architectures for Contrast Enhanced Ultrasound (CEUS) Focal Liver Lesions Automated Diagnosis. Sensors 2021, 21, 4126.

- Caleanu, C.-D.; Simion, G.; David, C.; Gui, V.; Moga, T.; Popescu, A.; Sirli, R.; Sporea, I. A Study over the Importance of Arterial Phase Temporal Parameters in Focal Liver Lesions CEUS Based Diagnosis. In Proceedings of the 2014 11th International Symposium on Electronics and Telecommunications (ISETC), Timisoara, Romania, 14–15 November 2014; pp. 1–4.

- Shiraishi, J.; Sugimoto, K.; Moriyasu, F.; Kamiyama, N.; Doi, K. Computer-aided Diagnosis for the Classification of Focal Liver Lesions by Use of Contrast-enhanced Ultrasonography. Med. Phys. 2008, 35, 1734–1746.

- Mercioni, M.A.; Holban, S. The Most Used Activation Functions: Classic Versus Current. In Proceedings of the 2020 International Conference on Development and Application Systems (DAS), Suceava, Romania, 21–23 May 2020; pp. 141–145.

- Maurício, J.; Domingues, I.; Bernardino, J. Comparing Vision Transformers and Convolutional Neural Networks for Image Classification: A Literature Review. Appl. Sci. 2023, 13, 5521.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Gašparović, B.; Mauša, G.; Rukavina, J.; Lerga, J. Evaluating YOLOV5, YOLOV6, YOLOV7, and YOLOV8 in Underwater Environment: Is There Real Improvement? In Proceedings of the 2023 8th International Conference on Smart and Sustainable Technologies (SpliTech), Split/Bol, Croatia, 20–23 June 2023; pp. 1–4.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y.; et al. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Playout, C.; Duval, R.; Boucher, M.C.; Cheriet, F. Focused Attention in Transformers for Interpretable Classification of Retinal Images. Med. Image Anal. 2022, 82, 102608.

- Xiao, H.; Li, L.; Liu, Q.; Zhu, X.; Zhang, Q. Transformers in Medical Image Segmentation: A Review. Biomed. Signal Process. Control 2023, 84, 104791.

- Vaswani, A.; Shazeer, N.M.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Takahashi, S.; Sakaguchi, Y.; Kouno, N.; Takasawa, K.; Ishizu, K.; Akagi, Y.; Aoyama, R.; Teraya, N.; Bolatkan, A.; Shinkai, N.; et al. Comparison of Vision Transformers and Convolutional Neural Networks in Medical Image Analysis: A Systematic Review. J. Med. Syst. 2024, 48, 84.

- Vernuccio, F.; Cannella, R.; Bartolotta, T.V.; Galia, M.; Tang, A.; Brancatelli, G. Advances in Liver US, CT, and MRI: Moving toward the Future. Eur. Radiol. Exp. 2021, 5, 52.

- Cao, L.; Wang, Q.; Hong, J.; Han, Y.; Zhang, W.; Zhong, X.; Che, Y.; Ma, Y.; Du, K.; Wu, D.; et al. MVI-TR: A Transformer-Based Deep Learning Model with Contrast-Enhanced CT for Preoperative Prediction of Microvascular Invasion in Hepatocellular Carcinoma. Cancers 2023, 15, 1538.

- Chen, S.; Ge, C.; Tong, Z.; Wang, J.; Song, Y.; Wang, J.; Luo, P. AdaptFormer: Adapting Vision Transformers for Scalable Visual Recognition. Adv. Neural Inf. Process. Syst. 2022, 35, 16664–16678.

- Basit, A.; Yaqoob, A.; Hannan, A. ViT-LiSeg: Vision Transformer-Based Liver Cancer Segmentation in High-Resolution Computed Tomography. In Second International Conference on Computing Technologies, Tools and Applications (ICTAPP-24), June 4–6, 2024 (Abstract Book); Pakistan Scientific and Technological Information Center: Islamabad, Pakistan, 2024.

- Wang, X.; Ying, H.; Xu, X.; Cai, X.; Zhang, M. TransLiver: A Hybrid Transformer Model for Multi-Phase Liver Lesion Classification. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2023; Greenspan, H., Madabhushi, A., Mousavi, P., Salcudean, S., Duncan, J., Syeda-Mahmood, T., Taylor, R., Eds.; Lecture Notes in Computer Science; Springer Nature Switzerland: Cham, Switzerland, 2023; Volume 14221, pp. 329–338. ISBN 978-3-031-43894-3.

- Zhou, H.; Ding, J.; Zhou, Y.; Wang, Y.; Zhao, L.; Shih, C.-C.; Xu, J.; Wang, J.; Tong, L.; Chen, Z.; et al. Malignancy Diagnosis of Liver Lesion in Contrast Enhanced Ultrasound Using an End-to-End Method Based on Deep Learning. BMC Med. Imaging 2024, 24, 68.

- Sun, Q.; Fang, N.; Liu, Z.; Zhao, L.; Wen, Y.; Lin, H. HybridCTrm: Bridging CNN and Transformer for Multimodal Brain Image Segmentation. J. Healthc. Eng. 2021, 2021, 7467261.

- Bougourzi, F.; Dornaika, F.; Distante, C.; Taleb-Ahmed, A. D-TrAttUnet: Toward Hybrid CNN-Transformer Architecture for Generic and Subtle Segmentation in Medical Images. Comput. Biol. Med. 2024, 176, 108590.

- Wang, C.; Li, W. A Hybrid CNN-Transformer Architecture with Frequency Domain Contrastive Learning for Image Deraining. arXiv 2023, arXiv:2308.03340.

- Wang, L.; Zhang, C.; Li, J. A Hybrid CNN-Transformer Model for Predicting N Staging and Survival in Non-Small Cell Lung Cancer Patients Based on CT-Scan. Tomography 2024, 10, 1676–1693.

- Hu, B.; Jiang, W.; Zeng, J.; Cheng, C.; He, L. FOTCA: Hybrid Transformer-CNN Architecture Using AFNO for Accurate Plant Leaf Disease Image Recognition. Front. Plant Sci. 2023, 14, 1231903.

- Liu, X.; Gao, P.; Yu, T.; Wang, F.; Yuan, R.-Y. CSWin-UNet: Transformer UNet with Cross-Shaped Windows for Medical Image Segmentation. Inf. Fusion 2025, 113, 102634.

- Han, K.; Xiao, A.; Wu, E.; Guo, J.; Xu, C.; Wang, Y. Transformer in Transformer. Adv. Neural Inf. Process. Syst. 2021, 34, 15908–15919.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training Data-Efficient Image Transformers & Distillation through Attention. Proc. Mach. Learn. Res. 2020, 139, 10347–10357.

- Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid Vision Transformer: A Versatile Backbone for Dense Prediction without Convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

- Demir, U.; Zhang, Z.; Wang, B.; Antalek, M.; Keles, E.; Jha, D.; Borhani, A.; Ladner, D.; Bagci, U. Transformer Based Generative Adversarial Network for Liver Segmentation. In Image Analysis and Processing. ICIAP 2022 Workshops; Mazzeo, P.L., Frontoni, E., Sclaroff, S., Distante, C., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2022; Volume 13374, pp. 340–347. ISBN 978-3-031-13323-7.

- Schmiedt, K.; Simion, G.; Caleanu, C.D. Preliminary Results on Contrast Enhanced Ultrasound Video Stream Diagnosis Using Deep Neural Architectures. In Proceedings of the 2022 International Symposium on Electronics and Telecommunications (ISETC), Timisoara, Romania, 10–11 November 2022; pp. 1–4.

- Sirbu, C.L.; Simion, G.; Caleanu, C.D. Deep CNN for Contrast-Enhanced Ultrasound Focal Liver Lesions Diagnosis. In Proceedings of the 2020 International Symposium on Electronics and Telecommunications (ISETC), Timisoara, Romania, 5–6 November 2020; pp. 1–4.

- Mercioni, M.A.; Căleanu, C.D.; Sîrbu, C.L. Computer Aided Diagnosis for Contrast-Enhanced Ultrasound Using Transformer Neural Network. In Proceedings of the 2023 25th International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC), Nancy, France, 11–14 September 2023; pp. 256–259.

- Liang, X.; Cao, Q.; Huang, R.; Lin, L. Recognizing Focal Liver Lesions in Contrast-Enhanced Ultrasound with Discriminatively Trained Spatio-Temporal Model. In Proceedings of the 2014 IEEE 11th International Symposium on Biomedical Imaging (ISBI), Beijing, China, 29 April–2 May 2014; pp. 1184–1187.

- Mercioni, M.A.; Holban, S. A Brief Review of the Most Recent Activation Functions for Neural Networks. In Proceedings of the 2023 17th International Conference on Engineering of Modern Electric Systems (EMES), Oradea, Romania, 9–10 June 2023; pp. 1–4.

- Hassan, T.M.; Elmogy, M.; Sallam, E.-S. Diagnosis of Focal Liver Diseases Based on Deep Learning Technique for Ultrasound Images. Arab. J. Sci. Eng. 2017, 42, 3127–3140.

- Pan, F.; Huang, Q.; Li, X. Classification of Liver Tumors with CEUS Based on 3D-CNN. In Proceedings of the 2019 IEEE 4th International Conference on Advanced Robotics and Mechatronics (ICARM), Toyonaka, Japan, 3–5 July 2019; pp. 845–849.

- Guo, L.; Wang, D.; Xu, H.; Qian, Y.; Wang, C.; Zheng, X.; Zhang, Q.; Shi, J. CEUS-Based Classification of Liver Tumors with Deep Canonical Correlation Analysis and Multi-Kernel Learning. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Republic of Korea, 11–15 July 2017; pp. 1748–1751.

- Vancea, F.; Mitrea, D.; Nedevschi, S.; Rotaru, M.; Stefanescu, H.; Badea, R. Hepatocellular Carcinoma Segmentation within Ultrasound Images Using Convolutional Neural Networks. In Proceedings of the 2019 IEEE 15th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 5–7 September 2019; pp. 483–490.

- Wu, K.; Chen, X.; Ding, M. Deep Learning Based Classification of Focal Liver Lesions with Contrast-Enhanced Ultrasound. Optik 2014, 125, 4057–4063.

- Streba, C.T. Contrast-Enhanced Ultrasonography Parameters in Neural Network Diagnosis of Liver Tumors. World J. Gastroenterol. 2012, 18, 4427.

- Chen, J.; Huang, Z.; Jiang, Y.; Wu, H.; Tian, H.; Cui, C.; Shi, S.; Tang, S.; Xu, J.; Xu, D.; et al. Diagnostic Performance of Deep Learning in Video-Based Ultrasonography for Breast Cancer: A Retrospective Multicentre Study. Ultrasound Med. Biol. 2024, 50, 722–728.

- Rauniyar, A.; Hagos, D.H.; Jha, D.; Håkegård, J.E.; Bagci, U.; Rawat, D.B.; Vlassov, V. Federated Learning for Medical Applications: A Taxonomy, Current Trends, Challenges, and Future Research Directions. IEEE Internet Things J. 2024, 11, 7374–7398.

- Dai, Z.; Yang, Z.; Yang, Y.; Carbonell, J.; Le, Q.; Salakhutdinov, R. Transformer-XL: Attentive Language Models beyond a Fixed-Length Context. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Association for Computational Linguistics: Kerrville, TX, USA; pp. 2978–2988.

- Wang, Y.; Deng, Y.; Zheng, Y.; Chattopadhyay, P.; Wang, L. Vision Transformers for Image Classification: A Comparative Survey. Technologies 2025, 13, 32.

- Bilic, P.; Christ, P.; Li, H.B.; Vorontsov, E.; Ben-Cohen, A.; Kaissis, G.; Szeskin, A.; Jacobs, C.; Mamani, G.E.H.; Chartrand, G.; et al. The Liver Tumor Segmentation Benchmark (LiTS). Med. Image Anal. 2023, 84, 102680.

- Yoon, S.; Kim, T.H.; Jung, Y.K.; Kim, Y. Accelerated Muscle Mass Estimation from CT Images through Transfer Learning. BMC Med. Imaging 2024, 24, 271.

- Fang, X.; Yan, P. Multi-Organ Segmentation Over Partially Labeled Datasets with Multi-Scale Feature Abstraction. IEEE Trans. Med. Imaging 2020, 39, 3619–3629.

- Liang, X.; Lin, L.; Cao, Q.; Huang, R.; Wang, Y. Recognizing Focal Liver Lesions in CEUS With Dynamically Trained Latent Structured Models. IEEE Trans. Med. Imaging 2016, 35, 713–727.

| Model | Validation Accuracy (Max) | Validation Loss (Min) | Total Params | Total Training Time [min] |
|---|---|---|---|---|
| CNN | 91.99% | 0.3274 | 5,144,357 | 8 |
| EfficientNetB1 | 91.82% | 0.5992 | 6,647,253 | 8 |
| VGG | 97.61% | 0.1171 | 24,154,949 | 53 |
| ResNet50 | 99.17% | 0.0415 | 74,971,013 | 51 |
| Inception | 99.34% | 1.2361 | 38,583,077 | 34 |
| ViT | 86.54% | 0.6431 | 2,584,517 | 17 |

| Ref. | Lesions | General Accuracy [%] |
|---|---|---|
| Hassan et al. [83] | Cyst, HEM, HCC | 97.2 |
| Pan et al. [84] | FNH, HCC | 93.1 |
| Guo et al. [85] | Malign, Benign | 90.4 |
| Vancea et al. [86] | HCC | 80.3 |
| Wu et al. [87] | HCC, CH, META, LFS | 86.3 |
| Streba et al. [88] | HCC, HYPERM, HYPOM, HEM, FFC | 87.1 |
| Căleanu et al. [47] | FNH, HCC, HMG, HYPERM, HYPOM | 88.0 |
| Mercioni et al. [80] | FNH, HCC, HMG, METAHIPER, METAHIPO | 97.5 |
| HTNN (ours) | FNH, HCC, HMG, METAHIPER, METAHIPO | 97.77 |

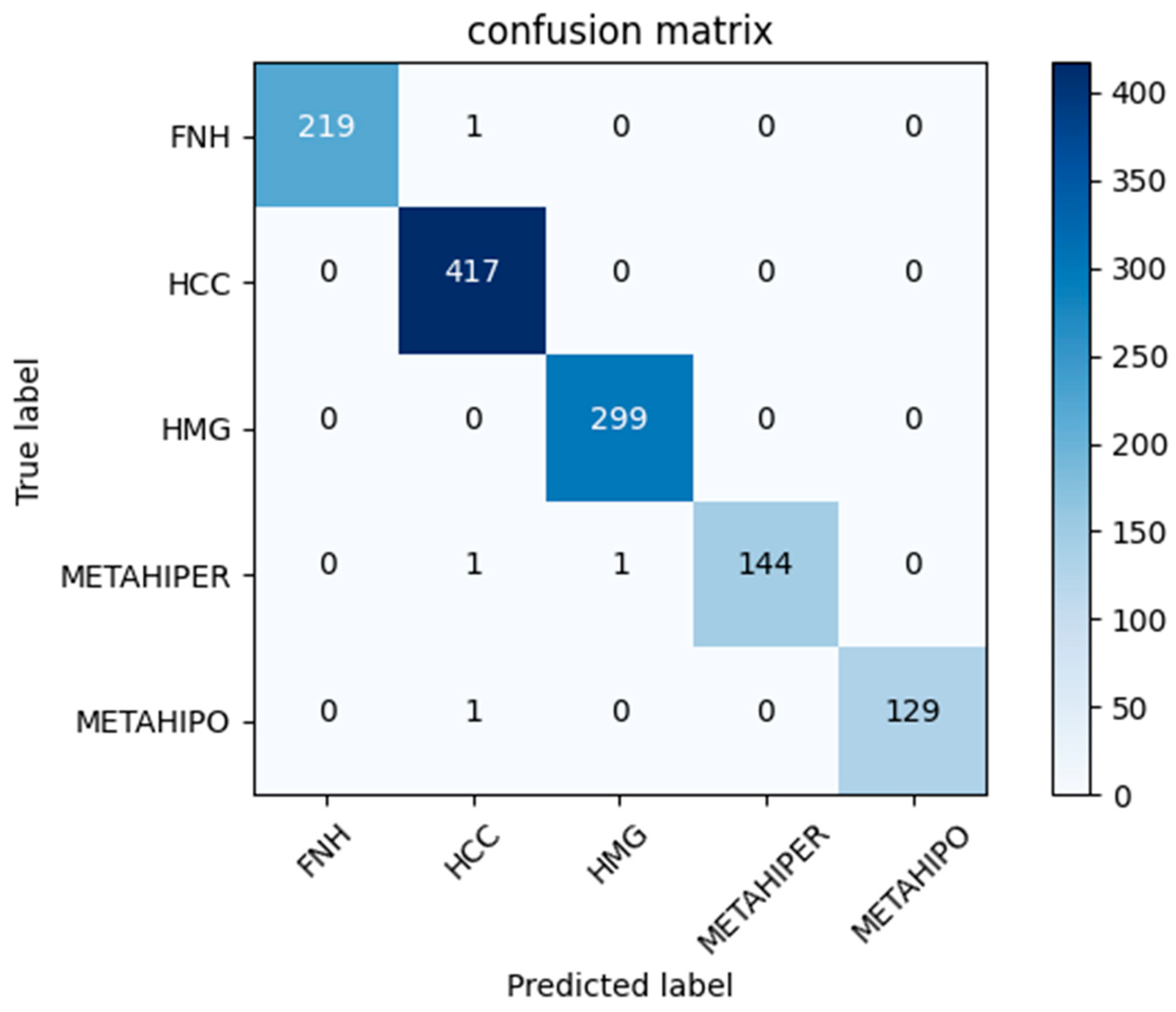

| Class | Precision [%] | Recall [%] | F1-Score [%] | Samples |
|---|---|---|---|---|
| FNH | 100 | 100 | 100 | 222 |
| HCC | 99 | 100 | 99 | 394 |
| HMG | 99 | 99 | 99 | 324 |
| METAHIPER | 99 | 99 | 99 | 134 |
| METAHIPO | 100 | 99 | 99 | 138 |
| Accuracy | | | 99 | 1212 |
| Macro avg | 100 | 99 | 99 | 1212 |
| Weighted avg | 99 | 99 | 99 | 1212 |
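The macro and weighted averages in the per-class report above follow the usual definitions: the macro average is the unweighted mean over classes, and the weighted average uses the number of samples per class as weights. The sketch below recomputes both from the rounded table entries; the names `IMAGE_REPORT`, `macro_average`, and `weighted_average` are illustrative and not taken from the paper. Because the inputs are already rounded to whole percentages, the recomputed values can differ from the reported ones by up to about one point (for instance, macro precision comes out as 99.4 rather than the reported 100).

```python
# Per-class values copied from the image-based report (percent, sample count).
IMAGE_REPORT = {
    "FNH":       {"precision": 100, "recall": 100, "f1": 100, "support": 222},
    "HCC":       {"precision": 99,  "recall": 100, "f1": 99,  "support": 394},
    "HMG":       {"precision": 99,  "recall": 99,  "f1": 99,  "support": 324},
    "METAHIPER": {"precision": 99,  "recall": 99,  "f1": 99,  "support": 134},
    "METAHIPO":  {"precision": 100, "recall": 99,  "f1": 99,  "support": 138},
}


def macro_average(report, metric):
    """Unweighted mean of a metric over all classes."""
    return sum(row[metric] for row in report.values()) / len(report)


def weighted_average(report, metric):
    """Mean of a metric weighted by the number of samples per class."""
    total = sum(row["support"] for row in report.values())
    return sum(row[metric] * row["support"] for row in report.values()) / total


if __name__ == "__main__":
    for metric in ("precision", "recall", "f1"):
        print(
            metric,
            f"macro={macro_average(IMAGE_REPORT, metric):.2f}",
            f"weighted={weighted_average(IMAGE_REPORT, metric):.2f}",
        )
```

The class supports sum to 1212, which matches the sample count in the summary rows of the table.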

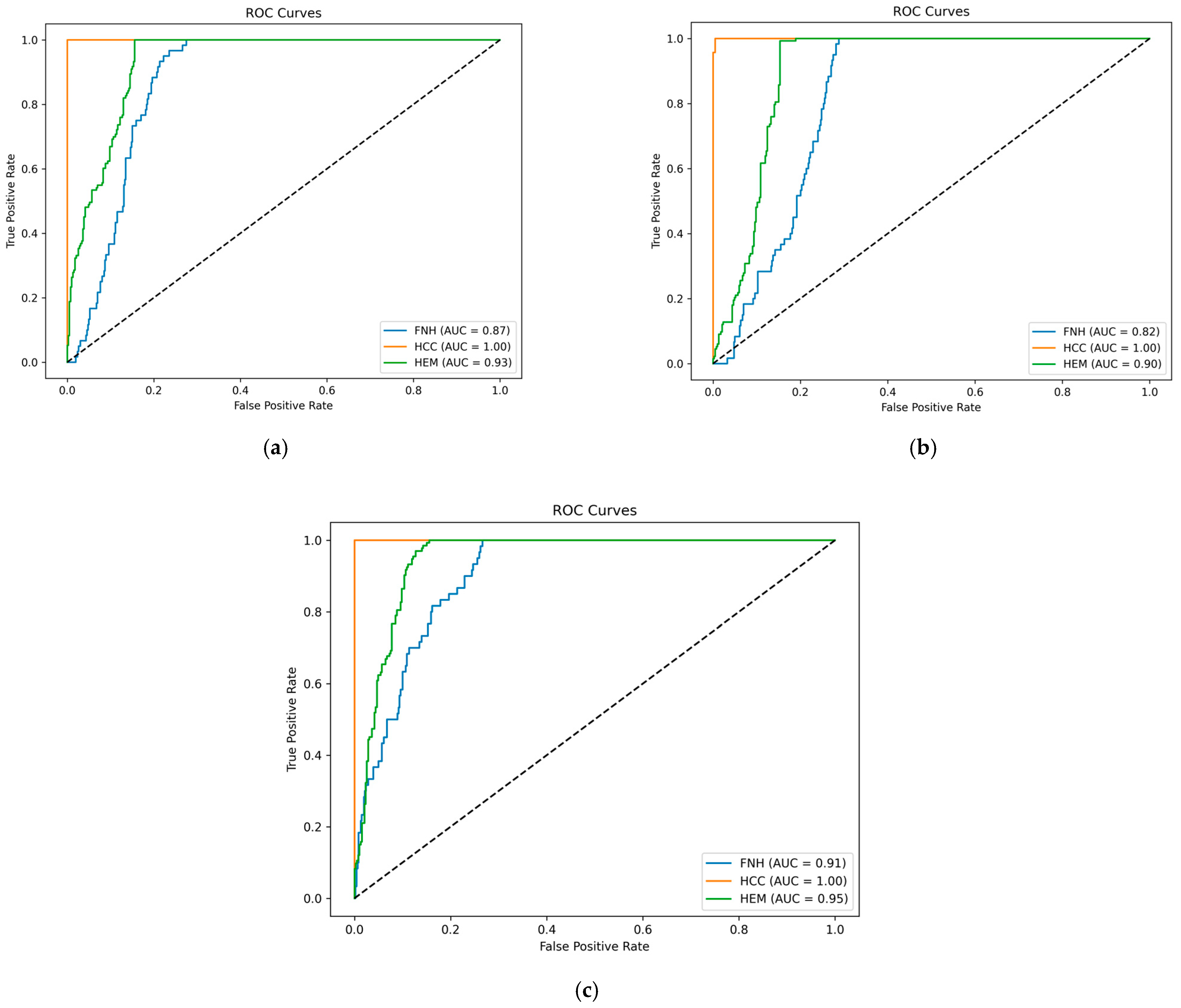

| Architecture | Lesion Type | Precision | Recall | F1-Score | Samples |
|---|---|---|---|---|---|
| Video lightweight CNN | FNH | 0.39 | 0.73 | 0.51 | 60 |
| | HCC | 1.00 | 1.00 | 1.00 | 325 |
| | HEM (HMG) | 0.80 | 0.47 | 0.59 | 133 |
| | macro avg | 0.73 | 0.74 | 0.70 | 518 |
| | weighted avg | 0.88 | 0.83 | 0.84 | 518 |
| | accuracy | | | 0.83 | 518 |
| Video CNN + LSTM | FNH | 0.30 | 0.82 | 0.44 | 60 |
| | HCC | 0.99 | 1.00 | 1.00 | 325 |
| | HEM (HMG) | 0.62 | 0.12 | 0.20 | 133 |
| | macro avg | 0.64 | 0.65 | 0.54 | 518 |
| | weighted avg | 0.81 | 0.75 | 0.73 | 518 |
| | accuracy | | | 0.75 | 518 |
| ViViT | FNH | 0.48 | 0.43 | 0.46 | 60 |
| | HCC | 1.00 | 1.00 | 1.00 | 325 |
| | HEM (HMG) | 0.76 | 0.79 | 0.77 | 133 |
| | macro avg | 0.75 | 0.74 | 0.74 | 518 |
| | weighted avg | 0.88 | 0.88 | 0.88 | 518 |
| | accuracy | | | 0.88 | 518 |
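The video results above can be cross-checked with two standard identities: the F1-score is the harmonic mean of precision and recall, and overall accuracy equals the recall of each class weighted by its number of samples. The following sketch applies both to the tabulated values; `VIDEO_REPORTS`, `f1_score`, and `accuracy_from_recall` are hypothetical names introduced for illustration. Since the table entries are rounded to two decimals, a recomputed value may differ from the reported one by 0.01.

```python
# (precision, recall, samples) per lesion class, copied from the video table.
VIDEO_REPORTS = {
    "Video lightweight CNN": {
        "FNH": (0.39, 0.73, 60), "HCC": (1.00, 1.00, 325), "HEM": (0.80, 0.47, 133),
    },
    "Video CNN + LSTM": {
        "FNH": (0.30, 0.82, 60), "HCC": (0.99, 1.00, 325), "HEM": (0.62, 0.12, 133),
    },
    "ViViT": {
        "FNH": (0.48, 0.43, 60), "HCC": (1.00, 1.00, 325), "HEM": (0.76, 0.79, 133),
    },
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def accuracy_from_recall(per_class):
    """Overall accuracy as the sample-weighted mean of per-class recall."""
    total = sum(samples for _, _, samples in per_class.values())
    correct = sum(recall * samples for _, recall, samples in per_class.values())
    return correct / total


if __name__ == "__main__":
    for model, per_class in VIDEO_REPORTS.items():
        f1 = {name: round(f1_score(p, r), 2) for name, (p, r, _) in per_class.items()}
        print(model, f1, f"accuracy={accuracy_from_recall(per_class):.2f}")
```

Read this way, the table shows that the accuracy differences between the three video models come almost entirely from the FNH and HEM (HMG) classes, since HCC recall is 1.00 for all of them.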

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mercioni, M.A.; Căleanu, C.D.; Ursan, M.-E.-O. Images Versus Videos in Contrast-Enhanced Ultrasound for Computer-Aided Diagnosis. Sensors 2025, 25, 6247. https://doi.org/10.3390/s25196247
