Enhancing Off-Road Topography Estimation by Fusing LIDAR and Stereo Camera Data with Interpolated Ground Plane

Abstract

1. Introduction

2. Related Work

3. Prerequisite

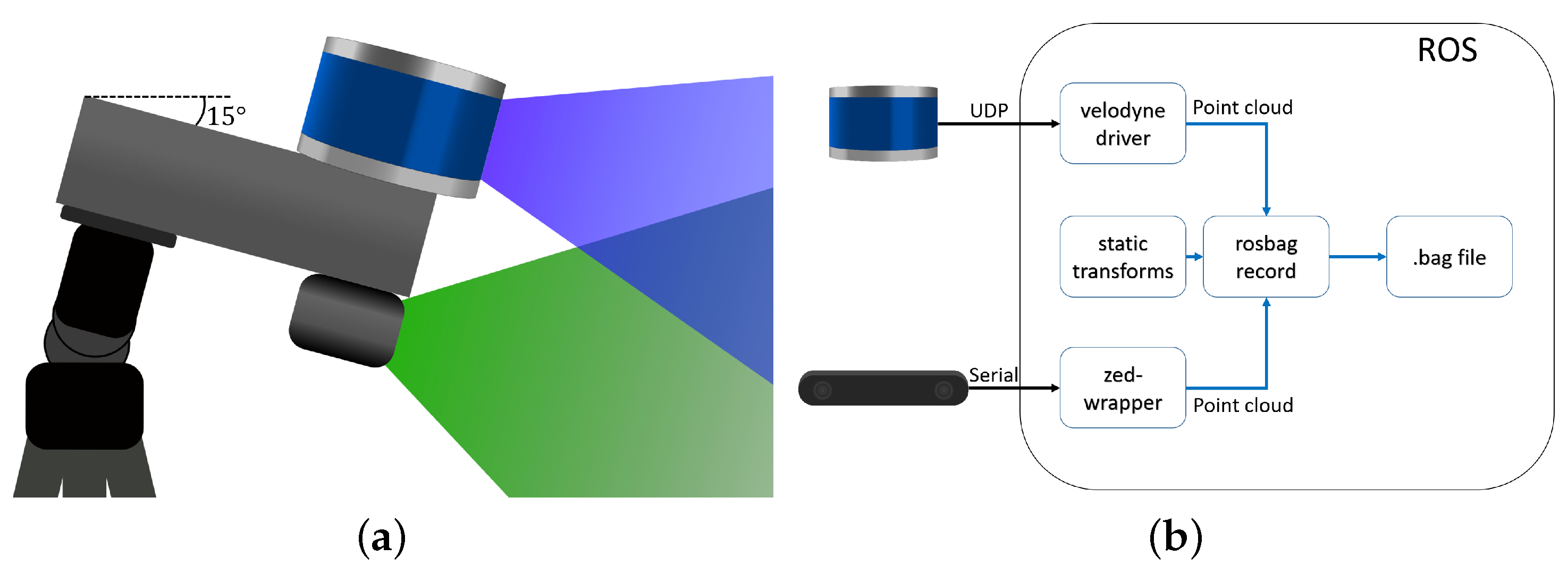

3.1. LIDARs

3.2. Stereo Cameras

4. Sensor Fusion Methods

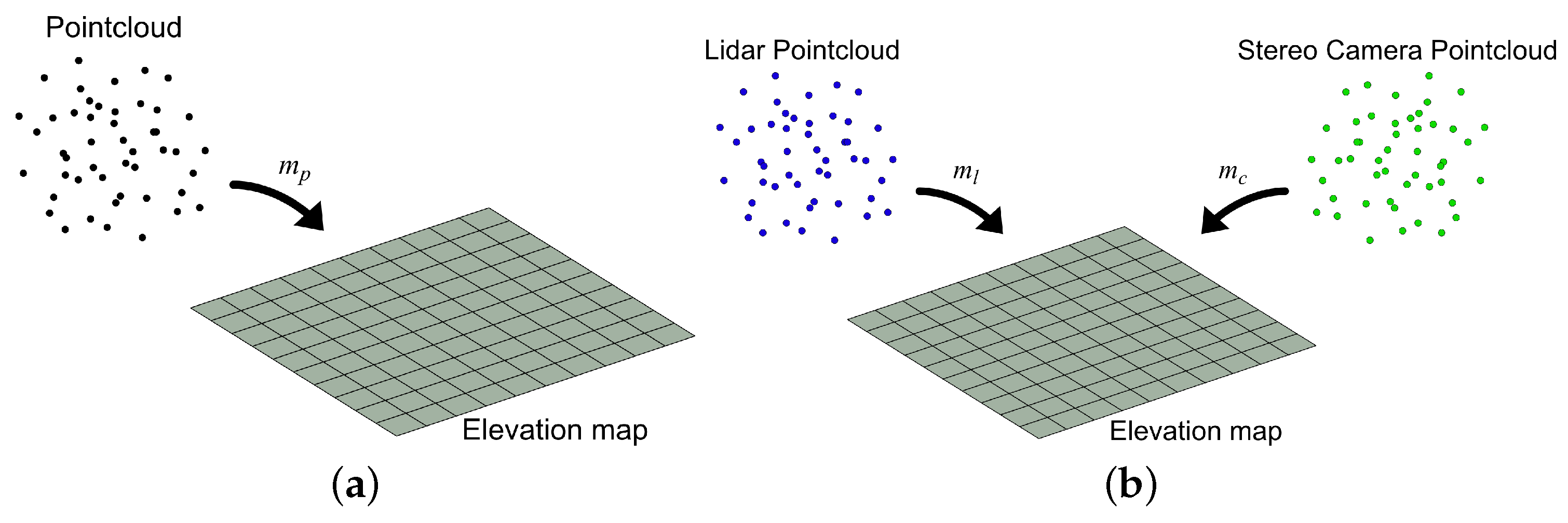

4.1. Baseline (Naive) Approach

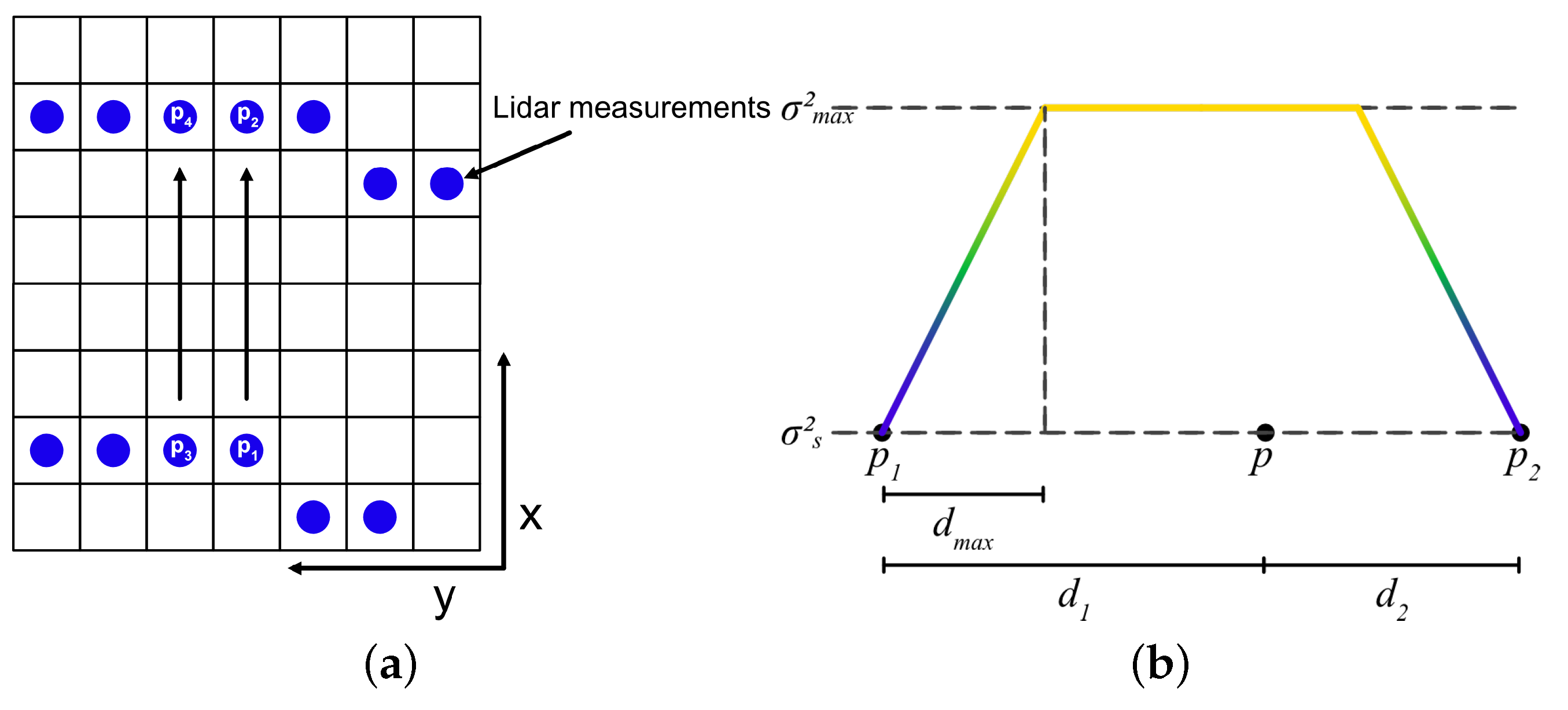

4.2. Interpolation Based Approach

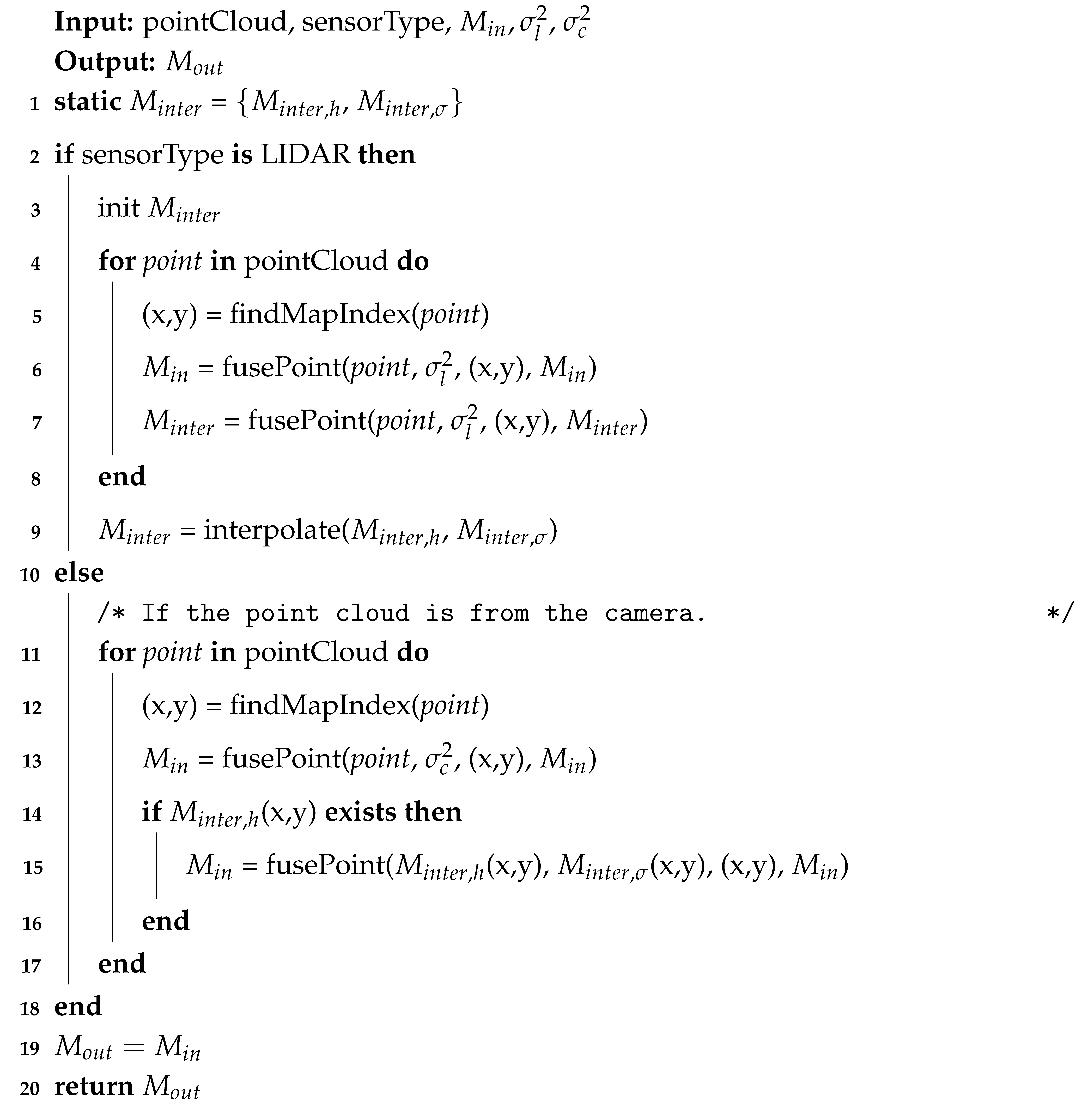

Algorithm 1: Fusion Algorithm.

5. Results

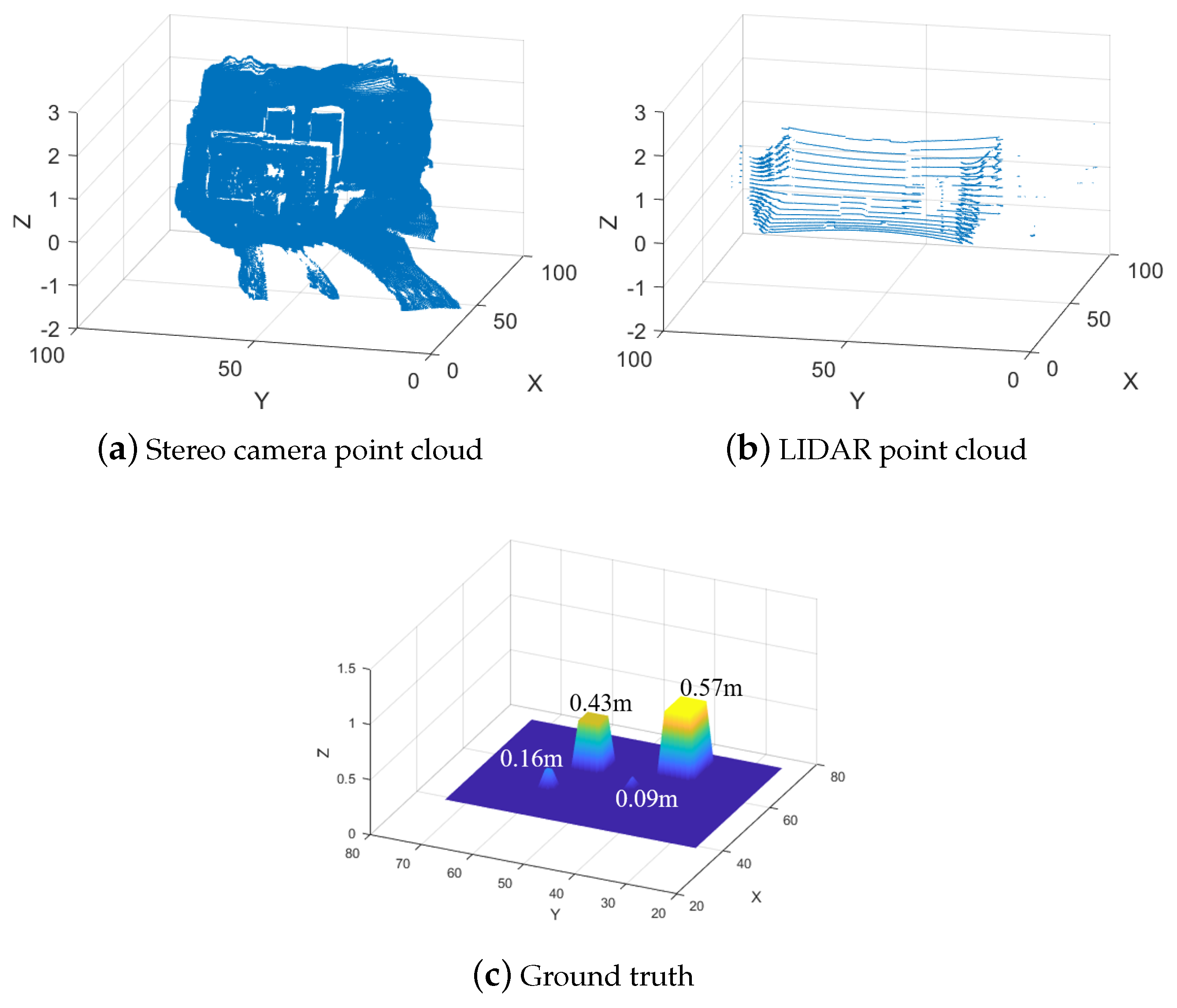

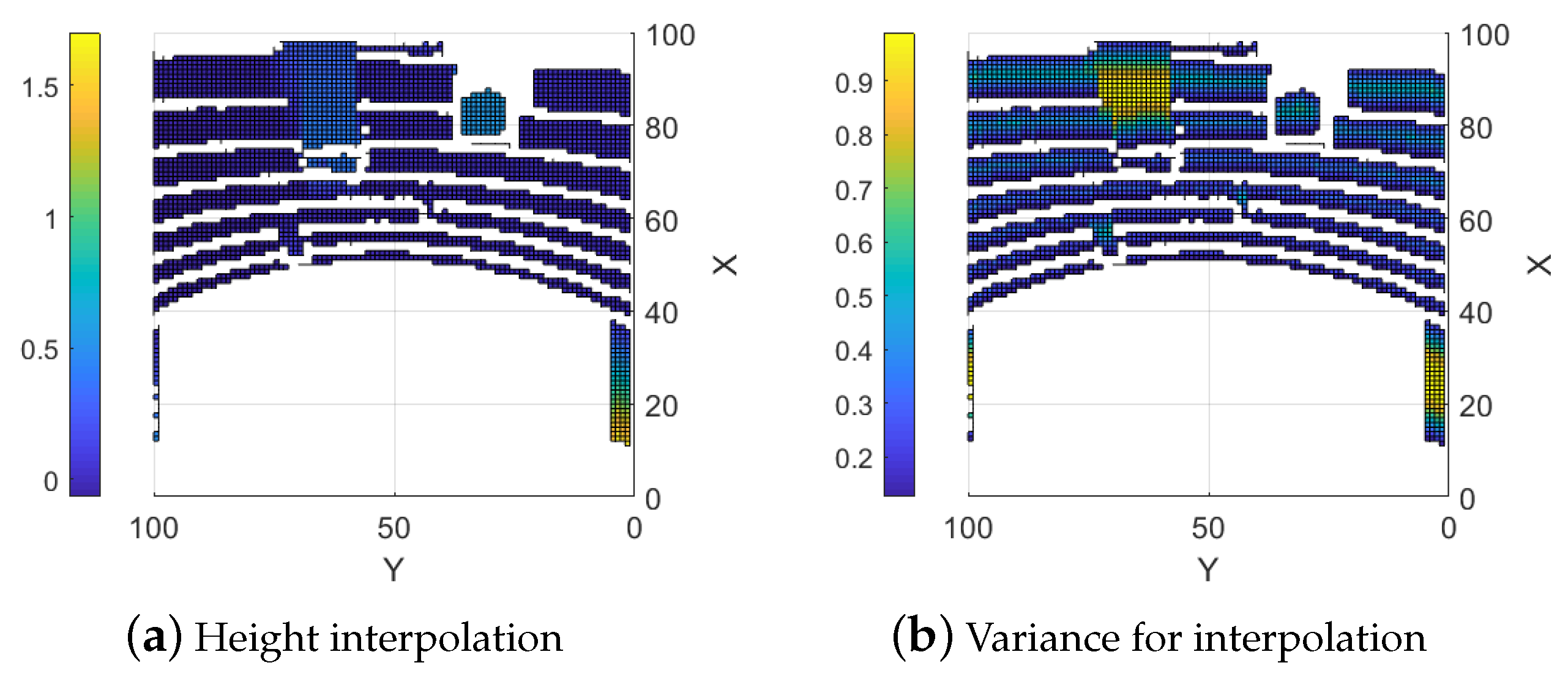

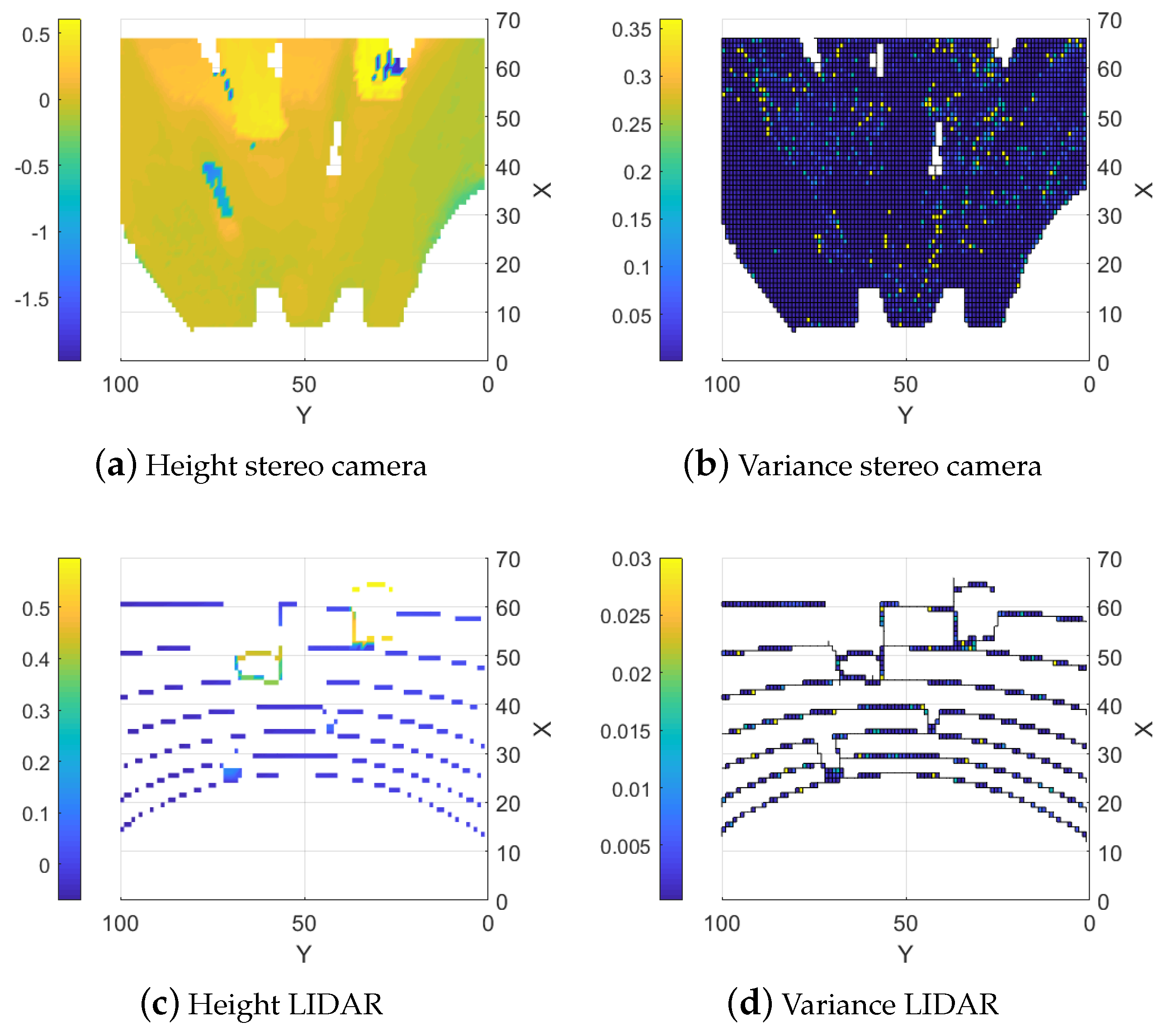

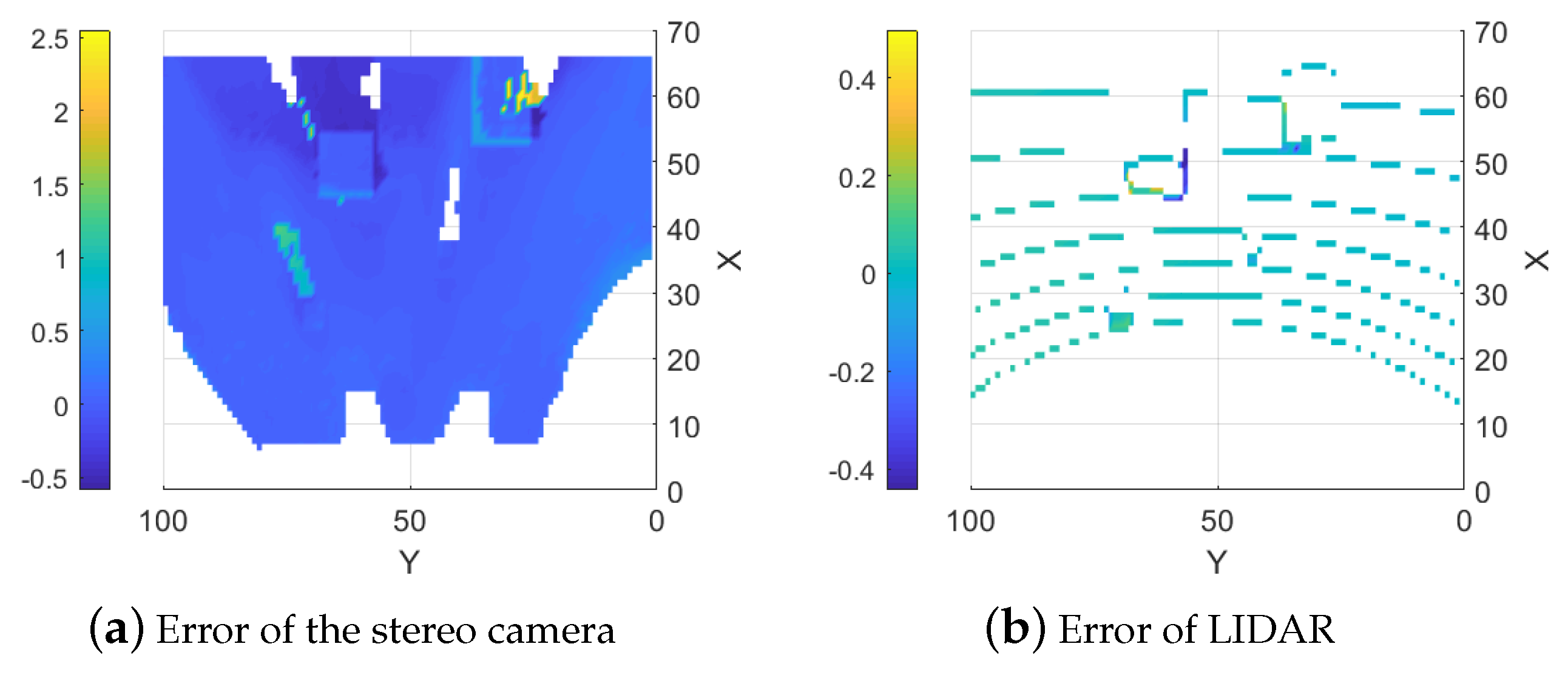

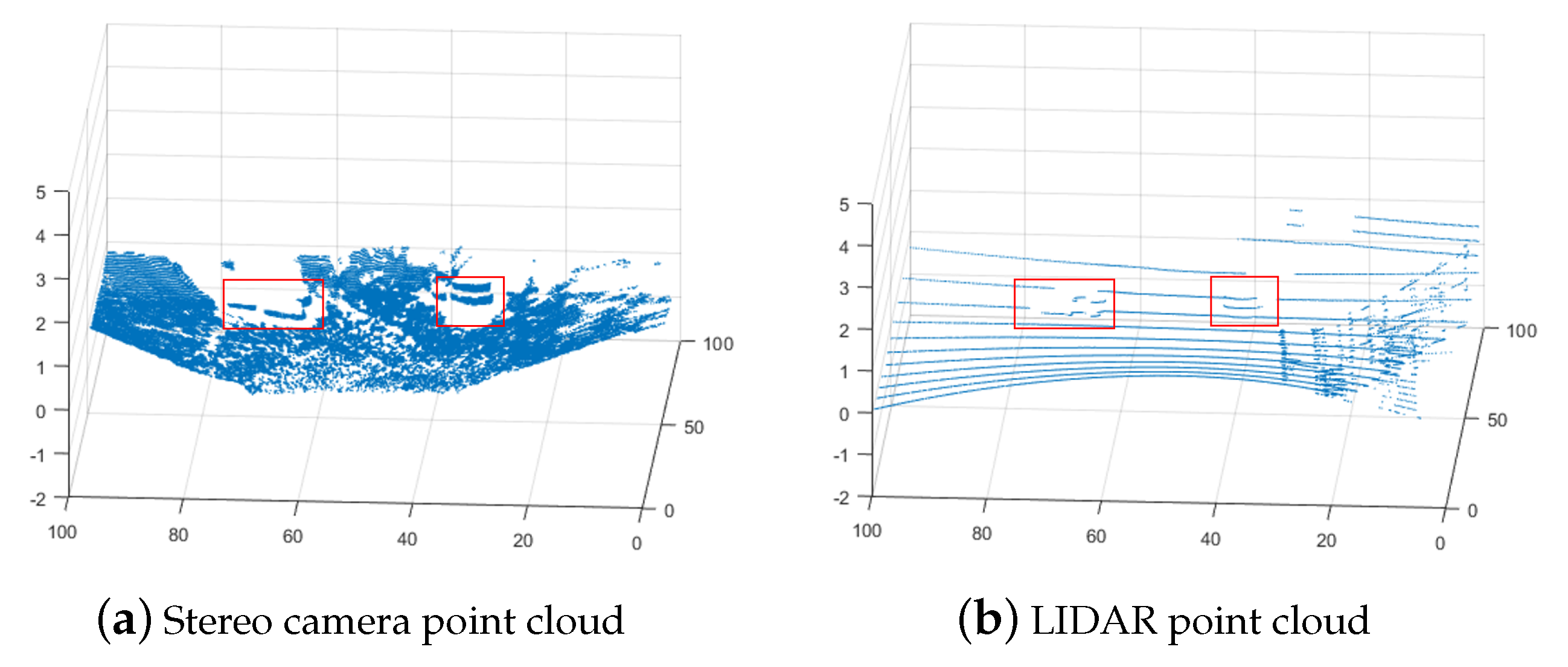

5.1. Controlled Lab Environment

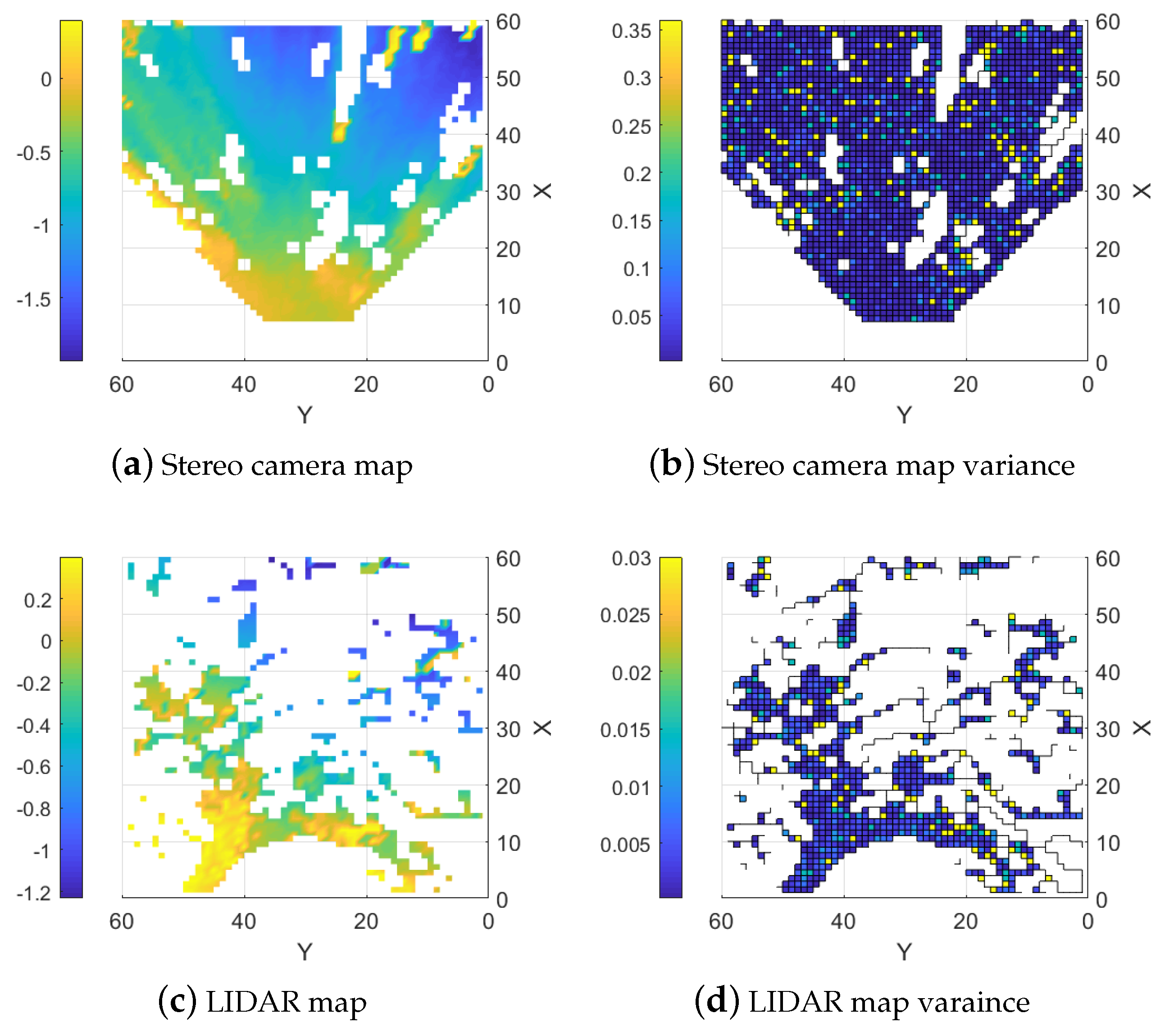

5.1.1. Stereo Camera

5.1.2. LIDAR

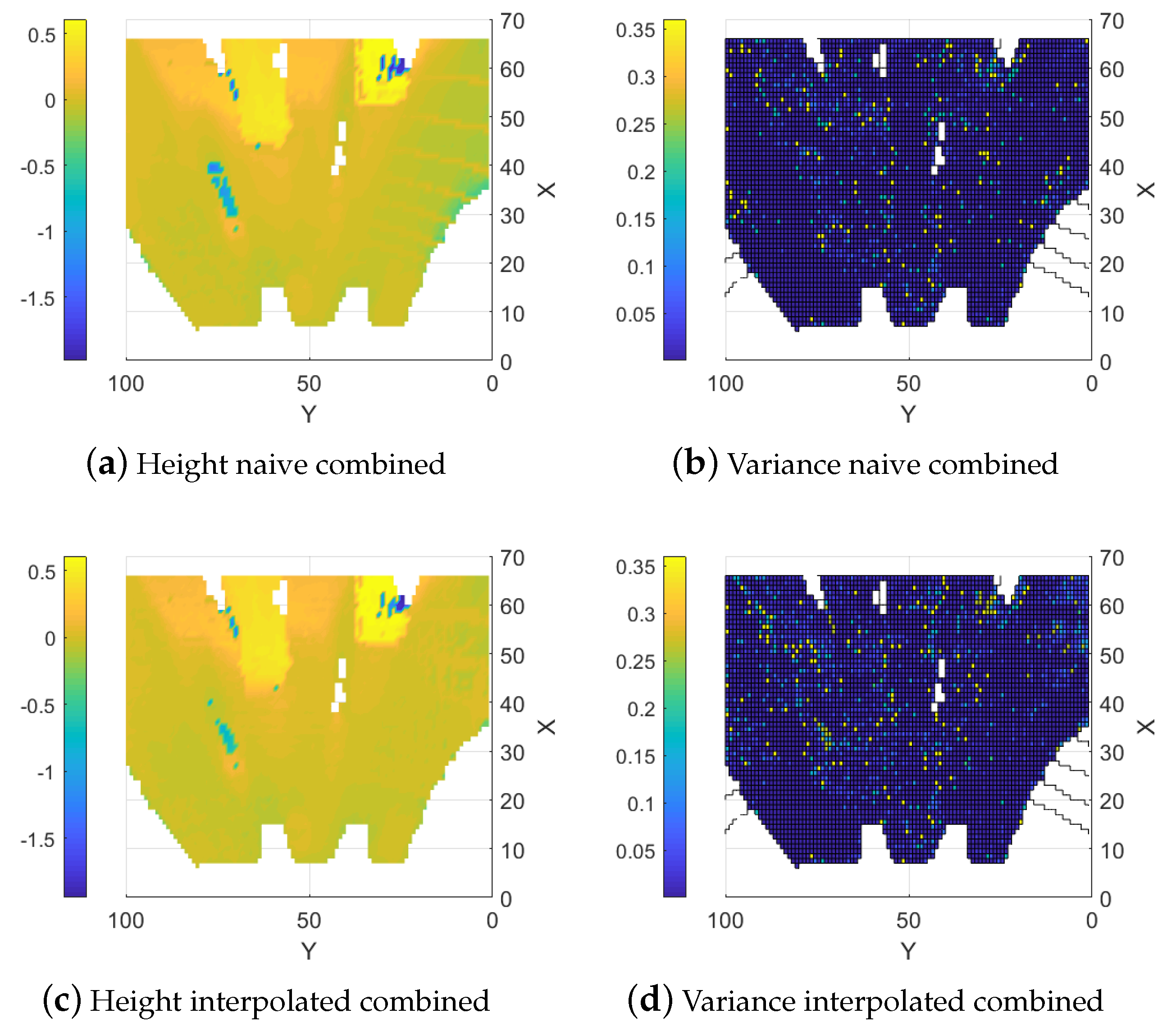

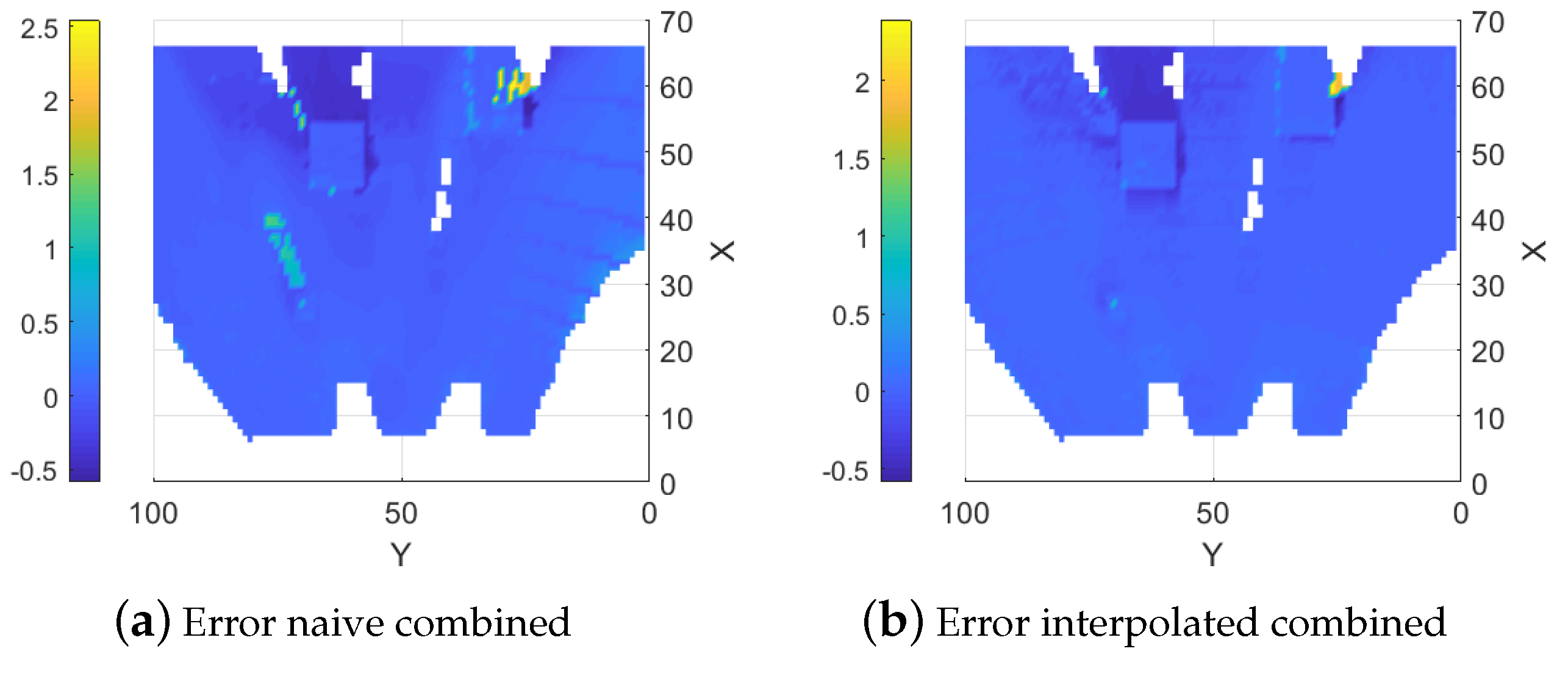

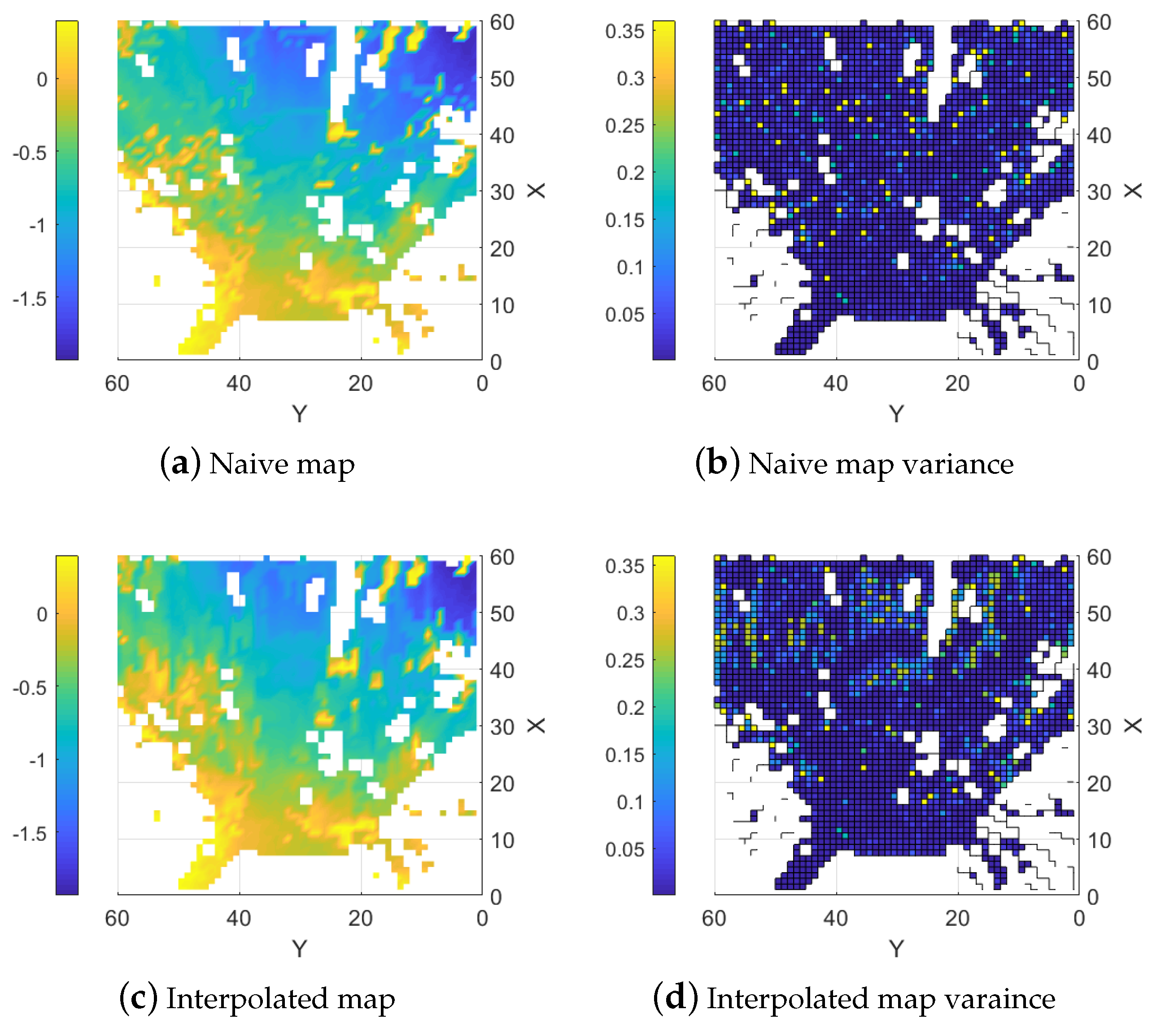

5.1.3. Naive Combined Map

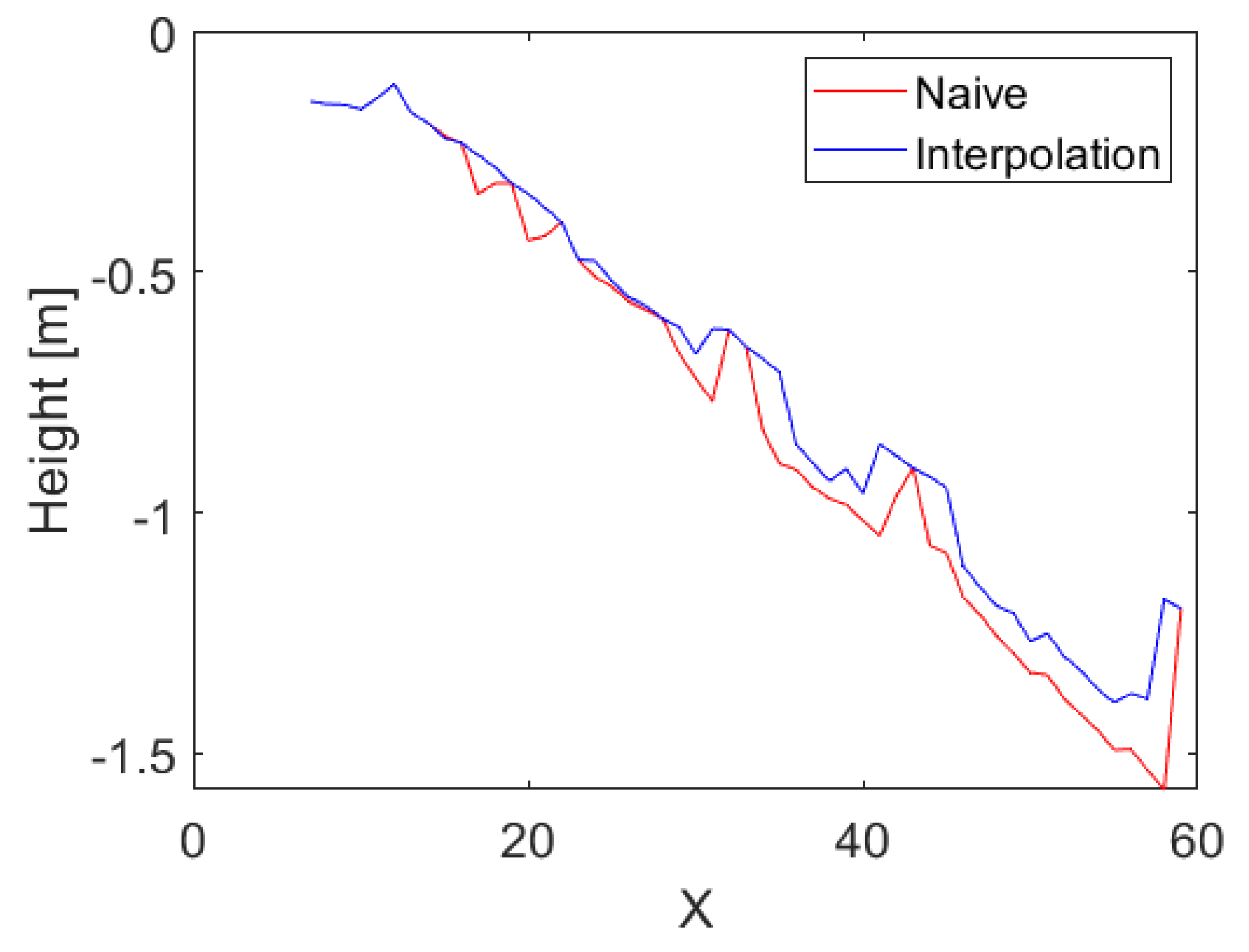

5.1.4. Interpolation Based Combination

5.2. Semi-Controlled Outdoor Environment

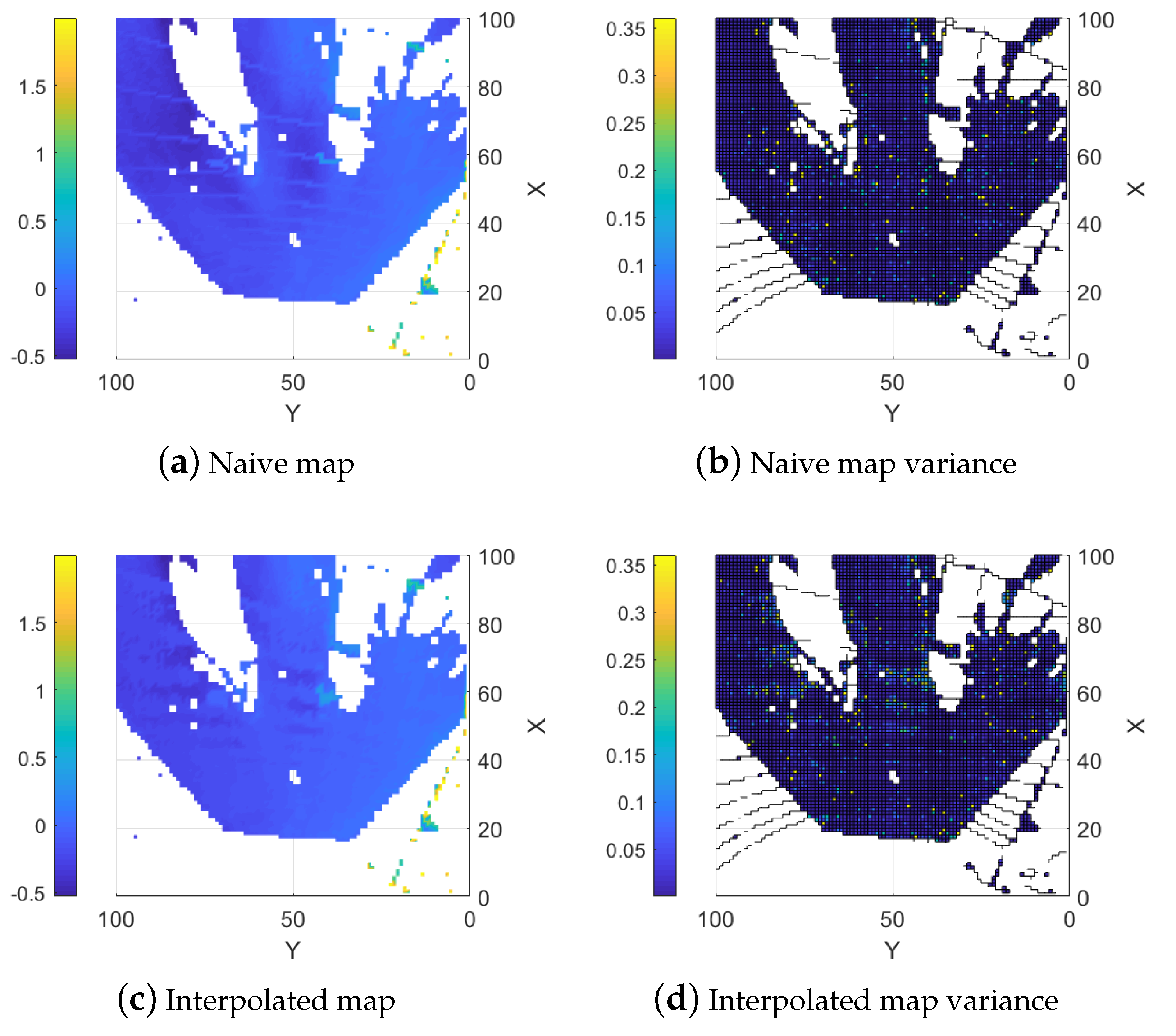

5.3. Unstructured Forest Terrain

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Type | Max Error | Mean Error | RMSE | Fill % |
|---|---|---|---|---|
| Stereo | 2.5496 | 0.1012 | 0.2234 | 62.19% |
| LIDAR | 0.4984 | 0.0339 | 0.0686 | 16.59% |
| Naive | 2.5436 | 0.0918 | 0.2100 | 63.39% |
| Interpolation | 2.3913 | 0.0555 | 0.1324 | 63.39% |
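
The table above compares each map with a reference surface using four metrics. As a minimal sketch of how such figures can be computed, the function below compares an estimated elevation grid with a reference grid cell by cell. It assumes that both grids have the same shape, that unfilled cells are stored as `None`, that the errors are absolute height differences over filled cells only, and that Fill % is the share of grid cells holding a height estimate. These are assumptions rather than definitions taken from the paper, and `grid_metrics` is a hypothetical helper.

```python
import math
from typing import List, Optional

# Hypothetical grid type: rows of cells, None marks a cell without an estimate.
Grid = List[List[Optional[float]]]


def grid_metrics(estimate: Grid, reference: Grid) -> dict:
    """Compare an estimated elevation grid with a same-shaped reference grid.

    Assumption: errors are absolute height differences over filled cells,
    and fill is the percentage of all cells that hold an estimate.
    """
    total = 0
    errors = []
    for est_row, ref_row in zip(estimate, reference):
        for est, ref in zip(est_row, ref_row):
            total += 1
            if est is None:
                continue  # unfilled cell: lowers fill, adds no error term
            errors.append(abs(est - ref))
    if not errors:
        raise ValueError("estimate grid has no filled cells")
    return {
        "max_error": max(errors),
        "mean_error": sum(errors) / len(errors),
        "rmse": math.sqrt(sum(e * e for e in errors) / len(errors)),
        "fill_pct": 100.0 * len(errors) / total,
    }
```

For example, `grid_metrics([[1.0, None], [2.0, 4.0]], [[1.0, 0.0], [1.0, 2.0]])` finds errors of 0, 1 and 2 in three of four cells, giving a maximum of 2.0, a mean of 1.0, an RMSE of about 1.29 and a fill of 75 %. Under this assumption, unfilled cells do not enter the error terms, so a sparse map such as the LIDAR-only one can show a low RMSE while covering only a small part of the grid; this is why error and fill are worth reading side by side.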

| Points | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Height [m] | 0.185 | 0.185 | 0.27 | 0.24 | 0.46 |

| Type | Stereo | LIDAR | Naive | Interpolation |
|---|---|---|---|---|
| Point 1 error | 0.155 | 0.015 | 0.025 | 0.025 |
| Point 2 error | 0.095 | 0.005 | 0.025 | 0.005 |
| Point 3 error | 0.1 | 0.03 | 0.07 | 0.03 |
| Point 4 error | 0.14 | 0.01 | 0.14 | 0.02 |
| Point 5 error | 0.09 | 0.01 | 0.03 | 0.0 |
| Max error | 0.16 | 0.03 | 0.14 | 0.03 |
| Mean error | 0.12 | 0.01 | 0.06 | 0.02 |
| RMSE | 0.13 | 0.02 | 0.07 | 0.02 |
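
The Max error, Mean error and RMSE rows summarise the five per-point errors in each column. The sketch below applies the standard definitions (maximum, arithmetic mean, and root of the mean squared error) to the per-point values copied from the table; the errors are presumably in metres, like the reference heights. The names `point_errors` and `summarize` are illustrative only. Because the per-point values are themselves rounded, recomputed summaries can differ in the last digit from the published rows.

```python
import math

# Per-point absolute height errors copied from the table
# (assumed to be in metres, like the reference heights).
point_errors = {
    "Stereo": [0.155, 0.095, 0.1, 0.14, 0.09],
    "LIDAR": [0.015, 0.005, 0.03, 0.01, 0.01],
    "Naive": [0.025, 0.025, 0.07, 0.14, 0.03],
    "Interpolation": [0.025, 0.005, 0.03, 0.02, 0.0],
}


def summarize(errors):
    """Return (max, mean, RMSE) for a list of absolute errors."""
    n = len(errors)
    return (
        max(errors),
        sum(errors) / n,
        math.sqrt(sum(e * e for e in errors) / n),
    )


for method, errors in point_errors.items():
    mx, mean, rmse = summarize(errors)
    print(f"{method:>13}: max={mx:.2f} mean={mean:.2f} rmse={rmse:.2f}")
```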

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sten, G.; Feng, L.; Möller, B. Enhancing Off-Road Topography Estimation by Fusing LIDAR and Stereo Camera Data with Interpolated Ground Plane. Sensors 2025, 25, 509. https://doi.org/10.3390/s25020509
