From ADAS to Material-Informed Inspection: Review of Hyperspectral Imaging Applications on Mobile Ground Robots

Abstract

1. Introduction

- Navigation: assisting autonomous vehicles and UGVs in environment perception, terrain classification, and road condition analysis.

- Inspection and Monitoring: supporting non-destructive material analysis for various purposes.

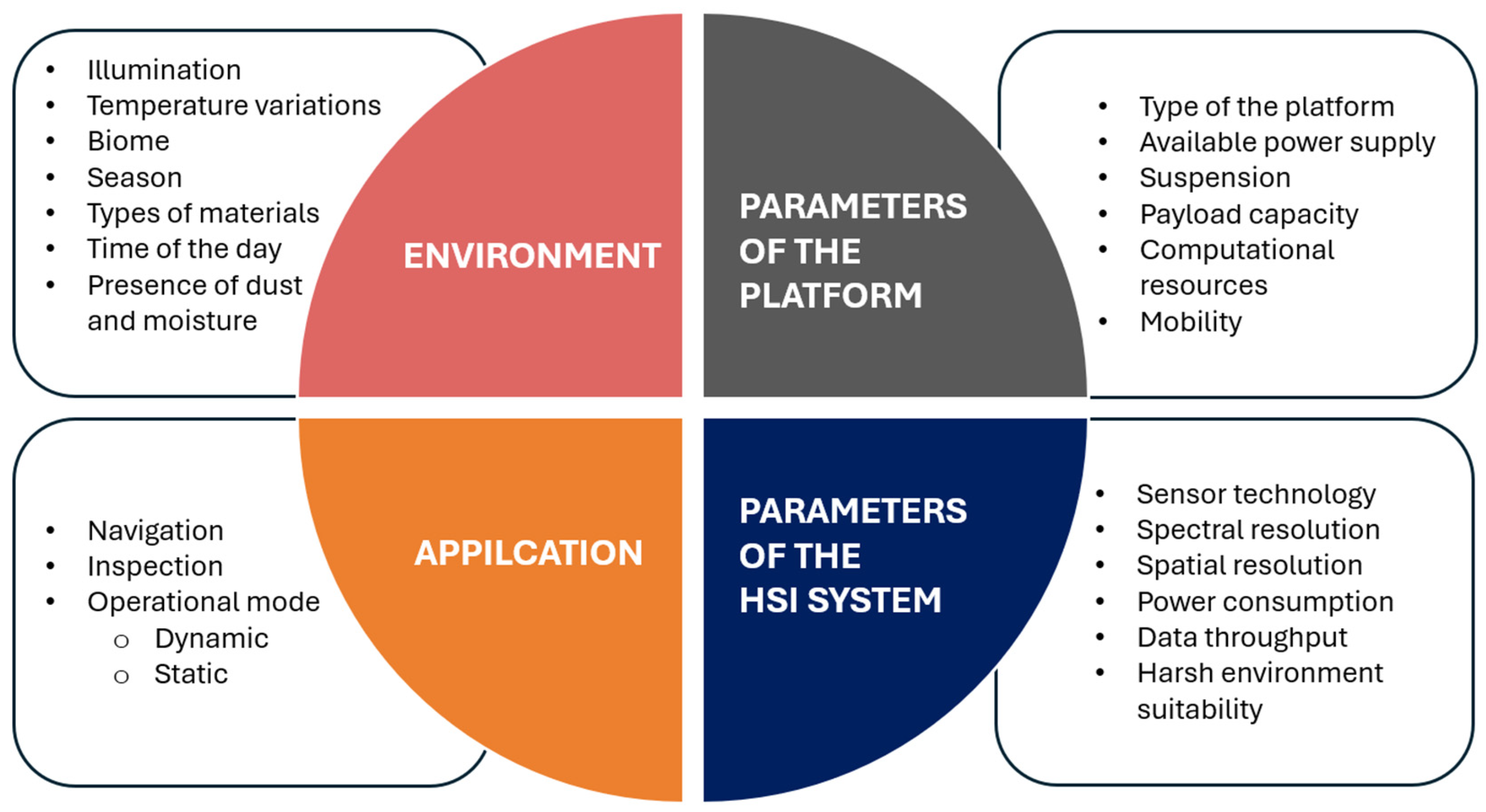

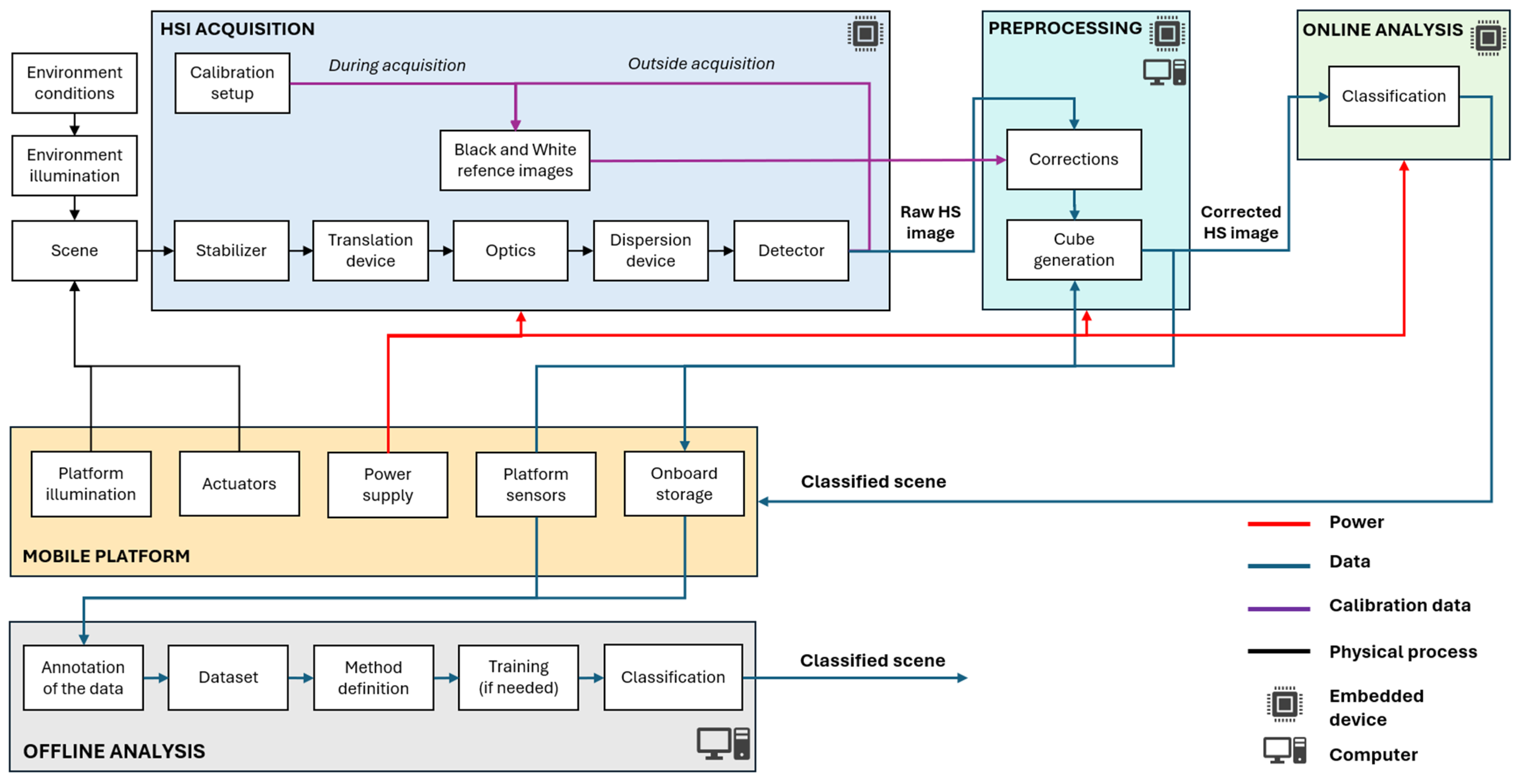

2. HSI for Mobile Platforms

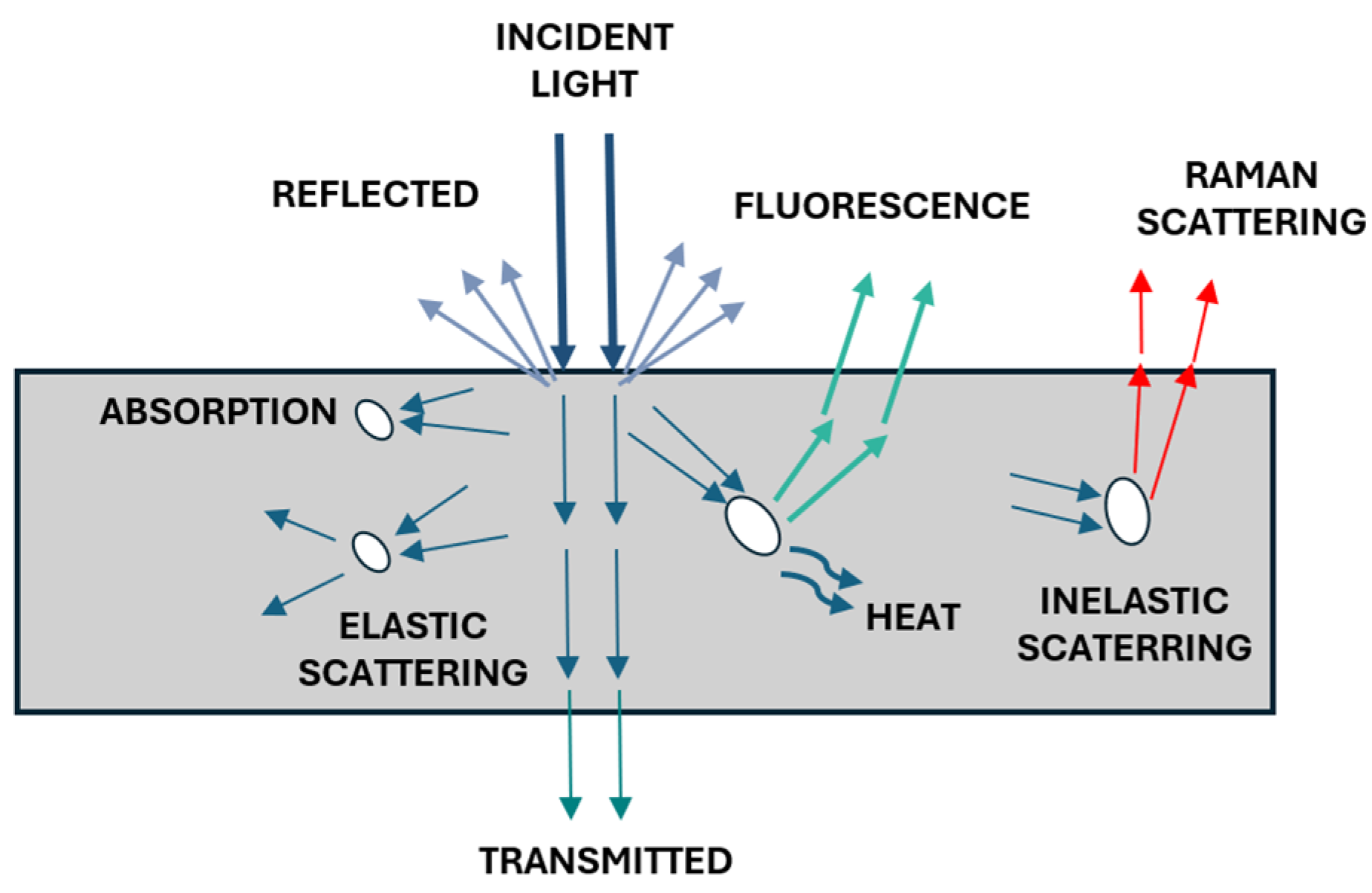

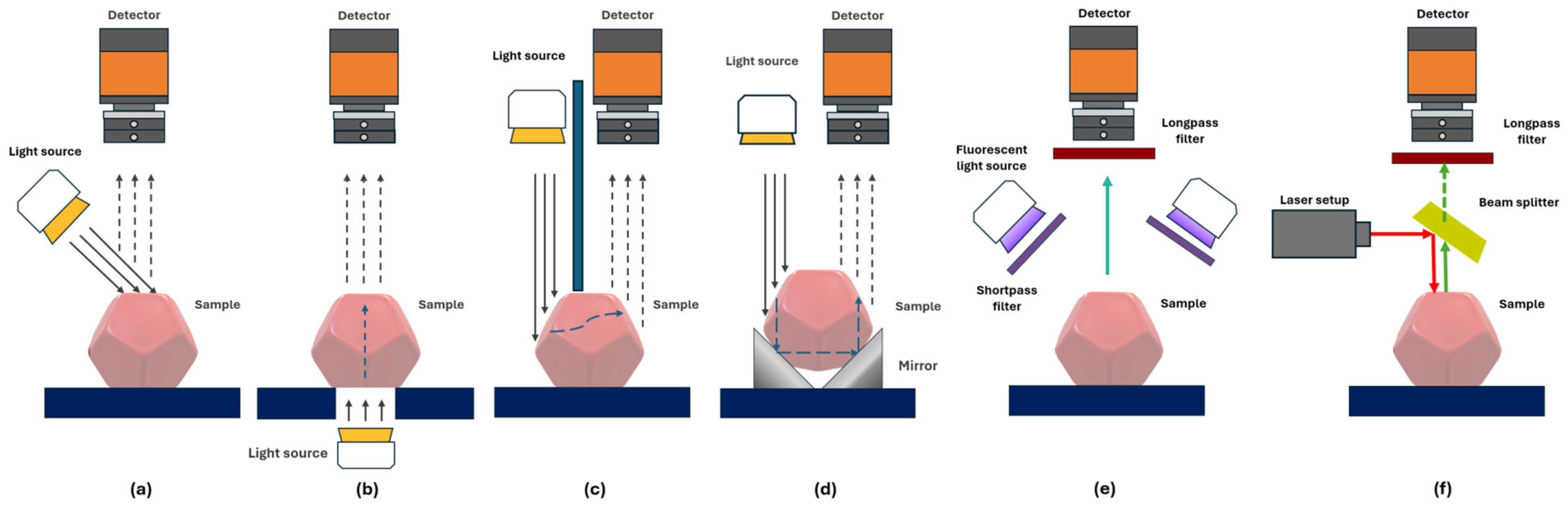

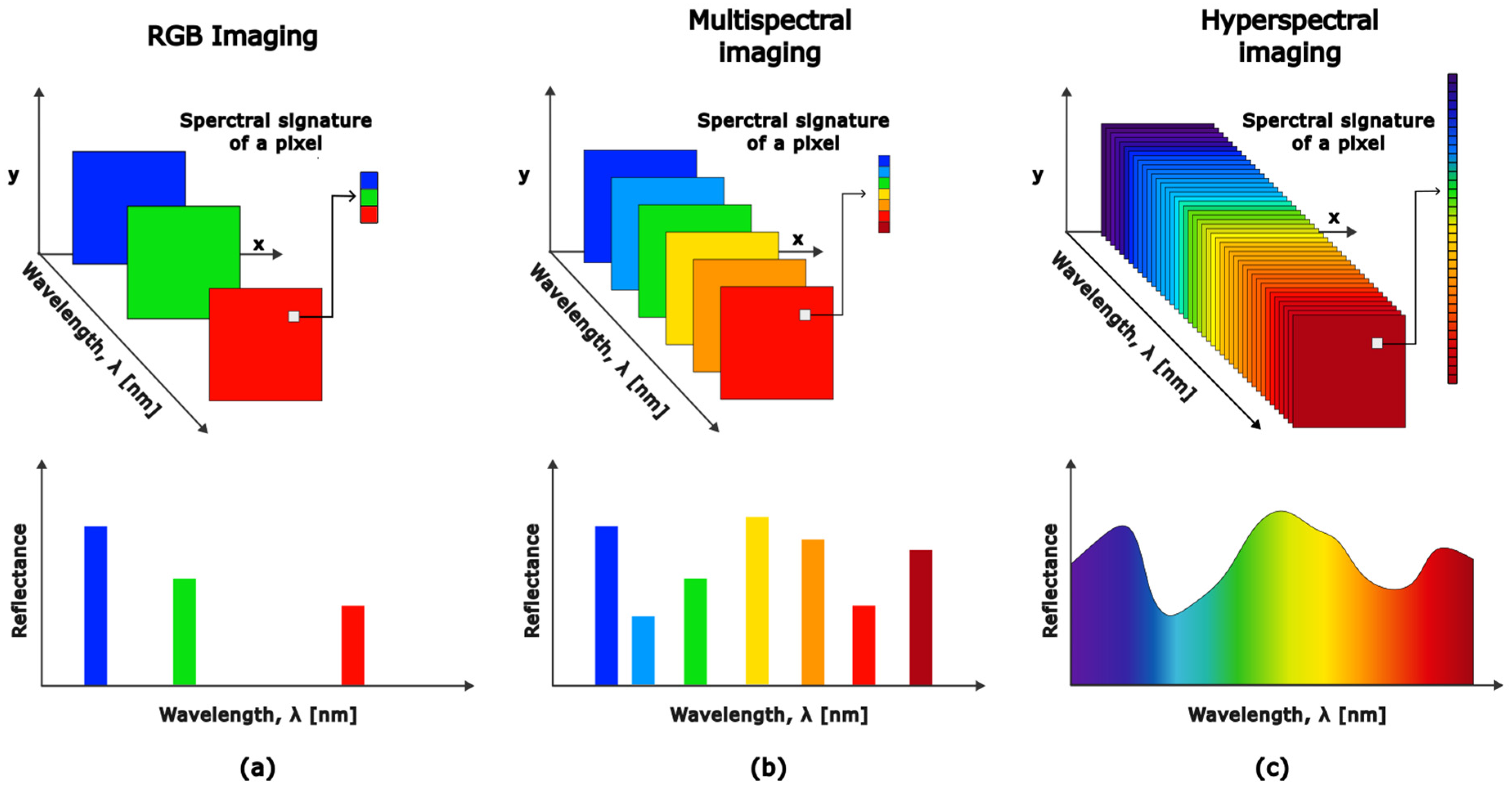

2.1. Sensing Modes

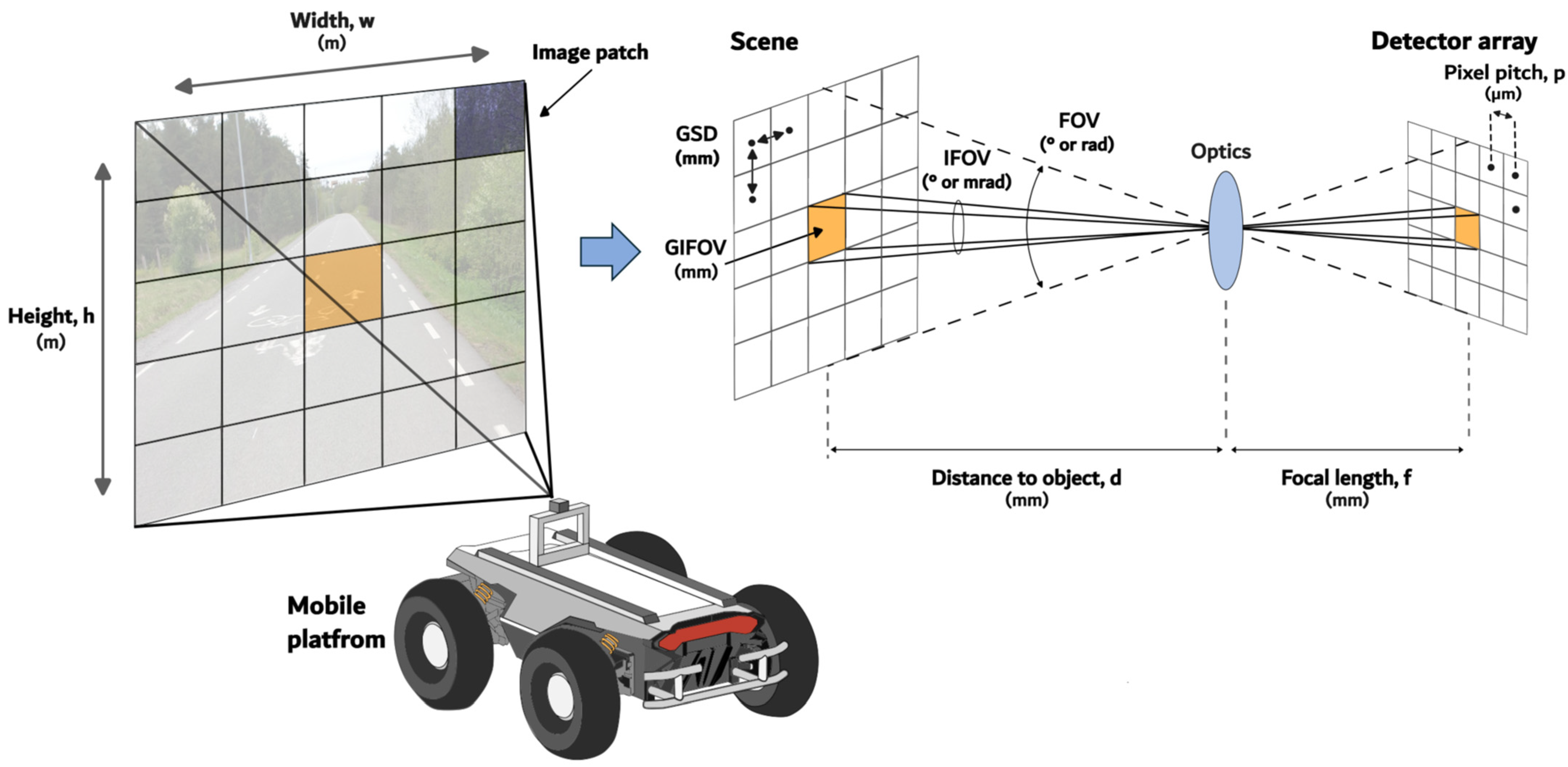

2.2. Parameters of the HSI System

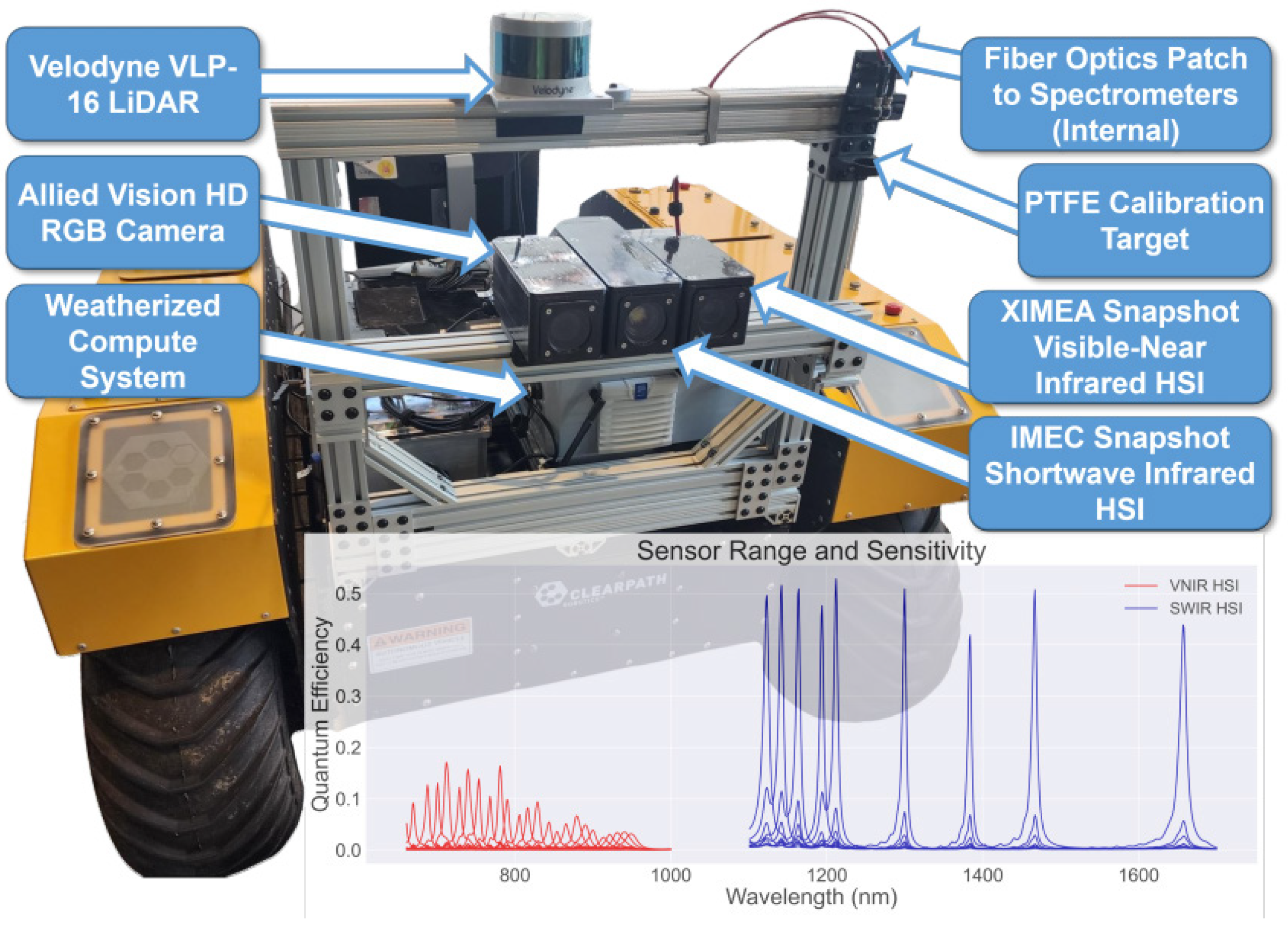

2.3. HSI Deployment on Mobile Platform

3. Components of the HSI System

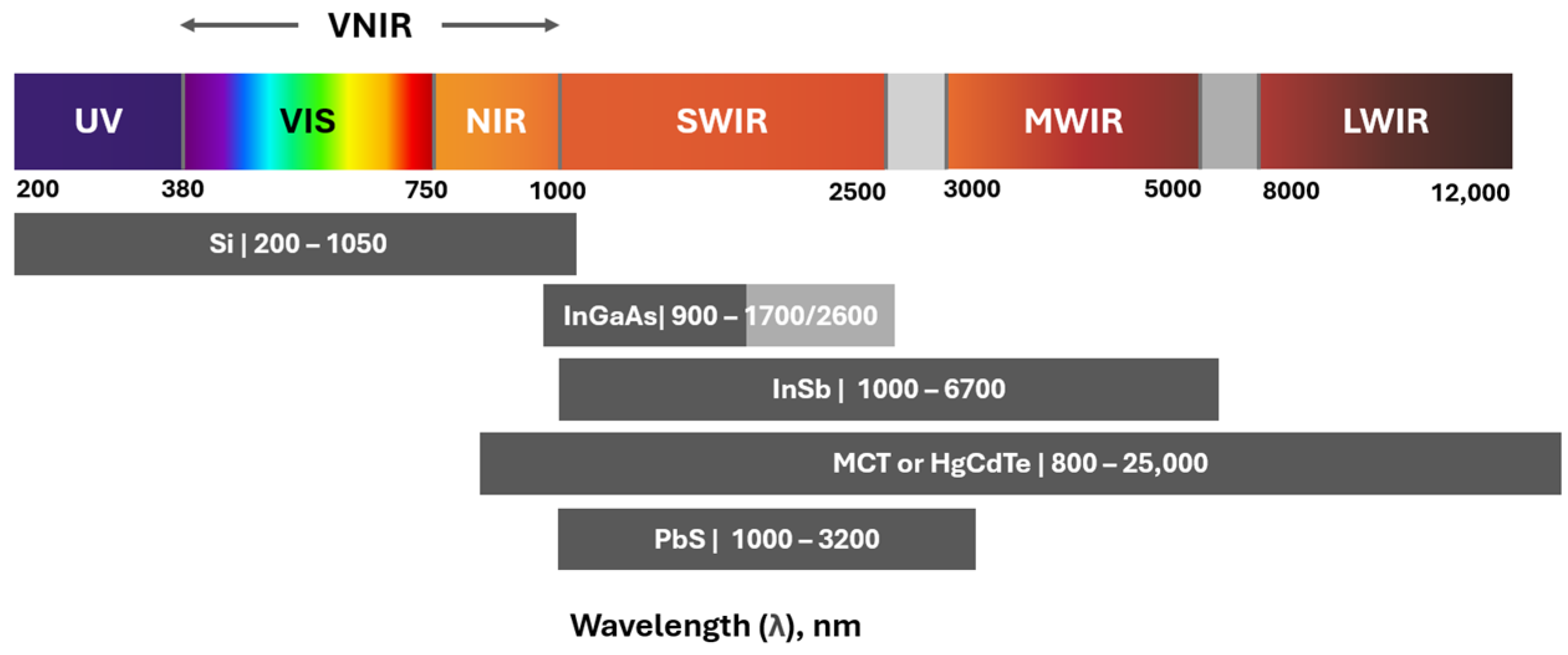

3.1. Dispersion Device and Detector

3.2. Translation Device

3.3. Illumination

3.4. Calibration

3.5. Stabilizer

4. Acquisition Modes

4.1. Point Scanning

4.2. Line Scanning

4.3. Area Scanning

4.4. Snapshot Camera

4.5. Applicability of Modes for Mobile Platforms

5. Applications of HSI on Ground Mobile Platforms

5.1. Autonomous Navigation

5.1.1. ADAS

- Dependence on road infrastructure, including markings and traffic signs;

- Rural environments and offroad navigation;

- Discrimination between visually similar objects;

- Adverse weather conditions.

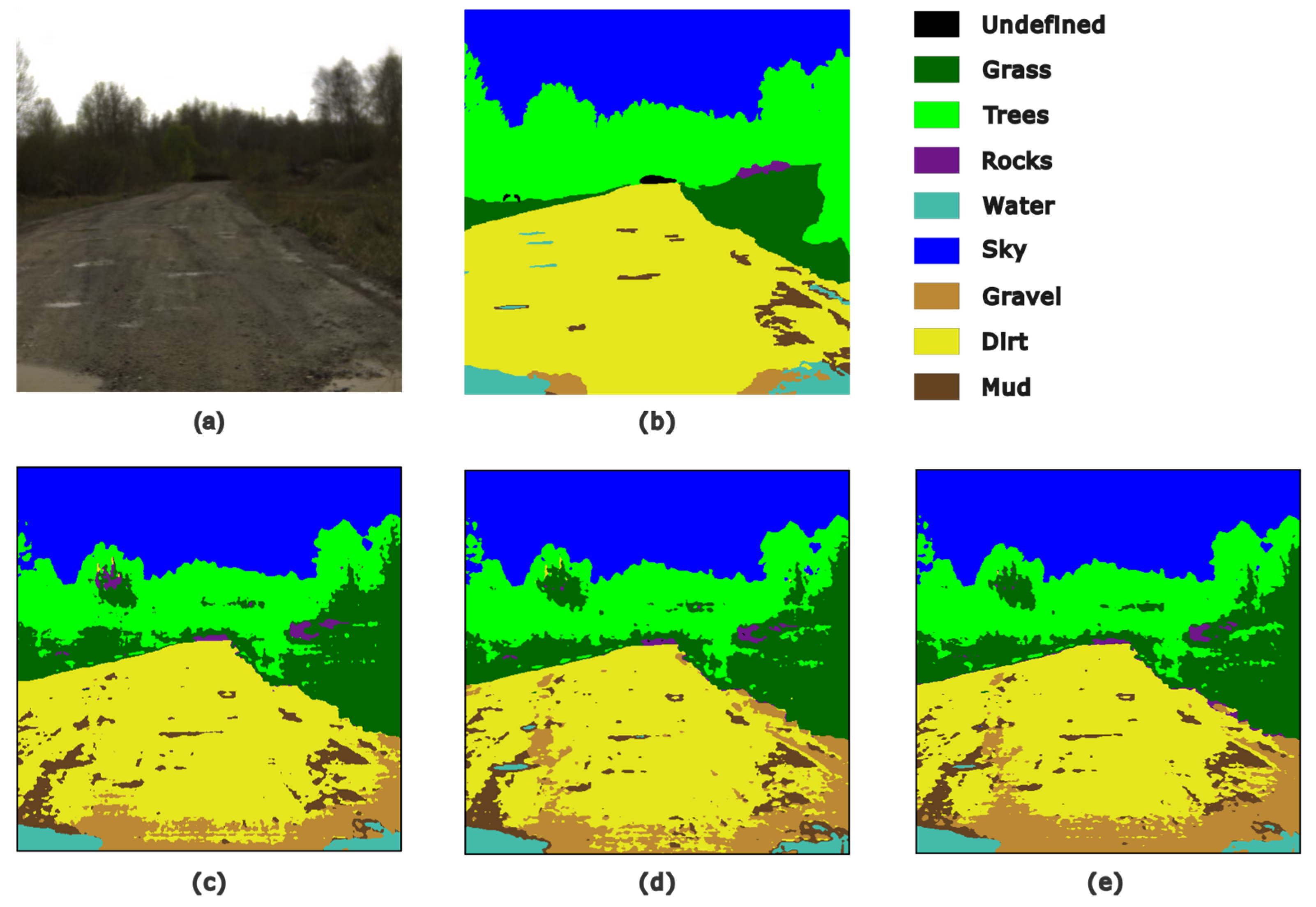

5.1.2. Offroad Navigation

5.1.3. Autonomous Driving Relevant Scenarios

5.1.4. HSI Datasets for Mobile Navigation and ADAS

- Drivability-based annotation: Each label marks a region as either drivable or non-drivable;

- Object-based annotation: Labels are based on correspondence to a predefined object category (e.g., car, building, road);

- Material-based annotation: Labels are based on the type of surface material (e.g., concrete, painted metal, asphalt, paint).

| Dataset | Year | Sensor | Manufacturer | Spectral Range (nm) | Number of Bands | Mode | Number of Classes |
|---|---|---|---|---|---|---|---|
| HyKo 1 [49] | 2017 | MQ022HG-IM-SM4X4-VIS | XIMEA GmbH, Münster, Germany | 470–630 | 15 | Snapshot | 5 classes (drivability) |
| | | MQ022HG-IM-SM5X5-NIR | XIMEA GmbH, Münster, Germany | 600–975 | 25 | Snapshot | |
| | | Qmini Wide | Broadcom Inc., San Jose, CA, USA | 225–1000 | 2500 | Point scan | |
| HyKo 2 [49] | 2017 | MQ022HG-IM-SM4X4-VIS | XIMEA GmbH, Münster, Germany | 470–630 | 15 | Snapshot | 11 classes (semantic); 9 classes (materials); 5 classes (drivability) |
| | | MQ022HG-IM-SM5X5-NIR | XIMEA GmbH, Münster, Germany | 600–975 | 25 | Snapshot | |
| | | Qmini Wide | Broadcom Inc., San Jose, CA, USA | 225–1000 | 2500 | Point scan | |
| Hyperspectral City v1.0 [94] | 2019 | Not specified | LightGene, Nanjing, China | 450–950 | 129 | Snapshot | 10 classes (semantic) |
| Hyperspectral City v2.0 [95] | 2021 | Not specified | LightGene, Nanjing, China | 450–950 | 129 | Snapshot | 19 classes (semantic) |
| HSI road [109] | 2020 | MQ022HG-IM-SM5x5 | XIMEA GmbH, Münster, Germany | 680–960 | 25 | Snapshot | 2 classes (semantic) |
| HSI drive v1.1 [92] | 2021 | MV1-D2048x1088-HS02-96-G2 | Photonfocus AG, Lachen, Switzerland | 600–975 | 25 | Snapshot | 10 classes |
| HSI drive v2 [51] | 2022 | MV1-D2048x1088-HS02-96-G2 | Photonfocus AG, Lachen, Switzerland | 600–975 | 25 | Snapshot | 10 classes |
| Hyper-Drive [50] | 2023 | Not specified | IMEC, Leuven, Belgium | 660–900 | 24 | Snapshot | 5 classes (semantic); 10 classes (materials) |
| | | Not specified | IMEC, Leuven, Belgium | 1100–1700 | 9 | Snapshot | |
| | | Pebble VIS-NIR | Ibsen Photonics, Farum, Denmark | 550–1100 | 256 | Point scan | |
| | | Pebble NIR | Ibsen Photonics, Farum, Denmark | 950–1700 | 128 | Point scan | |
| Hyperspectral image dataset of unstructured terrains for UGV perception [103,108] | 2024 | IQ | Specim, Spectral Imaging Ltd., Oulu, Finland | 400–1000 | 204 | Push broom | 9 classes (semantic) |
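Several of the snapshot sensors listed above are designated SM5X5 and deliver 25 bands. The sketch below illustrates one way such a frame could be unpacked into a band cube, assuming the sensor repeats a 5 × 5 tile of spectral filters across the detector. That layout is inferred from the model names and is not stated in this review. The function name `mosaic_to_cube` is hypothetical, and the band order within a tile and the wavelength assigned to each position are sensor-specific and not modelled here.

```python
from typing import List

Frame = List[List[int]]


def mosaic_to_cube(frame: Frame, pattern: int = 5) -> List[Frame]:
    """Split a raw mosaic frame into pattern * pattern band images.

    Assumption: the filter tile repeats every `pattern` pixels in both
    directions, so band (dr, dc) is every pattern-th pixel starting at
    row dr and column dc.
    """
    rows, cols = len(frame), len(frame[0])
    if rows % pattern or cols % pattern:
        raise ValueError("frame size must be a multiple of the pattern size")

    cube = []
    for dr in range(pattern):
        for dc in range(pattern):
            # Subsample rows, then columns, to collect one filter position.
            band = [row[dc::pattern] for row in frame[dr::pattern]]
            cube.append(band)
    return cube


if __name__ == "__main__":
    # Synthetic 10 x 10 frame whose pixel value encodes its tile position.
    frame = [[(r % 5) * 5 + (c % 5) for c in range(10)] for r in range(10)]
    cube = mosaic_to_cube(frame)
    print(len(cube), "bands of", len(cube[0]), "x", len(cube[0][0]), "pixels")
```

Each band image has 1/5 of the raw frame's resolution along each axis, which is the spatial cost of this acquisition mode. A real pipeline would more likely use an array library and the manufacturer's band-to-wavelength table.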

5.2. Inspection

5.2.1. Search and Rescue

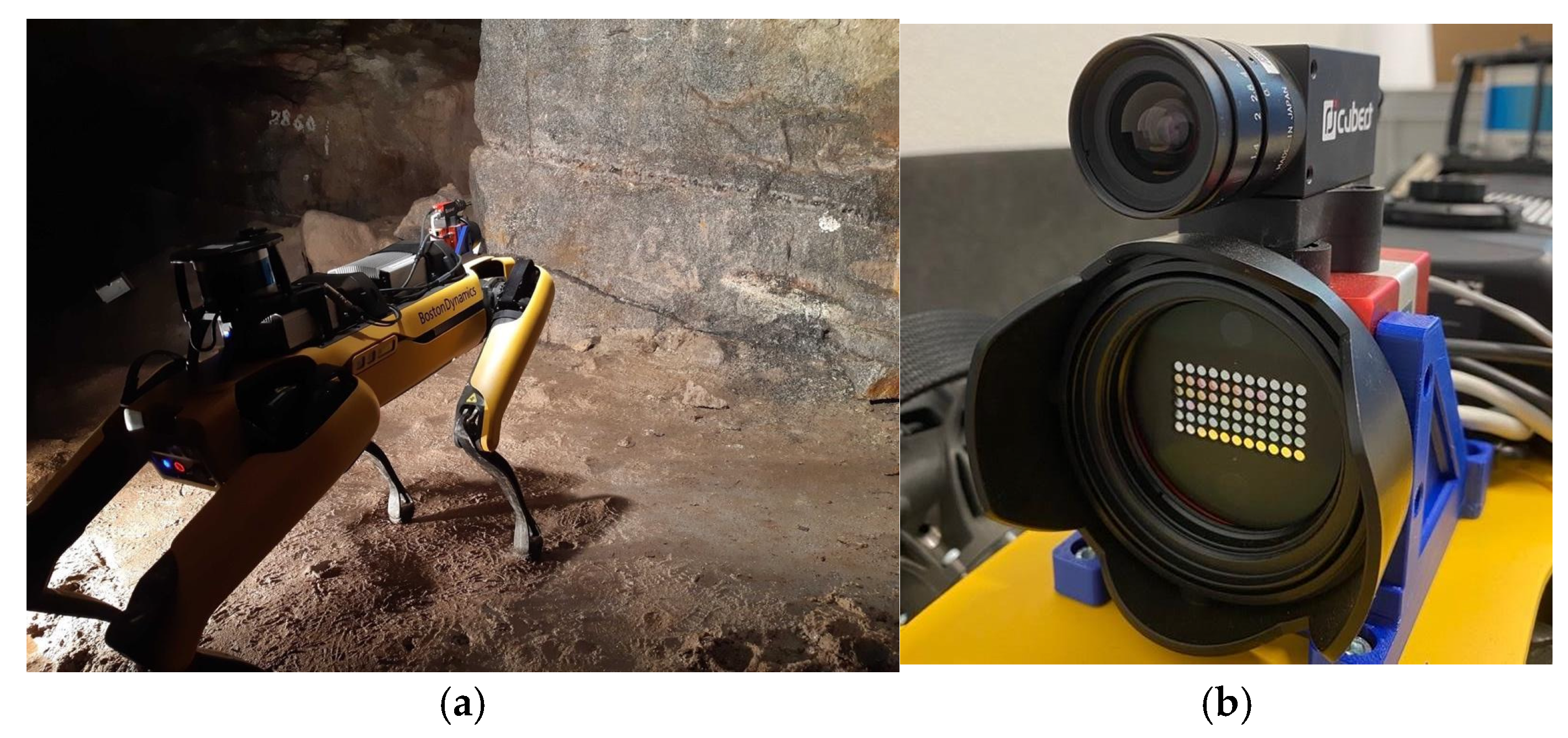

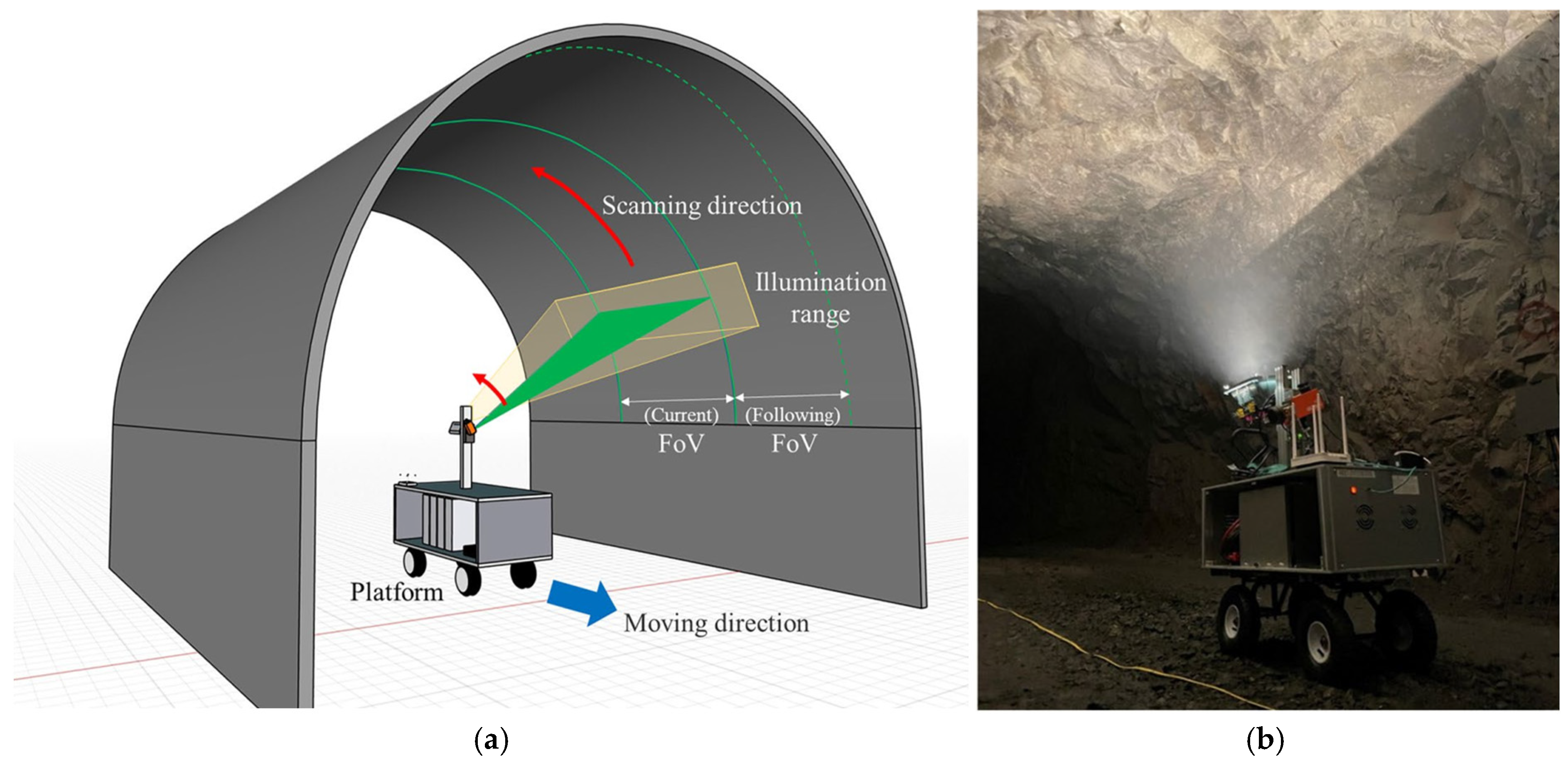

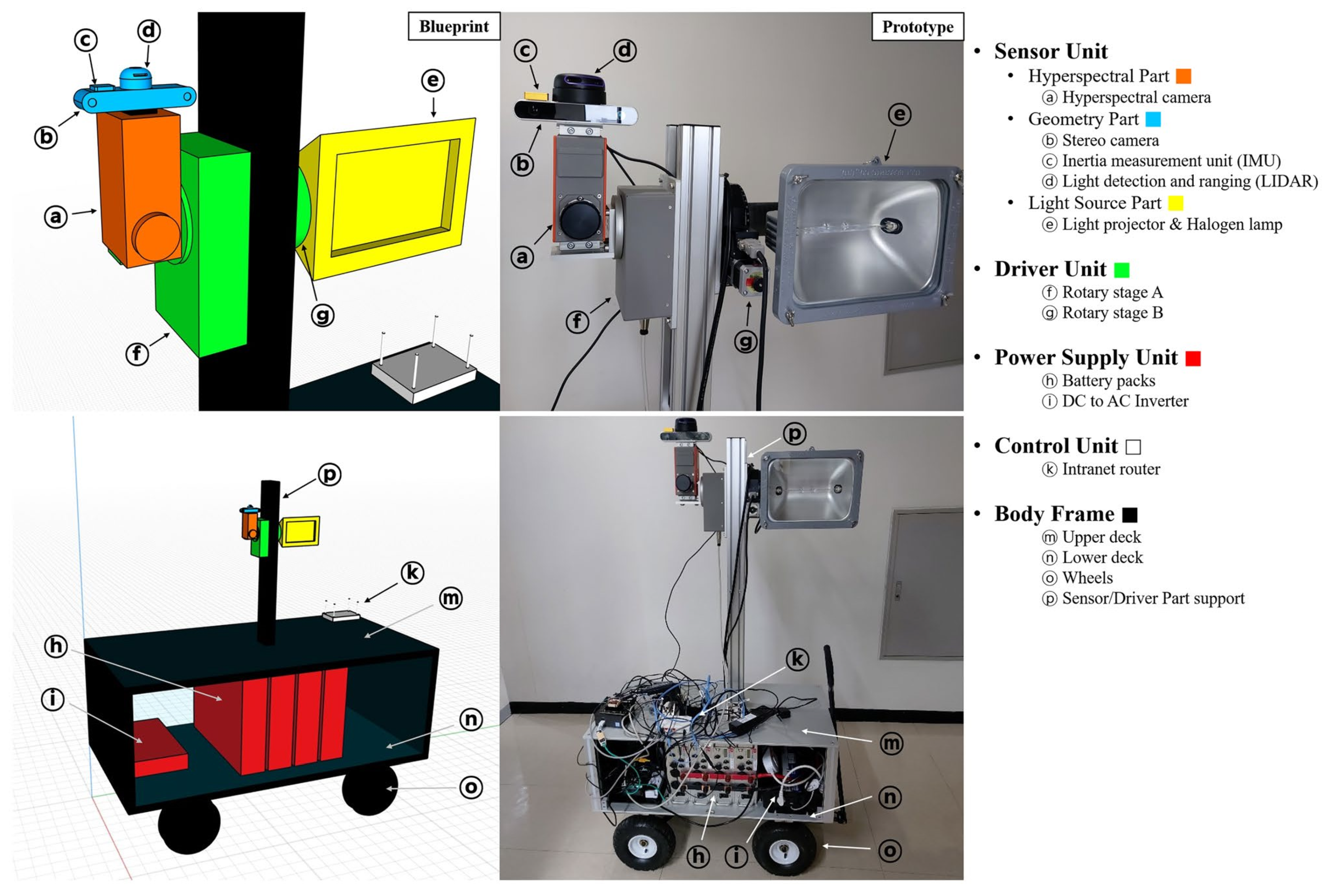

5.2.2. Mines

5.2.3. Infrastructure Inspection

6. Integration

- Managing variable illumination;

- Ensuring weatherproofing and environmental protection;

- Adapting scene acquisition to platform and task-specific requirements;

- Handling large data volumes.

6.1. Illumination

6.1.1. Outdoor Conditions

- White reference calibration;

- Design of the calibration setup;

- Manual exposure time adjustment;

- Active illumination.
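White reference calibration is commonly expressed as a per-band ratio, R = (raw − dark) / (white − dark), where the dark frame is recorded with the sensor covered and the white frame is recorded from a reference target under the scene illumination. The sketch below applies this standard formula to a single spectrum. It is a minimal illustration rather than the procedure of any system reviewed here, and the function name `calibrate_reflectance` and the sample digital numbers are hypothetical.

```python
from typing import List, Sequence


def calibrate_reflectance(
    raw: Sequence[float],
    white: Sequence[float],
    dark: Sequence[float],
    eps: float = 1e-9,
) -> List[float]:
    """Convert raw digital numbers to relative reflectance, band by band."""
    if not (len(raw) == len(white) == len(dark)):
        raise ValueError("raw, white, and dark must have the same number of bands")

    reflectance = []
    for r, w, d in zip(raw, white, dark):
        span = w - d
        if abs(span) < eps:
            # White and dark readings coincide: the band carries no usable signal.
            reflectance.append(float("nan"))
        else:
            reflectance.append((r - d) / span)
    return reflectance


if __name__ == "__main__":
    # Hypothetical three-band spectrum in digital numbers (DN).
    raw = [1200.0, 2100.0, 900.0]
    white = [4100.0, 4100.0, 4100.0]
    dark = [100.0, 100.0, 100.0]
    print(calibrate_reflectance(raw, white, dark))
```

Outdoors the white reference would need to be re-acquired whenever the illumination changes, which is one reason the other listed measures (calibration setup design, exposure adjustment, active illumination) are considered alongside it.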

6.1.2. Indoor Conditions

6.2. Weather Protection and Optical System Contamination

6.3. Platform-Specific Scene Acquisition Approaches

6.4. Constraints Defined by the Size of Data

6.4.1. Data Storage
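To give a sense of scale for the storage constraint, the following sketch estimates the uncompressed size of one hyperspectral cube and the resulting data rate. The 204-band figure matches the push broom sensor in the dataset table. The 512 × 512 spatial size, the 12-bit depth, and the acquisition rate are assumptions chosen for illustration, not values taken from this review.

```python
import math


def cube_size_bytes(width: int, height: int, bands: int, bits_per_sample: int) -> int:
    """Uncompressed cube size, with each sample padded to whole bytes."""
    bytes_per_sample = math.ceil(bits_per_sample / 8)
    return width * height * bands * bytes_per_sample


def data_rate_bytes_per_s(cube_bytes: int, cubes_per_second: float) -> float:
    """Sustained write rate needed to store every acquired cube."""
    return cube_bytes * cubes_per_second


if __name__ == "__main__":
    # Assumed geometry: 512 x 512 pixels, 204 bands, 12-bit samples.
    size = cube_size_bytes(512, 512, 204, 12)
    print(f"one cube: {size / 1e6:.1f} MB")

    # Assumed rate: one cube every 10 seconds.
    rate = data_rate_bytes_per_s(size, 0.1)
    print(f"sustained rate: {rate / 1e6:.1f} MB/s")
```

Under these assumptions a single cube is roughly 107 MB, so an hour of continuous acquisition at one cube every ten seconds would need on the order of 38 GB before any compression or band selection.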

6.4.2. Embedded Processing

7. Discussion

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| 2D | Two dimensional |

| 3D | Three dimensional |

| AGAS | Active Vision Group |

| ACI | Asphalt Crack Index |

| ADAS | Advanced Driver Assistance System |

| ANN | Artificial Neural Network |

| AOTF | Acousto-Optical Tunable Filters |

| CCD | Charge-Coupled Device |

| CMOS | Complementary Metal-Oxide Semiconductor |

| DN | Digital Number |

| DVE | Degraded Visual Environment |

| EIS | Electrical Impedance Spectroscopy |

| FCN | Fully Convolutional Network |

| FPGA | Field Programmable Gate Array |

| FOV | Field of View |

| FTIR | Fourier Transform Infrared Spectroscopy |

| FCLS | Fully Constrained Least Squares |

| Ga | Gallium |

| GIFOV | Ground Instantaneous Field of View |

| GSD | Ground Sample Distance |

| HSI | Hyperspectral Imaging |

| IFOV | Instantaneous Field of View |

| IMU | Inertial Measurement Unit |

| In | Indium |

| HgCdTe | Mercury Cadmium Telluride |

| InGaAs | Indium Gallium Arsenide |

| InSb | Indium Antimonide |

| IR | Infrared |

| JPL | Jet Propulsion Laboratory |

| LCTF | Liquid Crystal Tunable Filter |

| LED | Light-Emitting Diode |

| LiDAR | Light Detection and Ranging |

| LWIR | Long-Wave Infrared |

| MNF | Minimum Noise Fraction |

| MSI | Multispectral Imaging |

| MWIR | Mid-Wave Infrared |

| NASA | National Aeronautics and Space Administration |

| NIR | Near-Infrared |

| PCA | Principal Component Analysis |

| PCI | Pavement Condition Index |

| PLSDA | Partial Least Squares Discriminant Analysis |

| RGB | Red–Green–Blue |

| Si | Silicon |

| Si:As | Silicon doped with Arsenic |

| SNR | Signal-to-Noise Ratio |

| SWIR | Short-Wave Infrared |

| SVM | Support Vector Machine |

| Te | Tellurium |

| UAV | Unmanned Aerial Vehicle |

| UGV | Unmanned Ground Vehicle |

| UV | Ultraviolet |

| VIS | Visible Light |

| VNIR | Visible and Near-Infrared |

| XRD | X-ray Diffraction |

References

- Vane, G.; Goetz, A.F.H. Proceedings of the Airborne Imaging Spectrometer Data Analysis Workshop; JPL Publication: Pasadena, CA, USA, 1985; pp. 85–126. [Google Scholar]

- Goetz, A.F.H. Three decades of hyperspectral remote sensing of the Earth: A personal view. Remote Sens. Environ. 2009, 113 (Suppl. S1), S5–S16. [Google Scholar] [CrossRef]

- Yoon, J. Hyperspectral Imaging for Clinical Applications. BioChip J. 2022, 16, 1–12. [Google Scholar] [CrossRef]

- Ram, B.G.; Oduor, P.; Igathinathane, C.; Howatt, K.; Sun, X. A systematic review of hyperspectral imaging in precision agriculture: Analysis of its current state and future prospects. Comput. Electron. Agric. 2024, 222, 109037. [Google Scholar] [CrossRef]

- Tamin, O.; Moung, E.G.; Dargham, J.A.; Yahya, F.; Omatu, S. A review of hyperspectral imaging-based plastic waste detection state-of-the-arts. Int. J. Electr. Comput. Eng. (IJECE) 2023, 13, 3407–3419. [Google Scholar] [CrossRef]

- Stuart, M.B.; McGonigle, A.J.S.; Willmott, J.R. Hyperspectral Imaging in Environmental Monitoring: A Review of Recent Developments and Technological Advances in Compact Field Deployable Systems. Sensors 2019, 19, 3071. [Google Scholar] [CrossRef]

- Yel, S.G.; Gormus, E.T. Exploiting hyperspectral and multispectral images in the detection of tree species: A review. Front. Remote Sens. 2023, 4, 1136289. [Google Scholar] [CrossRef]

- Wu, D.; Sun, D.W. Advanced applications of hyperspectral imaging technology for food quality and safety analysis and assessment: A review—Part I: Fundamentals. Innov. Food Sci. Emerg. Technol. 2013, 19, 1–14. [Google Scholar] [CrossRef]

- Liu, L.; Miteva, T.; Delnevo, G.; Mirri, S.; Walter, P.; de Viguerie, L.; Pouyet, E. Neural Networks for Hyperspectral Imaging of Historical Paintings: A Practical Review. Sensors 2023, 23, 2419. [Google Scholar] [CrossRef]

- Shimoni, M.; Haelterman, R.; Perneel, C. Hyperspectral imaging for military and security applications: Combining myriad processing and sensing techniques. IEEE Geosci. Remote Sens. Mag. 2019, 7, 101–117. [Google Scholar] [CrossRef]

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110. [Google Scholar] [CrossRef]

- Zhang, Z.; Huang, L.; Wang, Q.; Jiang, L.; Qi, Y.; Wang, S.; Shen, T.; Tang, B.-H.; Gu, Y. UAV Hyperspectral Remote Sensing Image Classification: A Systematic Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 18, 3099–3124. [Google Scholar] [CrossRef]

- Peyghambari, S.; Zhang, Y. Hyperspectral remote sensing in lithological mapping, mineral exploration, and environmental geology: An updated review. J. Appl. Remote Sens. 2021, 15, 031501. [Google Scholar] [CrossRef]

- Aburaed, N.; Alkhatib, M.Q.; Marshall, S.; Zabalza, J.; Al Ahmad, H. A Review of Spatial Enhancement of Hyperspectral Remote Sensing Imaging Techniques. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2275–2300. [Google Scholar] [CrossRef]

- Xu, R.; Li, C. A Review of High-Throughput Field Phenotyping Systems: Focusing on Ground Robots. Plant Phenomics 2022, 2022, 9760269. [Google Scholar] [CrossRef] [PubMed]

- Lu, B.; Dao, P.D.; Liu, J.; He, Y.; Shang, J. Recent Advances of Hyperspectral Imaging Technology and Applications in Agriculture. Remote Sens. 2020, 12, 2659. [Google Scholar] [CrossRef]

- Audebert, N.; Le Saux, B.; Lefevre, S. Deep learning for classification of hyperspectral data: A comparative review. IEEE Geosci. Remote Sens. Mag. 2019, 7, 159–173. [Google Scholar] [CrossRef]

- Benelli, A.; Cevoli, C.; Fabbri, A. In-field hyperspectral imaging: An overview on the ground-based applications in agriculture. J. Agric. Eng. 2020, 51, 129–139. [Google Scholar] [CrossRef]

- Ahmed, M.R.; Yasmin, J.; Mo, C.; Lee, H.; Kim, M.S.; Hong, S.-J.; Cho, B.-K. Outdoor Applications of Hyperspectral Imaging Technology for Monitoring Agricultural Crops: A Review. J. Biosyst. Eng. 2016, 41, 396–407. [Google Scholar] [CrossRef]

- Qian, S.E. Hyperspectral Satellites, Evolution, and Development History. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7032–7056. [Google Scholar] [CrossRef]

- Lu, Y.; Saeys, W.; Kim, M.; Peng, Y.; Lu, R. Hyperspectral imaging technology for quality and safety evaluation of horticultural products: A review and celebration of the past 20-year progress. Postharvest Biol. Technol. 2020, 170, 111318. [Google Scholar] [CrossRef]

- Vignati, S.; Tugnolo, A.; Giovenzana, V.; Pampuri, A.; Casson, A.; Guidetti, R.; Beghi, R. Hyperspectral Imaging for Fresh-Cut Fruit and Vegetable Quality Assessment: Basic Concepts and Applications. Appl. Sci. 2023, 13, 9740. [Google Scholar] [CrossRef]

- Lu, G.; Fei, B. Medical hyperspectral imaging: A review. J. Biomed. Opt. 2014, 19, 010901. [Google Scholar] [CrossRef]

- Munera, S.; Blasco, J.; Amigo, J.M.; Cubero, S.; Talens, P.; Aleixos, N. Use of hyperspectral transmittance imaging to evaluate the internal quality of nectarines. Biosyst. Eng. 2019, 182, 54–64. [Google Scholar] [CrossRef]

- Femenias, A.; Gatius, F.; Ramos, A.J.; Teixido-Orries, I.; Marín, S. Hyperspectral imaging for the classification of individual cereal kernels according to fungal and mycotoxins contamination: A review. Food Res. Int. 2022, 155, 111102. [Google Scholar] [CrossRef]

- Meléndez, J.; Guarnizo, G.; Abramski, K.M. Fast Quantification of Air Pollutants by Mid-Infrared Hyperspectral Imaging and Principal Component Analysis. Sensors 2021, 21, 2092. [Google Scholar] [CrossRef] [PubMed]

- Ariana, D.P.; Lu, R. Quality evaluation of pickling cucumbers using hyperspectral reflectance and transmittance imaging: Part I. Development of a prototype. Sens. Instrum. Food Qual. Saf. 2008, 2, 144–151. [Google Scholar] [CrossRef]

- Kim, Y.-K.; Baek, I.; Lee, K.-M.; Qin, J.; Kim, G.; Shin, B.K.; Chan, D.E.; Herrman, T.J.; Cho, S.-K.; Kim, M.S. Investigation of reflectance, fluorescence, and Raman hyperspectral imaging techniques for rapid detection of aflatoxins in ground maize. Food Control 2022, 132, 108479. [Google Scholar] [CrossRef]

- Rodrigues e Brito, L.; Chaves, A.B.; Braz, A.; Pimentel, M.F. Raman hyperspectral imaging and a novel approach for objective determination of the order of crossing ink lines. Spectrochim. Acta A Mol. Biomol. Spectrosc. 2019, 223, 117287. [Google Scholar] [CrossRef]

- Keef, J.L. Hyper-Spectral Sensor Calibration Extrapolated from Multi-Spectral Measurements. 2008. Available online: https://repository.arizona.edu/handle/10150/193627 (accessed on 5 January 2025).

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.M.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36. [Google Scholar] [CrossRef]

- Papp, A.; Pegoraro, J.; Bauer, D.; Taupe, P.; Wiesmeyr, C.; Kriechbaum-Zabini, A. Automatic annotation of hyperspectral images and spectral signal classification of people and vehicles in areas of dense vegetation with deep learning. Remote Sens. 2020, 12, 2111. [Google Scholar] [CrossRef]

- Gardner, C.W.; Wentworth, R.; Treado, P.J.; Batavia, P.; Gilbert, G. Remote chemical biological and explosive agent detection using a robot-based Raman detector. Unmanned Syst. Technol. X 2008, 6962, 69620T. [Google Scholar] [CrossRef]

- Noh, H.K.; Lu, R. Hyperspectral laser-induced fluorescence imaging for assessing apple fruit quality. Postharvest Biol. Technol. 2007, 43, 193–201. [Google Scholar] [CrossRef]

- Zou, Z.; Wang, Q.; Wu, Q.; Li, M.; Zhen, J.; Yuan, D.; Xiao, Y.; Xu, C.; Yin, S.; Zhou, M.; et al. Fluorescence hyperspectral imaging technology combined with chemometrics for kiwifruit quality attribute assessment and non-destructive judgment of maturity. Talanta 2024, 280, 126793. [Google Scholar] [CrossRef] [PubMed]

- Schwaiger, S.; Muster, L.; Scherl, A.; Trivisonne, P.; Wöber, W.; Thalhammer, S. Towards full autonomy in mobile robot navigation and manipulation. e+i Elektrotechnik Und Informationstechnik 2024, 141, 400–410. [Google Scholar] [CrossRef]

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Pearson Education: London, UK, 2008. [Google Scholar]

- Bhargava, A.; Sachdeva, A.; Sharma, K.; Alsharif, M.H.; Uthansakul, P.; Uthansakul, M. Hyperspectral imaging and its applications: A review. Heliyon 2024, 10, e33208. [Google Scholar] [CrossRef] [PubMed]

- Dalgleish, F.R.; Caimi, F.M.; Mazel, C.H.; Glynn, J.M. Extended Range Underwater Optical Imaging Architecture. In Proceedings of the OCEANS 2006, Boston, MA, USA, 18–21 September 2006; pp. 1–5. [Google Scholar] [CrossRef]

- Segovia, I.; Pliego, A.; Papaelias, M.; Márquez, F.P.G. Optimal management of marine inspection with autonomous underwater vehicles. In Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2020; pp. 760–771. [Google Scholar] [CrossRef]

- Rogass, C.; Koerting, F.M.; Mielke, C.; Brell, M.; Boesche, N.K.; Bade, M.; Hohmann, C. Translational imaging spectroscopy for proximal sensing. Sensors 2017, 17, 1857. [Google Scholar] [CrossRef] [PubMed]

- Verhoeven, G. Resolving some spatial resolution issues—Part 1: Between line pairs and sampling distance. AARGnews 2018, 57, 25–34. [Google Scholar] [CrossRef]

- Théau, J. Temporal Resolution. In Encyclopedia of GIS; Shekhar, S., Xiong, H., Eds.; Springer: Boston, MA, USA, 2008; pp. 1150–1151. [Google Scholar] [CrossRef]

- Jakob, S.; Zimmermann, R.; Gloaguen, R. The Need for Accurate Geometric and Radiometric Corrections of Drone-Borne Hyperspectral Data for Mineral Exploration: MEPHySTo—A Toolbox for Pre-Processing Drone-Borne Hyperspectral Data. Remote Sens. 2017, 9, 88. [Google Scholar] [CrossRef]

- Liu, D.; Zeng, X.-A.; Sun, D.-W. Recent Developments and Applications of Hyperspectral Imaging for Quality Evaluation of Agricultural Products: A Review. Crit. Rev. Food Sci. Nutr. 2015, 55, 1744–1757. [Google Scholar] [CrossRef]

- Gowen, A.A.; O’Donnell, C.P.; Cullen, P.J.; Downey, G.; Frias, J.M. Hyperspectral imaging—An emerging process analytical tool for food quality and safety control. Trends Food Sci. Technol. 2007, 18, 590–598. [Google Scholar] [CrossRef]

- Gutiérrez-Zaballa, J.; Basterretxea, K.; Echanobe, J.; Martínez, M.V.; Martinez-Corral, U.; Mata-Carballeira, Ó.; del Campo, I. On-chip hyperspectral image segmentation with fully convolutional networks for scene understanding in autonomous driving. J. Syst. Archit. 2023, 139, 102878. [Google Scholar] [CrossRef]

- Tran, M.H.; Fei, B. Compact and ultracompact spectral imagers: Technology and applications in biomedical imaging. J. Biomed. Opt. 2023, 28, 040901. [Google Scholar] [CrossRef]

- Winkens, C.; Sattler, F.; Adams, V.; Paulus, D. HyKo: A Spectral Dataset for Scene Understanding. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops, ICCVW 2017, Venice, Italy, 22–29 October 2017; pp. 254–261. [Google Scholar] [CrossRef]

- Hanson, N.; Pyatski, B.; Hibbard, S.; Dimarzio, C.; Padir, T. Hyper-Drive: Visible-Short Wave Infrared Hyperspectral Imaging Datasets for Robots in Unstructured Environments. In Proceedings of the 2023 13th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Athens, Greece, 31 October–2 November 2023. [Google Scholar] [CrossRef]

- Gutierrez-Zaballa, J.; Basterretxea, K.; Echanobe, J.; Martinez, M.V.; Martinez-Corral, U. HSI-Drive v2.0: More Data for New Challenges in Scene Understanding for Autonomous Driving. In Proceedings of the 2023 IEEE Symposium Series on Computational Intelligence (SSCI), Mexico City, Mexico, 5–8 December 2023; pp. 207–214. [Google Scholar] [CrossRef]

- Antson, L.; Vandenhoeke, A.; Shimoni, M.; Hamesse, C.; Luong, H. Detection and Tracking of Search and Rescue Personnel under Hindered Light Conditions Using Hyperspectral Imaging. In Proceedings of the 2022 12th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Rome, Italy, 13–16 September 2022. [Google Scholar] [CrossRef]

- Blanch-Perez-del-Notario, C.; Luthman, S.; Lefrant, R.; Gonzalez, P.; Lambrechts, A. Compact high-speed snapshot hyperspectral imager in the SWIR range (1.1–1.65 µm) and its potential in sorting/recycling industry. Proc. SPIE 2022, 12094, 1209407. [Google Scholar] [CrossRef]

- MacCormac, O.; Noonan, P.; Janatka, M.; Horgan, C.C.; Bahl, A.; Qiu, J.; Elliot, M.; Trotouin, T.; Jacobs, J.; Patel, S.; et al. Lightfield hyperspectral imaging in neuro-oncology surgery: An IDEAL 0 and 1 study. Front. Neurosci. 2023, 17, 1239764. [Google Scholar]

- Cui, Q.; Park, J.; Smith, R.T.; Gao, L. Snapshot hyperspectral light field imaging using image mapping spectrometry. Opt. Lett. 2020, 45, 772. [Google Scholar] [CrossRef]

- Wisotzky, E.L.; Eisert, P.; Hilsmann, A. 3D Hyperspectral Light-Field Imaging: A first intraoperative implementation. Curr. Dir. Biomed. Eng. 2023, 9, 611–614. [Google Scholar] [CrossRef]

- Manolakis, D.; Pieper, M.; Truslow, E.; Lockwood, R.; Weisner, A.; Jacobson, J.; Cooley, T. Longwave infrared hyperspectral imaging: Principles, progress, and challenges. IEEE Geosci. Remote Sens. Mag. 2019, 7, 72–100. [Google Scholar] [CrossRef]

- Jørgensen, A.L.; Kjelstrup-Hansen, J.; Jensen, B.; Petrunin, V.; Fink, S.F.; Jørgensen, B. Acquisition and Analysis of Hyperspectral Thermal Images for Sample Segregation. Appl. Spectrosc. 2021, 75, 317–324. [Google Scholar] [CrossRef] [PubMed]

- Hanson, N.; Prajapati, S.; Tukpah, J.; Mewada, Y.; Padir, T. Forest Biomass Mapping with Terrestrial Hyperspectral Imaging for Wildfire Risk Monitoring. In Proceedings of the 2024 IEEE International Symposium on Safety Security Rescue Robotics (SSRR), New York, NY, USA, 12–14 November 2024; pp. 178–183. [Google Scholar] [CrossRef]

- Feng, H.; Chen, G.; Xiong, L.; Liu, Q.; Yang, W. Accurate digitization of the chlorophyll distribution of individual rice leaves using hyperspectral imaging and an integrated image analysis pipeline. Front. Plant Sci. 2017, 8, 1238. [Google Scholar] [CrossRef]

- Hanson, N.; Kelestemur, T.; Berman, J.; Ritzenhoff, D.; Padir, T. Hyperbot-A Benchmarking Testbed for Acquisition of Robot-Centric Hyperspectral Scene and In-Hand Object Data. In Proceedings of the 2022 12th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Rome, Italy, 13–16 September 2022. [Google Scholar] [CrossRef]

- Tao, J.; Gu, Y.; Hao, X.; Liang, R.; Wang, B.; Cheng, Z.; Yan, B.; Chen, G. Combination of hyperspectral imaging and machine learning models for fast characterization and classification of municipal solid waste. Resour. Conserv. Recycl. 2023, 188, 106731. [Google Scholar] [CrossRef]

- Zahavi, A.; Palshin, A.; Liyanage, D.C.; Tamre, M. Influence of illumination sources on hyperspectral imaging. In Proceedings of the 2019 20th International Conference on Research and Education in Mechatronics (REM), Wels, Austria, 23–24 May 2019. [Google Scholar] [CrossRef]

- Moghadam, P.A.; Sharma, N.; Hefeeda, M. Enabling hyperspectral imaging in diverse illumination conditions for indoor applications. In Proceedings of the 12th ACM Multimedia Systems Conference, Istanbul, Turkey, 28 September–1 October 2021; Association for Computing Machinery: New York, NY, USA, 2021; Volume 21, pp. 24–35. [Google Scholar] [CrossRef]

- Baik, H.; Son, Y.S.; Kim, K.E. A tunnel coaxial 3D hyperspectral scanning system for underground mine investigation. Sci. Rep. 2023, 13, 13. [Google Scholar] [CrossRef]

- Hanson, N.; Shaham, M.; Erdogmus, D.; Padir, T. VAST: Visual and Spectral Terrain Classification in Unstructured Multi-Class Environments. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 3956–3963. [Google Scholar] [CrossRef]

- Dieters, S. Robot-Aided Hyperspectral Imaging for Mineral Exploration in Underground Mining Environments. Available online: https://aaltodoc.aalto.fi/handle/123456789/126663 (accessed on 29 January 2025).

- MacIsaac, D.; Kanner, G.; Anderson, G. Basic physics of the incandescent lamp (lightbulb). Phys. Teach. 1999, 37, 520–523. [Google Scholar]

- Elvidge, C.D.; Keith, D.M.; Tuttle, B.T.; Baugh, K.E. Spectral identification of lighting type and character. Sensors 2010, 10, 3961–3988. [Google Scholar] [CrossRef] [PubMed]

- Simoncic, U.; Milanic, M. Hyperspectral Imaging with Active Illumination: A Theoretical Study on the Use of Incandescent Lamp and Variable Filament Temperature. Sensors 2023, 23, 9326. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.-F.; Ding, Z.; Song, J.-Y.; Wang, Y.; Bian, L.-F.; Yang, C. Low-cost monochromatic uniform illumination HSI system for detection and classification of apple bruise. J. Food Meas. Charact. 2024, 18, 4853–4864. [Google Scholar] [CrossRef]

- Al Ktash, M.; Knoblich, M.; Eberle, M.; Wackenhut, F.; Brecht, M. UV Hyperspectral Imaging with Xenon and Deuterium Light Sources: Integrating PCA and Neural Networks for Analysis of Different Raw Cotton Types. J. Imaging 2024, 10, 310. [Google Scholar] [CrossRef]

- Shaikh, M.S.; Jaferzadeh, K.; Thörnberg, B.; Casselgren, J. Calibration of a Hyper-Spectral Imaging System Using a Low-Cost Reference. Sensors 2021, 21, 3738. [Google Scholar] [CrossRef]

- Noviyanto, A.; Abdulla, W.H. Segmentation and calibration of hyperspectral imaging for honey analysis. Comput. Electron. Agric. 2019, 159, 129–139. [Google Scholar] [CrossRef]

- Tejasree, G.; Agilandeeswari, L. An extensive review of hyperspectral image classification and prediction: Techniques and challenges. Multimed. Tools Appl. 2024, 83, 80941–81038. [Google Scholar] [CrossRef]

- Green, R.O.; Chrien, T.G.; Nielson, P.J.; Sarture, C.M.; Eng, B.T.; Chovit, C.; Murray, A.T.; Eastwood, M.L.; Novack, H.I. Airborne visible/infrared imaging spectrometer (AVIRIS): Recent improvements to the sensor and data facility. Imaging Spectrom. Terr. Environ. 1993, 1937, 180. [Google Scholar] [CrossRef]

- Boldrini, B.; Kessler, W.; Rebner, K.; Kessler, R.W. Hyperspectral Imaging: A Review of Best Practice, Performance and Pitfalls for in-line and on-line Applications. J. Near Infrared Spectrosc. 2012, 20, 483–508. [Google Scholar] [CrossRef]

- Basantia, N.; Nollet, L.M.; Kamruzzaman, M. Hyperspectral Imaging Analysis and Applications for Food Quality; CRC Press: Boca Raton, FL, USA, 2018; pp. 10–11. [Google Scholar] [CrossRef]

- Garini, Y.; Young, I.T.; McNamara, G. Spectral imaging: Principles and applications. Cytometry A 2006, 69, 735–747. [Google Scholar] [CrossRef] [PubMed]

- Hagen, N.A.; Kudenov, M.W. Review of snapshot spectral imaging technologies. Opt. Eng. 2013, 52, 090901. [Google Scholar] [CrossRef]

- Qian, K.; Shen, J.; Wang, S.; Sun, W. Recent advances in object tracking using hyperspectral videos: A survey. Multimed. Tools Appl. 2024, 83, 56155–56181. [Google Scholar] [CrossRef]

- Xiong, Z.; Wang, L.; Li, H.; Liu, D.; Wu, F. Snapshot hyperspectral light field imaging. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 6873–6881. [Google Scholar] [CrossRef]

- Winkens, C.; Sattler, F.; Paulus, D. Hyperspectral terrain classification for ground vehicles. In VISIGRAPP 2017, Proceedings of the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Porto, Portugal, 27 February–1 March 2017; SCITEPRESS: Setúbal, Portugal, 2017; Volume 5, pp. 417–424. [Google Scholar] [CrossRef]

- Lai, Z. Cameras in Advanced Driver-Assistance Systems and Autonomous Driving Vehicles. In Advanced Driver Assistance Systems and Autonomous Vehicles: From Fundamentals to Applications; Springer Nature: Singapore, 2022; pp. 213–243. [Google Scholar] [CrossRef]

- Rassõlkin, A.; Sell, R.; Leier, M. Development case study of the first estonian self-driving car, iseauto. Electr. Control Commun. Eng. 2018, 14, 81–88. [Google Scholar] [CrossRef]

- Canis, B. Issues in Autonomous Vehicle Testing and Deployment. DC, April 2021. Available online: https://crsreports.congress.gov (accessed on 12 January 2025).

- Chougule, A.; Chamola, V.; Sam, A.; Yu, F.R.; Sikdar, B. A Comprehensive Review on Limitations of Autonomous Driving and Its Impact on Accidents and Collisions. IEEE Open J. Veh. Technol. 2024, 5, 142–161. [Google Scholar] [CrossRef]

- Pinchon, N.; Cassignol, O.; Nicolas, A.; Bernardin, F.; Leduc, P.; Tarel, J.-P.; Brémond, R.; Bercier, E.; Brunet, J. All-weather vision for automotive safety: Which spectral band? In Advanced Microsystems for Automotive Applications; Springer: Berlin, Germany, 2018; pp. 3–15. [Google Scholar]

- Winkens, C.; Paulus, D. Context aware hyperspectral scene analysis. IS&T Int. Symp. Electron. Imaging Sci. Technol. 2018, 30, art00016. [Google Scholar] [CrossRef]

- Winkens, C.; Sattler, F.; Paulus, D. Deep dimension reduction for spatial-spectral road scene classification. IS&T Int. Symp. Electron. Imaging Sci. Technol. 2019, 31, art00018. [Google Scholar] [CrossRef]

- Winkens, C.; Kobelt, V.; Paulus, D. Robust features for snapshot hyperspectral terrain-classification. In Lecture Notes in Computer Science; including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics; Springer: Berlin/Heidelberg, Germany, 2017; Volume 10424 LNCS, pp. 16–27. [Google Scholar] [CrossRef]

- Basterretxea, K.; Martinez, V.; Echanobe, J.; Gutierrez-Zaballa, J.; Del Campo, I. HSI-drive: A dataset for the research of hyperspectral image processing applied to autonomous driving systems. In Proceedings of the 2021 IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2021; pp. 866–873. [Google Scholar] [CrossRef]

- Du, Q.; Yang, H. Similarity-based unsupervised band selection for hyperspectral image analysis. IEEE Geosci. Remote Sens. Lett. 2008, 5, 564–568. [Google Scholar] [CrossRef]

- You, S.; Huang, E.; Liang, S.; Zheng, Y.; Li, Y.; Wang, F.; Lin, S.; Shen, Q.; Cao, X.; Zhang, D.; et al. Hyperspectral City V1.0 Dataset and Benchmark. Available online: https://arxiv.org/abs/1907.10270v4 (accessed on 1 January 2025).

- Huang, Y.; Ren, T.; Shen, Q.; Fu, Y.; You, S. HSICityV2: Urban Scene Understanding via Hyperspectral Images. Available online: https://pbdl-ws.github.io/pbdl2021/challenge/acknowledgement_and_references.html (accessed on 12 January 2025).

- Huang, Y.; Shen, Q.; Fu, Y.; You, S. Weakly-supervised Semantic Segmentation in Cityscape via Hyperspectral Image. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 1117–1126. [Google Scholar] [CrossRef]

- Theisen, N.; Bartsch, R.; Paulus, D.; Neubert, P. HS3-Bench: A Benchmark and Strong Baseline for Hyperspectral Semantic Segmentation in Driving Scenarios. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; pp. 5895–5901. [Google Scholar] [CrossRef]

- Kelasidi, E.; Jesmani, M.; Pettersen, K.Y.; Gravdahl, J.T. Locomotion efficiency optimization of biologically inspired snake robots. Appl. Sci. 2018, 8, 80. [Google Scholar] [CrossRef]

- Jakubczyk, K.; Siemiątkowska, B.; Więckowski, R.; Rapcewicz, J. Hyperspectral Imaging for Mobile Robot Navigation. Sensors 2022, 23, 383. [Google Scholar] [CrossRef]

- Siemiątkowska, B.; Więckowski, R.; Rapcewicz, J.; Kowaliński, J. Semantic-Aware Path Planning with Hexagonal Grids and Vehicle Dynamic Constraints. Energies 2023, 16, 5127.

- Liyanage, D.C.; Hudjakov, R.; Tamre, M. Hyperspectral imaging methods improve RGB image semantic segmentation of unstructured terrains. In Proceedings of the 2020 International Conference Mechatronic Systems and Materials (MSM), Bialystok, Poland, 1–3 July 2020.

- Liyanage, D.C.; Hudjakov, R.; Tamre, M. Hyperspectral image band selection using pooling. In Proceedings of the 2020 International Conference Mechatronic Systems and Materials (MSM), Bialystok, Poland, 1–3 July 2020.

- Liyanage, D.C. Smart Terrain Perception Using Hyperspectral Imaging. Tallinn University of Technology, Tallinn. 2021. Available online: https://digikogu.taltech.ee/et/item/e94dc880-2e42-4203-8ddf-9f26b4b0f301 (accessed on 14 February 2025).

- Bhattacharyya, C.; Kim, S. Black Ice Classification with Hyperspectral Imaging and Deep Learning. Appl. Sci. 2023, 13, 11977.

- Kim, H.; Kwon, S.; Kim, S. Hyperspectral Image-Based Night-Time Vehicle Light Detection Using Spectral Normalization and Distance Mapper for Intelligent Headlight Control. Sensors 2016, 16, 1058.

- Herweg, J.A.; Kerekes, J.P.; Eismann, M.T. Hyperspectral measurements of natural signatures: Pedestrians. In Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XVIII; SPIE: Bellingham, WA, USA, 2012; Volume 8390, pp. 397–404.

- Bhamri, A.; Mikulski, D.; Medellin, A.; Lanagri, R.; Grabowsky, D.; Gopalswamy, S. Unifying Ontological Framework for All-Terrain Datasets. In Proceedings of the 2023 Seventh IEEE International Conference on Robotic Computing (IRC), Laguna Hills, CA, USA, 11–13 December 2023; pp. 292–296.

- Liyanage, D.; Tamre, M.; Hudjakov, R. Hyperspectral Image Dataset of Unstructured Terrains for UGV Perception. Tallinn, February 2024. Available online: https://ieee-dataport.org/documents/hyperspectral-image-dataset-unstructured-terrains-ugv-perception#files (accessed on 14 February 2025).

- Lu, J.; Liu, H.; Yao, Y.; Tao, S.; Tang, Z.; Lu, J. Hsi road: A hyper spectral image dataset for road segmentation. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020.

- Yamasaki, Y.; Morie, M.; Noguchi, N. Development of a high-accuracy autonomous sensing system for a field scouting robot. Comput. Electron. Agric. 2022, 193, 106630.

- Benelli, A.; Cevoli, C.; Ragni, L.; Fabbri, A. In-field and non-destructive monitoring of grapes maturity by hyperspectral imaging. Biosyst. Eng. 2021, 207, 59–67.

- Trierscheid, M.; Pellenz, J.; Paulus, D.; Balthasar, D. Hyperspectral Imaging for Victim Detection with Rescue Robots. In Proceedings of the 2008 IEEE International Workshop on Safety, Security and Rescue Robotics, Sendai, Japan, 21–24 October 2008; pp. 7–12.

- Ulloa, C.C.; Garrido, L.; del Cerro, J.; Barrientos, A. Autonomous victim detection system based on deep learning and multispectral imagery. Mach. Learn. Sci. Technol. 2023, 4, 015018.

- Herold, M.; Roberts, D. Mapping asphalt road conditions with hyperspectral remote sensing. In Proceedings of the URS2005 conference, Phoenix, AZ, USA, 14–16 March 2005.

- Herold, M.; Roberts, D.; Noronha, V.; Smadi, O. Imaging spectrometry and asphalt road surveys. Transp. Res. Part C Emerg. Technol. 2008, 16, 153–166.

- Abdellatif, M.; Peel, H.; Cohn, A.G.; Fuentes, R. Hyperspectral imaging for autonomous inspection of road pavement defects. In Proceedings of the 36th International Symposium on Automation and Robotics in Construction, ISARC 2019, Banff, AB, Canada, 21–24 May 2019; pp. 384–392.

- Özdemir, O.B.; Soydan, H.; Çetin, Y.Y.; Düzgün, H.Ş. Neural Network Based Pavement Condition Assessment with Hyperspectral Images. Remote Sens. 2020, 12, 3931.

- Chen, X.; Zhang, X.; Ren, M.; Zhou, B.; Sun, M.; Feng, Z.; Chen, B.; Zhi, X. A multiscale enhanced pavement crack segmentation network coupling spectral and spatial information of UAV hyperspectral imagery. Int. J. Appl. Earth Obs. Geoinf. 2024, 128, 103772.

- Mei, A.; Zampetti, E.; Di Mascio, P.; Fontinovo, G.; Papa, P.; D’andrea, A. ROADS—Rover for Bituminous Pavement Distress Survey: An Unmanned Ground Vehicle (UGV) Prototype for Pavement Distress Evaluation. Sensors 2022, 22, 3414.

- Abdellatif, M.; Peel, H.; Cohn, A.G.; Fuentes, R. Pavement Crack Detection from Hyperspectral Images Using a Novel Asphalt Crack Index. Remote Sens. 2020, 12, 3084.

- Yang, H.; Ni, J.; Chen, C.; Chen, Y. Weathering assessment approach for building sandstone using hyperspectral imaging technique. Herit. Sci. 2023, 11, 70.

- Zahiri, Z.; Laefer, D.F.; Kurz, T.; Buckley, S.; Gowen, A. A comparison of ground-based hyperspectral imaging and red-edge multispectral imaging for façade material classification. Autom. Constr. 2022, 136, 104164.

- Amano, K.; Lou, E.C.W.; Edwards, R. Integration of point cloud data and hyperspectral imaging as a data gathering methodology for refurbishment projects using building information modelling (BIM). J. Facil. Manag. 2019, 17, 57–75.

- Florkowski, M. Hyperspectral imaging of high voltage insulating materials subjected to partial discharges. Measurement 2020, 164, 108070.

- De Kerf, T. Corrosion Inspection Using Hyperspectral Imaging for Offshore Wind Turbines. Antwerpen, October 2023. Available online: https://www.researchgate.net/publication/374476859_PhD_Corrosion_inspection_using_hyperspectral_imaging_for_offshore_wind_turbines (accessed on 30 January 2025).

- Rizk, P.; Younes, R.; Ilinca, A.; Khoder, J. Wind turbine blade defect detection using hyperspectral imaging. Remote Sens. Appl. 2021, 22, 100522.

- Winkens, C.; Adams, V.; Paulus, D. Automatic shadow detection using hyperspectral data for terrain classification. IS&T Int. Symp. Electron. Imaging Sci. Technol. 2019, 31, art00004.

- Wendel, A.; Underwood, J. Illumination compensation in ground based hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2017, 129, 162–178.

- Alamús, R.; Bará, S.; Corbera, J.; Escofet, J.; Palà, V.; Pipia, L.; Tardà, A. Ground-based hyperspectral analysis of the urban nightscape. ISPRS J. Photogramm. Remote Sens. 2017, 124, 16–26.

- Gutiérrez-Zaballa, J.; Basterretxea, K.; Echanobe, J. Designing DNNs for a trade-off between robustness and processing performance in embedded devices. In Proceedings of the 2024 39th Conference on Design of Circuits and Integrated Systems, DCIS 2024, Catania, Italy, 13–15 November 2024.

- Gutiérrez-Zaballa, J.; Basterretxea, K.; Echanobe, J.; Mata-Carballeira, Ó.; Martínez, M.V. Rapid Deployment of Domain-specific Hyperspectral Image Processors with Application to Autonomous Driving. In Proceedings of the 2023 30th IEEE International Conference on Electronics, Circuits and Systems (ICECS), Istanbul, Turkiye, 4–7 December 2023.

| Acquisition Mode | Point Scanning | Line Scanning | Area Scanning | Snapshot |
|---|---|---|---|---|
| Sensitivity to motion and vibration | High | High | High | Low |
| Spectral resolution | High | High | Low | Moderate |
| FOV per measurement | Low | Moderate | High | High |
| Acquisition speed | Low | Low | High | Low |
| Dependence of spatial resolution on acquisition speed | High | Moderate | Moderate | Low |
| Spatial resolution depends on platform motion or internal scanning components | Yes | Yes | No | No |
| Applicability for inspection | High | High | Moderate | Moderate |
| Applicability for navigation | Low | Moderate | Low | High |

| Strengths | Weaknesses |
|---|---|
| | |
| Opportunities | Threats |
| | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Valme, D.; Rassõlkin, A.; Liyanage, D.C. From ADAS to Material-Informed Inspection: Review of Hyperspectral Imaging Applications on Mobile Ground Robots. Sensors 2025, 25, 2346. https://doi.org/10.3390/s25082346