Computer Vision in Monitoring Fruit Browning: Neural Networks vs. Stochastic Modelling

Abstract

1. Introduction

2. Motivations and Challenges

3. Materials and Methods

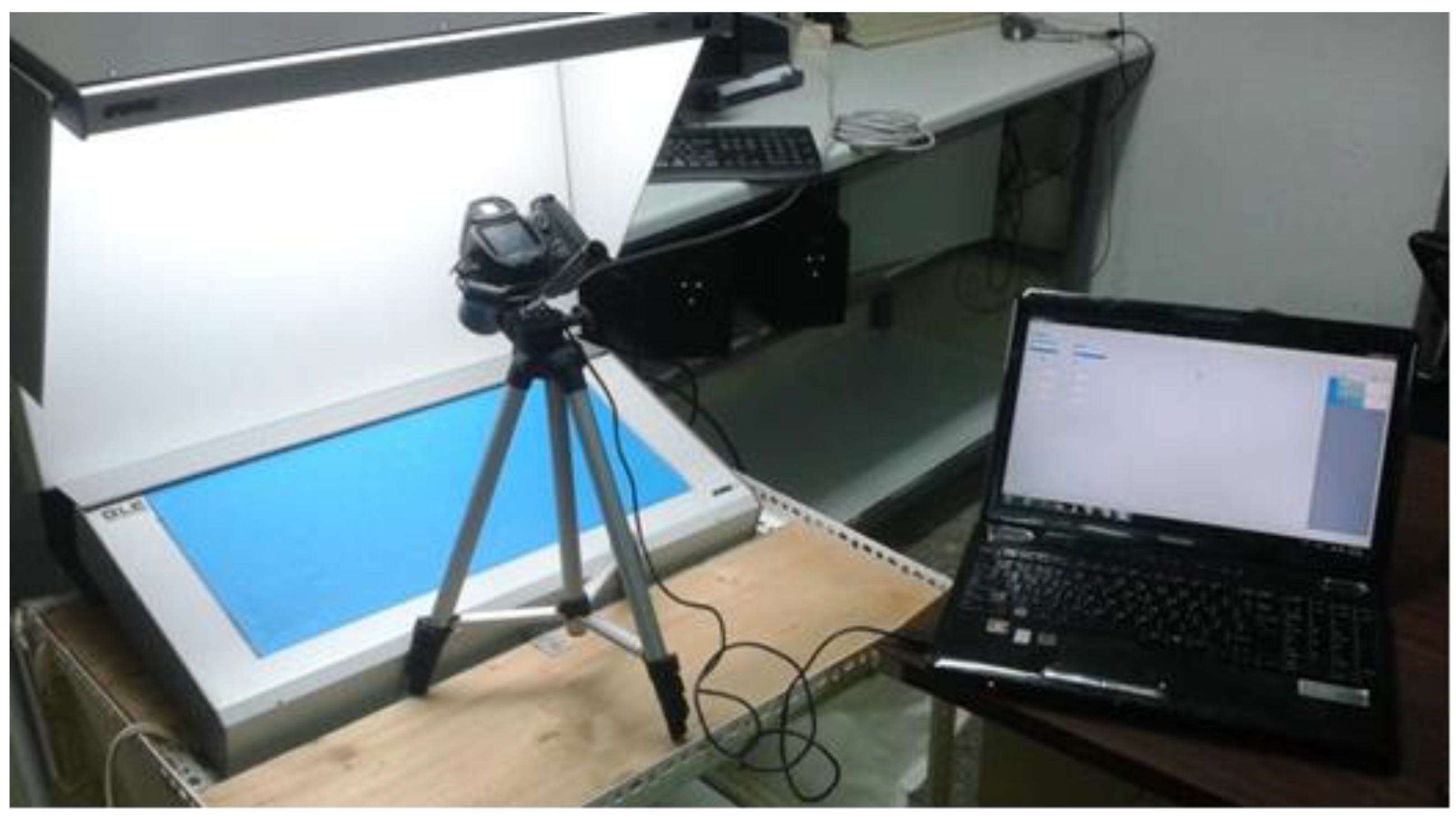

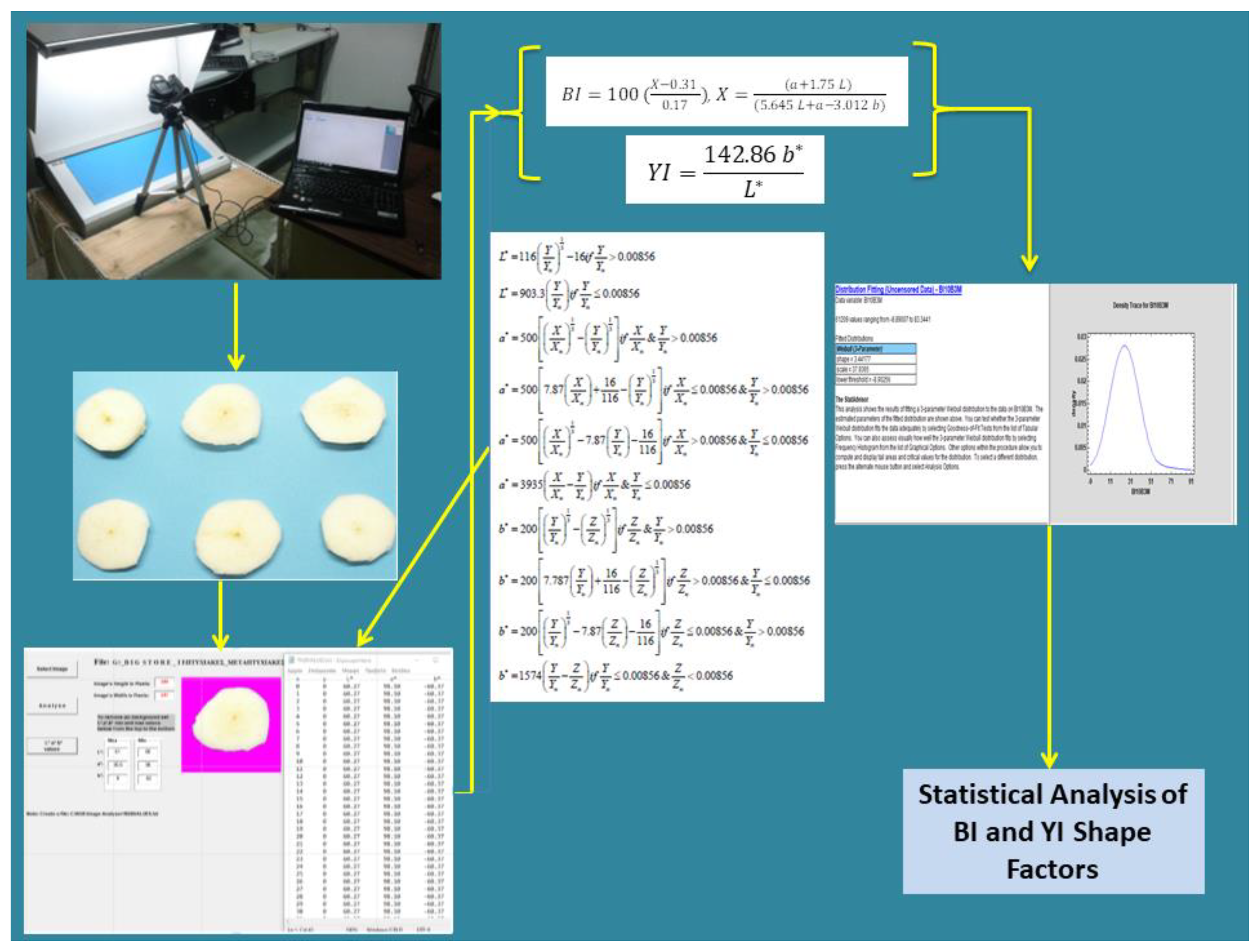

3.1. Data Preparation

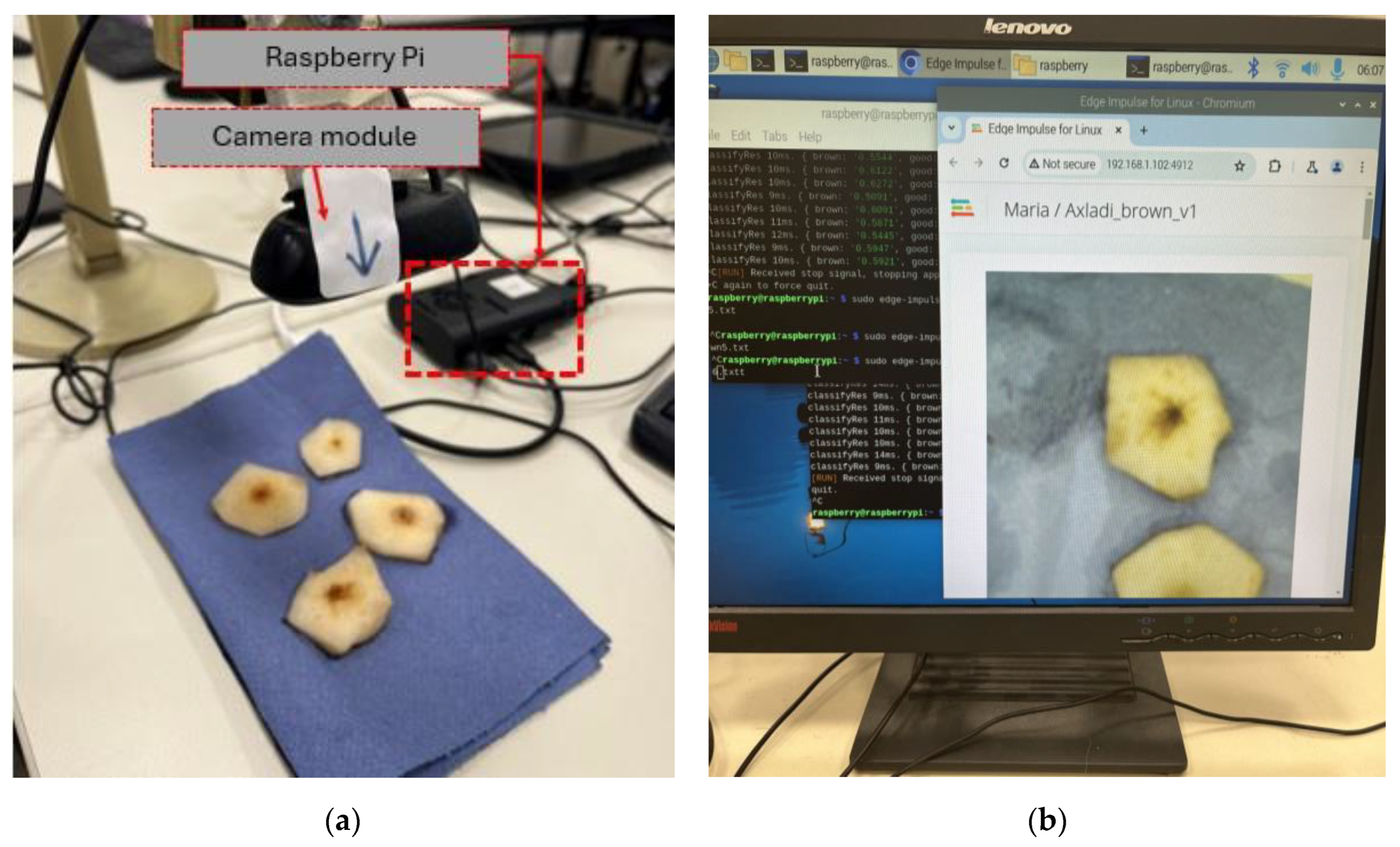

3.2. AI-Based Approach

3.2.1. Machine Learning Steps

3.2.2. Training Details and On-Device Model Integration

3.3. Stochastic Approach

3.3.1. Image Thresholding

3.3.2. Image Segmentation

3.3.3. CIE L*a*b* Model


- CIE L*a*b* is the most widely used colour space for monitoring food and fruit browning because it is objective, perceptually uniform, device-independent, reproducible across different imaging systems, and close to human vision, which allows colour changes due to browning to be tracked quantitatively. It is used in image analysis and in the analysis of spectrophotometer and colourimeter data. Applications of this model have shown that browning is negatively correlated with L* (the fruit gets darker as it browns), while a* shifts toward red and b* increases as browning progresses.


- CIE LCh° is better suited to interpreting hue and chroma. It is derived from the L*a*b* colour model but is more intuitive for colour perception because it uses chroma (C*) and hue angle (h°) instead of a* and b*. Browning results based on this model have shown that, as browning progresses, h° shifts toward yellow-red, indicating oxidation, and C* decreases as brown pigments reduce the colour vibrancy.


- CIE XYZ is a fundamental colour model for spectrophotometry. It is the basis for all CIE colour models and is used in spectrophotometers and hyperspectral imaging. For fruit browning analysis, the raw reflectance data of browned fruits are transformed into XYZ values. This colour model is useful for advanced colourimetry and light interaction studies, but it is not suitable for visual interpretation.


- RGB and HSV are used for image processing in computer vision, hyperspectral imaging, and digital food analysis (ripeness assessment, colour-based sorting, defect identification). Although simple, they are very sensitive to lighting conditions and are device-dependent, since values change with the camera, lighting, and sensor. In the RGB model, fruit browning is reflected as a reduction in R/G values and an increase in B (due to blue-darkening effects). In the HSV colour model, fruit browning often shifts the hue from yellow/orange (fresh fruit) to brown, the saturation decreases due to oxidation and enzymatic reactions, and browning areas tend to appear darker because pigment changes lower the value (V), which represents brightness.
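The relationship between the colour models listed above can be illustrated with a minimal Python sketch that converts one RGB pixel to XYZ, L*a*b*, LCh°, and HSV. It is not the authors' processing pipeline: it assumes 8-bit sRGB input, a D65 reference white, and the 2° observer, and the two sample colours (`fresh_rgb`, `browned_rgb`) are hypothetical values chosen only for illustration.

```python
import colorsys
import math

# Assumption of this sketch: D65 reference white, 2-degree observer.
WHITE_D65 = (0.95047, 1.00000, 1.08883)


def srgb_to_linear(c):
    """Undo the sRGB transfer curve for one channel scaled to [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_to_xyz(r, g, b):
    """Convert 8-bit sRGB to CIE XYZ (Y of the reference white = 1)."""
    rl, gl, bl = (srgb_to_linear(v / 255.0) for v in (r, g, b))
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    return x, y, z


def xyz_to_lab(x, y, z, white=WHITE_D65):
    """Convert CIE XYZ to CIE L*a*b* relative to the given white point."""
    delta = 6 / 29

    def f(t):
        if t > delta ** 3:
            return t ** (1 / 3)
        return t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = (f(v / w) for v, w in zip((x, y, z), white))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def lab_to_lch(lightness, a, b):
    """Convert L*a*b* to L*, chroma C*, and hue angle h in degrees."""
    chroma = math.hypot(a, b)
    hue = math.degrees(math.atan2(b, a)) % 360
    return lightness, chroma, hue


def colour_features(rgb):
    """Return the colour coordinates of one 8-bit sRGB pixel as a dict."""
    lightness, a, b = xyz_to_lab(*srgb_to_xyz(*rgb))
    _, chroma, hue = lab_to_lch(lightness, a, b)
    h, s, v = colorsys.rgb_to_hsv(*(c / 255.0 for c in rgb))
    return {"L": lightness, "a": a, "b": b, "C": chroma, "h": hue,
            "H": h * 360, "S": s, "V": v}


# Hypothetical pixel colours, not measurements from the study.
fresh_rgb = (235, 225, 180)
browned_rgb = (150, 105, 60)

for name, rgb in (("fresh", fresh_rgb), ("browned", browned_rgb)):
    features = colour_features(rgb)
    print(name, {k: round(val, 2) for k, val in features.items()})
```

For these two illustrative colours, L* and V drop and a* rises from the fresh to the browned pixel. Real images would additionally need controlled lighting and camera calibration, since RGB values are device-dependent.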


3.3.4. Browning and Yellowness Indices

3.3.5. Statistical Analysis and Weibull Tri-Parametric Distribution

4. Results

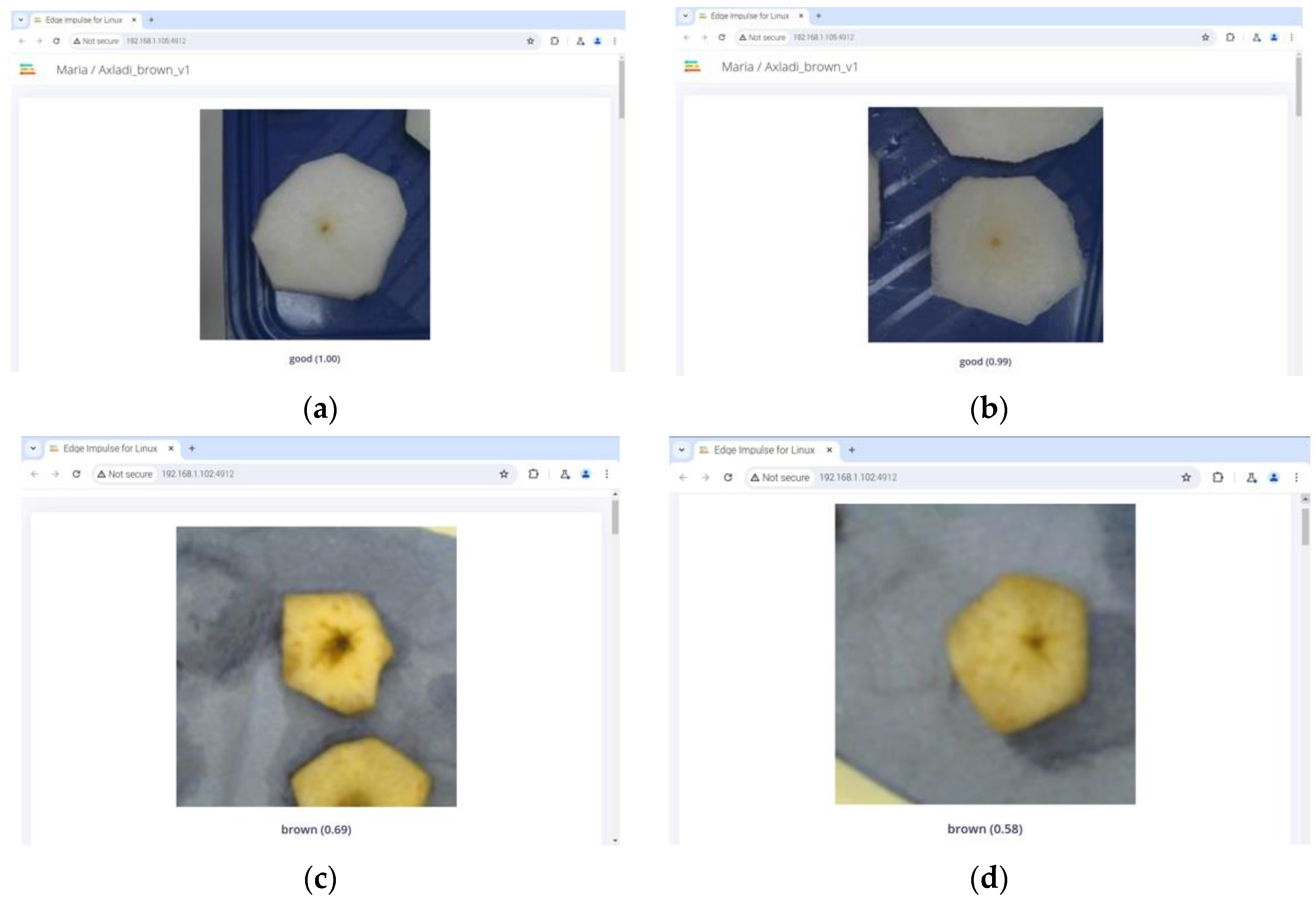

4.1. Neural Network Model Performance

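- Sensitivity (or recall) measures how effectively a classifier recognizes the positive samples. With TP the true positives and FN the false negatives, the sensitivity was calculated as follows:

  $$\text{Sensitivity} = \frac{TP}{TP + FN}$$

- Precision is the ratio of correctly predicted positive examples to the total number of predicted positive examples in a given class. With FP the false positives, it was calculated as follows:

  $$\text{Precision} = \frac{TP}{TP + FP}$$

- The F1-score is the harmonic mean of the sensitivity and precision:

  $$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}$$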
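As a worked check of these three metrics, the following Python sketch recomputes them per class from the brown/good confusion matrix counts reported in this article (2080, 137, 0, 1863). The function and variable names are illustrative choices, not part of the authors' toolchain.

```python
def binary_metrics(tp, fn, fp):
    """Return (precision, recall, f1) from true/false positive counts."""
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


def per_class_metrics(confusion):
    """Treat each class in turn as the positive class.

    `confusion[actual][predicted]` holds the number of samples.
    """
    results = {}
    for cls, row in confusion.items():
        tp = row[cls]
        # Samples of this class predicted as something else.
        fn = sum(n for predicted, n in row.items() if predicted != cls)
        # Samples of other classes predicted as this class.
        fp = sum(other[cls] for actual, other in confusion.items()
                 if actual != cls)
        results[cls] = binary_metrics(tp, fn, fp)
    return results


# Counts taken from the confusion matrix reported in the article.
confusion = {
    "brown": {"brown": 2080, "good": 137},
    "good": {"brown": 0, "good": 1863},
}

metrics = per_class_metrics(confusion)
for cls, (precision, recall, f1) in metrics.items():
    print(f"{cls}: precision={precision:.2%} recall={recall:.2%} f1={f1:.3f}")
```

The printed values agree with the reported per-class results: 100% precision and 93.82% recall for the brown class, and 93.15% precision and 100% recall for the good class.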

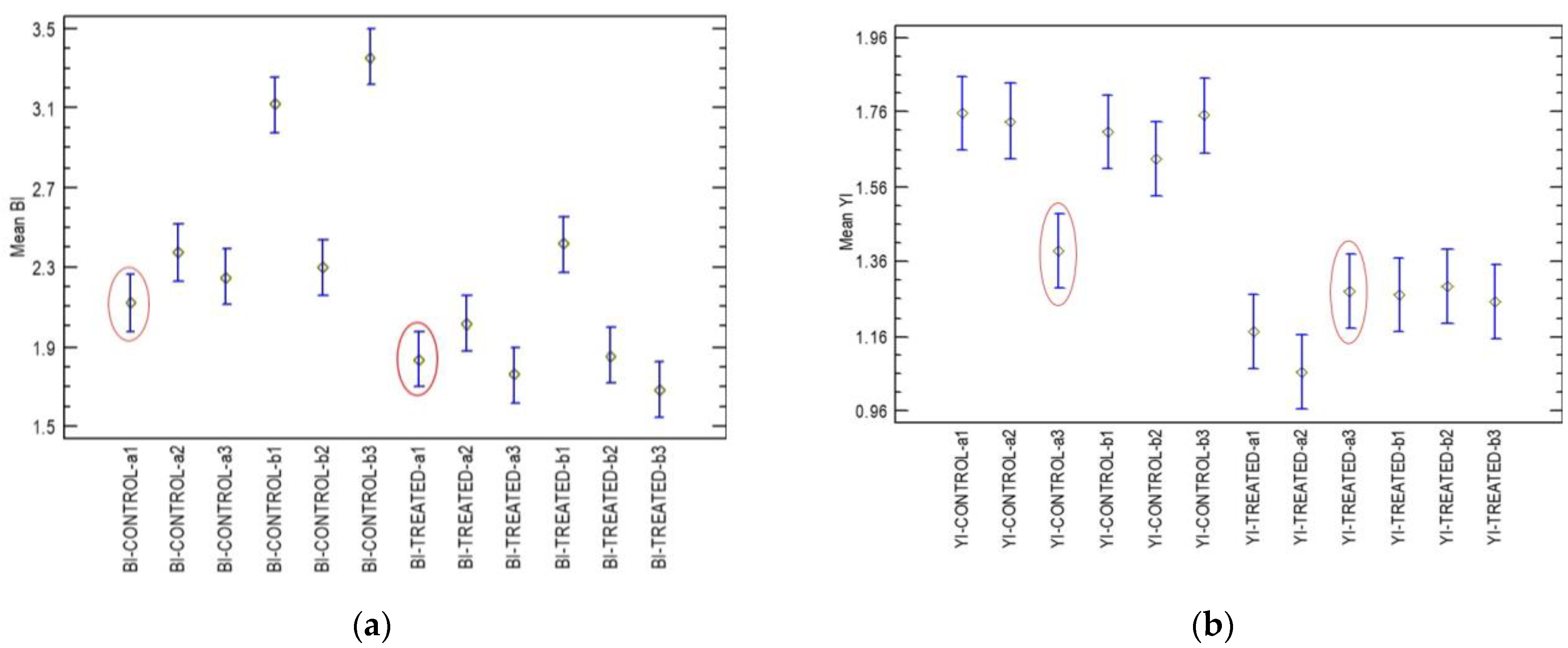

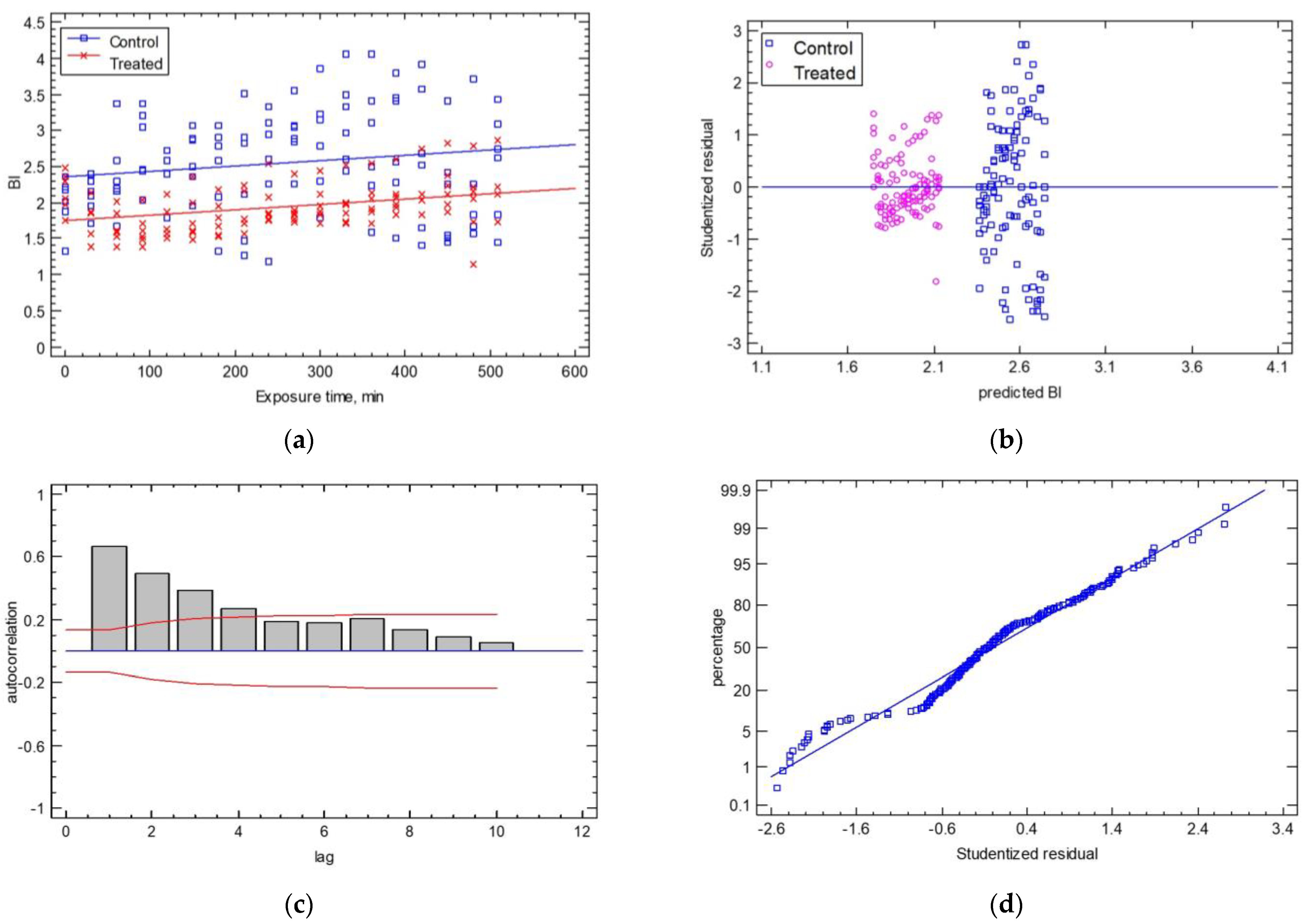

4.2. Statistical Analysis of BI and YI

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Predicted Positive Class | Predicted Negative Class |
|---|---|---|
| Actual Positive Class | TP | FN |
| Actual Negative Class | FP | TN |

| | Predicted Label: Brown | Predicted Label: Good |
|---|---|---|
| Actual label: brown | 2080 | 137 |
| Actual label: good | 0 | 1863 |

| Class | Precision | Recall (or Sensitivity) | F1-Score |
|---|---|---|---|
| Brown | 100% | 93.82% | 0.968 |
| Good | 93.15% | 100% | 0.965 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kondoyanni, M.; Loukatos, D.; Templalexis, C.; Lentzou, D.; Xanthopoulos, G.; Arvanitis, K.G. Computer Vision in Monitoring Fruit Browning: Neural Networks vs. Stochastic Modelling. Sensors 2025, 25, 2482. https://doi.org/10.3390/s25082482
