Dimensional Accuracy Evaluation of Single-Layer Prints in Direct Ink Writing Based on Machine Vision

Abstract

1. Introduction

(1) A quantitative single-layer dimensional accuracy evaluation method for direct ink writing (DIW) is provided, using a novel single-camera machine vision system;

(2) An image processing algorithm flow for the quantitative single-layer dimensional accuracy evaluation method is proposed;

(3) The iterative closest point (ICP) algorithm is used in the method to improve the evaluation accuracy.

2. Related Works

3. Materials and Methods

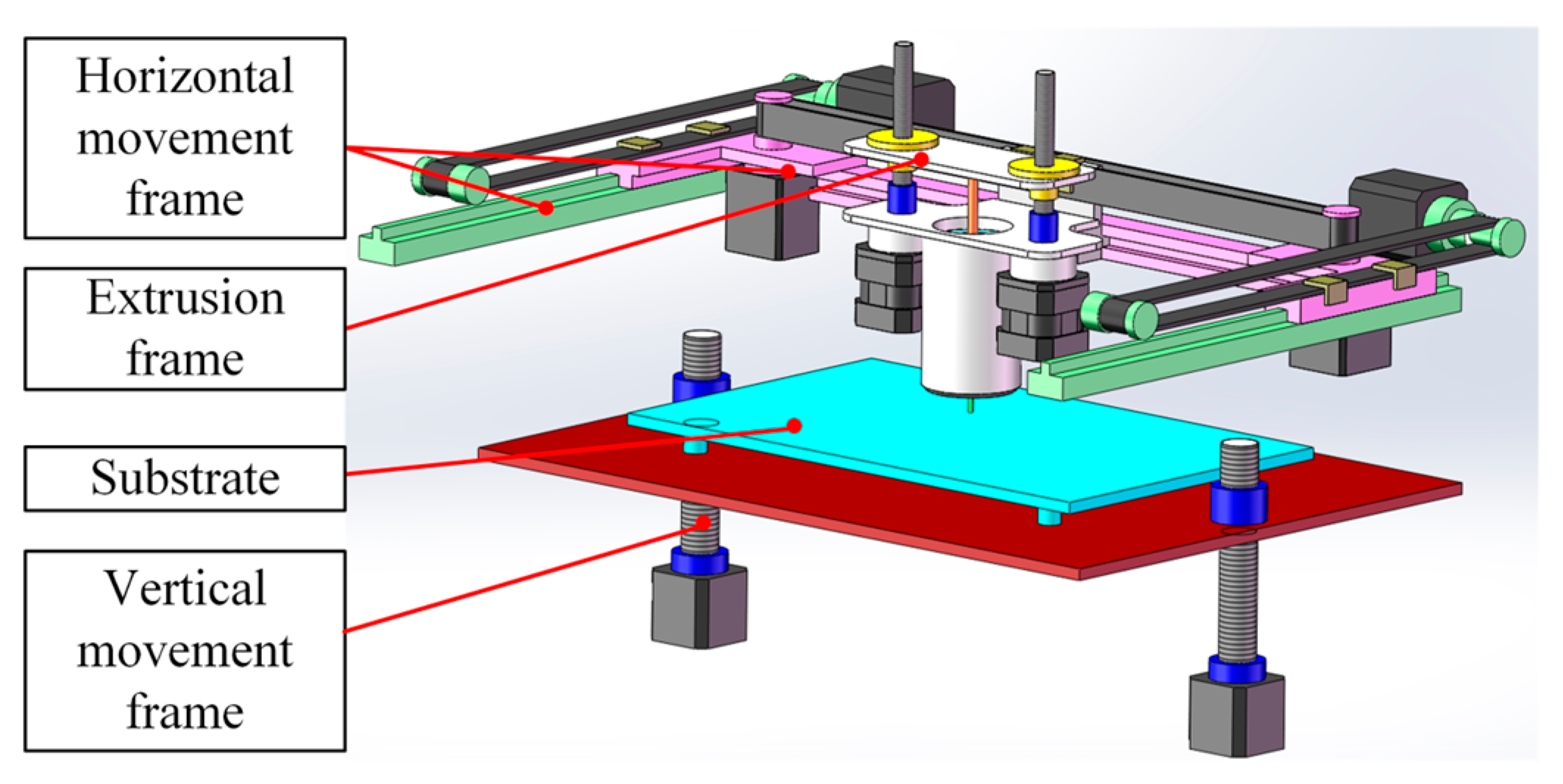

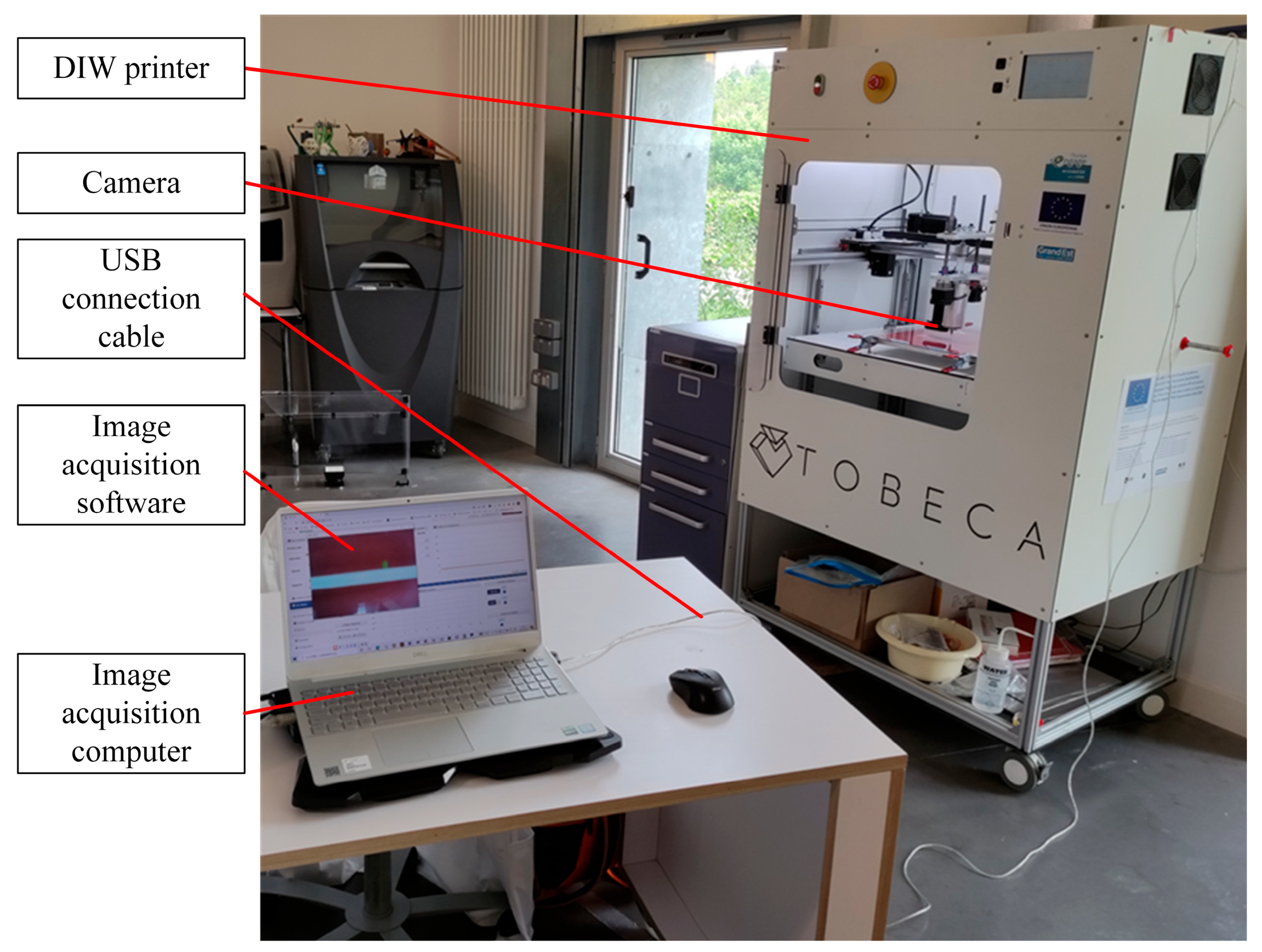

3.1. Hardware Setup

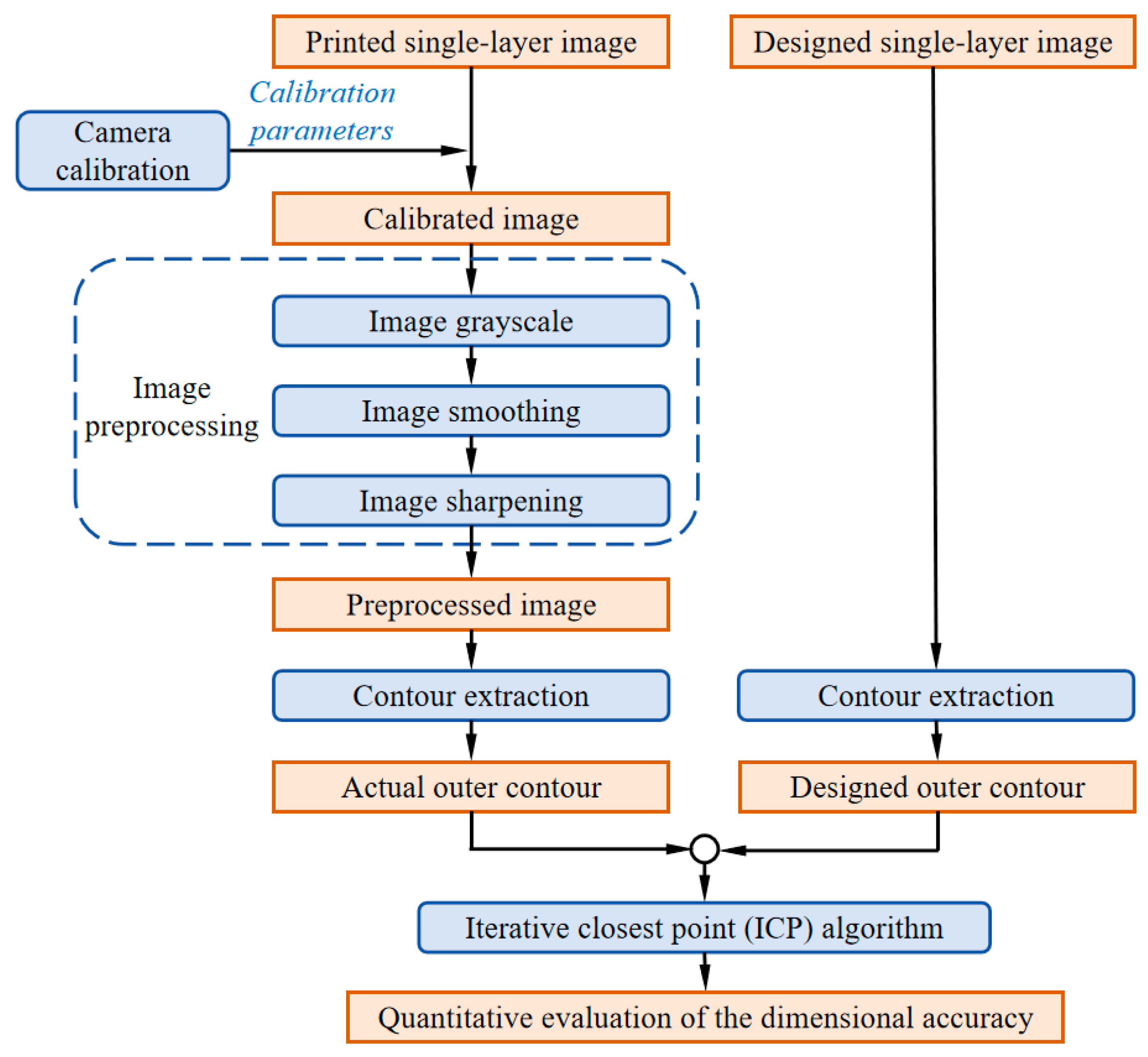

3.2. Design of Image Processing Algorithm Flow

- (1)

- Obtain camera calibration parameters, including internal parameter matrix, external parameter matrix, and distortion parameters, through camera calibration;

- (2)

- Generate the calibrated image by applying parameter corrections to the fabricated single-layer printed pattern;

- (3)

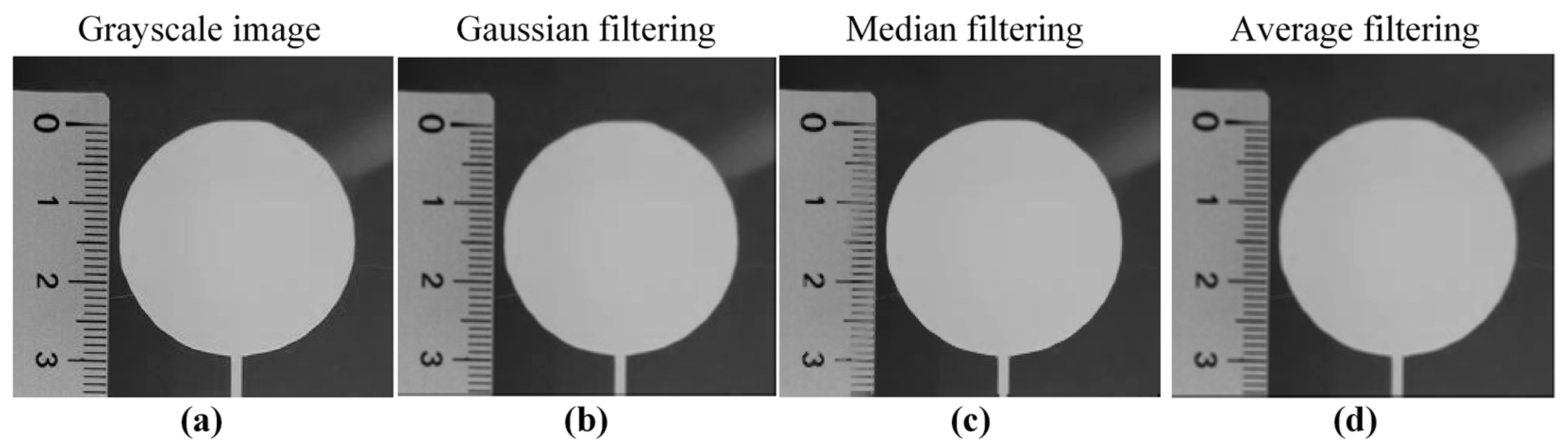

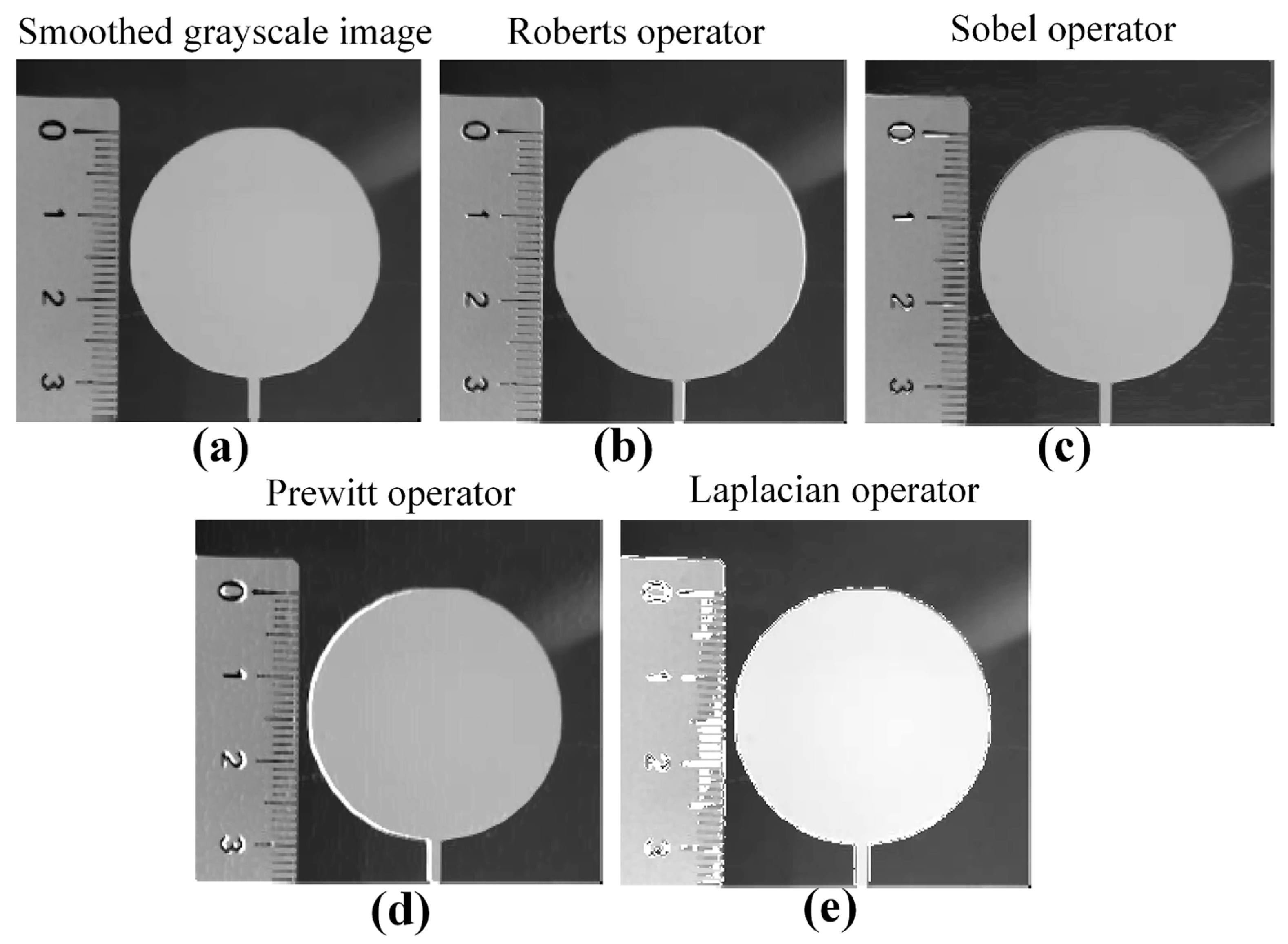

- After calibration, the image is sequentially processed through image grayscale, image smoothing, and image sharpening to obtain the preprocessed image;

- (4)

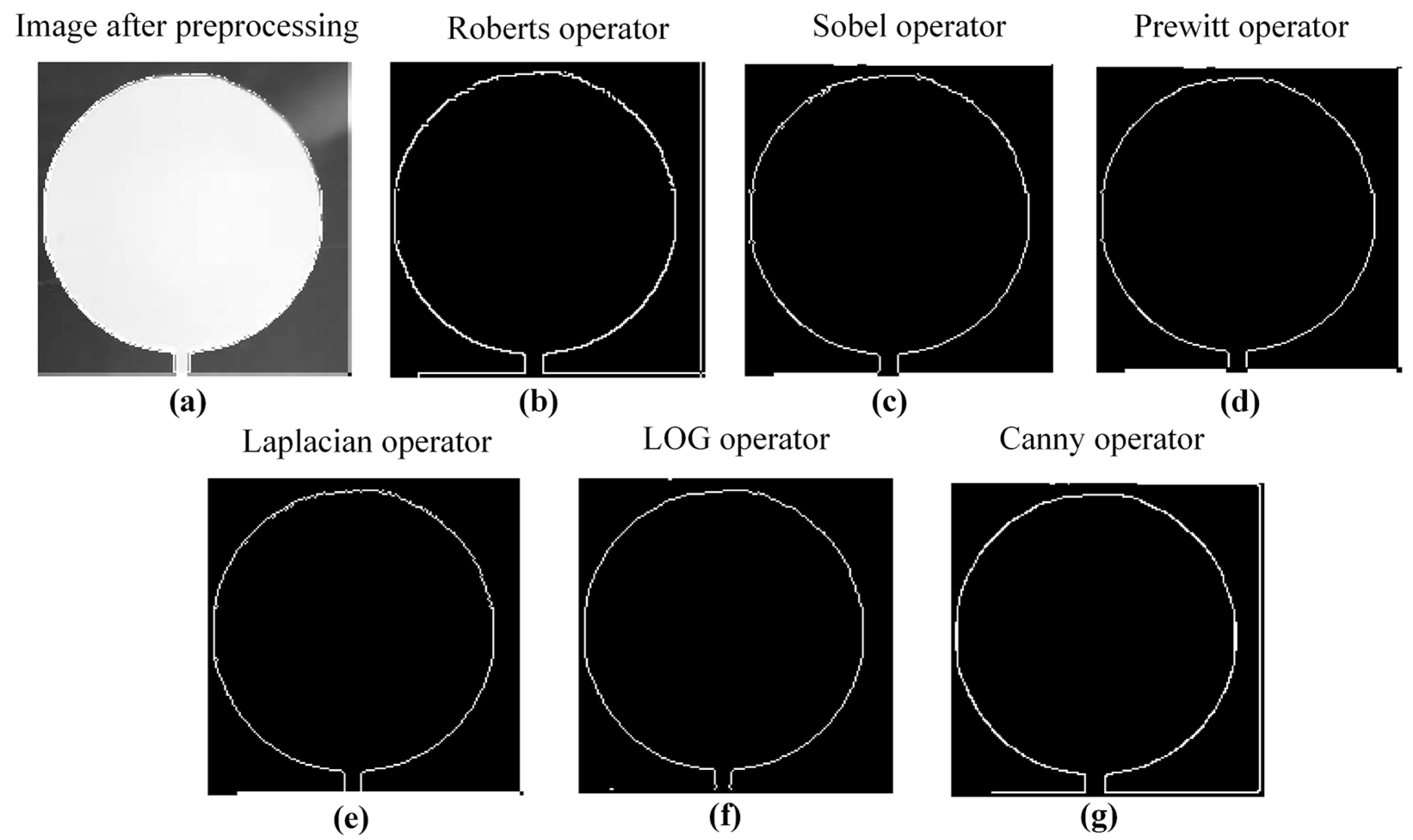

- Obtain the actual outer contour of the printed single layer in the preprocessed image through contour extraction algorithm;

- (5)

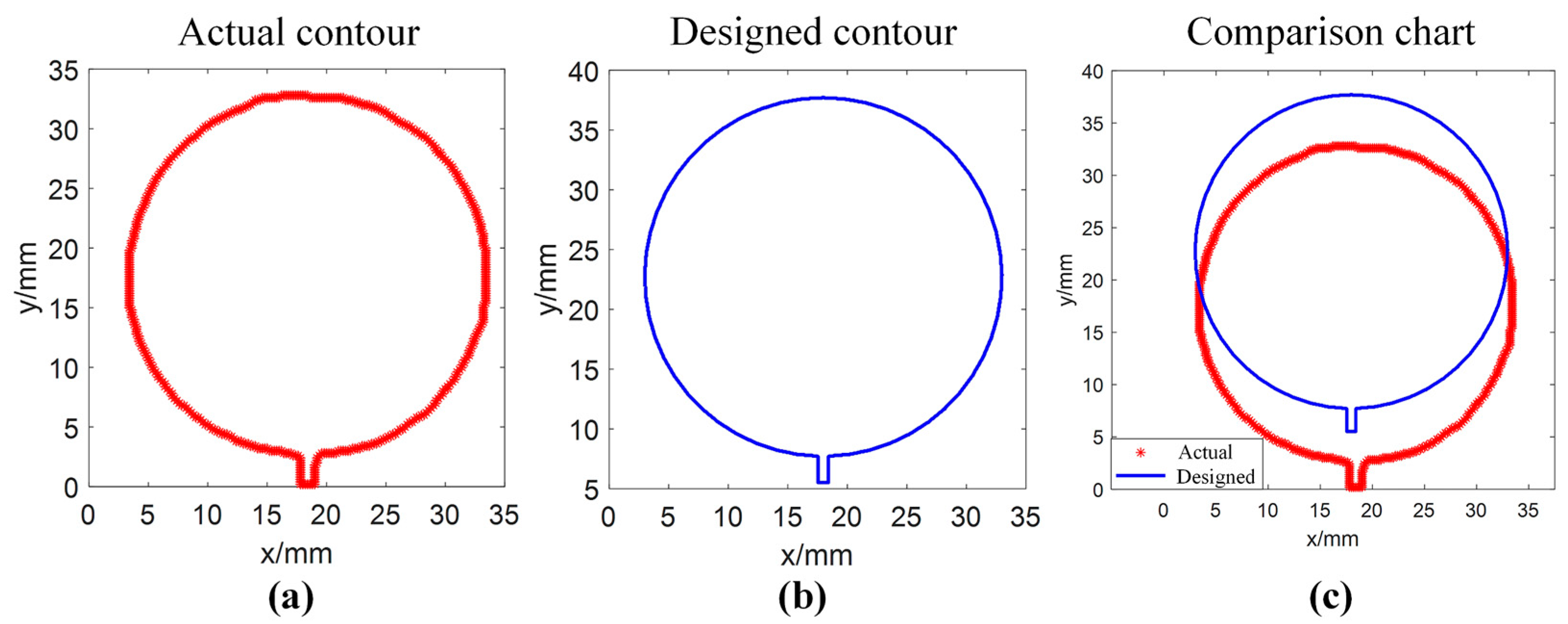

- Obtain the outer contour of the printed single-layer design in the design image through contour extraction algorithm;

- (6)

- Compare the actual outer contour with the designed outer contour and obtain a quantitative evaluation of the dimensional accuracy of the printed single layer through ICP algorithm.
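Step (3) chains three operations, and the Conclusions name the specific choices: average-method grayscale, median filtering, and Laplacian-operator-based sharpening. The pure-Python sketch below illustrates that chain on small nested lists. Several details are assumptions rather than values stated in the text: the 3×3 median window, the 4-neighbour Laplacian kernel (centre weight −4), the sharpening rule g = f − ∇²f, copying border pixels unchanged, and clipping to 0–255. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Gray = List[List[float]]


def to_gray_average(rgb: Sequence[Sequence[Tuple[int, int, int]]]) -> Gray:
    """Average-method grayscale: each pixel becomes (R + G + B) / 3."""
    return [[(r + g + b) / 3.0 for (r, g, b) in row] for row in rgb]


def median_filter_3x3(img: Gray) -> Gray:
    """3x3 median smoothing; border pixels are copied unchanged (assumption)."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            window = sorted(img[i + di][j + dj] for di in (-1, 0, 1) for dj in (-1, 0, 1))
            out[i][j] = window[4]  # middle of the 9 sorted values
    return out


def laplacian_sharpen(img: Gray, lo: float = 0.0, hi: float = 255.0) -> Gray:
    """Sharpen with g = f - lap(f), using the 4-neighbour Laplacian (centre weight -4)."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            lap = (img[i - 1][j] + img[i + 1][j] + img[i][j - 1] + img[i][j + 1]
                   - 4 * img[i][j])
            out[i][j] = min(hi, max(lo, img[i][j] - lap))  # clip to the 8-bit range
    return out


def preprocess(rgb: Sequence[Sequence[Tuple[int, int, int]]]) -> Gray:
    """Grayscale -> median smoothing -> Laplacian sharpening, in that order."""
    return laplacian_sharpen(median_filter_3x3(to_gray_average(rgb)))
```

On a uniform image with one bright "salt" pixel, the median step removes the outlier before sharpening, so the noise is not amplified. At a step edge, sharpening overshoots on the bright side, which is what makes the contour easier to extract.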

3.3. Camera Calibration

(1) Prepare a calibration board with known key-point positions and dimensions. Change the camera's position and angle relative to the board, and capture multiple calibration-board images;

(2) Detect the key points in each calibration-board image to obtain their pixel coordinates. Obtain the corresponding physical coordinates from the known positions and sizes of the key points on the board;

(3) Assuming the camera lens is distortion-free, use the calibration model given by Equation (2) to obtain the intrinsic parameters (the focal lengths and principal-point coordinates, in pixels);

(4) With these parameters determined, neglect the tangential distortion, whose effect is small, and use the least-squares method to obtain the two radial distortion coefficients;

(5) Refine the estimates by maximum likelihood estimation to obtain the optimized intrinsic parameters together with the two radial distortion coefficients.

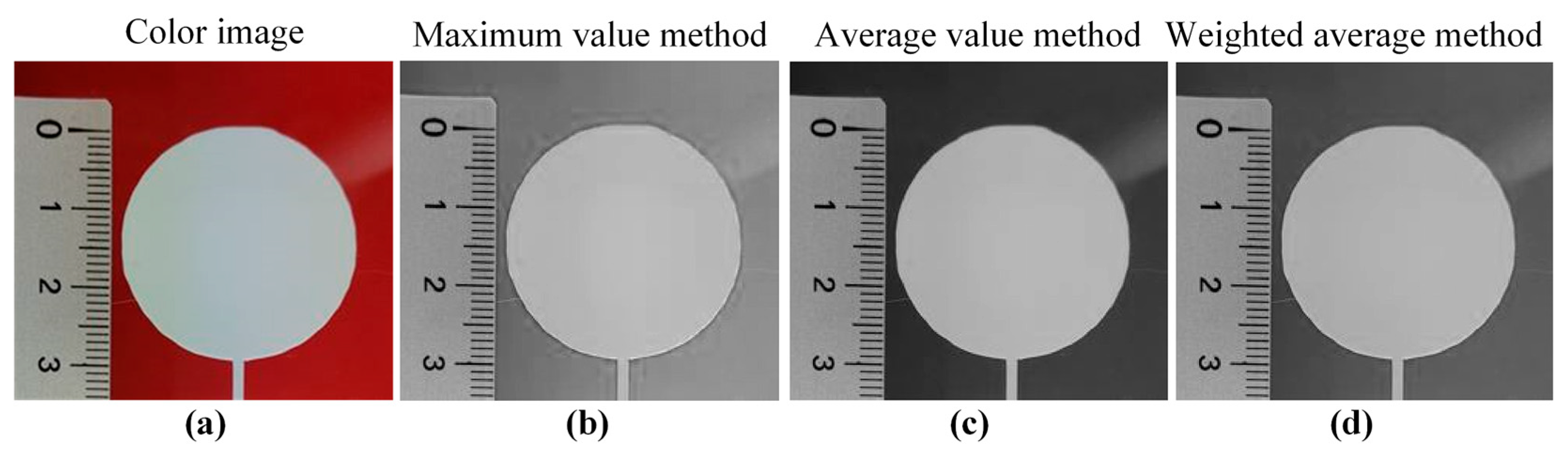
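Steps (3) to (5) appear to mirror Zhang's flexible calibration technique [34]: a closed-form estimate that ignores distortion, a least-squares estimate of two radial distortion coefficients, and a final maximum-likelihood refinement. Equation (2) is not reproduced in this text, so the sketch below makes two assumptions. It uses the standard pinhole model with focal lengths `fx`, `fy` and principal point `cx`, `cy`, and it applies the two-term radial model x_d = x(1 + k1·r² + k2·r⁴) to normalized coordinates. It shows how the estimated parameters would then correct a pixel coordinate. All names and numeric values are hypothetical and illustrative, and the fixed-point inversion assumes mild distortion.

```python
from typing import Tuple


def pixel_to_normalized(u: float, v: float, fx: float, fy: float,
                        cx: float, cy: float) -> Tuple[float, float]:
    """Invert the pinhole intrinsics: pixels -> normalized image coordinates."""
    return (u - cx) / fx, (v - cy) / fy


def normalized_to_pixel(x: float, y: float, fx: float, fy: float,
                        cx: float, cy: float) -> Tuple[float, float]:
    """Apply the pinhole intrinsics: normalized image coordinates -> pixels."""
    return fx * x + cx, fy * y + cy


def radial_factor(x: float, y: float, k1: float, k2: float) -> float:
    """Two-term radial distortion factor 1 + k1*r^2 + k2*r^4 (tangential terms ignored)."""
    r2 = x * x + y * y
    return 1.0 + k1 * r2 + k2 * r2 * r2


def distort(x: float, y: float, k1: float, k2: float) -> Tuple[float, float]:
    """Map ideal normalized coordinates to distorted ones."""
    f = radial_factor(x, y, k1, k2)
    return x * f, y * f


def undistort(xd: float, yd: float, k1: float, k2: float, iters: int = 20) -> Tuple[float, float]:
    """Invert distort() by fixed-point iteration (the model has no closed-form inverse)."""
    x, y = xd, yd
    for _ in range(iters):
        f = radial_factor(x, y, k1, k2)
        x, y = xd / f, yd / f
    return x, y


def undistort_pixel(u: float, v: float, fx: float, fy: float, cx: float, cy: float,
                    k1: float, k2: float) -> Tuple[float, float]:
    """Correct one distorted pixel coordinate using the calibrated parameters."""
    xd, yd = pixel_to_normalized(u, v, fx, fy, cx, cy)
    x, y = undistort(xd, yd, k1, k2)
    return normalized_to_pixel(x, y, fx, fy, cx, cy)
```

For example, with illustrative values `fx = fy = 3500`, `cx = cy = 1750`, `k1 = 0.05` and `k2 = -0.1`, a point distorted from the ideal normalized position (0.3, −0.2) is mapped back to pixel (2800, 1050).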

3.4. Image Preprocessing and Contour Extraction

3.5. Quantitative Acquisition of Single-Layer Dimensional Accuracy

| Algorithm 1: Iterative closest point (ICP) algorithm. |
|---|
| 1: Define the point sets of the actual outer contour and the designed outer contour in the image physical coordinate system. |
| 2: Define the rotation matrix and translation vector for the iterative process. |
| 3: Define the objective function for each iteration. |
| 4: Define the iteration stopping condition. |
| 5: If the objective function value < the iteration stopping condition, then stop and set dimensional accuracy = objective function value; else return to Step 3. |
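Algorithm 1 does not state the correspondence rule or the exact objective function. The sketch below therefore assumes a textbook 2D point-to-point ICP with the following elements:

- brute-force nearest-neighbour matching from the actual contour to the designed contour;
- a closed-form least-squares rigid fit (rotation angle from `atan2` of the centred cross and dot sums);
- the RMS matched-point distance as the objective, returned as the dimensional-accuracy value.

Besides the absolute threshold of Step 5, the loop also stops when the objective stops changing. This extra rule is an assumption added so that the loop terminates when a real print's residual levels off above the threshold. Contour points are expected in physical units (e.g., mm), as Step 1 requires. All names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def apply_rigid(points: List[Point], theta: float, tx: float, ty: float) -> List[Point]:
    """Rotate points by theta (radians) about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def best_rigid_2d(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Closed-form least-squares rotation and translation that map src[i] onto dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy  # centre both point sets
        num += px * qy - py * qx
        den += px * qx + py * qy
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that carries the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(actual: List[Point], design: List[Point],
           max_iter: int = 50, tol: float = 1e-10) -> Tuple[float, List[Point], int]:
    """Align `actual` to `design`.

    Returns (RMS distance from the last correspondence step, aligned points, iterations).
    """
    current = list(actual)
    prev_err = math.inf
    err = math.inf
    iteration = 0
    for iteration in range(1, max_iter + 1):
        # Correspondences: brute-force nearest design point for every actual point.
        matches = [min(design, key=lambda q, p=p: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        # Objective (assumed): RMS point-to-point distance over the matched pairs.
        err = math.sqrt(sum((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
                            for p, q in zip(current, matches)) / len(current))
        # Stop when the objective is below tol (Step 5) or has stopped changing.
        if err < tol or abs(prev_err - err) < tol:
            break
        prev_err = err
        theta, tx, ty = best_rigid_2d(current, matches)
        current = apply_rigid(current, theta, tx, ty)
    return err, current, iteration
```

If the actual contour is only a slightly rotated and shifted copy of the design, the residual falls to numerical zero within a couple of iterations. A contour that is uniformly oversized, for instance from ink spreading, leaves a nonzero residual that no rigid motion can remove. That residual is the quantity this sketch reports as dimensional error.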

4. Results and Discussion

4.1. Calibrated Camera Parameters

4.2. Preprocessed Images and Extracted Contour

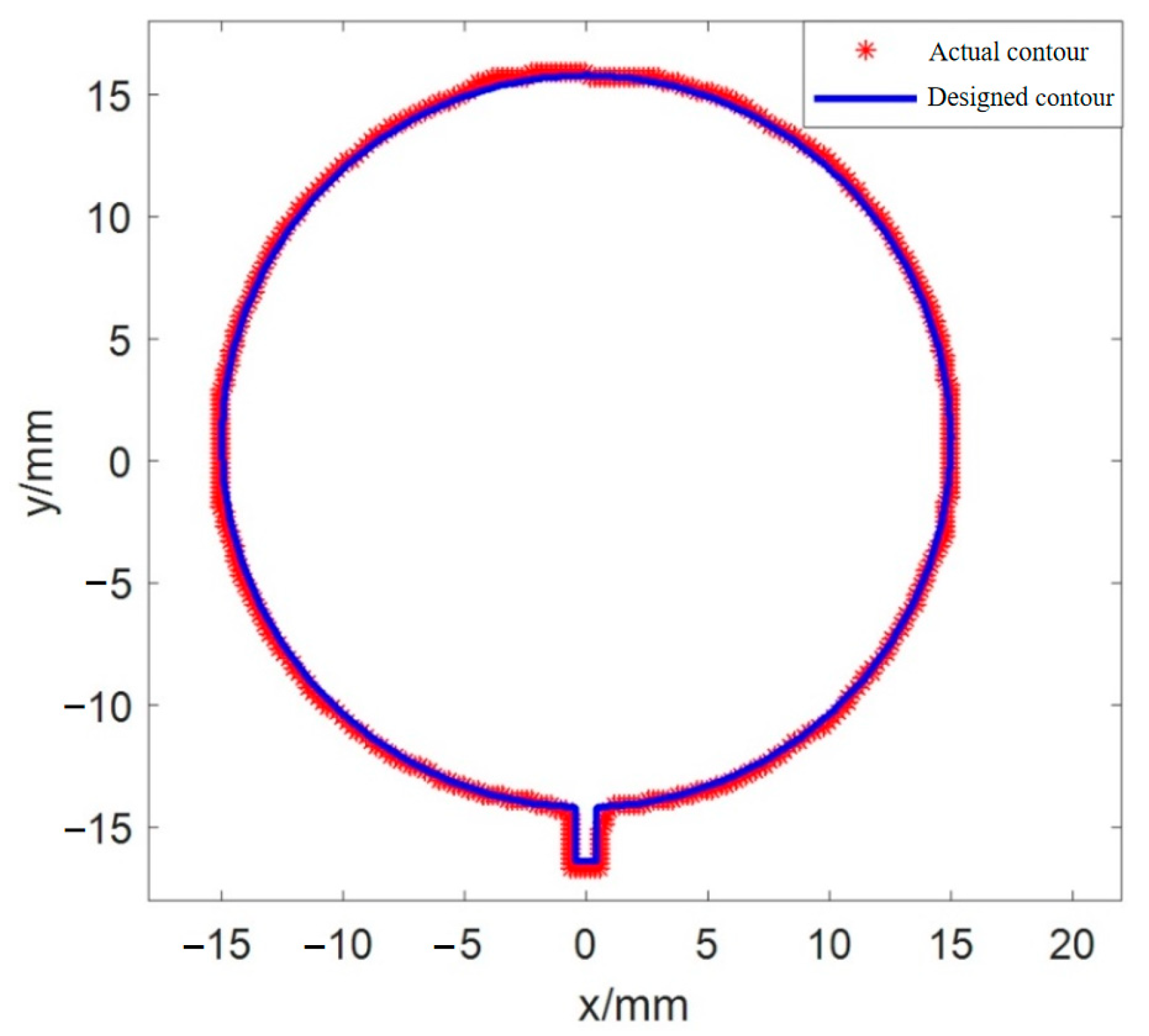

4.3. Quantitative Dimensional Accuracy

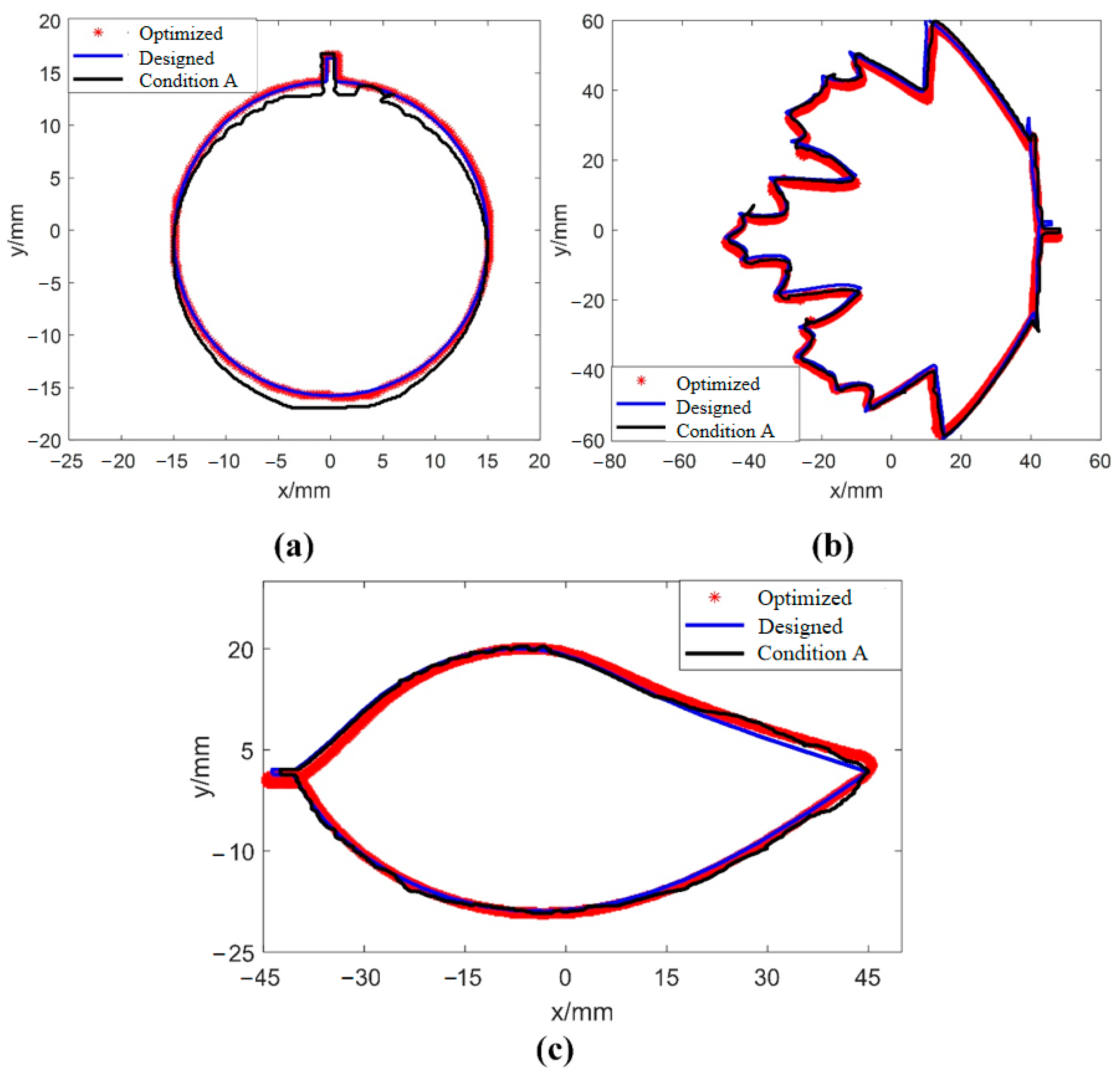

4.4. Application in Comparison of Process Parameters

5. Conclusions

(1) For camera calibration, the asymmetric circle calibration plate was selected because it gave the smallest average pixel error (0.72 pixels). The symmetric circle calibration plate gave the largest (1.54 pixels), and the chessboard gave 0.79 pixels.

(2) For image preprocessing, the average method is used to convert the color image to grayscale, median filtering is used to smooth the grayscale image, and a Laplacian-operator-based sharpening method is applied to the smoothed grayscale image.

(3) After preprocessing, the Canny operator is selected to extract the contour of the printed single layer, based on a combined assessment of accuracy, detail preservation, and contour integrity.

(4) The ICP algorithm is applied to the extracted contours to obtain quantitative dimensional accuracy evaluation results. The proposed method evaluates the dimensional accuracy of printed single layers in DIW with an accuracy of 0.001 mm. This is at least 80% better than the previous methods compared, the best of which achieved 0.005 mm. Process parameter optimization experiments further verify the method: with optimized parameters, the dimensional accuracy of the printed single layers improved by at least 76.3%.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Bigliardi, B.; Bottani, E.; Gianatti, E.; Monferdini, L.; Pini, B.; Petroni, A. Sustainable Additive Manufacturing in the context of Industry 4.0: A literature review. Procedia Comput. Sci. 2024, 232, 766–774.

2. Gao, G.; Wang, Y.; Wang, Y.; Zhang, K.; Xiang, D.; Ma, J. Development on shape and performance control of aeronautical parts in additive manufacturing. J. Manuf. Process. 2025, 141, 351–374.

3. Tu, Y.; Zhang, H.; Shi, X.; Fan, J.; Bao, B.; Lu, G.; Han, F.; Wu, H.; Hassan, A. Numerical Prediction and Experimental Validation of Deposited Filaments in Direct Ink Writing: Deposition Status and Profile Dimension. Polymers 2025, 17, 573.

4. Shen, A.; Caldwell, D.; Ma, A.W.K.; Dardona, S. Direct write fabrication of high-density parallel silver interconnects. Addit. Manuf. 2018, 22, 343–350.

5. Guzi de Moraes, E.; Ferreira, I.M.; Teixeira, L.B.; Cartapati, L.H.; Souza, M.T.; Novaes de Oliveira, A.P. Additive manufacturing of cellular structures from recycled soda-lime glass printing inks by robocasting. Ceram. Int. 2022, 49, 6554–6562.

6. Cesarano, J.; Calvert, P. Freeforming Objects with Low Binder Slurry. U.S. Patent US6027326A, 22 February 2000.

7. Ang, X.; Tey, J.Y.; Yeo, W.H.; Shak, K.P.Y. A review on metallic and ceramic material extrusion method: Materials, rheology, and printing parameters. J. Manuf. Process. 2023, 90, 28–42.

8. Feng, H.; Wang, S.; Cui, Y.; Xiang, Y.; Liu, X.; Sun, X.; Zhang, W.; Tu, P. Effect of 3D printing process parameters on the mechanical properties of silica/polyethyleneimine composites using direct-ink writing. Polym. Compos. 2023, 44, 7687–7700.

9. Sun, L.; Parker, S.T.; Syoji, D.; Wang, X.; Lewis, J.A.; Kaplan, D.L. Direct-write assembly of 3D silk/hydroxyapatite scaffolds for bone co-cultures. Adv. Healthc. Mater. 2012, 1, 729–735.

10. Sun, K.; Wei, T.-S.; Ahn, B.Y.; Seo, J.Y.; Dillon, S.J.; Lewis, J.A. 3D Printing of Interdigitated Li-Ion Microbattery Architectures. Adv. Mater. 2013, 25, 4539–4543.

11. Lessing, J.; Glavan, A.C.; Walker, S.B.; Keplinger, C.; Lewis, J.A.; Whitesides, G.M. Inkjet Printing of Conductive Inks with High Lateral Resolution on Omniphobic “RF Paper” for Paper-Based Electronics and MEMS. Adv. Mater. 2014, 26, 4677–4682.

12. Frutiger, A.; Muth, J.T.; Vogt, D.M.; Mengüç, Y.; Campo, A.; Valentine, A.D.; Walsh, C.J.; Lewis, J.A. Capacitive Soft Strain Sensors via Multicore–Shell Fiber Printing. Adv. Mater. 2015, 27, 2440–2446.

13. Sydney Gladman, A.; Matsumoto, E.A.; Nuzzo, R.G.; Mahadevan, L.; Lewis, J.A. Biomimetic 4D printing. Nat. Mater. 2016, 15, 413–418.

14. Wehner, M.; Truby, R.L.; Fitzgerald, D.J.; Mosadegh, B.; Whitesides, G.M.; Lewis, J.A.; Wood, R.J. An integrated design and fabrication strategy for entirely soft, autonomous robots. Nature 2016, 536, 451–455.

15. Zhou, N.; Liu, C.; Lewis, J.A.; Ham, D. Gigahertz Electromagnetic Structures via Direct Ink Writing for Radio-Frequency Oscillator and Transmitter Applications. Adv. Mater. 2017, 29, 1605198.

16. Blanco-Angulo, C.; Martínez-Lozano, A.; Arias-Rodríguez, J.; Rodríguez-Martínez, A.; Vicente-Samper, J.M.; Sabater-Navarro, J.M.; Ávila-Navarro, E. Low-Cost Direct-Writing of Silver-Based Ink for Planar Microwave Circuits up to 10 GHz. IEEE Access 2023, 11, 4010–4022.

17. Jiang, T.; Lin, Z.; Qiao, X.; Yang, Y.; Hong, Y.; Shang, J.; Luo, Z.; Matthew Kinsella, J. A dual-index quality evaluation method for direct ink writing of soft materials. Mater. Lett. 2023, 344, 134450.

18. Belgin Paul, D.L.; Praveen, A.S.; Arjunan, A. Parametric optimisation for 3D printing β-tricalcium phosphate tissue engineering scaffolds using direct ink writing. Smart Mater. Manuf. 2025, 3, 100070.

19. Guida, L.; Romani, A.; Negri, D.; Cavallaro, M.; Levi, M. 3D-printable PVA-based inks filled with leather particle scraps for UV-assisted direct ink writing: Characterization and printability. Sustain. Mater. Technol. 2025, 44, e01335.

20. Legett, S.A.; Torres, X.; Schmalzer, A.M.; Pacheco, A.; Stockdale, J.R.; Talley, S.; Robison, T.; Labouriau, A. Balancing Functionality and Printability: High-Loading Polymer Resins for Direct Ink Writing. Polymers 2022, 14, 4661.

21. Belyaeva, A.A.; Eksakusto, P.O.; Morozova, S.M. Thermally and magnetically responsive single layer bioinspired soft actuator with patterned structure obtained by direct ink writing. Mater. Today Commun. 2024, 39, 108879.

22. Xu, J.; Buswell, R.A.; Kinnell, P.; Biro, I.; Hodgson, J.; Konstantinidis, N.; Ding, L. Inspecting manufacturing precision of 3D printed concrete parts based on geometric dimensioning and tolerancing. Autom. Constr. 2020, 117, 103233.

23. Wi, K.; Suresh, V.; Wang, K.; Li, B.; Qin, H. Quantifying quality of 3D printed clay objects using a 3D structured light scanning system. Addit. Manuf. 2020, 32, 100987.

24. Petsiuk, A.L.; Pearce, J.M. Open source computer vision-based layer-wise 3D printing analysis. Addit. Manuf. 2020, 36, 101473.

25. Shen, H.; Sun, W.; Fu, J. Multi-view online vision detection based on robot fused deposit modeling 3D printing technology. Rapid Prototyp. J. 2019, 25, 343–355.

26. Sun, Q.; Hou, Y.; Tan, Q.; Li, G. A Planar-Dimensions Machine Vision Measurement Method Based on Lens Distortion Correction. Sci. World J. 2013, 2013, 963621.

27. Li, B. Research on geometric dimension measurement system of shaft parts based on machine vision. EURASIP J. Image Video Process. 2018, 2018, 101.

28. Dang, A.-T.; Hsu, Q.-C.; Truong, T.-T. A simple method for dimensional measurement of ring-shaped objects using image processing technique. Int. J. Adv. Manuf. Technol. 2021, 115, 3657–3678.

29. Tan, Q.; Kou, Y.; Miao, J.; Liu, S.; Chai, B. A Model of Diameter Measurement Based on the Machine Vision. Symmetry 2021, 13, 187.

30. Nogueira, V.V.E.; Barca, L.F.; Pimenta, T.C. A Cost-Effective Method for Automatically Measuring Mechanical Parts Using Monocular Machine Vision. Sensors 2023, 23, 5994.

31. Liu, Y.; Lv, Z.; Zhang, Q.; Zhao, J.; Fang, Z.; Gao, Z.; Su, Y. Comparison Study of Three Camera Calibration Methods Considering the Calibration Board Quality and 3D Measurement Accuracy. Exp. Mech. 2023, 63, 289–307.

32. Huang, J.; Liu, S.; Liu, J.; Jian, Z. Camera calibration optimization algorithm that uses a step function. Opt. Express 2024, 32, 18453–18471.

33. Yang, L.; Zhou, F.; Zhang, W.; Liu, Y. A novel camera calibration method based on circle projection model. Measurement 2023, 222, 113651.

34. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

35. Zhao, Z.; Zhu, Y.; Li, Y.; Qiu, Z.; Luo, Y.; Xie, C.; Zhang, Z. Multi-Camera-Based Universal Measurement Method for 6-DOF of Rigid Bodies in World Coordinate System. Sensors 2020, 20, 5547.

36. Liu, X.; Tian, J.; Kuang, H.; Ma, X. A Stereo Calibration Method of Multi-Camera Based on Circular Calibration Board. Electronics 2022, 11, 627.

37. Tu, Y.; Arrieta-Escobar, J.A.; Hassan, A.; uz Zaman, U.K.; Siadat, A.; Yang, G. Optimizing Process Parameters of Direct Ink Writing for Dimensional Accuracy of Printed Layers. 3D Print. Addit. Manuf. 2021, 10, 816–827.

| Study | Product Type | Technique Used | Result (Accuracy) |
|---|---|---|---|
| Sun et al. [26] | Shaft diameter | Machine vision method by improving the calibration model | 0.005 mm |
| Li et al. [27] | Bottom radius and height of cylinders | A CCD camera system with preprocessing operations | 0.01 mm |
| Dang et al. [28] | Large-size ring-shaped objects | Single-camera system by capturing objects with multiple partial images | 0.063 mm |
| Tan et al. [29] | Shaft diameter | Structured light system composed of a laser linear light source and a camera | 0.019 mm |
| Nogueira et al. [30] | Specifications on external contours | Monocular machine vision system by image processing | 0.008 mm |

| Calibration Parameter | Chessboard Calibration Board | Symmetrical Circle Calibration Plate | Asymmetric Circle Calibration Plate |
|---|---|---|---|
| Focal length, x/pixels | 3498.7 | 3478.6 | 3488.3 |
| Focal length, y/pixels | 3497.0 | 3490.2 | 3485.6 |
| Principal point, x/pixels | 1757.4 | 1747.7 | 1758.2 |
| Principal point, y/pixels | 1746.7 | 1773.6 | 1757.0 |
| Radial distortion coefficient 1 | 0.0303 | 0.0685 | 0.0882 |
| Radial distortion coefficient 2 | −0.1624 | −0.0478 | −0.2265 |

| Calibration Board | Chessboard Calibration Board | Symmetrical Circle Calibration Plate | Asymmetric Circle Calibration Plate |
|---|---|---|---|
| Average pixel error/pixels | 0.79 | 1.54 | 0.72 |
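The plate is chosen by its average pixel error, but the text does not define that metric. One common definition is the mean Euclidean distance between the detected key points and the same points reprojected through the calibrated model. The sketch below assumes that definition (function names hypothetical) and also computes the root-mean-square variant for comparison. The distinction matters because OpenCV's `calibrateCamera` returns an overall RMS reprojection error, which in general is not equal to the mean.

```python
import math
from typing import Sequence, Tuple

Pixel = Tuple[float, float]


def _check(detected: Sequence[Pixel], reprojected: Sequence[Pixel]) -> None:
    """Both lists must be non-empty and pair up one-to-one."""
    if not detected or len(detected) != len(reprojected):
        raise ValueError("need two non-empty point lists of equal length")


def average_pixel_error(detected: Sequence[Pixel], reprojected: Sequence[Pixel]) -> float:
    """Mean Euclidean distance (pixels) between detected and reprojected key points."""
    _check(detected, reprojected)
    return sum(math.dist(d, r) for d, r in zip(detected, reprojected)) / len(detected)


def rms_pixel_error(detected: Sequence[Pixel], reprojected: Sequence[Pixel]) -> float:
    """Root-mean-square distance over the same point pairs."""
    _check(detected, reprojected)
    total = sum(math.dist(d, r) ** 2 for d, r in zip(detected, reprojected))
    return math.sqrt(total / len(detected))
```

For two pairs whose distances are 1 and 5 pixels, the mean is 3 pixels and the RMS is √13 ≈ 3.61 pixels. Comparisons between boards should therefore use the same definition throughout.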

| Study | Accuracy | Improvement of This Study |
|---|---|---|
| This study | 0.001 mm | — |
| Sun et al. [26] | 0.005 mm | 80% |
| Li et al. [27] | 0.01 mm | 90% |
| Dang et al. [28] | 0.063 mm | 98% |
| Tan et al. [29] | 0.019 mm | 95% |
| Nogueira et al. [30] | 0.008 mm | 88% |
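The improvement figures are consistent with the relative reduction of the accuracy value with respect to each earlier study. This formula is inferred from the numbers rather than stated in the text. The entries are rounded to whole percentages; for example, Dang et al. works out to 98.4%. Here $a_{\text{prev}}$ is the earlier study's accuracy and $a_{\text{this}} = 0.001$ mm:

$$
\text{Improvement} = \frac{a_{\text{prev}} - a_{\text{this}}}{a_{\text{prev}}} \times 100\%, \qquad \text{e.g. Sun et al. [26]: } \frac{0.005 - 0.001}{0.005} \times 100\% = 80\%
$$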

| Shape | Dimensional Accuracy, Condition A/mm | Dimensional Accuracy, Optimized Parameters/mm | Precision Improvement/% |
|---|---|---|---|
| Shape A | 0.63 | 0.02 | 96.8 |
| Shape B | 0.38 | 0.09 | 76.3 |
| Shape C | 0.89 | 0.12 | 86.5 |
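The Precision Improvement column matches the relative reduction (before − after) / before × 100 of the dimensional-accuracy value, where smaller values mean a more accurate print. The short check below recomputes the column from the two accuracy columns; the function name is hypothetical. It also confirms that the smallest gain, for Shape B, is the 76.3% quoted in the Conclusions.

```python
def relative_improvement(before_mm: float, after_mm: float) -> float:
    """Percentage reduction of a smaller-is-better error value."""
    return (before_mm - after_mm) / before_mm * 100.0


# Dimensional accuracy (mm): (Condition A, optimized parameters), from the table.
results = {"Shape A": (0.63, 0.02), "Shape B": (0.38, 0.09), "Shape C": (0.89, 0.12)}

improvements = {shape: round(relative_improvement(before, after), 1)
                for shape, (before, after) in results.items()}
worst_case = min(improvements.values())  # the "at least" figure in the Conclusions
```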

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tu, Y.; Zhang, H.; Chen, H.; Bao, B.; Fang, C.; Wu, H.; Chen, X.; Hassan, A.; Boudaoud, H. Dimensional Accuracy Evaluation of Single-Layer Prints in Direct Ink Writing Based on Machine Vision. Sensors 2025, 25, 2543. https://doi.org/10.3390/s25082543
