Object-based Land Cover Classification and Change Analysis in the Baltimore Metropolitan Area Using Multitemporal High Resolution Remote Sensing Data

Abstract

1. Introduction

2. Study area

3. Methods

3.1 Data collection and preprocessing

3.2 Object-based classification

3.3 Classification Accuracy Assessment

3.4 Post-classification Change Detection

3.4.1 Pixel-based post-classification change detection

3.4.2 Object-based post-classification change detection

3.5 Change Detection Accuracy Assessment

4. Results

4.1 Classification and Change Detection Accuracy

4.1.1 Classification Accuracy

4.1.2 Change Detection Accuracy

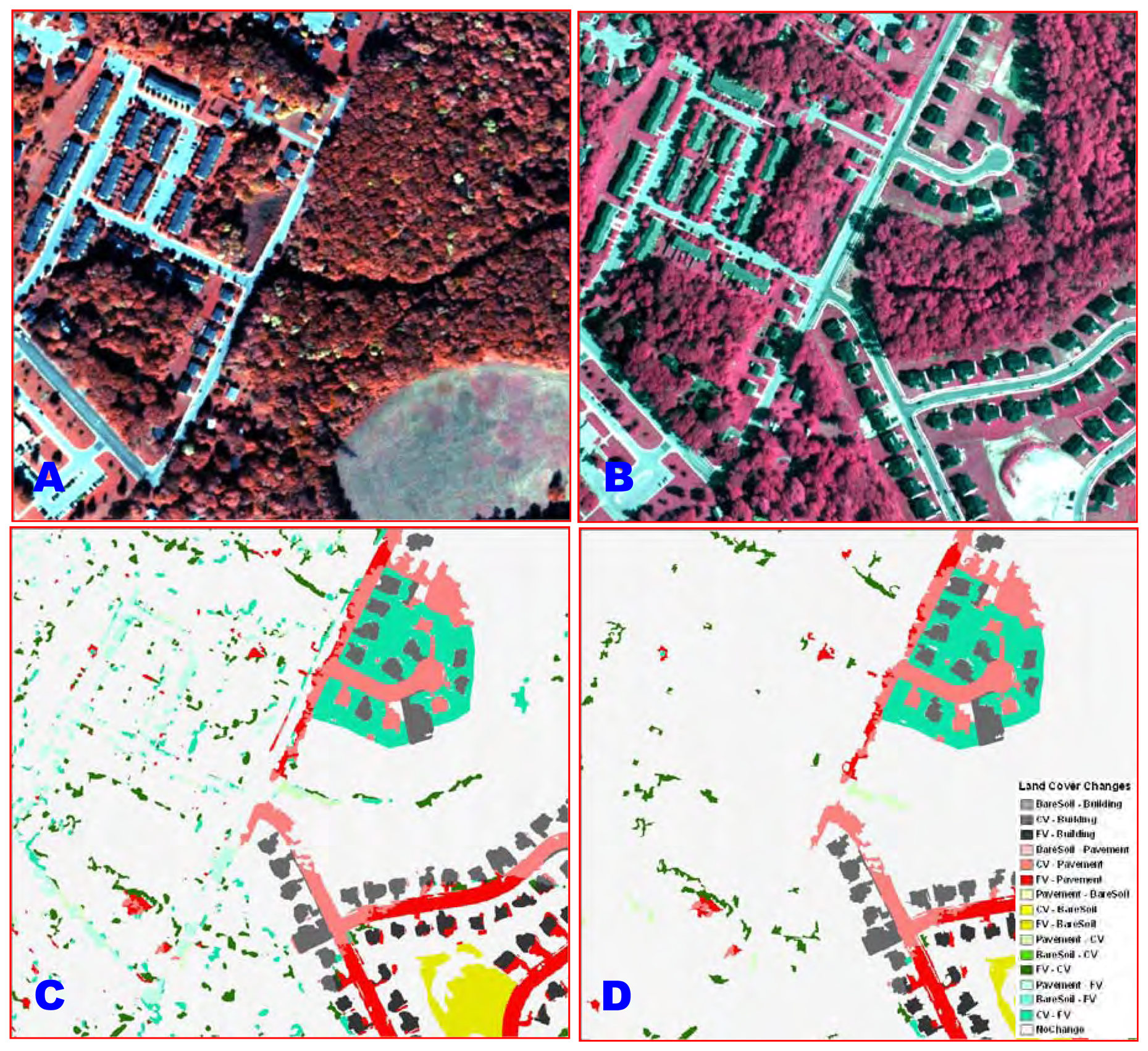

4.2 Land cover and its change in the Gwynns Falls watershed from 1999 to 2004

5. Discussions

Acknowledgments

References


| Class Name | Class Description |
|---|---|
| NoChange | Land cover with no change. Changes from building to any other land cover type, and from pavement to building, were considered highly unlikely and were therefore classified as NoChange. |
| BareSoil-Building | Land cover type changes from bare soil in 1999 to buildings in 2004 |
| CV-Building | Land cover type changes from CV in 1999 to buildings in 2004 |
| FV-Building | Land cover type changes from FV in 1999 to buildings in 2004 |
| BareSoil-Pavement | Land cover type changes from bare soil in 1999 to pavement in 2004 |
| CV-Pavement | Land cover type changes from CV in 1999 to pavement in 2004 |
| FV-Pavement | Land cover type changes from FV in 1999 to pavement in 2004 |
| Pavement-BareSoil | Land cover type changes from pavement in 1999 to bare soil in 2004 |
| CV-BareSoil | Land cover type changes from CV in 1999 to bare soil in 2004 |
| FV-BareSoil | Land cover type changes from FV in 1999 to bare soil in 2004 |
| Pavement-CV | Land cover type changes from pavement in 1999 to CV in 2004 |
| BareSoil-CV | Land cover type changes from bare soil in 1999 to CV in 2004 |
| FV-CV | Land cover type changes from FV in 1999 to CV in 2004 |
| Pavement-FV | Land cover type changes from pavement in 1999 to FV in 2004 |
| BareSoil-FV | Land cover type changes from bare soil in 1999 to FV in 2004 |
| CV-FV | Land cover type changes from CV in 1999 to FV in 2004 |
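The change scheme in the table above amounts to a rule applied to each pair of 1999 and 2004 labels. The Python sketch below is illustrative only: the function names `change_class` and `target_group` are hypothetical, and it assumes the five class names used in the tables. It encodes the stated rule that changes from building, and from pavement to building, count as NoChange, and it collapses each change class into the "To" groups (ToBuilding, ToCV, and so on) used in the change-detection error matrices.

```python
# Illustrative sketch of the change-class scheme in the table above.
# Function names are hypothetical; class names follow the tables.
CLASSES = ("Building", "Pavement", "BareSoil", "CV", "FV")


def change_class(c1999: str, c2004: str) -> str:
    """Return the change-class label for a 1999 -> 2004 land cover pair."""
    if c1999 not in CLASSES or c2004 not in CLASSES:
        raise ValueError(f"unknown class in pair ({c1999!r}, {c2004!r})")
    if c1999 == c2004:
        return "NoChange"
    # Transitions judged highly unlikely are folded into NoChange:
    # building to anything else, and pavement to building.
    if c1999 == "Building" or (c1999 == "Pavement" and c2004 == "Building"):
        return "NoChange"
    return f"{c1999}-{c2004}"


def target_group(label: str) -> str:
    """Collapse a from-to label into a 'To<class>' group, e.g. 'CV-FV' -> 'ToFV'."""
    if label == "NoChange":
        return label
    return "To" + label.split("-", 1)[1]


labels = sorted({change_class(a, b) for a in CLASSES for b in CLASSES} - {"NoChange"})
print(len(labels))                      # 15 change classes
print(change_class("FV", "Pavement"))   # FV-Pavement
print(target_group("CV-BareSoil"))      # ToBareSoil
```

Enumerating all 25 ordered pairs yields exactly the 15 change classes listed above plus NoChange, which is a quick way to confirm that the exclusion rule and the class list agree.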

| Land cover class | 1999 User's Acc. (%) | 1999 Producer's Acc. (%) | 2004 User's Acc. (%) | 2004 Producer's Acc. (%) |
|---|---|---|---|---|
| Building | 83.6 | 94.4 | 93.4 | 93.4 |
| CV | 97.7 | 94.4 | 97.7 | 93.3 |
| FV | 94.9 | 89.3 | 91.4 | 92.5 |
| Pavement | 91.9 | 88.3 | 91.8 | 94.4 |
| Bare soil | 90.0 | 100 | 95.9 | 94.0 |
| Overall accuracy | 92.3% | | 93.7% | |
| Kappa statistic | 0.899 | | 0.921 | |

| Classified data \ Reference data | NoChange | ToBuilding | ToCV | ToFV | ToPavement | ToBareSoil | Row Total | User's Acc. (%) |
|---|---|---|---|---|---|---|---|---|
| NoChange | 193 | 0 | 1 | 4 | 1 | 1 | 200 | 96.5 |
| ToBuilding | 4 | 25 | 0 | 0 | 3 | 0 | 32 | 78.1 |
| ToCV | 20 | 0 | 17 | 2 | 0 | 0 | 39 | 43.6 |
| ToFV | 24 | 1 | 5 | 28 | 0 | 0 | 58 | 48.3 |
| ToPavement | 4 | 4 | 0 | 0 | 33 | 0 | 41 | 80.5 |
| ToBareSoil | 2 | 0 | 0 | 0 | 0 | 27 | 30 | 93.3 |
| Column Total | 247 | 30 | 23 | 34 | 37 | 28 | 400 | |
| Producer's Acc. (%) | 78.1 | 83.3 | 73.9 | 82.4 | 89.2 | 96.4 | | |
| Overall accuracy | 81.3% | | | | | | | |
| Kappa statistic | 0.712 | | | | | | | |
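The error matrix above cross-tabulates 400 reference samples, with the classified (map) label in the rows and the reference label in the columns; the margins give the Row Total and Column Total entries. As a sketch under that reading (the function names and the toy sample are hypothetical, not the study's data), such a matrix can be tallied from paired labels as follows:

```python
from collections import Counter


def error_matrix(classified, reference, classes):
    """Tally paired labels into a square error matrix.

    Rows follow the classified (map) label and columns the reference label.
    """
    if len(classified) != len(reference):
        raise ValueError("classified and reference must have equal length")
    counts = Counter(zip(classified, reference))
    return [[counts[(row, col)] for col in classes] for row in classes]


def margins(matrix):
    """Return (row totals, column totals, grand total) of an error matrix."""
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    return row_totals, col_totals, sum(row_totals)


# Toy example with two of the change groups (hypothetical sample).
classes = ["NoChange", "ToCV"]
classified = ["NoChange", "NoChange", "ToCV", "ToCV", "ToCV"]
reference = ["NoChange", "ToCV", "ToCV", "NoChange", "ToCV"]
m = error_matrix(classified, reference, classes)
print(m)            # [[1, 1], [1, 2]]
print(margins(m))   # ([2, 3], [2, 3], 5)
```

`Counter` returns 0 for pairs that never occur, so empty cells of the matrix need no special handling.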

| Classified data \ Reference data | NoChange | ToBuilding | ToCV | ToFV | ToPavement | ToBareSoil | Row Total | User's Acc. (%) |
|---|---|---|---|---|---|---|---|---|
| NoChange | 192 | 1 | 1 | 2 | 1 | 3 | 200 | 96.0 |
| ToBuilding | 0 | 27 | 0 | 2 | 0 | 1 | 30 | 90.0 |
| ToCV | 11 | 0 | 22 | 3 | 0 | 0 | 36 | 61.1 |
| ToFV | 5 | 0 | 1 | 38 | 1 | 0 | 45 | 84.4 |
| ToPavement | 2 | 5 | 0 | 0 | 52 | 0 | 59 | 88.1 |
| ToBareSoil | 1 | 0 | 0 | 0 | 0 | 29 | 30 | 96.7 |
| Column Total | 211 | 33 | 24 | 45 | 54 | 33 | 400 | |
| Producer's Acc. (%) | 91.0 | 81.8 | 91.7 | 84.4 | 96.3 | 87.9 | | |
| Overall accuracy | 90.0% | | | | | | | |
| Kappa statistic | 0.854 | | | | | | | |
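The accuracy figures in the error matrices follow the standard error-matrix formulas: user's accuracy divides each diagonal count by its row total, producer's accuracy divides it by its column total, and Cohen's kappa corrects overall agreement for the chance agreement implied by the margins. The sketch below (the function name is hypothetical) applies these formulas to the matrix immediately above and reproduces its reported overall accuracy, kappa, and per-class accuracies.

```python
def accuracy_summary(matrix):
    """Overall, user's and producer's accuracy plus Cohen's kappa.

    matrix[i][j] counts samples mapped as class i whose reference class is j.
    Assumes every row and column total is nonzero.
    """
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    diag = [matrix[i][i] for i in range(k)]
    row_tot = [sum(row) for row in matrix]
    col_tot = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    overall = sum(diag) / n
    users = [d / r for d, r in zip(diag, row_tot)]      # 1 - commission error
    producers = [d / c for d, c in zip(diag, col_tot)]  # 1 - omission error
    # Expected chance agreement from the products of the margins.
    p_chance = sum(r * c for r, c in zip(row_tot, col_tot)) / n**2
    kappa = (overall - p_chance) / (1 - p_chance)
    return overall, users, producers, kappa


# Error matrix immediately above; class order NoChange, ToBuilding, ToCV,
# ToFV, ToPavement, ToBareSoil (rows = classified, columns = reference).
M = [
    [192, 1, 1, 2, 1, 3],
    [0, 27, 0, 2, 0, 1],
    [11, 0, 22, 3, 0, 0],
    [5, 0, 1, 38, 1, 0],
    [2, 5, 0, 0, 52, 0],
    [1, 0, 0, 0, 0, 29],
]
oa, ua, pa, kappa = accuracy_summary(M)
print(f"overall accuracy = {oa:.1%}, kappa = {kappa:.3f}")
# overall accuracy = 90.0%, kappa = 0.854
```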

| Land cover | 1999 Area (ha) | 1999 Proportion (%) | 2004 Area (ha) | 2004 Proportion (%) | Relative Change, Area (ha) | Relative Change, Proportion (%) |
|---|---|---|---|---|---|---|
| Building | 1989.5 | 11.6 | 2055.6 | 12.0 | 66.1 | 0.4 |
| CV | 5876.9 | 34.3 | 5915.8 | 34.5 | 38.9 | 0.2 |
| FV | 4839.0 | 28.2 | 4616.0 | 26.9 | -223.0 | -1.3 |
| Pavement | 4122.8 | 24.0 | 4442.6 | 25.9 | 319.8 | 1.9 |
| Bare soil | 321.0 | 1.9 | 119.2 | 0.7 | -201.8 | -1.2 |
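The proportions and relative changes in the area table above are simple arithmetic on the class areas. A minimal Python sketch, assuming that each proportion is a class's share of the summed class area for that year and that the proportion change is the difference in percentage points (both assumptions reproduce the tabulated values); the function name is hypothetical:

```python
# Class areas (ha) for 1999 and 2004, transcribed from the table above.
AREAS_HA = {
    "Building": (1989.5, 2055.6),
    "CV": (5876.9, 5915.8),
    "FV": (4839.0, 4616.0),
    "Pavement": (4122.8, 4442.6),
    "Bare soil": (321.0, 119.2),
}


def area_summary(areas):
    """Per class: (1999 %, 2004 %, area change in ha, change in percentage points)."""
    total_1999 = sum(a for a, _ in areas.values())
    total_2004 = sum(b for _, b in areas.values())
    summary = {}
    for name, (a, b) in areas.items():
        share_1999 = 100 * a / total_1999
        share_2004 = 100 * b / total_2004
        summary[name] = (share_1999, share_2004, b - a, share_2004 - share_1999)
    return summary


for name, row in area_summary(AREAS_HA).items():
    print(name, [round(v, 1) for v in row])
```

Both years sum to the same total area (17,149.2 ha), so the area changes cancel out across classes: every hectare gained by one class is lost by another.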

| From (1999) \ To (2004), area (ha) | Building | Pavement | Bare soil | CV | FV | Total |
|---|---|---|---|---|---|---|
| Building | | | | | | 0 |
| Pavement | | | 10.00 | 102.35 | 0.39 | 112.74 |
| Bare soil | 24.22 | 112.49 | | 3.88 | 133.07 | 273.66 |
| CV | 30.36 | 93.56 | 25.47 | | 115.09 | 264.48 |
| FV | 11.55 | 226.45 | 36.40 | 197.18 | | 471.58 |
| Total | 66.13 | 432.50 | 71.87 | 303.41 | 248.55 | |
| Relative Change | 66.13 | 319.76 | -201.79 | 38.93 | -223.03 | |
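The from-to areas can be summarized into gains, losses and net change per class. The sketch below transcribes the nonzero transitions as (from, to) pairs; treating them as 1999-to-2004 transitions with the "Total" row holding each class's gains is an assumption, supported by the fact that gains minus losses reproduces the Relative Change row and the area changes in the land cover table. The dictionary and function names are hypothetical.

```python
# Transition areas (ha) as (from 1999, to 2004) pairs, transcribed from the table above.
TRANSITIONS_HA = {
    ("Pavement", "Bare soil"): 10.00, ("Pavement", "CV"): 102.35,
    ("Pavement", "FV"): 0.39,
    ("Bare soil", "Building"): 24.22, ("Bare soil", "Pavement"): 112.49,
    ("Bare soil", "CV"): 3.88, ("Bare soil", "FV"): 133.07,
    ("CV", "Building"): 30.36, ("CV", "Pavement"): 93.56,
    ("CV", "Bare soil"): 25.47, ("CV", "FV"): 115.09,
    ("FV", "Building"): 11.55, ("FV", "Pavement"): 226.45,
    ("FV", "Bare soil"): 36.40, ("FV", "CV"): 197.18,
}
CLASSES = ("Building", "Pavement", "Bare soil", "CV", "FV")


def gains_losses_net(transitions, classes):
    """Sum each class's gains (as 'to') and losses (as 'from'); net = gains - losses."""
    gains = dict.fromkeys(classes, 0.0)
    losses = dict.fromkeys(classes, 0.0)
    for (src, dst), area in transitions.items():
        losses[src] += area
        gains[dst] += area
    net = {c: gains[c] - losses[c] for c in classes}
    return gains, losses, net


gains, losses, net = gains_losses_net(TRANSITIONS_HA, CLASSES)
for c in CLASSES:
    print(f"{c:10s} gain {gains[c]:7.2f}  loss {losses[c]:7.2f}  net {net[c]:8.2f}")
```

Because every transition is counted once as a loss and once as a gain, the net changes across all classes sum to zero.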

© 2008 by MDPI. Reproduction is permitted for noncommercial purposes.


Zhou, W.; Troy, A.; Grove, M. Object-based Land Cover Classification and Change Analysis in the Baltimore Metropolitan Area Using Multitemporal High Resolution Remote Sensing Data. Sensors 2008, 8, 1613-1636. https://doi.org/10.3390/s8031613
