1. Introduction

Digital wide dynamic range compression (WDRC) hearing aids, which provide nonlinear amplification, are increasingly used. Release time (RT) is an important parameter of the nonlinear compression function; it determines how quickly the compressor reacts to a decrease in input sound level. RT varies from milliseconds to seconds. There is no established definition of short and long RTs, but RTs are generally considered short when they are less than 100 milliseconds and long when they are greater than 500 milliseconds. With different RT settings, the temporal envelopes of amplified sounds, especially speech signals, can vary drastically. The advantages and disadvantages of short and long RTs have been thoroughly reviewed [1] and are briefly summarized here.

A compressor with a short RT reacts very quickly to changes in sound intensity. It has been claimed that this allows the hearing aid to provide greater gain to soft sounds, which improves the audibility of soft consonants and thereby, to some extent, restores loudness perception to “normal.” Despite the merits of short RT processing, some potential drawbacks are also evident. By providing more gain to softer sounds, short RT processing alters the short-term amplitude contrasts of a speech signal (e.g., the consonant-to-vowel ratio) and distorts its temporal pattern. As a consequence, the naturalness of the processed speech signal is compromised. Another frequently claimed disadvantage of short RT processing is the perceived distraction caused by background noise during pauses in ongoing speech, which reduces listening comfort.

A compressor with long RT processing maintains gain for a longer period of time compared to a compressor with short RT processing. Thus, the internal intensity difference of a speech signal can be largely preserved, and minimum perceived distortion can be achieved. As a result, speech signals processed with a long RT could be more natural, and the listening comfort of hearing aid wearers could be increased. Similarly, with a long RT, the larger gain will not be applied to the relatively low-level background noise during the pauses of speech, and consequently, listening comfort could be improved. Adverse effects of long RT processing are also substantial. First, available speech cues in a modulated background masker may not receive enough amplification with long RT processing, so that speech understanding ability in such a condition will be diminished. Second, when an intense sound precedes a softer sound, the gain applied to the intense sound will be decreased due to the nonlinear amplification algorithm. Such a lower amount of gain will still be applied to the succeeding softer sounds because of a long compression release period, which may result in inaudibility.
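The gain behavior described above, in which a long RT keeps gain low for a soft sound that follows an intense one, can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the function name, the 50 dB compression threshold, the 3:1 ratio, the 5 ms attack time, and the treatment of RT as a simple exponential time constant (standardized measurement definitions of release time differ from this simplification). It is not a model of any particular hearing aid.

```python
import math

def compressor_gain_db(levels_db, release_ms, attack_ms=5.0, step_ms=1.0,
                       threshold_db=50.0, ratio=3.0):
    """Return the gain change (dB, <= 0) applied at each time step.

    levels_db: input level in dB, one value per step_ms (hypothetical units).
    The level estimate rises with the attack constant and falls with the
    release constant; gain is reduced for the part of the estimate that
    exceeds the threshold.
    """
    a_att = math.exp(-step_ms / attack_ms)
    a_rel = math.exp(-step_ms / release_ms)
    estimate = levels_db[0]
    gains = []
    for level in levels_db:
        # Use the attack constant when the input rises, the release constant otherwise
        coeff = a_att if level > estimate else a_rel
        estimate = coeff * estimate + (1.0 - coeff) * level
        over = max(estimate - threshold_db, 0.0)
        gains.append(-over * (1.0 - 1.0 / ratio))
    return gains

# 200 ms of an intense sound (80 dB) followed by 200 ms of a soft sound (50 dB)
levels = [80.0] * 200 + [50.0] * 200
short_rt = compressor_gain_db(levels, release_ms=50.0)
long_rt = compressor_gain_db(levels, release_ms=2000.0)

# Gain change 100 ms after the intense sound has ended
print(round(short_rt[299], 1), round(long_rt[299], 1))
```

In this sketch, 100 ms after the intense sound ends the short-RT compressor has recovered most of its gain, whereas the long-RT compressor is still applying almost the full gain reduction to the soft sound.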

Unfortunately, to date, no standardized strategy or protocol for prescribing RT exists. Audiologists and hearing aid specialists often use the default RT setting that is recommended by the hearing aid’s manufacturer. With this method, not all hearing aid users are satisfied.

The importance of RT selection has been well acknowledged, and several studies have sought to determine the overall superiority of either short or long RT (e.g., [2,3]). Such previous studies have investigated the advantages and disadvantages of different RTs under a variety of test conditions. Findings have been inconclusive, and numerous factors could possibly account for the discrepant results.

More recent research has examined the role of listeners’ cognition in determining RT superiority [4,5,6,7,8,9,10,11]. Researchers observed that the cognitive functions most closely associated with speech recognition performance were related to working memory capacity, which includes temporal storage and a central executive [12,13]. The temporal storage function of working memory allows auditory information to be accumulated, while the central executive system of working memory decodes and processes the accumulated information. Deterioration of either of these two functions will very likely compromise the efficiency and accuracy of communication and intellectual abilities, especially for older listeners [14]. Because working memory capacity and RT are both related to speech recognition performance, the interaction between these two variables has received considerable research attention.

A seminal paper by Gatehouse et al. [7] explored the relationship between cognition and aided speech recognition performance with short and long RTs. In Gatehouse et al.’s study, hearing aid users’ cognitive abilities were evaluated using a visual letter monitoring test [6]. Their aided speech recognition performance when using short and long RT settings (40 ms versus 640 ms) was evaluated using the four alternative auditory feature (FAAF) test [15] after a 10-week acclimatization period for each RT setting [6]. The correlation between the benefit of short over long RT and cognitive scores was examined, and the correlation coefficient was 0.30. Based on this correlation, Gatehouse and colleagues suggested that listeners with greater cognitive abilities perform better with short RTs, while listeners with poorer cognitive abilities perform better with long RTs. This finding has shed important light on the role of cognition in aided hearing and inspired researchers to further investigate this topic.
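The benefit-versus-cognition correlation described above can be sketched as follows. This is only an illustration, not the analysis used by Gatehouse et al.: the function names and the five-listener data set are hypothetical, and scores are assumed to be on a scale where higher values mean better performance.

```python
import math

def short_rt_benefit(short_scores, long_scores):
    """Benefit of short over long RT for each listener (short minus long)."""
    return [s - l for s, l in zip(short_scores, long_scores)]

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data for five listeners (not taken from any study)
cognitive_scores = [12.0, 15.0, 9.0, 20.0, 17.0]
short_rt_scores = [70.0, 74.0, 66.0, 80.0, 75.0]
long_rt_scores = [69.0, 71.0, 68.0, 74.0, 72.0]

benefit = short_rt_benefit(short_rt_scores, long_rt_scores)
r = pearson_r(cognitive_scores, benefit)
print(f"r = {r:.2f}")
```

A positive coefficient means that listeners with higher cognitive scores tend to gain more from the short RT than from the long RT. For a measure on which lower values are better, such as a signal-to-noise ratio threshold, the subtraction in `short_rt_benefit` would need to be reversed.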

Later studies on this topic used the same hearing aid technologies and compared the same RT settings. Among these studies, Gatehouse’s finding was bolstered by Lunner and Sundewall-Thoren [8] and Rudner et al. [11], who used the same cognitive test but different speech recognition tests in different languages. However, Gatehouse’s finding could not be replicated by other studies [4,5] with a similar research design. These studies found that RT is crucial only for hearing aid users with low cognitive abilities.

Why could Gatehouse’s finding not be replicated in some studies? Cox and Xu [4] suggested that one factor that could potentially underlie the inconsistent findings is the linguistic context of speech test materials. In these studies, different speech recognition test materials have been used. Some studies have used sentence tests (e.g., [5]), whereas others have used word-based tests (e.g., [7]). Different test materials provide different amounts of linguistic context. Target words in test materials with high linguistic context are highly predictable, whereas target words in test materials with low linguistic context are difficult or even impossible to predict. Linguistic context provides an additional source of information that supplements the sensory information [16]. Thus, when using speech recognition test materials with high linguistic context, top-down processing may be engaged to assist in speech understanding, in addition to the bottom-up processing that relies on audibility. There is evidence that the ability to use linguistic context in assisting speech understanding is associated, at least to some extent, with hearing aid users’ working memory capacity [17]. Therefore, it is reasonable to expect that the linguistic context of speech recognition test materials impacts the relationship between hearing aid wearers’ cognitive abilities (primarily working memory capacity) and their aided speech recognition performance with different RTs.

The primary goal of the current study was to extend this line of investigation by addressing the relationship between the cognitive abilities of listeners with hearing impairment and their aided speech understanding performance with varying RTs when speech recognition tests with different amounts of linguistic context were used. The specific research questions were as follows:

- (1) Is the finding reported in Gatehouse et al.’s [7] study replicable using an equivalent speech recognition test?

- (2) What is the relationship between cognitive abilities and aided speech recognition performance in noise with short and long release times?

- (3) What is the effect of the linguistic context of speech recognition test material on measuring this relationship?

4. Discussion

Existing research has provided support for the effects of compression RT setting on aided speech recognition performance and has examined the benefits of using short and long RTs. The hearing aids used in previous studies on this topic had two compression channels, with nominal short and long RT settings of 40 ms and 640 ms, respectively. The present study used hearing aids with newer technology from a different manufacturer and a different processing chip. These hearing aids had 12 compression channels, with nominal short and long RT settings of 50 ms and 2000 ms, respectively. To our knowledge, the present study is the only one in this line of research to assess the relationship between cognitive abilities and RT using hearing aid technology from a different manufacturer. The results of the present study, together with the findings of the previous studies, make this line of investigation more generalizable across different hearing aids.

4.1. Replication of the Result from the Gatehouse et al. Study

Gatehouse and colleagues reported a significant correlation of 0.30 between hearing aid users’ cognitive abilities and the speech recognition benefit of short over long RT [7]. Based on this finding, they suggested a connection between cognitive abilities and speech recognition performance with different RT processing [7]. They suggested that hearing aid users with higher cognitive abilities had better speech recognition performance with short RT processing. To allow comparison with Gatehouse’s finding, the present study adopted the same correlation analyses for each of the three speech recognition tests. Although the correlations in the present study were not statistically significant (due to the smaller number of participants), the correlation coefficients obtained with the AFAAF test, which is essentially equivalent to the FAAF test used in Gatehouse et al.’s study [7], were of essentially the same magnitude as the one reported in that study [7]. Therefore, the present study replicated the result of Gatehouse et al. [7] using an equivalent speech recognition test.
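The point that a correlation of the same magnitude can be significant in a larger sample but not in a smaller one can be illustrated with a short calculation. The sketch below uses the Fisher z approximation, which is a choice made here for simplicity and not necessarily the test used in either study, and the two sample sizes (20 and 50) are hypothetical values chosen only to show the effect.

```python
import math

def fisher_z_p_value(r, n):
    """Approximate two-sided p-value for a Pearson correlation r with n listeners.

    Uses the Fisher z transformation: atanh(r) * sqrt(n - 3) is treated as a
    standard normal deviate under the null hypothesis of zero correlation.
    """
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2.0))

r = 0.30  # magnitude of the correlation discussed in the text
for n in (20, 50):  # hypothetical sample sizes
    print(n, round(fisher_z_p_value(r, n), 3))
```

Under this approximation, a correlation of 0.30 falls short of the conventional 0.05 criterion with 20 listeners but meets it with 50, which is why the magnitude of the coefficient, and not only its significance, is informative when comparing studies of different sizes.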

4.2. Speech Recognition Performance of Listeners with Different Cognitive Abilities

Previous research has suggested that higher cognitive abilities are associated with better speech understanding performance (e.g., [31]). However, this association was not observed in all existing studies of cognitive ability and speech understanding. This inconsistency has also been found in previous studies examining the relationship between cognitive abilities and aided speech recognition with different RTs. Lunner and Sundewall-Thoren [8] reported a significant main effect of cognitive group, showing that the cognitively high performing group had significantly better speech recognition performance than the cognitively low performing group. However, Gatehouse et al. [6] and Rudner, Foo, Rönnberg, and Lunner [10] did not find a main effect of cognitive group. It is worth noting that the three previous studies used the same cognitive test to quantify hearing aid users’ cognitive abilities but used different speech recognition tests to evaluate their aided speech recognition performance. The use of different speech recognition tests in previous research was suspected to partially account for these observations.

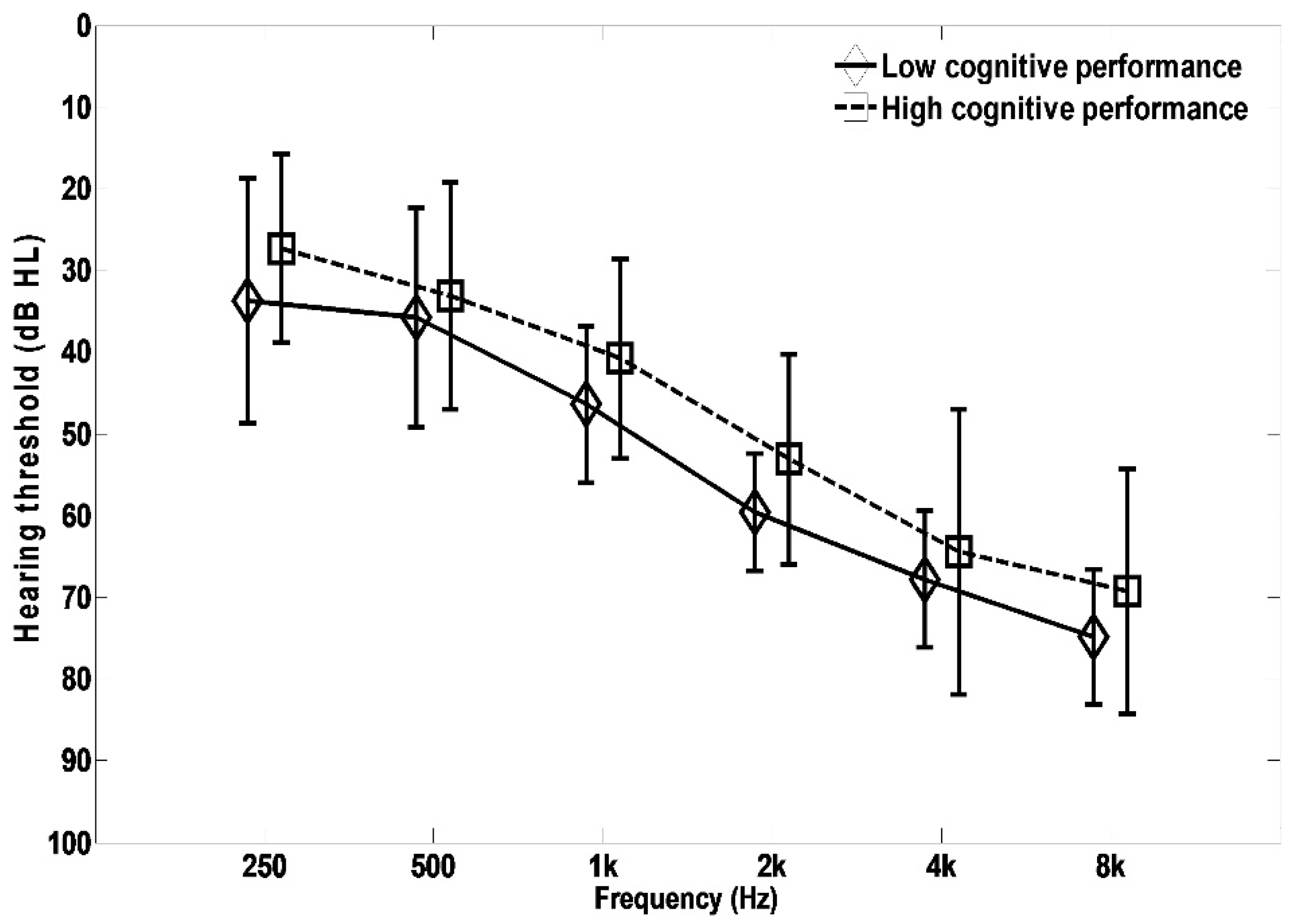

The present study did not find a significant main effect of cognitive group in the full model ANOVA (Table 3), suggesting that the high cognitive performance group was not significantly different from the low cognitive performance group in aided speech recognition performance, regardless of speech recognition test. However, further analyses of each speech recognition test revealed marginally significant main effects of cognitive group with the AFAAF and the BKB-SIN tests (Table 3), indicating that hearing aid users with high cognitive abilities have better speech recognition performance than their counterparts with low cognitive abilities. This finding supports the suspicion, based on the findings of the previous studies, that the speech recognition test influences the main effect of cognitive group. The findings from the present study, together with the previous studies, suggest that the effect of cognitive ability on speech recognition performance may depend upon the chosen speech recognition test, at least to some extent.

4.3. Relationship between Cognitive Abilities and Speech Recognition with Short and Long RT

The present study examined the interaction effects between cognitive abilities, RT, and linguistic context to explore the relationship between cognitive abilities and aided speech recognition performance in noise with different RTs. The results of the interaction between cognitive ability and RT indicated that hearing aid users with high cognitive abilities performed better with a short RT than with a long RT, irrespective of speech recognition test (Table 2 and Figure 7). However, the present study found no significant effect of RT on speech recognition performance for either cognitive group with any of the three tests (Figure 8). In addition, the results of the interaction between linguistic context and RT showed that hearing aid users as a group did not have significantly different speech recognition performance between the short and the long RTs when using any of the three tests (Figure 9a). Moreover, none of the speech recognition tests produced significantly different performance between the two RTs for either cognitive group (Figure 9b,c).

In the present study, hearing aid users with high cognitive abilities performed significantly better than did their counterparts with low cognitive abilities when using short RT processing (Figure 8). This pattern was only observed for the AFAAF test. This suggests that the association between high cognitive abilities and short RT processing may only hold when using certain types of speech recognition tests. To examine this factor, the present study incorporated three speech recognition tests with different amounts of linguistic context. The BKB-SIN test and the WIN test are both open-set tests, categorized as the context-high and the context-low tests, respectively. As described earlier, the BKB-SIN test is a sentence test and allows for substantial top-down processing to understand words and sentences. The WIN test, however, is a word-based test. Listeners must rely on bottom-up processing to understand the test words because the linguistic context in the WIN test is limited. The AFAAF test is very different from the BKB-SIN test and the WIN test. First, it is a closed-set word-based test with a fixed carrier phrase. Second, the displayed alternatives differ in one or two phonological features (minimal pairs), which can be very confusing. Considering the characteristics of the AFAAF test, it is assumed that some predictive cues from the displayed alternatives are available to listeners to assist in speech recognition. Therefore, in the planning phase of the present study, the AFAAF test was considered an intermediary between the BKB-SIN test and the WIN test. Assuming an effect of linguistic context on the relationship between cognitive abilities and RT, speech recognition performance with the three tests should reveal a pattern that follows the order of the amount of linguistic context or predictive cues. However, the findings from the present study did not show such a pattern. Instead, the AFAAF test and the BKB-SIN test showed the same pattern regarding the relationship between cognitive abilities and speech understanding with short and long RTs, while the WIN test showed a pattern that was opposite to the other two speech recognition tests. It is also interesting that the AFAAF test produced the greatest effect on the difference between the two cognitive groups. Moreover, the BKB-SIN test did not differ substantially from the WIN test in patterns of speech recognition performance in terms of the factors of cognitive ability and RT (Figure 9b,c).

The AFAAF test and the BKB-SIN test produced similar speech recognition patterns, suggesting that these two tests affected the relationship between cognitive abilities and speech understanding with different RTs the same way. This is probably because both speech recognition tests allow top-down processing to facilitate speech understanding in addition to audibility. For the BKB-SIN test, the top-down processing is involved probably because of its context-rich test sentences. Regarding speech recognition, working memory is a capacity-limited system that both stores recent phonological information in short-term memory and simultaneously processes the information with knowledge stored in long-term memory. With the BKB-SIN test, working memory capacity is linked to speech understanding. However, since the contextual cues are involved in speech understanding, RT may become less important [4]. The AFAAF test engages top-down processing via a certain amount of predictive cues from displayed alternatives. Notably, the AFAAF test produced the greatest effect on the difference between the two cognitive groups. This suggests that the AFAAF test has some other unique characteristics in addition to linguistic context, which makes it sensitive to differences in cognitive abilities. This may also explain why Gatehouse’s study, which used the FAAF test, could not be replicated by some similar studies. Later sections offer further discussion about the characteristics of the AFAAF test and their possible impacts on speech understanding.

In contrast to the AFAAF test and the BKB-SIN test, the WIN test is an open-set monosyllabic word test that has limited linguistic context. Understanding the test words masked by noise mostly relies on audibility. Thus, speech recognition is mainly based on bottom-up processing. Consequently, cognitive abilities may not be involved as much as with the other two speech recognition tests. It is interesting that the short and the long RT processing did not reveal substantial and statistically significant differences in speech recognition performance for either group for both the BKB-SIN test and the WIN test (Figure 9b,c). This implies that a hearing aid user’s speech recognition performance in noise with different RT settings probably does not depend on the linguistic context of speech recognition test material. Thus, it can be argued that the linguistic context of speech recognition tests may not be considered a critical factor in assessing the relationship between cognitive abilities and understanding speech with different RTs. The results from the present study do not support the hypothesis proposed by Cox and Xu [4]. Surprisingly, the AFAAF test produced the greatest difference between the two cognitive groups. It is reasonable to speculate that other factors embedded in speech recognition tests influence the assessment of the relationship of interest.

4.4. What Makes the AFAAF a Sensitive Test to Detect Cognitive Difference?

The AFAAF test is a word-based closed-set test. Fundamental and underlying differences exist between open-set and closed-set tests, in addition to chance performance and the way the two types of tests are administered. Clopper, Pisoni, and Tierney [32] suggested that crucial differences between the two test formats are due to the nature of the specific task demands imposed on lexical access of phonetically similar words. In open-set tests, listeners must compare the target word to all possible candidate words in their lexical memories, whereas in closed-set tests, the listeners only need to make a limited number of comparisons using the provided options. Therefore, the difference in task demand of the two test formats is, in essence, due to differences in lexical competition effect.

At first glance, it seems obvious that recognizing the same words requires more word comparisons in open-set tests compared to closed-set tests. As a result, open-set tests are more difficult compared to closed-set tests. However, lexical competition in closed-set tests could increase if the degree of confusion between response alternatives increased [32]. According to Clopper et al. [32], greater phonetic confusability among the response alternatives is associated with greater lexical competition in a closed-set test because it requires an effort from a listener to differentiate subtle phonetic differences.

Phonemes are confusable when they are phonologically similar. When the task is to distinguish the target item from several phonologically similar items, the probability of losing a phonological feature, which discriminates the target item from other items of the memory set, will be greatest when the number of discriminating features is small [33]. For example, it is difficult to distinguish the target word from the given minimal pair of response alternatives because only one or two phonemes differentiate a pair of words. Comparing phonologically similar words can affect short-term memory (STM). Evidence shows that the similarity between phonemes leads to STM confusions of English vowels and consonants [34,35].

In addition to confusable phonemes, visual information about the displayed response alternatives is stored in a listener’s short-term memory for comparison. It has been proposed that this visual information relates to a listener’s cognitive ability, although there is little direct evidence to support this proposition. Previous research on visual word recognition shed some light on this subject. Gathercole and Baddeley [36] reviewed studies on visual word recognition performance and suggested an association with working memory when certain types of word recognition tasks (e.g., rhyme judgment) are required. Comparing two phonological representations requires information storage, which can impose a substantial memory load.

As described earlier, working memory includes short-term memory (temporal storage) and other processing mechanisms (central executive) that help make use of short-term memory [13,37]. The confusion induced by similar phonemes in short-term memory could potentially influence the function of working memory. In addition, reading phonologically similar response alternatives may increase a hearing aid user’s working memory load. Since working memory is one aspect of cognitive processing [13], it is likely to be heavily involved in distinguishing the target word from the non-target alternatives when they are phonologically similar to each other. It is therefore reasonable to argue that listeners with higher cognitive abilities could differentiate similar phonemes and identify the target words more accurately than their counterparts with lower cognitive abilities.

According to the above discussion, among the three speech recognition tests in the present study, the AFAAF test appears to be the most sensitive test in detecting differences in cognitive ability. One of the reasons is that the four alternatives on the test are constructed based on a minimal pair structure, so the alternatives are very similar in terms of phonemes. Thus, the lexical competition involved in differentiating subtle phonological differences between target words and the corresponding non-target alternatives is high. This requires a higher cognitive capability to process the confusable lexical information stored in short-term memory. Moreover, the minimal pair structure of the alternatives also makes storage and comparison of the displayed alternatives demanding, requiring higher cognitive ability. Consequently, when using the AFAAF test, speech recognition performance could be considerably better among listeners with higher cognitive abilities.

Because the BKB-SIN test and the WIN test are two open-set tests, listeners are required to compare the target words to all possible candidate words in their lexical memories. These two tests differ substantially in their amount of linguistic context. For the BKB-SIN test, it is probable that the effect of linguistic context overcomes the effect of lexical competition, as the linguistic context is substantial. In contrast, the WIN test has limited linguistic context, and the lexical competition is truly determined based on the comparisons with the listener’s lexical memory. Therefore, in the present study, it is not appropriate to classify the AFAAF test as an intermediary test among the three speech recognition tests in terms of linguistic context. It is clear that the AFAAF test is very different from the other two tests.

4.5. What RT Should Be Prescribed?

One clinically relevant question that remains unclear is which compression RT, a shorter one or a longer one, should be prescribed for hearing aid users. Previous research has suggested that a hearing aid user’s cognitive ability is one possible predictor that can assist clinicians in selecting an appropriate compression RT. However, the results were not consistent. The results from the present study failed to indicate statistically significant benefits with short or long RT for either cognitive group. Table 1 shows that the two mean SNR50 values for each speech recognition test are similar, suggesting that adjusting release time is neither beneficial nor detrimental when listening to speech in noise. Therefore, at this moment, either a short or a long RT could be prescribed for hearing aid users, regardless of their cognitive abilities.

Nonetheless, this recommendation is still open to debate. The results from the present study suggest that the selection of the most advantageous RT for a given hearing aid user might be dependent on factors other than cognitive ability.

4.6. Limitations

There are some limitations to this study. First, in addition to linguistic context, listeners may use other cues, such as coarticulation cues, prosodic cues, and prior knowledge about the topic, to assist in understanding speech when listening to sentences or discourses (e.g., [38]). The effect of these cues was not controlled in the present study. Second, aided speech recognition performance measured in the present study may be impacted by WDRC. When using different speech recognition test materials and SNRs, WDRC amplifiers could behave differently due to the varying amplitude of the test stimuli, resulting in different gains for keywords in a sentence test versus in a word-based test. The impact of WDRC may be especially pronounced for the AFAAF and the WIN tests because both of them use a constant carrier phrase and place the keyword at the same position. Therefore, the effects of WDRC might not have been the same for the three speech recognition tests used in this study. Although it is beyond the scope of the present study, some acoustic measurements, for example, the temporal envelope of the hearing aid outputs for the three tests, can, to some extent, facilitate the evaluation of the impact of WDRC. Changes in the temporal envelopes of amplified speech in the presence of noise will result in different output SNRs when using different compression time constants [39], which will have an impact on audibility and on how many speech cues can be utilized for top-down and bottom-up processing. Future research regarding the effect of linguistic context of speech recognition tests should take WDRC behavior into consideration.