Can Music Enhance Working Memory and Speech in Noise Perception in Cochlear Implant Users? Design Protocol for a Randomized Controlled Behavioral and Electrophysiological Study

Abstract

1. Introduction

1.1. Music Training Improves Music Appreciation and Speech Understanding in CI Users

1.2. Music Training for Better Speech Understanding in Noise (SIN) with CI

1.3. Cognitive Aspects of Speech Understanding

1.4. Sentence Final Word Identification and Recall Test (SWIR)

1.5. Electrophysiological Measures Related to Speech Encoding, Working Memory, Attention, and Listening Effort

1.6. The Rationale for the Study

2. Research Questions

- Experiment 1: Effects of musical training on speech understanding in noise

  - Research questions:

    - (1) Does music training focused separately on pitch, timbre, and rhythm improve behavioral speech-in-noise measures (a greater percent correct in the SWIR task)?

    - (2) Does pitch- and timbre-based training result in greater improvements in behavioral speech-in-noise measures (a greater percent correct in the SWIR task) than rhythm-based training?
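
As a rough illustration of how the Experiment 1 outcome could be summarized, the sketch below scores final-word identification as percent correct and averages pre-to-post gains per training group. It is a hypothetical descriptive sketch, not the protocol's analysis: the exact-match scoring rule, the record layout, and the group labels are assumptions, and the inferential statistics belonging to Section 3.4 are not reproduced here.

```python
from collections import defaultdict
from statistics import mean


def percent_correct(targets, responses):
    """Percent of sentence-final words identified correctly.

    Assumption: a response counts as correct only if it matches the target
    word exactly, ignoring case and surrounding whitespace.
    """
    if len(targets) != len(responses):
        raise ValueError("targets and responses must have the same length")
    if not targets:
        raise ValueError("at least one target word is required")
    hits = sum(
        t.strip().lower() == r.strip().lower() for t, r in zip(targets, responses)
    )
    return 100.0 * hits / len(targets)


def mean_gain_by_group(records):
    """Mean post-minus-pre change in percent correct for each training group.

    `records` is an iterable of (participant_id, group, pre_pct, post_pct)
    tuples; this layout and the group labels are hypothetical.
    """
    gains = defaultdict(list)
    for _participant, group, pre_pct, post_pct in records:
        gains[group].append(post_pct - pre_pct)
    return {group: mean(values) for group, values in gains.items()}


# Usage with made-up numbers (not study data):
print(percent_correct(["boat", "house", "green", "table"],
                      ["boat", "House", "grin", "table"]))  # 75.0
print(mean_gain_by_group([
    ("p01", "pitch", 50.0, 70.0),
    ("p02", "pitch", 40.0, 50.0),
    ("p03", "rhythm", 60.0, 65.0),
]))  # {'pitch': 15.0, 'rhythm': 5.0}
```

Averaging gains per group keeps the comparison in research question (2) explicit, but a real analysis would model baseline differences rather than compare raw means.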

- Experiment 2: Effects of musical training on working memory performance

  - Research questions:

    - (1) Does music training with a focus on pitch, timbre, and rhythm improve behavioral measures of working memory performance in noise (a greater percentage of final words recalled in the SWIR working memory task)?

    - (2) Does pitch- and timbre-based training result in greater improvements in behavioral measures of working memory performance in noise (a greater percentage of final words recalled in the SWIR working memory task) than rhythm-based training?
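
The Experiment 2 recall measure could be scored along the lines of the hypothetical sketch below, which assumes free recall: after each list, any sentence-final word reported in any order counts once, repeats are ignored, and words that were not in the list (intrusions) score nothing. The per-position mask is included because it also allows later breakdowns by serial position; the list contents and the matching rule are illustrative assumptions, not the published SWIR scoring procedure.

```python
def recall_mask(list_targets, recalled):
    """Return True for each serial position whose final word was recalled.

    Assumptions: recall order is free, matching ignores case and surrounding
    whitespace, repeated responses count once, and intrusions are ignored.
    """
    targets = [w.strip().lower() for w in list_targets]
    if len(set(targets)) != len(targets):
        raise ValueError("final words within one list are assumed to be unique")
    reported = {w.strip().lower() for w in recalled}
    return [word in reported for word in targets]


def percent_recalled(list_targets, recalled):
    """Percent of the list's final words that were recalled."""
    mask = recall_mask(list_targets, recalled)
    if not mask:
        raise ValueError("the list must contain at least one target word")
    return 100.0 * sum(mask) / len(mask)


# Usage with a made-up eight-sentence list (not SWIR material):
targets = ["boat", "house", "green", "table", "river", "coat", "bread", "chair"]
responses = ["Table", "boat", "boat", "dog", "chair"]
print(recall_mask(targets, responses))
# [True, False, False, True, False, False, False, True]
print(percent_recalled(targets, responses))  # 37.5
```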

- Experiment 3: EEG measures of the effects of musical training on speech understanding in noise and working memory performance

  - Research questions:

    - (1) Does music training alter EEG measures (alpha oscillation modulation) during the memory recall task of the SWIR?

    - (2) Does music training alter neural tracking of speech during the sentence understanding task of the SWIR?
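
Alpha modulation of the kind named in Experiment 3 is commonly expressed as an event-related desynchronization/synchronization (ERD/ERS) percentage: band power in an activity window A is compared with power in a reference window R as (A − R)/R × 100, so negative values indicate a power decrease (ERD) and positive values an increase (ERS). The sketch below computes this for a single EEG channel using a zero-phase band-pass filter and the squared Hilbert envelope. The 8–12 Hz band, the filter order, the window boundaries, and the epoch layout are assumptions for illustration rather than the protocol's parameters, and the artifact handling real CI recordings would need is omitted.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt


def band_power(epochs, fs, band=(8.0, 12.0), order=4):
    """Instantaneous band power (squared Hilbert envelope) per trial.

    `epochs` has shape (n_trials, n_samples) for a single channel.
    The default 8-12 Hz alpha band and filter order are assumptions.
    """
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    filtered = sosfiltfilt(sos, epochs, axis=-1)  # zero-phase band-pass
    return np.abs(hilbert(filtered, axis=-1)) ** 2


def erd_ers_percent(epochs, fs, tmin, reference, activity, band=(8.0, 12.0)):
    """ERD/ERS percentage (A - R) / R * 100 from trial-averaged band power.

    `tmin` is the time (s) of the first sample; `reference` and `activity`
    are (start, stop) windows in seconds on that time axis.
    """
    power = band_power(np.asarray(epochs, dtype=float), fs, band).mean(axis=0)
    times = tmin + np.arange(power.shape[-1]) / fs

    def window_mean(span):
        start, stop = span
        mask = (times >= start) & (times < stop)
        if not mask.any():
            raise ValueError(f"window {span} contains no samples")
        return power[mask].mean()

    ref = window_mean(reference)
    act = window_mean(activity)
    return (act - ref) / ref * 100.0


# Synthetic check (not EEG data): a 10 Hz sinusoid whose amplitude doubles at
# t = 1 s should give roughly +300 %, because power scales with amplitude squared.
fs = 250.0
times = -3.0 + np.arange(int(8 * fs)) / fs
signal = np.where(times < 1.0, 1.0, 2.0) * np.sin(2 * np.pi * 10.0 * times)
epochs = np.tile(signal, (5, 1))
print(round(erd_ers_percent(epochs, fs, -3.0, (-2.0, -0.5), (2.5, 4.0))))
```

The windows in the example sit well away from the epoch edges and from the amplitude step, because filtering and the Hilbert transform smear power changes over a few hundred milliseconds.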

3. Materials and Methods

3.1. Participants and Sample Size

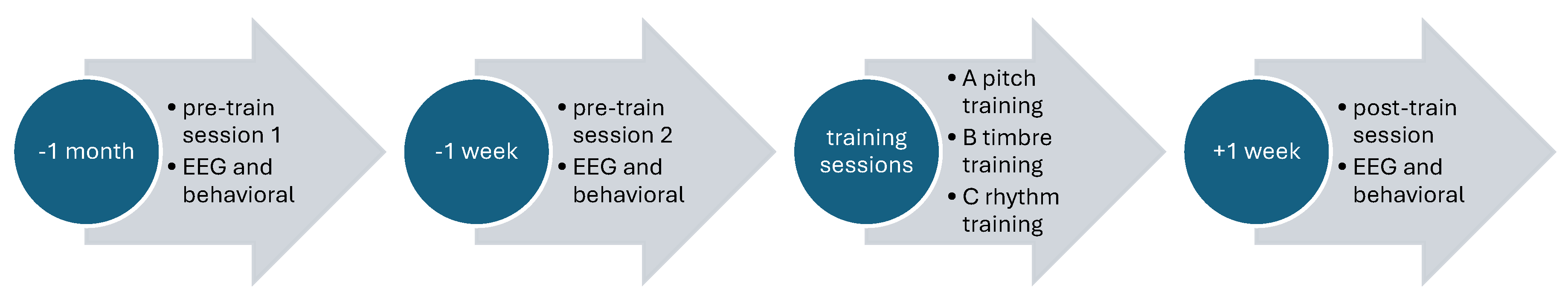

3.2. EEG Recording and Study Procedure

3.3. The Procedure of the Sentence Final Word Identification and Recall Test (SWIR)

3.4. Statistical Analysis

3.5. Instruments and Music Material

4. Expected Outcome

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mertel, K.; Dimitrijevic, A.; Thaut, M. Can Music Enhance Working Memory and Speech in Noise Perception in Cochlear Implant Users? Design Protocol for a Randomized Controlled Behavioral and Electrophysiological Study. Audiol. Res. 2024, 14, 611-624. https://doi.org/10.3390/audiolres14040052


Chicago/Turabian Style: Mertel, Kathrin, Andrew Dimitrijevic, and Michael Thaut. 2024. "Can Music Enhance Working Memory and Speech in Noise Perception in Cochlear Implant Users? Design Protocol for a Randomized Controlled Behavioral and Electrophysiological Study" Audiology Research 14, no. 4: 611-624. https://doi.org/10.3390/audiolres14040052