1. Introduction

Laparoscopic surgery involves the use of a thin, tubular device called a laparoscope that is inserted through a keyhole incision into the abdomen to perform operations that used to require the body cavity to be opened. Because the procedure involves smaller incisions, laparoscopic surgery promises less trauma, shorter hospital stays and faster recoveries [1]. The laparoscope itself is a long, rigid fiber-optic instrument that is inserted into the body to view internal organs and structures. It is equipped with a miniature digital camera mounted at the end of the tube together with a light source. The surgery is guided by the close-up video imaging provided by such a camera and is viewed externally on a monitor.

Laparoscopy is a technically complicated surgery that requires excellent hand-eye coordination and an almost intuitive ability to navigate delicate internal structures. The surgeon must also rely on a patient-side assistant to position the camera correctly. Furthermore, the limited field of view (FoV) afforded by a single-camera laparoscope makes it difficult to navigate inside the body cavity to reach the targeted surgical region, or to monitor the surrounding anatomy as in an open-cavity surgery.

Several improvements to the current design of the single-camera laparoscope have been proposed. Most of these proposals focus on replacing the single-camera assembly with a pair of binocular cameras to provide 3D vision [2,3,4]. Bhayani et al. [5] and Tanagho et al. [6] reported human test results showing that a 3D visualization system makes it easier to perform a standardized laparoscopic task than a conventional 2D visualization system. Recently, several commercial 3D laparoscopy products have reached the market, and quite a few test results have been reported comparing the effectiveness of 3D versus 2D laparoscopic surgeries [7,8,9,10,11,12]. Some of these systems are robot-assisted [9,13]. While some wide-FoV endoscopes can reach a 100–170° FoV, they are prone to undesirable distortion [14] and may require image processing techniques to remove the distortion before the video footage can be used.

A second direction for improving the laparoscope is to incorporate multiple (more than two) cameras to enlarge the FoV. The MARVEL system [15,16,17] uses multiple wirelessly controlled pan/tilt/zoom camera modules to facilitate intra-abdominal visualization. In the MARVEL system, cameras need to be individually inserted through separate incisions (other than surgical ports) into the abdomen and pinned via needles to the inside of the abdominal wall. Anderson et al. proposed a VTEI system [18,19] with a static micro-camera array that still needs to be surgically inserted into the abdomen and attached directly to the abdominal wall. Both the MARVEL and the VTEI systems aim to provide an enlarged FoV comparable to that of an open-cavity surgery. In addition to the MARVEL and the VTEI systems, a multi-view visualization system was developed to enlarge the FoV by using two miniaturized cameras and a conventional endoscope [20]. This visualization system reduced both the procedure time and the number of commands for operating the robotic endoscope holder. However, it can only enlarge the FoV in one direction due to the limitation in camera placement, and it was not able to combine the multiple images into a single large one, which could degrade the efficiency of operation.

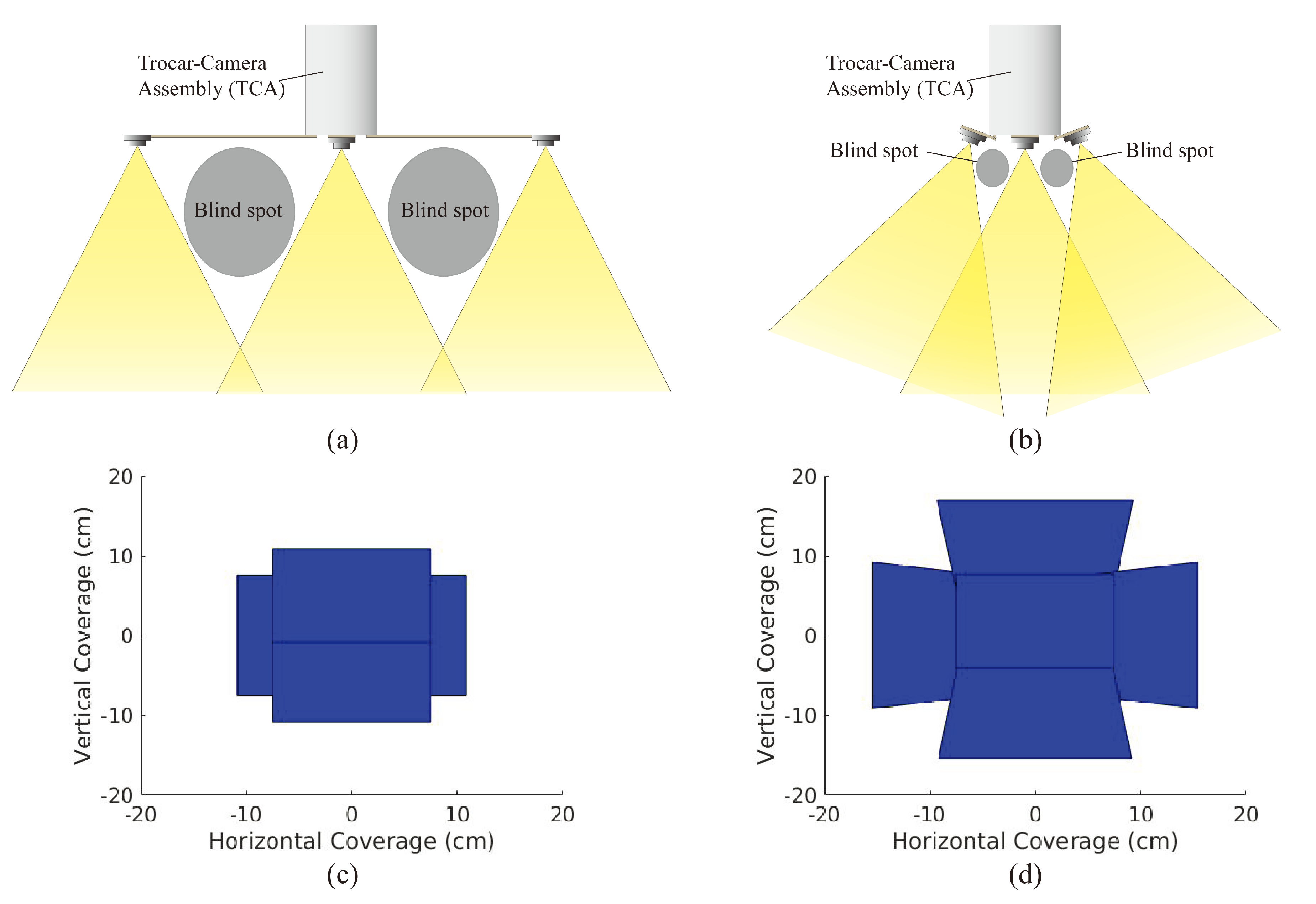

To alleviate the limited FoV and low efficiency of operation in traditional laparoscopic visualization, we previously demonstrated a trocar-camera assembly (TCA) [21]. However, the large spacing between the miniaturized cameras causes large blind spots and can limit operation of the TCA if the cameras impact the abdominal wall (Figure 1a). Here, we demonstrate an improved design of the TCA that has a tilted micro-camera array to mitigate the blind-spot issues of the previous version. Figure 1b shows that by properly tilting the orientation of the cameras in this new design, the blind spots and the spacing between cameras can be significantly reduced.

Changing the camera array from a 75 mm arm length with 0° camera rotation to an 18 mm arm length with 20° camera rotation reduces the depth at which camera overlap begins to occur from 13 cm to 10 cm, thus allowing for significantly more usable scene depth and fewer blind spots. Furthermore, as can be seen in Figure 1c,d, we can generate a 2D FoV by intersecting the viewing frustum of each camera in the array with the bottom of the FLS trainer box (represented by a plane at a depth of 16.5 cm). As the camera array does not have the same rectangular FoV frustum that single-camera systems have, the traditional vertical and horizontal solid angles used to measure FoV do not apply. Instead, the area of this 2D FoV serves as an equivalent measure.
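The dependence of the overlap depth on arm length and tilt can be illustrated with a simplified 2D pinhole model. The sketch below is not the authors' algorithm: it assumes two opposite cameras tilted outward from the array centerline, and it assumes a camera half-FoV of 30°, a value that is not stated in the text. The function name `overlap_depth_mm` and its parameters are hypothetical. Under these assumptions, the model gives depths close to the 13 cm and 10 cm figures quoted above.

```python
import math

def overlap_depth_mm(arm_length_mm, tilt_deg, half_fov_deg):
    """Depth at which the inner FoV edge of an outward-tilted camera reaches
    the array centerline (simplified 2D pinhole model, an assumption)."""
    # Angle of the inner FoV edge measured toward the centerline.
    inward_deg = half_fov_deg - tilt_deg
    if inward_deg <= 0:
        return math.inf  # the inner edge never reaches the centerline
    return arm_length_mm / math.tan(math.radians(inward_deg))

HALF_FOV_DEG = 30.0  # assumed value, not taken from the text

# Previous design: 75 mm arm, no tilt.
print(round(overlap_depth_mm(75, 0, HALF_FOV_DEG) / 10, 1))   # 13.0 (cm)
# New design: 18 mm arm, 20 degree tilt.
print(round(overlap_depth_mm(18, 20, HALF_FOV_DEG) / 10, 1))  # 10.2 (cm)
```

In this model the shorter arm more than compensates for the outward tilt, which is why the overlap starts closer to the cameras even though each camera points away from the center.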

This micro-camera array with tilted cameras provides a larger FoV than that achieved with non-tilted cameras (Figure 1c,d). For deployment of the micro-camera array, this new design of the TCA can utilize the same mechanical system demonstrated in Reference [21], except for the foldable camera support that consists of mechanical arms, a camera holder, and torsion springs. Among the components of the foldable camera support, the torsion springs need to be redesigned to achieve the desired tilting angle of the cameras because the initial angle of the torsion springs determines the tilting angle of the cameras. Once a surgical port is inserted into the patient’s abdominal cavity, the miniaturized cameras are flared out from the in vivo end of the port by a specially designed mechanical system that consists of several micro-machined components. As such, the surgical port still allows the passage of other surgical instruments after camera deployment. This advantage of the TCA is maintained in the new design. Since the abdomen is inflated with CO2 during the operation, and the ports are on the anterior abdominal wall, there is ample working space within the abdominal cavity to deploy the miniaturized cameras. After the completion of the operation, the mechanical system that holds the miniaturized cameras is folded back inside the port and the whole trocar, including the micro-camera array, is retrieved.

In this work, we hypothesize that if an array of cameras can be deployed to provide a stitched video with an expanded FoV and small blind spots, the time required to perform multiple operations at different spots can be significantly reduced. While this seems to be intuitively correct, to the best of our knowledge, no empirical study has been performed to validate this hypothesis.

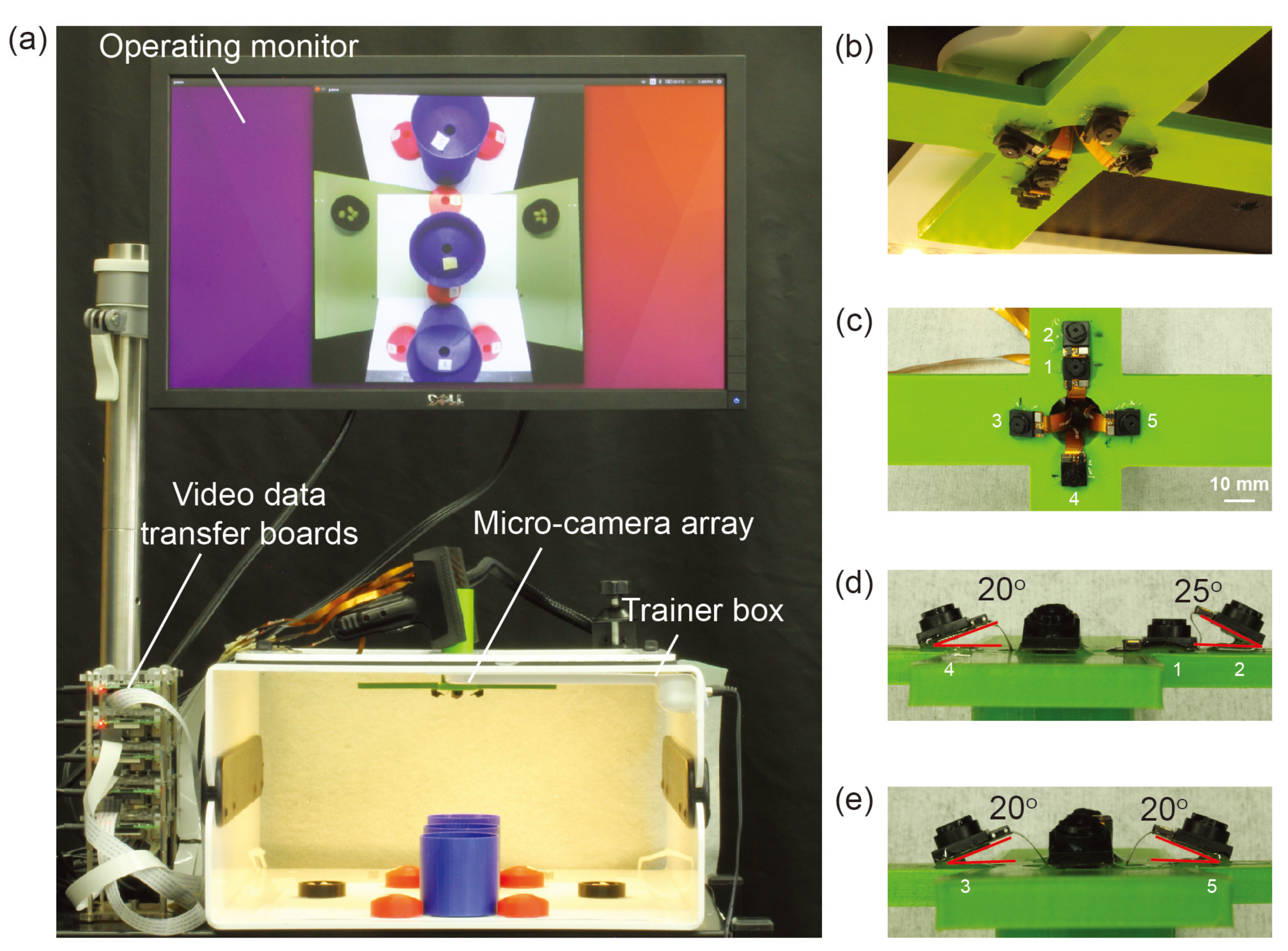

To validate this hypothesis, we conducted an experiment that compares the time taken to complete a laparoscopic surgical training task using a proof-of-concept micro-camera array against that of using a conventional single-camera laparoscope. We utilized a simplified version of the TCA which contained cameras with optimized tilting angles but without the deployment mechanism (Figure 2) [21]. Instead, this micro-camera array was permanently installed on a laparoscopy surgery trainer box. We focus on investigating the new camera placement and the validation of the above hypothesis with surgeons, rather than repeating the work on the mechanical deployment system previously developed and reported in Reference [21]. The video streams of the micro-cameras were transmitted to an outside computer server where they were stitched to form a video mosaic stream with an expanded FoV.

2. Materials and Methods

2.1. Micro-Camera Array Visualization System

The micro-camera array visualization system consisted of a micro-camera array, a trainer box, video data transfer boards, an operating monitor, and a laptop implementing a video stitching algorithm (Figure 2a). We used Raspberry Pi 3 model-B boards (Adafruit, New York, NY, USA) as video data transfer boards. Five miniaturized cameras (Raspberry Pi MINI camera module (FD5647-500WPX-V2.0), RoarKit, AliExpress, China) were used to form a micro-camera array. The cameras were attached to an in-house designed, 3D printed socket to mount the micro-camera array in the trainer box (Figure 2b). We placed four cameras about 18 mm and one camera about 28 mm away from the center of the port (Figure 2c). The non-tilted camera 1 in Figure 2c provided a central view of the field in the trainer box and the four tilted cameras (2 to 5) provided four different side views. To enlarge the FoV and reduce blind spots generated by a lack of detection overlap between cameras, we tilted the four cameras (2 to 5) based on the angles calculated using an algorithm we developed earlier [22]. To find the optimized angle of the cameras, we calculated the FoV of the micro-camera array as a function of the tilt angle of the cameras (Figure 3) and found that the optimized angle is 20°. A tilt angle of 10° does not maximize the FoV, and holes begin to form in the FoV when the tilt angle is 30°. To minimize the effect of parallax on the visualization, we reduced the arm length to the minimum possible. As noted in our previous work [22], the total FoV increases as the camera angle increases. Thus we chose to place the cameras such that these angles are maximized while maintaining sufficient overlap to satisfy the stitching constraints. Camera 1 was placed at a 0° angle to the micro-camera array (Figure 2d). Cameras 3, 4, and 5 were placed at an angle of 20° to the micro-camera array (Figure 2d,e). Camera 2, being closer to camera 1 than the others, was placed at an angle of 25° to the micro-camera array (Figure 2d). The tilt angle of camera 2 was thus slightly larger than the 20° target used for the other cameras. Because camera 2 is closer to camera 1 than the other cameras are, the larger angle helped to enlarge the FoV without forming holes in it.

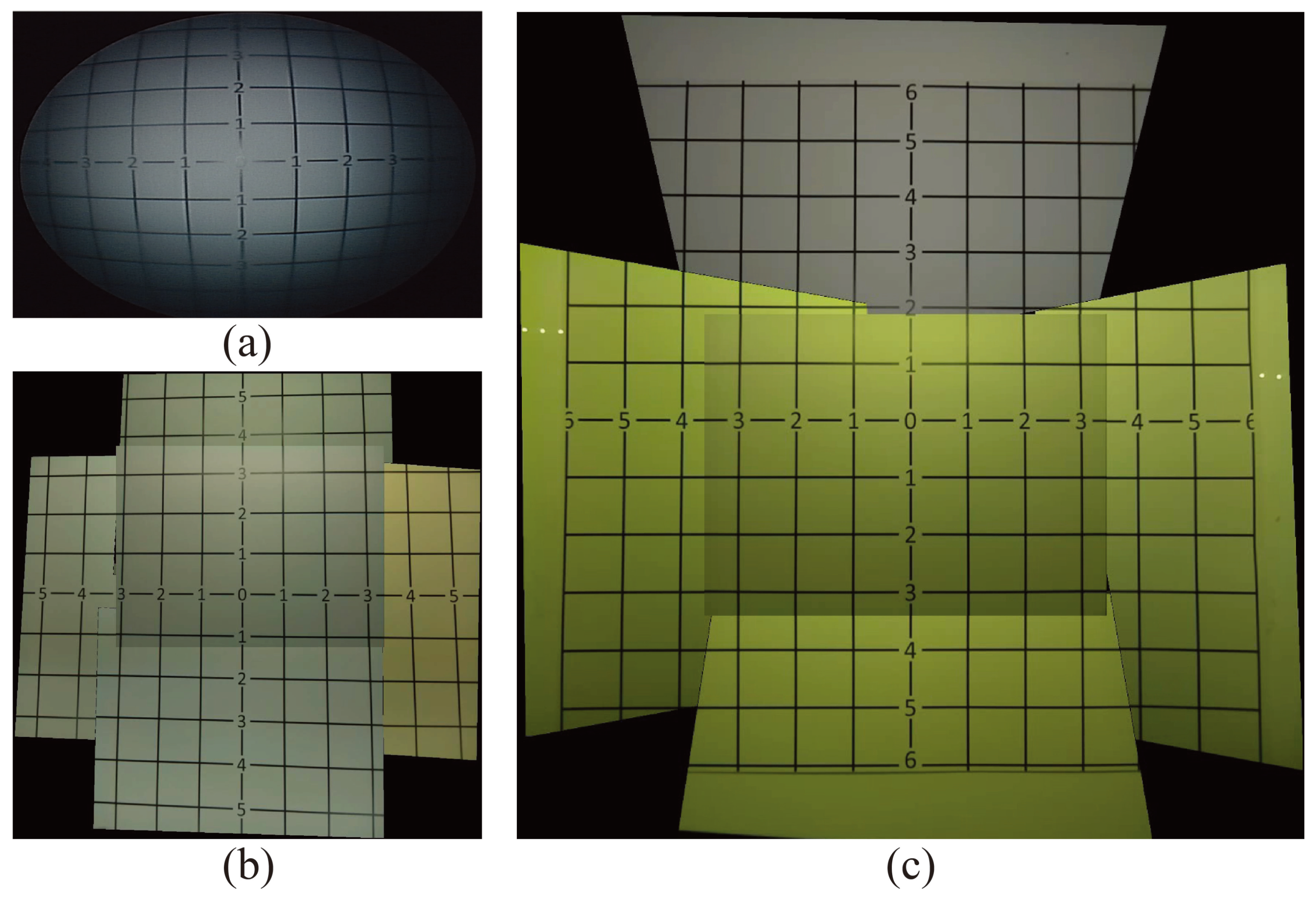

To validate the effectiveness of the proposed micro-camera array for laparoscopic surgery, we mounted it onto an FLS laparoscope trainer box (described in Section 2.4) and compared the FoV of the stitched video frame against the FoV of a single-camera visualization system and a non-tilted camera array. For comparison, images of the same grid paper under these three visualization systems are shown in Figure 4. The number of grid squares observable with the traditional visualization system (Figure 4a) and the non-tilted camera array (Figure 4b) was 56 and 84, respectively, much lower than the 133 squares visible in the stitched image of the micro-camera array visualization system (Figure 4c). The visible area using the micro-camera array visualization system therefore increased by 137.5% and 58.3% compared with the traditional laparoscope and the non-tilted camera array, respectively. In addition, the FoV of the traditional laparoscope visualization was 62° and the FoV of the micro-camera array was 85°, so that the FoV of the micro-camera array increased by 37.1% and 14.9% compared with the traditional laparoscope and the non-tilted camera array, respectively.
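The reported percentage increases follow directly from the grid-square counts and FoV angles given above. As a quick arithmetic check, using a hypothetical helper `percent_increase`:

```python
def percent_increase(new, old):
    """Relative increase of `new` over `old`, in percent."""
    return 100.0 * (new - old) / old

# Visible grid squares: micro-camera array (133) vs. laparoscope (56)
print(round(percent_increase(133, 56), 1))  # 137.5
# Visible grid squares: micro-camera array (133) vs. non-tilted array (84)
print(round(percent_increase(133, 84), 1))  # 58.3
# FoV angle: micro-camera array (85 degrees) vs. laparoscope (62 degrees)
print(round(percent_increase(85, 62), 1))   # 37.1
```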

In this work, we achieved a larger FoV with fewer blind spots than in the previous work that used non-tilted cameras [21]. Furthermore, this design of the micro-camera array reduced the space wasted by camera placement in the surgical field. The distance between the center of the camera and the center of the port was also significantly reduced (see Figure 2c) compared to the previous work (the distance was 75 mm in Reference [21]). The new distances are 28 mm for camera 2 and 18 mm for the other cameras.

2.2. Video Stitching Algorithm

A real-time multi-view video stitching algorithm was developed to process video feeds from the five cameras to form a video mosaic whose FoV is the union of FoVs of all five cameras. At this early stage of development, we designated the non-tilted center camera as the default viewpoint (the main view) and augmented its FoV with the four peripheral cameras (the side views) using a stitching algorithm similar to the one proposed by Zheng et al. [23]. At the beginning of the operation, intrinsic camera parameters and extrinsic camera poses of the five cameras were estimated through a camera calibration process. Since the camera array remains stationary through the operation, the calibration needs to be performed only once initially.

For each video frame, the FoV of the stitched video frame was the FoV of the main view (Camera 1) augmented by the FoVs of the four side views (Cameras 2–5). Each side view was geometrically transformed to align it to Camera 1 through a homography, and the two were then combined using a blending mask. Both the homography and the blending mask were estimated during the initial calibration and then used throughout the operation.
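The warp-and-blend step can be sketched in a few lines of pure Python. This is a minimal illustration under stated assumptions, not the authors' implementation: images are small grayscale arrays stored as nested lists, the homography is supplied in its inverse form (mapping canvas pixels back to side-view pixels), sampling is nearest-neighbor, and the names `apply_homography` and `blend_side_view` are hypothetical.

```python
def apply_homography(H, x, y):
    """Map the point (x, y) through a 3x3 homography H given as nested lists."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

def blend_side_view(main, side, H_inv, mask):
    """Blend one side view into the main-view canvas.

    main  : canvas already holding the main view (rows of gray values)
    side  : side-view image (rows of gray values)
    H_inv : assumed inverse homography, canvas (x, y) -> side-view (x, y)
    mask  : per-pixel weight of the side view on the canvas, in [0, 1]
    """
    out = [row[:] for row in main]
    for r in range(len(main)):
        for c in range(len(main[0])):
            m = mask[r][c]
            if m == 0:
                continue  # canvas pixel is taken from the main view only
            sx, sy = apply_homography(H_inv, c, r)
            sc, sr = round(sx), round(sy)  # nearest-neighbor sampling
            if 0 <= sr < len(side) and 0 <= sc < len(side[0]):
                out[r][c] = (1 - m) * main[r][c] + m * side[sr][sc]
    return out

# Toy example: the side view lands two pixels to the right of the main view.
main = [[4, 4, 4, 0], [4, 4, 4, 0]]
side = [[10, 10], [10, 10]]
H_inv = [[1, 0, -2], [0, 1, 0], [0, 0, 1]]  # pure translation
mask = [[0, 0, 0.5, 1], [0, 0, 0.5, 1]]     # 0.5 in the overlap column
print(blend_side_view(main, side, H_inv, mask))
```

Because the homography and mask are fixed after calibration, the per-frame work reduces to this lookup and weighted sum, which is what makes the step amenable to multi-threading.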

The video stitching algorithm was implemented on a Linux laptop that had an Intel Xeon E3-150M v5 processor with 4 cores, and 32 GB of main memory. Multi-threading was leveraged to accelerate the computation. At the time of the experiment, this implementation of the video stitching algorithm achieved a processing rate of 25 stitched video frames per second.

2.3. Modified Bean Drop Task

The bean drop task was designed to emulate the laparoscopic surgical task of moving from one location to another during surgery [24]. In a usual bean drop task, a subject is asked to pick up beans from a saucer using a grasper and transfer them one by one to a cup on the other side of the trainer box. In our experiment, we devised a modified bean drop task with increased complexity. We hypothesized that such modification would mitigate the performance (overall completion time) discrepancies due to different skill levels of subjects.

In the modified bean drop task, a subject was asked to move eight separate beans, each to a separate location in the trainer box. In addition, two types of cups as shown in Figure 5b,c were used. These cups were placed in a pattern shown in Figure 5a, such that the taller cups may obscure the smaller cups if viewed from the wrong angle. The pattern was symmetric so that completion time would be independent of handedness. We implemented these changes to require the subject to exercise a level of strategic planning and situational awareness.

We laid out a set of nine inverted cups and two saucers in the pattern seen in Figure 5. Eight of the nine inverted cups were labeled with the numbers 1–8, while the remaining inverted cup was left unlabeled to serve as an obstacle hindering the visual search process. These labels allowed us to randomize the order of the individual bean drop tasks to introduce yet more planning. In each of the two saucers, we placed four beans. By limiting the number of beans available to our subjects, we once again emphasized the importance of task planning.

Two surgical graspers were provided to perform the modified bean drop task so that the subject could use both hands. During the transfer of a bean from a saucer to a cup, the bean may be dropped accidentally. The subject may pick up a dropped bean to complete the task, at the cost of some lost time. However, if the subject cannot see the dropped bean via the visualization system or cannot reach it via the provided ports, that bean is deemed lost during transfer. When a bean is lost, the protocol requires the subject to move on to transfer the next bean to the required numbered cup. The completion time for each of the four tasks and the number of beans dropped during each task were recorded for subsequent data analysis.

2.4. Laparoscope Trainer Box

A standard FLS laparoscopic trainer box designed for practicing laparoscopic procedures was used to validate and evaluate the effectiveness of the proposed micro-camera array. The trainer box is a low fidelity form of simulation but has known validity evidence for laparoscopic surgeries [25]. By default, the box contains two incisions for inserting instruments and a built-in camera placed on the inside of the box in between the instrument incisions.

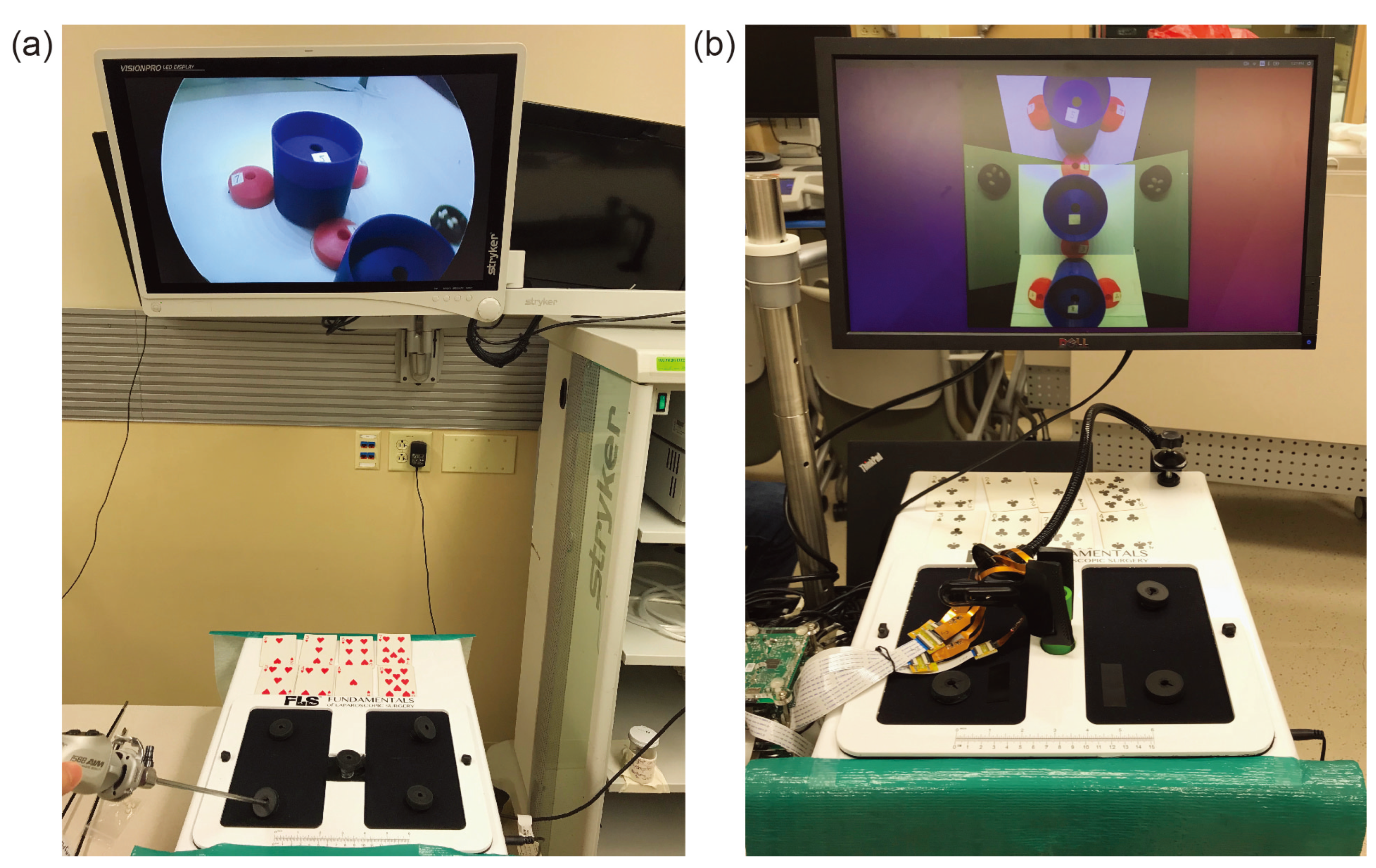

As shown in Figure 6, in this experiment the built-in camera was removed to make room for the micro-camera array, and extra incisions were added. The micro-camera array was mounted through the central incision to provide a central viewpoint with a stitched FoV covering the entire bottom of the trainer box. A clamp was applied outside of the box to ensure that the array stayed fixed in its location. Other incisions were placed on top of the trainer box so that the surgeon could easily view or reach any location at the bottom of the trainer box. As shown in Figure 5, several cups and saucers were affixed to the bottom of the trainer box using Velcro. These cups and saucers were used for the modified bean drop task performed in this experiment. The final setup can be seen in Figure 6.

2.5. Subjects and Test Protocol

We recruited 26 test subjects consisting of a medical student, surgical residents, and faculty of the University Hospital of the University of Wisconsin. The group consisted of 1 medical student, 3 first-year residents, 5 second-year residents, 6 third-year residents, 2 fourth-year residents, 3 fifth-year residents, and 6 faculty surgeons. The subjects were randomly recruited based on availability. The medical student had no prior experience with laparoscopic surgery. The first-year residents had less than a year of experience while the other residents had experience approximately equal to their years of residency. The faculty surgeons had extensive prior laparoscopic experience and were recruited based on the fact that they routinely perform minimally invasive (laparoscopic) surgery.

Testing took place in the laparoscopic trainer room of the Simulation Center in the University Hospital over the course of 5 days. During testing, subjects were asked to stop by the testing facility at their leisure to perform a short laparoscopic training task to help test out a new visualization system. There was no restriction on how many subjects were allowed in the testing room, nor were subjects prohibited from watching other subjects perform the task, although they were discouraged from doing so.

When a subject arrived at the testing facility, the subject was given a brief explanation of the difference between the two visualization systems and then given a description of the tasks to be completed. The subject was given a picture similar to Figure 5a but with the numbers removed. The subject did not have access to this picture during the experiment. The subject was told that cards numbered 1–8 would be dealt out in front of them in a random order, that the completion of the task would be timed, and that the task should be completed as quickly as possible. No strategic advice was given to the subject. At no point was any instruction given to the subject as to which ports should be used, or how the camera systems or the surgical graspers should be manipulated. The subject was allowed to use one or both hands to manipulate the graspers.

After the explanation, the subject was directed to one of the two trainer boxes to begin the task. Each setup, as seen in Figure 6, consisted of one visualization system, two surgical graspers, a video display, a set of 8 playing cards, and an FLS trainer box. The cups for the modified bean drop task and 8 white beans were located inside each of the trainer boxes as seen in Figure 5. The test giver made sure that the subject was comfortable with their understanding of the task and ready to begin. After obtaining verbal confirmation of the subject’s readiness, the test giver gave the subject a command to begin the task. The subject would then attempt to use the provided visualization system to pick up the beans from the black saucers and place them in the numbered cups in the order dictated by the dealt cards. The test giver watched as the subject performed the task. The test giver measured the time to complete manually with a stopwatch, starting the timing with the command to begin and stopping it when the subject placed the last bean or stated verbally that they were done with the task. The overall completion time was the difference between the ending and the starting time readings. At the end, the number of dropped beans was also counted and recorded.

Each subject repeated the above procedure four times during the experiment using the two trainer boxes: twice with the traditional single-camera visualization system and twice with the proposed micro-camera array visualization system. The experiment protocol did not allow a subject to practice the modified bean drop task in advance. To mitigate the concern that a subject would perform better in later trials (the third or the fourth) due to familiarity gained in earlier trials, we had the subject switch visualization systems after the first and third attempts. Specifically, the first and fourth attempts were performed with one visualization system and the second and third attempts were performed with the other. Furthermore, the visualization system used for the first trial was alternated between the two types.
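The counterbalanced ordering described above can be written down compactly. The sketch below assumes that the starting system alternates with the subject's arrival index and that even-indexed subjects start with the laparoscope; the text does not specify which system went first for which subject, and the function name `trial_order` is hypothetical.

```python
SYSTEMS = ("laparoscope", "micro-camera array")

def trial_order(subject_index):
    """Return the four-trial sequence for one subject.

    Trials 1 and 4 use one system and trials 2 and 3 use the other; the
    starting system alternates with subject_index (an assumption).
    """
    first = SYSTEMS[subject_index % 2]
    second = SYSTEMS[(subject_index + 1) % 2]
    return [first, second, second, first]

for i in range(2):
    print(i, trial_order(i))
```

With this pattern, each system's two trials have the same mean position in the sequence (positions 1 and 4 versus 2 and 3), which balances a linear learning effect across the two systems.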

3. Results

The outcome measure of each modified bean drop task was the total completion time. However, not all subjects completed all eight required sub-tasks. Sometimes a bean was dropped prematurely outside the destination cup and there was no way to recover it. When this happened, the protocol required the subject to abort the current sub-task and continue to the next one. As such, the completion time with aborted sub-tasks was shorter than if all eight sub-tasks had been completed successfully. Using the traditional laparoscope, there were a total of 26 aborted sub-tasks. Using the micro-camera array, the number of aborted sub-tasks increased to 45. Note that the total number of sub-tasks performed was 26 subjects × 4 trials × 8 sub-tasks = 832.

To rectify the reduction of the completion time due to aborted bean drop, a penalty of 28 s was added to the measured completion time for each occurrence of a failed sub-task. The 28 s penalty was the averaged single sub-task completion time of all subjects for both treatments.
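The penalty adjustment amounts to adding a fixed 28 s per aborted sub-task to the measured time. A minimal sketch, with a hypothetical function name and made-up example values:

```python
PENALTY_S = 28  # averaged single sub-task completion time, from the text

def adjusted_time(measured_s, aborted_subtasks, penalty_s=PENALTY_S):
    """Completion time with a fixed penalty added per aborted sub-task."""
    return measured_s + penalty_s * aborted_subtasks

# Hypothetical trial: 200 s measured with 2 aborted sub-tasks.
print(adjusted_time(200, 2))  # 256

# Total sub-tasks in the study: 26 subjects x 4 trials x 8 sub-tasks.
print(26 * 4 * 8)  # 832
```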

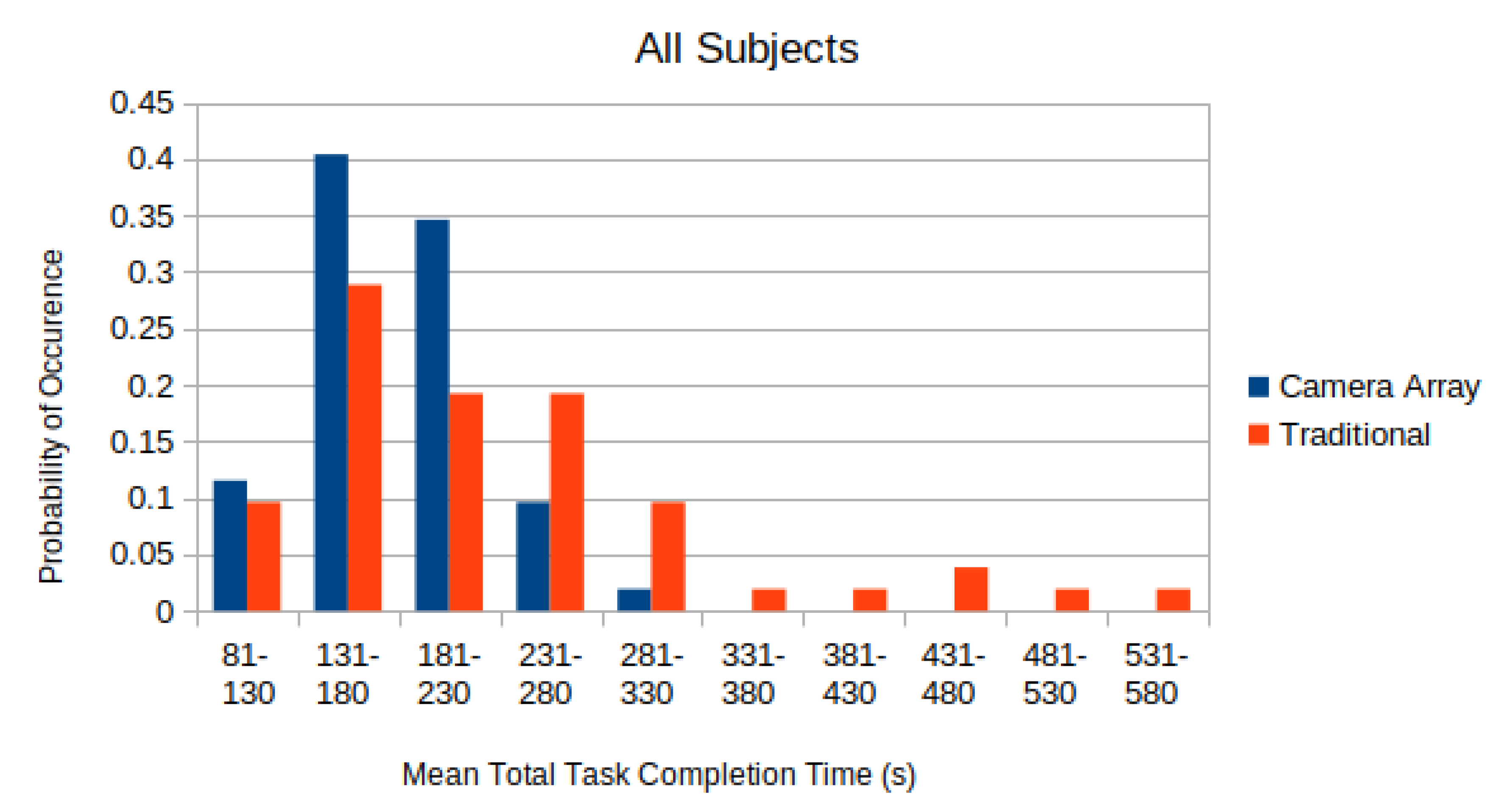

Figure 7 shows the distributions of the completion time of all test subjects, with the 28 s penalty added per aborted sub-task. Each distribution was normalized so that the sum of all elements equals unity.

A two-sided paired T-test was applied to each of these distributions in Figure 7 to compare whether the difference in the completion time using the micro-camera array versus the traditional laparoscope was statistically significant. The mean adjusted time-to-complete for the traditional laparoscope was found to be

s. The mean adjusted time-to-complete on the micro-camera array was found to be

s. Applying a paired T-test to the data found that subjects completed the tasks faster with the micro-camera array than with the traditional laparoscope (

).
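For reference, the paired t statistic is the mean of the per-subject differences divided by its standard error. The sketch below uses made-up completion times purely for illustration, not the study data, and it assumes one adjusted time per subject and system; how the two trials per system were paired is not stated in the text. The function name `paired_t_statistic` is hypothetical.

```python
import math
from statistics import mean, stdev

def paired_t_statistic(a, b):
    """Return (t, degrees of freedom) for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    # Standard error of the mean difference uses the sample standard deviation.
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1

# Hypothetical adjusted completion times in seconds (not the study data).
laparoscope = [250, 240, 260, 230]
camera_array = [220, 225, 230, 215]
t, dof = paired_t_statistic(laparoscope, camera_array)
print(round(t, 2), dof)
```

The two-sided p-value is then obtained from Student's t distribution with the returned degrees of freedom, for example from a statistics table or library.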

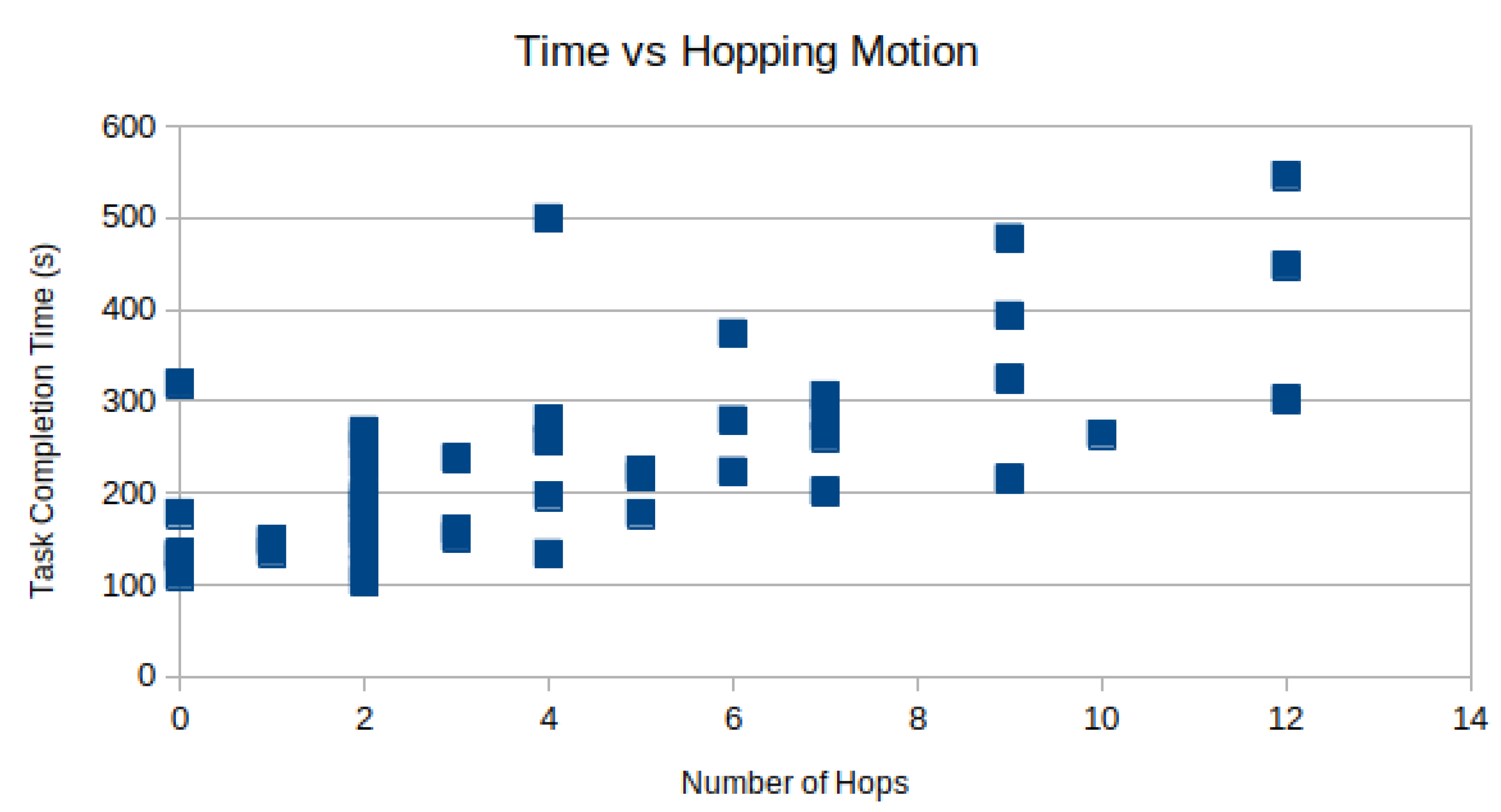

Camera hopping is the act of removing and reinserting a laparoscope through the trocar to provide a better viewing angle during the procedure. In the modified bean-dropping test, we recorded multiple camera hopping operations when the laparoscope was used. There was no camera hopping when the micro-camera array was used. Manual inspection of the recorded videos found that on average subjects performed the camera hopping motion times per test.

However, the time spent moving the camera cannot be spent performing the task. In Figure 8, the task completion time is plotted against the number of camera hops for each subject as a scatter plot. As expected, there is a positive correlation between the number of hops and the total time-to-complete. Linear regression gives a correlation coefficient of R = 0.69.
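The correlation coefficient R reported for a simple linear regression is the Pearson correlation between the two variables. A minimal sketch of that computation, with a hypothetical function name and made-up hop counts and times rather than the study data:

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: camera hops per test and completion time in seconds.
hops = [0, 1, 2, 3, 4]
times = [200, 230, 220, 260, 270]
print(round(pearson_r(hops, times), 2))
```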

4. Discussion

4.1. Validation: Benefit of Larger Field of View

From Figure 7, it is clear that the completion time using the micro-camera array is shorter than that using the traditional laparoscope. Using a significance level of

, the null hypothesis that states the distribution of the completion time difference using the micro-camera array and the traditional laparoscope has zero mean clearly should be rejected. Hence, this experiment result validates the hypothesis that larger FoV afforded by the micro-camera array can significantly reduce the completion time when performing a simulated laparoscopic surgery (the modified bean dropping test).

Moreover, the completion time utilizing the micro-camera array consistently has a smaller standard deviation than that using the traditional laparoscope. This is also shown in Figure 7 where the distribution of the laparoscope completion time has longer tails leading to much larger standard deviations.

4.2. Impact of Subjects’ Experience Using the Traditional Laparoscope

The subjects recruited in this experiment were all familiar with using the laparoscope trainer box, except for the medical student. All subjects were unfamiliar with the micro-camera array viewing system. This familiarity and prior experience with the traditional laparoscope may have contributed to shorter completion times with the traditional laparoscope.

4.3. Number of Aborted Sub-Tasks

There were a total of 71 aborted sub-tasks out of a total of 832 performed by all 26 subjects. The abort rate was approximately 8.5%. The number of aborted sub-tasks decreased as subject experience increased. The abort rate for the micro-camera array was almost twice that of the laparoscope. This might be because the subjects had varying experience with, and thus were more familiar with, the traditional laparoscope, while they were completely new to the micro-camera array visualization system.
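The abort rates can be checked from the counts reported in the Results. The per-system denominators below assume the 832 sub-tasks split evenly between the two systems, which follows from each subject performing two trials with each system.

```python
aborted = {"laparoscope": 26, "micro-camera array": 45}
total_subtasks = 832
per_system_subtasks = total_subtasks // 2  # 26 subjects x 2 trials x 8 sub-tasks

overall_rate = sum(aborted.values()) / total_subtasks
print(f"overall: {overall_rate:.1%}")  # overall: 8.5%

for system, count in aborted.items():
    print(f"{system}: {count / per_system_subtasks:.1%}")

ratio = aborted["micro-camera array"] / aborted["laparoscope"]
print(f"ratio: {ratio:.2f}")  # ratio: 1.73
```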

4.4. Using Both Hands for Operation Using Micro-Camera Array

We observed that with the micro-camera array visualization system, some more experienced subjects used both hands to hold an additional surgical instrument to improve the overall efficiency of completing the modified bean drop task. This illustrates an important benefit of the micro-camera array visualization system. Namely, there will be no need to occupy a dedicated port for inserting the laparoscope and there will be no need to have an assistant to hold the laparoscope during the procedure.

4.5. Micro-Camera Array Design

In the proof-of-concept design in this experiment, the cameras were chosen based on their small form factor so that they fit inside a surgical trocar. The image quality of individual micro-cameras is inferior to that produced by a traditional single-camera laparoscope. Since the micro-camera array is positioned high above the surgical field, it provides a bird's-eye view rather than the close-up view provided by the traditional laparoscope. In the next phase of this project, we plan to develop heterogeneous camera arrays that can simultaneously provide a global bird's-eye view to facilitate navigation and a close-up view to facilitate delicate surgical operations.

In the interest of real-time video stitching, the video stitching algorithm does not compensate for the severe parallax distortion in the stitched video. The image of a laparoscopic surgical instrument may show discontinuity across the boundary between adjacent camera views when the surgical instrument is moving during the experiment. The default viewpoint is also fixed to the top-down view of the center camera. Hence, the subjects are unable to view the scene from an angle to acquire depth perception. In future development, a multi-planar image stitching algorithm will be developed to mitigate the parallax anomaly. An any-view 3D dynamic rendering viewing system will also be implemented so that surgeons may shift their viewing angle using head-mounted augmented reality goggles to examine the surgical spots from an optimal angle.