Abstract

In recent years, hyperspectral image classification (HSI) has attracted considerable attention. Various methods based on convolution neural networks have achieved outstanding classification results. However, most of them exited the defects of underutilization of spectral-spatial features, redundant information, and convergence difficulty. To address these problems, a novel 3D-2D multibranch feature fusion and dense attention network are proposed for HSI classification. Specifically, the 3D multibranch feature fusion module integrates multiple receptive fields in spatial and spectral dimensions to obtain shallow features. Then, a 2D densely connected attention module consists of densely connected layers and spatial-channel attention block. The former is used to alleviate the gradient vanishing and enhance the feature reuse during the training process. The latter emphasizes meaningful features and suppresses the interfering information along the two principal dimensions: channel and spatial axes. The experimental results on four benchmark hyperspectral images datasets demonstrate that the model can effectively improve the classification performance with great robustness.

1. Introduction

With the development of remote sensing, hyperspectral imaging technology has been widely applied in meteorological warning [1], agricultural monitoring [2], and marine safety [3]. Hyperspectral images are composed of hundreds of spectral bands and contain rich land-cover information. Hyperspectral image classification has received increasing attention as a crucial issue in the field of remote sensing.

The conventional classification methods include random forest (RF) [4], multiple logistic regression (MLP) [5], and support vector machine (SVM) [6]. They are all classified based on one-dimensional spectral information. Additionally, principal component analysis (PCA) [7] tends to be used to compress spectral dimensions while retaining essential spectral features, reduce band redundancy, and improve model robustness. Although these traditional methods obtain great results, they have a limited representation capacity and can only extract low-level features due to the shallow nonlinear structure.

Recently, hyperspectral images classification methods based on deep learning (DL) [8,9,10] have been increasingly favored by researchers to make up for the shortcomings of traditional methods. CNN has made a great breakthrough in the field of computer vision due to its excellent image representation ability and has been proved successful in the field of hyperspectral image classification. Makantasis et al. [11] developed a network based on 2D CNNs, where each pixel was packed into image patches of fixed size for spatial feature extraction and sent to multilayer perceptron for classification. However, 2D convolution can only extract features in height and width dimensions, ignoring the rich information of spectral bands. To further enhance the utilization of the spectral dimensions, the researchers turned their attention to 3D CNNs [12,13,14]. He et al. [12] proposed a multiscale 3D deep convolutional neural network (M3D-DCNN) for HSI classification, which learns the spatial and spectral features in the raw data of hyperspectral images in an end-to-end manner. Zhong et al. [13] developed a 3D spectral-spatial residual network (SSRN) that continuously learns discriminative features and spatial context information from redundant spectral signatures. Although 3D CNNs can make up for the defects of 2D CNNs in this regard, while 3D CNNs introduce a large number of computational parameters, increasing the training time and memory cost. In addition, due to the HSI having the characteristics of solid correlations between bands, there are phenomena of the same material that may present spectral dissimilarity. Different materials may have homologous spectral features, which seriously interfere with the extraction of spectral information and lead to the degradation of classification performance. How to distinguish the discriminative features of HSI is the key to improving the classification performance. Recent studies have shown that discriminative features can be enhanced using attention mechanisms [15,16]. Studies in cognitive biology reveal that human beings acquire the information of significance by paying attention to only a few critical features while ignoring the others. Similarly, attention has been effectively applied to various tasks in computer vision [17,18]. Numerous approaches based on existing attention mechanisms are also involved in the hyperspectral image classification tasks, demonstrating their efficiency in improving performance.

Additionally, with the continuous deepening of feature extraction, the neural network will inevitably become deeper. The phenomenon of gradient vanishing and network degradation becomes more and more serious, which deteriorates the classification performance. Furthermore, screening the crucial features from the complex features of hyperspectral images has become critical for network performance improvement. The paper proposed a 3D-2D multiscale feature fusion and dense attention network (MFFDAN) for hyperspectral image classification considering the above problems. In summary, the main contributions of this paper are as follows:

- (1)

- The whole network structure is composed of 3D and 2D convolutional layers, which not only avoids the problems of insufficient feature extraction when only 2D CNNs are used but also reduces the large number of training parameters caused by 3D CNNs alone, thereby improving the model efficiency.

- (2)

- The proposed 3D multiscale feature fusion module obtains the scaled features by combining multiple convolutional filters. In general, filters with large sizes are unable to capture the fine-grained structure of the images, whereas filters with small sizes are frequently eliminate the coarse-grained features of the images. Combining multiple convolutional filters of varying sizes allows for the extraction of more detailed features.

- (3)

- A 2D densely connected attention module is developed to overcome the gradient vanishing problem and select discriminative channel-spatial features from redundant hyperspectral images. A factorized spatial-channel attention block is proposed that can adaptively prioritize critical features and suppress less useful ones. Additionally, a simple 2D dense block is introduced to facilitate the information propagation and feature reuse and comprehensively utilizes features of different scales in 3D HSI cubes.

2. Material and Methods

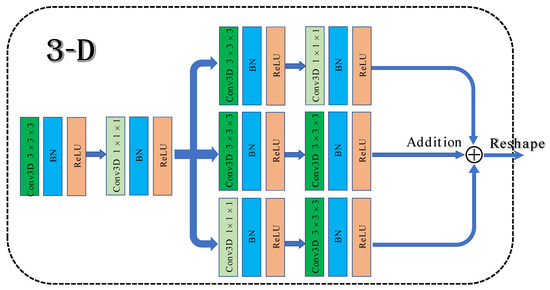

2.1. D Multibranch Fusion Module

Three-dimensional CNNs work on hyperspectral images’ spectrum and spatial dimensions simultaneously through 3D convolution kernels and can directly extract spatial and spectral information from the raw hyperspectral images. The formula is as follows:

where , , and represents the height, width, and spectral dimension of convolution kernels. The denotes the output value of the convolution kernel in the layer at the position of .

The normal 3D CNN methods for hyperspectral image classification involve stacking convolutional blocks of convolutional layers (Conv), batch normalization (BN), and activation functions to extract detailed and discriminative features from raw hyperspectral images. While these methods improve the classification results to a certain degree, they also introduce numerous calculating parameters and increase the training time. Additionally, building deep convolutional neural networks tends to cause gradients vanishing and to suffer from classification performance degradation.

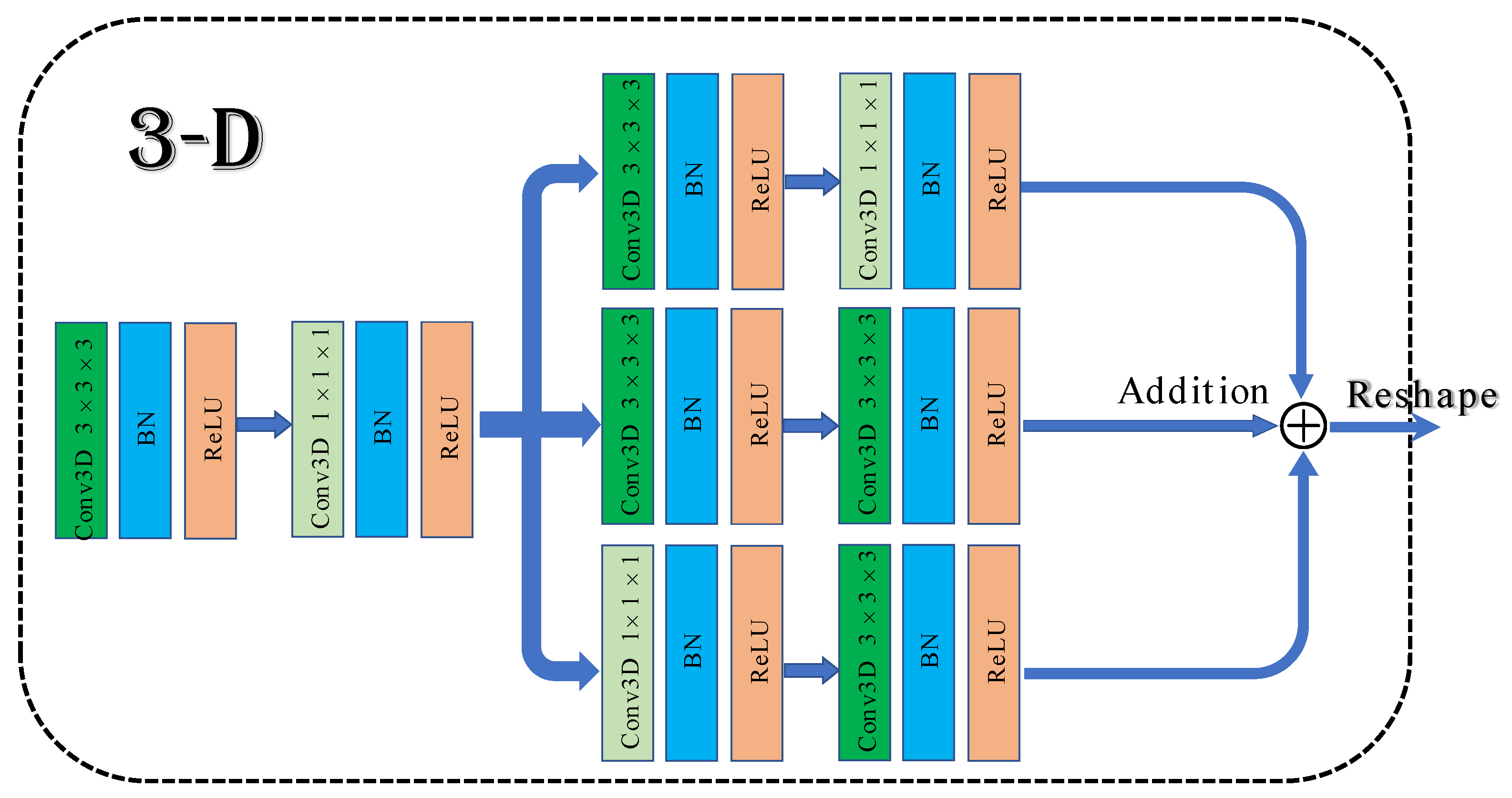

To solve the above problems, a 3D multibranch fusion module is proposed in this work. The architecture of the module is shown in Figure 1. First, 3 × 3 × 3 and 1 × 1 × 1 convolutional blocks are employed to form the shallow network, which can expand the information flow and allow the network to learn texture features. Then, it adds three branches that are composed of multiple convolution kernels in sequence. Different sizes of convolutional filers can be used to extract multiscale features from hyperspectral data. Merging with the shallow network frequently results in superior classification performance compared to stacked convolutional layers.

Figure 1.

The architecture of 3D multibranch fusion module.

2.2. D-2D CNN

On the one hand, the features extracted by 2D CNNs alone are limited. On the other hand, 3D CNN consumes a substantial amount of computational resources. The combination of 2D CNNs and 3D CNNs can effectively make up for these defects. Roy et al. [14] proposed a hybrid spectral-spatial neural network HybridSN. First, 3D CNNs facilitate the joint spatial-spectral feature representation from a stack of spectral bands. Then, the 2D CNNs on top of the 3D CNNs further learn more abstract-level spatial representation. Compared with 3D CNNs alone, the hybrid CNNs can not only avoid the problem of insufficient feature extraction but also reduce model training parameters and improve model efficiency.

2.3. Attention Mechanism

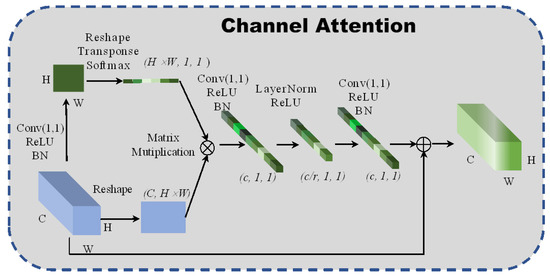

2.3.1. Channel Attention

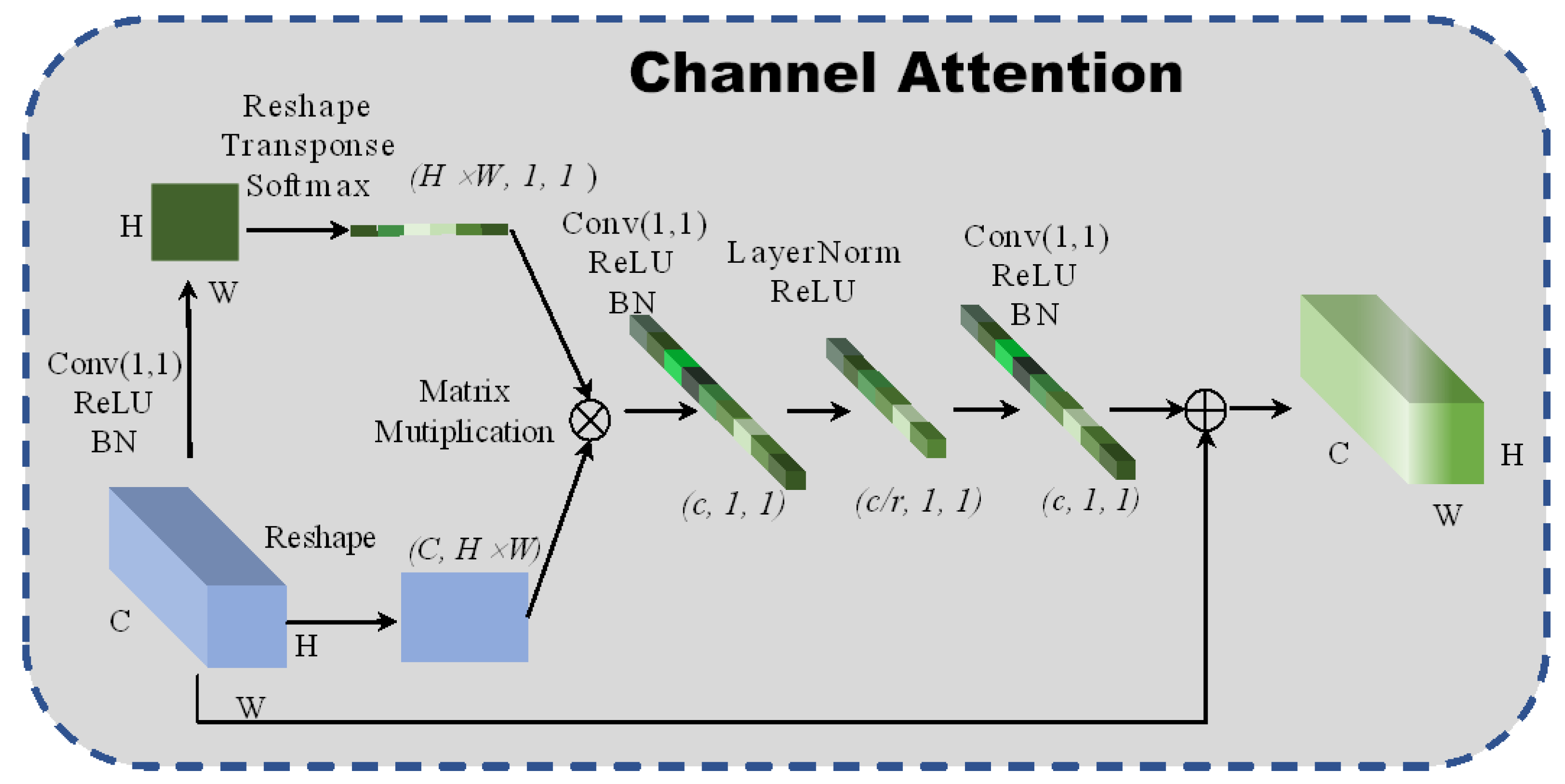

A new global context channel attention block is designed to enable the network to pay attention to the relationship between adjacent pixels in the channel dimensions. Simultaneously, the channel attention block also gains a long-distance dependence between pixels and improves the network’s global perception. The structure of the spectral attention is shown in Figure 2. The fully connected layer increases computational parameters. For this reason, a 1 × 1 convolutional neural network is intended to replace the fully connected layers, in which the scaling factor is set to 4 to reduce the calculation cost and prevent the network from overfitting. Additionally, layer normalization [19] is introduced to sparse the weights of the network:

where and represent the parameter vectors of zooming and translation, respectively. The layer normalization is used to normalize the weights’ matrix, which can accelerate the convergence and regularization of the network. The formula of the channel attention is:

where represents the global pooling and denotes the bottleneck transform. The channel attention module uses global attention pooling to model the long-distance dependences and capture discriminative channel features from the redundant hyperspectral images.

Figure 2.

The architecture of 2D channel attention block.

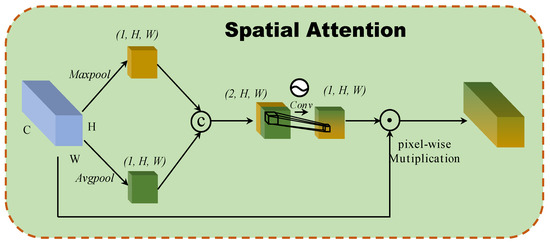

2.3.2. Spatial Attention

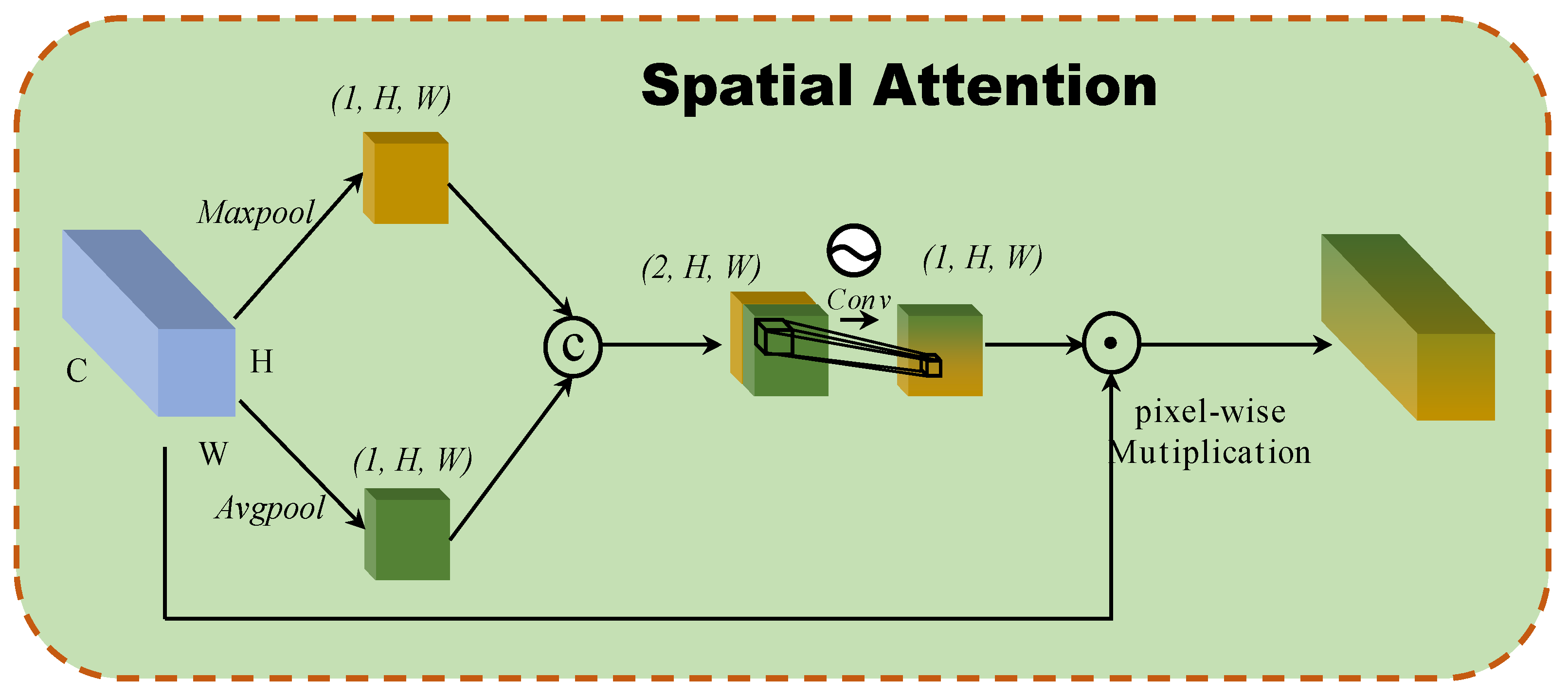

A spatial attention block based on the interspatial relationships of features is developed, as inspired by CBAM [20]. Figure 3 illustrates the structure of the spatial attention block. To generate an efficient feature descriptor, average-pooling and max-pooling operations are applied along the channel axis, and they concatenate them. Pooling operations along the channel axis are shown to be effective at highlighting informative regions. Then, a convolution layer is applied to the concatenated feature descriptor to create a spatial attention map that specifies which features to emphasize or suppress. These are then convolved using a standard convolution layer to create a two-dimensional spatial attention map. In short, spatial attention is calculated as follows:

where denoted the sigmoid function and represents a convolution operation with the filter size of .

Figure 3.

The architecture of 2D spatial attention block.

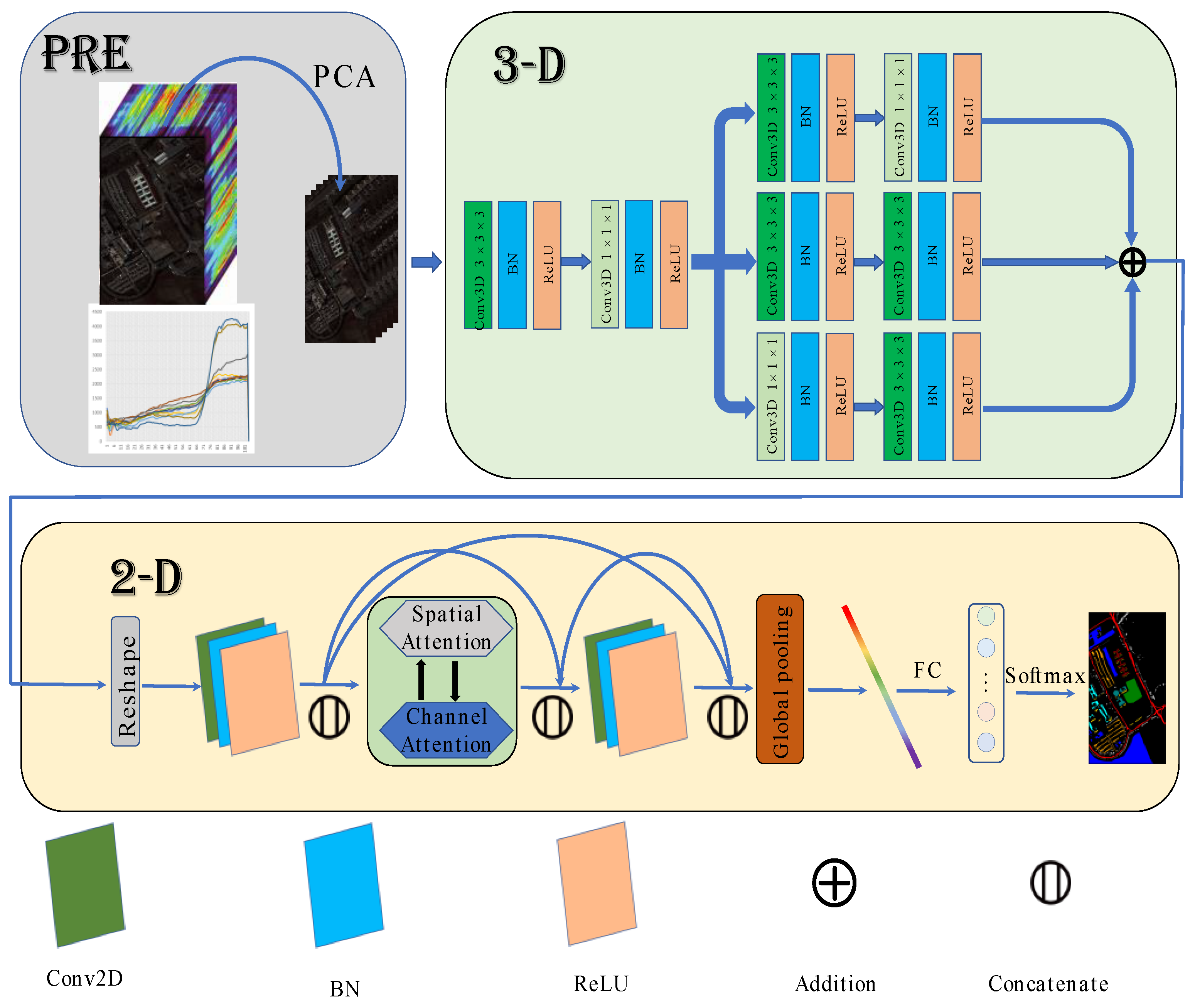

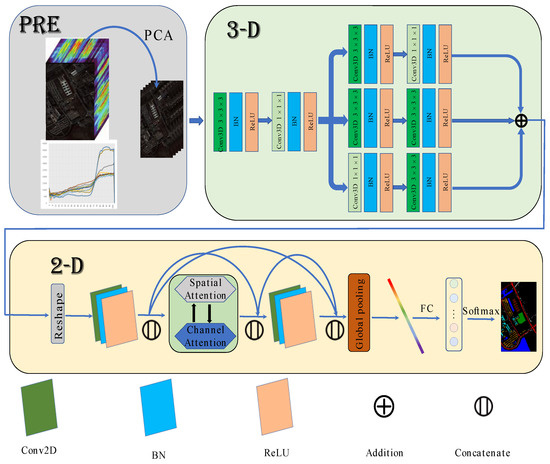

2.4. HSI Classification Based on MFFDAN

The architecture of MFFDAN is depicted in Figure 4. The University of Pavia dataset is used to demonstrate the algorithm’s detailed process. The raw data are normalized to zero mean value with unit variance for preprocessing. Then, the PCA is employed to compress the spectral dimensions and eliminate band noise in the raw HSIs. Finally, hyperspectral image data were segmented into the fixed spatial size of 3D image patches centered on labeled pixels. After completing the above steps, they are sent to the 3D multibranch fusion module for feature extraction. The module is intended to extract multiscale features with multiple sizes of convolutional filters. Following that, the 3D feature maps reshape to 2D after dimension transformation and are sent to the 2D dense attention module. Then, a dense block [21] is arranged with spatial and channel attention in the middle of the module, which is used to enhance the information flow and adaptively select out the discriminative spatial-channel features. Finally, a fully connected layer with a softmax function is used for classification.

Figure 4.

The Flowchart of MFFDAN network.

3. Results

3.1. Datasets

The University of Pavia (PU) dataset was acquired by the reflective optics system imaging spectrometer (ROSIS) sensor. The dataset consists of 103 spectral bands. There are 610 × 340 pixels and nine ground-truth classes in total. The number of training, validation, and testing samples in experiments is given in Table 1.

Table 1.

The number of training, validation and testing samples for University of Pavia dataset.

The Kennedy Space Center (KSC) dataset was gathered in 1996 by AVIRIS with wavelengths ranging from 400 to 2500 nm. The images have a spatial dimension of 512 × 614 pixels and 176 spectral bands. The KSC dataset consists of in total 5202 samples of 13 upland and wetland classes. The number of training, validation, and test samples in experiments is given in Table 2.

Table 2.

The number of training, validation, and testing samples for Kennedy Space Center dataset.

The Salinas Valley (SA) dataset contains 512 × 217 pixels with a spatial resolution of 3.7 m and 224 bands with wavelengths ranging from 0.36 to 2.5 μm. Additionally, 20 spectral bands of the dataset were eliminated due to water absorption. The SA dataset contains 16 labeled material classes in total. The number of training, validation, and testing samples in experiments is given in Table 3.

Table 3.

The number of training, validation, and testing samples for Salinas Valley dataset.

The Grass_dfc_2013 dataset was acquired by the compact airborne spectrographic imager (CASI) over the campus of the University of Houston and the neighboring urban area [22]. The dataset contains 349 × 1905 pixels, with a spatial resolution of 2.5 m and 144 spectral bands ranging from 0.38 to 1.05 µm. It includes 15 classes in total. The number of training, validation, and testing samples in experiments is given in Table 4.

Table 4.

The number of training, validation, and testing samples for Grass_Dfc_2013 dataset.

3.2. Experimental Setup

The model proposed in this paper is implemented base on Python language and Pytorch deep learning framework. All experiments are carried out on a computer with Windows 10 operating system, NVIDIA RTX 2060 Super GPU, and 64 GB RAM. The overall accuracy (OA), average accuracy (AA), and kappa coefficient (Kappa) are adopted as the evaluation criteria. Different proportions of training, validation, and testing samples for each dataset are used to verify the effectiveness of the proposed model considering the unbalanced categories in four benchmarks.

The batch size and epochs are set to 16 and 200, respectively. Stochastic gradient descent (SGD) is adopted to optimize the training parameters. The initial learning rate is 0.05 and decreases by 1% every 50 epochs. All the experiments are repeated five times to avoid errors.

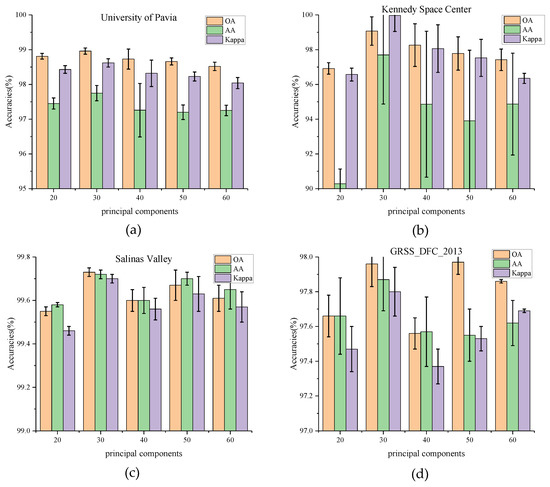

3.3. Analysis of Parameters

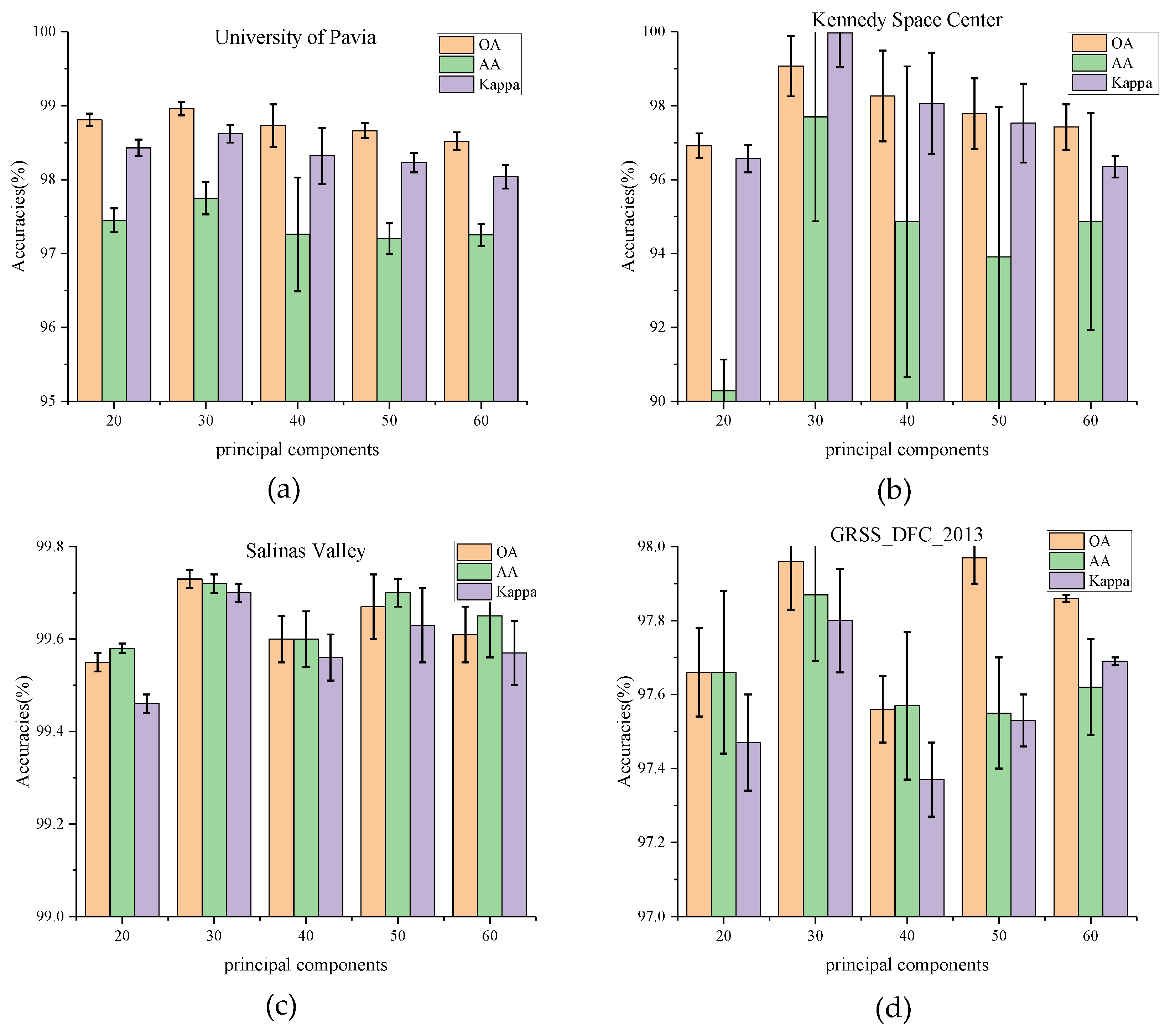

(1) Impact of Principal Component: In this section, the influence of the number of principal components C is tested on classification results. PCA is first used to reduce the dimensionality of the bands to 20, 30, 40, 50, and 60, respectively. The experimental results on four datasets are shown in Figure 5. For the University of Pavia and Kennedy Space Center datasets, the values of OA, AA, and Kappa rise from 20 (PU_OA = 98.81%, KSC_OA = 96.92%) and reach a peak at 30(PU_OA = 98.96%, KSC_OA = 99.07%). The increase in OA values on the KSC dataset is much higher than that on the PU dataset. It can be observed that the number of principal components has a significant impact on the KSC dataset. When the principal component bands exceed 30, these indicators decline to vary degrees. While for the Salinas Valley and GRSS_DFC_2013, the values of OA, AA, and Kappa seem to have no such relationships with the principal components. The OA values fluctuate in various number of principal components. The phenomenon is most likely caused by the fact that the latter two datasets have a higher land-cover resolution but a lower spectral band sensitivity.

Figure 5.

OA, AA, and Kappa accuracies with different principal components on four datasets. (a) Effect of principal components on University of Pavia dataset, (b) Effect of principal components on Kennedy Space Center, (c) Effect of principal components on Salinas Valley dataset, (d) Effect of principal components on GRSS_DFC_2013 dataset.

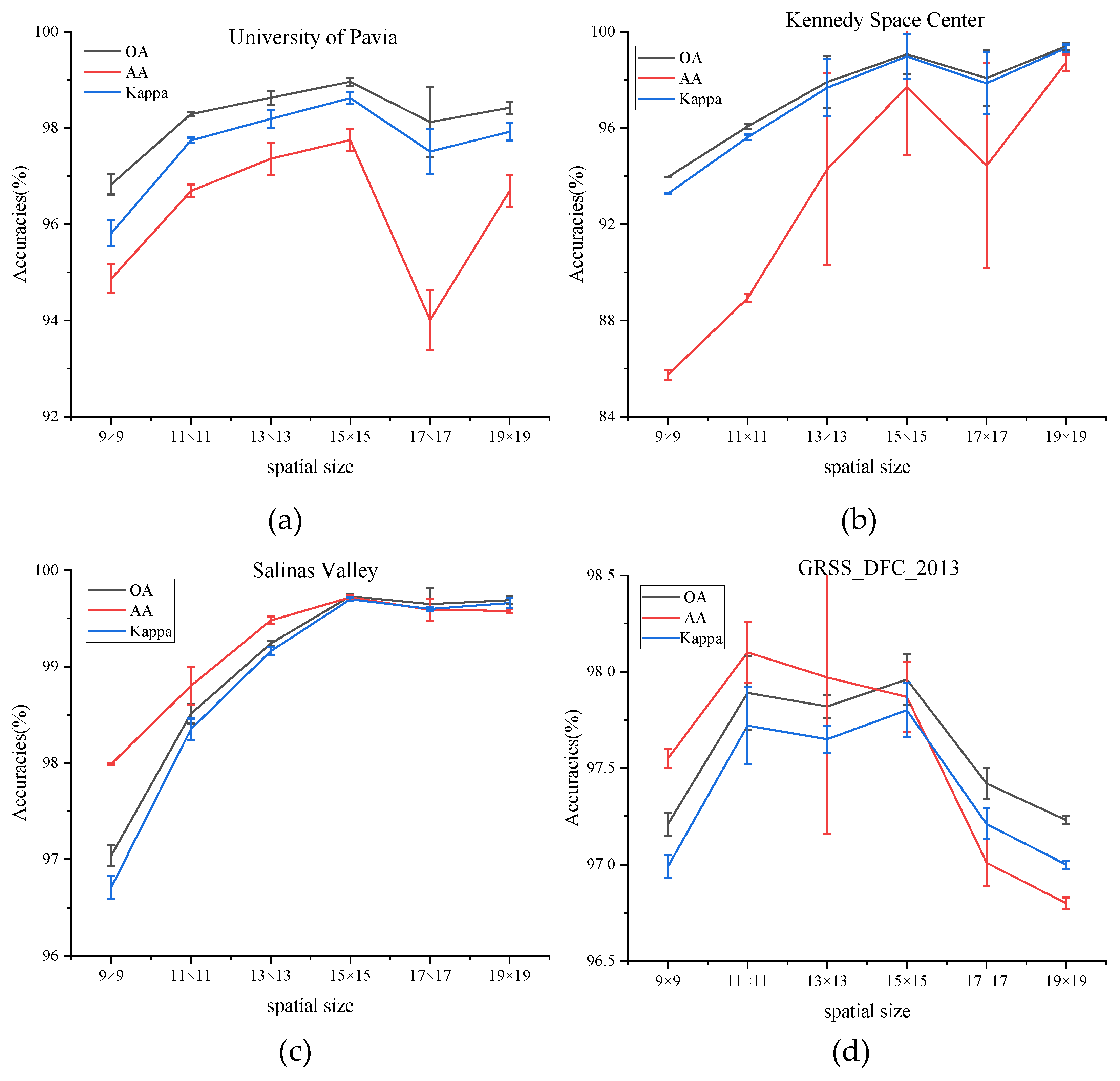

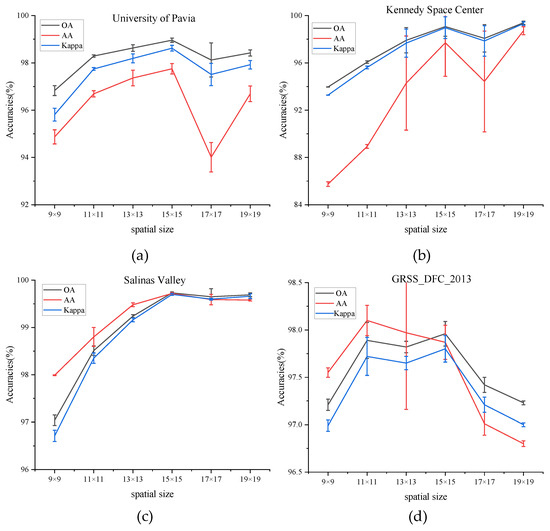

(2) Impact of Spatial Size: The choice of the spatial size of the input image block has a crucial influence on classification accuracy. To find the best spatial size, it is necessary to test the model by adopting different spatial sizes: C × 9 × 9, C × 11 × 11, C × 13 × 13, C × 15 × 15, C × 17 × 17, and C × 19 × 19, where C is the fixed number of the principal component. The number of the principal component is set to 30 in all experiments to guarantee fairness.

Figure 6 shows the values of OA, AA, and Kappa of different spatial sizes on four datasets. The values of OA, AA, and Kappa rise steadily from spatial sizes of C × 9 × 9 to C × 15 × 15 on PU, KSC, and SA dataset and then decrease in the larger spatial sizes. That is to say, a target pixel and its adjacent neighbors usually belong to the same class to certain spatial sizes, while oversized regions may present additional noise and deteriorate the classification performance. While for the dataset of GRSS_DFC_2013, the values of three indicators fluctuate between the spatial size of C × 11 × 11 and C × 15 × 15 and decrease with larger spatial sizes. To sum up, the size of the patch for all datasets is set to C × 15 × 15.

Figure 6.

OA, AA, and Kappa accuracies with different spatial size on four datasets. (a) Effect of spatial size on University of Pavia dataset, (b) Effect of spatial size on Kennedy Space Center dataset, (c) Effect of spatial size on Salinas Valley dataset, (d) Effect of spatial size on GRSS_DFC_2013 dataset.

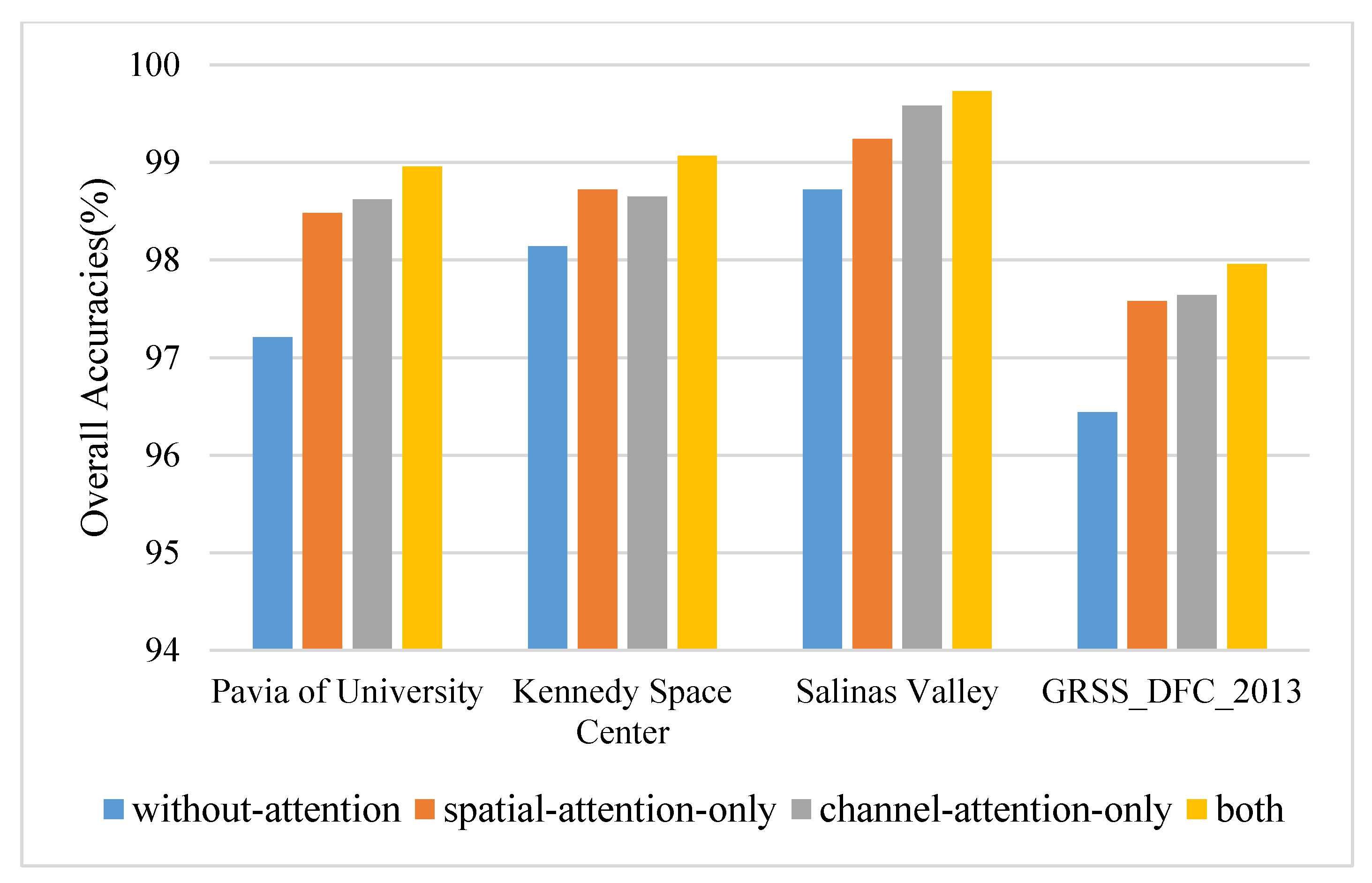

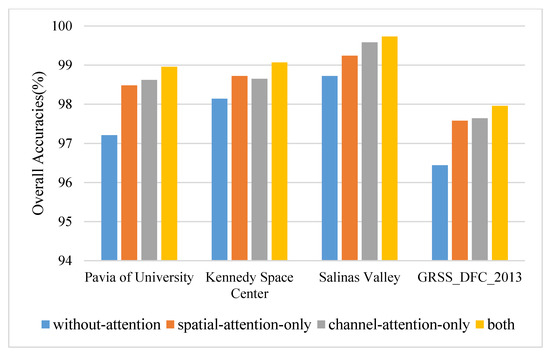

3.4. Ablation Study

In order to test the effectiveness of the proposed densely connected attention module, several specific ablation experiments are designed. The models used for comparison are consistent with the network of the proposed method except for the removal of the densely connected attention module. The principal components and the spatial size are set to 30 and 15 × 15. The results on four datasets are displayed in Figure 7. The densely connected attention module improves the values of OA by approximately 0.93–1.75% on four datasets. Specifically, on most occasions (such as PU, SA, and GRSS dataset), a single-channel attention block outperforms a single spatial attention block by approximately 0.06–0.34% OA values. However, that does not mean that the spatial attention mechanism does not work, which plays a significant role in improving classification performance. Spatial attention alone has improved (0.52–1.27% OA) compared with nonattention block. The reason is likely that the densely connected attention module introduces the combination of attention mechanisms and densely connected layers. On the one hand, the attention mechanism can adaptively assign different weights to spatial-channel regions and suppress the effects of interfering pixels. On the other hand, densely connected layers relieve the gradient vanishing when the model bursts into deep layers and enhances the feature reuse during the convergence of the network.

Figure 7.

The overall accuracies of ablation experiments on four datasets.

3.5. Compared with Other Different Methods

To evaluate the performance of the proposed method, seven classification methods were selected to be compared. The methods are RBF-SVM with radial basis function kernel, multinomial logistic regression (MLR), random forest, spatial CNN with 2-D kernels [11], PyResNet [23], Hybrid-SN [14], and SSRN [13]. Figure 5, Figure 6 and Figure 7 show the classification maps of different methods on PU, KSC, SA, and Grss_dfc_2013 datasets.

The spatial size and the number of principal components are set to C × 15 × 15 and 30 for all DL methods to guarantee fairness. All the comparison experiments are carried out five times and calculate the average values and standard deviations. Due to the SSRN model not performing PCA in the manner described in the original paper, the results are the same when omitting this process in experiments. Other hyperparameters of the network are configured according to their papers.

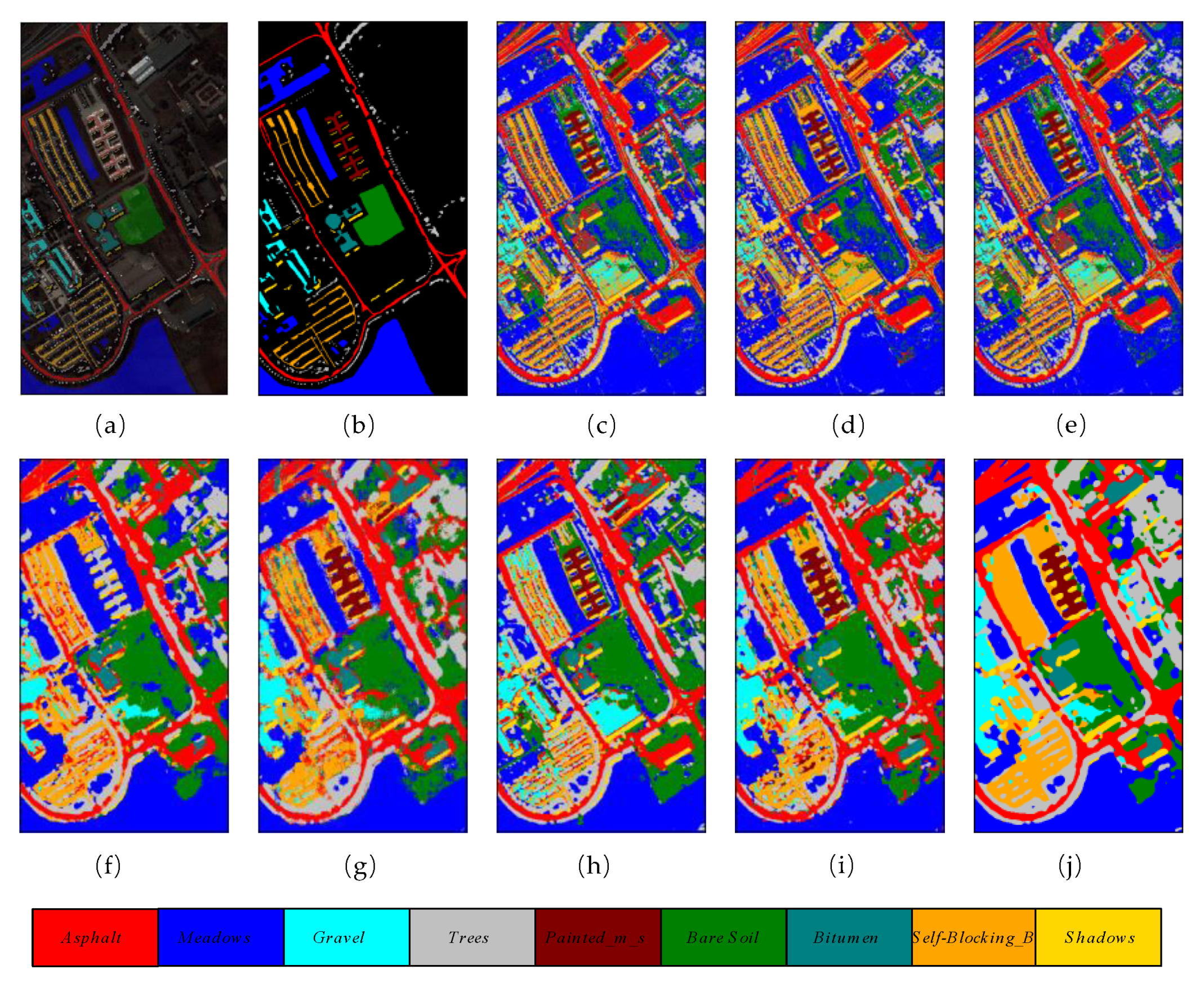

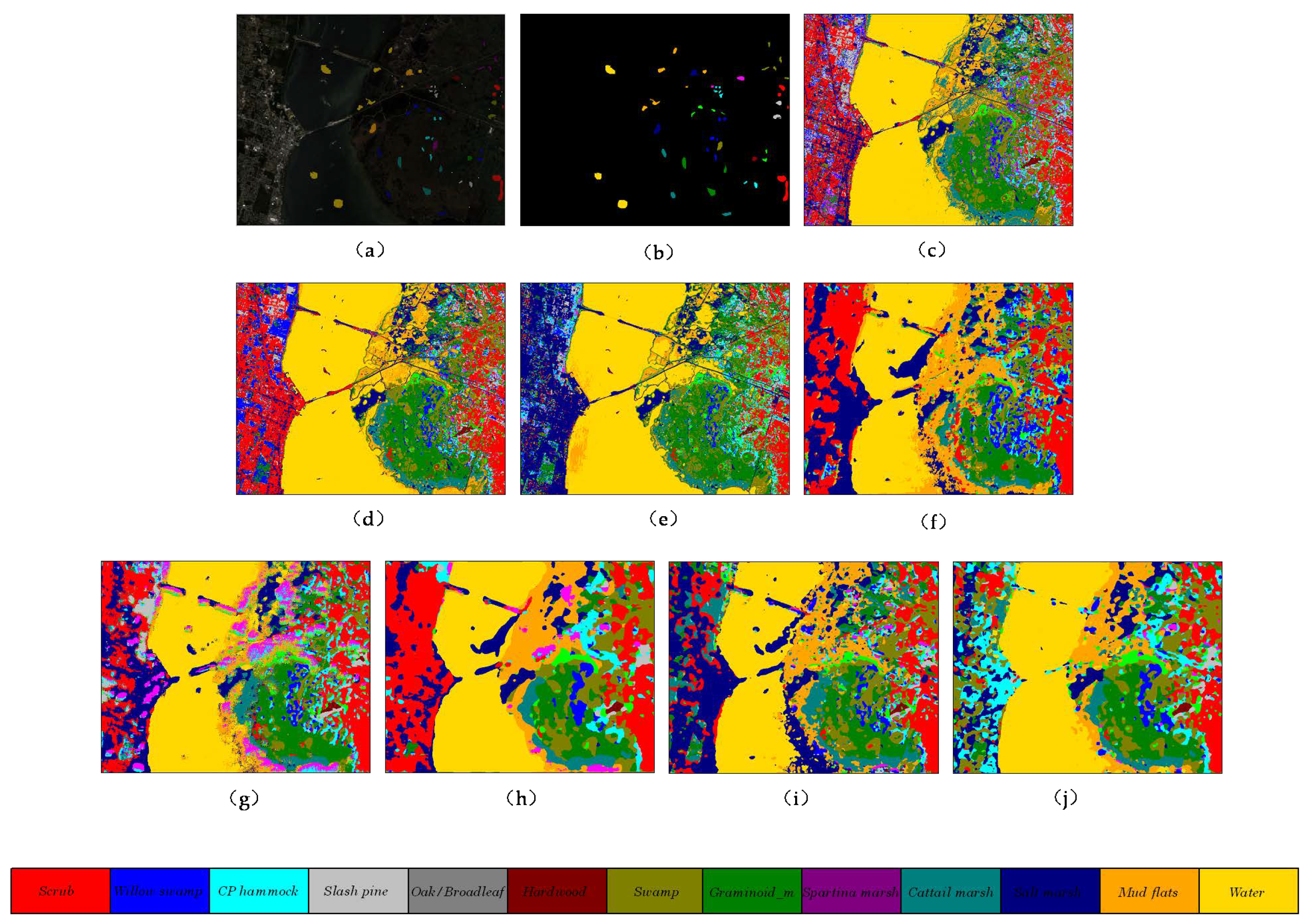

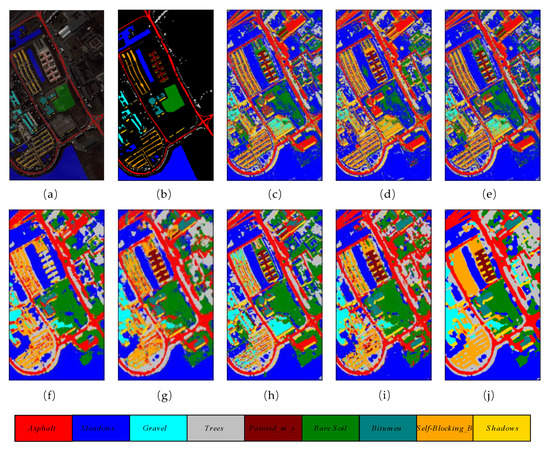

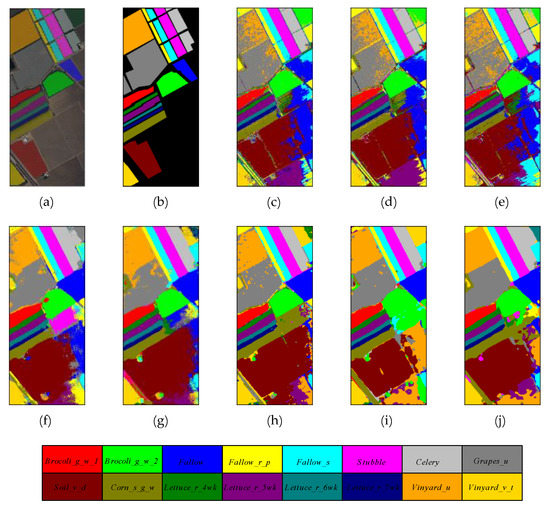

The number of training, validation, and testing samples on University of Pavia dataset for comparison are in accordance with the list of samples in Table 1. Table 5 reveals the overall accuracy, average accuracy, and kappa coefficient of the different methods. It is obvious that classic machine learning methods such as RBF-SVM, RF, and MLR achieve relatively lower overall accuracies compared with other DL methods. They classify through the spectral dimensions of HSIs, which ignore the importance of 2D spatial characteristics. The proposed method obtained the best results among all the comparison methods, with 98.96% overall accuracy, which is 1.63% higher than the second-best (97.33%) achieved by HybridSN. Figure 8 shows the classification maps of these methods.

Table 5.

The categorized results of different methods on the Paiva of University dataset.

Figure 8.

The classification maps of different methods on University of Pavia dataset. (a) False color map with truth labels, (b) ground truth, (c) RBF-SVM (d), MLR (e), RF (f) 2D-CNN (g), PyResNet (h) SSRN, (i) HybridSN, and (j) proposed.

The selection of samples for training, validation, and testing on Kennedy Space Center dataset are consistent with the list of samples in Table 2. It is necessary to increase the training samples for the KSC dataset to avoid the underfit of the network. The 2D CNN model achieves the worst results among all the DL methods, which is difficult to obtain complex spectral-spatial features via 2D convolutional filters. The SSRN model obtains the second-best results due to its stacked 3D convolutional layers, which extract the discriminative spectral-spatial features from raw images.

The proposed method achieves the best results with (OA = 99.07%, AA = 97.70%, and Kappa = 98.97%). The quantitative classification results in terms of three indices, and the accuracies for each class are reported in Table 6. The classification maps of these methods are displayed in Figure 9.

Table 6.

The categorized results of different methods on the Kennedy Space Center dataset.

Figure 9.

The classification maps of different methods on Kennedy Space Center dataset. (a) false color map with truth labels, (b) ground truth, (c) RBF-SVM, (d) MLR, (e) RF, (f) 2D-CNN, (g) PyResNet, (h) SSRN, (i) HybridSN, and (j) proposed.

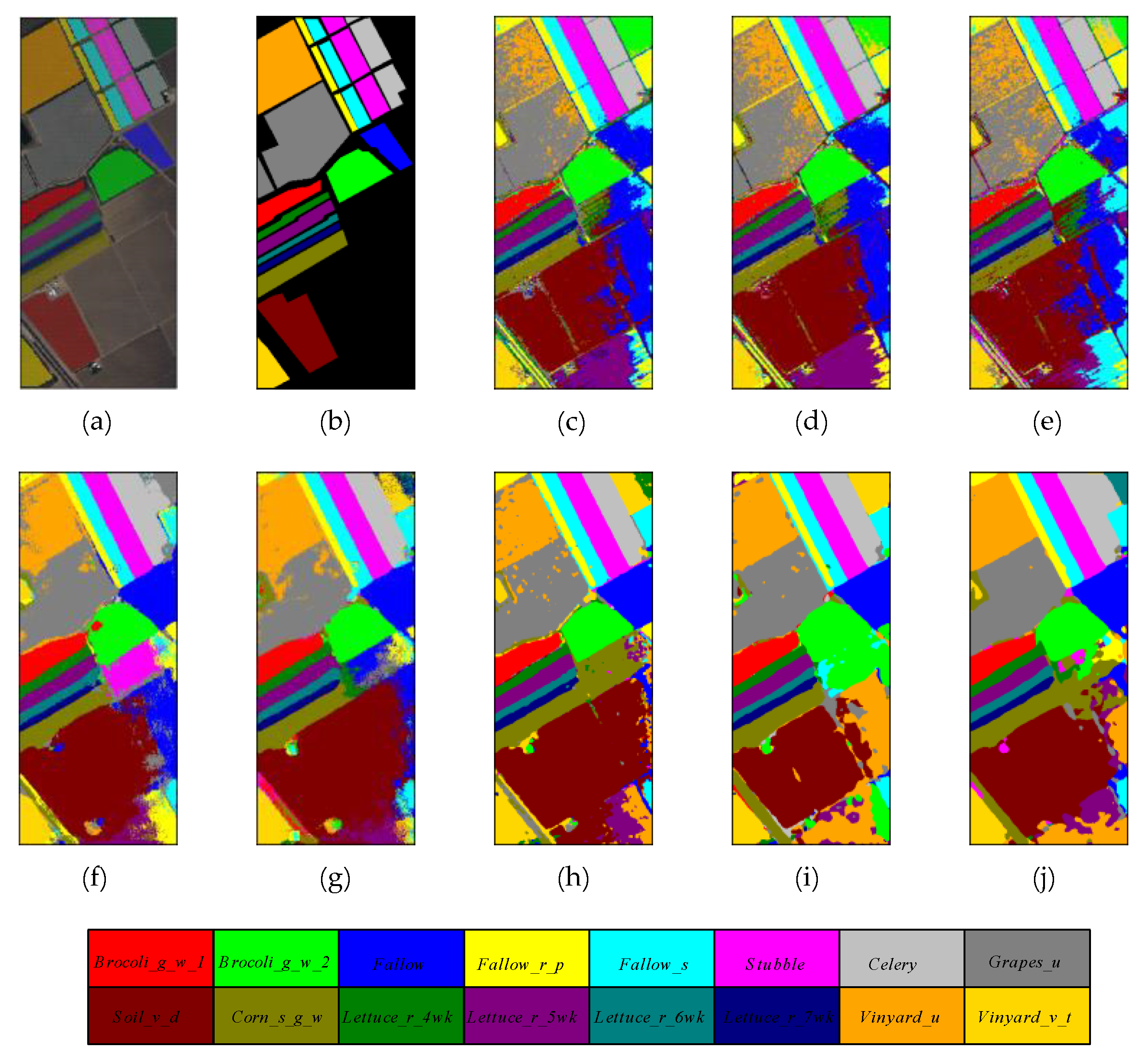

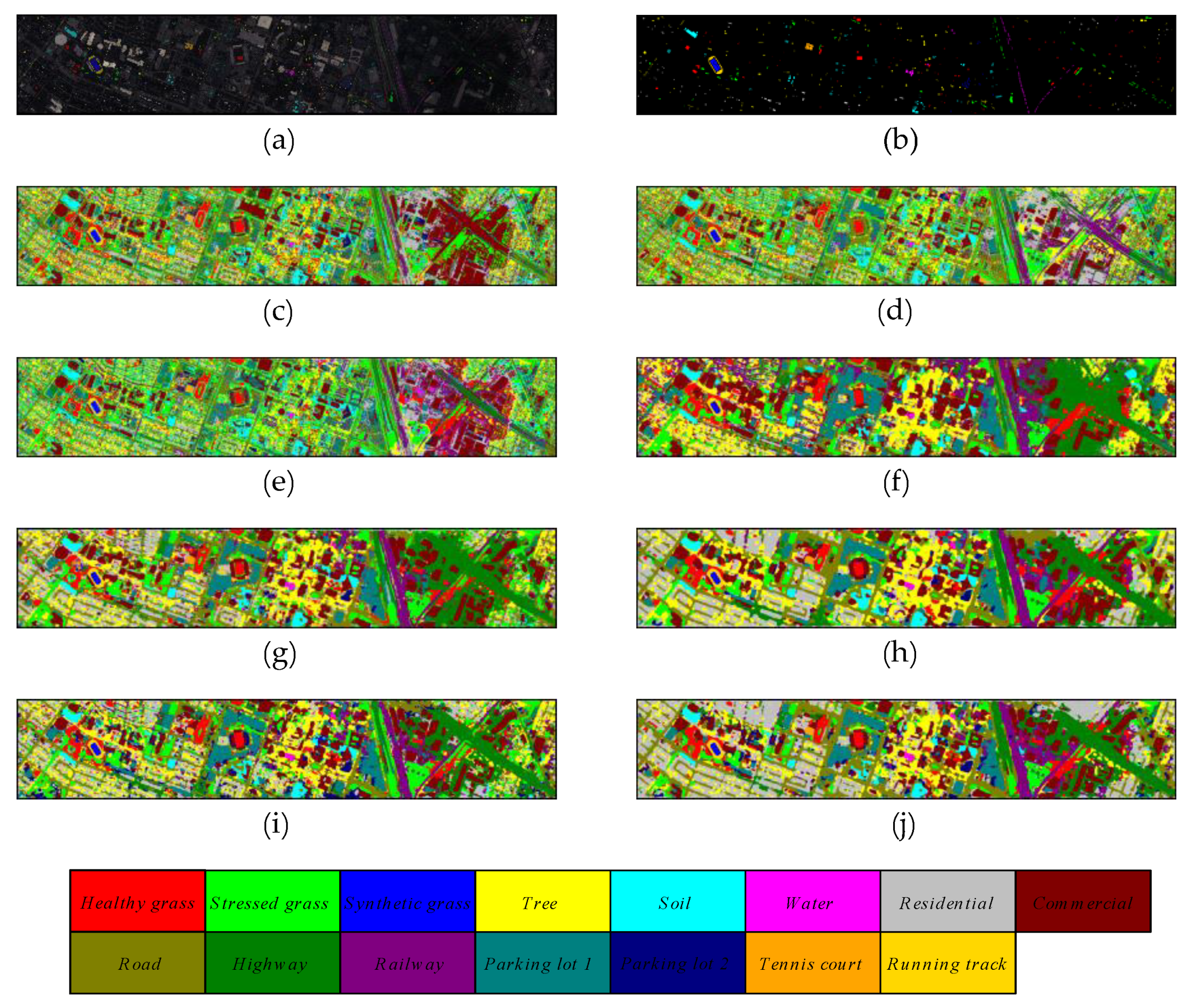

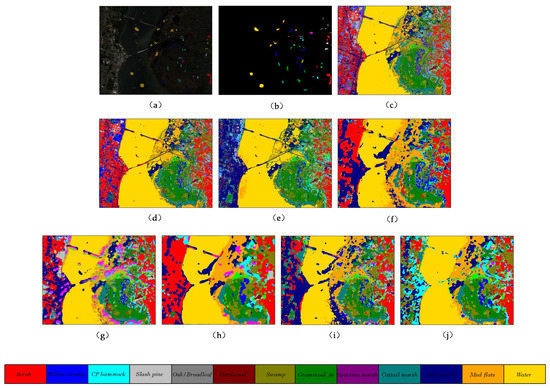

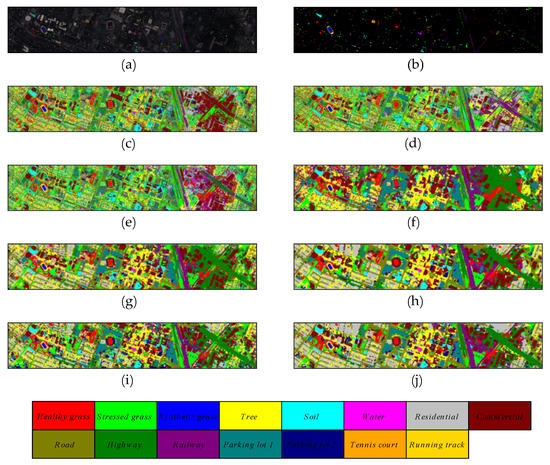

The selection of samples for training, validation, and testing on Salinas Valley and Grass_dfc_2013 datasets is consistent with the list of samples in Table 3 and Table 4. Meanwhile, the quantitative results of different methods on these two datasets are reported in Table 7 and Table 8, respectively. The proposed method outperforms other comparison methods in terms of OA, AA, and Kappa indicators. The 3D multibranch feature fusion module can extract the multiscale features from raw hyperspectral images and improve the performance significantly. Figure 10 and Figure 11 reveal the classification maps of methods on these two datasets, which clearly show that the proposed model has better visual impressions than other comparison methods. For other models, the HybridSN and SSRN models have better classification performance than traditional machine learning methods and shallow DL classifiers. Specifically, the HybridSN model achieves 98.97% in OA, 98.95% in terms of AA, and 98.85% in terms of Kappa on the SA dataset, demonstrating the excellent feature representation ability in deep neural networks. SSRN model achieves 97.62% in OA, 98.47% in AA, and 97.36% in Kappa. The large kernel filters are good at extracting original features from HSIs without the PCA process. By comparison, the shallow 2D classifiers such as 2D CNN and PyResNet cannot obtain comprehensive features and miss rich spectral information during the training process. Therefore, they do not achieve competitive classification performance as HybridSN and SSRN models.

Table 7.

The categorized results of different methods on the Salinas Valley dataset.

Table 8.

The categorized results of different methods on the Grass_dfc_2013 dataset.

Figure 10.

The classification maps of different methods on Salinas Valley dataset. (a) False color map with truth labels, (b) ground truth, (c) RBF-SVM, (d) MLR, (e) RF, (f) 2D-CNN, (g) PyResNet, (h) SSRN, (i) HybridSN, and (j) proposed.

Figure 11.

The classification maps of different methods on Grass_dfc_2013 dataset. (a) false color map with truth labels, (b) ground truth, (c) RBF-SVM, (d) MLR, (e) RF, (f) 2D-CNN, (g) PyResNet, (h) SSRN, (i) HybridSN, and (j) proposed.

4. Conclusions

In this paper, a novel deep learning method called 3D-2D multibranch feature fusion and dense attention network is proposed for hyperspectral images classification. Both 3D and 2D CNNs are combined in an end-to-end network. Specifically, the 3D multibranch feature fusion module is designed to extract multiscale features from the spatial and spectrum of the hyperspectral images. Following that, a 2D dense attention module is introduced. The module consists of a densely connected block and a spatial-channel attention block. The dense block is intended to alleviate gradient vanishing in deep layers and enhance the reuse of features. The attention module includes the spatial attention block and the spectral attention block. The two blocks can adaptively select the discriminative features from the space and the spectrum of redundant hyperspectral images. Combining the densely connected block and attention block can significantly improve the classification performance and accelerate the convergence of the network. The elaborate hybrid module raises the OA by 0.93–1.75% on four different datasets. Additionally, the proposed model outperforms other comparison methods in terms of OA by 1.63–18.11% on the PU dataset, 0.26–16.06% on the KSC dataset, 0.76–13.48% on the SA dataset, and 0.46–23.39% on the Grass_dfc_2013 dataset. These experimental results demonstrate that the model proposed can achieve satisfactory classification performance.

Author Contributions

Y.Z. (Yiyan Zhang) and H.G. conceived the ideas; Z.C., C.L. and Y.Z. (Yunfei Zhang) gave suggestions for improvement; Y.Z. (Yiyan Zhang) and H.G. conducted the experiment and compiled the paper. H.Z. assisted and revised the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by National Natural Science Foundation of China (62071168), Natural Science Foundation of Jiangsu Province (BK20211201), Fundamental Research Funds for the Central Universities (No. B200202183), China Postdoctoral Science Foundation (No. 2021M690885), National Key R&D Program of China (2018YFC1508106).

Data Availability Statement

Some or all data used during the study are available online in accordance with funder data retention polices. (http://www.ehu.eus/ccwintco/index.phptitle=Hyperspectral_Remote_Sensing_Scenes, https://hyperspectral.ee.uh.edu, accessed on 20 August 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Banerjee, B.; Raval, S.; Cullen, P.J. UAV-hyperspectral imaging of spectrally complex environments. Int. J. Remote Sens. 2020, 41, 4136–4159. [Google Scholar] [CrossRef]

- Govender, M.; Chetty, K.; Bulcock, H. A review of hyperspectral remote sensing and its application in vegetation and water resource studies. Water SA 2007, 33, 145–151. [Google Scholar] [CrossRef] [Green Version]

- Mcmanamon, P.F. Dual Use Opportunities for EO Sensors-How to Afford Militarysensing. In Proceedings of the 15th Annual AESS/IEEE Dayton Section Symposium, Fairborn, OH, USA, 14–15 May 1998. [Google Scholar]

- Ham, J.; Chen, Y.; Crawford, M.M.; Ghosh, J. Investigation of the random forest framework for classification of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 492–501. [Google Scholar] [CrossRef] [Green Version]

- Li, J.; Bioucas-Dias, J.M.; Plaza, A. Semisupervised Hyperspectral Image Classification Using Soft Sparse Multinomial Logistic Regression. IEEE Geosci. Remote Sens. Lett. 2012, 10, 318–322. [Google Scholar] [CrossRef]

- Kuo, B.-C.; Ho, H.-H.; Li, C.-H.; Hung, C.-C.; Taur, J.-S. A Kernel-Based Feature Selection Method for SVM With RBF Kernel for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 317–326. [Google Scholar] [CrossRef]

- Imani, M.; Ghassemian, H. Principal component discriminant analysis for feature extraction and classification of hyperspectral images. In Proceedings of the 2014 Iranian Conference on Intelligent Systems (ICIS), Bam, Iran, 4–6 February 2014; pp. 1–5. [Google Scholar]

- Gao, H.; Zhang, Y.; Chen, Z.; Li, C. A Multiscale Dual-Branch Feature Fusion and Attention Network for Hyperspectral Images Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8180–8192. [Google Scholar] [CrossRef]

- Hong, D.; Gao, L.; Yao, J.; Zhang, B.; Plaza, A.; Chanussot, J. Graph Convolutional Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5966–5978. [Google Scholar] [CrossRef]

- Rasti, B.; Hong, D.; Hang, R.; Ghamisi, P.; Kang, X.; Chanussot, J.; Benediktsson, J.A. Feature Extraction for Hyperspectral Imagery: The Evolution From Shallow to Deep: Overview and Toolbox. IEEE Geosci. Remote Sens. Mag. 2020, 8, 60–88. [Google Scholar] [CrossRef]

- Makantasis, K.; Karantzalos, K.; Doulamis, A.; Doulamis, N. Deep supervised learning for hyperspectral data classification through convolutional neural networks. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015. [Google Scholar]

- He, M.; Li, B.; Chen, H. Multi-scale 3D deep convolutional neural network for hyperspectral image classification. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017. [Google Scholar]

- Zhong, Z.; Li, J.; Luo, Z.; Chapman, M. Spectral–Spatial Residual Network for Hyperspectral Image Classification: A 3-D Deep Learning Framework. IEEE Trans. Geosci. Remote Sens. 2017, 56, 847–858. [Google Scholar] [CrossRef]

- Roy, S.K.; Krishna, G.; Dubey, S.R.; Chaudhuri, B.B. HybridSN: Exploring 3-D–2-D CNN Feature Hierarchy for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2020, 17, 277–281. [Google Scholar] [CrossRef] [Green Version]

- Itti, L.; Koch, C.; Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 20, 1254–1259. [Google Scholar] [CrossRef] [Green Version]

- Itti, L.C.; Koch, C. Computational Modelling of Visual Attention. Nat. Rev. Neurosci. 2001, 2, 194–203. [Google Scholar] [CrossRef] [Green Version]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141. [Google Scholar]

- Chen, L.; Zhang, H.; Xiao, J.; Nie, L.; Shao, J.; Liu, W.; Chua, T.-S. SCA-CNN: Spatial and Channel-Wise Attention in Convolutional Networks for Image Captioning. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6298–6306. [Google Scholar]

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer Normalization. arXiv 2016, arXiv:1607.06450. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. IEEE Comput. Soc. 2017, 4700–4708. [Google Scholar] [CrossRef] [Green Version]

- Debes, C.; Merentitis, A.; Heremans, R.; Hahn, J.; Frangiadakis, N.; Van Kasteren, T.; Liao, W.; Bellens, R.; Pizurica, A.; Gautama, S.; et al. Hyperspectral and LiDAR Data Fusion: Outcome of the 2013 GRSS Data Fusion Contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2405–2418. [Google Scholar] [CrossRef]

- Paoletti, M.E.; Haut, J.M.; Fernandez-Beltran, R.; Plaza, J.; Plaza, A.J.; Pla, F. Deep Pyramidal Residual Networks for Spectral–Spatial Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 57, 740–754. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).