Smart Fall Detection Framework Using Hybridized Video and Ultrasonic Sensors

Abstract

1. Introduction

2. Materials and Methods

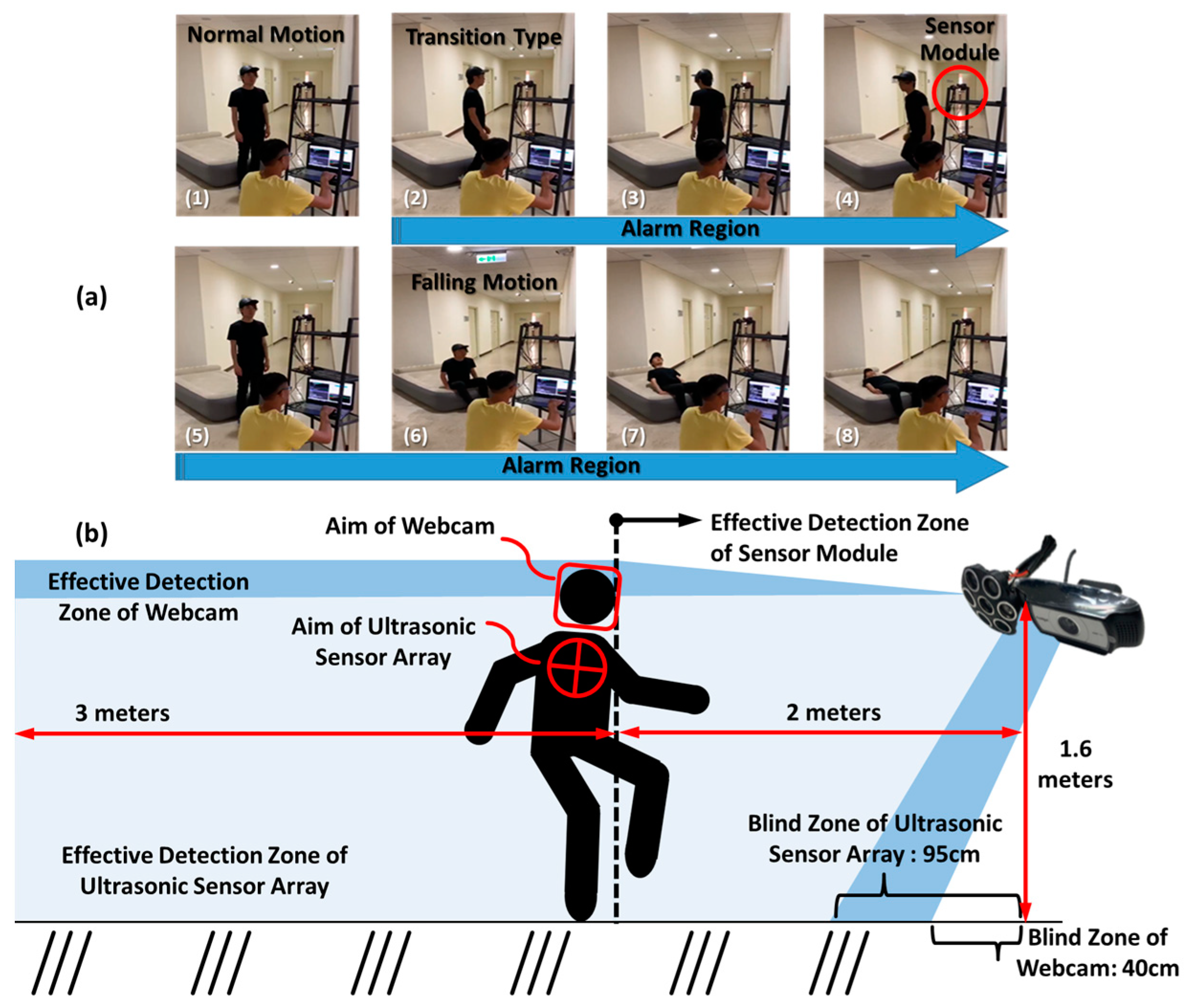

2.1. The Hybridized Platform

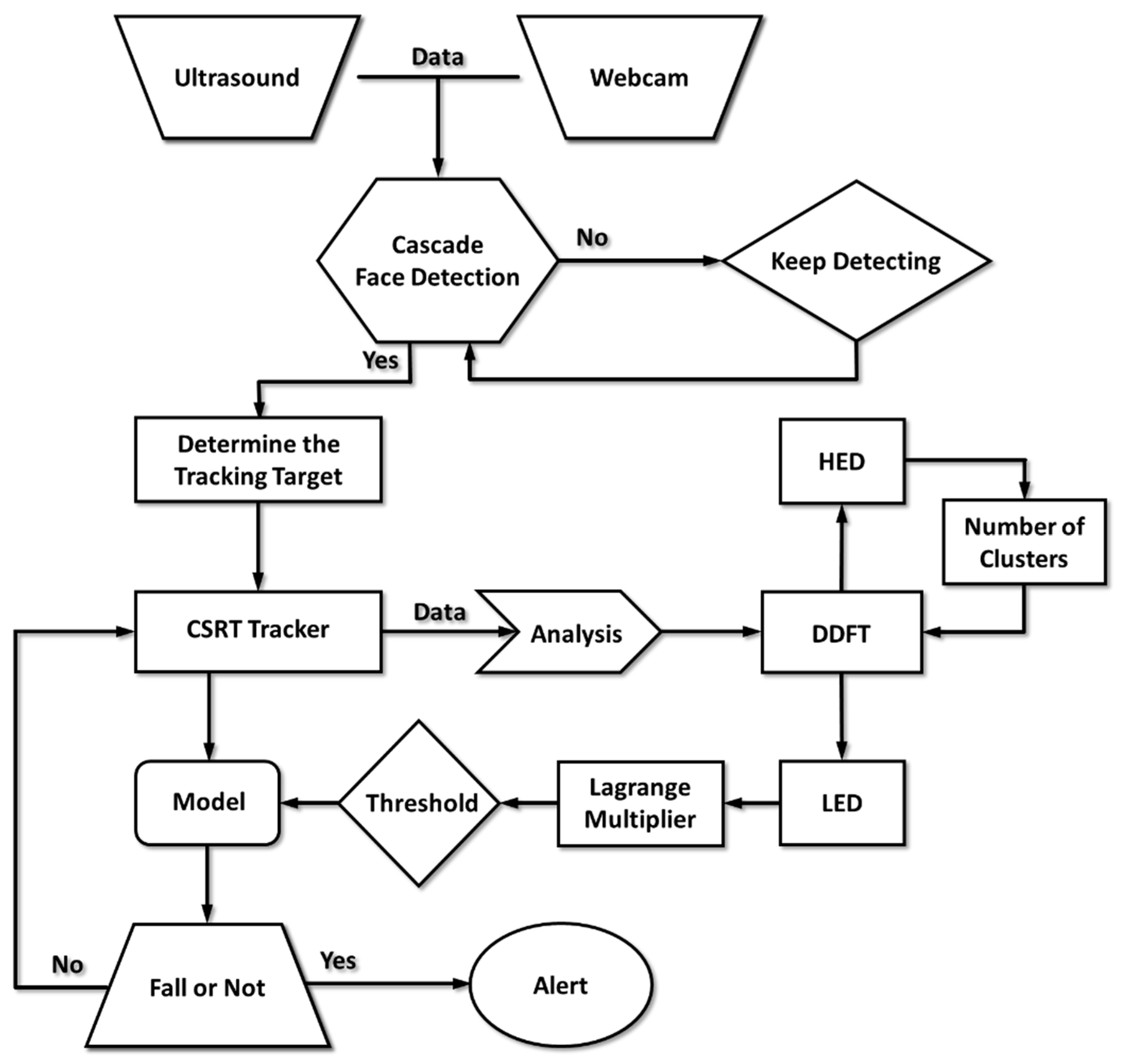

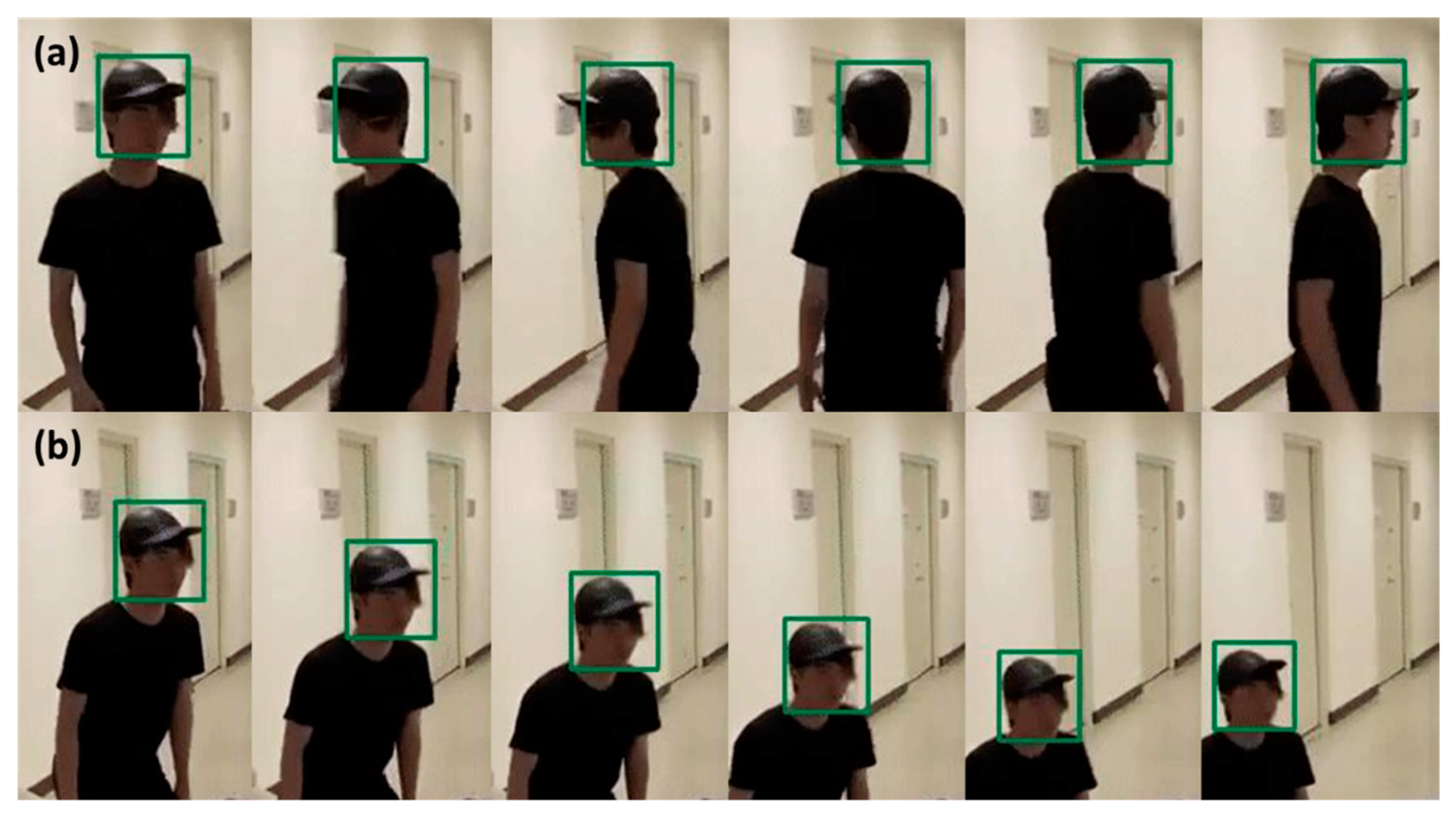

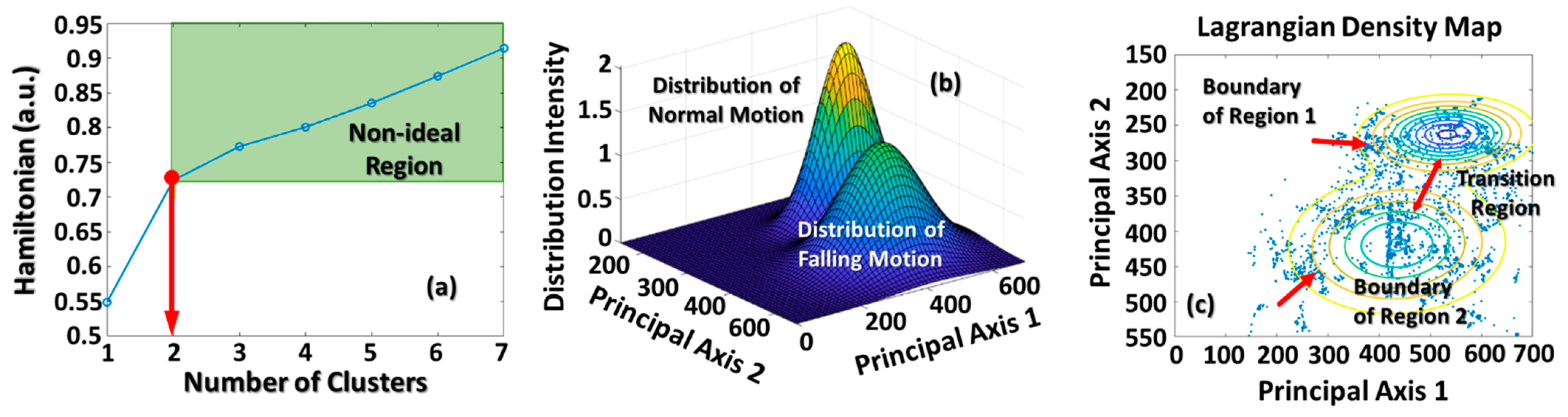

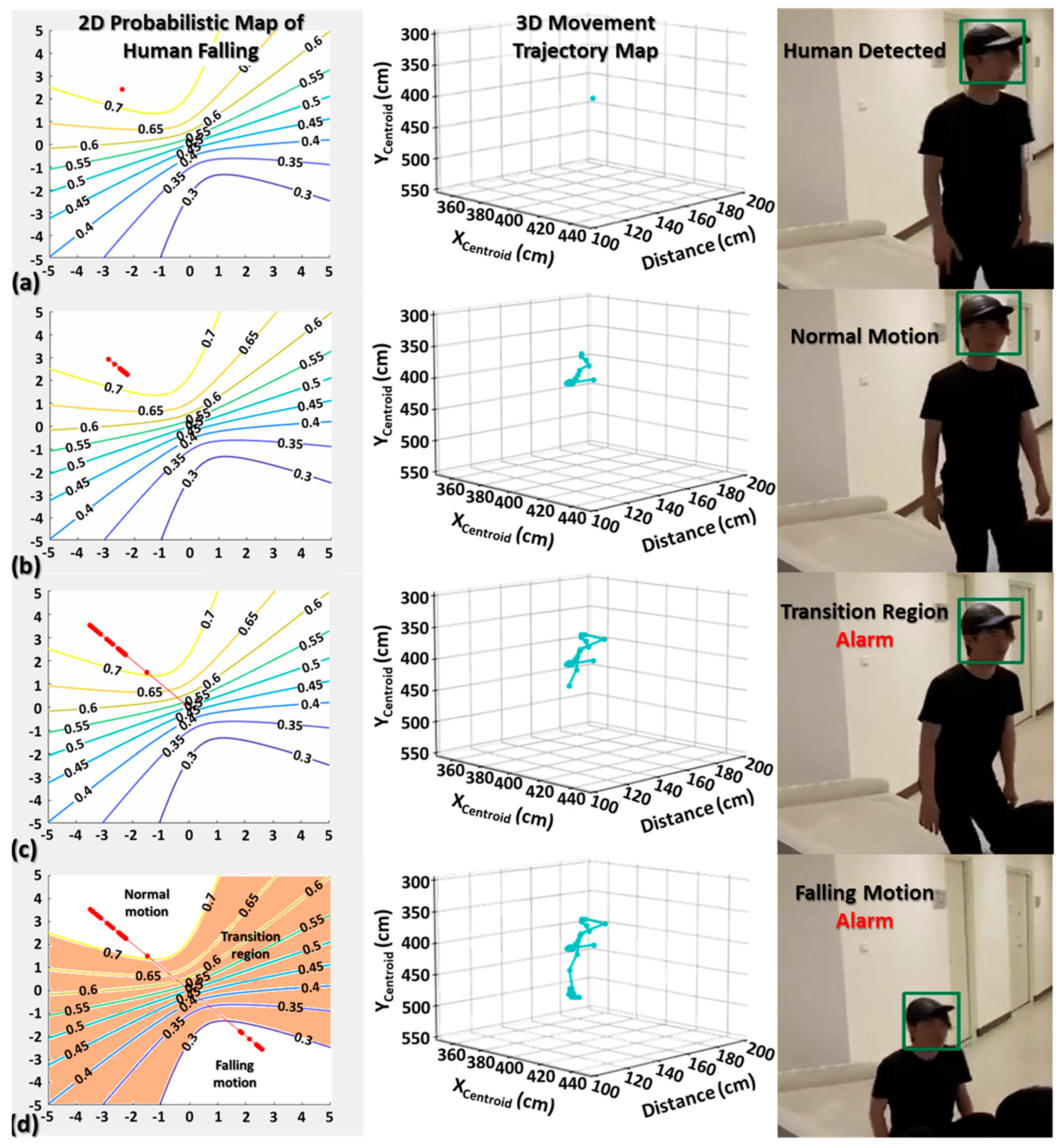

2.2. Tracking Algorithms and Probabilistic Modeling

2.3. Experimental Framework

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| True Positive (TP) | True Negative (TN) | False Positive (FP) | False Negative (FN) |
|---|---|---|---|
| 25,725 | 12,026 | 1292 | 2956 |
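The four counts in the table are enough to derive the usual binary-classification metrics. The sketch below is illustrative only: it assumes the counts describe fall versus non-fall decisions, and the function name `confusion_metrics` is hypothetical rather than taken from the authors' code. The values it prints are computed here from the table and are not quoted from the paper's own reported figures.

```python
def confusion_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Derive standard binary-classification metrics from confusion-matrix counts."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,    # share of all decisions that were correct
        "sensitivity": tp / (tp + fn),    # recall, or true positive rate
        "specificity": tn / (tn + fp),    # true negative rate
        "precision": tp / (tp + fp),      # positive predictive value
    }


# Counts copied from the table above (assumed to be fall / non-fall decisions).
metrics = confusion_metrics(tp=25725, tn=12026, fp=1292, fn=2956)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

With these counts the accuracy works out to roughly 0.90 and the precision to roughly 0.95. Sensitivity and specificity are reported separately because the positive and negative classes are unbalanced (28,681 positives against 13,318 negatives).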

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hsu, F.-S.; Chang, T.-C.; Su, Z.-J.; Huang, S.-J.; Chen, C.-C. Smart Fall Detection Framework Using Hybridized Video and Ultrasonic Sensors. Micromachines 2021, 12, 508. https://doi.org/10.3390/mi12050508
