A Non-Contact Fall Detection Method for Bathroom Application Based on MEMS Infrared Sensors

Abstract

1. Introduction

2. Materials and Methods

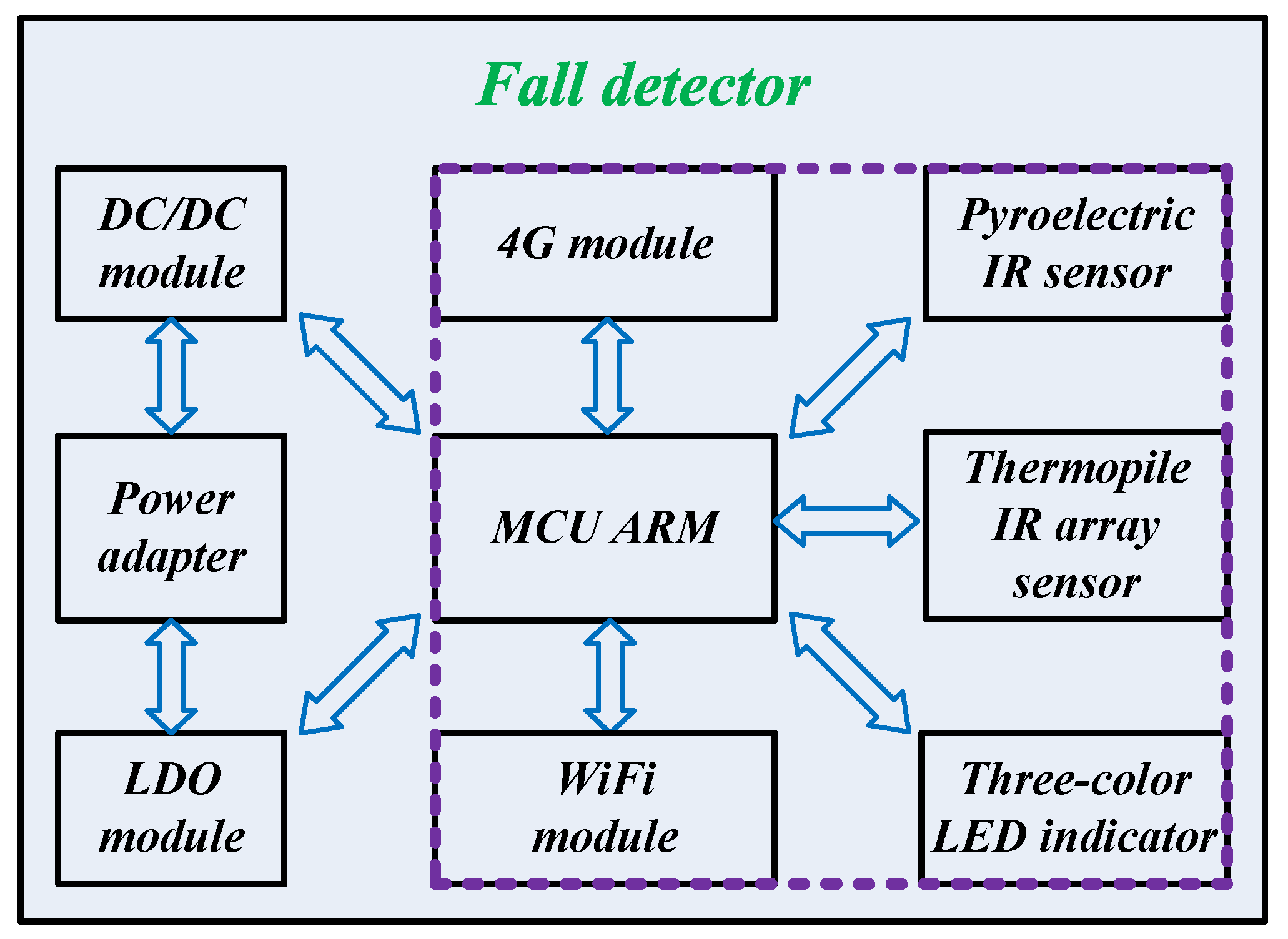

2.1. System Design

- (1) Power supply subsystem: A low-dropout regulator (LDO) and a DC/DC converter, fed by a power adapter, supply power to the whole system.

- (2) Processor subsystem: An STM32F411 ARM microcontroller serves as the edge-computing MCU. A WiFi module (WIFI_WRG1, Tuya Co. Ltd., Hangzhou, China) handles remote communication: alarm information is sent to the management system operated by the caregiver, and the emergency contacts registered in the app are notified by IP call and message. Because the WiFi signal is sometimes unstable and the detector can easily drop off the network, a 4G module (PAD_ML302, China Mobile Co. Ltd., Chongqing, China) is also included in the detector. WiFi and 4G dual communication thus greatly improves the alarm success rate. Furthermore, positioning with the WiFi and 4G modules facilitates rapid rescue.

- (3) Sensor subsystem: A PIR sensor and a thermopile IR array sensor detect body movement and the thermal image, respectively, and both signals are used for fall recognition. If a fall event is detected, the detector sends a remote alarm through the wireless modules, and the LED indicator lights up red.
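The dual-channel alarm path described in item (2) can be sketched as follows. This is a minimal illustration under stated assumptions, not the detector firmware. Each transport is modelled as a hypothetical Python callable that returns True on delivery, because the real WIFI_WRG1 and PAD_ML302 interfaces are not described here. WiFi is assumed to be tried first, with 4G as the fallback. The helper `dual_channel_success` assumes that the two links fail independently, which is an idealization.

```python
from typing import Callable, Sequence

# Hypothetical transport type: takes the alarm payload, returns True if delivered.
# Stand-ins for the WiFi (WIFI_WRG1) and 4G (PAD_ML302) links; real APIs not shown.
Transport = Callable[[str], bool]


def send_alarm(message: str, channels: Sequence[Transport]) -> bool:
    """Try each channel in priority order; stop at the first successful delivery."""
    for send in channels:
        if send(message):
            return True
    return False  # every channel failed


def dual_channel_success(p_fail_wifi: float, p_fail_4g: float) -> float:
    """Probability that at least one link delivers the alarm.

    Assumes independent link failures: the alarm is lost only if both fail.
    """
    return 1.0 - p_fail_wifi * p_fail_4g


if __name__ == "__main__":
    wifi_down: Transport = lambda msg: False  # simulated WiFi dropout
    lte_up: Transport = lambda msg: True      # simulated working 4G link
    print(send_alarm("FALL DETECTED", [wifi_down, lte_up]))  # True via 4G fallback
    print(dual_channel_success(0.1, 0.1))  # hypothetical 10% failure rate per link
```

Suppose each link has a hypothetical 10% failure rate and failures are independent. A single link would then deliver 90% of alarms, while the pair delivers about 99%. Correlated outages, such as a power cut affecting both links, would reduce this gain.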

2.2. Image Processing

2.2.1. Signal Filtering

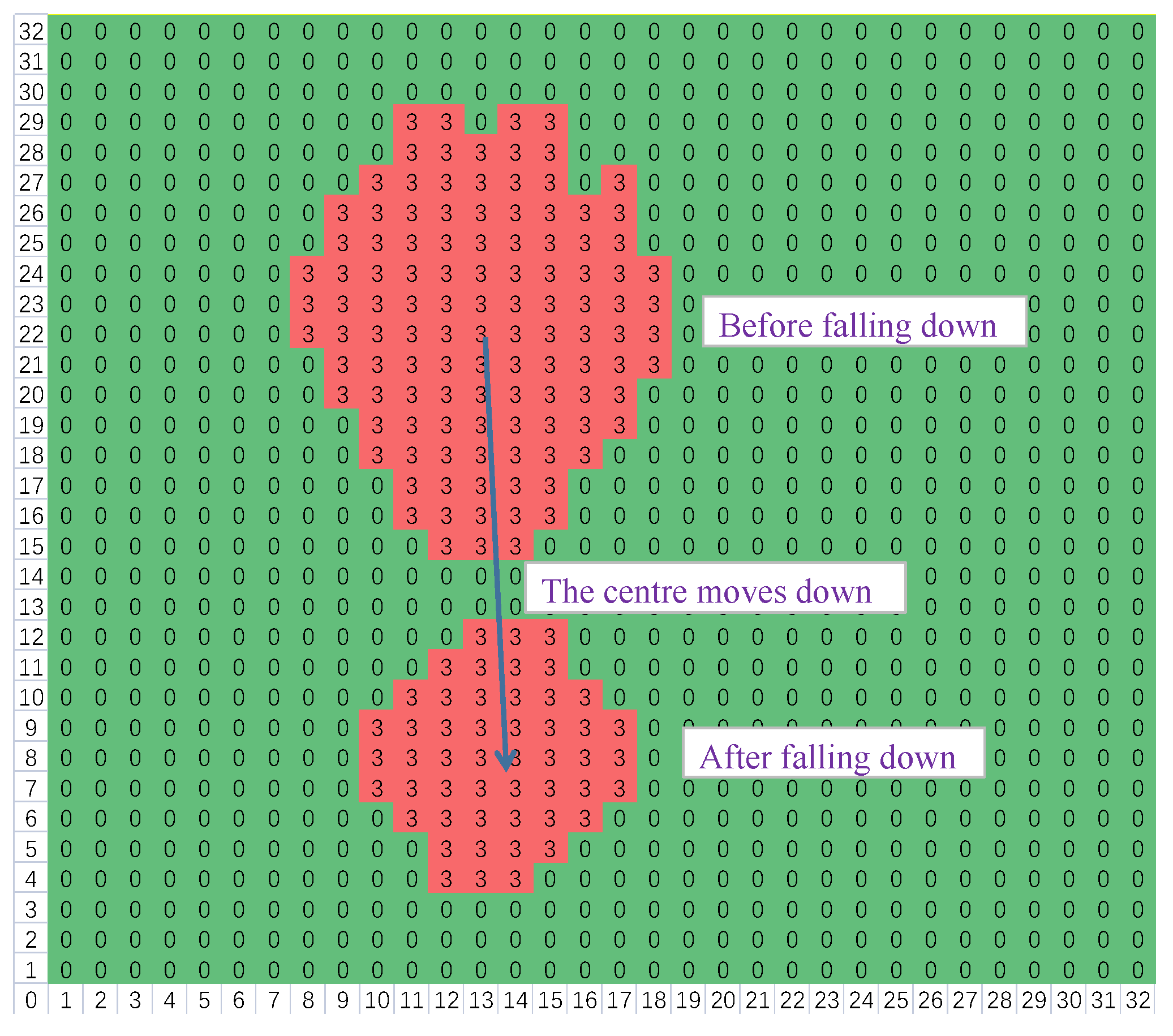

2.2.2. Body Positioning

- (1) The first scan: Define a label array L whose elements are all initialized to 0. To allow for boundary extension, L has a size of 34 × 34. Set the block number bn to 1. For the pixel at row r (1 ≤ r ≤ 32) and column c (1 ≤ c ≤ 32), define the set of its eight neighboring labels as P1 = {L[r − 1][c − 1], L[r − 1][c], L[r − 1][c + 1], L[r][c − 1], L[r][c + 1], L[r + 1][c − 1], L[r + 1][c], L[r + 1][c + 1]}. Removing duplicate values and zeros from P1 yields a new set P2. During the progressive (row-by-row) scan, if P2 is empty, bn is assigned to L[r][c] and bn is incremented to bn + 1. Otherwise, the minimum of P2 is assigned to L[r][c]. In addition, if P2 contains more than one element, the corresponding blocks are adjacent, and P2 is added to a relationship table Q, which is a two-dimensional (2D) array that stores a series of sets. The pseudo-code is shown as follows:

- (2) The second scan: After the first scan, some blocks may be adjacent to one another. As depicted in Figure 10, blocks 3, 4, and 5 are connected. These blocks should be merged, so a second scan is necessary. First, the sets in Q are compared in pairs, and any two sets with a non-empty intersection are replaced by their union. Second, for each set in Q, all points whose labels belong to that set are relabeled with the minimum block number of the set. In this way, all adjacent blocks are merged. As illustrated in Figure 11, blocks 3, 4, and 5 are merged to form block 3. The pseudo-code is given as (5), where cnt is a counter vector that records the number of points in each block.

- (3) Owing to environmental interference, several high-temperature blocks may be picked out. Because the human body should form the largest block, only the largest block is retained and all others are removed. The pseudo-code is shown as (6), where id is the block number of the largest block. As depicted in Figure 12, blocks 1, 2, and 6 have been eliminated. Once a potential body area has been locked, it is combined with the output of the PIR sensor to judge whether a fall event has occurred. This judgment relies on feature extraction.
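The two scans and the largest-block filter can be sketched in Python as follows. This is an illustrative reimplementation of the steps described above, not the authors' pseudo-code, and it rests on four assumptions:

- The input is an already binarized 32 × 32 mask (1 = high-temperature pixel), because the thresholding step is not given here.
- Only foreground pixels are labeled.
- The pairwise union of sets in Q is repeated until no two sets intersect, so that merging is transitive.
- The counter cnt is built inside the largest-block step.

The function names `label_blocks` and `keep_largest_block` are hypothetical.

```python
from typing import Dict, List, Set

N = 32  # sensor resolution implied by 1 <= r, c <= 32


def label_blocks(mask: List[List[int]]) -> List[List[int]]:
    """Two-pass 8-connected block labelling of an N x N binary mask.

    Returns the (N + 2) x (N + 2) label array L; the zero border
    plays the role of the boundary extension.
    """
    L = [[0] * (N + 2) for _ in range(N + 2)]
    bn = 1                  # next unused block number
    Q: List[Set[int]] = []  # relationship table of adjacent block numbers

    # First scan: raster order over the interior of the padded array.
    for r in range(1, N + 1):
        for c in range(1, N + 1):
            if not mask[r - 1][c - 1]:
                continue  # assumption: only foreground (hot) pixels are labelled
            p1 = [L[r + dr][c + dc]
                  for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
            p2 = {v for v in p1 if v != 0}  # drop zeros and duplicates
            if not p2:
                L[r][c] = bn
                bn += 1
            else:
                L[r][c] = min(p2)
                if len(p2) > 1:
                    Q.append(p2)  # these blocks touch each other

    # Second scan, step 1: union intersecting sets until all are disjoint.
    merged = True
    while merged:
        merged = False
        for i in range(len(Q)):
            for j in range(i + 1, len(Q)):
                if Q[i] & Q[j]:
                    Q[i] |= Q.pop(j)
                    merged = True
                    break
            if merged:
                break

    # Step 2: relabel each merged block with the minimum number in its set.
    remap: Dict[int, int] = {v: min(s) for s in Q for v in s}
    for r in range(1, N + 1):
        for c in range(1, N + 1):
            L[r][c] = remap.get(L[r][c], L[r][c])
    return L


def keep_largest_block(L: List[List[int]]) -> List[List[int]]:
    """Return a copy of L in which only the block with the most points survives."""
    cnt: Dict[int, int] = {}  # points per block number
    for row in L:
        for v in row:
            if v:
                cnt[v] = cnt.get(v, 0) + 1
    if not cnt:
        return [row[:] for row in L]  # no hot pixels at all
    block_id = max(cnt, key=cnt.get)
    return [[v if v == block_id else 0 for v in row] for row in L]
```

The fixed-point loop over Q is a simple way to make the pairwise unions transitive on a 32 × 32 image. For larger images, a union-find structure would be the usual choice.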

2.3. Feature Extraction

2.4. Pattern Recognition

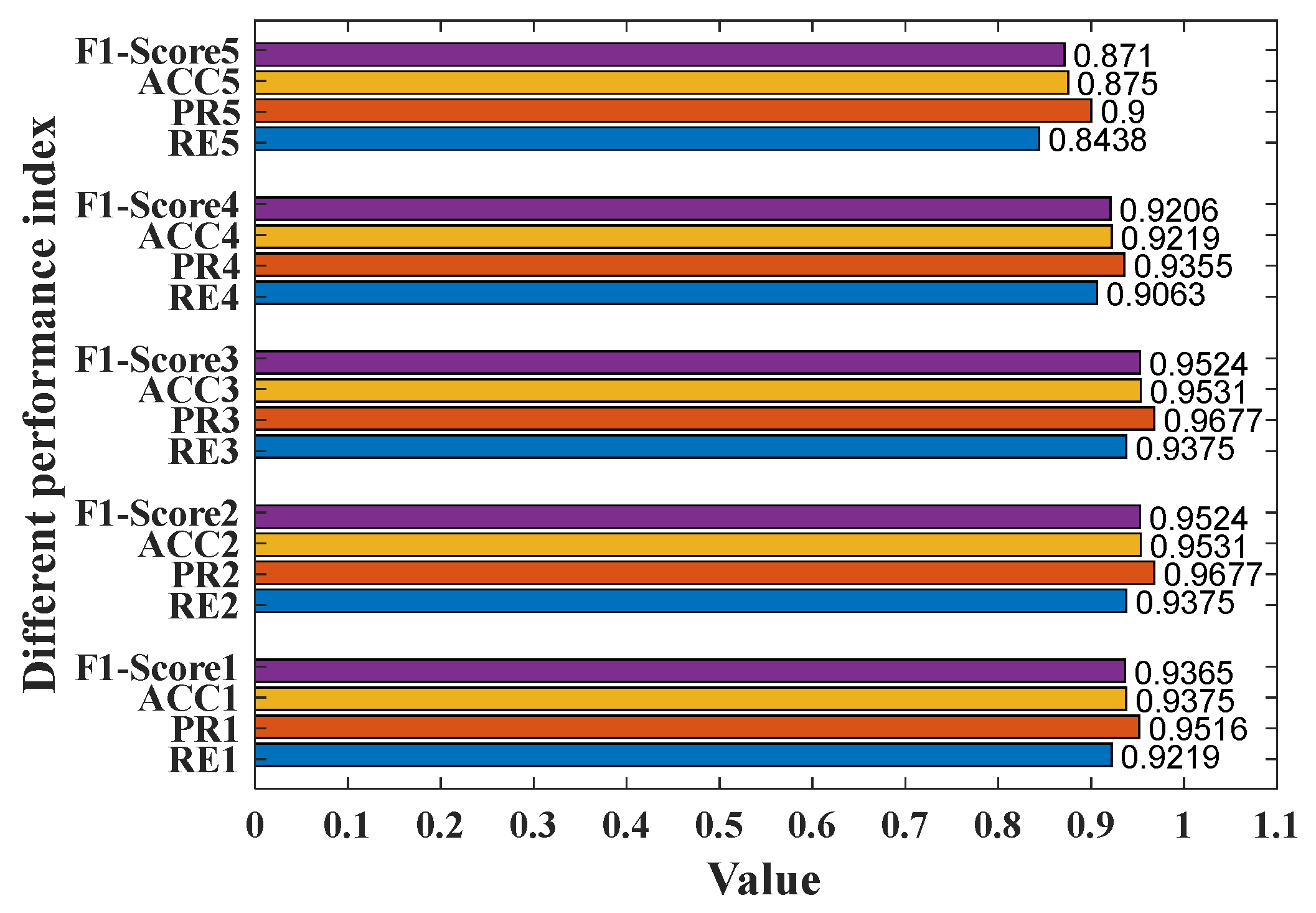

3. Experimental Results

3.1. Performance Indices

3.2. Test Scheme

3.3. Test Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. United Nations, Department of Economic and Social Affairs. World Population Ageing. 2015. Available online: http://www.un.org/en/development/desa/population/publications/pdf/ageing/WPA2015_Report.pdf (accessed on 1 June 2019).

2. Naja, S.; Makhlouf, M.M.E.D.; Chehab, M.A.H. An ageing world of the 21st century: A literature review. Int. J. Community Med. Public Health 2017, 4, 4363.

3. World Health Organization. Number of People Over 60 Years Set to Double by 2050; Major Societal Changes Required. 2015. Available online: https://www.who.int/news/item/30-09-2015-who-number-of-people-over-60-years-set-to-double-by-2050-major-societal-changes-required (accessed on 1 June 2019).

4. Singh, A.; Rehman, S.U.; Yongchareon, S.; Chong, P.H.J. Sensor Technologies for Fall Detection Systems: A Review. IEEE Sens. J. 2020, 20, 6889–6919.

5. Chaccour, K.; Darazi, R.; El Hassani, A.H.; Andres, E. From Fall Detection to Fall Prevention: A Generic Classification of Fall-Related Systems. IEEE Sens. J. 2016, 17, 812–822.

6. Yacchirema, D.; De Puga, J.S.; Palau, C.; Esteve, M. Fall detection system for elderly people using IoT and big data. Procedia Comput. Sci. 2018, 130, 603–610.

7. Al Nahian, J.; Ghosh, T.; Al Banna, H.; Aseeri, M.A.; Uddin, M.N.; Ahmed, M.R.; Mahmud, M.; Kaiser, M.S. Towards an Accelerometer-Based Elderly Fall Detection System Using Cross-Disciplinary Time Series Features. IEEE Access 2021, 9, 39413–39431.

8. Boutellaa, E.; Kerdjidj, O.; Ghanem, K. Covariance matrix based fall detection from multiple wearable sensors. J. Biomed. Inform. 2019, 94, 103189.

9. Hashim, H.A.; Mohammed, S.L.; Gharghan, S.K. Accurate fall detection for patients with Parkinson’s disease based on a data event algorithm and wireless sensor nodes. Measurement 2020, 156, 107573.

10. Kostopoulos, P.; Kyritsis, A.I.; Deriaz, M.; Konstantas, D. F2D: A Location Aware Fall Detection System Tested with Real Data from Daily Life of Elderly People. Procedia Comput. Sci. 2016, 98, 212–219.

11. Xi, X.; Jiang, W.; Lü, Z.; Miran, S.M.; Luo, Z.-Z. Daily Activity Monitoring and Fall Detection Based on Surface Electromyography and Plantar Pressure. Complexity 2020, 2020, 9532067.

12. Cotechini, V.; Belli, A.; Palma, L.; Morettini, M.; Burattini, L.; Pierleoni, P. A dataset for the development and optimization of fall detection algorithms based on wearable sensors. Data Brief 2019, 23, 103839.

13. Astriani, M.S.; Bahana, R.; Kurniawan, A.; Yi, L.H. Promoting Data Availability Framework by Using Gamification on Smartphone Fall Detection Based Human Activities. Procedia Comput. Sci. 2021, 179, 913–919.

14. Cippitelli, E.; Fioranelli, F.; Gambi, E.; Spinsante, S. Radar and RGB-Depth Sensors for Fall Detection: A Review. IEEE Sens. J. 2017, 17, 3585–3604.

15. Shu, F.; Shu, J. An eight-camera fall detection system using human fall pattern recognition via machine learning by a low-cost android box. Sci. Rep. 2021, 11, 2417.

16. de Miguel, K.; Brunete, A.; Hernando, M.; Gambao, E. Home Camera-Based Fall Detection System for the Elderly. Sensors 2017, 17, 2864.

17. Kong, X.; Meng, Z.; Nojiri, N.; Iwahori, Y.; Meng, L.; Tomiyama, H. A HOG-SVM Based Fall Detection IoT System for Elderly Persons Using Deep Sensor. Procedia Comput. Sci. 2019, 147, 276–282.

18. Rafferty, J.; Synnott, J.; Nugent, C.; Morrison, G.; Tamburini, E. Fall detection through thermal vision sensing. In Ubiquitous Computing and Ambient Intelligence; Springer: Cham, Switzerland, 2016; pp. 84–90.

19. Chaccour, K.; Darazi, R.; El Hassani, A.H.; Andres, E. Smart carpet using differential piezoresistive pressure sensors for elderly fall detection. In Proceedings of the 2015 IEEE 11th International Conference on Wireless and Mobile Computing, Networking and Communications (WiMob), Abu-Dhabi, United Arab Emirates, 19–21 October 2015; pp. 225–229.

20. Nakamura, T.; Bouazizi, M.; Yamamoto, K.; Ohtsuki, T. Wi-fi-CSI-based fall detection by spectrogram analysis with CNN. In Proceedings of the GLOBECOM 2020–2020 IEEE Global Communications Conference, Taipei, Taiwan, 7–11 December 2020; pp. 1–6.

21. Jokanovic, B.; Amin, M. Fall Detection Using Deep Learning in Range-Doppler Radars. IEEE Trans. Aerosp. Electron. Syst. 2017, 54, 180–189.

22. Tateno, S.; Meng, F.; Qian, R.; Hachiya, Y. Privacy-Preserved Fall Detection Method with Three-Dimensional Convolutional Neural Network Using Low-Resolution Infrared Array Sensor. Sensors 2020, 20, 5957.

23. Liu, Z.; Yang, M.; Yuan, Y.; Kan, K.Y. Fall Detection and Personnel Tracking System Using Infrared Array Sensors. IEEE Sens. J. 2020, 20, 9558–9566.

24. Fan, X.; Zhang, H.; Leung, C.; Shen, Z. Robust unobtrusive fall detection using infrared array sensors. In Proceedings of the 2017 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Daegu, Republic of Korea, 16–18 November 2017; pp. 194–199.

25. Adolf, J.; Macas, M.; Lhotska, L.; Dolezal, J. Deep neural network based body posture recognitions and fall detection from low resolution infrared array sensor. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2394–2399.

26. Hayashida, A.; Moshnyaga, V.; Hashimoto, K. The use of thermal ir array sensor for indoor fall detection. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 594–599.

27. Liang, Q.; Yu, L.; Zhai, X.; Wan, Z.; Nie, H. Activity recognition based on thermopile imaging array sensor. In Proceedings of the 2018 IEEE International Conference on Electro/Information Technology (EIT), Rochester, MI, USA, 3–5 May 2018; pp. 770–773.

28. Guan, Q.; Li, C.; Guo, X.; Shen, B. Infrared signal based elderly fall detection for in-home monitoring. In Proceedings of the 2017 9th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 26–27 August 2017; Volume 1, pp. 373–376.

29. Jansi, R.; Amutha, R. Detection of fall for the elderly in an indoor environment using a tri-axial accelerometer and Kinect depth data. Multidimens. Syst. Signal Process. 2020, 31, 1207–1225.

30. Nadeem, A.; Mehmood, A.; Rizwan, K. A dataset build using wearable inertial measurement and ECG sensors for activity recognition, fall detection and basic heart anomaly detection system. Data Brief 2019, 27, 104717.

31. Chen, Z.; Wang, Y. Infrared–ultrasonic sensor fusion for support vector machine–based fall detection. J. Intell. Mater. Syst. Struct. 2018, 29, 2027–2039.

32. Casilari, E.; Santoyo-Ramón, J.A.; Cano-García, J.M. UMAFall: A Multisensor Dataset for the Research on Automatic Fall Detection. Procedia Comput. Sci. 2017, 110, 32–39.

33. Karayaneva, Y.; Sharifzadeh, S.; Jing, Y.; Chetty, K.; Tan, B. Sparse Feature Extraction for Activity Detection Using Low-Resolution IR Streams. In Proceedings of the 2019 18th IEEE International Conference on Machine Learning and Applications (ICMLA), Boca Raton, FL, USA, 16–19 December 2019; pp. 1837–1843.

34. Mazurek, P.; Wagner, J.; Morawski, R.Z. Use of kinematic and mel-cepstrum-related features for fall detection based on data from infrared depth sensors. Biomed. Signal Process. Control 2018, 40, 102–110.

35. Singh, K.; Rajput, A.; Sharma, S. Human Fall Detection Using Machine Learning Methods: A Survey. Int. J. Math. Eng. Manag. Sci. 2020, 5, 161–180.

36. Iuga, C.; Drăgan, P.; Bușoniu, L. Fall monitoring and detection for at-risk persons using a UAV. IFAC PapersOnLine 2018, 51, 199–204.

37. Jacob, J.; Nguyen, T.; Lie, D.Y.C.; Zupancic, S.; Bishara, J.; Dentino, A.; Banister, R.E. A fall detection study on the sensors placement location and a rule-based multi-thresholds algorithm using both accelerometer and gyroscopes. In Proceedings of the 2011 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE 2011), Taipei, Taiwan, 27–30 June 2011; pp. 666–671.

38. Farsi, M. Application of ensemble RNN deep neural network to the fall detection through IoT environment. Alex. Eng. J. 2021, 60, 199–211.

39. El Kaid, A.; Baïna, K.; Baïna, J. Reduce False Positive Alerts for Elderly Person Fall Video-Detection Algorithm by convolutional neural network model. Procedia Comput. Sci. 2019, 148, 2–11.

40. Mrozek, D.; Koczur, A.; Małysiak-Mrozek, B. Fall detection in older adults with mobile IoT devices and machine learning in the cloud and on the edge. Inf. Sci. 2020, 537, 132–147.

41. Divya, V.; Sri, R.L. Docker-Based Intelligent Fall Detection Using Edge-Fog Cloud Infrastructure. IEEE Internet Things J. 2020, 8, 8133–8144.

42. Chen, Y.; Kong, X.; Meng, L.; Tomiyama, H. An Edge Computing Based Fall Detection System for Elderly Persons. Procedia Comput. Sci. 2020, 174, 9–14.

43. Nakamura, T.; Bouazizi, M.; Yamamoto, K.; Ohtsuki, T. Wi-Fi-Based Fall Detection Using Spectrogram Image of Channel State Information. IEEE Internet Things J. 2022, 9, 17220–17234.

44. Dumitrache, M.; Pasca, S. Fall detection system for elderly with GSM communication and GPS localization. In Proceedings of the 2013 8th International Symposium on Advanced Topics in Electrical Engineering (ATEE), Bucharest, Romania, 23–25 May 2013; pp. 1–6.

45. Chauhan, H.; Rizwan, R.; Fatima, M. IoT Based Fall Detection of a Smart Helmet. In Proceedings of the 2022 7th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 22–24 June 2022; pp. 407–412.

| Factor | Levels |
|---|---|
| Ambient temperature | 18 °C, 21 °C, 24 °C, 27 °C, 30 °C |
| Subject (height) | female (1.6 m), male (1.8 m) |
| Illumination | LED light, sunlight |
| Fall speed | fast, slow |
| Fall state | sitting, lying |
| Fall area | at the boundary, in the center |
| Fall scene | with shower, without shower |

| Fold No. | TP | FN | TN | FP |
|---|---|---|---|---|
| 1 | 59 | 5 | 61 | 3 |
| 2 | 60 | 4 | 62 | 2 |
| 3 | 60 | 4 | 62 | 2 |
| 4 | 58 | 6 | 60 | 4 |
| 5 | 54 | 10 | 58 | 6 |
| Average | 58.2 | 5.8 | 60.6 | 3.4 |
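The fold results are easier to compare if the standard confusion-matrix indices are computed for each fold. The definitions below (accuracy, sensitivity, specificity) are the conventional ones and are assumed here, because the text of Section 3.1 is not reproduced. Each fold contains 64 fall trials (TP + FN) and 64 non-fall trials (TN + FP). With these definitions, folds 2 and 3 reach 122/128 ≈ 95.31% accuracy and fold 5 reaches 112/128 = 87.5%. This matches the 87.5~95.31% range listed for this work in the comparison table. The averaged counts give 118.8/128 ≈ 92.81%.

```python
from typing import Dict, List, Tuple

# (TP, FN, TN, FP) for each fold, copied from the table above.
FOLDS: List[Tuple[int, int, int, int]] = [
    (59, 5, 61, 3),
    (60, 4, 62, 2),
    (60, 4, 62, 2),
    (58, 6, 60, 4),
    (54, 10, 58, 6),
]


def metrics(tp: int, fn: int, tn: int, fp: int) -> Dict[str, float]:
    """Conventional confusion-matrix indices (assumed definitions)."""
    return {
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
        "sensitivity": tp / (tp + fn),  # share of falls that were detected
        "specificity": tn / (tn + fp),  # share of non-falls correctly rejected
    }


if __name__ == "__main__":
    for i, fold in enumerate(FOLDS, start=1):
        m = metrics(*fold)
        print(f"fold {i}: " + ", ".join(f"{k}={v:.2%}" for k, v in m.items()))
```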

| Detection Method | Sensor | Accuracy | Comment | References |
|---|---|---|---|---|
| Wearable techniques | inertial sensors, IMU | 96~100% | The elderly are reluctant to wear the device and tend to forget to charge it. | [6,7,8,9,10,11,12,13] |
| Vision-based techniques | video cameras, depth cameras, or thermal cameras | 96~100% | High cost; privacy violation | [14,15,16,17,18] |
| Ambient-based techniques | pressure sensors, WiFi, or radar sensors | 85~90% | Expensive; relatively low accuracy | [19,20,21] |
| IR sensors | low-resolution IR sensors | 85~97% | Complex bathroom application scenes are not considered. | [22,23,24,25,26,27] |
| Multi-sensors | gyroscope, accelerometer, ECG, ultrasonic sensor, depth sensor, etc. | 90~97% | Complex bathroom application scenes are not considered. | [29,30,31,32] |
| This work | PIR + low-resolution IR sensor | 87.5~95.31% | Suitable for bathroom application | / |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, C.; Liu, S.; Zhong, G.; Wu, H.; Cheng, L.; Lin, J.; Huang, Q. A Non-Contact Fall Detection Method for Bathroom Application Based on MEMS Infrared Sensors. Micromachines 2023, 14, 130. https://doi.org/10.3390/mi14010130
