Wide-Viewing-Angle Integral Imaging System with Full-Effective-Pixels Elemental Image Array

Abstract

1. Introduction

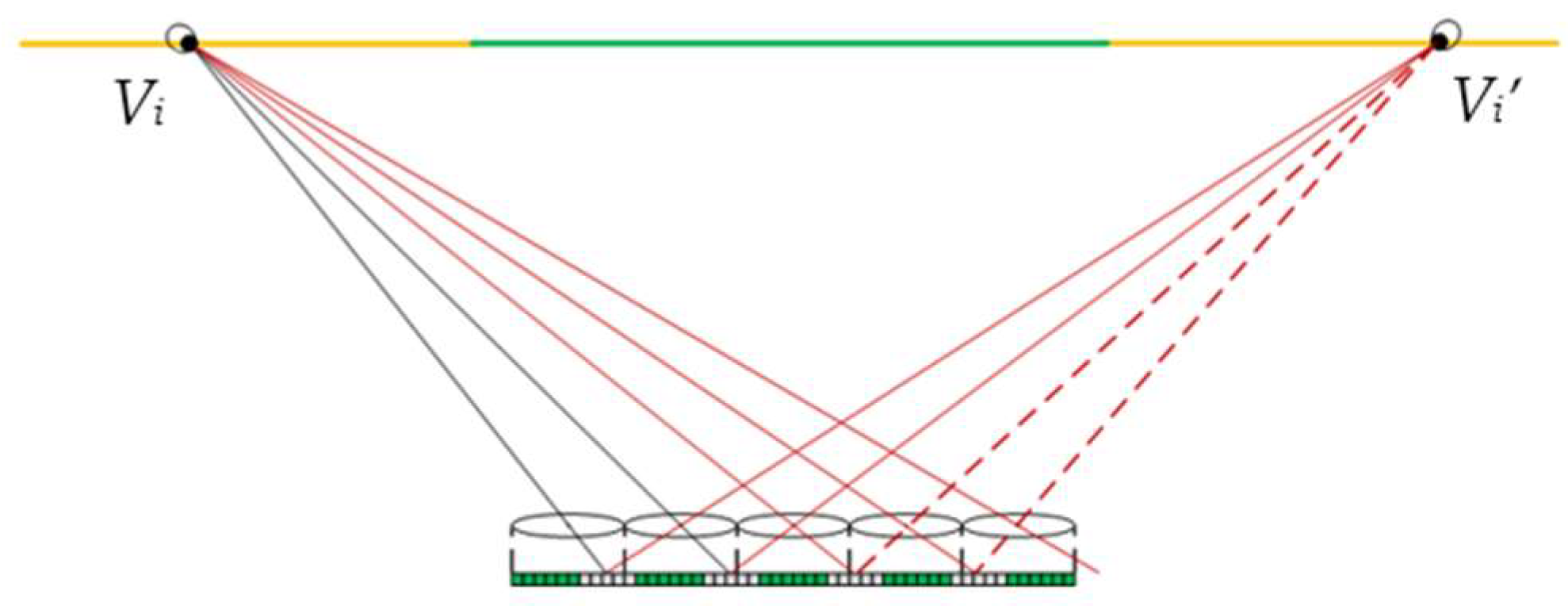

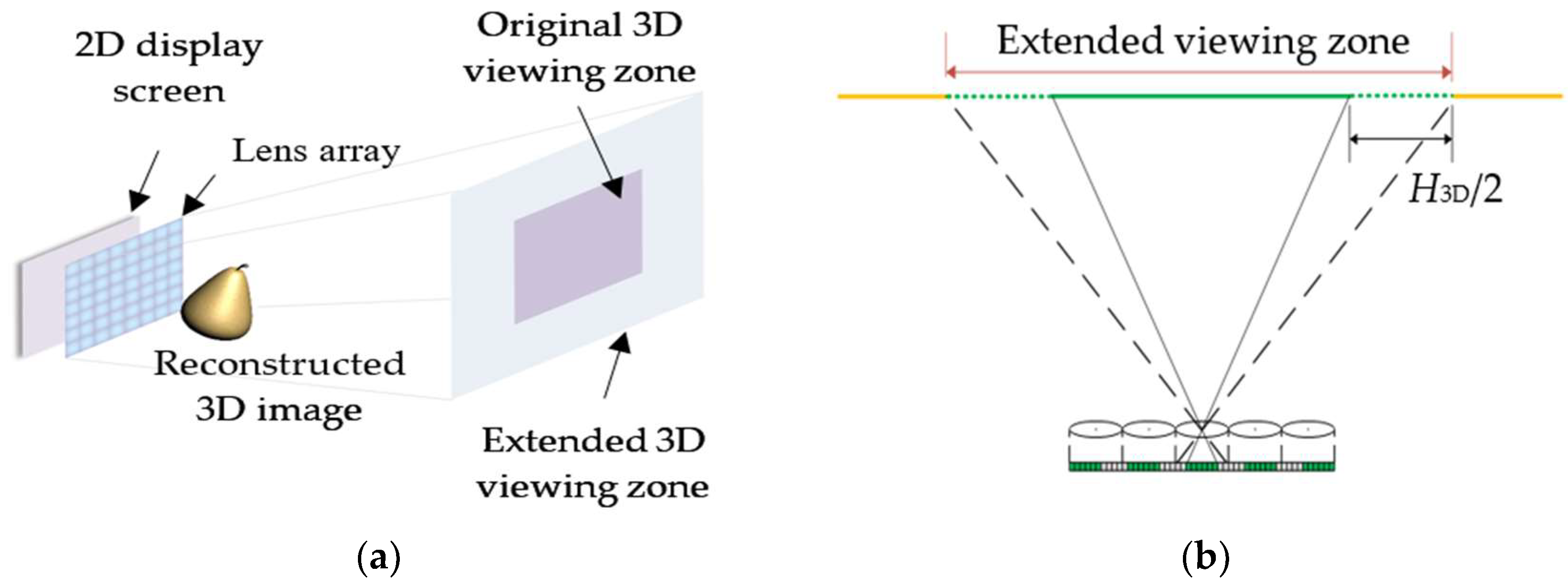

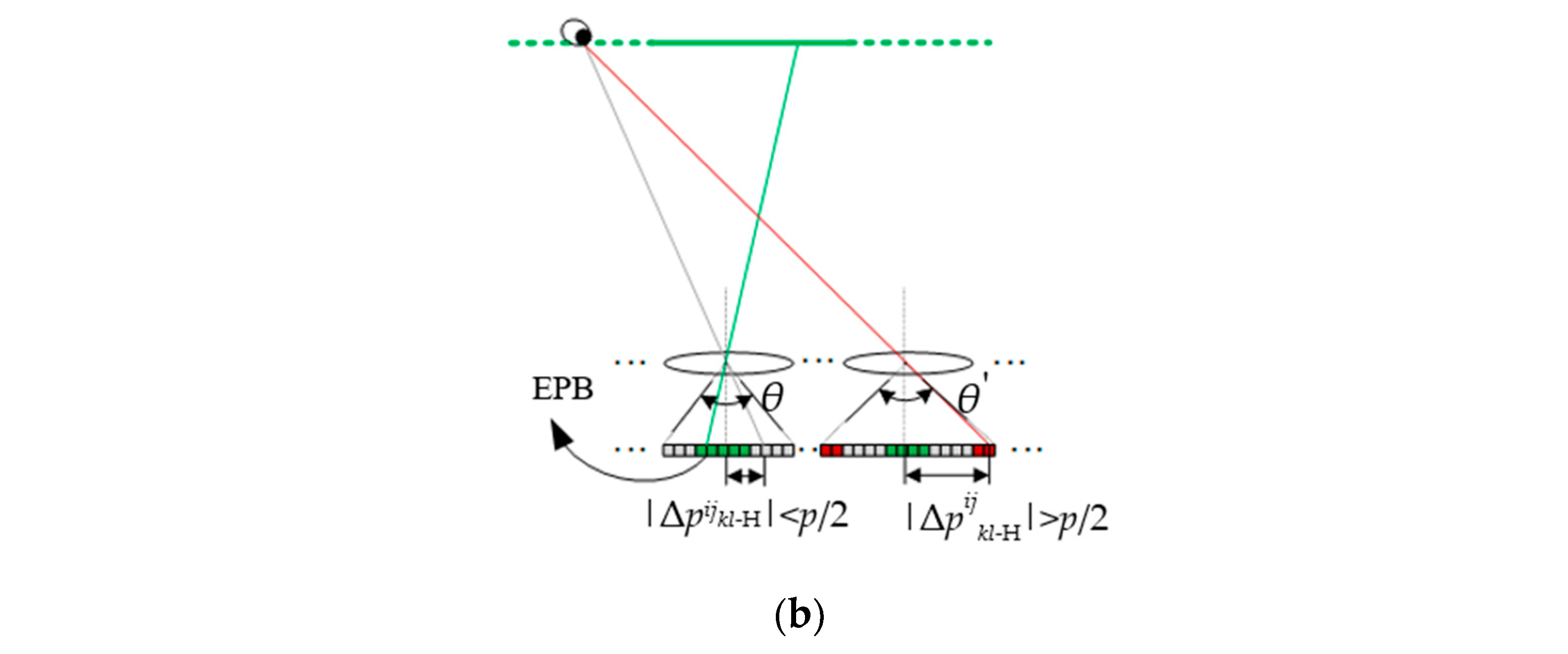

2. Analysis of Viewing Zones and Effective Pixel Block

3. Proposed Wide-Viewing-Angle InIm System with FEP-EIA

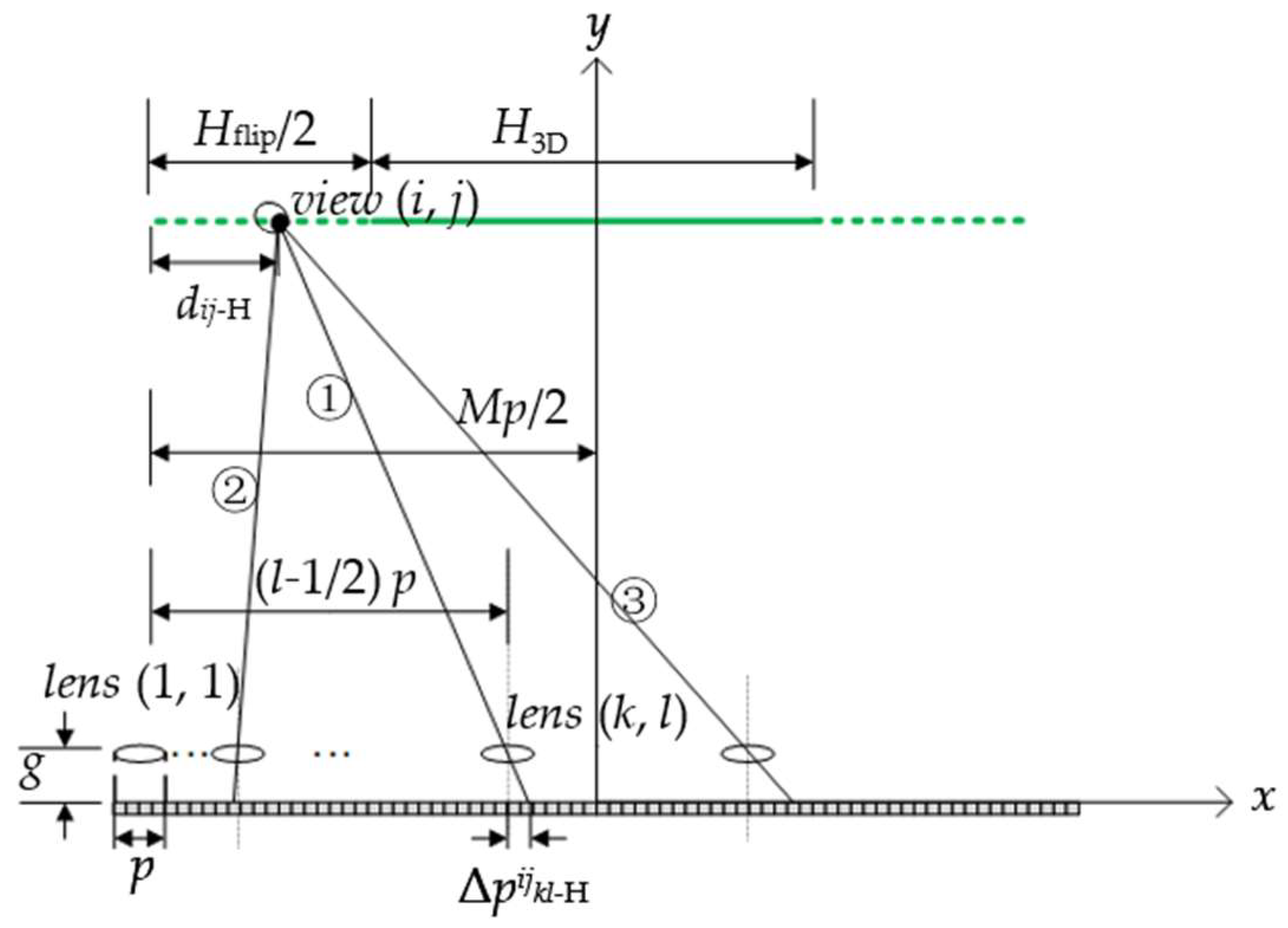

3.1. Structure and Principles

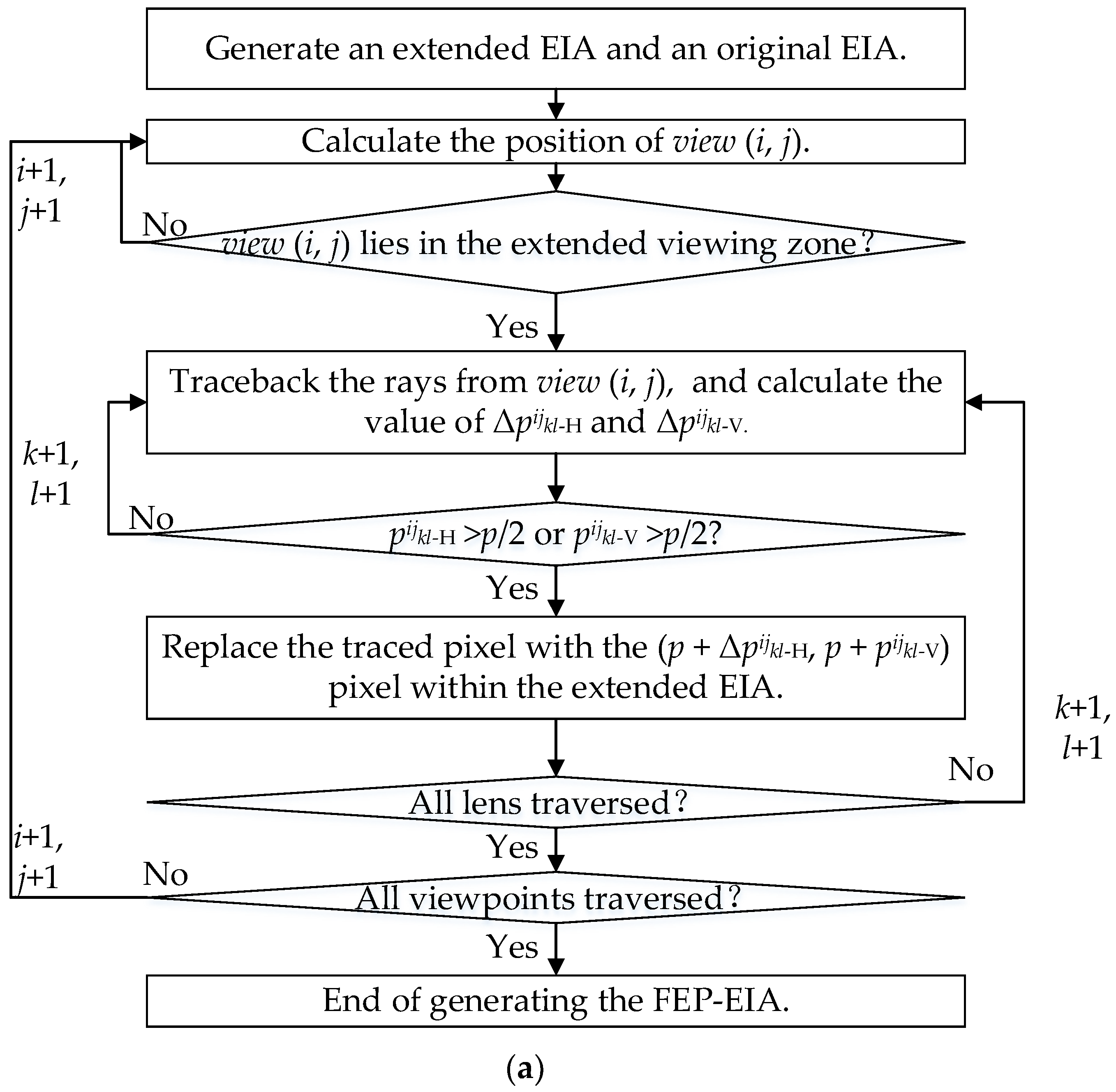

3.2. Generation of the FEP-EIA

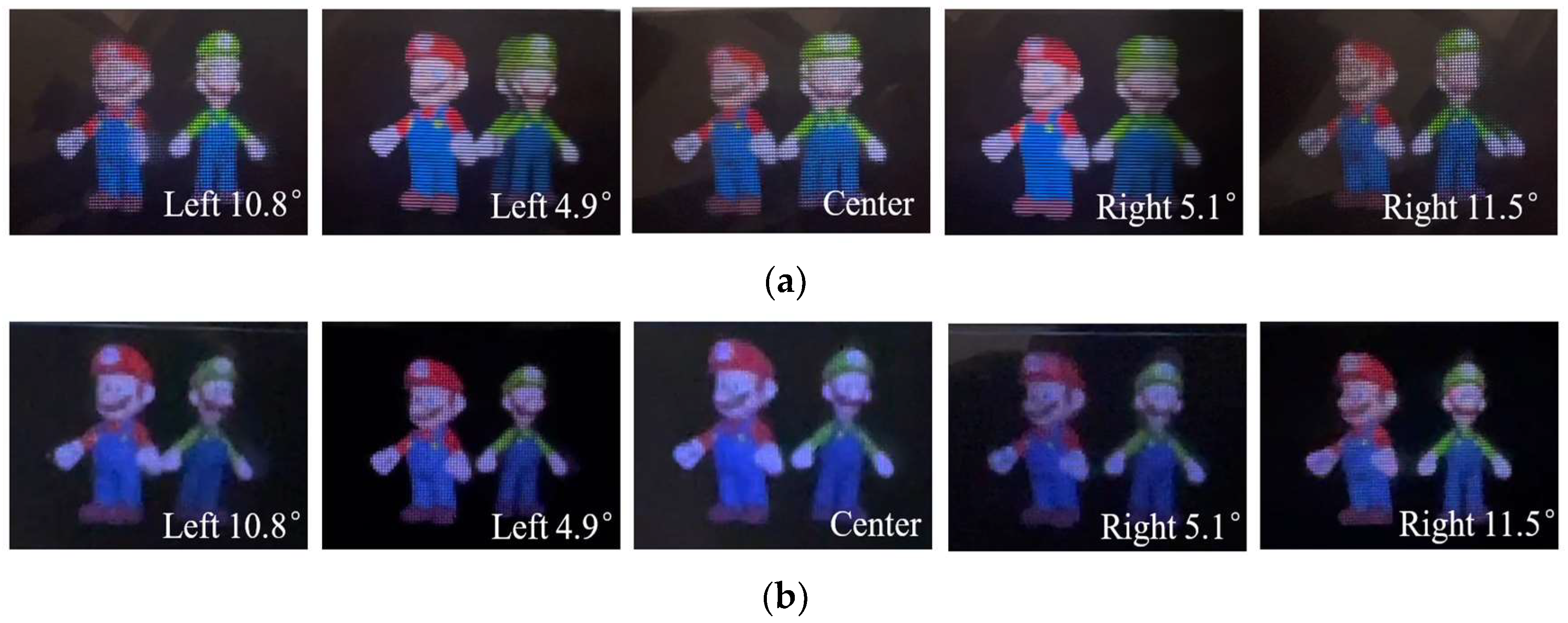

4. Experiments and Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Components | Parameters | Specifications |
|---|---|---|
| 2D display screen | Product model | SONY XPERIA Z5 PREMIUM |
| | Screen size | 5.5 inches |
| | Resolution | 3840 × 2160 pixels |
| | Pixel pitch | 31.5 µm |
| Pinhole array | Pitch of the pinhole | 0.946 mm |
| | Number of pinholes | 128 × 72 |
| | Gap between the 2D display and the pinhole array | 2.2 mm |
| EIA generated by the conventional method | Resolution per elemental image | 30 × 30 pixels |
| | Number of elemental images | 128 × 72 |
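The specifications above can be cross-checked against each other. The following is a minimal sketch, not part of the original work: the constant and function names are hypothetical, and the only inputs are the values listed in the table. It checks that 128 × 72 elemental images of 30 × 30 pixels tile the full panel resolution, and compares the width of one 30-pixel elemental image with the pinhole pitch.

```python
# Values copied from the specification table; all names are illustrative.
PIXEL_PITCH_MM = 0.0315        # 31.5 µm pixel pitch
SCREEN_RES = (3840, 2160)      # panel resolution in pixels (horizontal, vertical)
PINHOLE_PITCH_MM = 0.946       # pitch of the pinhole array
NUM_PINHOLES = (128, 72)       # pinholes (horizontal, vertical)
EI_RES = 30                    # pixels per elemental image along each axis


def pixels_covered(num_units: int, pixels_per_unit: int) -> int:
    """Total pixels spanned by num_units elemental images along one axis."""
    return num_units * pixels_per_unit


# Pixels spanned by the whole elemental image array along each axis.
covered = tuple(pixels_covered(n, EI_RES) for n in NUM_PINHOLES)

# Physical width of one elemental image and its offset from the pinhole pitch.
ei_width_mm = EI_RES * PIXEL_PITCH_MM
pitch_mismatch_mm = PINHOLE_PITCH_MM - ei_width_mm

print("pixels covered:", covered, "panel:", SCREEN_RES)
print("elemental image width (mm):", round(ei_width_mm, 4))
print("pinhole pitch minus elemental image width (mm):", round(pitch_mismatch_mm, 4))
```

Under these values the array covers exactly 3840 × 2160 pixels, and one elemental image is about 0.945 mm wide, within roughly 1 µm of the listed 0.946 mm pinhole pitch. Whether that residual is intentional or a rounding effect is not stated in the table.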

| Viewing Distance | Method | Viewing Angle (Theoretical) | Viewing Angle (Measured) |
|---|---|---|---|
| 450 mm | Conventional InIm | 9.3° | 10.1° |
| | The proposed method | 24.2° | 22.9° |
| 250 mm | Conventional InIm | −2.9° | N/A |
| | The proposed method | 24.2° | 22.3° |
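The theoretical column can be approximated from the listed pinhole pitch, gap, and horizontal pinhole count. The sketch below is an illustration under stated assumptions, not the authors' derivation: it assumes a simple geometric model in which a single pinhole and its elemental image give a viewing angle of 2·arctan(p / 2g), and the viewing zone shared by all M pinholes at distance L shrinks to 2·arctan(p / 2g − (M − 1)p / 2L). The function names are hypothetical, and using the horizontal count of 128 is also an assumption.

```python
import math

# Values taken from the specification table; names are illustrative.
PINHOLE_PITCH_MM = 0.946   # p
GAP_MM = 2.2               # g, gap between the display and the pinhole array
NUM_PINHOLES_H = 128       # M, horizontal pinhole count (assumed relevant axis)


def single_unit_angle_deg(pitch: float, gap: float) -> float:
    """Viewing angle of one pinhole/elemental-image pair (assumed model)."""
    return math.degrees(2 * math.atan(pitch / (2 * gap)))


def common_zone_angle_deg(pitch: float, gap: float,
                          num_units: int, distance: float) -> float:
    """Angle of the zone shared by all units at a given distance (assumed model).

    A negative result means the individual viewing zones no longer
    overlap at that distance.
    """
    half_tangent = pitch / (2 * gap) - (num_units - 1) * pitch / (2 * distance)
    return math.degrees(2 * math.atan(half_tangent))


for distance_mm in (450.0, 250.0):
    angle = common_zone_angle_deg(PINHOLE_PITCH_MM, GAP_MM,
                                  NUM_PINHOLES_H, distance_mm)
    print(f"shared zone at {distance_mm:.0f} mm: {angle:.1f} deg")

print(f"single unit: {single_unit_angle_deg(PINHOLE_PITCH_MM, GAP_MM):.1f} deg")
```

With these inputs the assumed model gives values within about 0.1° of the tabulated theoretical angles: roughly 9.3° at 450 mm and −2.9° at 250 mm for the conventional system, and roughly 24.3° for a single unit, close to the 24.2° listed for the proposed method. Read this way, the negative value at 250 mm would correspond to the absence of a shared viewing zone, which is consistent with the N/A entry in the measured column.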


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Z.; Li, D.; Deng, H. Wide-Viewing-Angle Integral Imaging System with Full-Effective-Pixels Elemental Image Array. Micromachines 2023, 14, 225. https://doi.org/10.3390/mi14010225
