A Two-Stage Screw Detection Framework for Automatic Disassembly Using a Reflection Feature Regression Model

Abstract

1. Introduction

- (1) The texture features obtained from original screws cannot accurately characterise used screws, because structural damage is unavoidable during the use of the product.

- (2) The robustness of texture features extracted from the small number of available used and damaged screws is limited, because the condition of used screws is uncertain.

- (3) Texture features are easily affected by illumination conditions, so current detection methods cannot operate stably under uneven illumination.

- (1)

- It presents a robust feature descriptor for structurally damaged screws by integrating reflection features and texture features. This is beneficial in weakening the influence of structural damage on modelling screws.

- (2)

- It proposes a linear regression model that enables the reflection features to be updated based on the illumination conditions automatically, which contributes to the stable operation of feature modelling under various illumination conditions. In addition, an illumination label is defined to measure illumination conditions automatically and conveniently from the point of view of the image.

- (3)

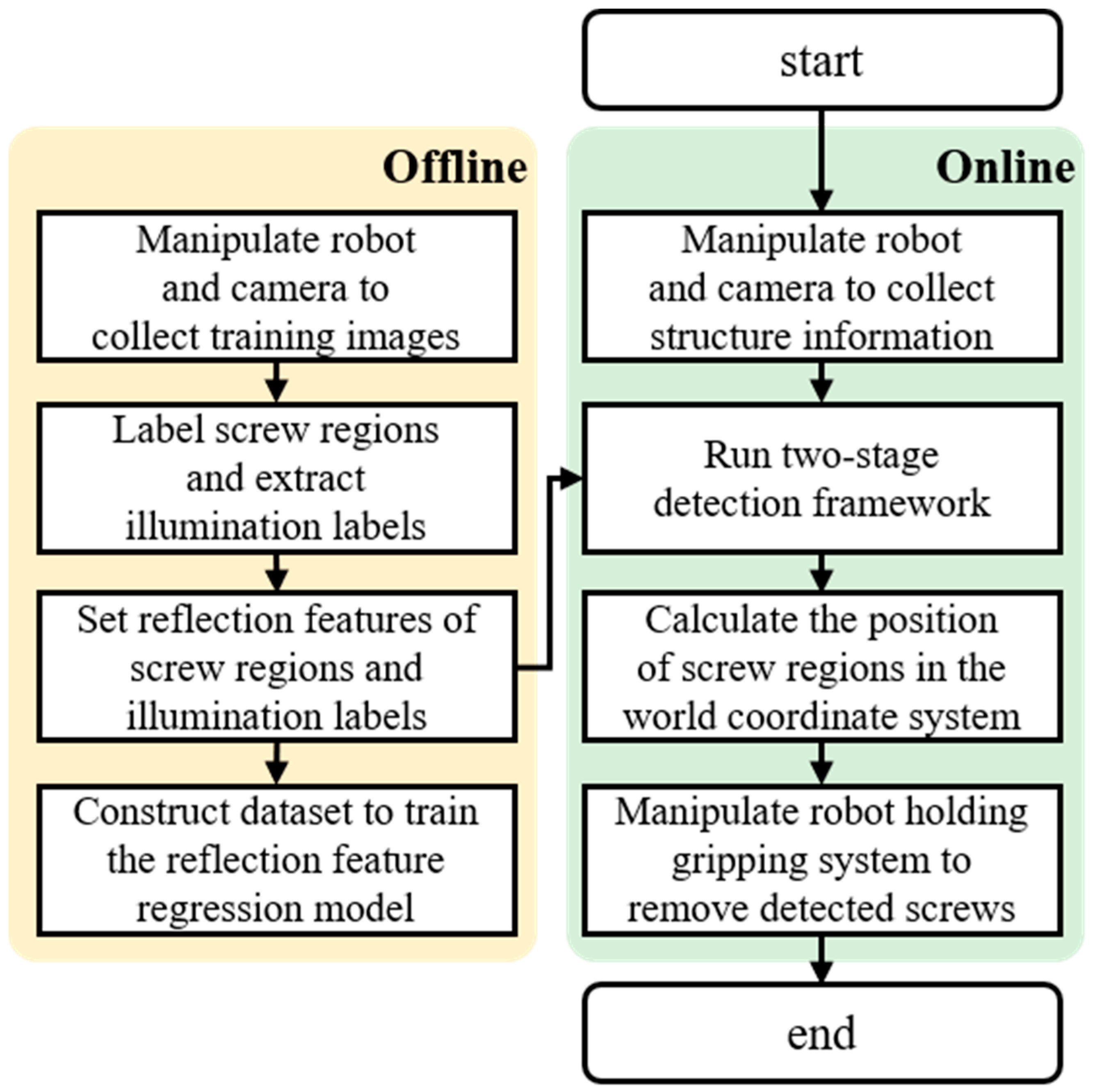

- It details a two-stage detection framework based on the proposed feature descriptor. With the help of the linear regression model, the two-stage detection framework can extract used screws from EOL products under uneven illumination conditions.
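The regression idea in contribution (2) can be illustrated with a minimal sketch. It assumes a single scalar illumination measurement and a single scalar reflection feature related by an ordinary least-squares line. The function names, the scalar formulation, and the sample values are hypothetical and are not taken from the paper.

```python
import numpy as np


def fit_reflection_regression(illumination, reflection):
    """Fit reflection = slope * illumination + intercept by least squares.

    Returns the slope, the intercept and the R-squared of the fit.
    """
    x = np.asarray(illumination, dtype=float)
    y = np.asarray(reflection, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)  # coefficients, highest degree first
    predicted = slope * x + intercept
    ss_res = np.sum((y - predicted) ** 2)   # residual sum of squares
    ss_tot = np.sum((y - y.mean()) ** 2)    # total sum of squares
    r_squared = 1.0 - ss_res / ss_tot
    return slope, intercept, r_squared


def update_reflection_feature(slope, intercept, illumination_value):
    """Predict the reflection feature expected under a new illumination value."""
    return slope * illumination_value + intercept


if __name__ == "__main__":
    # Hypothetical training pairs: illumination measurement vs. reflection feature.
    illum = [40.0, 50.0, 60.0, 70.0, 80.0]
    refl = [0.42, 0.51, 0.58, 0.71, 0.79]
    slope, intercept, r2 = fit_reflection_regression(illum, refl)
    print(f"slope={slope:.4f} intercept={intercept:.4f} R^2={r2:.3f}")
    print(f"feature at illumination 65: {update_reflection_feature(slope, intercept, 65.0):.3f}")
```

Once the line is fitted, updating the feature for a new image needs only one illumination measurement from that image, which is the role the illumination label appears to play in the text above.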

2. Related Work

2.1. Screw Detection for Disassembly

2.2. Reflection Feature for Object Detection

3. Method

3.1. Characterise Reflection Features

3.2. Measure Illumination Conditions

| Algorithm 1: Illumination label extraction algorithm |
|---|
| Input: |
| Captured image ; |
| Screw region ; |
| Screw region position ; |
| Output: |
| Illumination label ; |

3.3. Reflection Feature Regression Model

3.4. Two-Stage Detection Framework

4. Experiments

4.1. Experimental Setup

4.2. Experimental Procedure

4.3. Evaluation System

5. Results and Discussion

5.1. Performance of the Reflection Feature Regression Model

5.2. Performance of the Two-Stage Detection Framework

5.3. Generalisation

5.4. Comparison

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ijomah, W.L.; McMahon, C.A.; Hammond, G.P.; Newman, S.T. Development of design for remanufacturing guidelines to support sustainable manufacturing. Robot. Comput.-Integr. Manuf. 2007, 23, 712–719.

- Li, R.; Pham, D.T.; Huang, J.; Tan, Y.; Qu, M.; Wang, Y.; Kerin, M.; Jiang, K.; Su, S.; Ji, C.; et al. Unfastening of hexagonal headed screws by a collaborative robot. IEEE Trans. Autom. Sci. Eng. 2020, 17, 1455–1468.

- Zhang, X.; Zhang, M.; Zhang, H.; Jiang, Z.; Liu, C.; Cai, W. A review on energy, environment and economic assessment in remanufacturing based on life cycle assessment method. J. Clean. Prod. 2020, 255, 120160.

- Yuksel, H. Design of automobile engines for remanufacture with quality function deployment. Int. J. Sustain. Eng. 2010, 3, 170–180.

- Hashemi, V.; Chen, M.; Fang, L. Modelling and analysis of aerospace remanufacturing systems with scenario analysis. Int. J. Adv. Manuf. Technol. 2016, 87, 2135–2151.

- Zheng, H.; Li, E.; Wang, Y.; Shi, P.; Xu, B.; Yang, S. Environmental life cycle assessment of remanufactured engines with advanced restoring technologies. Robot. Comput.-Integr. Manuf. 2019, 59, 213–221.

- Ahmed, F.; Almutairi, G.; Hasan, P.M.Z.; Rehman, S.; Kumar, S.; Shaalan, N.M.; Aljaafari, A.; Alshoaibi, A.; AIOtaibi, B.; Khan, K. Fabrication of a biomass-derived activated carbon-based anode for high-performance li-ion batteries. Micromachines 2023, 14, 192.

- Ong, S.K.; Chang, M.M.L.; Nee, A.Y.C. Product disassembly sequence planning: State-of-the-art, challenges, opportunities and future directions. Int. J. Prod. Res. 2021, 59, 3493–3508.

- Hu, Y.; Liu, C.; Zhang, M.; Jia, Y.; Xu, Y. A novel simulated annealing-based hyper-heuristic algorithm for stochastic parallel disassembly line balancing in smart remanufacturing. Sensors 2023, 23, 1652.

- Bahubalendruni, M.V.A.R.; Varupala, V.P. Disassembly sequence planning for safe disposal of end-of-life waste electric and electronic equipment. Natl. Acad. Sci. Lett. 2021, 44, 243–247.

- Poschmann, H.; Brueggemann, H.; Goldmann, D. Disassembly 4.0: A review on using robotics in disassembly tasks as a way of automation. Chem. Ing. Tech. 2020, 92, 341–359.

- Nowakowski, P. A novel, cost efficient identification method for disassembly planning of waste electrical and electronic equipment. J. Clean. Prod. 2018, 172, 2695–2707.

- Chen, W.H.; Foo, G.; Kara, S.; Pagnucco, M. Automated generation and execution of disassembly actions. Robot. Comput.-Integr. Manuf. 2021, 68, 102056.

- Vongbunyong, S.; Kara, S.; Pagnucco, M. Learning and revision in cognitive robotics disassembly automation. Robot. Comput.-Integr. Manuf. 2015, 34, 79–94.

- Gli, P.; Pomares, J.; Diaz, S.T.P.C.; Candelas, F.; Torres, F. Flexible multisensorial system for automatic disassembly using cooperative robots. Int. J. Comput. Integr. Manuf. 2007, 20, 757–772.

- Bdiwi, M.; Rashid, A.; Putz, M. Autonomous disassembly of electric vehicle motors based on robot cognition. In Proceedings of the IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 16–21 May 2016.

- DiFilippo, N.M.; Jouaneh, M.K. A system combining force and vision sensing for automated screw removal on laptops. IEEE Trans. Autom. Sci. Eng. 2017, 15, 887–895.

- DiFilippo, N.M.; Jouaneh, M.K. Using the soar cognitive architecture to remove screws from different laptop models. IEEE Trans. Autom. Sci. Eng. 2018, 16, 767–780.

- Foo, G.; Kara, S.; Pagnucco, M. Screw detection for disassembly of electronic waste using reasoning and retraining of a deep learning model. Procedia CIRP 2021, 98, 666–671.

- Mangold, S.; Steiner, C.; Friedmann, M.; Fleischer, J. Vision-based screw head detection for automated disassembly for remanufacturing. Procedia CIRP 2022, 105, 1–6.

- Li, X.; Li, M.; Wu, Y.; Zhou, D.; Liu, T.; Hao, F.; Yue, J.; Ma, Q. Accurate screw detection method based on faster R-CNN and rotation edge similarity for automatic screw disassembly. Int. J. Comput. Integr. Manuf. 2021, 34, 1177–1195.

- Sun, Y.; Chang, Z.; Zhao, Y.; Hua, Z.; Li, S. Progressive two-stage network for low-light image enhancement. Micromachines 2021, 12, 1458.

- Tang, Q.; Yang, J.; He, X.; Jia, W.; Zhang, Q.; Liu, H. Nighttime image dehazing based on retinex and dark channel prior using taylor series expansion. Comput. Vis. Image Underst. 2021, 202, 103086.

- Cui, Y.; Sun, Y.; Jian, M.; Zhang, X.; Yao, T.; Gao, X.; Li, Y.; Zhang, Y. A novel underwater image restoration method based on decomposition network and physical imaging model. Int. J. Intell. Syst. 2022, 37, 5672–5690.

- Sudo, H.; Yukushige, S.; Muramatsu, S.; Inagaki, K.; Chugo, D.; Hashimoto, H. Detection of glass surface using reflection characteristic. In Proceedings of the Annual Conference of the IEEE Industrial Electronics Society, Toronto, ON, Canada, 13–16 October 2021.

- Wu, J.; Ji, Z. Seeing the unseen: Locating objects from reflections. In Proceedings of the Annual Conference Towards Autonomous Robotic Systems, Bristol, UK, 25–27 July 2018.

- Zhang, P.; Liu, W.; Lei, Y.; Lu, H. Hyperfusion-Net: Hyper-densely reflective feature fusion for salient object detection. Pattern Recognit. 2019, 93, 521–533.

- Zhang, P.; Liu, W.; Lu, H.; Shen, C. Salient object detection with lossless feature reflection and weighted structural loss. IEEE Trans. Image Process. 2019, 28, 3048–3060.

- Tan, L.; Tang, T.; Yuan, D. An ensemble learning aided computer vision method with advanced colour enhancement for corroded bolt detection in tunnels. Sensors 2022, 22, 9715.

- Lalonde, J.F.; Efros, A.A.; Narasimhan, S.G. Estimating the natural illumination conditions from a single outdoor image. Int. J. Comput. Vis. 2012, 98, 123–145.

- Barron, J.T.; Malik, J. Shape, illumination, and reflectance from shading. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1670–1687.

- Zhou, T.; Krahenbuhl, P.; Efros, A.A. Learning data-driven reflectance priors for intrinsic image decomposition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Lee, H.W. The study of mechanical arm and intelligent robot. IEEE Access 2020, 8, 119624–119634.

- Sanakkayala, D.C.; Varadarajan, V.; Kumar, N.; Soni, G.; Kamat, P.; Kumar, S.; Patil, S.; Kotecha, K. Explainable AI for bearing fault prognosis using deep learning techniques. Micromachines 2022, 13, 1471.

- Deng, S.; Du, L.; Li, C.; Ding, J.; Liu, H. SAR automatic target recognition based on Euclidean distance restricted autoencoder. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3323–3333.

- Zeng, X.; Wang, X.; Chen, K.; Zhang, Y.; Li, D. Dividing the neighbours is not enough: Adding confusion makes local descriptor stronger. IEEE Access 2019, 7, 136106–136115.

- Dmytriyev, Y.; Zaki, A.M.A.; Carnevale, M.; Insero, F.; Giberti, H. Brain computer interface for human-cobot interaction in industrial applications. In Proceedings of the International Congress on Human-Computer Interaction, Optimisation and Robotic Applications, Ankara, Türkiye, 11–13 June 2021.

- Song, Q.; Li, S.; Bai, Q.; Yang, J.; Zhang, X.; Li, Z.; Duan, Z. Object detection method for grasping robot based on improved YOLOv5. Micromachines 2021, 12, 1273.

- Gong, C.S.A.; Su, C.H.S.; Chen, Y.H.; Guu, D.Y. How to implement automotive fault diagnosis using artificial intelligence scheme. Micromachines 2022, 13, 1380.

| Notation | Description |
|---|---|
| | Reflection features |
| | Reflection abilities |
| | Illumination conditions |
| | The illumination condition of the screw region |
| | The illumination condition of the illumination label |
| | The L value of the reflection component for the screw region |
| | The L value of the reflection component for the illumination label |
| | The L value of the screw region |
| | The L value of the illumination label |
| | The L value of the illumination component for the screw region |
| | The L value of the illumination component for the illumination label |
| | The reflection ability of the screw region |
| | The reflection ability of the illumination label |

| The Length of the Screw Region () | The Width of the Screw Region () | The Number of Extracted Reflection-Based Screw Regions () | The Size of the Extracted Texture Feature Point () | The Number of Extracted Reflection-Based Screw Regions () | The Difference Threshold for Comparing Neighbouring Detected Screws () |
|---|---|---|---|---|---|
| 100 | 100 | 20 | 128 | 10 | 0.05 |

| | Capture Position (X, Y, Z, Rx, Ry, Rz) | | | Illumination Conditions Measured by Light Meters |
|---|---|---|---|---|
| Figure 7a | (140 mm, −534 mm, 424 mm, 179°, −3°, −8°) | 58.4615 | 14.7876 | 770 lx |
| Figure 7b | (160 mm, −534 mm, 424 mm, 179°, −3°, −8°) | 59.3731 | 14.9791 | 770 lx |
| Figure 7c | (140 mm, −515 mm, 424 mm, 179°, −3°, −8°) | 56.8118 | 12.1517 | 770 lx |

| | Maximum R-Squared | Minimum R-Squared | Average R-Squared |
|---|---|---|---|
| Training dataset-1 | 0.909 | 0.797 | 0.860 |
| Training dataset-2 | 0.915 | 0.806 | 0.856 |
| Training dataset-3 | 0.920 | 0.802 | 0.858 |
| Training dataset-4 | 0.914 | 0.800 | 0.862 |
| Training dataset-5 | 0.918 | 0.804 | 0.867 |
| Training dataset-6 | 0.898 | 0.801 | 0.855 |
| Training dataset-7 | 0.910 | 0.804 | 0.851 |
| Training dataset-8 | 0.907 | 0.802 | 0.861 |
| Training dataset-9 | 0.904 | 0.804 | 0.844 |
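As a quick consistency check, the per-dataset average R-squared values listed above can be averaged again. The sketch below assumes a plain unweighted mean across the nine training datasets (the weighting is not stated in the table); under that assumption the result matches the 0.857 reported for the reflection feature regression model in the summary table.

```python
# Average R-squared per training dataset, copied from the table above.
dataset_avg_r2 = [0.860, 0.856, 0.858, 0.862, 0.867, 0.855, 0.851, 0.861, 0.844]

# Unweighted mean across the nine datasets (an assumption about how the
# summary figure was obtained).
overall_avg_r2 = sum(dataset_avg_r2) / len(dataset_avg_r2)

print(f"{overall_avg_r2:.3f}")  # 0.857
```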

| | Maximum Value | Minimum Value | Average Value |
|---|---|---|---|
| | 1.00 | 0.80 | 0.99 |
| | 1.00 | 0.02 | 0.78 |
| | 0.68 | 0.00 | 0.25 |
| | 1.00 | 0.80 | 0.99 |

| | Difference between Using the Two-Stage Detection Framework and Using the Texture Stage | Difference between Using the Two-Stage Detection Framework and Using the Reflection Stage |
|---|---|---|
| | 1.00 | 0.24 |
| | 0.76 | −0.02 |
| | 0.98 | 0.02 |
| | 1.00 | 0.06 |
| | 0.82 | −0.02 |
| | 0.99 | 0.01 |

| | | |
|---|---|---|
| P1 screws | 0.99 | 0.99 |
| P2 screws | 0.97 | 0.97 |
| P3 screws | 0.97 | 0.97 |
| P4 screws | 0.82 | 0.82 |
| P5 screws | 0.84 | 0.84 |
| P6 screws | 0.86 | 0.86 |
| Average | 0.91 | 0.91 |
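The average row can be checked against the six per-type rows. The worked equation below assumes an unweighted mean over the six screw types, since the table does not state how many screws of each type were tested; both columns list the same figures, so one calculation covers both.

```latex
\[
\frac{0.99 + 0.97 + 0.97 + 0.82 + 0.84 + 0.86}{6}
  = \frac{5.45}{6}
  \approx 0.908
  \approx 0.91
\]
```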

| | Average R-Squared | Average | Average |
|---|---|---|---|
| Reflection feature regression model | 0.857 | \ | \ |
| Two-stage detection framework | \ | 0.91 | 0.91 |

| | | |
|---|---|---|
| Experience-based methods [15,16,17] | 0.10 | 0.17 |
| Soar [18] | 0.76 | 0.78 |
| ResNet [19] | 0.80 | 0.80 |
| YOLO [20] | 0.94 | 0.94 |
| Faster R-CNN [21] | 0.87 | 0.87 |
| Proposed | 0.91 | 0.91 |

| | YOLO [20] | Proposed |
|---|---|---|
| Number of used training data | 6720 | 200 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Q.; Deng, W.; Pham, D.T.; Hu, J.; Wang, Y.; Zhou, Z. A Two-Stage Screw Detection Framework for Automatic Disassembly Using a Reflection Feature Regression Model. Micromachines 2023, 14, 946. https://doi.org/10.3390/mi14050946
