A Depth-Enhanced Holographic Super Multi-View Display Based on Depth Segmentation

Abstract

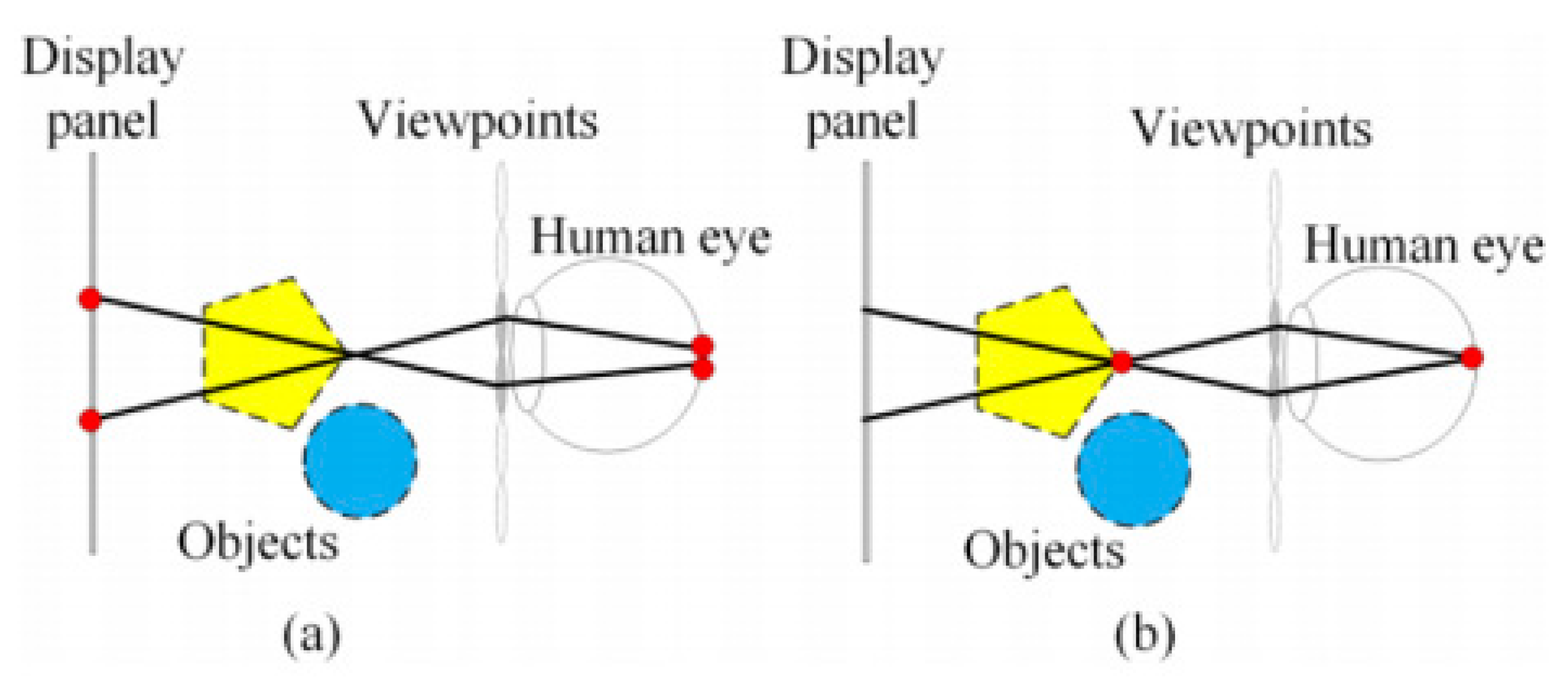

1. Introduction

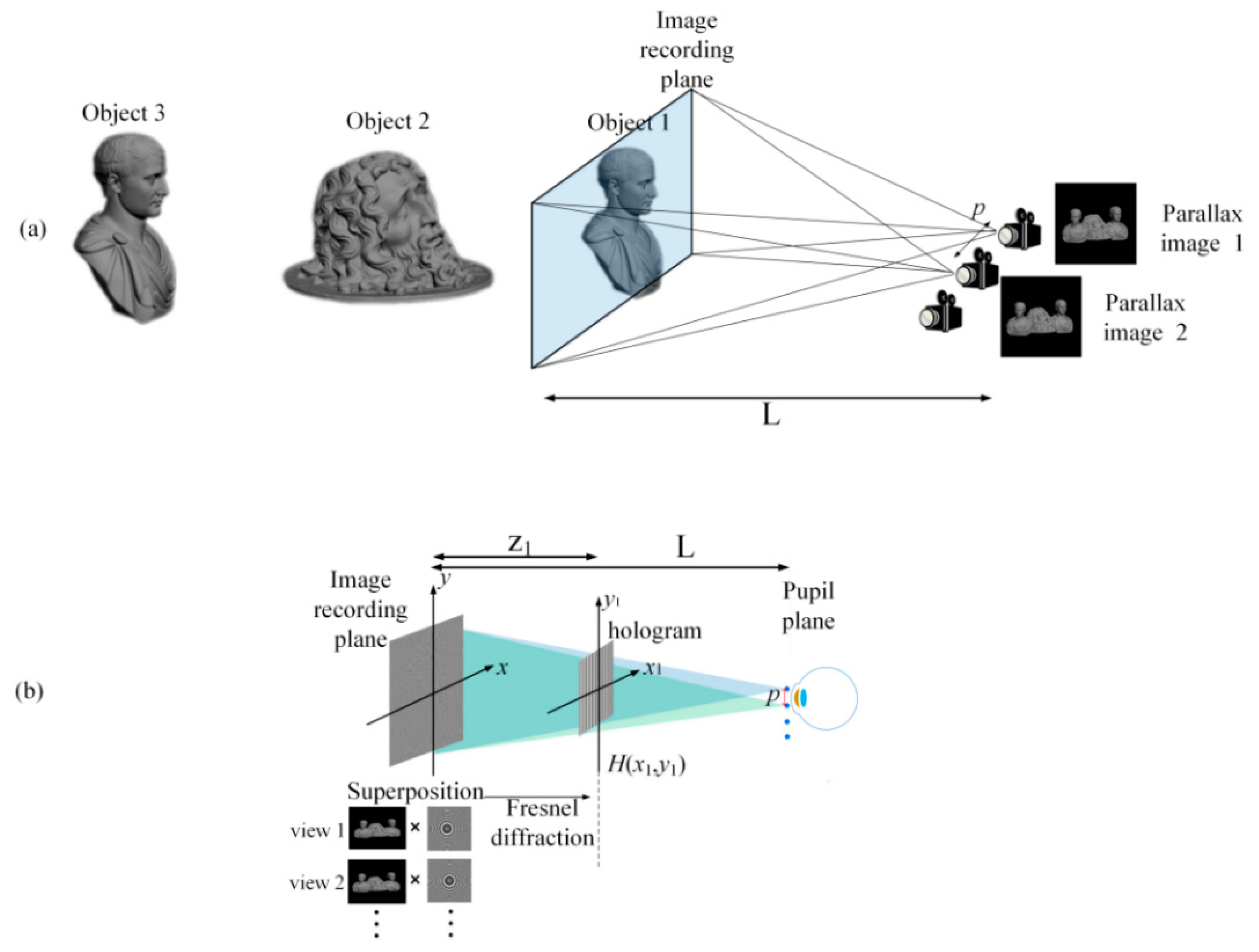

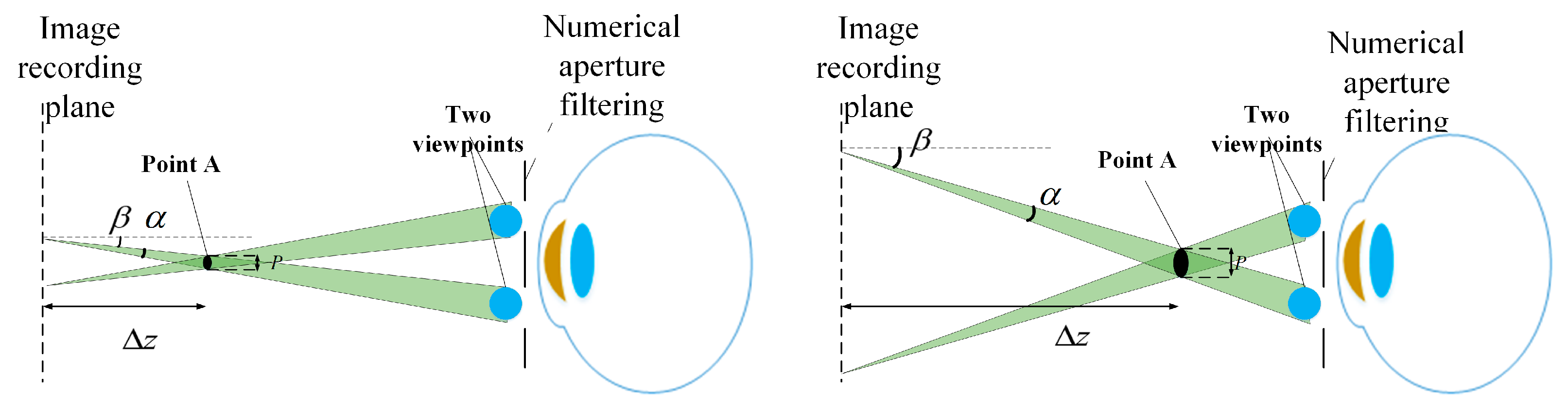

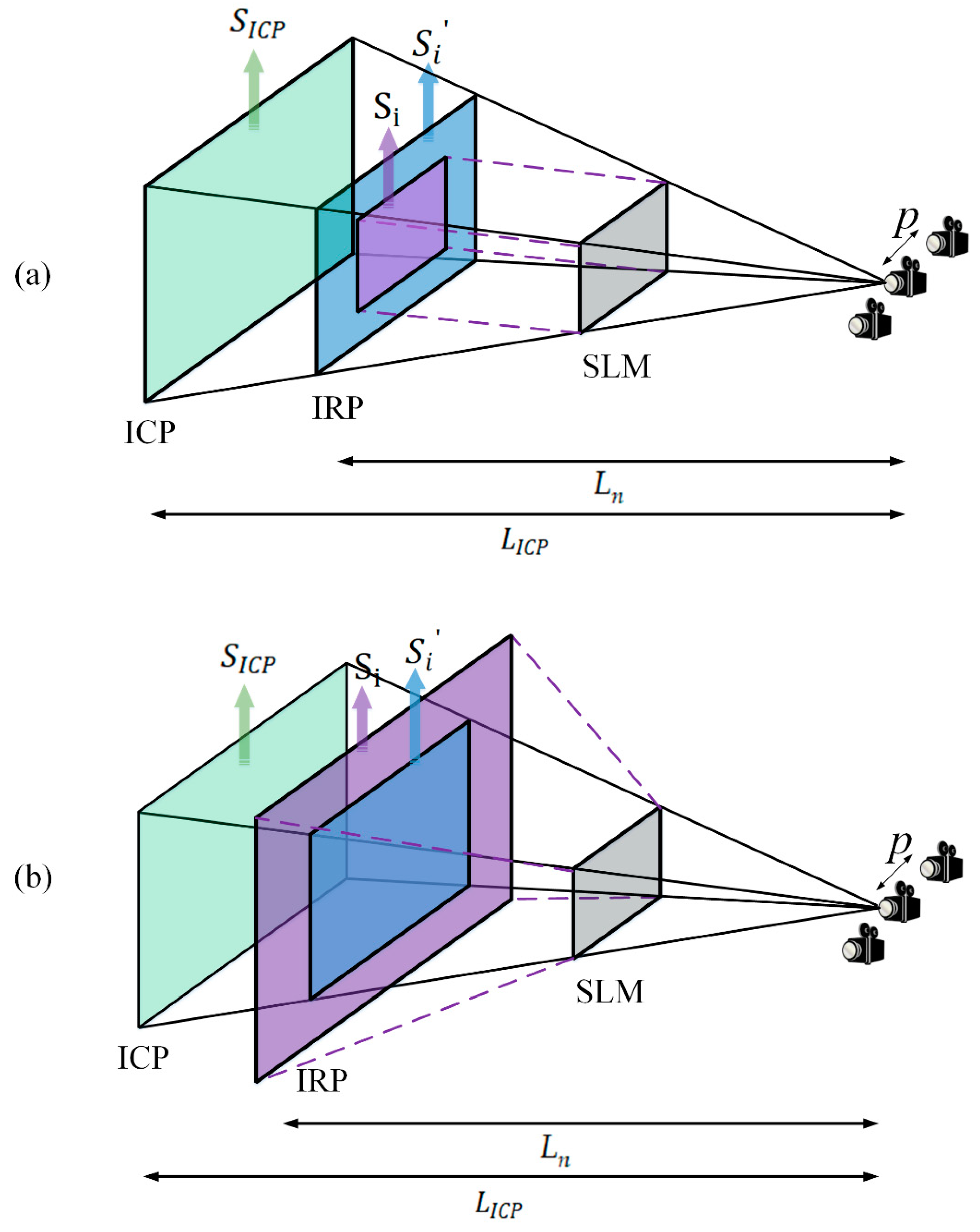

2. Conventional Holographic SMV Maxwellian Display with Limited Depth of Field

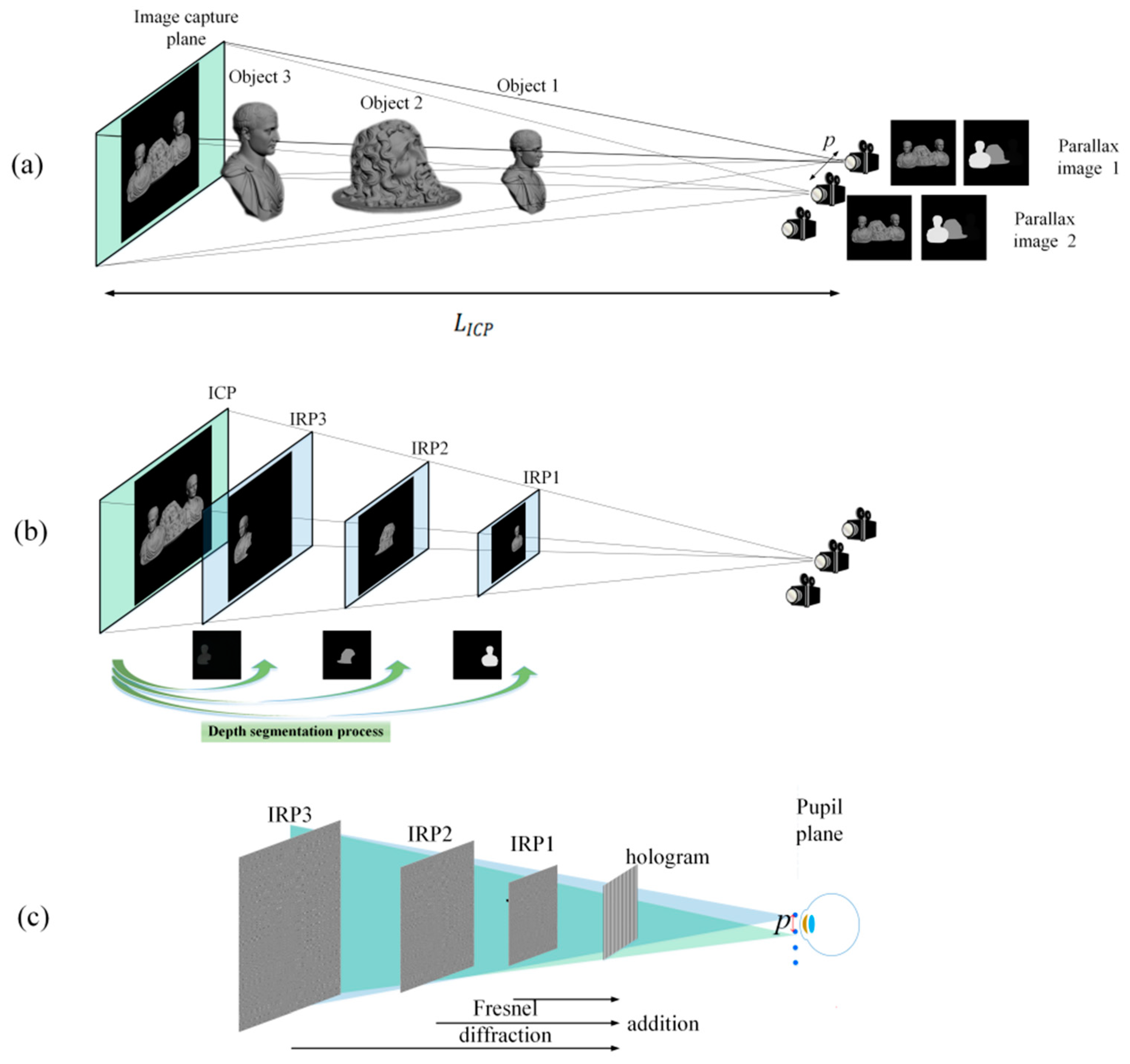

3. Depth-Enhanced Holographic SMV Maxwellian Display Based on Depth Segmentation

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Z.; Su, Y.; Pang, Y.; Feng, Q.; Lv, G. A Depth-Enhanced Holographic Super Multi-View Display Based on Depth Segmentation. Micromachines 2023, 14, 1720. https://doi.org/10.3390/mi14091720
