1. Introduction

Since molecular genetics techniques made it possible to obtain nucleotide sequences for the study of population genetics [1], a number of neutrality tests have been developed to facilitate the interpretation of an ever-increasing volume of molecular data. Statistical tests for neutrality have been constructed by exploiting different properties of the stationary neutral model. Examples are the HKA test [2], which exploits the relationship between polymorphism and divergence across independent loci in a multilocus framework, and the Lewontin–Krakauer test [3], which looks for an unexpected level of population differentiation at one locus relative to other loci. Another family of popular tests is based on linkage disequilibrium, such as the test developed in [4], which detects long haplotypes at unusually high frequencies in candidate regions.

An important family of these tests, often used as summary statistics, is built on the frequency spectrum of biallelic polymorphisms. This family includes the well-known tests by Tajima [5], Fu and Li [6], and Fay and Wu [7]. If an outgroup is available, these tests are based on the unfolded spectrum $\xi_i$, that is, the number of segregating sites with a derived allele frequency of $i$ in a sample of (haploid) size $n$. Without an outgroup, it is not possible to distinguish derived and ancestral alleles, and the only available data correspond to the folded spectrum $\eta_i$, that is, the number of segregating sites with a minor allele frequency of $i$. The quantities $\xi_i$ and $\eta_i$ are all non-negative, and the range of allele frequencies is $1 \le i \le n-1$ for the unfolded spectrum and $1 \le i \le \lfloor n/2 \rfloor$ for the folded spectrum. The average spectra for the standard Wright–Fisher neutral model are given by:

$$E[\xi_i] = \frac{\theta L}{i}, \qquad E[\eta_i] = \theta L\,\frac{\frac{1}{i} + \frac{1}{n-i}}{1 + \delta_{i,n-i}},$$

where $L$ is the length of the sequence and $\theta = 2pN_e\mu$, where $\mu$ is the mutation rate per base, $p$ is the ploidy and $N_e$ is the effective population size. Note that we define $\theta$ as the rescaled mutation rate per base and not per sequence. Apart from this, we follow the notation of [8,9]. Note that the average spectra are proportional to $\theta$.
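As a quick numerical check of the neutral expectations $E[\xi_i] = \theta L/i$ and $E[\eta_i] = \theta L\,(1/i + 1/(n-i))/(1+\delta_{i,n-i})$, the Python sketch below evaluates both spectra and the expected number of segregating sites. The function names and the example values ($\theta = 0.01$ per base, $L = 1000$, $n = 10$) are hypothetical choices for illustration only.

```python
import math


def expected_unfolded(theta, L, n):
    """E[xi_i] = theta * L / i for i = 1, ..., n - 1 (standard neutral model)."""
    return [theta * L / i for i in range(1, n)]


def expected_folded(theta, L, n):
    """E[eta_i] = theta*L*(1/i + 1/(n-i)) / (1 + delta_{i,n-i}) for i = 1, ..., floor(n/2)."""
    result = []
    for i in range(1, n // 2 + 1):
        kronecker = 1 if i == n - i else 0  # the middle class is counted only once
        result.append(theta * L * (1 / i + 1 / (n - i)) / (1 + kronecker))
    return result


def expected_segregating_sites(theta, L, n):
    """E[S] = theta * L * a_n, with a_n = sum_{i=1}^{n-1} 1/i."""
    return math.fsum(expected_unfolded(theta, L, n))


# Hypothetical example: theta = 0.01 per base, L = 1000 bases, n = 10 haploid sequences.
print(expected_unfolded(0.01, 1000, 10)[:3])
print(expected_folded(0.01, 1000, 10))
print(expected_segregating_sites(0.01, 1000, 10))
```

The folded expectation is simply the sum of the unfolded classes $i$ and $n-i$, which is why the Kronecker delta is needed when $i = n/2$.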

A general framework for linear tests was presented in [8]. The general tests proposed there have the form

$$T_\Omega = \frac{\sum_{i=1}^{n-1} i\,\Omega_i\,\xi_i}{\sqrt{\mathrm{Var}\left(\sum_{i=1}^{n-1} i\,\Omega_i\,\xi_i\right)}}, \qquad T^*_{\Omega^*} = \frac{\sum_{i=1}^{\lfloor n/2\rfloor} \frac{i(n-i)(1+\delta_{i,n-i})}{n}\,\Omega^*_i\,\eta_i}{\sqrt{\mathrm{Var}\left(\sum_{i=1}^{\lfloor n/2\rfloor} \frac{i(n-i)(1+\delta_{i,n-i})}{n}\,\Omega^*_i\,\eta_i\right)}},$$

which are centred (i.e., they have a null expectation value) if the weights $\Omega_i$ and $\Omega^*_i$ satisfy the conditions $\sum_{i=1}^{n-1}\Omega_i = 0$ and $\sum_{i=1}^{\lfloor n/2\rfloor}\Omega^*_i = 0$. This framework allows the construction of many new neutrality tests and has been used to obtain optimal tests for specific alternative scenarios [10]. However, the original framework does not take into account the dependence of the tests on the sample size, as emphasised in [10]. Choosing this dependence carefully is important to obtain results that are as independent of the sample size as possible. Moreover, the framework presented in [8] covers just a large subfamily of neutrality tests based on the frequency spectrum, that is, the class of tests that are linear functions of the spectrum. This subfamily contains almost all the tests that can be found in the literature, with the exception of the tests of Fu [11], which are second-order polynomials in the spectrum whose form is related to Hotelling's statistic. Since these tests were shown to be quite effective in some scenarios, it would be interesting to build a general framework for these quadratic (and, more generally, nonlinear) tests. New optimal tests based on extensions of the site frequency spectrum [12,13] were recently applied successfully to the detection of selection pressure on human chromosomal inversions [14].

In this work we provide a detailed study of the properties of the whole family of tests based on the site frequency spectrum, focusing on the structure and properties of neutrality tests that consider the frequency of the variants at each position. Technical details and proofs can be found in Appendix A and Appendix B.

We begin with the most interesting case, i.e., linear tests. Achaz [8] developed a general framework for constructing linear tests that compare two different estimators of variability, each defined as a linear combination of the frequency spectrum with its own weights. We summarise his approach in Section 2.1.
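To illustrate the construction of [8], the Python sketch below builds the contrast weights $\Omega_i = \omega_i/\sum_j\omega_j - \omega'_i/\sum_j\omega'_j$ from two estimators of $\theta$: Watterson's ($\omega_i = 1/i$) and the pairwise-diversity estimator ($\omega_i = n-i$). It assumes the parameterisation in which the test numerator is $\sum_i i\,\Omega_i\,\xi_i$, so that centring under the neutral expectation $E[\xi_i] = \theta L/i$ reduces to $\sum_i \Omega_i = 0$. The function names are hypothetical, and the variance normalisation in the denominator is deliberately omitted.

```python
import math


def achaz_weights(omega_a, omega_b):
    """Omega_i = omega_a_i / sum(omega_a) - omega_b_i / sum(omega_b); sums to zero."""
    sa, sb = math.fsum(omega_a), math.fsum(omega_b)
    return [a / sa - b / sb for a, b in zip(omega_a, omega_b)]


def linear_numerator(weights, xi):
    """sum_i i * Omega_i * xi_i (assumed parameterisation; no variance normalisation)."""
    return math.fsum(i * w * x for i, (w, x) in enumerate(zip(weights, xi), start=1))


n = 10
omega_watterson = [1.0 / i for i in range(1, n)]       # theta_W weights
omega_pairwise = [float(n - i) for i in range(1, n)]    # theta_pi weights
Omega = achaz_weights(omega_watterson, omega_pairwise)

neutral_mean = [10.0 / i for i in range(1, n)]          # E[xi_i] with theta * L = 10
print(math.fsum(Omega))                                 # approximately 0 (centring condition)
print(linear_numerator(Omega, neutral_mean))            # approximately 0 (centred under the SNM)
```

With these two estimators the numerator equals $\hat\theta_W - \hat\theta_\pi$, i.e. minus the numerator of Tajima's $D$; swapping the two weight vectors gives Tajima's sign convention.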

In Section 2.2, we discuss the importance of scaling the weights to avoid a dependence on the sample size. This allows us to interpret the information of the test in the same way for any sample size analysed. We provide a thorough analysis of a simple proposal for scaling the tests with the sample size. Different scaling strategies (including alternatives to scaling) are analysed and evaluated, showing both why scaling matters and which weighting methods are suitable depending on the nature of the statistic.

In Section 2.3, we present and expand the theory of optimal tests, i.e., tests that have maximum power to detect an alternative scenario versus a null scenario, introduced in [10]. We show that generic linear tests cannot detect arbitrary deviations from the neutral spectrum, and explain why tests must be optimised with respect to a specific alternative scenario. We develop a geometrical interpretation of neutrality tests, showing how these tests depend on the differences between the null and the alternative scenario and clarifying their theoretical basis.

In Section 2.4, we extend this approach to nonlinear tests that consider different moments of the frequency spectrum combined in generic polynomials or in power series. In contrast to linear tests, nonlinear tests depend on the level of variability $\theta$ and thus need an accurate estimate of $\theta$ to have good statistical properties (e.g., unbiasedness and high statistical power). These tests are classified as strongly or weakly centred (where centred means having a null expectation value). Strongly centred tests are centred for any estimate of $\theta$, even if this estimate is far from the actual value, and are thus more robust to errors in the estimate of $\theta$ than weakly centred tests (see the simulations in Section 3). Weakly centred tests, instead, are centred only if the estimate of $\theta$ equals the actual value, but this feature also makes them more powerful when the inference of $\theta$ is reliable.
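To make the distinction between strong and weak centredness concrete, the Python sketch below computes exact expectations of two hypothetical toy statistics (they are not the optimal tests of Section 2.4). It assumes unlinked sites, modelled as independent Poisson counts with $E[\xi_i] = \theta c_i$ and $c_i = L/i$. The weak statistic $\sum_i[(\xi_i - \hat\theta c_i)^2 - \hat\theta c_i]$ has zero mean only when $\hat\theta = \theta$, whereas the strong statistic combines terms whose expectations cancel for every $\theta$, so plugging in any estimate $\hat\theta$ leaves it centred.

```python
# Toy model (assumption): unlinked sites, so the xi_i are independent Poisson
# counts with E[xi_i] = theta * c_i and c_i = L / i.


def class_factors(L, n):
    return [L / i for i in range(1, n)]


def second_moment(m, a, b):
    """E[xi_a * xi_b] for independent Poisson counts with means m (0-based indices)."""
    return m[a] * m[b] + (m[a] if a == b else 0.0)


def expected_weak(theta, theta_hat, L, n):
    """E of sum_k [(xi_k - theta_hat c_k)^2 - theta_hat c_k]; zero when theta_hat == theta."""
    c = class_factors(L, n)
    m = [theta * ck for ck in c]
    return sum(
        second_moment(m, k, k) - 2 * theta_hat * c[k] * m[k]
        + (theta_hat * c[k]) ** 2 - theta_hat * c[k]
        for k in range(n - 1)
    )


def expected_strong(theta, theta_hat, L, n):
    """E of xi_1 xi_2/(c_1 c_2) - xi_3 xi_4/(c_3 c_4) + theta_hat (xi_1/c_1 - xi_2/c_2); needs n >= 5."""
    c = class_factors(L, n)
    m = [theta * ck for ck in c]
    quadratic = second_moment(m, 0, 1) / (c[0] * c[1]) - second_moment(m, 2, 3) / (c[2] * c[3])
    linear = m[0] / c[0] - m[1] / c[1]
    return quadratic + theta_hat * linear


for theta_hat in (0.005, 0.01, 0.02):  # true theta = 0.01
    print(theta_hat, expected_weak(0.01, theta_hat, 1000, 10), expected_strong(0.01, theta_hat, 1000, 10))
```

The weak statistic is biased as soon as $\hat\theta$ is wrong, while the strong one stays centred, mirroring the robustness trade-off described above.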

In Section 3.1 of the Results, we explore some consequences of the framework outlined in the Methods: it is possible to optimise neutrality tests following a general maximisation approach that depends on the proxy used for the power to reject the null model in the alternative scenario, which in turn depends on the mean spectra, the covariance matrices, and the critical p-value used. Moreover, we show that under some conditions the power maximisation can always be achieved by tuning a parameter in a new family of linear “tunable” optimal tests developed here, which depend only on the mean spectra of the alternative model.

In Section 3.2, we present the results for the power to detect deviations from the neutral spectrum in coalescent simulations. We show that linear optimal tests are more powerful than standard neutrality tests such as Tajima’s D, that nonlinear optimal tests are more powerful than linear ones, and that weakly centred tests are more powerful than strongly centred ones if $\theta$ is known.

In summary, our research augments the understanding of neutrality tests, encompassing weight normalisation, optimal test formulation, and both linear and nonlinear paradigms. The outcome not only enriches theoretical foundations but also provides novel methodologies for increasing power and effectiveness of neutrality tests across diverse scenarios.

3. Results

3.1. General Optimisation of Linear Tests

The condition for optimal tests is the maximisation of the expected value of the test under the alternative scenario. However, a better approach would be the maximisation of the power of the test to reject the neutral model in the alternative scenario, given a choice of significance level $\alpha$. This approach requires knowledge of the probability distributions of the spectrum under the null and the alternative models, or equivalently of all the moments of the spectrum under both models.

Since this information is usually not available in analytic form and is hard to obtain computationally, we restrict ourselves to the case where the distribution can be well approximated by a Gaussian both for the null and for the alternative model. Then, the only information needed consists of the mean spectra under the two models and their covariance matrices.

We expect that, both in this approximation and in the general case, the tests with maximum power will depend on the significance level chosen, which limits the interest of these tests and the comparability of results obtained on samples from different experiments.

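We call $z_\alpha = \Phi^{-1}(1-\alpha)$ the z-value corresponding to the critical p-value $\alpha$, where $\Phi$ denotes the cumulative distribution function of the standard normal distribution. In the Gaussian approximation, for a test normalised to zero mean and unit variance under the null model, with mean $\mu_A$ and standard deviation $\sigma_A$ under the alternative model, the power is given by the following expression:

$$P = 1 - \Phi\left(\frac{z_\alpha - \mu_A}{\sigma_A}\right) = \Phi\left(\frac{\mu_A - z_\alpha}{\sigma_A}\right),$$

so its maximisation is equivalent to the maximisation of:

$$\frac{\mu_A - z_\alpha}{\sigma_A}.$$

In the general case, the weights corresponding to the maximum depend explicitly on $z_\alpha$ and therefore on $\alpha$. This dependence is expected but unwanted, since the interpretation of the test then depends explicitly on the critical p-value chosen.

There is only one case with weights independent of $\alpha$, namely when the covariance matrix of the alternative spectrum is (approximately) proportional to the covariance matrix of the null spectrum. In this case $\sigma_A$ does not depend on the weights, and the maximisation of the power of the test is (approximately) equivalent to the maximisation of the average result of the test, which is precisely the condition for optimal tests in the sense of [10]. In fact, in this case optimal tests correspond precisely to an approximation of the likelihood-ratio tests under the assumption of Gaussian likelihood functions, and are therefore approximately the most powerful tests because of the Neyman–Pearson lemma.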
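The dependence of the power-maximising weights on $\alpha$ can be explored numerically. The Python sketch below uses a hypothetical two-class spectrum: `delta` is the mean shift $E_A[\xi] - E_0[\xi]$, `c0` and `ca` are made-up null and alternative covariance matrices, and the test is assumed to be $T = w\cdot(\xi - E_0[\xi])/\sqrt{w^\top C_0 w}$. A grid search over unit weight vectors finds the direction with maximum Gaussian power $\Phi((\mu_A - z_\alpha)/\sigma_A)$ for two values of $\alpha$.

```python
import math
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution


def quad_form(w, c):
    """w^T C w for a small matrix given as nested lists."""
    return sum(w[i] * c[i][j] * w[j] for i in range(len(w)) for j in range(len(w)))


def alt_moments(w, delta, c0, ca):
    """Mean and sd under the alternative of T = w.(xi - E0[xi]) / sqrt(w^T C0 w)."""
    s0 = math.sqrt(quad_form(w, c0))  # null sd of the raw linear combination
    mu_a = sum(wi * di for wi, di in zip(w, delta)) / s0
    sigma_a = math.sqrt(quad_form(w, ca)) / s0
    return mu_a, sigma_a


def gaussian_power(w, delta, c0, ca, alpha):
    """Right-tail power Phi((mu_A - z_alpha) / sigma_A) in the Gaussian approximation."""
    z_alpha = PHI.inv_cdf(1.0 - alpha)
    mu_a, sigma_a = alt_moments(w, delta, c0, ca)
    return PHI.cdf((mu_a - z_alpha) / sigma_a)


def best_direction(delta, c0, ca, alpha, steps=3600):
    """Grid search over unit weight vectors (cos a, sin a) for maximum power."""
    angles = [2.0 * math.pi * k / steps for k in range(steps)]
    return max(angles, key=lambda a: gaussian_power((math.cos(a), math.sin(a)), delta, c0, ca, alpha))


# Hypothetical two-class example.
delta = (1.0, 0.5)
c0 = [[1.0, 0.2], [0.2, 2.0]]
ca_prop = [[1.5 * x for x in row] for row in c0]  # C_A proportional to C_0
ca_other = [[3.0, 0.1], [0.1, 0.5]]               # C_A not proportional to C_0

for alpha in (0.05, 0.001):
    print(alpha, best_direction(delta, c0, ca_prop, alpha), best_direction(delta, c0, ca_other, alpha))
```

With `ca_prop` both significance levels select the same direction, that of $C_0^{-1}\Delta$, i.e. the optimal test; with `ca_other` the maximising direction is in general free to change with $\alpha$.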

As a side note, there is also a regime of values of $z_\alpha$ for which the weights corresponding to maximum power are independent of $\alpha$, namely the regime of very large $z_\alpha$. In this case the power is an increasing function of the alternative/null ratio of standard deviations $\sigma_A/\sigma_0$, and the weights are simply given by the null eigenvector (or a linear combination of null eigenvectors) of the matrix $C_A - \lambda C_0$, where $C_0$ and $C_A$ are the covariance matrices of the spectrum under the null and the alternative model, and $\lambda$ is uniquely defined by the requirement that $C_A - \lambda C_0$ be a negative semidefinite matrix with at least one null eigenvalue. However, this regime is uninteresting, because the corresponding critical p-values are so small that they are of no practical use. Nevertheless, it could be possible to build interesting tests with higher power by taking linear combinations of the weights of the two $\alpha$-independent tests discussed above, that is, optimal tests and tests that maximise the alternative/null variance ratio $\sigma_A^2/\sigma_0^2$.
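The weights that maximise the variance ratio solve the generalised eigenproblem $C_A w = \lambda C_0 w$ for the largest $\lambda$, which is exactly the value that makes $C_A - \lambda C_0$ negative semidefinite with a null eigenvalue. The NumPy sketch below obtains them through a Cholesky reduction $C_0 = LL^\top$; the $2\times 2$ matrices are hypothetical and the function name is our own.

```python
import numpy as np


def max_variance_ratio_weights(c0, ca):
    """Solve CA w = lam C0 w for the largest lam via the Cholesky reduction C0 = L L^T."""
    chol = np.linalg.cholesky(c0)
    l_inv = np.linalg.inv(chol)
    reduced = l_inv @ ca @ l_inv.T               # symmetric, same eigenvalues as C0^{-1} CA
    eigvals, eigvecs = np.linalg.eigh(reduced)   # eigenvalues in ascending order
    lam = float(eigvals[-1])
    w = np.linalg.solve(chol.T, eigvecs[:, -1])  # back-transform: w = L^{-T} v
    return lam, w / np.sqrt(w @ c0 @ w)          # unit variance under the null model


c0 = np.array([[1.0, 0.2], [0.2, 2.0]])  # hypothetical null covariance
ca = np.array([[3.0, 0.1], [0.1, 0.5]])  # hypothetical alternative covariance
lam, w = max_variance_ratio_weights(c0, ca)
print(lam, w)
print(np.linalg.eigvalsh(ca - lam * c0))  # all <= 0 up to rounding, the largest is ~0
```

The reduction keeps everything symmetric, so `numpy.linalg.eigh` can be used instead of a general (possibly complex) eigensolver.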

3.1.1. Generalised Optimality Conditions

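In a more general linear framework, the (normalised) test $T$ is a linear combination of the frequency spectrum, normalised to have zero mean and unit variance under the standard neutral model (SNM). We denote its moments under the SNM and the alternative model by:

$$\mu_0 = E_0[T], \quad \sigma_0^2 = \mathrm{Var}_0[T], \quad \mu_A = E_A[T], \quad \sigma_A^2 = \mathrm{Var}_A[T].$$

For the standard neutral model $\mu_0 = 0$ and $\sigma_0 = 1$.

The optimality condition can be the maximisation of a general function $f(\mu_0, \sigma_0, \mu_A, \sigma_A)$ of these quantities with respect to the weights. Since we are dealing with linear combinations of the frequency spectrum with mean $\mu_0 = 0$ and variance $\sigma_0^2 = 1$ under the SNM, the function effectively depends on $\mu_A$ and $\sigma_A$ only. Maximisation of power (in the Gaussian approximation), given a significance level $\alpha$, is equivalent to the maximisation of:

$$f(\mu_A, \sigma_A) = \frac{\mu_A - z_\alpha}{\sigma_A}.$$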

If there is some knowledge of the variance of the alternative spectrum, or at least of the contribution from unlinked sites, maximisation of power is not the only possible optimisation: it is possible to optimise other functions of the alternative mean and variance of the test, for example to obtain more robust or more conservative tests.

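For example:

“Optimal tests”: if $\sigma_A$ is assumed to be completely unknown, the best we can do is minimise the p-value of the expected alternative spectrum, that is, in the Gaussian approximation, $1 - \Phi(\mu_A)$, where $\Phi$ is the cumulative distribution function of the standard normal distribution. This is equivalent to the maximisation of:

$$f(\mu_A, \sigma_A) = \mu_A.$$

Most powerful one-tail tests: in the Gaussian approximation, the power of the right tail of the test to reject the neutral Wright–Fisher model given a significance level $\alpha$ is $\Phi(x)$, where $x = (\mu_A - z_\alpha)/\sigma_A$ and $z_\alpha = \Phi^{-1}(1-\alpha)$. Maximising this power is equivalent to the maximisation of:

$$f(\mu_A, \sigma_A) = \frac{\mu_A - z_\alpha}{\sigma_A}.$$

If $\sigma_A$ does not depend on the weights (e.g., $\sigma_A \simeq 1$), this is equivalent to the previous case and we retrieve the optimal tests of [10], which are therefore the most powerful tests in this approximation.

Most powerful two-tail tests: in the Gaussian approximation, the power of both tails of the test to reject the neutral Wright–Fisher model is:

$$P = \Phi\left(\frac{\mu_A - z_{\alpha/2}}{\sigma_A}\right) + \Phi\left(\frac{-\mu_A - z_{\alpha/2}}{\sigma_A}\right),$$

where $z_{\alpha/2} = \Phi^{-1}(1-\alpha/2)$ and $\alpha$ is the significance level. If the contribution of one tail is negligible, this maximisation is equivalent to the maximisation of

$$f(\mu_A, \sigma_A) = \frac{|\mu_A| - z_{\alpha/2}}{\sigma_A},$$

similar to the previous case.

Penalisations for high or low variance of the test under the alternative model, controlled by an extra parameter.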
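The criteria listed above are simple functions of $(\mu_A, \sigma_A)$ in the Gaussian approximation. The Python sketch below implements them with `statistics.NormalDist`; the function names are our own, and the two candidate tests, A with $(\mu_A, \sigma_A) = (2.0, 1.0)$ and B with $(1.9, 3.0)$, are hypothetical numbers chosen to show that different criteria can rank the same tests differently.

```python
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution


def optimal_criterion(mu_a, sigma_a):
    """'Optimal tests': maximise the mean of the test under the alternative."""
    return mu_a


def expected_p_value(mu_a):
    """p-value of the expected alternative spectrum, 1 - Phi(mu_A)."""
    return 1.0 - PHI.cdf(mu_a)


def one_tail_power(mu_a, sigma_a, alpha):
    """Right-tail power Phi((mu_A - z_alpha) / sigma_A)."""
    z = PHI.inv_cdf(1.0 - alpha)
    return PHI.cdf((mu_a - z) / sigma_a)


def two_tail_power(mu_a, sigma_a, alpha):
    """Power of both tails with critical value z_{alpha/2}."""
    z = PHI.inv_cdf(1.0 - alpha / 2.0)
    return PHI.cdf((mu_a - z) / sigma_a) + PHI.cdf((-mu_a - z) / sigma_a)


test_a, test_b = (2.0, 1.0), (1.9, 3.0)  # hypothetical (mu_A, sigma_A) pairs
for alpha in (0.05, 0.01):
    print(alpha, one_tail_power(*test_a, alpha), one_tail_power(*test_b, alpha))
```

The optimal criterion always prefers A. The one-tail power also prefers A at $\alpha = 0.05$ (about 0.64 versus 0.53), but at $\alpha = 0.01$ it prefers B (about 0.37 versus 0.44), because the larger alternative variance of B pushes more mass beyond the stricter critical value.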

3.1.2. Tunable Optimal Tests

Interestingly, it turns out that the choice of an optimisation criterion (i.e., of a function to be maximised) can be implemented simply by tuning a parameter in a simple class of tests that we call “tunable optimal tests”.

The family of optimised tests with a tunable parameter has a simple linear form, with weights given by Equation (80) that depend on the tunable parameter and on the mean alternative spectrum.

The interest of this family of tests lies in the following property: for every possible choice of the optimisation function $f$, the test corresponding to the maximum value of this function belongs to this family (see Appendix B).

Usual optimal tests [10] are obtained from the maximisation of $f(\mu_A, \sigma_A) = \mu_A$ and correspond to a particular value of the tunable parameter, which yields the usual optimal weights.

More generally, the value of the tunable parameter can be obtained directly by evaluating $\mu_A$ and $\sigma_A$ with the weights (80) as functions of the parameter, and then looking for the maximum of $f(\mu_A, \sigma_A)$. Note that there could be several local maxima.

This class of tests is simple but far more flexible than usual optimal tests. However, note that the exact choice of weights depends explicitly on the optimisation function $f$ and on the alternative scenario. Furthermore, the smoothness of the choice of weights with respect to the evolutionary parameters is not guaranteed: in principle, a slight change of the evolutionary scenario could change the optimal weights abruptly.
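Equation (80) is not reproduced in this text, so the NumPy sketch below assumes, as our own illustration, the one-parameter family $w(\lambda) \propto (C_0 + \lambda C_A)^{-1}\Delta$, with $\Delta = E_A[\xi] - E_0[\xi]$. This is the family that a Lagrange-multiplier argument yields when maximising a generic $f(\mu_A, \sigma_A)$ at fixed null variance, and it reduces to $C_0^{-1}\Delta$, the maximiser of $\mu_A$, at $\lambda = 0$. The test is taken as $T = w\cdot(\xi - E_0[\xi])$; all names, matrices and the scanning range are hypothetical.

```python
import numpy as np
from statistics import NormalDist

PHI = NormalDist()


def tunable_weights(lam, delta, c0, ca):
    """ASSUMED family: w(lam) proportional to (C0 + lam*CA)^{-1} delta, unit null variance."""
    w = np.linalg.solve(c0 + lam * ca, delta)
    return w / np.sqrt(w @ c0 @ w)


def alt_moments(w, delta, ca):
    """(mu_A, sigma_A) of T = w.(xi - E0[xi]) when w has unit null variance."""
    return float(w @ delta), float(np.sqrt(w @ ca @ w))


def one_tail_power(mu_a, sigma_a, alpha=0.05):
    z_alpha = PHI.inv_cdf(1.0 - alpha)
    return PHI.cdf((mu_a - z_alpha) / sigma_a)


def tune(delta, c0, ca, criterion, lam_max=20.0, num=400):
    """Scan lam where C0 + lam*CA stays positive definite and keep the best (lam, score)."""
    l_inv = np.linalg.inv(np.linalg.cholesky(c0))
    gamma = np.linalg.eigvalsh(l_inv @ ca @ l_inv.T).max()  # largest generalised eigenvalue
    grid = np.concatenate([np.linspace(-0.99 / gamma, 0.0, num), np.linspace(0.0, lam_max, num)])
    scores = [criterion(*alt_moments(tunable_weights(lam, delta, c0, ca), delta, ca)) for lam in grid]
    best = int(np.argmax(scores))
    return float(grid[best]), scores[best]


# Hypothetical two-class example.
delta = np.array([1.0, 0.5])
c0 = np.array([[1.0, 0.2], [0.2, 2.0]])
ca = np.array([[3.0, 0.1], [0.1, 0.5]])
print(tune(delta, c0, ca, lambda mu, s: mu))                         # usual optimal criterion
print(tune(delta, c0, ca, lambda mu, s: one_tail_power(mu, s, 0.05)))  # maximum one-tail power
```

Only a single scalar is scanned, whatever the number of frequency classes. As the text warns, the maximiser of $f$ along $\lambda$ may be one of several local maxima, and a finer grid or a local optimiser could be used to refine it.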

3.2. Simulations of the Power of Optimal Tests

Since a theoretical evaluation of the power of optimal tests of different degrees is not possible, we evaluate numerically the power of some of these tests in different scenarios. We consider the best possible case, that is, we assume that the precise value of $\theta$ is known. Moreover, we assume unlinked sites. In this approximation, as shown in Appendix A.7, the higher moments of the spectrum under the null model depend only on the null mean spectrum, and similarly the moments under the alternative model depend only on the alternative mean spectrum; therefore, optimal tests depend only on the alternative and null average spectra.

Note that for numerical simulations of optimal tests of higher degree, the implementation can be made easier if all occurrences of inverse covariance matrices in the formulae above are replaced with the inverses of the corresponding matrices of second moments, both in expressions (50) and (63) and in definition (52). The test is unchanged because of the centredness condition, as can be verified explicitly.
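The effect of the centredness condition can be checked in the simplest, linear analogue of this replacement (the higher-degree expressions (50), (52) and (63) are not reproduced here). The NumPy sketch below assumes unlinked sites modelled as independent Poisson counts, so the null covariance is $C_0 = \mathrm{diag}(m_0)$ and the second-moment matrix is $M_0 = C_0 + m_0 m_0^\top$. It maximises $w\cdot m_A$ subject to $w\cdot m_0 = 0$ using either $C_0^{-1}$ or $M_0^{-1}$. The alternative spectrum is hypothetical.

```python
import numpy as np


def centred_optimal_weights(m0, ma, matrix):
    """Maximise w.ma subject to w.m0 = 0, using the inverse of `matrix` as the metric."""
    inv = np.linalg.inv(matrix)
    c = (m0 @ inv @ ma) / (m0 @ inv @ m0)  # Lagrange multiplier of the centring constraint
    return inv @ (ma - c * m0)             # satisfies w.m0 = 0 by construction


n = 10
i = np.arange(1, n)
m0 = 10.0 / i                 # null means (theta * L = 10)
ma = m0 * (1.0 + 2.0 / i)     # hypothetical alternative with an excess of rare variants
c0 = np.diag(m0)              # Poisson covariance (unlinked-sites assumption)
m2 = c0 + np.outer(m0, m0)    # second moments E[xi_i xi_j]

w_cov = centred_optimal_weights(m0, ma, c0)
w_mom = centred_optimal_weights(m0, ma, m2)
w_cov /= np.sqrt(w_cov @ c0 @ w_cov)
w_mom /= np.sqrt(w_mom @ c0 @ w_mom)
print(np.allclose(w_cov, w_mom))
```

The two weight vectors coincide: by the Sherman–Morrison formula, $M_0^{-1}$ differs from $C_0^{-1}$ only by a term proportional to $C_0^{-1}m_0$, and that component is fixed again by the constraint $w\cdot m_0 = 0$.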

We compare four optimal tests. The first two are the linear and quadratic strongly centred optimal tests. The third test is the weakly centred optimal test based on a first-order polynomial, presented in (59). The last optimal test is also weakly centred and based on a quadratic polynomial. The explicit formulae for the computation of the weights of the two quadratic tests were given in Equations (54)–(56) and (66)–(69).

We simulated two demographic processes: (A) subdivision, where two populations of identical size exchange individuals at a symmetric migration rate $M$, and individuals are then sampled from one population only; (B) expansion, where the population size changes by a given factor at a time $T$ before the present (in scaled units of generations). For each value of the parameters $M$ and $T$, simulations were performed with mlcoalsim v1.98b [20] for a region of 1000 bases with fixed levels of variability and recombination and a fixed sample size of haploid individuals. Confidence intervals at the 95% level were estimated from simulations of the standard neutral coalescent with the same parameters.
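The power estimates follow a generic recipe: a critical value is taken from replicates simulated under the standard neutral model, and power is the fraction of alternative replicates beyond it. The NumPy sketch below illustrates that recipe only. As a stand-in for mlcoalsim it draws independent Poisson spectra (an unlinked-sites assumption), uses a hypothetical alternative with an excess of rare variants, and uses the unnormalised statistic $\hat\theta_W - \hat\theta_\pi$; none of these choices reproduces the simulations above.

```python
import numpy as np


def empirical_power(null_stats, alt_stats, alpha=0.05):
    """Right-tail power: share of alternative replicates above the null (1 - alpha) quantile."""
    critical = np.quantile(null_stats, 1.0 - alpha)
    return float(np.mean(alt_stats > critical))


def watterson_minus_pi(xi):
    """theta_W - theta_pi for spectra stored row-wise (column k holds xi_{k+1})."""
    n = xi.shape[1] + 1
    i = np.arange(1, n)
    a_n = np.sum(1.0 / i)
    return xi.sum(axis=1) / a_n - (xi @ (i * (n - i))) / (n * (n - 1) / 2)


rng = np.random.default_rng(1)
n, reps = 20, 4000
i = np.arange(1, n)
m0 = 10.0 / i              # standard neutral means, theta * L = 10
ma = m0 * (1.0 + 2.0 / i)  # hypothetical alternative: excess of rare variants
null_a = watterson_minus_pi(rng.poisson(m0, size=(reps, n - 1)))  # for the critical value
null_b = watterson_minus_pi(rng.poisson(m0, size=(reps, n - 1)))  # size check under the null
alt = watterson_minus_pi(rng.poisson(ma, size=(reps, n - 1)))
print(empirical_power(null_a, null_b), empirical_power(null_a, alt))
```

Using a second, independent set of neutral replicates gives an empirical check that the test has approximately the nominal size $\alpha$ before its power is reported.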

In Figure 3 we compare the power of the tests in the best possible situation, namely when $\theta$ is known with good precision. In this condition all optimal tests should give their best results. In fact, the power of the two weakly centred tests is impressive: it remains very high over a large part of the parameter space and decreases, as for every other test, for large migration rates (Figure 3A) and long times (Figure 3B), because the frequency spectrum in these cases becomes very similar to the standard spectrum. Thus, weakly centred tests show a very good theoretical performance, counterbalanced by their lack of robustness. The power of the two weakly centred tests is almost identical; therefore, the contribution of the quadratic part to the quadratic weakly centred test is probably not relevant.

On the other hand, strongly centred optimal tests are more powerful than Tajima’s D but less powerful than weakly centred tests, as expected. However, there is a noticeable difference in power between the linear and the quadratic strongly centred tests: in the range of parameters where weakly centred tests reach their highest power, both strongly centred tests perform well, not far from the weakly centred ones, while in the less favourable range the quadratic test, although performing worse than the weakly centred tests, is more powerful than the linear one. Taking into account the robustness of the tests, these simulations show that quadratic strongly centred optimal tests could be an interesting alternative to the usual linear tests.

4. Discussion

In this paper we have presented a systematic analysis of neutrality tests based on the site frequency spectrum. This study is intended to extend and complete the recent works in [8,10], in particular the study of linear neutrality tests presented by Achaz. The properties of linear neutrality tests and optimal tests have been studied within this framework. A new class of “tunable” optimal tests that includes the usual optimal tests as a special case has been proposed, based on a generalisation of the optimisation approach for linear tests.

The aim of the paper is to give mathematical guidelines to build new and more effective tests to detect deviations from neutrality. The proposed guidelines are the scaling relation (8) and the optimality condition based on the maximisation of the expected value of the test under the alternative scenario. Both guidelines are thoroughly explained and discussed.

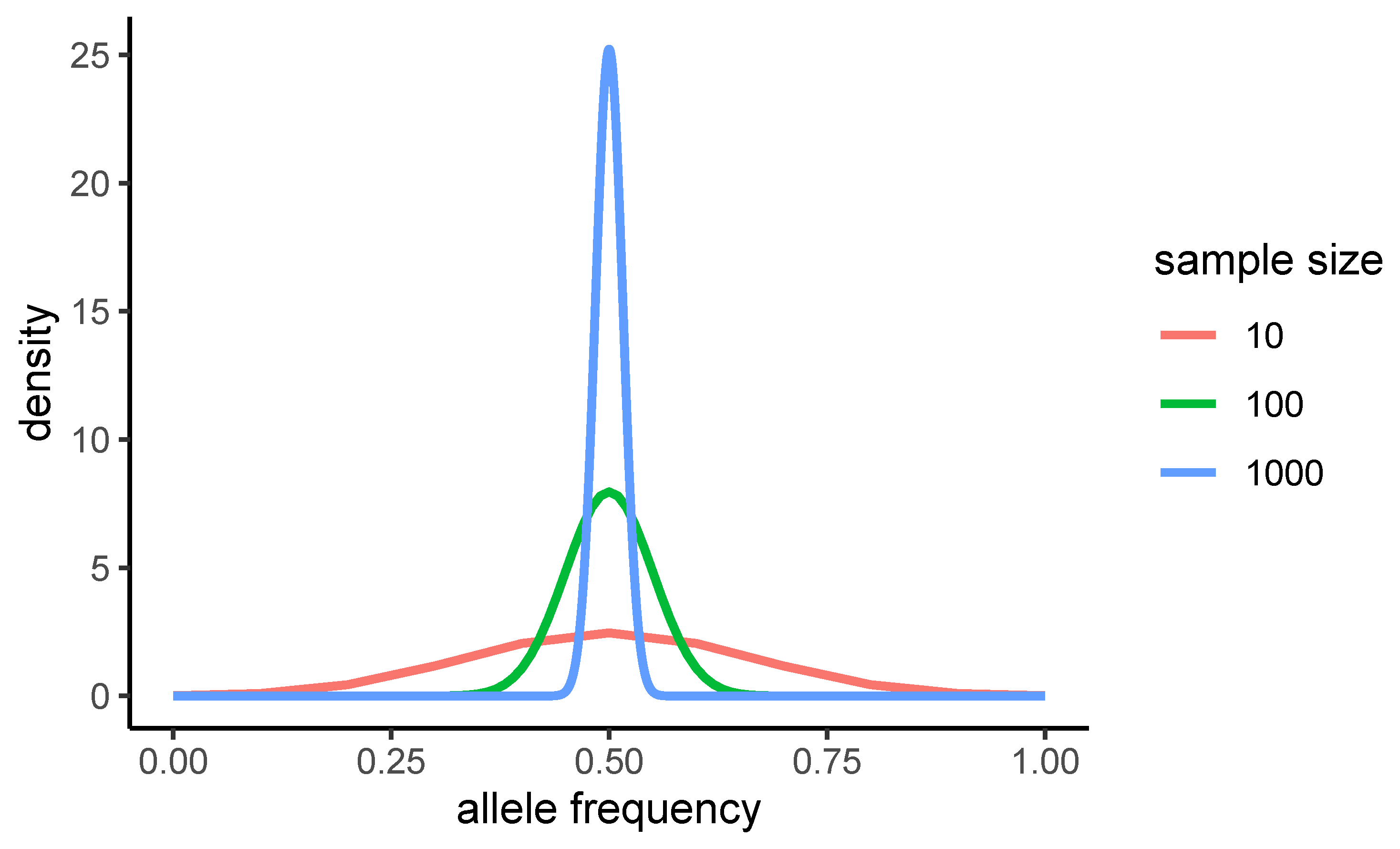

One of the strengths of optimal neutrality tests (initially presented in [10]) is that their weights scale automatically with the sample size, so that the results of the test can be interpreted independently of it. For other tests, different scaling strategies were analysed and evaluated, and the suitability of different weighting methods and the scaling of existing neutrality tests were discussed.

The different neutrality tests studied and developed in this paper have different features, which make them suitable for different purposes. For example, a general neutrality test whose weights are scaled to obtain interpretable results may be sufficient, with minimum effort, to reject the null model and interpret the results, but its statistical power against a specific alternative hypothesis can be low. Optimal tests are a better approach if the alternative hypothesis is clearly formulated and the data do not clearly come from the null model. Regarding the differences between linear and nonlinear optimal tests, while nonlinear optimal tests have been shown to be more powerful than linear ones (and weakly centred tests more powerful than strongly centred ones), power is not the only important issue: robustness must also be taken into consideration.

In fact, there are four important remarks on the relative robustness of these tests. The first one is that, as already discussed, the centredness of weakly centred tests is not robust with respect to a biased estimate of $\theta$; therefore, these tests should be preferred to strongly centred tests only in situations where the value of $\theta$ is well known or a good estimate is available.

The second remark is that neither the weights nor the results of linear optimal tests depend on the value of $\theta$ or on the number of segregating sites $S$, while the weights of nonlinear optimal tests depend explicitly on $\theta$ and their results depend not only on the spectrum but also on $S$; therefore, the interpretation of the results of these tests is more complicated. However, this is not necessarily true for homogeneous tests of any degree, like the quadratic test by Fu. An interesting development of this work could be a study of homogeneous tests of a given degree $k$ satisfying the optimality condition, which can easily be obtained from Equation (50) by restricting all ordered sequences of indices to contain precisely $k$ indices (along with some “traceless” condition, if needed). These homogeneous optimal tests (or at least some subclass of them) should depend only weakly on $S$.

The third remark is that linear optimal tests have two interesting properties that are not shared by other tests: they depend only on the deviations from the null spectrum and they have an easy interpretation in terms of these deviations, that is, they are positive if the observed deviations are similar to the expected ones and negative if the observed deviations are opposite to the expected ones. These features give an important advantage to linear optimal tests.

A fourth remark is that “tunable” optimal tests can be built to achieve maximum power to reject the null hypothesis for a given alternative scenario even when power calculations are uncertain (e.g., when the variance of the alternative scenario is not known). This new class of neutrality tests is highly flexible and depends only on a single parameter and the mean alternative spectrum. Nevertheless, since the test can be used even when the alternative scenario is not fully defined, there may be situations where optimising its power compromises the interpretability or robustness of the test.

Tests based on the frequency spectrum of polymorphic sites are fast, being based on simple matrix multiplications, and can therefore be applied to genome-wide data. Moreover, they can be used as summary statistics for Approximate Bayesian Computation or other statistical approaches to the analysis of sequence data. While linear tests are often used in this way, the nonlinear tests presented in this paper contain more information (related to the covariances and higher moments of the frequency spectrum) that could increase the power of these analyses.
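To illustrate why linear tests scale to genome-wide data, the NumPy sketch below evaluates the numerator $\hat\theta_\pi - \hat\theta_W$ of Tajima's $D$ for many windows with a single matrix-vector product. The function name is hypothetical, the spectra matrix is assumed to hold one unfolded spectrum per row, and the variance normalisation is omitted.

```python
import numpy as np


def tajima_like_numerators(spectra):
    """theta_pi - theta_W for many windows at once; one unfolded spectrum per row."""
    n = spectra.shape[1] + 1
    i = np.arange(1, n)
    w_pi = i * (n - i) / (n * (n - 1) / 2)         # per-class weights of theta_pi
    w_w = np.full(n - 1, 1.0 / np.sum(1.0 / i))    # per-class weights of theta_W
    return spectra @ (w_pi - w_w)                  # one matrix-vector product for all windows


rng = np.random.default_rng(0)
windows = rng.poisson(10.0 / np.arange(1, 20), size=(100_000, 19))  # synthetic spectra, n = 20
print(tajima_like_numerators(windows)[:5])
```

Any other linear test only changes the weight vector, so the cost stays one pass over the data regardless of the test chosen.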