1. Introduction

In oil exploration, the seismic method is the primary geophysical tool because it generally provides higher resolving power than other geophysical methods at the same scale of investigation. For instance, the finer details of structural definition and targets can be determined from seismic images. Other methods, such as gravity surveys, are nevertheless often used to provide complementary information that assists seismic interpretation. For example, qualitative gravity analysis is used in regional studies to identify major structural trends, whereas quantitative techniques, such as gravity inversion, can be used to assist seismic depth migration in salt imaging (e.g., [1,2,3]). Because of its valuable contribution, the quantitative analysis of gravity data, especially detailed 2D and 3D modeling of complex structures, has been used significantly more often in recent years. The combination of gravity data and seismic imaging is now common in salt imaging. However, similar efforts seem to be lagging in basement mapping. We hope to contribute to this area by integrating gravity inversion with seismic and geologic constraints.

One case in point is the following scenario. Seismic processing and interpretation often produce an image of the subsurface, but the structural image is rarely evaluated against the basic criterion that all available geophysical data should be reproduced through forward modeling. The main reason for the lack of such an evaluation is the prohibitive cost of performing it for seismic data. However, such an evaluation can be carried out for other information, such as gravity data. The benefit of utilizing gravity data is two-fold. First, gravity processing is inexpensive compared to seismic processing, and it can be performed much faster. Second, gravity data provide complementary information about the density distribution in the subsurface, which could improve a seismic image of the basement in much the same way as it helps improve base-salt imaging. We submit that gravity modeling and inversion may be used as valuable tools to crosscheck and improve seismic interpretation for basement mapping.

The basic premise is that the basement model interpreted from seismic data should be consistent with the known geology and, therefore, should reproduce the gravity anomaly over the same area. If the gravity data predicted by the seismic model agree (within the error tolerance) with the measured gravity data, this independently verifies the seismic interpretation. On the other hand, a large difference between the predicted and measured gravity data suggests that the seismic basement image is not entirely valid and needs to be modified. The modification can be guided by structural gravity inversion constrained by available well-log information. The changes suggested by the inversion must then be fed back into the seismic interpretation to refine the previously obtained seismic image. This effectively creates a loop that is completed only when a geological basement model respecting all the available information is generated.

In this paper, we follow the above philosophy and propose an approach that combines the resolving power of the seismic image with the ease of gravity modeling and inversion in mapping basement structures. We assume that a seismic model of the basement relief exists, but it does not agree with surface gravity data. We, therefore, invert the gravity data to construct a modified basement model that is consistent with the seismic result. The central problem is one of estimating the shape and depth of the interface separating two contrasting media by using gravity data. Theoretically, this problem has a unique solution if the density contrast is known. In practice, however, this is an ill-posed nonlinear inverse problem, and the solution can be non-unique. The non-uniqueness arises from two distinct sources. The first is the fact that we only know the gravity field at the surface, so many different source distributions in the subsurface can reproduce that field. There will be trade-offs between the density contrast and basin depth. The second is the ever-present difficulty in applied geophysics that we acquire only a finite number of inaccurate measurements, and there are many models that will reproduce the data within the error tolerance. More information is needed to transform this problem into a well-posed one. Since we are attempting to improve upon a seismically derived basement model, it is logical to use that model as the needed prior information. In addition, we can also use borehole logs as another source of prior information.

There are several approaches to introducing prior information in gravity inversion in order to stabilize the process. For example, Ref. [4] used successive linear approximations to derive a stable solution that is implicitly constrained in shape; Ref. [5] applied low-pass filters to dampen the solution so that a well-behaved basement topography was obtained. Others used a more explicit approach by minimizing an objective function of the model. The advantage of using an explicit model objective function is that it allows for the incorporation of several different types of a priori information by changing the form of the function to be minimized. The authors of [6], for example, minimized the total volume of the causative body. Ref. [7] chose to minimize the moment of inertia with respect to the center of the body or to an axis passing through it. Ref. [8] minimized a function that includes relative and absolute equality constraints in order to introduce smoothness and prior depth-to-interface information. Ref. [9] imposed a smoothness requirement on the vertices of a polyhedral body in salt imaging. Ref. [10] minimized an objective function of density that required the model to be close to a given reference model and to be smooth in three spatial directions.

Our method has its principles in the method proposed by [10] but involves absolute constraints and has a model parameterization similar to that of [8]. The method minimizes an objective function of the model that requires the model not only to be smooth and close to the seismic-derived model, which is used as a reference model, but also to honor well-log constraints. The latter are introduced through the use of logarithmic barrier terms in the objective function (e.g., [11,12,13]).

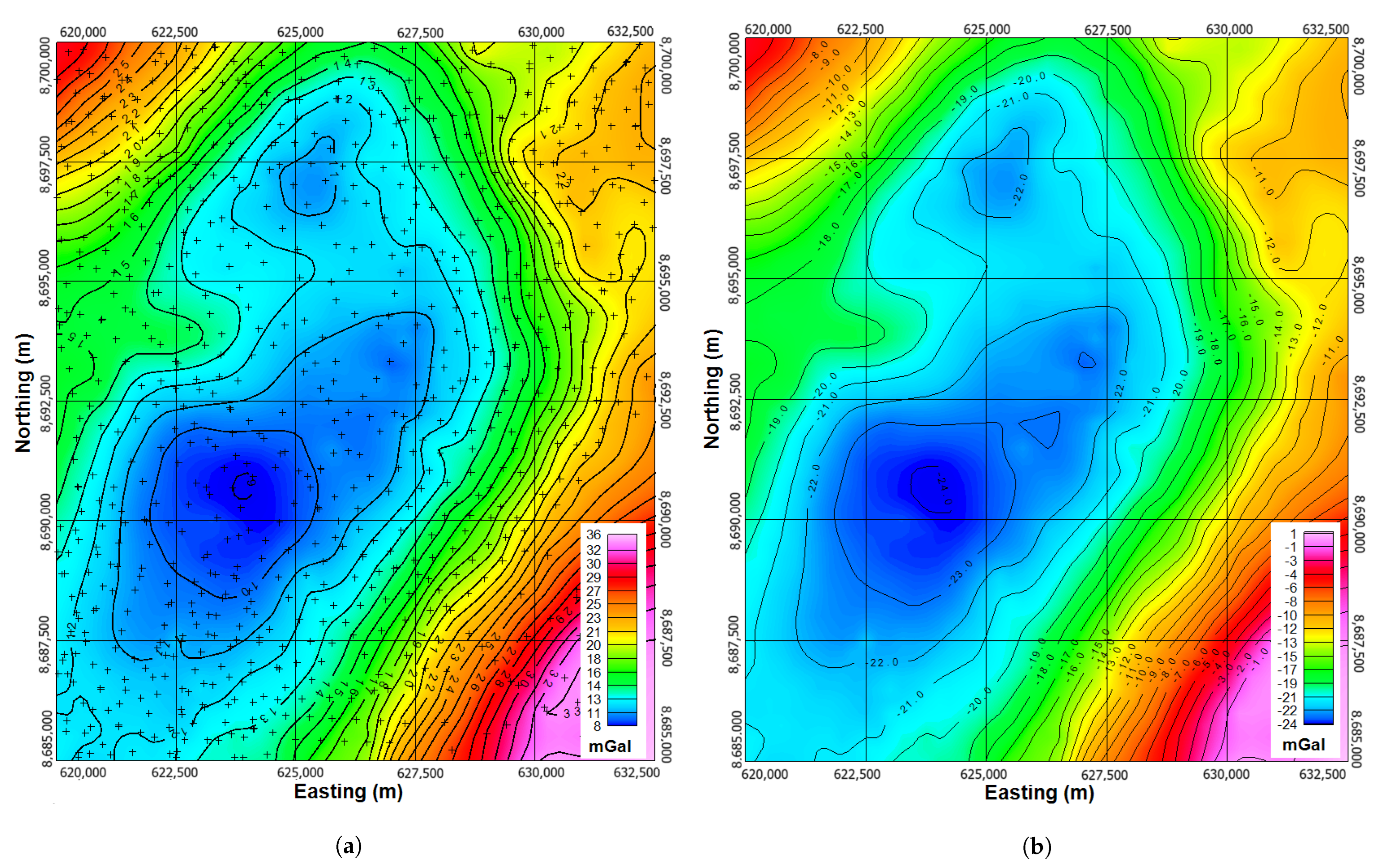

We first present our inversion method and illustrate it using synthetic gravity data, simulating a portion of a sedimentary basin. We then apply the method to a set of field gravity data acquired from the Recôncavo Basin, Brazil.

2. Methodology

The goal of our inversion is to find a reliable model that approximates the interface separating the sediments and the basement. The interface is assumed to represent the geometry of the basement in a portion of a sedimentary basin. The importance of defining this interface lies in the fact that in some sedimentary basins, especially rift-related ones, the basement geometry controls the distribution of potential oil fields. We restrict ourselves to working with only a portion of a basin since the assumptions involving the physical characteristics of the media, such as constant density, are more likely to be valid in smaller areas. In addition, this approach seeks to broaden the contribution of the gravity method in oil exploration because it focuses the work at an oil-field scale rather than at a basin scale.

To solve the problem numerically, we discretize the basement depth into a set of rectangular patches of a constant size and, therefore, represent the 3D sedimentary basin with a set of contiguous rectangular prisms of a constant density contrast (Figure 1). The tops of the prisms are at the surface, and their thicknesses (or heights) are to be determined from observed gravity data. To allow for flexibility in the model in terms of representing varied basement structures, we require the number of prisms in the model, $M$, to always be greater than the number of gravity observations, $N$. This approach allows for a higher resolution in the recovered models because, in contrast to other inversion methodologies that require the number of observations and prisms to be the same, we can have a large number of prisms even when only a small number of field observations are available.

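The general relationship between a gravity anomaly and its sources is given by (e.g., [14]):

$$g(\mathbf{r}) = \int_V \rho(\mathbf{r}')\, K(\mathbf{r}, \mathbf{r}')\, dv', \tag{1}$$

where $g(\mathbf{r})$ is the gravity field at the observation position $\mathbf{r}$ outside of the volume $V$ that is occupied by the source; $\rho(\mathbf{r}')$ is the source density at location $\mathbf{r}'$, and $K(\mathbf{r}, \mathbf{r}')$ is a function that depends on the geometric relations between positions $\mathbf{r}$ and $\mathbf{r}'$. Gravity inversion makes use of field measurements to find the main characteristics of either the density $\rho$ or some aspects of the source geometry, such as the region $V$ occupied by the source. The former is a linear problem, whereas the latter is nonlinear since it intends to recover a geometric aspect of the problem. The problem of recovering the basement depth falls into the latter category. The relationship between the gravity field at the origin and a single prism with a constant density, $\rho$, and corner positions at $x_i$, $y_j$, and $z_k$ ($i, j, k = 1, 2$), as derived by [15], is

$$g = G\rho \sum_{i=1}^{2}\sum_{j=1}^{2}\sum_{k=1}^{2} \mu_{ijk}\left[ z_k \arctan\frac{x_i y_j}{z_k R_{ijk}} - x_i \ln\left(R_{ijk} + y_j\right) - y_j \ln\left(R_{ijk} + x_i\right)\right], \tag{2}$$

where $G$ is the gravitational constant, $R_{ijk} = \sqrt{x_i^2 + y_j^2 + z_k^2}$, and $\mu_{ijk} = (-1)^i(-1)^j(-1)^k$. We note that the vertical coordinate of the bottom of the prism, $z_2$, is the unknown quantity to be recovered through our inversion.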
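The forward calculation for one prism can be illustrated with a minimal sketch. It assumes the widely used closed-form triple sum over the eight prism corners (whether this is exactly the form given in [15] is an assumption), SI units, and depth positive downward. The names `prism_gz`, `to_mgal`, and `G_SI` are hypothetical and chosen only for this illustration.

```python
import math

G_SI = 6.674e-11  # gravitational constant in m^3 kg^-1 s^-2


def prism_gz(x, y, z, rho):
    """Vertical gravity (m/s^2) at the origin due to one rectangular prism.

    x, y, z are (min, max) corner coordinates in metres relative to the
    observation point, with z >= 0 positive downward; rho is the density
    contrast in kg/m^3. The observation point must not lie on the line
    through a prism edge, where the logarithms become singular.
    """
    total = 0.0
    for i, xi in enumerate(x, start=1):
        for j, yj in enumerate(y, start=1):
            for k, zk in enumerate(z, start=1):
                sign = (-1) ** (i + j + k)  # (-1)^i (-1)^j (-1)^k
                r = math.sqrt(xi * xi + yj * yj + zk * zk)
                # atan2 keeps the term finite (and zero) when zk == 0
                total += sign * (
                    zk * math.atan2(xi * yj, zk * r)
                    - xi * math.log(r + yj)
                    - yj * math.log(r + xi)
                )
    return G_SI * rho * total


def to_mgal(g_si):
    """Convert m/s^2 to mGal (1 mGal = 1e-5 m/s^2)."""
    return g_si * 1.0e5


# A 750 m wide sediment column, 2000 m thick, centred under the station,
# with a density contrast of -300 kg/m^3 (i.e., -0.30 g/cm^3).
anomaly = to_mgal(prism_gz((-375.0, 375.0), (-375.0, 375.0), (0.0, 2000.0), -300.0))
print(f"column anomaly: {anomaly:.3f} mGal")
```

In a full forward model, the response at each station would be the sum of such terms over all prisms, with the prism coordinates shifted so that the station sits at the origin.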

As discussed in the preceding section, this inversion is ill-posed because we have only a finite number of inaccurate data on the surface, and we attempt to recover a basement relief that is more complex in structure than the smoothly varying gravity data. Consequently, there is a multitude of models that can fit the data to the same degree. In order to find a unique solution for interpretational purposes, we select one that is consistent with known information and is structurally simple. We choose to follow Tikhonov regularization. This approach allows for the construction of different models by changing the form of the objective function according to prior information. We minimize a total objective function $\phi$, defined as a weighted sum of a model objective function $\phi_m$ and a data misfit function $\phi_d$,

$$\phi = \phi_d + \mu\,\phi_m, \tag{3}$$

where $\mu$ is the regularization parameter, which determines the trade-off between the two terms. The data misfit function $\phi_d$ is defined to be:

$$\phi_d = \left\| \mathbf{W}_d\left(\mathbf{g}^{obs} - \mathbf{g}\right) \right\|^2, \tag{4}$$

where $\mathbf{W}_d = \mathrm{diag}\{1/\sigma_1, \ldots, 1/\sigma_N\}$, in which $\sigma_i$ is the error standard deviation related to the $i$th observation, $\mathbf{g}^{obs}$ represents the observed data, and $\mathbf{g}$ is the data predicted from the model. If the noise contaminating the data is Gaussian, uncorrelated, and has a zero mean, the misfit $\phi_d$ is a chi-squared variable with $N$ degrees of freedom. The number of observations, $N$, therefore, becomes the target misfit ($\phi_d^{*} = N$) for the inversion since the expected value of a chi-squared distribution with $N$ degrees of freedom is $N$. In the case where the noise statistics are unknown, we must resort to different approaches to determine the optimal data misfit.
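The target-misfit argument can be checked numerically with a short sketch. It assumes independent zero-mean Gaussian noise with known standard deviations. The function names and the Monte Carlo check are illustrative and are not part of the original method.

```python
import random


def data_misfit(g_obs, g_pred, sigma):
    """Sum of squared residuals, each normalized by its standard deviation."""
    return sum(((o - p) / s) ** 2 for o, p, s in zip(g_obs, g_pred, sigma))


def mean_misfit_of_pure_noise(n_data, sigma, n_trials=2000, seed=0):
    """Average misfit when the residuals consist of noise only.

    For Gaussian noise the average should be close to n_data, which is
    why the number of observations serves as the target misfit.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_trials):
        noise = [rng.gauss(0.0, sigma) for _ in range(n_data)]
        total += data_misfit(noise, [0.0] * n_data, [sigma] * n_data)
    return total / n_trials


# 100 observations with a 0.04 mGal noise level
print(mean_misfit_of_pure_noise(100, 0.04))
```

A model whose misfit is far below the number of observations is fitting noise, and one far above it is underfitting the data.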

The model objective function $\phi_m$ allows us to incorporate prior information about the model. The choice of prior information is problem-dependent, but in a general sense, the inverted model should be close to a reference model and be as smooth as the data allow in all directions. We, therefore, choose a model objective function having the following form:

$$\phi_m(h) = \alpha_s \iint \left(h - h_0\right)^2 dx\,dy + \iint \left[\frac{\partial \left(h - h_0\right)}{\partial x}\right]^2 dx\,dy + \iint \left[\frac{\partial \left(h - h_0\right)}{\partial y}\right]^2 dx\,dy, \tag{5}$$

where $h$ is the recovered model, $h_0$ is the reference model, and $\alpha_s$ is a coefficient that controls the relative importance of the first term to the others. In Equation (5), the first term provides a measure of the deviation from the reference model, whereas the remaining terms control the structural complexity of the model. Given the discretization used for the forward modeling, the recovered basement depth $h(x, y)$ becomes a piece-wise constant function, and it can be represented by a vector $\mathbf{h} = (h_1, \ldots, h_M)^T$. When evaluating the integrals in Equation (5) according to the above-described discretization, we obtain a discrete form of the objective function:

$$\phi_m = \left\| \mathbf{W}_m\left(\mathbf{h} - \mathbf{h}_0\right) \right\|^2, \tag{6}$$

where $\mathbf{W}_m$ is the model weighting matrix.
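A discrete model objective function of this kind can be sketched as follows. The sketch assumes that the roughness terms act on the difference between the model and the reference model, that derivatives are approximated by first differences between neighboring cells, and that the grid is stored as a list of rows. The function name and the argument `alpha_s` are hypothetical.

```python
def model_objective(h, h_ref, dx, dy, alpha_s):
    """Closeness-to-reference plus roughness penalty on a regular grid.

    h and h_ref are lists of rows (depths in metres); dx and dy are the
    cell sizes in metres; alpha_s weights the closeness term.
    """
    ny, nx = len(h), len(h[0])
    diff = [[h[i][j] - h_ref[i][j] for j in range(nx)] for i in range(ny)]
    cell_area = dx * dy

    # deviation from the reference model
    closeness = sum(diff[i][j] ** 2 for i in range(ny) for j in range(nx))

    # first-difference approximations of the x and y derivatives
    rough_x = sum(
        ((diff[i][j + 1] - diff[i][j]) / dx) ** 2
        for i in range(ny) for j in range(nx - 1)
    )
    rough_y = sum(
        ((diff[i + 1][j] - diff[i][j]) / dy) ** 2
        for i in range(ny - 1) for j in range(nx)
    )
    return cell_area * (alpha_s * closeness + rough_x + rough_y)


reference = [[1500.0] * 4 for _ in range(3)]
bumpy = [row[:] for row in reference]
bumpy[0][0] += 10.0  # a single cell that departs from the reference
print(model_objective(bumpy, reference, 750.0, 750.0, 1.0e-6))
```

A uniform shift of the whole model is penalized only by the closeness term, whereas an isolated bump is penalized by all three terms. This is how the weighting steers the inversion toward smooth departures from the reference model.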

The choice of the reference model is often left open in many publications since it is highly problem-dependent. In our inversion, however, the goal is to improve upon seismic interpretation by finding modifications using gravity data. We would like to find a model that deviates as little as possible from the seismic model while still fitting the gravity data. It is, therefore, optimal to use the seismic model as the reference model.

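Since the unknown model to be recovered is the height of each prism, the relationship between the data and the model is nonlinear, as discussed earlier. Consequently, the data misfit in Equation (4) is not a quadratic function of the model. As a result, we have a nonlinear inverse problem, and we choose to solve it iteratively through linearization. We assume that the thickness is $\mathbf{h}^{(n)}$ at the $n$th iteration, and a small perturbation $\Delta\mathbf{h}$ can be added to improve the data misfit. Expanding the predicted gravity data in a Taylor series about $\mathbf{h}^{(n)}$ and ignoring higher-order terms yields a linear relationship,

$$g_i\left(\mathbf{h}^{(n)} + \Delta\mathbf{h}\right) \approx g_i\left(\mathbf{h}^{(n)}\right) + \sum_{j=1}^{M} J_{ij}\,\Delta h_j, \quad i = 1, \ldots, N, \tag{7}$$

where $J_{ij} = \partial g_i / \partial h_j$ is evaluated at $\mathbf{h}^{(n)}$. Equation (7) can be compactly represented in a matrix form as:

$$\mathbf{g}^{(n+1)} = \mathbf{g}^{(n)} + \mathbf{J}\,\Delta\mathbf{h}, \tag{8}$$

where $\mathbf{g}^{(n)}$ is the $N$-length vector of the predicted data, $\Delta\mathbf{h}$ is the $M$-length vector of model perturbations, and $\mathbf{J}$ is the $N \times M$ sensitivity matrix relating the predicted data to the changes in the model at each iteration according to Equation (2). Substituting Equation (8) into the discretized objective function yields the linearized form:

$$\phi = \left\|\mathbf{W}_d\left(\mathbf{g}^{obs} - \mathbf{g}^{(n)} - \mathbf{J}\,\Delta\mathbf{h}\right)\right\|^2 + \mu \left\|\mathbf{W}_m\left(\mathbf{h}^{(n)} + \Delta\mathbf{h} - \mathbf{h}_0\right)\right\|^2. \tag{9}$$

Minimizing Equation (9) with respect to the model perturbation yields the desired $\Delta\mathbf{h}$, which allows us to update the model and proceed to the next iteration.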
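The idea of iterating through linearization can be seen in a one-parameter toy problem. The sketch below deliberately swaps the prism forward model for the simpler on-axis attraction of a vertical cylinder whose top is at the surface. It also omits regularization and bounds, so it illustrates only the repeated first-order update of the thickness. All names are hypothetical.

```python
import math

TWO_PI_G = 2.0 * math.pi * 6.674e-11  # 2*pi*G in SI units


def cylinder_gz(h, radius, rho):
    """On-axis gravity at the surface of a vertical cylinder of thickness h."""
    return TWO_PI_G * rho * (h + radius - math.sqrt(h * h + radius * radius))


def cylinder_sensitivity(h, radius, rho):
    """Derivative of cylinder_gz with respect to the thickness h."""
    return TWO_PI_G * rho * (1.0 - h / math.sqrt(h * h + radius * radius))


def invert_thickness(g_obs, h_start, radius, rho, tol=1e-9, max_iter=50):
    """Repeat: linearize about the current h, solve for dh, update h."""
    h = h_start
    for iteration in range(1, max_iter + 1):
        residual = g_obs - cylinder_gz(h, radius, rho)
        dh = residual / cylinder_sensitivity(h, radius, rho)
        h += dh
        if abs(dh) < tol:
            return h, iteration
    return h, max_iter


# Noise-free datum generated by a 2000 m thick body, start from 500 m.
g_true = cylinder_gz(2000.0, 5000.0, -300.0)
h_est, n_iter = invert_thickness(g_true, 500.0, 5000.0, -300.0)
print(h_est, n_iter)
```

Because the sensitivity decreases with depth, each update made from a shallower starting model undershoots slightly, which is why several iterations are needed even for noise-free data.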

In addition to the smoothness and similarity to the seismic model, depth-to-basement information (from boreholes) is also available to constrain the solutions. The use of localized prior information as constraints is not new, and examples can be found in [8,16,17], among others. There are different means of introducing localized information, and the majority of methods rely on slightly different ways of minimizing the differences between the estimates and the known depths at well locations. In this paper, we have chosen to apply the logarithmic barrier method (e.g., [11]), which has been used by [12,13] in the inversion of different geophysical datasets. One advantage is that this approach allows one to set different limits on every element of the model instead of only at those locations where depth-to-basement information is present. The log barrier method presents the additional advantage of allowing for the introduction of specific degrees of confidence (by narrowing or widening the barrier limits) in different information. In other words, it is possible to set very narrow limits at positions where reliable depth information is present and to relax the constraints in regions where the information is less accurate. A fundamental application of these advantages is the introduction of well-log information from boreholes that have not reached the basement but that show depths at which the basement is certainly not present. In a very similar way, seismic information can be used in areas where no wells are available. In the log barrier method, such information can be easily incorporated into the inversion by setting the constraints on the minimum depth only. To the best of our knowledge, the use of such information as constraints in the inversion of potential field data is new. The logarithmic barrier method was implemented in our problem by adding a logarithmic term to the objective function of Equation (9) to form a new objective function:

$$\phi(\lambda) = \phi_d + \mu\,\phi_m - 2\lambda \sum_{j=1}^{M}\left[\ln\left(\frac{h_j - h_j^{min}}{h_j^{max} - h_j^{min}}\right) + \ln\left(\frac{h_j^{max} - h_j}{h_j^{max} - h_j^{min}}\right)\right], \tag{10}$$

where the last term is the barrier function, $\lambda$ is the barrier parameter, $h_j^{min}$ and $h_j^{max}$ are, respectively, the minimum and maximum depth, and $M$ is the total number of prisms in the model. The barrier term forms a barrier at the boundary of the feasible interval of the unknowns and prevents the minimization from producing unknowns outside their respective bounds. The value of $\lambda$ is decreased during the minimization so that at the end, as $\lambda$ approaches zero, the solution to Equation (10) approaches that of the original problem. Carrying out the complete minimization of Equation (10) for each value of $\lambda$ is an expensive process, and it is also unnecessary. Instead, for each value of the barrier parameter $\lambda$, we take one Newton step towards minimizing Equation (10) to yield the model perturbation equation:

$$\left[\mathbf{J}^T\mathbf{W}_d^T\mathbf{W}_d\mathbf{J} + \mu\,\mathbf{W}_m^T\mathbf{W}_m + \lambda\left(\mathbf{X}^{-2} + \mathbf{Y}^{-2}\right)\right]\Delta\mathbf{h} = \mathbf{J}^T\mathbf{W}_d^T\mathbf{W}_d\left(\mathbf{g}^{obs} - \mathbf{g}^{(n)}\right) - \mu\,\mathbf{W}_m^T\mathbf{W}_m\left(\mathbf{h}^{(n)} - \mathbf{h}_0\right) + \lambda\left(\mathbf{X}^{-1} - \mathbf{Y}^{-1}\right)\mathbf{e}, \tag{11}$$

where $\mathbf{X} = \mathrm{diag}\{h_j^{(n)} - h_j^{min}\}$, $\mathbf{Y} = \mathrm{diag}\{h_j^{max} - h_j^{(n)}\}$, and $\mathbf{e} = (1, \ldots, 1)^T$. The matrix system in Equation (11) is solved for $\Delta\mathbf{h}$ by using the conjugate gradient (CG) method. The model is then updated by a limited step length:

$$\mathbf{h}^{(n+1)} = \mathbf{h}^{(n)} + \gamma\,\beta\,\Delta\mathbf{h}, \tag{12}$$

where $\beta$ is the maximum permissible step length, and $\gamma$ is a parameter that limits the step length actually taken. The maximum step length $\beta$ is the value that takes the updated model to the bounds. Limiting it by $\gamma$, prescribed within the interval (0, 1), ensures that the updated model remains within the bounds. After each iteration, the value of $\lambda$ is reduced so that the barrier term becomes negligible as we move towards the final solution. The iterative process is terminated once the barrier term has become negligibly small and the original objective function has reached a plateau. This yields one solution for a given regularization parameter $\mu$. The solutions for several values of $\mu$ are required to find the solution that produces the target misfit $\phi_d^{*}$.
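The barrier iteration described above (one Newton step per barrier value, a CG solve, a limited step, then a smaller barrier parameter) can be sketched on a toy linear problem. Several choices here are assumptions made only for illustration: identity data and model weights, a fixed matrix `a` standing in for the sensitivity matrix, a maximum step length capped at one, and the reduction rule `lam *= 1 - min(beta, gamma)`. The names `lam`, `gamma`, and `beta` are likewise hypothetical labels for the barrier parameter, the step limiter, and the maximum step length.

```python
def matvec(a, v):
    return [sum(aij * vj for aij, vj in zip(row, v)) for row in a]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def conjugate_gradient(a, b, tol=1e-12, max_iter=200):
    """Solve a x = b for a symmetric positive-definite matrix a."""
    x = [0.0] * len(b)
    b_norm2 = dot(b, b)
    if b_norm2 == 0.0:
        return x
    r, p = list(b), list(b)
    rs = b_norm2
    for _ in range(max_iter):
        ap = matvec(a, p)
        alpha = rs / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rs_new = dot(r, r)
        if rs_new <= tol * tol * b_norm2:
            break
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return x


def barrier_inversion(a, d, h0, lo, hi, mu, lam=1.0, gamma=0.95,
                      lam_tol=1e-8, max_iter=50):
    """Bound-constrained regularized least squares via a log barrier.

    h0 is both the starting and the reference model and must lie
    strictly between the bounds lo and hi.
    """
    m = len(h0)
    h = list(h0)
    at = [list(col) for col in zip(*a)]
    ata = [[dot(at[i], at[j]) for j in range(m)] for i in range(m)]
    for _ in range(max_iter):
        if lam < lam_tol:  # barrier term has become negligible
            break
        x = [h[j] - lo[j] for j in range(m)]  # distance to lower bound
        y = [hi[j] - h[j] for j in range(m)]  # distance to upper bound
        resid = [di - gi for di, gi in zip(d, matvec(a, h))]
        hess = [row[:] for row in ata]
        for j in range(m):
            hess[j][j] += mu + lam * (1.0 / x[j] ** 2 + 1.0 / y[j] ** 2)
        atr = matvec(at, resid)
        rhs = [atr[j] - mu * (h[j] - h0[j]) + lam * (1.0 / x[j] - 1.0 / y[j])
               for j in range(m)]
        dh = conjugate_gradient(hess, rhs)
        beta = 1.0  # largest step (capped at 1) that stays within bounds
        for j in range(m):
            if dh[j] > 0.0:
                beta = min(beta, y[j] / dh[j])
            elif dh[j] < 0.0:
                beta = min(beta, x[j] / -dh[j])
        h = [h[j] + gamma * beta * dh[j] for j in range(m)]
        lam *= 1.0 - min(beta, gamma)  # assumed reduction rule
    return h


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(barrier_inversion(identity, [5.0, 1.0, -1.0], [1.0, 1.0, 1.0],
                        [0.0, 0.0, 0.0], [2.0, 2.0, 2.0], mu=0.1))
```

In this toy case, the unconstrained solution would place the first and third unknowns outside the interval (0, 2). The barrier iteration instead drives them toward the upper and lower bounds without crossing them, and leaves the second unknown at its interior optimum. For the nonlinear basement problem, the sensitivity matrix and predicted data would be recomputed at every iteration.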

3. Synthetic Example

We now apply our method to the synthetic dataset shown in Figure 2. The data simulate the gravity response of the model (Figure 2a) at 100 random locations (crosses). Gaussian noise with a zero mean and a standard deviation of 0.04 mGal was added to the entire set of synthetic measurements, resulting in the gravity response shown in Figure 2b. The synthetic gravity data were gridded using 500 m intervals for the purpose of display only.

The synthetic model shown in Figure 2a simulates a small portion of a sedimentary basin covering an area of 15,000 m × 15,000 m. The basement structures are represented by four rectangular blocks (A, B, C, and D), whose tops are at 500, 1000, 1500, and 2000 m, respectively. The maximum depth in the model is 3000 m. The density contrast between the sediments and the basement is considered to be constant and equal to −0.30 g/cm³. The model is discretized into 441 rectangular prisms, having a width of 750 m in the $x$ and $y$ directions. Since the prisms represent the sedimentary section, the top of each prism is fixed at the surface, and its bottom will determine the depth to the basement at each location, as represented in Figure 1.

The well-log constraints were imposed on the problem by assuming depth-to-basement information at five locations (the black dots in Figure 2b), as listed in Table 1. The wells were incorporated into the model by setting the model cells at the well locations to the known depths and keeping them fixed during the inversion. Except for the five positions where the depth to the basement is known, a model with a constant depth of 1500 m was chosen as the reference model.

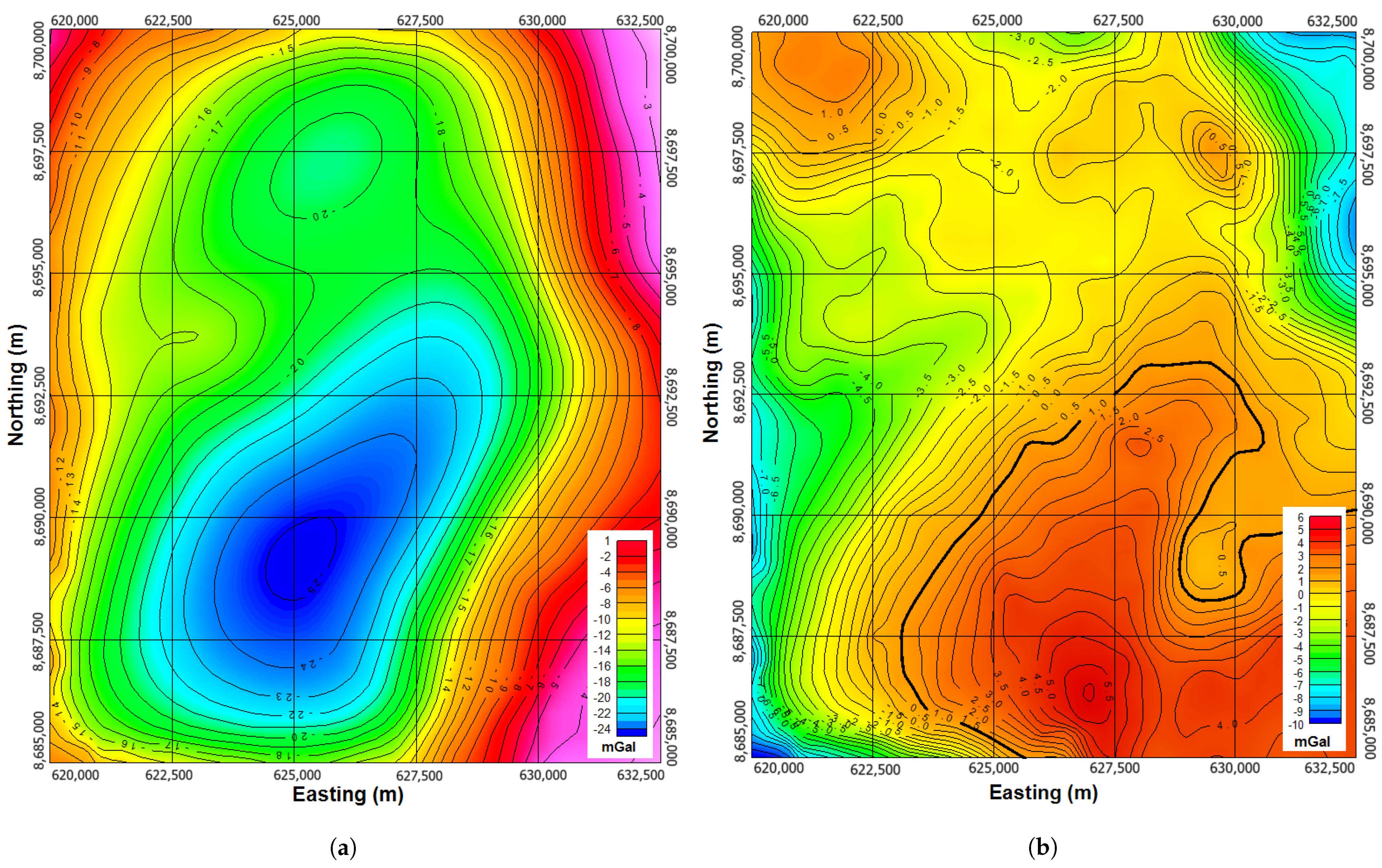

The final result of the inversion is shown in Figure 3a. It is clear that the inversion was not able to completely recover the model, but the results represent a satisfactory solution in terms of the location and average depth for all four structures. The histogram of the absolute data misfit in Figure 3c shows that

of misfits are smaller than

mGal, with

below

0.04 mGal, which is the standard deviation of the added noise. Such a result was expected mainly due to the noise and the limited number of observation points. As a comparison, Figure 3b shows the results of a new inversion that used 250 randomly spaced data points. In Figure 3d, the histogram of the absolute data misfit of the new inversion shows that all the misfits are below

mGal, with

of them below the standard deviation of the noise. The increase in the amount of observed data allows for a better definition of the gravity field by reducing ambiguity and helping to improve the final model.

In inversion methods, the correct choice of parameters is usually problem-dependent, and there is no simple rule of thumb available. Therefore, in addition to showing the effectiveness of the proposed method, we also provide the reader with a short discussion on the effects of some of the parameters involved in this inversion process: the step-length parameter, the logarithmic barrier parameter, and the regularization parameter. We hope that such a discussion, based on our experience, can help readers to develop a feeling for how to choose these parameters for their own problems.

The tests that used different values of the step-length parameter showed that the influence of this parameter on the improvement of the solution is minor, and it is mainly restricted to the speed of convergence. Within the theoretically valid range, the number of iterations increases as the parameter approaches zero since the actual step taken at each iteration becomes too small. As it approaches unity, the solution of Equation (11) becomes much more difficult. This is because of the disparity in the elements of matrices $\mathbf{X}$ and $\mathbf{Y}$, which causes the matrix system to be poorly conditioned. Our tests indicate that values ranging from 0.9 to nearly 1.0 lead to similar convergence rates and computational costs. For the final solutions shown in Figure 3a,b, the step-length parameter was chosen to be 0.99.

For the logarithmic barrier parameter, we usually start with a large value that must be reduced after each iteration (e.g., [11]). The tests that used different starting values showed that, as expected, the initial choice of the barrier parameter does not produce significant changes in the final solution, and it does not change the effectiveness of the depth constraints. We have followed the approach in [12] to calculate the starting value of the barrier parameter.

The choice of the regularization parameter is the most important step towards a good inversion result. It should be noted that the barrier parameter is an auxiliary parameter that does not directly change the final results, whereas the regularization parameter determines the trade-off between model complexity and data misfit. Therefore, the regularization parameter directly affects the final result, and its choice is crucial. It is often chosen so that the misfit term reaches the target misfit at the final iteration. Such a criterion works well for cases where the noise is uncorrelated and zero-mean, and a good estimate of the standard deviation of this noise is available, as in the synthetic example presented here. Unfortunately, such cases are rare in practical applications.

When no information about data errors is available, other methods for estimating the regularization parameter must be used. Ref. [18] suggested the use of either the generalized cross-validation (GCV) or the L-curve criterion as an effective automatic estimator of the trade-off parameter in nonlinear inverse problems. We have found that the L-curve criterion produces good estimates for the synthetic examples in our problem. Therefore, we have incorporated this criterion in our inversion methodology by using the maximum curvature approach proposed by [19] to automatically locate the L-curve corner.
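Locating the corner can be sketched with a simple discrete estimate. The sketch assumes that inversions have already been run for an ordered list of regularization values, giving one data misfit and one model norm per value. It uses the three-point (Menger) curvature on the log-log curve as a stand-in, and is not necessarily the curvature formula of [19]. The function name is hypothetical.

```python
import math


def lcurve_corner(phi_d, phi_m):
    """Index of the interior point with the largest discrete curvature.

    phi_d and phi_m are the data misfits and model norms obtained for an
    ordered sequence of regularization values; both must be positive.
    """
    pts = [(math.log10(d), math.log10(m)) for d, m in zip(phi_d, phi_m)]
    if len(pts) < 3:
        raise ValueError("need at least three points on the L-curve")
    best_index, best_kappa = 1, -1.0
    for i in range(1, len(pts) - 1):
        (ax, ay), (bx, by), (cx, cy) = pts[i - 1], pts[i], pts[i + 1]
        # twice the area of the triangle formed by the three points
        twice_area = abs((bx - ax) * (cy - ay) - (by - ay) * (cx - ax))
        sides = (math.hypot(bx - ax, by - ay)
                 * math.hypot(cx - bx, cy - by)
                 * math.hypot(cx - ax, cy - ay))
        kappa = 2.0 * twice_area / sides if sides > 0.0 else 0.0
        if kappa > best_kappa:
            best_index, best_kappa = i, kappa
    return best_index


misfits = [10 ** 0.0, 10 ** 0.1, 10 ** 0.3, 10 ** 2.0, 10 ** 4.0]
norms = [10 ** 4.0, 10 ** 2.0, 10 ** 0.3, 10 ** 0.1, 10 ** 0.0]
print(lcurve_corner(misfits, norms))
```

The returned index selects the regularization value at the corner. Since the estimate uses unsigned curvature, noisy curves may need smoothing or a sign check before it can be trusted.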