Prediction of Reflection Seismic Low-Frequency Components of Acoustic Impedance Using Deep Learning

Abstract

1. Introduction

2. Workflow and Method

2.1. Convolutional Neural Networks

2.2. Recurrent Neural Networks

3. Results

3.1. Synthetic Data Test

3.1.1. Synthetic Data Test Based on Rock Physics Modeling

3.1.2. Marmousi Model 2 Test

3.1.3. Low-Frequency Attributes

3.1.4. Predicting the Low-Frequency Components of Seismic Data

3.1.5. Predicting the Low Frequencies of AI

- Depth attribute

- Interval velocity

- Average frequency

- Time

- Instantaneous amplitude

- Apparent polarity

- Apparent time thickness

- Amplitude weighted frequency

- Integrated instantaneous amplitude

- Relative geological age

- Filter 5/10–15/20
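The last attribute in the list above, "Filter 5/10–15/20", is not defined in this section. A minimal sketch follows, assuming the notation gives the four corner frequencies (in Hz) of a trapezoidal band-pass filter with linear tapers: zero response below 5 Hz, a ramp up to 10 Hz, a flat passband to 15 Hz, and a ramp down to zero at 20 Hz. The function name `trapezoid_response` and its parameter names are hypothetical, and the taper shape actually used to compute the attribute may differ.

```python
def trapezoid_response(freq_hz, f1=5.0, f2=10.0, f3=15.0, f4=20.0):
    """Amplitude response of a trapezoidal band-pass filter.

    Assumption: "5/10-15/20" is read as the corner frequencies
    f1/f2-f3/f4 in Hz, with linear ramps between f1-f2 and f3-f4.
    """
    if freq_hz <= f1 or freq_hz >= f4:
        return 0.0  # stop bands
    if freq_hz < f2:
        return (freq_hz - f1) / (f2 - f1)  # low-cut ramp
    if freq_hz <= f3:
        return 1.0  # flat passband
    return (f4 - freq_hz) / (f4 - f3)  # high-cut ramp


if __name__ == "__main__":
    # Print the response at a few frequencies, including the ramp midpoints.
    for f in (3.0, 7.5, 12.0, 17.5, 25.0):
        print(f"{f:5.1f} Hz -> {trapezoid_response(f):.2f}")
```

Under this reading, the filtered attribute keeps only the 10–15 Hz band at full strength and contains no energy below 5 Hz, so it would carry band-limited rather than low-frequency information.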

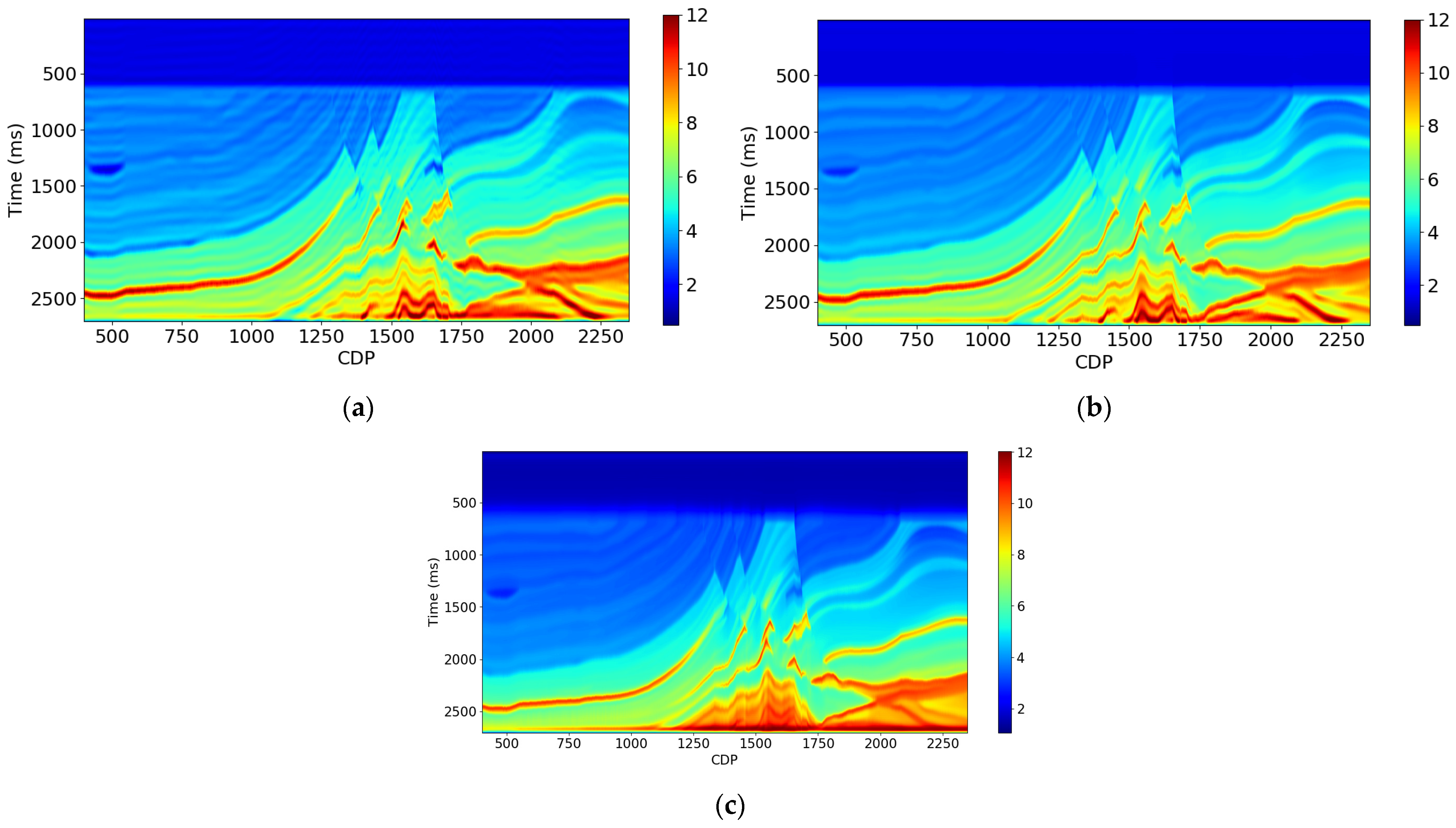

3.1.6. Predicting the Low Frequencies Using RMS Velocity

3.2. Real Data Example

4. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Attributes and Methods | AI (0.0–5.0 Hz) R² Score | AI (0.0–5.0 Hz) CC |
|---|---|---|
| Conventional attributes + PNN | 0.84 | 0.94 |
| New and conventional attributes + Linear regression | 0.87 | 0.94 |
| New and conventional attributes + PNN | 0.91 | 0.96 |
| New and conventional attributes + RNN | 0.90 | 0.95 |
| New attributes and predicted low-frequency seismic data + RNN | 0.93 | 0.97 |

| Attributes and Methods | AI (Absolute) R² Score | AI (Absolute) CC |
|---|---|---|
| Conventional attributes + PNN | 0.82 | 0.93 |
| Raw seismic + RNN | 0.82 | 0.93 |
| Predicted low-frequency components and conventional attributes + PNN | 0.88 | 0.94 |
| Predicted low-frequency components + Raw seismic + RNN | 0.90 | 0.95 |
| Predicted low-frequency components from RNN + Raw seismic + RNN | 0.91 | 0.96 |

| Attributes/Methods (AI, 0.0–5.0 Hz) | Test 1 R² Score | Test 1 CC | Test 2 R² Score | Test 2 CC | Average R² Score | Average CC |
|---|---|---|---|---|---|---|
| Well-log interpolation along horizons | 0.13 | 0.83 | 0.91 | 0.98 | 0.52 | 0.91 |
| Relative geological age + Instantaneous amplitude + Integrated instantaneous amplitude + Apparent thickness | 0.47 | 0.89 | 0.14 | 0.49 | 0.30 | 0.69 |
| Relative geological age + Predicted low-frequency seismic data | 0.19 | 0.82 | 0.93 | 0.98 | 0.56 | 0.90 |
| Relative age + Predicted low-frequency seismic data + Instantaneous amplitude | 0.23 | 0.86 | 0.92 | 0.98 | 0.57 | 0.92 |
| Relative geological age + RMS stack velocity + Predicted low-frequency seismic data + Instantaneous amplitude | 0.41 | 0.90 | 0.82 | 0.93 | 0.61 | 0.91 |
| AI (0–2.0 Hz) (interpolated using well logs) + Instantaneous amplitude | 0.38 | 0.89 | 0.94 | 0.98 | 0.66 | 0.94 |
| AI (0–2.0 Hz) (interpolated using well logs) + RMS stack velocity | 0.16 | 0.83 | 0.93 | 0.97 | 0.55 | 0.90 |
| AI (0–2.0 Hz) + Predicted low-frequency seismic data | 0.42 | 0.92 | 0.94 | 0.98 | 0.68 | 0.95 |
| AI (0–2.0 Hz) + Predicted low-frequency seismic data + RMS stack velocity | 0.43 | 0.93 | 0.95 | 0.98 | 0.69 | 0.95 |
| AI (0–2.0 Hz) + Predicted low-frequency seismic data + RMS stack velocity + Instantaneous amplitude | 0.39 | 0.93 | 0.91 | 0.98 | 0.65 | 0.95 |
| AI (0–2.0 Hz) + RMS stack velocity + Predicted low-frequency seismic data + Integrated instantaneous amplitude | 0.63 | 0.88 | 0.80 | 0.92 | 0.71 | 0.90 |
| AI (0–2.0 Hz) + Integrated instantaneous amplitude | 0.59 | 0.91 | 0.45 | 0.68 | 0.52 | 0.80 |
| RMS stack velocity | 0.85 | 0.93 | 0.71 | 0.94 | 0.79 | 0.94 |
| RMS stack velocity + Predicted low-frequency seismic data | 0.81 | 0.91 | 0.82 | 0.97 | 0.82 | 0.94 |
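Several rows above pair a low R² score with a high CC (for example, 0.13 and 0.83 for well-log interpolation in Test 1). The sketch below shows how that can happen, assuming the two metrics follow their standard definitions: the coefficient of determination, 1 − SS_res/SS_tot, and the Pearson correlation coefficient. This section does not state the exact formulas used, the function names are hypothetical, and the impedance values are made up for illustration. CC ignores a constant bias or scale error in the prediction, whereas R² penalizes it.

```python
import math


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def pearson_cc(y_true, y_pred):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    mean_pred = sum(y_pred) / n
    cov = sum((t - mean_true) * (p - mean_pred) for t, p in zip(y_true, y_pred))
    var_true = sum((t - mean_true) ** 2 for t in y_true)
    var_pred = sum((p - mean_pred) ** 2 for p in y_pred)
    return cov / math.sqrt(var_true * var_pred)


# Illustrative (made-up) low-frequency impedance trend and a prediction
# that has the right shape but a constant bias of +0.5.
true_ai = [6.0, 6.5, 7.0, 7.5, 8.0]
biased_ai = [v + 0.5 for v in true_ai]

if __name__ == "__main__":
    print("CC :", pearson_cc(true_ai, biased_ai))
    print("R2 :", r2_score(true_ai, biased_ai))
```

In this toy case the biased prediction is perfectly correlated with the target, yet its R² is only 0.5, which is why the two columns are best read together rather than interchangeably.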


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, L.; Castagna, J.P.; Zhang, Z.; Russell, B. Prediction of Reflection Seismic Low-Frequency Components of Acoustic Impedance Using Deep Learning. Minerals 2023, 13, 1187. https://doi.org/10.3390/min13091187
