Modified Wild Horse Optimizer for Constrained System Reliability Optimization

Abstract

1. Introduction

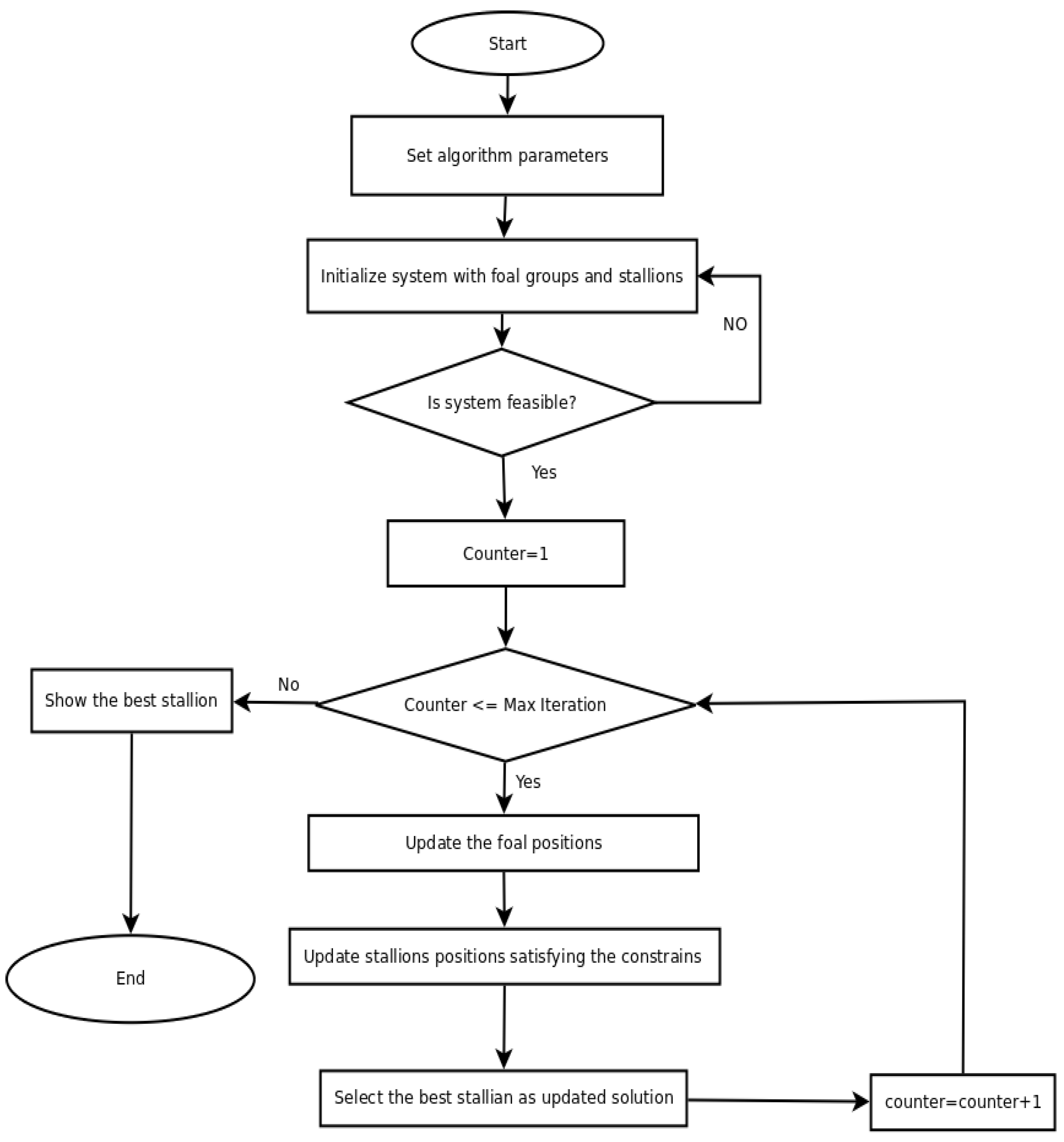

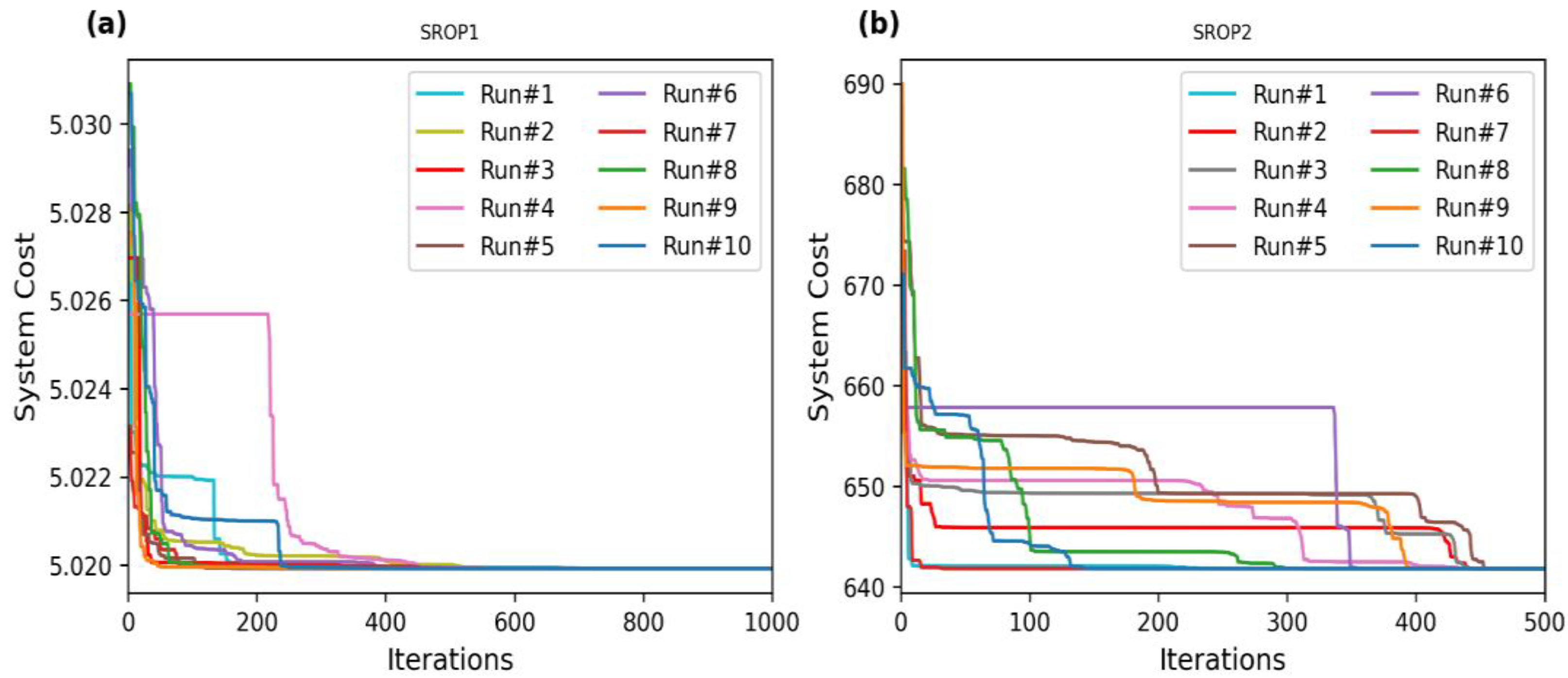

2. Modified Wild Horse Optimization (MWHO) Algorithm

2.1. Initialization, Group Construction and Stallion Selection
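The initialization and grouping step can be sketched in Python as below. It follows the original WHO of Naruei and Keynia [42] in setting the number of groups to the population size times a stallion percentage. The rest is our own assumption for illustration, not the authors' MWHO implementation: the shuffled round-robin split, the rule that the lowest-cost member of each group becomes its stallion, and the names `init_groups` and `stallion_rate`.

```python
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Group = Tuple[Vector, List[Vector]]  # (stallion, foals)


def init_groups(cost: Callable[[Sequence[float]], float],
                lower: Sequence[float], upper: Sequence[float],
                n_horses: int, stallion_rate: float,
                rng: random.Random) -> List[Group]:
    """Randomly initialise horses, split them into groups, pick stallions.

    Assumptions: group count = floor(n_horses * stallion_rate), horses are
    dealt round-robin after a shuffle, and the lowest-cost member of each
    group becomes its stallion (minimisation).
    """
    dim = len(lower)
    horses = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
              for _ in range(n_horses)]
    n_groups = max(1, min(n_horses, int(n_horses * stallion_rate)))
    rng.shuffle(horses)
    groups: List[Group] = []
    for g in range(n_groups):
        members = sorted(horses[g::n_groups], key=cost)  # best member first
        groups.append((members[0], members[1:]))
    return groups
```

For example, `init_groups(lambda x: sum(v * v for v in x), [-5.0] * 3, [5.0] * 3, 20, 0.2, random.Random(1))` returns four groups, each holding one stallion and four foals.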

2.2. Grazing Behaviour of a Wild Horse
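The grazing move can be sketched as below. The sketch assumes the update rule of the original WHO [42]: a foal X is relocated to 2Z cos(2πRZ)(Stallion − X) + Stallion, with R drawn uniformly from [−2, 2]. The adaptive factor Z switches between per-dimension and shared random draws according to TDR = 1 − iter/maxiter. This is our reading of the original algorithm, and any modification MWHO makes to this step is not reproduced here. The function names and the clipping to bounds are also assumptions.

```python
import math
import random
from typing import List, Sequence


def adaptive_z(dim: int, it: int, max_it: int, rng: random.Random) -> List[float]:
    """Adaptive factor Z (assumed reading of the original WHO).

    TDR falls linearly from 1 to 0. Where a per-dimension draw R1 is not
    below TDR, the shared scalar R2 is used; otherwise a per-dimension R3.
    """
    tdr = 1.0 - it / max_it
    r2 = rng.random()                       # shared scalar draw
    z = []
    for _ in range(dim):
        r1, r3 = rng.random(), rng.random() # per-dimension draws
        z.append(r2 if r1 >= tdr else r3)
    return z


def graze(foal: Sequence[float], stallion: Sequence[float], z: Sequence[float],
          lower: Sequence[float], upper: Sequence[float],
          rng: random.Random) -> List[float]:
    """New foal position 2Z cos(2*pi*R*Z)(S - X) + S, clipped to the bounds."""
    r = rng.uniform(-2.0, 2.0)              # R ~ U(-2, 2), one draw per foal
    new = []
    for d, (x, s) in enumerate(zip(foal, stallion)):
        step = 2.0 * z[d] * math.cos(2.0 * math.pi * r * z[d]) * (s - x)
        new.append(min(max(s + step, lower[d]), upper[d]))
    return new
```

With this reading, early iterations (TDR near 1) mostly use independent per-dimension factors, while the final iterations use one shared factor for all dimensions.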

2.3. Breeding Behaviour of a Horse
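Breeding with mean crossover can be sketched as follows. The sketch assumes the scheme of the original WHO [42]: with probability PC, a foal of group k is replaced by the element-wise mean of foals leaving two other groups i ≠ j ≠ k. Picking the last foal in each list, the name `pc` and the function names are our assumptions for illustration.

```python
import random
from typing import List, Sequence, Tuple

Group = Tuple[List[float], List[List[float]]]  # (stallion, foals)


def mean_crossover(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Offspring is the element-wise average of its two parents."""
    return [(x + y) / 2.0 for x, y in zip(a, b)]


def breed(groups: List[Group], pc: float, rng: random.Random) -> None:
    """In place: with probability pc, replace the last foal of group k by the
    mean of the last foals of two other groups i != j (i, j != k)."""
    n = len(groups)
    if n < 3:
        return
    for k in range(n):
        foals_k = groups[k][1]
        if not foals_k or rng.random() >= pc:
            continue
        i, j = rng.sample([g for g in range(n) if g != k], 2)
        if groups[i][1] and groups[j][1]:
            foals_k[-1] = mean_crossover(groups[i][1][-1], groups[j][1][-1])
```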

2.4. Leadership Behaviour
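The stallion's move relative to the water hole can be sketched as follows. Here the water hole WH is the best solution found so far. The sketch assumes the two-branch rule of the original WHO [42]: the candidate is 2Z cos(2πRZ)(WH − Stallion) + WH when a uniform draw exceeds 0.5, and the same step minus WH otherwise. The greedy acceptance test, the bound clipping and the function names are assumptions, and MWHO may alter this rule.

```python
import math
import random
from typing import Callable, List, Sequence


def water_hole_candidate(stallion: Sequence[float], wh: Sequence[float],
                         z: Sequence[float], r: float,
                         toward: bool) -> List[float]:
    """step = 2Z cos(2*pi*R*Z) * (WH - stallion); candidate is step + WH
    when `toward` is True and step - WH otherwise."""
    out = []
    for d, (s, w) in enumerate(zip(stallion, wh)):
        step = 2.0 * z[d] * math.cos(2.0 * math.pi * r * z[d]) * (w - s)
        out.append(step + w if toward else step - w)
    return out


def lead(stallion: Sequence[float], wh: Sequence[float], z: Sequence[float],
         cost: Callable[[Sequence[float]], float],
         lower: Sequence[float], upper: Sequence[float],
         rng: random.Random) -> List[float]:
    """One leadership move with clipping and greedy acceptance (assumed)."""
    r = rng.uniform(-2.0, 2.0)          # R ~ U(-2, 2)
    toward = rng.random() > 0.5         # branch selector
    cand = water_hole_candidate(stallion, wh, z, r, toward)
    cand = [min(max(v, lo), hi) for v, lo, hi in zip(cand, lower, upper)]
    # Keep the move only if it lowers the cost; otherwise keep the stallion.
    return cand if cost(cand) < cost(stallion) else list(stallion)
```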

2.5. Leader Selection and Exchange
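Leader exchange reduces to a role swap: if the best foal of a group has a lower cost than its stallion, the two trade places. The minimal sketch below assumes minimisation and the (stallion, foals) layout used for illustration. `exchange_leaders` is a hypothetical name.

```python
from typing import Callable, List, Sequence, Tuple

Group = Tuple[List[float], List[List[float]]]  # (stallion, foals)


def exchange_leaders(groups: List[Group],
                     cost: Callable[[Sequence[float]], float]) -> List[Group]:
    """Return new groups in which any foal better than its stallion
    has swapped roles with it."""
    updated = []
    for stallion, foals in groups:
        foals = list(foals)
        if foals:
            k = min(range(len(foals)), key=lambda i: cost(foals[i]))
            if cost(foals[k]) < cost(stallion):
                stallion, foals[k] = foals[k], stallion
        updated.append((stallion, foals))
    return updated
```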

3. Problem Description

3.1. Statement of the Optimization Problems

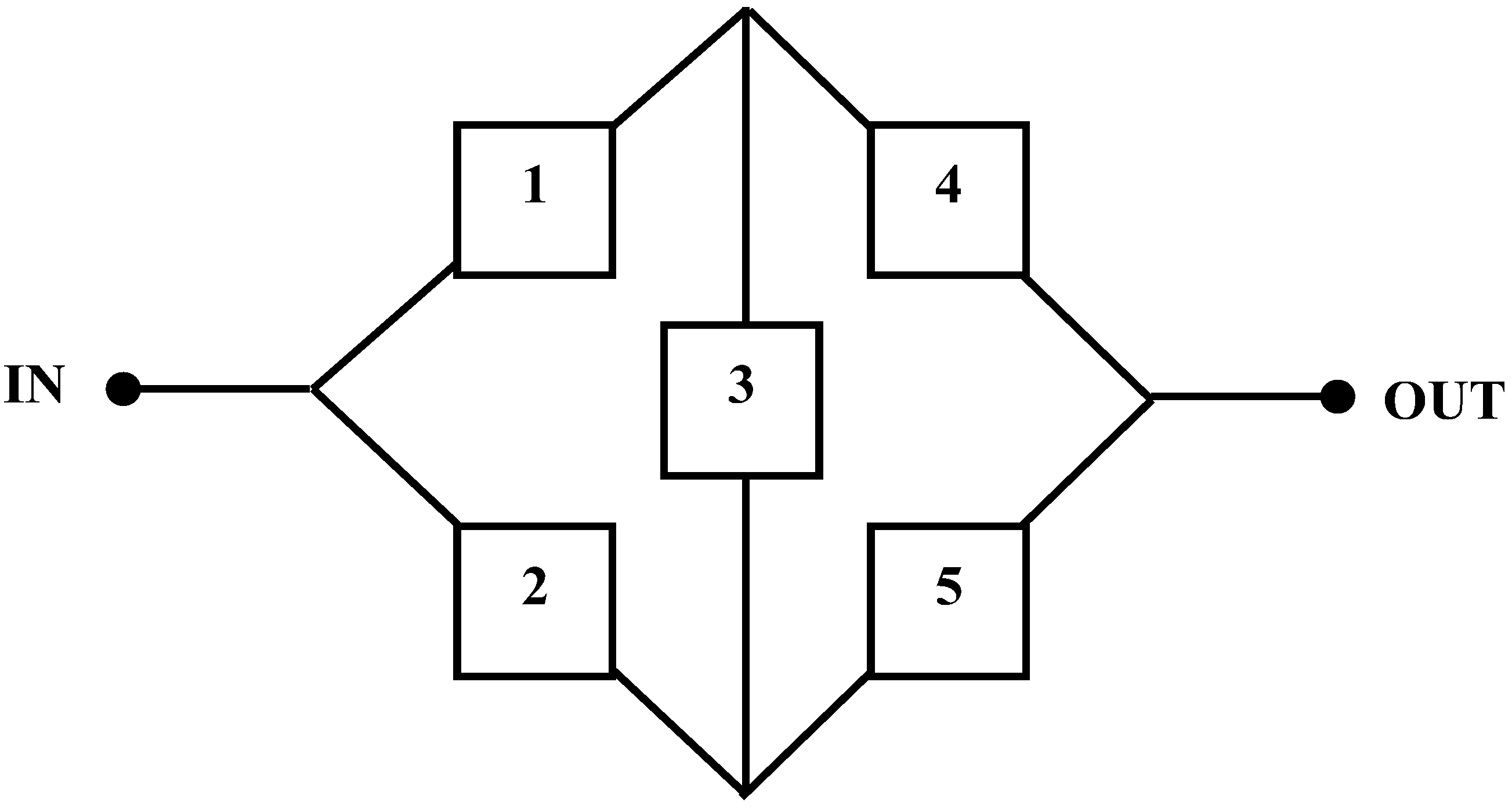

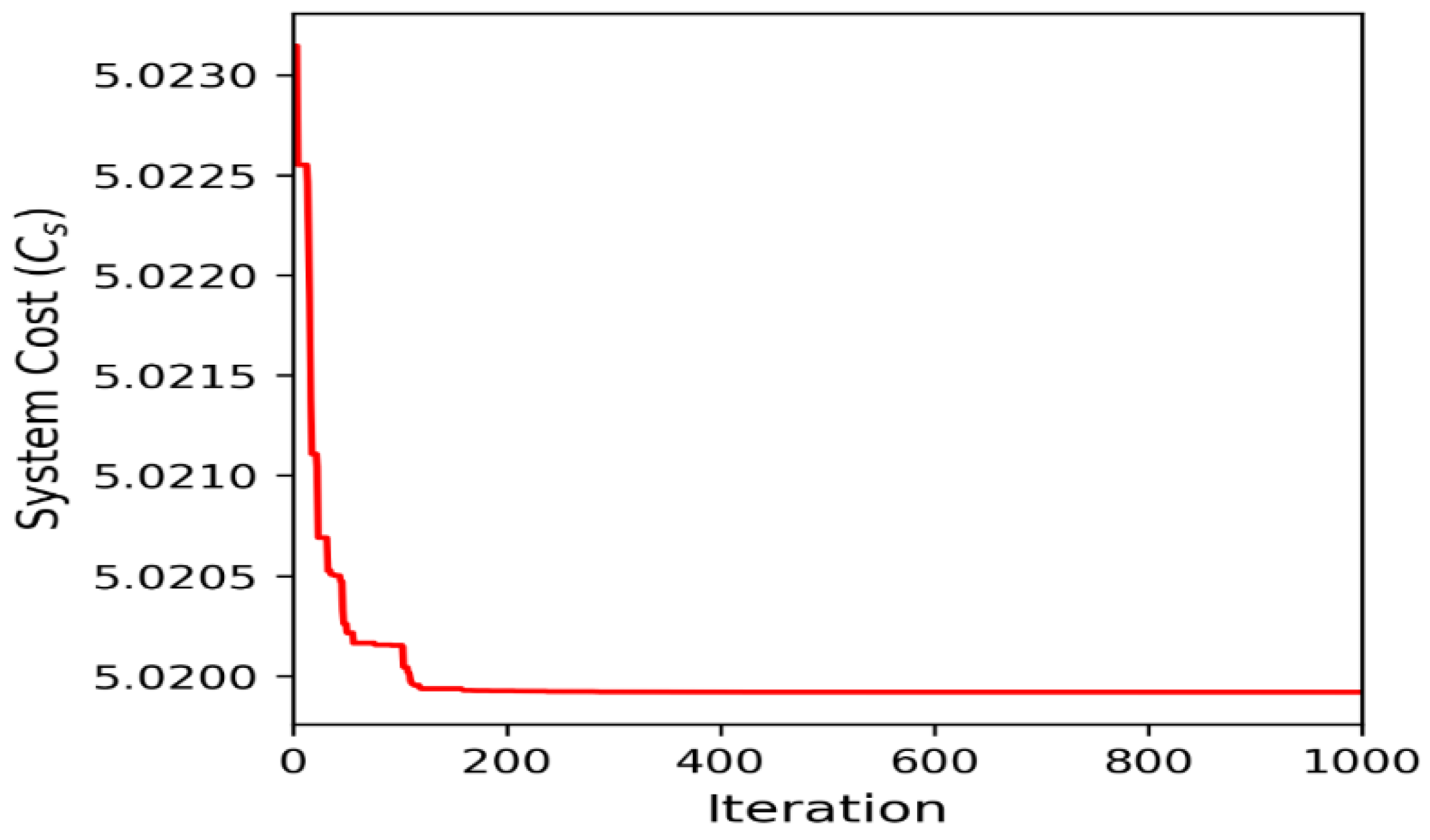
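This text does not state how MWHO treats the constraints. For problems of the form "minimise cost subject to g(x) ≤ 0", one common option is a static penalty, sketched below purely as an assumption. The weight of 10^6 and the quadratic violation term are illustrative choices, not the authors' settings. A reliability requirement such as Rsys ≥ 0.99 is written as g(x) = 0.99 − Rsys(x).

```python
from typing import Callable, List, Sequence

Constraint = Callable[[Sequence[float]], float]  # feasible when g(x) <= 0


def static_penalty(cost: Callable[[Sequence[float]], float],
                   constraints: List[Constraint],
                   weight: float = 1e6) -> Callable[[Sequence[float]], float]:
    """Return f(x) = cost(x) + weight * sum(max(0, g(x))**2)."""
    def penalised(x: Sequence[float]) -> float:
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return cost(x) + weight * violation
    return penalised
```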

3.2. SROP 1: Complex Bridge System (CBS)

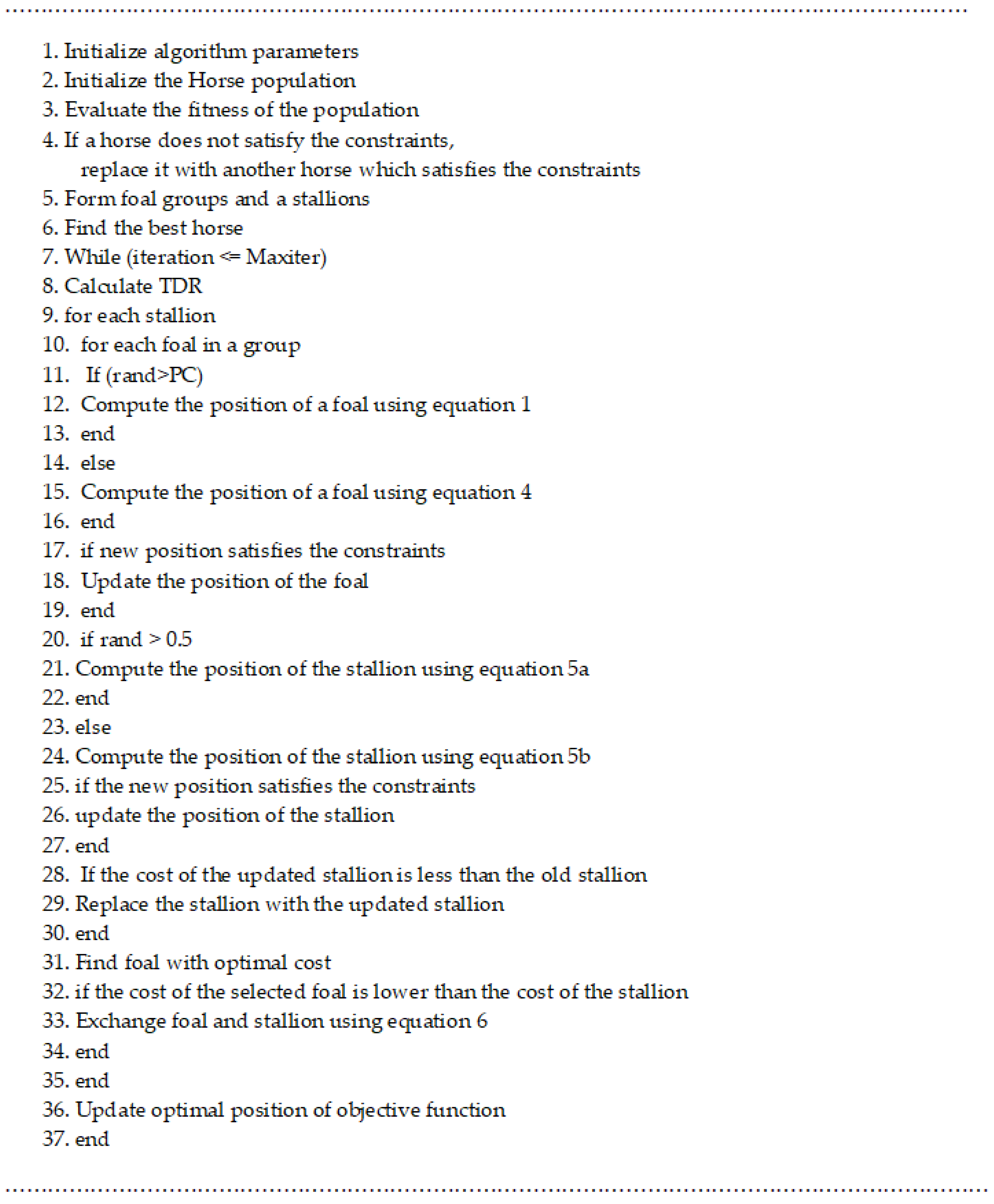

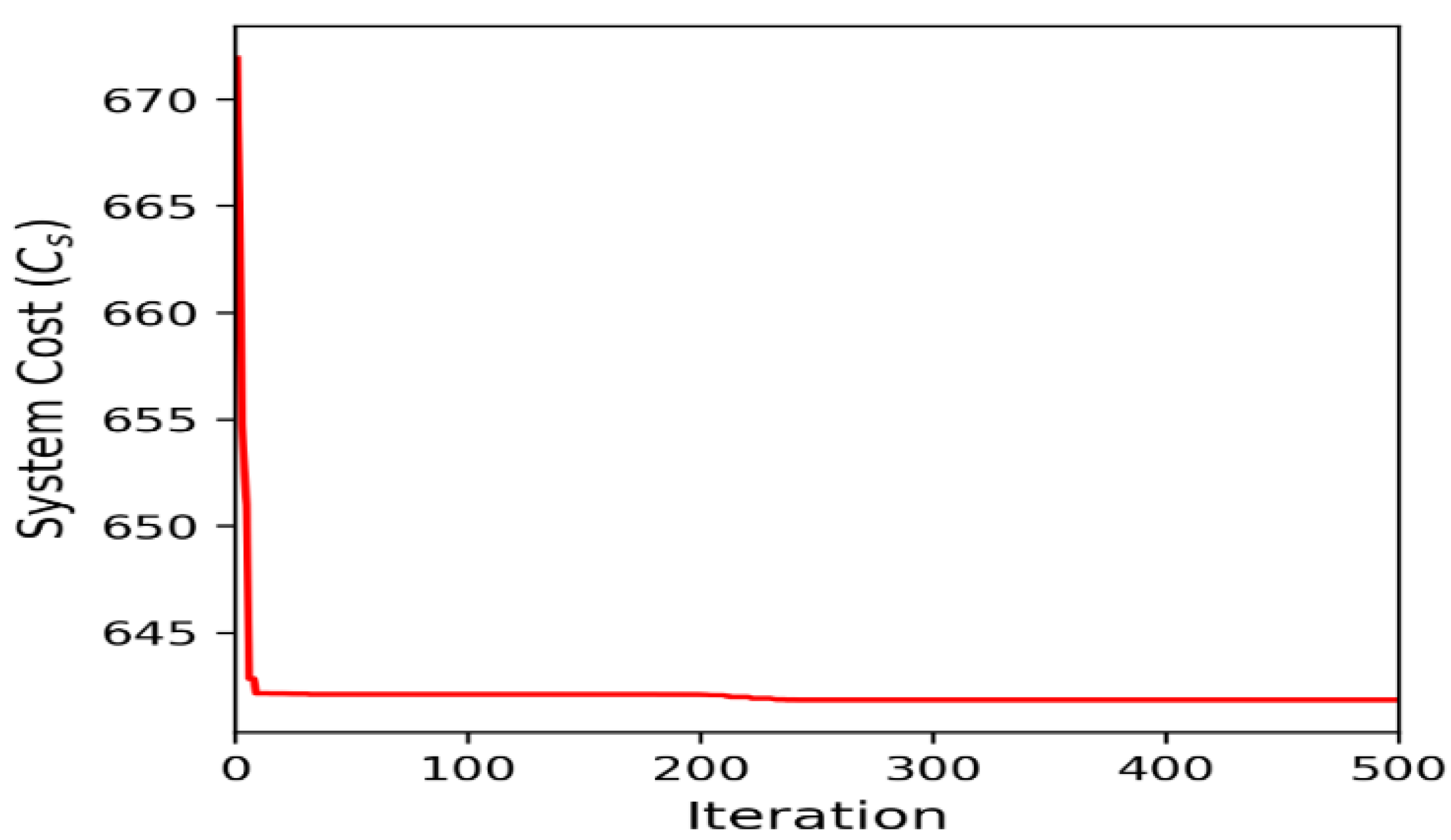
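The CBS model can be sketched as follows. The text does not state either of the two formulas used, so both are assumptions taken from the standard CBS benchmark in the reliability-optimisation literature:

- the cost C(r) = Σ exp(0.0003/(1 − r_i)) over the five components;
- the bridge reliability polynomial, built from minimal path sets {1,4}, {2,5}, {1,3,5} and {2,3,4}, with component 3 as the bridge.

They do reproduce the MWHO solution reported for CBS: a cost of 5.0199184060 at Rsys = 0.99.

```python
import math
from typing import Sequence


def cbs_cost(r: Sequence[float]) -> float:
    """Assumed CBS cost: sum of exp(0.0003 / (1 - r_i)) over five components."""
    return sum(math.exp(0.0003 / (1.0 - ri)) for ri in r)


def cbs_reliability(r: Sequence[float]) -> float:
    """Bridge reliability by inclusion-exclusion over the minimal path sets
    {1,4}, {2,5}, {1,3,5}, {2,3,4} (component 3 is the bridge)."""
    r1, r2, r3, r4, r5 = r
    return (r1 * r4 + r2 * r5 + r1 * r3 * r5 + r2 * r3 * r4
            - r1 * r2 * r3 * r4 - r1 * r2 * r3 * r5 - r1 * r2 * r4 * r5
            - r1 * r3 * r4 * r5 - r2 * r3 * r4 * r5
            + 2.0 * r1 * r2 * r3 * r4 * r5)
```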

3.3. SROP 2: Life Support System in Space Capsule (LSSSC)
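The sketch below uses the formulation commonly cited for LSSSC. The objective is to minimise C = 2K1 r1^α + 2K2 r2^α + K3 r3^α + 2K4 r4^α, with K = (100, 100, 200, 150) and α = 0.6. The constraints are 0.5 ≤ r_i ≤ 1 and Rsys = 1 − r3[(1 − r1)(1 − r4)]² − (1 − r3)[1 − r2(1 − (1 − r1)(1 − r4))]² ≥ 0.9. These constants are assumptions from the benchmark literature and are not stated in this text. They do reproduce the MWHO optimum of 641.8235623261 at r = (0.5, 0.8389201009, 0.5, 0.5).

```python
from typing import Sequence

K = (100.0, 100.0, 200.0, 150.0)   # assumed cost coefficients K1..K4
MULT = (2.0, 2.0, 1.0, 2.0)        # assumed multipliers on each term
ALPHA = 0.6                        # assumed exponent


def lsssc_cost(r: Sequence[float]) -> float:
    return sum(m * k * ri ** ALPHA for m, k, ri in zip(MULT, K, r))


def lsssc_reliability(r: Sequence[float]) -> float:
    r1, r2, r3, r4 = r
    q14 = (1.0 - r1) * (1.0 - r4)  # probability that components 1 and 4 both fail
    return 1.0 - r3 * q14 ** 2 - (1.0 - r3) * (1.0 - r2 * (1.0 - q14)) ** 2
```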

3.4. Engineering Optimization Problem (EOP): Pressure Vessel Design (PVD)
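The pressure vessel design problem is usually stated in four variables: shell thickness Ts = x1, head thickness Th = x2, inner radius R = x3 and cylinder length L = x4. The sketch below assumes that standard cost function and its four g(x) ≤ 0 constraints, with variable bounds omitted. Under these assumptions, the MWHO solution (0.778168641, 0.384649163, 40.31961872, 200) gives a cost of about 5885.3328. At that point the first three constraints are zero up to rounding and the length constraint is slack.

```python
import math
from typing import List, Sequence


def pvd_cost(x: Sequence[float]) -> float:
    ts, th, r, l = x
    return (0.6224 * ts * r * l + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * l + 19.84 * ts ** 2 * r)


def pvd_constraints(x: Sequence[float]) -> List[float]:
    """Constraint values g1..g4; the design is feasible when all are <= 0."""
    ts, th, r, l = x
    return [
        -ts + 0.0193 * r,                                   # shell thickness
        -th + 0.00954 * r,                                  # head thickness
        -math.pi * r ** 2 * l - (4.0 / 3.0) * math.pi * r ** 3 + 1296000.0,  # volume
        l - 240.0,                                          # maximum length
    ]
```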

4. Results and Discussion

5. Conclusions and Future Scope

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Ebeling, C.E. An Introduction to Reliability and Maintainability Engineering; McGraw Hill: New York, NY, USA, 1997.

2. Kumar, A.; Ram, M.; Pant, S.; Kumar, A. Industrial system performance under multistate failures with standby mode. In Modeling and Simulation in Industrial Engineering; Springer: Cham, Switzerland, 2018; pp. 85–100.

3. Yazdi, M.; Mohammadpour, J.; Li, H.; Huang, H.Z.; Zarei, E.; Pirbalouti, R.G.; Adumene, S. Fault tree analysis improvements: A bibliometric analysis and literature review. Qual. Reliab. Eng. Int. 2023, Early View.

4. Li, H.; Yazdi, M.; Huang, H.Z.; Huang, C.G.; Peng, W.; Nedjati, A.; Adesina, K.A. A fuzzy rough copula Bayesian network model for solving complex hospital service quality assessment. Complex Intell. Syst. 2023, 1–27.

5. Pant, S.; Kumar, A.; Ram, M. Reliability optimization: A particle swarm approach. In Advances in Reliability and System Engineering; Springer: Cham, Switzerland, 2017; pp. 163–187.

6. Kumar, A.; Pant, S.; Ram, M.; Yadav, O. (Eds.) Meta-Heuristic Optimization Techniques: Applications in Engineering; Walter de Gruyter GmbH & Co KG: Berlin, Germany, 2022; Volume 10.

7. Coit, D.W.; Smith, A.E. Reliability optimization of series-parallel systems using a genetic algorithm. IEEE Trans. Reliab. 1996, 45, 254–260.

8. Liang, Y.-C.; Smith, A.E. An ant colony optimization algorithm for the redundancy allocation problem (RAP). IEEE Trans. Reliab. 2004, 53, 417–423.

9. Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

10. Modibbo, U.M.; Arshad, M.; Abdalghani, O.; Ali, I. Optimization and estimation in system reliability allocation problem. Reliab. Eng. Syst. Saf. 2021, 212, 107620.

11. Ouzineb, M.; Nourelfath, M.; Gendreau, M. Tabu search for the redundancy allocation problem of homogenous series–parallel multi-state systems. Reliab. Eng. Syst. Saf. 2008, 93, 1257–1272.

12. Beji, N.; Jarboui, B.; Eddaly, M.; Chabchoub, H. A hybrid particle swarm optimization algorithm for the redundancy allocation problem. J. Comput. Sci. 2010, 1, 159–167.

13. Kumar, A.; Pant, S.; Ram, M. System reliability optimization using gray wolf optimizer algorithm. Qual. Reliab. Eng. Int. 2017, 33, 1327–1335.

14. Hsieh, Y.-C.; You, P.-S. An effective immune based two-phase approach for the optimal reliability–redundancy allocation problem. Appl. Math. Comput. 2011, 218, 1297–1307.

15. Wu, P.; Gao, L.; Zou, D.; Li, S. An improved particle swarm optimization algorithm for reliability problems. ISA Trans. 2011, 50, 71–81.

16. Zou, D.; Gao, L.; Li, S.; Wu, J. An effective global harmony search algorithm for reliability problems. Expert Syst. Appl. 2011, 38, 4642–4648.

17. Hsieh, T.-J.; Yeh, W.-C. Penalty guided bees search for redundancy allocation problems with a mix of components in series–parallel systems. Comput. Oper. Res. 2012, 39, 2688–2704.

18. Lins, I.D.; Droguett, E.L. Redundancy allocation problems considering systems with imperfect repairs using multi-objective genetic algorithms and discrete event simulation. Simul. Model. Pract. Theory 2011, 19, 362–381.

19. Wang, L.; Li, L.-P. A coevolutionary differential evolution with harmony search for reliability–redundancy optimization. Expert Syst. Appl. 2012, 39, 5271–5278.

20. Wang, Y.; Li, L. Heterogeneous redundancy allocation for series-parallel multi-state systems using hybrid particle swarm optimization and local search. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2012, 42, 464–474.

21. Pourdarvish, A.; Ramezani, Z. Cold standby redundancy allocation in a multi-level series system by memetic algorithm. Int. J. Reliab. Qual. Saf. Eng. 2013, 20, 1340007.

22. Valian, E.; Valian, E. A cuckoo search algorithm by Lévy flights for solving reliability redundancy allocation problems. Eng. Optim. 2013, 45, 1273–1286.

23. Valian, E.; Tavakoli, S.; Mohanna, S.; Haghi, A. Improved cuckoo search for reliability optimization problems. Comput. Ind. Eng. 2013, 64, 459–468.

24. Afonso, L.D.; Mariani, V.C.; dos Santos Coelho, L. Modified imperialist competitive algorithm based on attraction and repulsion concepts for reliability-redundancy optimization. Expert Syst. Appl. 2013, 40, 3794–3802.

25. Ardakan, M.A.; Hamadani, A.Z. Reliability optimization of series–parallel systems with mixed redundancy strategy in subsystems. Reliab. Eng. Syst. Saf. 2014, 130, 132–139.

26. Yeh, W.-C. Orthogonal simplified swarm optimization for the series–parallel redundancy allocation problem with a mix of components. Knowl. Based Syst. 2014, 64, 1–12.

27. Kumar, A.; Negi, G.; Pant, S.; Ram, M.; Dimri, S.C. Availability-Cost Optimization of Butter Oil Processing System by Using Nature Inspired Optimization Algorithms. Reliab. Theory Appl. 2021, 64, 188–200.

28. Huang, C.-L. A particle-based simplified swarm optimization algorithm for reliability redundancy allocation problems. Reliab. Eng. Syst. Saf. 2015, 142, 221–230.

29. He, Q.; Hu, X.; Ren, H.; Zhang, H. A novel artificial fish swarm algorithm for solving large-scale reliability–redundancy application problem. ISA Trans. 2015, 59, 105–113.

30. Ardakan, M.A.; Hamadani, A.Z.; Alinaghian, M. Optimizing bi-objective redundancy allocation problem with a mixed redundancy strategy. ISA Trans. 2015, 55, 116–128.

31. Zhuang, J.; Li, X. Allocating redundancies to k-out-of-n systems with independent and heterogeneous components. Commun. Stat.-Theory Methods 2015, 44, 5109–5119.

32. Garg, H. An approach for solving constrained reliability-redundancy allocation problems using cuckoo search algorithm. Beni-Suef Univ. J. Basic Appl. Sci. 2015, 4, 14–25.

33. Mellal, M.A.; Zio, E. A penalty guided stochastic fractal search approach for system reliability optimization. Reliab. Eng. Syst. Saf. 2016, 152, 213–227.

34. Abouei Ardakan, M.; Sima, M.; Zeinal Hamadani, A.; Coit, D.W. A novel strategy for redundant components in reliability-redundancy allocation problems. IIE Trans. 2016, 48, 1043–1057.

35. Gholinezhad, H.; Hamadani, A.Z. A new model for the redundancy allocation problem with component mixing and mixed redundancy strategy. Reliab. Eng. Syst. Saf. 2017, 164, 66–73.

36. Kim, H.; Kim, P. Reliability models for a nonrepairable system with heterogeneous components having a phase-type time-to-failure distribution. Reliab. Eng. Syst. Saf. 2017, 159, 37–46.

37. Kim, H. Maximization of system reliability with the consideration of component sequencing. Reliab. Eng. Syst. Saf. 2018, 170, 64–72.

38. Garg, H. A hybrid GSA-GA algorithm for constrained optimization problems. Inf. Sci. 2019, 478, 499–523.

39. Al-Azzoni, I.; Iqbal, S. Meta-heuristics for solving the software component allocation problem. IEEE Access 2020, 8, 153067–153076.

40. Kumar, A.; Pant, S.; Ram, M. Gray wolf optimizer approach to the reliability-cost optimization of residual heat removal system of a nuclear power plant safety system. Qual. Reliab. Eng. Int. 2019, 35, 2228–2239.

41. Negi, G.; Kumar, A.; Pant, S.; Ram, M. Optimization of complex system reliability using hybrid grey wolf optimizer. Decis. Mak. Appl. Manag. Eng. 2021, 4, 241–256.

42. Naruei, I.; Keynia, F. Wild horse optimizer: A new meta-heuristic algorithm for solving engineering optimization problems. Eng. Comput. 2021, 38, 3025–3056.

43. Li, H.; Soares, C.G.; Huang, H.Z. Reliability analysis of a floating offshore wind turbine using Bayesian Networks. Ocean Eng. 2020, 217, 107827.

44. Pant, S.; Garg, P.; Kumar, A.; Ram, M.; Kumar, A.; Sharma, H.K.; Klochkov, Y. AHP-based multi-criteria decision-making approach for monitoring health management practices in smart healthcare system. Int. J. Syst. Assur. Eng. Manag. 2023, 1–12.

45. Li, H.; Díaz, H.; Soares, C.G. A failure analysis of floating offshore wind turbines using AHP-FMEA methodology. Ocean Eng. 2021, 234, 109261.

| Literature Reviewed | SROP Type | Optimization Technique Used |
|---|---|---|
| Modibbo et al. [10] | ReAP | Simulation algorithm (SA) |
| Ouzineb et al. [11] | RAP | Tabu search (TS) |
| Beji et al. [12] | RAP | Hybrid particle swarm optimizer (HPSO) |
| Kumar et al. [13] | ReAP | Gray wolf optimizer (GWO) |
| Hsieh and You [14] | RRAP | Artificial immune search algorithm (AISA) |
| Wu et al. [15] | RRAP | Improved PSO (IPSO) |
| Zou et al. [16] | RRAP | PSO and harmony search algorithm (HSA) |
| Hsieh and Yeh [17] | RAP | Bee colony algorithm (BCA) |
| Lins and Droguett [18] | RAP | Genetic algorithm (GA) |
| Wang and Li [19] | RRAP | HSA |
| Wang and Li [20] | RAP | HPSO and local search algorithm (LSA) |
| Pourdarvish and Ramezani [21] | RAP | Memetic algorithm (MA) |
| Valian and Valian [22] | RRAP | Cuckoo search algorithm (CSA) |
| Valian et al. [23] | RAP | Improved CSA (ICSA) |
| Afonso et al. [24] | RRAP | Modified imperialist competitive algorithm (MICA) |
| Ardakan and Hamadani [25] | RAP | GA |
| Yeh [26] | RAP | Orthogonal simplified swarm optimization (OSSO) |
| Kumar et al. [27] | ReAP | CSA and GWO |
| Huang [28] | RRAP | Particle-based SSO |
| He et al. [29] | RRAP | Novel artificial fish swarm optimization (NAFSO) |
| Ardakan et al. [30] | RRAP | Non-dominated sorting GA II (NSGA-II) |
| Zhuang and Li [31] | RAP | Stochastic order technique (SOT) |
| Garg [32] | RRAP | CSA |
| Mellal and Zio [33] | RAP | Penalty-guided stochastic fractal search algorithm (PSFSA) |
| Abouei et al. [34] | RRAP | Modified GA (MGA) |
| Gholinezhad and Hamadani [35] | RAP | GA |
| Kim and Kim [36] | RAP | Continuous-time Markov chain technique (CMCT) |
| Kim [37] | RAP | Matrix-analytic technique (MAT) |
| Garg [38] | ReAP | Hybrid gravitational search algorithm and GA (GSA-GA) |
| Al-Azzoni and Iqbal [39] | ReAP | GA and ACO |
| Kumar et al. [40] | ReAP | GWO |
| Negi et al. [41] | ReAP | Hybrid GWO (HGWO) |

MWHO results for SROP 1 (CBS), Runs 1–10.

| Run | Cost | r1 | r2 | r3 | r4 | r5 | Rsys. | Minimum (Best) | Maximum (Worst) | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Run 1 | 5.0199282842 | 0.9360195664 | 0.9337034472 | 0.7981921280 | 0.9371237883 | 0.9320169548 | 0.9900000000 | 5.0199282842 | 5.0309085098 | 5.0201892919 | 0.0013487858 |
| Run 2 | 5.0199280124 | 0.9355263294 | 0.9334803358 | 0.7822209813 | 0.9343527087 | 0.9371561818 | 0.9900000000 | 5.0199280124 | 5.0230545661 | 5.0200296731 | 0.0003101977 |
| Run 3 | 5.0199184060 | 0.9349331779 | 0.9348248186 | 0.7913341473 | 0.9353969594 | 0.9344941166 | 0.9900000000 | 5.0199184060 | 5.0231438177 | 5.0200024039 | 0.0003699874 |
| Run 4 | 5.0199242231 | 0.9340927010 | 0.9339336516 | 0.7986320543 | 0.9343808422 | 0.9365264065 | 0.9900000000 | 5.0199242231 | 5.0274058684 | 5.0202714576 | 0.0008451419 |
| Run 5 | 5.0199278748 | 0.9340675052 | 0.9347335655 | 0.8031105659 | 0.9327614533 | 0.9368741416 | 0.9900000000 | 5.0199278748 | 5.0269632952 | 5.0200872892 | 0.0009139384 |
| Run 6 | 5.0199261851 | 0.9327023268 | 0.9363847777 | 0.7910815478 | 0.9334170090 | 0.9370704737 | 0.9900000000 | 5.0199261851 | 5.0256903672 | 5.0213169039 | 0.0023479312 |
| Run 7 | 5.0199270145 | 0.9345176482 | 0.9339328057 | 0.7935430577 | 0.9333222283 | 0.9376149951 | 0.9900000000 | 5.0199270145 | 5.0275383672 | 5.0200320369 | 0.0007645991 |
| Run 8 | 5.0199260865 | 0.9336921006 | 0.9370912052 | 0.7980113819 | 0.9331846134 | 0.9349835171 | 0.9900000000 | 5.0199260865 | 5.0281582787 | 5.0202224804 | 0.0008470497 |
| Run 9 | 5.0199261773 | 0.9367178093 | 0.9337026258 | 0.7966995010 | 0.9361658666 | 0.9324564062 | 0.9900000000 | 5.0199261773 | 5.0294137197 | 5.0203531091 | 0.0014706063 |
| Run 10 | 5.0199194160 | 0.9351343121 | 0.9349204035 | 0.7930024286 | 0.9356598048 | 0.9337626176 | 0.9900000000 | 5.0199194160 | 5.0306942338 | 5.0204067134 | 0.0013594992 |

MWHO results for SROP 2 (LSSSC), Runs 1–10.

| Run | Cost | r1 | r2 | r3 | r4 | Rsys. | Minimum (Best) | Maximum (Worst) | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|---|---|
| Run 1 | 641.8235682227 | 0.5000000000 | 0.8389200456 | 0.5000000000 | 0.5000000548 | 0.9000000000 | 641.8235682227 | 671.0551405545 | 644.2835421315 | 5.5640172063 |
| Run 2 | 641.8235623261 | 0.5000000000 | 0.8389201009 | 0.5000000000 | 0.5000000000 | 0.9000000000 | 641.8235623261 | 671.9281071267 | 642.1114292308 | 1.8683118611 |
| Run 3 | 641.8235623261 | 0.5000000000 | 0.8389201009 | 0.5000000000 | 0.5000000000 | 0.9000000000 | 641.8235623261 | 681.5619277020 | 645.2677115347 | 6.3491794550 |
| Run 4 | 641.8235623263 | 0.5000000000 | 0.8389201009 | 0.5000000000 | 0.5000000000 | 0.9000000000 | 641.8235623263 | 667.5127214183 | 645.5943686963 | 2.2888766297 |
| Run 5 | 641.8235623262 | 0.5000000000 | 0.8389201009 | 0.5000000000 | 0.5000000000 | 0.9000000000 | 641.8235623262 | 681.9891680944 | 648.0256628239 | 3.4253433355 |
| Run 6 | 641.8354495645 | 0.5004169091 | 0.8384997756 | 0.5000000000 | 0.5000000000 | 0.9000000000 | 641.8354495645 | 673.3826280527 | 642.1138823691 | 2.5553654839 |
| Run 7 | 641.8789866199 | 0.5019360000 | 0.8369721277 | 0.5000000000 | 0.5000000000 | 0.9000000000 | 641.8789866199 | 689.9675908673 | 648.2237515217 | 4.1702652678 |
| Run 8 | 641.8235623262 | 0.5000000000 | 0.8389201009 | 0.5000000000 | 0.5000000000 | 0.9000000000 | 641.8235623262 | 689.8633502043 | 647.1428418598 | 4.5464971105 |
| Run 9 | 641.8235624518 | 0.5000000000 | 0.8389201018 | 0.5000000000 | 0.5000000000 | 0.9000000003 | 641.8235624518 | 687.8662298184 | 650.8092023998 | 5.4946777022 |
| Run 10 | 641.8449422722 | 0.5007491730 | 0.8381651189 | 0.5000000000 | 0.5000000000 | 0.9000000000 | 641.8449422722 | 657.8461365447 | 652.7289293272 | 7.3862098280 |

MWHO results for the EOP (PVD), Runs 1–10.

| Run | Cost | x1 (Ts) | x2 (Th) | x3 (R) | x4 (L) | Minimum (Best) | Maximum (Worst) | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|---|
| Run 1 | 5885.332774 | 0.778168641 | 0.384649163 | 40.31961872 | 200 | 5885.332774 | 188,242.5454 | 6005.540996 | 2557.519377 |
| Run 2 | 5923.354381 | 0.799805064 | 0.395344057 | 41.44067687 | 184.9614972 | 5923.354381 | 213,759.7422 | 6033.154867 | 3775.860149 |
| Run 3 | 6003.185713 | 0.841774819 | 0.416089729 | 43.61527557 | 158.7055172 | 6003.185713 | 554,447.4169 | 6185.216133 | 6475.883025 |
| Run 4 | 5885.332774 | 0.778168641 | 0.384649163 | 40.31961872 | 200 | 5885.332774 | 601,327.7629 | 6124.196893 | 9227.022274 |
| Run 5 | 5885.332774 | 0.778168641 | 0.384649163 | 40.31961872 | 200 | 5885.332774 | 171,610.9702 | 6031.333811 | 2601.655011 |
| Run 6 | 5887.683154 | 0.77954125 | 0.385327644 | 40.39073833 | 199.0123263 | 5887.683154 | 84,546.53112 | 5972.584245 | 2096.40849 |
| Run 7 | 5955.328956 | 0.817134416 | 0.403909965 | 42.33857078 | 173.6836087 | 5955.328956 | 119,618.6572 | 6021.663479 | 1934.093259 |
| Run 8 | 5885.332774 | 0.778168641 | 0.384649163 | 40.31961872 | 200 | 5885.332774 | 204,567.4258 | 6013.034189 | 3430.863708 |
| Run 9 | 5900.162061 | 0.786749181 | 0.388890528 | 40.76420625 | 193.9022357 | 5900.162061 | 344,169.3199 | 6096.501464 | 4300.237632 |
| Run 10 | 5885.332774 | 0.778168641 | 0.384649163 | 40.31961872 | 200 | 5885.332774 | 131,447.596 | 5991.645744 | 3183.610924 |

Comparison of MWHO with other algorithms for SROP 1 (CBS).

| Algorithm | Cost | r1 | r2 | r3 | r4 | r5 | Rsys. | Function evaluations (FE) |
|---|---|---|---|---|---|---|---|---|
| MWHO | 5.0199184060 | 0.9349331779 | 0.9348248186 | 0.7913341473 | 0.9353969594 | 0.9344941166 | 0.9900000000 | 40,000 |
| PSO | 5.019918 | 0.935028 | 0.791948 | 0.935005 | 0.934735 | 0.934821 | 0.990000 | 120,000 |
| GWO | 5.019900 | 0.934100 | 0.936350 | 0.791370 | 0.933880 | 0.935650 | 0.990028 | 9000 |
| CSA | 5.019980 | 0.935546 | 0.788534 | 0.941231 | 0.927708 | 0.934900 | 0.990000 | 60,000 |
| ACO | 5.019923 | 0.935073 | 0.798365 | 0.935804 | 0.934223 | 0.933869 | 0.990001 | 80,160 |

Comparison of MWHO with other algorithms for SROP 2 (LSSSC).

| Algorithm | Cost | r1 | r2 | r3 | r4 | Rsys. | Function evaluations (FE) |
|---|---|---|---|---|---|---|---|
| MWHO | 641.8235623261 | 0.5000000000 | 0.8389201009 | 0.5000000000 | 0.5000000000 | 0.9000000000 | 20,000 |
| PSO | 641.823562 | 0.838920 | 0.500000 | 0.500000 | 0.500000 | 0.900000 | 2040 |
| GWO | 641.823600 | 0.500000 | 0.838920 | 0.500000 | 0.500000 | 0.900000 | 50,000 |
| CSA | 641.823563 | 0.838920 | 0.500000 | 0.500000 | 0.500000 | 0.900000 | 15,000 |
| ACO | 641.823562 | 0.838920 | 0.500000 | 0.500000 | 0.500000 | 0.900000 | 20,100 |

Comparison of MWHO with other algorithms for the EOP (PVD).

| Algorithm | Cost | x1 (Ts) | x2 (Th) | x3 (R) | x4 (L) | Function evaluations (FE) |
|---|---|---|---|---|---|---|
| MWHO | 5885.332774 | 0.778168641 | 0.384649163 | 40.31961872 | 200 | 4000 |
| PSO | 6061.0777 | 0.812500 | 0.437500 | 42.091266 | 176.746500 | - |
| GWO | 6051.5639 | 0.812500 | 0.434500 | 42.089181 | 176.758731 | - |
| GA | 6288.7445 | 0.812500 | 0.434500 | 40.323900 | 200.000000 | - |
| ACO | 6059.0888 | 0.812500 | 0.437500 | 42.103624 | 176.572656 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kumar, A.; Pant, S.; Singh, M.K.; Chaube, S.; Ram, M.; Kumar, A. Modified Wild Horse Optimizer for Constrained System Reliability Optimization. Axioms 2023, 12, 693. https://doi.org/10.3390/axioms12070693


Chicago/Turabian Style: Kumar, Anuj, Sangeeta Pant, Manoj K. Singh, Shshank Chaube, Mangey Ram, and Akshay Kumar. 2023. "Modified Wild Horse Optimizer for Constrained System Reliability Optimization" Axioms 12, no. 7: 693. https://doi.org/10.3390/axioms12070693
