Abstract

Nonlinear problems, which often arise in various scientific and engineering disciplines, typically involve nonlinear equations or functions with multiple solutions. Analytical solutions to these problems are often impossible to obtain, necessitating the use of numerical techniques. This research proposes an efficient and stable Caputo-type inverse numerical fractional scheme for simultaneously approximating all roots of nonlinear equations, with a convergence order of . The scheme is applied to various nonlinear problems, utilizing dynamical analysis to determine efficient initial values for a single root-finding Caputo-type fractional scheme, which is further employed in inverse fractional parallel schemes to accelerate convergence rates. Several sets of random initial vectors demonstrate the global convergence behavior of the proposed method. The newly developed scheme outperforms existing methods in terms of accuracy, consistency, validation, computational CPU time, residual error, and stability.

Keywords:

nonlinear problems; convergence theorem; local error; stability analysis; dynamical planes

MSC:

65H04; 65H05; 65H10; 65H17

1. Introduction

Solving nonlinear equations is a fundamental problem in science and engineering, with a history dating back to the early days of modern mathematics. These equations, characterized by non-trivial relationships between variables, are crucial for simulating and understanding complex natural phenomena such as biological interactions, turbulent fluid dynamics, and chaotic systems [1,2,3]. The importance of solving nonlinear equations lies in their ability to provide precise descriptions and predictions of these systems, thereby leading to significant advances across various scientific and engineering disciplines [4]. Recent developments in computational methods and the increasing complexity of modern engineering problems have heightened the need for efficient and accurate solutions to nonlinear equations. For instance, in physics, solving Maxwell’s equations for electromagnetism [5] and the Navier–Stokes equations for fluid dynamics [6] is essential for understanding and predicting electromagnetic wave propagation [7] and turbulent flows [8]. These solutions are critical for designing advanced technologies in telecommunications, aerospace, and renewable energy [9,10]. In engineering, nonlinear equations are used to develop control systems that optimize performance and ensure stability in sectors such as aerospace, automotive, and manufacturing. The design of structures to withstand dynamic loads and the creation of sophisticated algorithms for digital signal processing also rely heavily on these equations [11]. In biology and medicine, nonlinear models help replicate the behavior of complex biological systems [12], enhance our understanding of brain networks [13], and improve disease prediction models [14]. Despite their significance, solving nonlinear equations remains a difficult task due to the equations’ inherent complexity and the need for high computational resources. 
Recent progress in numerical techniques, such as adaptive methods, machine learning algorithms, and parallel computing, offers promising routes for tackling these challenges.

In this paper, we address the solution of fractional differential equations of the form

where  is a free parameter and  . Differential equations of both integer and fractional orders [15] are crucial for simulating phenomena in physical science and engineering that require precise solutions [16]. Fractional-order differential equations, for example, effectively describe the memory and hereditary characteristics of viscoelastic materials and anomalous diffusion processes [17]. Accurate solutions to these equations are critical for understanding and designing systems with complex behaviors. Solving fractional nonlinear problems

requires advanced iterative numerical methods to obtain approximate solutions; see, e.g., [18,19,20]. The intrinsic non-locality of this type of model, in which the derivative at a point depends on the entire history of the function, makes such problems notoriously challenging to solve both analytically and numerically. Exact techniques [21], analytical techniques [22,23,24], and direct numerical methods, such as explicit single-step methods [25], multi-step methods [26], and hybrid block methods [27], have significant limitations, including high computational costs, stability issues, and sensitivity to small errors.

Numerical techniques for solving such equations fall into two groups: those that find one solution at a time and those that find all solutions simultaneously. Well-known methods for finding simple roots include the Newton method [28], the Halley method [29], the Chun method [30], the Ostrowski method [31], the King method [32], the Shams method [33], the Cordero method [34], the Mir method [35], the Samima method [36], and the Neta method [37]. For multi-step methods, see, for example, Refs. [38,39] and references therein. Recent studies by Torres-Hernandez et al. [40], Akgül et al. [41], Gajori et al. [42], and Kumar et al. [43] describe fractional versions of single root-finding techniques based on various fractional derivatives. These techniques are versatile and often straightforward to implement, but they have several significant drawbacks. Although they converge rapidly when the initial guess is close to a root, they can diverge if the guess is far from the solution or if the function is complicated. They are sensitive to the initial guesses and require a precise estimate for each root, which makes them time-consuming and computationally intensive. Evaluating both the function and its derivative increases computational costs, especially for complicated functions. Additionally, distinguishing between real and complex roots can be challenging without modifications. In contrast, parallel root-finding methods offer greater stability, consistency, and global convergence than single root-finding techniques. They can be implemented on parallel computer architectures, using multiple processes to approximate all solutions to (2) simultaneously.

Among parallel numerical schemes, the Weierstrass–Durand–Kerner approach [44] is particularly attractive from a computational standpoint. This method is given by

where

is Weierstrass’ correction. Method (3) has local quadratic convergence. Nedzibov et al. [45] present the modified Weierstrass method,

also known as the inverse Weierstrass method, which has quadratic convergence. Inverse parallel schemes can outperform the classical simultaneous methods because they handle nonlinear equations efficiently and exploit parallel processing to accelerate convergence, which reduces computing time and improves accuracy. This strategy is especially useful for large-scale or complicated systems, where conventional methods may be too slow or ineffective. Shams et al. [46] presented the following inverse parallel scheme:

Inverse parallel scheme (6) has local quadratic convergence.
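The classical Weierstrass (Durand–Kerner) iteration (3) can be sketched in a few lines of Python for a polynomial with leading coefficient a_n, using the correction W_i = p(x_i) / (a_n ∏_{j≠i}(x_i − x_j)). This is a minimal sketch of the classical method only, not of the inverse schemes discussed above; the function names, tolerance, starting points, and test polynomial are our own illustrative choices. Every correction is computed from the previous iterate (a total-step update), which is what makes the scheme naturally parallel.

```python
def poly_eval(coeffs, z):
    """Horner evaluation of p(z); coeffs = [a_n, ..., a_1, a_0] (highest degree first)."""
    acc = 0j
    for c in coeffs:
        acc = acc * z + c
    return acc


def weierstrass(coeffs, z0, tol=1e-12, max_iter=200):
    """Weierstrass / Durand-Kerner iteration for all roots of p simultaneously.

    Returns (approximations, iterations_used).
    """
    lead = coeffs[0]
    z = list(z0)
    n = len(z)
    for k in range(1, max_iter + 1):
        z_new = []
        for i in range(n):
            # Weierstrass correction W_i = p(z_i) / (a_n * prod_{j != i} (z_i - z_j))
            denom = lead
            for j in range(n):
                if j != i:
                    denom *= z[i] - z[j]
            z_new.append(z[i] - poly_eval(coeffs, z[i]) / denom)
        # Total-step update: each z_new[i] used only the old vector z, so the
        # n corrections are independent and could be computed in parallel.
        step = max(abs(a - b) for a, b in zip(z_new, z))
        z = z_new
        if step < tol:
            return z, k
    return z, max_iter


# p(z) = (z - 1)(z - 2)(z - 3) = z^3 - 6z^2 + 11z - 6, with distinct complex starts
roots, iterations = weierstrass([1, -6, 11, -6], [(0.4 + 0.9j) ** (k + 1) for k in range(3)])
```

Non-real, pairwise distinct starting values are used because the correction divides by the differences z_i − z_j.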

In 1967, Ehrlich [47] introduced a third-order convergent simultaneous method given by

where  . Using  as a correction in (4), Petkovic et al. [48] increased the convergence order from three to six:

where , and .
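Ehrlich's third-order method is commonly written as x_i ← x_i − N_i / (1 − N_i Σ_{j≠i} 1/(x_i − x_j)), where N_i = p(x_i)/p'(x_i) is the Newton correction. The Python sketch below assumes that standard form; it does not implement the sixth-order acceleration of Petkovic et al., and the names and test polynomial are illustrative.

```python
def poly_and_derivative(coeffs, z):
    """Return (p(z), p'(z)) by Horner's rule; coeffs are highest degree first."""
    p, dp = 0j, 0j
    for c in coeffs:
        dp = dp * z + p
        p = p * z + c
    return p, dp


def ehrlich(coeffs, z0, tol=1e-12, max_iter=100):
    """Ehrlich (Ehrlich-Aberth) simultaneous iteration; returns (approximations, iterations)."""
    z = list(z0)
    n = len(z)
    for k in range(1, max_iter + 1):
        z_new = []
        for i in range(n):
            p, dp = poly_and_derivative(coeffs, z[i])
            if p == 0:  # z[i] is already an exact root
                z_new.append(z[i])
                continue
            newton = p / dp  # Newton correction N_i
            s = sum(1 / (z[i] - z[j]) for j in range(n) if j != i)
            z_new.append(z[i] - newton / (1 - newton * s))
        step = max(abs(a - b) for a, b in zip(z_new, z))
        z = z_new
        if step < tol:
            return z, k
    return z, max_iter


roots, iterations = ehrlich([1, -6, 11, -6], [(0.4 + 0.9j) ** (k + 1) for k in range(3)])
```

The sum over 1/(x_i − x_j) acts as a repulsion term that keeps different approximations from converging to the same root.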

Unlike other classical fractional derivatives, such as the Riemann–Liouville derivative, the Caputo derivative of a constant function is zero, even when the order  is not a natural number. We therefore review some basic concepts of fractional calculus, as well as the fractional iterative approach for solving nonlinear equations with Caputo-type derivatives.

Definition 1

(Gamma Function). The Gamma function, also known as the generalized factorial function, is defined as follows [49]:

where , , and for

Definition 2

(Caputo Fractional Derivative). For

the Caputo fractional derivative [50] of order ψ is defined as

where is the Gamma function with
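For polynomials, the Caputo derivative with lower limit 0 can be evaluated term by term: for 0 < ψ ≤ 1 and k ≥ 1 it maps x^k to Γ(k+1)/Γ(k+1−ψ) x^{k−ψ}, and it maps constants to 0. The Python sketch below assumes lower limit 0 and 0 < ψ ≤ 1; the function name and the ascending coefficient order are our own conventions. At ψ = 1 it reduces to the ordinary derivative, which the checks use.

```python
import math


def caputo_poly(coeffs, psi, x):
    """Caputo derivative (lower limit 0) of p(x) = sum_k coeffs[k] * x**k, 0 < psi <= 1.

    Uses D^psi x^k = Gamma(k + 1) / Gamma(k + 1 - psi) * x**(k - psi) for k >= 1.
    For negative or complex x the non-integer power uses Python's principal branch.
    """
    if not 0 < psi <= 1:
        raise ValueError("this sketch assumes 0 < psi <= 1")
    total = 0
    for k, c in enumerate(coeffs):
        if k == 0 or c == 0:
            continue  # the Caputo derivative of a constant is zero
        total += c * math.gamma(k + 1) / math.gamma(k + 1 - psi) * x ** (k - psi)
    return total
```

For example, the half-order derivative of x is 2√x/√π, and with ψ = 1 the derivative of x² − 2 at 1.5 is 3.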

Theorem 1.

Suppose for where . Then, the Generalized Taylor Formula [51] is given by

where

and

Consider the Caputo-type Taylor expansion of near as

Taking as a common factor, we have

where

The corresponding Caputo-type derivative of  around  is

These expansions are used in the convergence analysis of the proposed method.

Candelario et al. [52] presented the following Caputo-type fractional variant of the classical Newton method:

where  for any  . The fractional Newton method has order of convergence  and satisfies the error equation

where and and
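The following Python sketch implements the fractional Newton iteration in the form usually attributed to Candelario et al., x_{k+1} = x_k − Γ(ψ+1) g(x_k) / D^ψ g(x_k), for a polynomial with Caputo lower limit 0. The lower limit, test polynomial, and names are assumptions; at ψ = 1 the step is exactly Newton's. The sketch only checks that the iteration settles on the root and does not measure the order ψ + 1, whose derivation relies on expansions around the root rather than around the lower limit used here.

```python
import math


def poly(coeffs, x):
    """p(x) = sum_k coeffs[k] * x**k (ascending coefficients)."""
    return sum(c * x ** k for k, c in enumerate(coeffs))


def caputo_poly(coeffs, psi, x):
    """Caputo derivative of p with lower limit 0, for 0 < psi <= 1 (power rule)."""
    return sum(
        c * math.gamma(k + 1) / math.gamma(k + 1 - psi) * x ** (k - psi)
        for k, c in enumerate(coeffs)
        if k >= 1 and c != 0  # constants have zero Caputo derivative
    )


def fractional_newton(coeffs, psi, x0, tol=1e-12, max_iter=200):
    """x <- x - Gamma(psi + 1) * p(x) / D^psi p(x); returns (x, iterations)."""
    scale = math.gamma(psi + 1)
    x = x0
    for k in range(1, max_iter + 1):
        step = scale * poly(coeffs, x) / caputo_poly(coeffs, psi, x)
        x -= step
        if abs(step) < tol:
            return x, k
    return x, max_iter


x_classic, _ = fractional_newton([-2, 0, 1], 1.0, 1.5)  # psi = 1: Newton's method
x_frac, _ = fractional_newton([-2, 0, 1], 0.9, 1.5)     # psi = 0.9
```

Both runs approach √2 for the test polynomial x² − 2.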

The rest of the paper is organized as follows. Section 2 investigates the construction, convergence, and stability analysis of fractional-order schemes for solving (2). Section 3 presents the development and analysis of a simultaneous method for determining all solutions to nonlinear equations. Section 4 evaluates the efficiency and stability of the proposed approach through numerical results and compares it with existing methods. Finally, Section 5 summarizes the findings and outlines directions for future research.

2. Fractional Scheme Construction and Analysis

Fractional-order iterative methods are effective tools for solving nonlinear equations and can offer faster and more accurate convergence than classical algorithms. Shams et al. [53] proposed the following single-step fractional iterative method:

The order of convergence of the method in (19) is , which satisfies the following error equation:

where  , and  . The Caputo-type fractional version of (19) was proposed in [54]:

where  and  for any  . Method (21) has order of convergence  and satisfies the following error equation:

where , and

In this paper, we focus on the technique described in [55], which offers faster convergence, higher accuracy, better computational efficiency, and greater robustness than other single root-finding methods. We extend this method to fractional derivatives, enabling more precise modeling of systems with memory and non-local effects. The original method is given by

where  . By incorporating the Caputo-type fractional derivative into (23), we propose the following fractional version of the single root-finding method:

where . We abbreviate this method as .

2.1. Convergence Analysis

For the iterative scheme (23), we prove the following theorem to establish its order of convergence.

Theorem 2.

Let

be a continuous function with fractional derivatives of order  for any  and  , containing the exact root ξ of  in the open interval ᘐ. Suppose that  is continuous and nonzero at ξ. Then, for a sufficiently close starting value  , the convergence order of the Caputo-type fractional iterative scheme

is at least , and the error equation is

where ,

,

and

Proof.

Let ξ be a root of g and  . Expanding  and  in Taylor series around  and taking  , we get

and

where , ,

Next,

and

where

Using the generalized binomial theorem where expanding around we have

where

Then,

where

Therefore, using  in the second step, we have

where

Thus

where

Thus

Hence, the theorem is proven. □
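The proof above invokes the generalized binomial theorem, (1 + x)^r = Σ_{k≥0} C(r, k) x^k for |x| < 1 and real r, with C(r, k) = r(r − 1)⋯(r − k + 1)/k!. The short Python check below only illustrates that identity; the names and truncation length are our own choices, and it is not part of the proof.

```python
def gen_binom(r, k):
    """Generalized binomial coefficient C(r, k) = r (r - 1) ... (r - k + 1) / k! for real r."""
    c = 1.0
    for i in range(k):
        c *= (r - i) / (i + 1)
    return c


def binomial_series(r, x, terms=40):
    """Truncated generalized binomial series for (1 + x)**r; requires |x| < 1."""
    if abs(x) >= 1:
        raise ValueError("the series converges only for |x| < 1")
    return sum(gen_binom(r, k) * x ** k for k in range(terms))
```

For small |x|, a handful of terms already reproduces (1 + x)^r to high accuracy. This is why the expansions in the proof can be truncated after the leading error terms.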

2.2. Stability Analysis of the -Scheme

The stability of single root-finding methods for nonlinear equations is crucial for ensuring the reliability and robustness of the iterative solution process [56]. Stability, in this context, refers to a method’s ability to converge to a true root from an initial guess, even when minor perturbations or errors occur in the calculations. Single root-finding approaches converge locally around the root, which makes them effective when the initial guess is sufficiently close to the exact root. However, their stability depends on the nature of the function and on the initial estimate. If the function is poorly behaved or the initial estimate is far from the root, single root-finding methods may diverge or converge to extraneous fixed points unrelated to the actual roots of the nonlinear equation [57]. The stability of single root-finding methods can be evaluated with tools from complex dynamics, which measure how sensitive the limit of the iteration is to changes in the initial guess, together with convergence criteria, which assess how rapidly the method approaches the root. To minimize the impact of computational errors and ensure consistent and reliable root-finding performance, stability is usually achieved by balancing the method’s inherent convergence properties with a careful selection of the initial guess, function parameters, and stopping criteria [58,59]. The following rational map is obtained:

where

For , we have

where  . Thus,  depends on  and on the variable  . Using the Möbius transformation  , we see that  is conjugate to the operator

for  , which is independent of a and b. Therefore,  coincides exactly with:

which has interesting properties [60].
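The operator of the proposed scheme is not reproduced here, but the conjugation step can be illustrated with the classical Newton method (the ψ = 1 case of the fractional Newton iteration). On p(z) = (z − a)(z − b), its operator N satisfies M(N(z)) = M(z)² for the Möbius map M(z) = (z − a)/(z − b), because N(z) − a = (z − a)²/(2z − a − b) and N(z) − b = (z − b)²/(2z − a − b). The Python check below verifies this identity numerically. It is a stand-in example, not the authors' operator, and the sample points are arbitrary.

```python
def newton_quadratic(z, a, b):
    """Newton's map for p(z) = (z - a)(z - b)."""
    return z - (z - a) * (z - b) / (2 * z - a - b)


def mobius(z, a, b):
    """Moebius map sending the root a to 0 and the root b to infinity."""
    return (z - a) / (z - b)


def conjugacy_defect(z, a, b):
    """|M(N(z)) - M(z)^2|, which vanishes up to rounding if N is conjugate to z -> z^2."""
    return abs(mobius(newton_quadratic(z, a, b), a, b) - mobius(z, a, b) ** 2)
```

Because the defect does not depend on a and b, the dynamics of the conjugated operator are independent of the particular quadratic. The analysis above relies on the same property.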

The next proposition examines the fixed points of the rational map, which are essential for understanding the behavior and convergence properties of these schemes.

Proposition 1.

The fixed points of are as follows:

- and are super attracting points.

- is a repelling point.

- The critical points are 0 and 1, which are super attracting and repelling points, respectively, for

Proof.

The fixed points of are determined by solving

Therefore, 0 is a fixed point. Further solving

gives the remaining fixed points. Furthermore, so is also a fixed point. The derivative of is

Thus, the critical points are and . Evaluating the derivative at these points, indicates that 0 is a super attracting point and indicates that 1 is a repelling point. □
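Proposition 1 classifies fixed points by the multiplier |O'(z*)| of the operator: superattracting if it is 0, attracting if it is below 1, and repelling if it is above 1. A minimal numerical sketch of that test follows, using a central-difference derivative; the step size and tolerances are our own choices. It is exercised on stand-in maps such as z ↦ z², not on the operator of the proposed scheme, whose closed form is not reproduced here. Fixed points at infinity require the change of variable w = 1/z and are not handled.

```python
def classify_fixed_point(op, z_star, h=1e-6, tol=1e-6):
    """Classify a finite fixed point z* of the map op by its multiplier |op'(z*)|.

    The derivative is approximated by a central difference with step h.
    """
    if abs(op(z_star) - z_star) > 1e-10:
        raise ValueError("z_star is not a fixed point of op")
    multiplier = abs((op(z_star + h) - op(z_star - h)) / (2 * h))
    if multiplier < tol:
        return "superattracting"
    if multiplier < 1 - tol:
        return "attracting"
    if multiplier <= 1 + tol:
        return "indifferent"
    return "repelling"
```

For z ↦ z², this reproduces the pattern stated in Proposition 1 for the points 0 and 1: 0 is superattracting and 1 is repelling.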

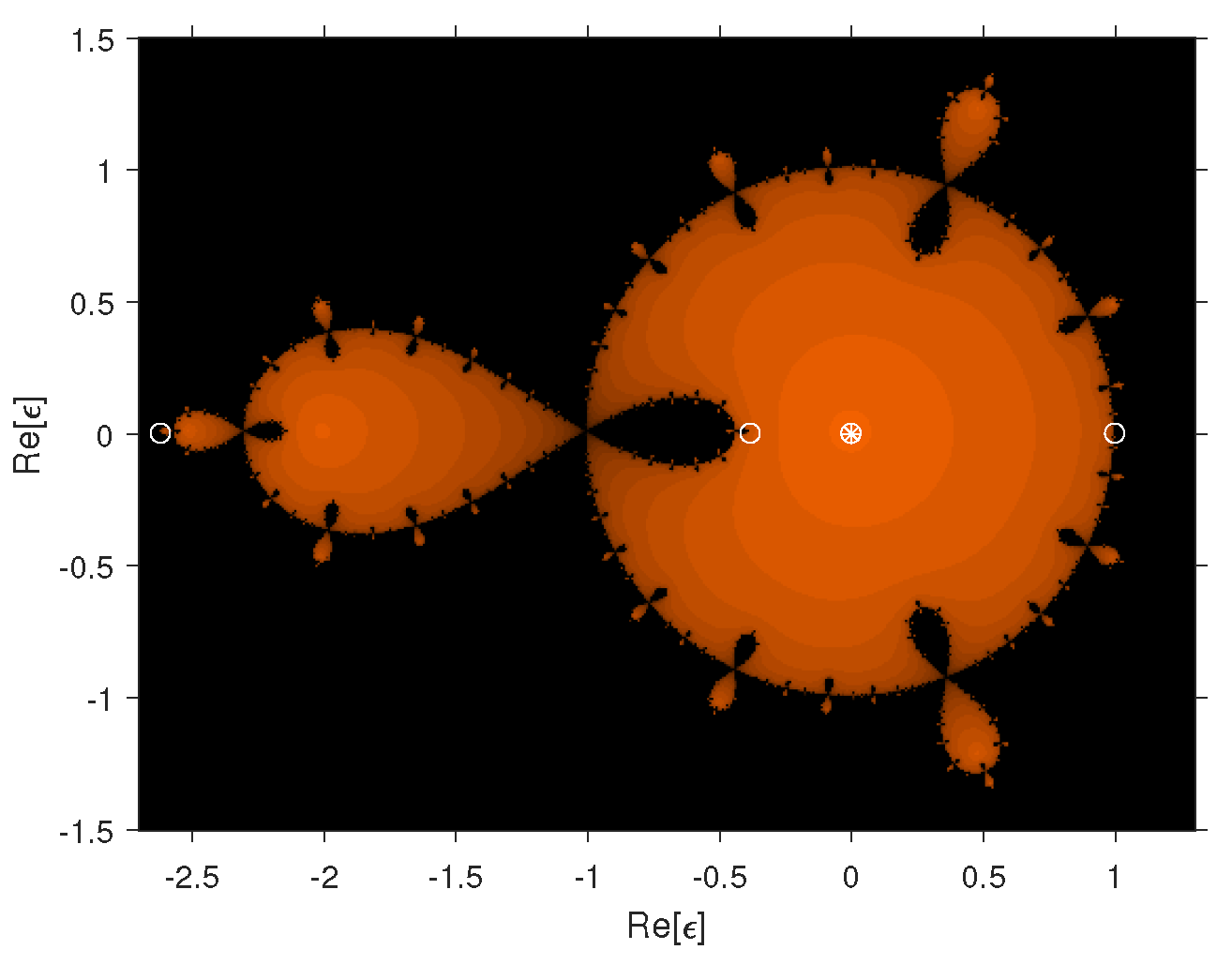

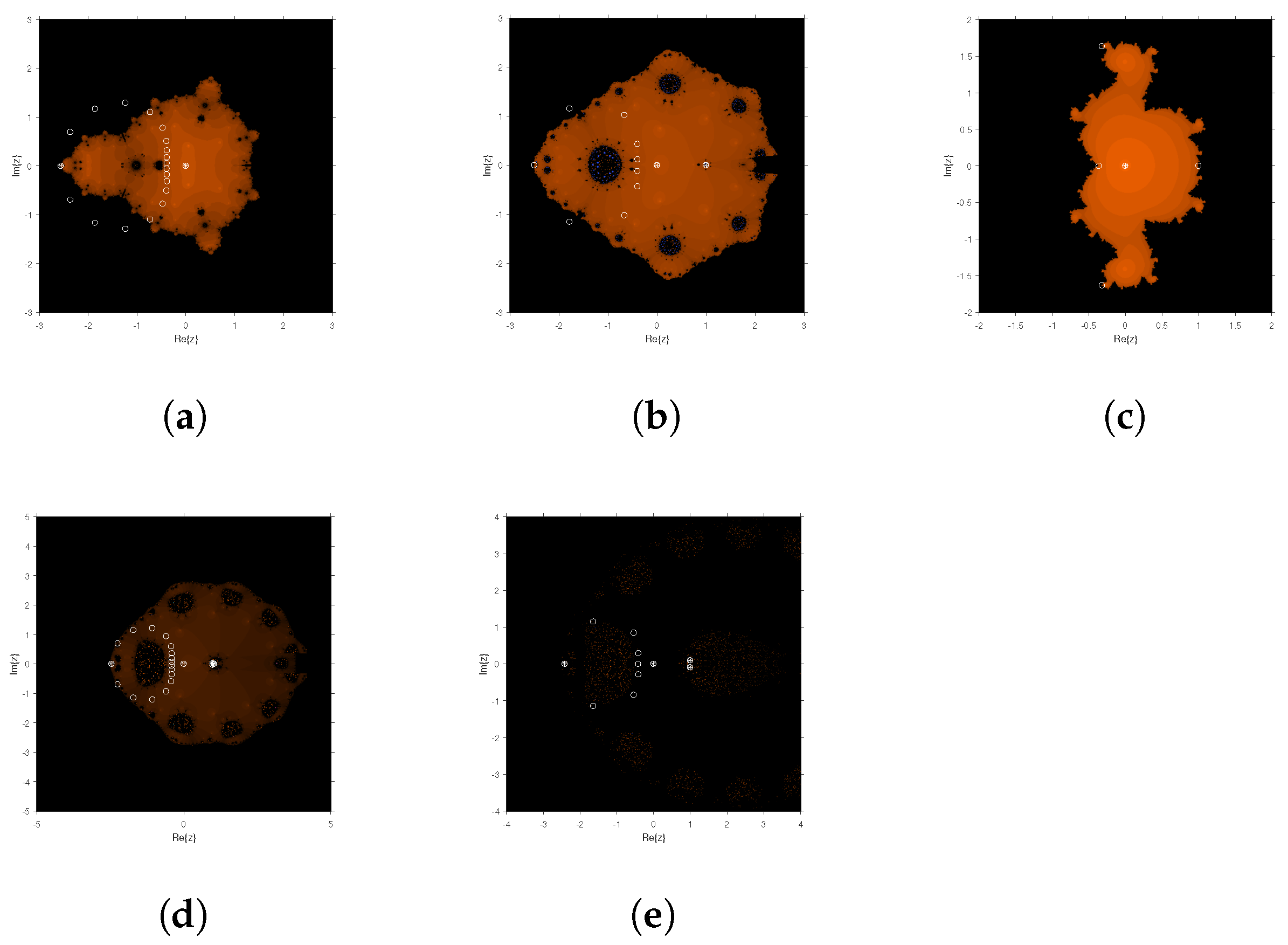

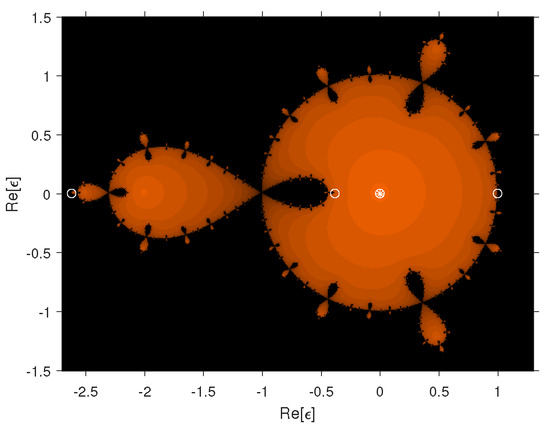

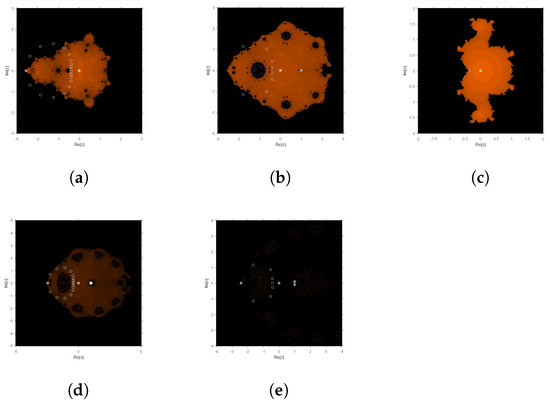

Dynamical planes are a valuable tool for studying iterative methods for nonlinear equations because they provide visual insight into the behavior and stability of the iteration. By investigating the convergence and divergence patterns within these planes, fixed points, attractors, and chaotic zones can be identified, which allows the iterative process to be tuned for improved accuracy and efficiency. The stability of the single root-finding method for different fractional parameter values is examined using dynamical planes (see Figure 1 and Figure 2). In these figures, orange marks the basin of attraction of 0 under  , that is, the starting points whose orbits converge to 0. Starting points whose orbits converge to infinity are marked in blue, and those whose orbits converge to neither are marked in black. Strange fixed points are depicted by white circles, free critical points by white squares, and fixed points by white squares with a star. The dynamical planes are generated by taking starting values from the square  . In Figure 1, the dynamical planes show large basins in which the rational map converges to 0 or to infinity. In Figure 2a–e, the basins of attraction shrink as the fractional parameter decreases from 1 to 0.5, and the iteration diverges as the parameter approaches 0. This indicates that the single-step method is more stable when the fractional parameter is close to 1 and becomes unstable as it approaches 0. Using the newly developed stable fractional-order single root-finding method as a correction factor in (23), we propose a novel inverse fractional scheme for analyzing (1) in the following section.

Figure 1.

Dynamical planes of the rational map for .

Figure 2.

(a–e): Dynamical planes for various parameter values of the rational map . (a) Dynamical planes of the rational map for ; (b) dynamical planes of the rational map for ; (c) dynamical planes of the rational map for ; (d) dynamical planes of the rational map for ; (e) dynamical planes of the rational map for .
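The coloring rule described for Figures 1 and 2 can be reproduced as follows. Iterate the operator from each point of a grid and record whether the orbit approaches 0, escapes to infinity, or does neither within a fixed number of iterations. The Python sketch below applies this procedure to the stand-in map z ↦ z² (Newton's operator on a quadratic after conjugation), not to the operator of the proposed scheme. The square, grid size, iteration cap, and thresholds are assumptions. Rendering the labels as orange, blue, and black pixels is left to any plotting library.

```python
def orbit_fate(op, z, max_iter=100, small=1e-6, large=1e6):
    """Return 'zero', 'infinity', or 'neither' for the orbit of z under op."""
    for _ in range(max_iter):
        if abs(z) < small:
            return "zero"      # plotted in orange
        if abs(z) > large:
            return "infinity"  # plotted in blue
        z = op(z)
    return "neither"           # plotted in black


def dynamical_plane(op, half_width=3.0, n=61, **kwargs):
    """Classify starting points on an n x n grid over [-w, w] x [-w, w].

    Row 0 is the top edge (imaginary part +w); column 0 is the left edge.
    """
    counts = {"zero": 0, "infinity": 0, "neither": 0}
    grid = []
    for row in range(n):
        y = half_width - 2 * half_width * row / (n - 1)
        line = []
        for col in range(n):
            x = -half_width + 2 * half_width * col / (n - 1)
            fate = orbit_fate(op, complex(x, y), **kwargs)
            counts[fate] += 1
            line.append(fate)
        grid.append(line)
    return grid, counts


grid, counts = dynamical_plane(lambda z: z * z)
```

For z ↦ z², the basin of 0 is the open unit disk, and everything outside the closed unit disk escapes to infinity. Points on the unit circle itself, such as the repelling fixed point 1, stay in the "neither" class.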

3. Development and Analysis of Inverse Fractional Parallel Scheme

The inverse fractional parallel iterative approach is useful for determining the roots of nonlinear equations because it finds all roots of (2) simultaneously. In practice, this iterative technique converges quickly from a wide range of initial values, exhibits global convergence behavior, and computes real and complex roots simultaneously. Inverse fractional schemes provide greater stability and consistency and are well suited for parallel computing. Shams et al. [61] presented the following fractional parallel approach, with convergence order 2, for solving (2):

where  . Using (4) in (23), we propose the following inverse fractional parallel scheme ( ):

where  ,  ,  ,  . The parallel fractional scheme can also be written as

where

and

The following theorem presents the local order of convergence of the inverse fractional scheme.

Theorem 3.

Let  be simple zeros of the nonlinear equation. For distinct initial estimates  sufficiently close to these roots, respectively, the scheme  has convergence order  .

Proof.

Let and be the errors in , and , respectively. From the first step of , we have

where and from (27), . Therefore,

By assuming

Considering the second step, we have

where and [1]. Therefore,

Hence, the theorem is proven. □

4. Numerical Results

To examine the effectiveness and stability of our proposed method, several engineering applications are analyzed in this section. In our experiments, we use the following termination criteria:

where  denotes the 2-norm of the residual error. For the percentage convergence, we use

We also measure the CPU execution time on an Intel(R) Core(TM) i7-4330M CPU running at 2.8 GHz with a 64-bit operating system. All computations are carried out in Maple 20 and C++ to obtain a more realistic run time of the numerical approach for comparison. Furthermore, we compare our newly developed method with the Nourein method [62] ( ), which has convergence order four:

and the Zhang et al. method [63] ():

where

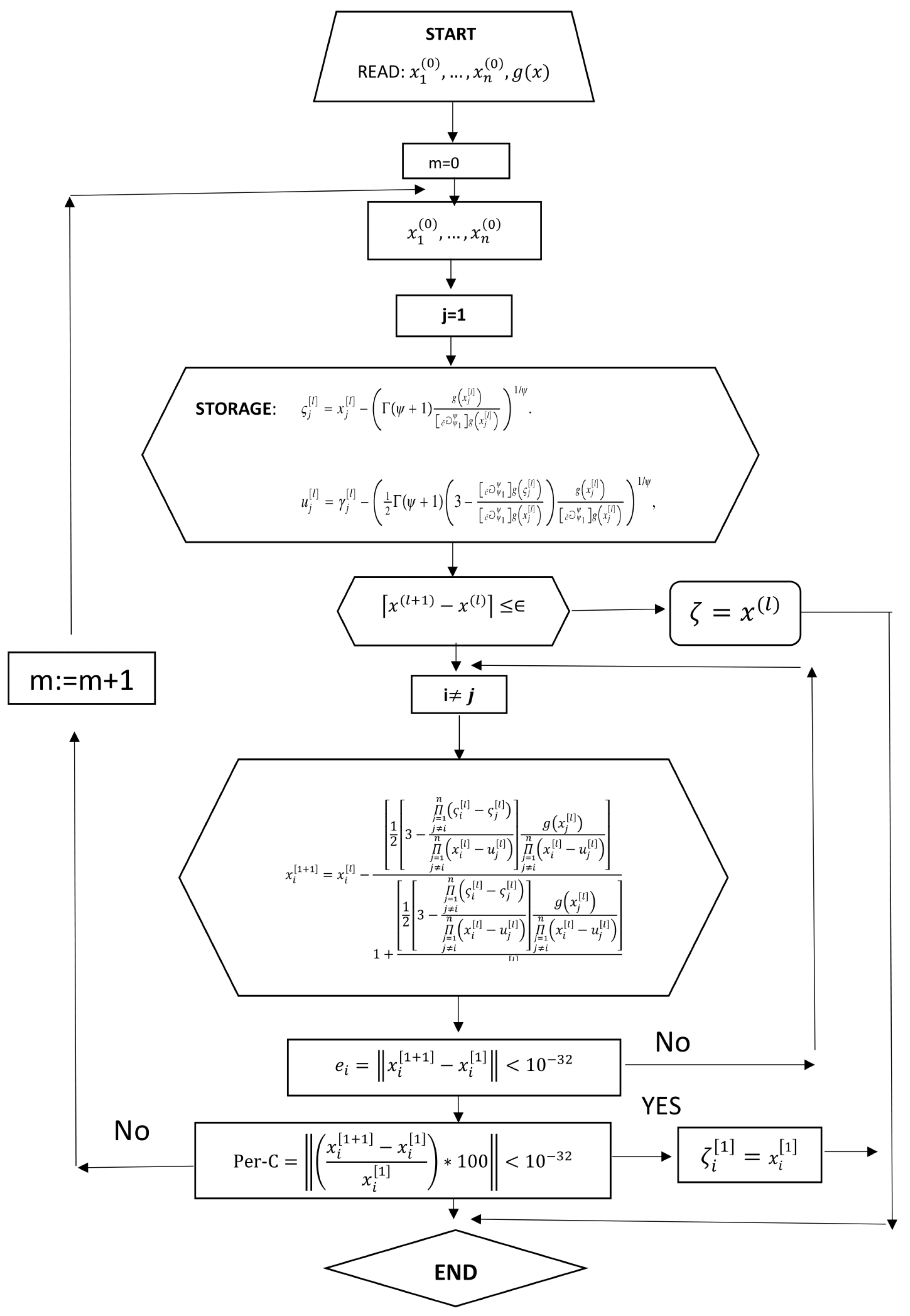

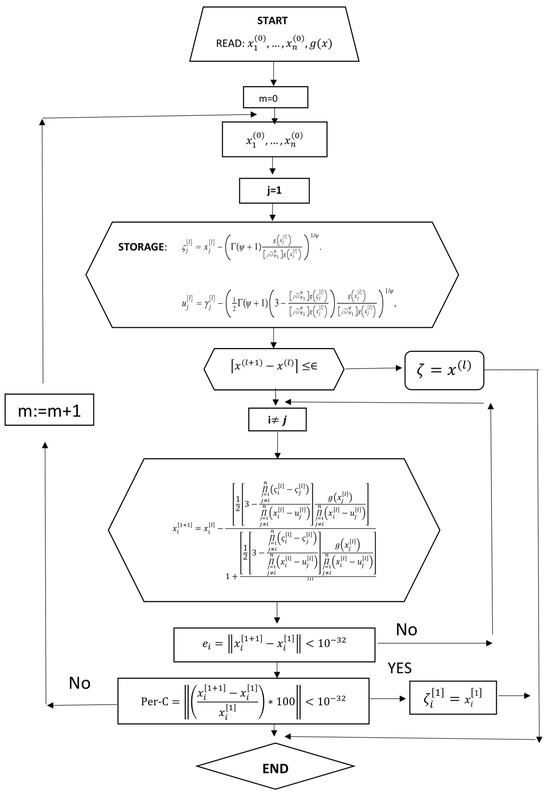

To compute all roots of (2), we use Algorithm 1; Figure 3 shows the corresponding flow chart of the inverse parallel scheme  .

| Algorithm 1: Fractional numerical scheme |

Figure 3.

Flow chart of the inverse parallel scheme for solving (2).
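The termination criterion and the computational order of convergence reported in the tables are not reproduced above. The following Python sketch therefore assumes common choices. The residual error is the 2-norm of the vector (g(x_1), …, g(x_n)) over all current approximations. The convergence order is estimated as COC ≈ ln(|x_{k+1} − x_k| / |x_k − x_{k−1}|) / ln(|x_k − x_{k−1}| / |x_{k−1} − x_{k−2}|). All function names are hypothetical.

```python
import math


def residual_norm2(f, approximations):
    """2-norm of the residual vector (f(x_1), ..., f(x_n))."""
    return math.sqrt(sum(abs(f(x)) ** 2 for x in approximations))


def should_stop(f, approximations, tol=1e-12):
    """Assumed termination test: stop once the residual 2-norm falls below tol."""
    return residual_norm2(f, approximations) < tol


def computational_order(x0, x1, x2, x3):
    """COC from four consecutive iterates x_{k-2}, x_{k-1}, x_k, x_{k+1}."""
    d1, d2, d3 = abs(x1 - x0), abs(x2 - x1), abs(x3 - x2)
    return math.log(d3 / d2) / math.log(d2 / d1)


def f(x):
    """Reference function x^2 - 2 with roots +/- sqrt(2)."""
    return x * x - 2


# Newton iterates for x^2 - 2 from x = 1 serve as a quadratically convergent reference.
xs = [1.0]
for _ in range(4):
    xs.append(xs[-1] - f(xs[-1]) / (2 * xs[-1]))
coc = computational_order(*xs[1:5])
```

On the Newton reference sequence, the estimate is close to 2. Applied to the iterates of a simultaneous scheme, the same formula is evaluated per root from the last four iterates.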

4.1. Example 1: Fractional Relaxation–Oscillation Equation [64]

A fundamental tool for forecasting the dynamic behavior of systems exhibiting both relaxation and oscillatory properties is the fractional relaxation–oscillation equation. This equation has applications in materials science, engineering, and biology, as it incorporates fractional derivatives that account for the system’s memory and hereditary effects, extending traditional relaxation–oscillation models. The fractional relaxation–oscillation equation is given by

In viscoelastic materials with both elastic and viscous behavior, the fractional order explains how the material’s stress response is influenced by its current state and deformation history.
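Equation (57) and its parameters are not reproduced above. Assume the common homogeneous form D^ψ y(t) + λ y(t) = 0 with y(0) = 1 (and y'(0) = 0 when 1 < ψ ≤ 2). Its exact solution is then y(t) = E_ψ(−λ t^ψ), where E_ψ is the Mittag-Leffler function. The Python sketch below evaluates this solution by its power series, which can serve as a reference when checking a numerical solution. The values of λ, the initial data, and the truncation length are assumptions, and the series is reliable only for moderate arguments.

```python
import math


def mittag_leffler(z, alpha, terms=60):
    """Truncated series E_alpha(z) = sum_k z**k / Gamma(alpha * k + 1).

    Adequate only for moderate |z|; large arguments need specialised algorithms.
    """
    return sum(z ** k / math.gamma(alpha * k + 1) for k in range(terms))


def relaxation_oscillation_solution(t, psi, lam=1.0):
    """y(t) = E_psi(-lam * t**psi), assumed to solve D^psi y + lam * y = 0 with y(0) = 1
    (and y'(0) = 0 when 1 < psi <= 2)."""
    return mittag_leffler(-lam * t ** psi, psi)
```

The two limiting cases recover the classical models: ψ = 1 gives pure relaxation, y(t) = exp(−λt), and ψ = 2 gives an undamped oscillation, y(t) = cos(√λ t).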

The numerical solution of (57) can be obtained by solving the following polynomial, using the approach described in [65]. For this example, we choose , , , and :

For  , this simplifies to

The Caputo-type derivative of Equation (58) is given by

The nonlinear Equation (58) has the following exact roots, accurate to five decimal places:

When the initial guesses are close to the exact solution, the simultaneous methods converge faster and reach the exact roots in fewer iterations (see Table 1).

Table 1.

Numerical results for Example 1.

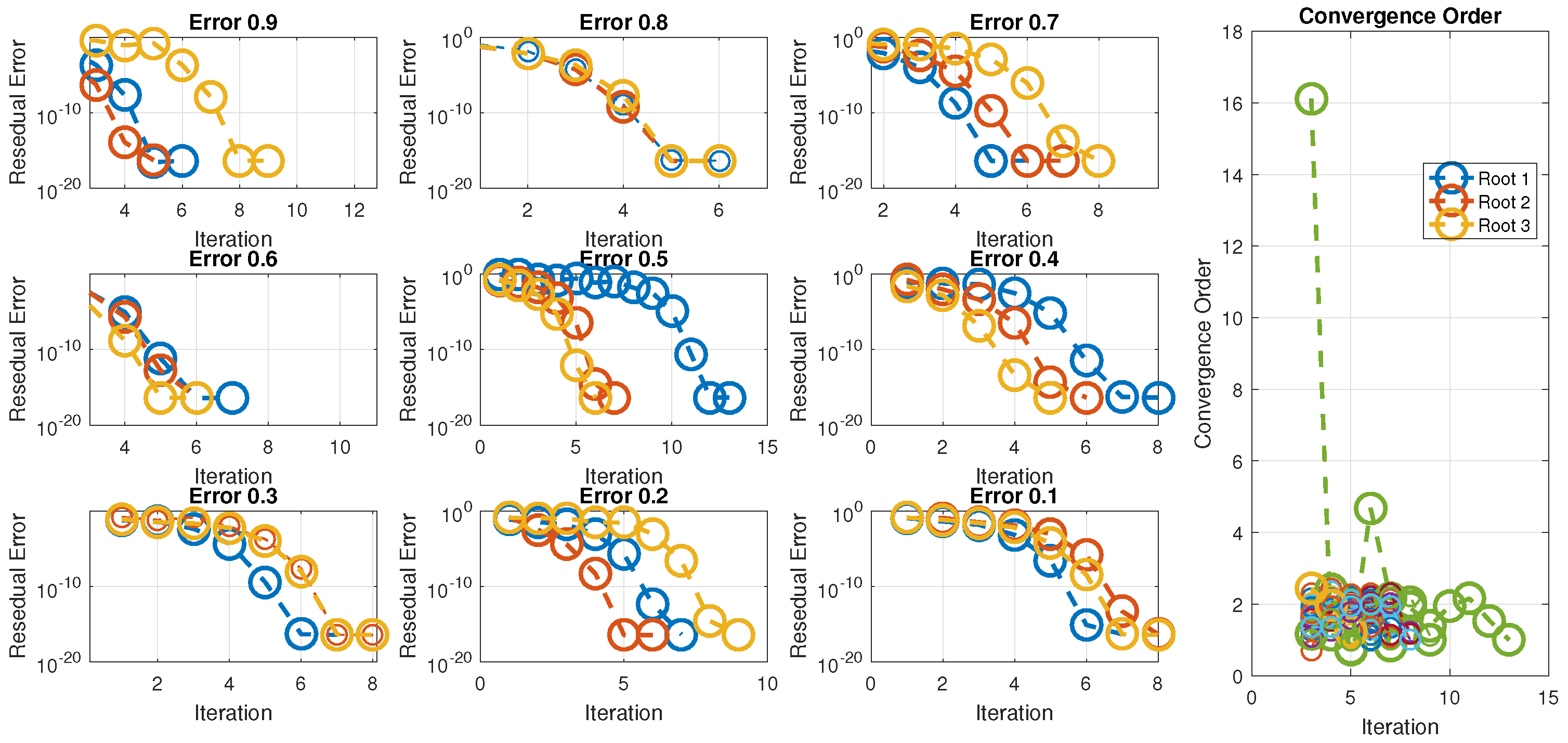

Table 1 indicates that when  , the residual error for each root and the order and rate of convergence are better than those of existing methods, demonstrating better efficiency and stability. To observe the global convergence behavior, we generated random initial guesses with the “rand()” command in MATLAB. The numerical outcomes for these random initial guesses are presented in Table 2.

Table 2.

Random initial test vectors − for scheme .

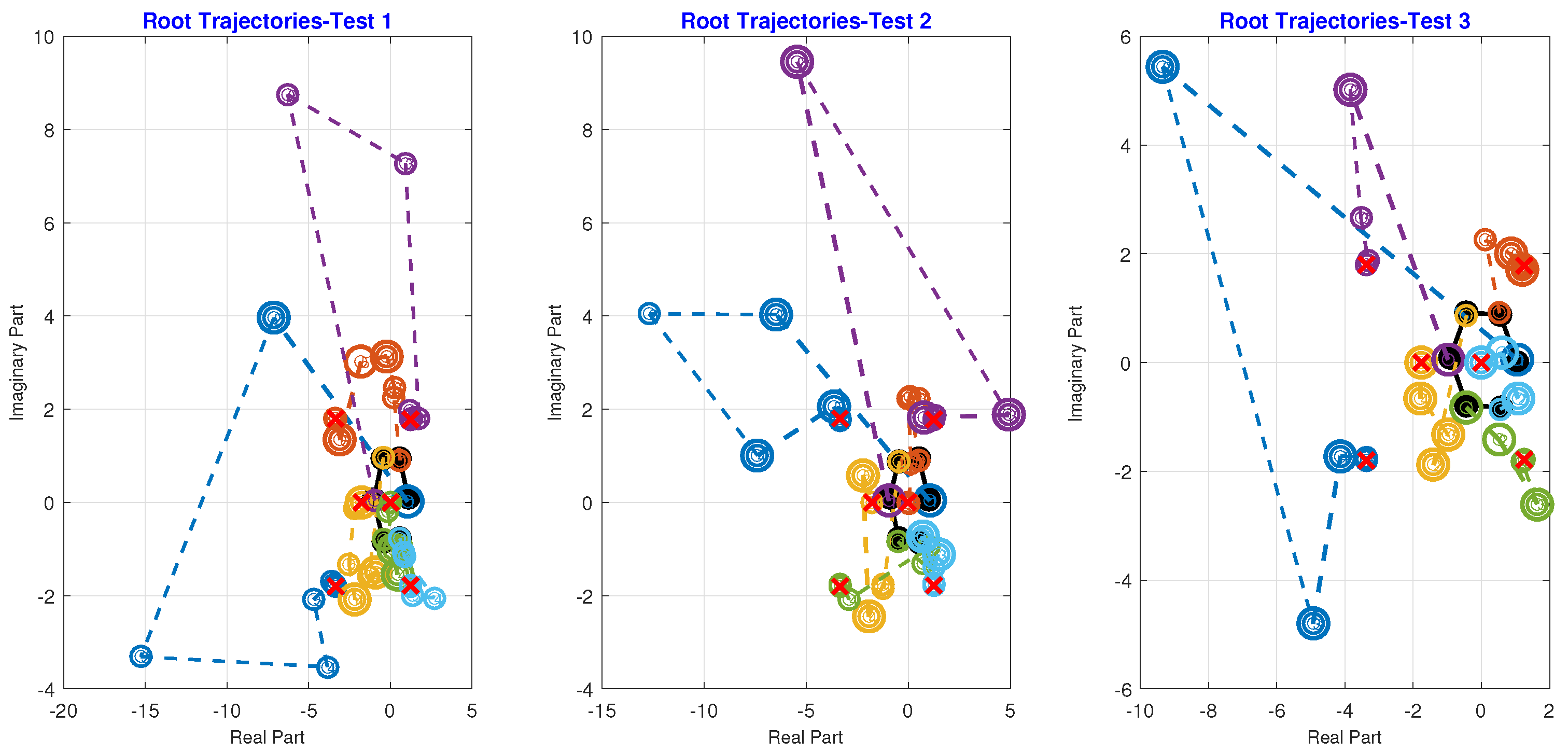

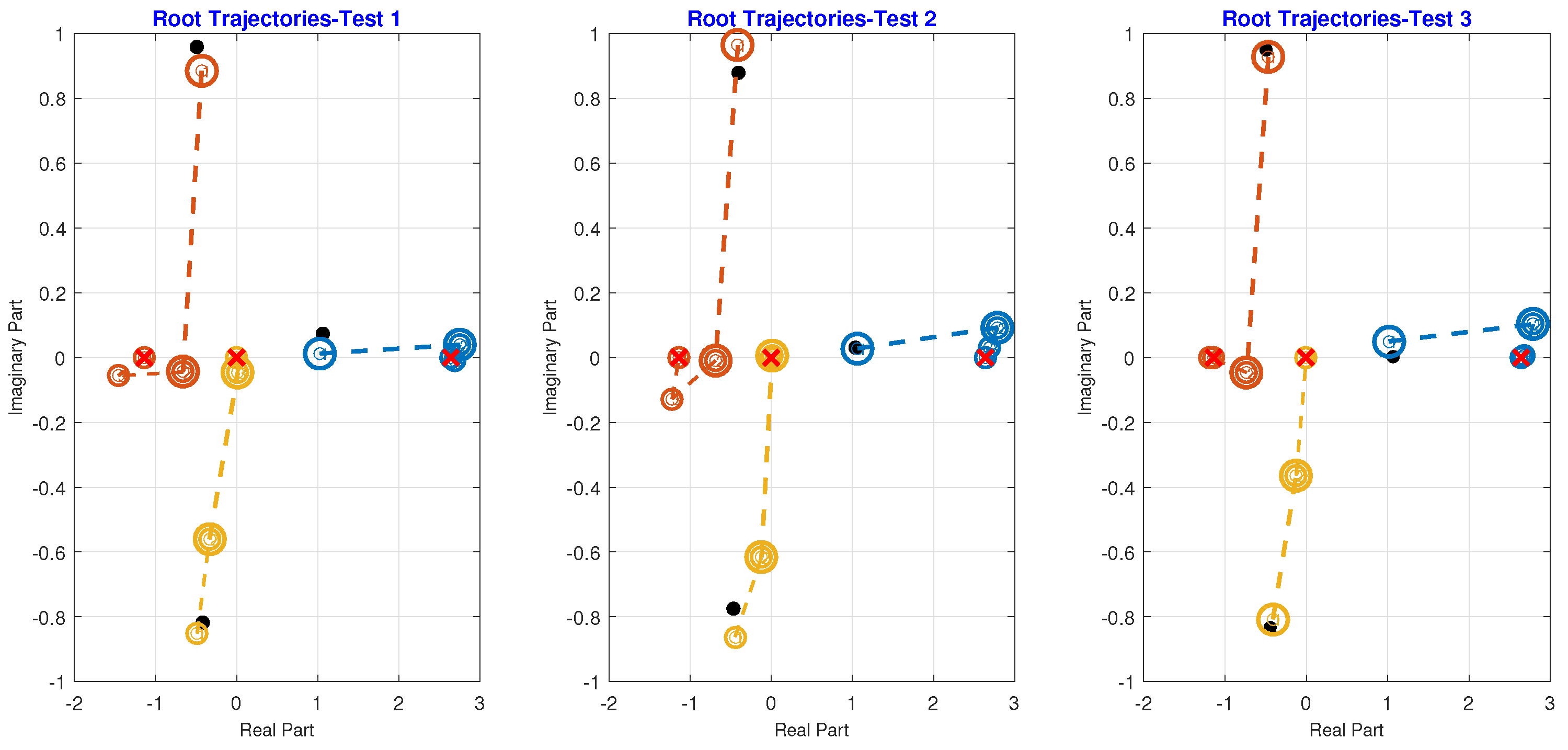

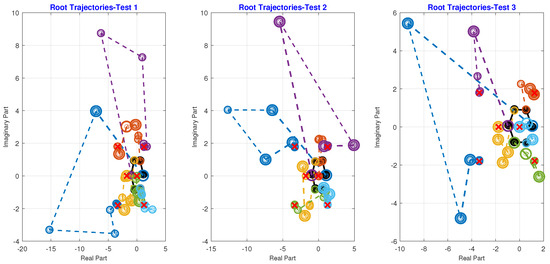

Root trajectories for roots 1–6 were determined by selecting random initial starting points using the Aberth initial approximation, with a maximum of 7, 8, 12, 10, and 12 iterations for  to converge to the exact roots. In Figure 4, the black solid circles represent the initial approximations obtained with the Aberth initial approximation, the empty circles mark the iterates leading to the precise roots, and the red crosses indicate the positions of the exact roots in the complex plane. As Figure 4 demonstrates, the numerical technique converges to the exact roots for every set of initial test vectors considered, which is consistent with global convergence behavior.

Figure 4.

Root trajectories for all roots using three sets of test vectors.
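The text does not spell out the Aberth initial approximation. In its commonly cited form, the starting points are spaced on a circle centered at the centroid of the roots, −a_{n−1}/(n a_n), at angles θ_j = (π/n)(2j − 3/2), j = 1, …, n. The Python sketch below follows that form. The default radius (a Cauchy-type root bound), the function name, and the coefficient ordering are assumptions, and the random perturbation used to produce the test vectors is not reproduced.

```python
import cmath
import math


def aberth_initial_guesses(coeffs, radius=None):
    """Aberth-type starting points z_j = c + r * exp(i * theta_j), j = 1..n.

    coeffs = [a_n, ..., a_0] (highest degree first); c = -a_{n-1} / (n * a_n) is the
    centroid of the roots and theta_j = (pi / n) * (2j - 3/2).
    If radius is None, the Cauchy-type bound 1 + max_k |a_k / a_n| is used (an assumption).
    """
    n = len(coeffs) - 1
    center = -coeffs[1] / (n * coeffs[0])
    if radius is None:
        radius = 1 + max(abs(c / coeffs[0]) for c in coeffs[1:])
    return [
        center + radius * cmath.exp(1j * (math.pi / n) * (2 * j - 1.5))
        for j in range(1, n + 1)
    ]
```

The offset π/(2n) in the angles keeps the starting points off the real axis for real polynomials. This matters because a symmetric, purely real start can stall simultaneous methods on complex-conjugate roots.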

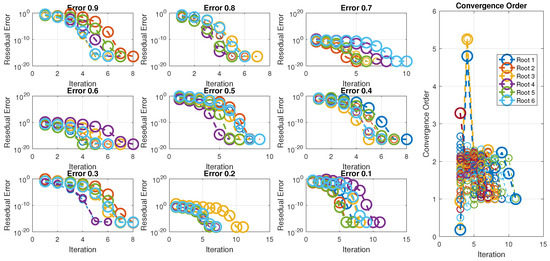

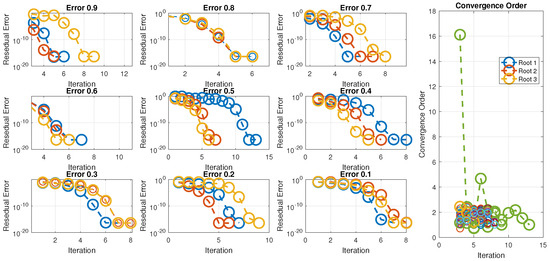

Figure 5 shows that as  increases from 0.1 toward 1, the number of iterations decreases, indicating an increase in the convergence rate, which reaches its maximum as  approaches 1. This is because the fractional derivative reduces to the ordinary derivative at  . The figure also illustrates that as  increases from 0.1 to 0.9, the number of iterations decreases while accuracy increases, indicating that the newly developed approach becomes stable at  . The scheme  behaves consistently across all initial approximations, exhibits global convergence behavior, and compares favorably with  and  .

Figure 5.

Residual error of the numerical technique for different fractional parameter values and computational orders of convergence.

The global convergence behavior of the numerical scheme for different sets of random starting vectors is investigated in Table 2, Table 3 and Table 4. Table 2 lists the random sets of starting values, generated with the Aberth initial approximation, that are used to find all solutions to the considered application; its last column compares the starting vectors with the exact roots up to four decimal places. Table 3 presents the numerical outputs for fractional parameter values ranging from 0.1 to 0.9 when these three initial vectors are used to find all solutions. As shown in Table 3, accuracy increases as  increases from 0.1 to 0.9 and reaches a maximum at  . To obtain this accuracy for the different test vectors − , the method requires a varying number of iterations for  , as shown in Table 4. Table 4 also shows that the number of iterations decreases as  increases from 0.1 to 0.9. Table 5 shows the scheme’s overall behavior with different sets of starting vectors.

Table 3.

Residual error on random initial vectors for solving using fractional for different values of .

Table 4.

Number of iterations for random initial test vectors for solving engineering application 1 using .

Table 5.

Consistency analysis for different values for solving engineering application 1 as described in Example 1.

Table 5 is used to assess the consistency of the  method with existing methods, specifically  and  . In Table 5,  denotes the computational order of convergence, CPU is the average CPU time over the sets of initial vectors ( − ), Av-it is the average number of iterations, and Per-C is the average percentage convergence. Comparing the results for  with the outcomes presented in Table 1 shows that the  method is more consistent than existing methods and exhibits better convergence behavior than  and  .
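The consistency columns of Table 5 are the average CPU time, the average number of iterations Av-it, and the percentage convergence Per-C over several initial vectors. They can be gathered with a small harness such as the Python sketch below. The sketch assumes that Per-C is the percentage of starting vectors for which the solver converges. A compact Weierstrass (Durand–Kerner) solver serves only as a placeholder for the schemes compared in the paper. Timings from a Python sketch are not comparable to the Maple/C++ timings reported here.

```python
import random
import time


def durand_kerner(coeffs, z0, tol=1e-10, max_iter=500):
    """Placeholder solver: returns (approximations, iterations, converged)."""
    z = list(z0)
    for k in range(1, max_iter + 1):
        new = []
        for i, zi in enumerate(z):
            p = 0j
            for c in coeffs:  # Horner evaluation of p(zi)
                p = p * zi + c
            d = coeffs[0]
            for j, zj in enumerate(z):
                if j != i:
                    d *= zi - zj
            new.append(zi - p / d)
        if max(abs(a - b) for a, b in zip(new, z)) < tol:
            return new, k, True
        z = new
    return z, max_iter, False


def consistency_summary(solver, coeffs, initial_vectors):
    """Average CPU time, Av-it (over converged runs) and Per-C for a solver."""
    times, iterations, converged = [], [], 0
    for z0 in initial_vectors:
        start = time.perf_counter()
        try:
            _, k, ok = solver(coeffs, z0)
        except (ZeroDivisionError, OverflowError):  # coincident or exploding iterates
            k, ok = 0, False
        times.append(time.perf_counter() - start)
        if ok:
            converged += 1
            iterations.append(k)
    count = len(initial_vectors)
    return {
        "CPU": sum(times) / count,
        "Av-it": sum(iterations) / len(iterations) if iterations else float("nan"),
        "Per-C": 100.0 * converged / count,
    }


rng = random.Random(2024)
vectors = [[complex(rng.uniform(-4, 4), rng.uniform(-4, 4)) for _ in range(3)] for _ in range(10)]
summary = consistency_summary(durand_kerner, [1, -6, 11, -6], vectors)
```

Swapping the placeholder for another solver with the same return signature allows several methods to be compared on identical random starting vectors.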

4.2. Example 2: Civil Engineering Application [66]

Consider the fractional differential equations

In viscoelastic materials with both elastic and viscous behavior, the fractional order explains how the material’s stress response is influenced by its current state and deformation history.

The numerical solution of (61) can be obtained by solving the following polynomial using the approach described in [67] by choosing and :

For we have

and for we have

The Caputo-type derivative of (63) is

The nonlinear Equation (63) has the following exact roots, accurate to three decimal places:

When the initial guesses are close to the exact solution, the simultaneous methods converge faster and reach the exact roots in fewer iterations, as shown in Table 6.

Table 6.

Numerical results for Example 2.

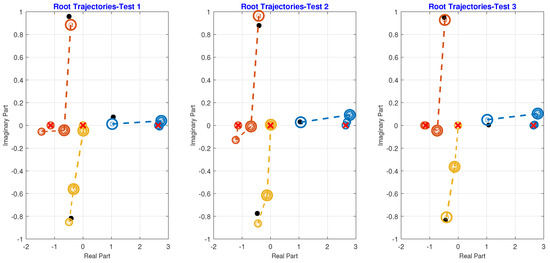

The root trajectories for roots 1 to 3 are determined by randomly selecting the initial starting values for each root using the Aberth initial approximation. This process requires a maximum of 7, 8, 12, 10, and 12 iterations for  to converge to the precise roots. The global convergence behavior of the numerical scheme is demonstrated by its consistent convergence to the exact roots for each set of starting test vectors − , as depicted in Figure 6.

Figure 6.

Root trajectories for all roots using three sets of test vectors.

Figure 7 shows that as  increases from 0.1 toward 1, the number of iterations decreases. This indicates that the convergence rate increases and reaches a maximum as  approaches  , since the fractional derivative reduces to the ordinary derivative at that value. The scheme behaves consistently across all initial approximations, exhibits global convergence behavior, and compares favorably with existing schemes such as  and  .

Figure 7.

Residual error of the numerical scheme for various fractional parameter values and computational orders of convergence.

The global convergence behavior of the numerical technique is examined in Table 7, Table 8 and Table 9 for various sets of random initial vectors. Random sets of starting values, generated with the Aberth initial approximation, are used to find all solutions to the specified application; these sets are displayed in Table 7, whose last column compares the initial vectors with the exact roots up to four decimal places. Table 8 presents the numerical outputs for fractional parameter values ranging from 0.1 to 0.9 when these three initial vectors are used to find all solutions. As  increases from 0.1 to 0.9, Table 8 shows that accuracy rises and reaches a maximum at  . To achieve this accuracy for different test vectors, the method requires a variable number of iterations for g, as shown in Table 9. Table 9 also shows that the number of iterations decreases as  grows from 0.1 to 0.9. Table 10 shows the scheme’s overall behavior with different sets of starting vectors.

Table 7.

Random initial test vectors − for scheme .

Table 8.

Residual error on random initial vectors for solving using .

Table 9.

Number of iterations for random initial test vectors for solving engineering application 2.

Table 10.

Consistency analysis for different  values for solving engineering application 2 using  .

5. Conclusions

We developed a new Caputo-type fractional scheme with convergence order  and transformed it into an inverse fractional parallel scheme to find all solutions to nonlinear fractional problems. Convergence analysis shows that the parallel scheme has convergence order  . To improve the convergence of the fractional schemes, dynamical planes are used to select efficient initial guesses that lead to the exact solutions. Several nonlinear problems were considered to evaluate the stability and consistency of  in comparison to  and  . The numerical results demonstrate that the  method is more stable and consistent than  and  in terms of residual error, CPU time, and error graphs for varied values of  . The global convergence behavior is further examined using three initial test vectors, i.e.,  − . In the future, we plan to develop higher-order inverse parallel schemes using other definitions of fractional derivatives to address more complex problems in biomedical engineering and epidemic modeling.

Author Contributions

Conceptualization, M.S. and B.C.; methodology, M.S.; software, M.S.; validation, M.S.; formal analysis, B.C.; investigation, M.S.; resources, B.C.; writing—original draft preparation, M.S. and B.C.; writing—review and editing, B.C.; visualization, M.S. and B.C.; supervision, B.C.; project administration, B.C.; funding acquisition, B.C. All authors have read and agreed to the published version of the manuscript.

Funding

The work is supported by the Free University of Bozen-Bolzano (IN200Z SmartPrint) and by Provincia Autonoma di Bolzano/Alto Adige—Ripartizione Innovazione, Ricerca, Università e Musei (CUP codex I53C22002100003 PREDICT). Bruno Carpentieri is a member of the Gruppo Nazionale per il Calcolo Scientifico (GNCS) of the Istituto Nazionale di Alta Matematica (INdAM) and this work was partially supported by INdAM-GNCS under Progetti di Ricerca 2024.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this article.

Abbreviations

In this article, the following abbreviations are used:

| Symbol | Meaning |
|---|---|
|  | Newly developed schemes |
| n | Iterations |
| CPU time | Computational time in seconds |
| e- |  |
|  | Computational local convergence order |

References

- Liu, Y.; Yang, Q. Dynamics of a new Lorenz-like chaotic system. Nonlinear Anal. Real World Appl. 2010, 11, 2563–2572. [Google Scholar] [CrossRef]

- Liu, P.; Zhang, Y.; Mohammed, K.J.; Lopes, A.M.; Saberi-Nik, H. The global dynamics of a new fractional-order chaotic system. Chaos Solitons Fractals 2023, 175, 114006. [Google Scholar] [CrossRef]

- Ye, X.; Wang, X. Hidden oscillation and chaotic sea in a novel 3d chaotic system with exponential function. Nonlinear Dyn. 2023, 111, 15477–15486. [Google Scholar] [CrossRef]

- Venkateshan, S.P.; Swaminathan, P. Computational Methods in Engineering; Academic Press: Cambridge, MA, USA, 2014; pp. 317–373. [Google Scholar]

- Hiptmair, R. Finite elements in computational electromagnetism. Acta Numer. 2002, 11, 237–339. [Google Scholar] [CrossRef]

- Lomax, H.; Pulliam, T.H.; Zingg, D.W.; Kowalewski, T.A. Fundamentals of computational fluid dynamics. Appl. Mech. Rev. 2002, 55, B61. [Google Scholar] [CrossRef]

- Warren, C.; Giannopoulos, A.; Giannakis, I. gprMax: Open source software to simulate electromagnetic wave propagation for Ground Penetrating Radar. Comput. Phys. Commun. 2016, 209, 163–170. [Google Scholar] [CrossRef]

- Cantwell, B.J. Organized motion in turbulent flow. Annu. Rev. Fluid Mech. 1981, 13, 457–515. [Google Scholar] [CrossRef]

- Peters, S.; Lanza, G.; Jun, N.; Xiaoning, J.; Pei Yun, Y.; Colledani, M. Automotive manufacturing technologies—An international viewpoint. Manuf. Rev. 2014, 1, 1–12. [Google Scholar] [CrossRef]

- Singh, J.; Singh, H. Application of lean manufacturing in automotive manufacturing unit. Int. J. Lean Six Sigma 2020, 11, 171–210. [Google Scholar] [CrossRef]

- Ma, C.Y.; Shiri, B.; Wu, G.C.; Baleanu, D. New fractional signal smoothing equations with short memory and variable order. Optik 2020, 218, 164507. [Google Scholar] [CrossRef]

- Tolstoguzov, V. Phase behaviour of macromolecular components in biological and food systems. Food/Nahrung 2000, 44, 299–308. [Google Scholar] [CrossRef] [PubMed]

- Bullmore, E.; Sporns, O. The economy of brain network organization. Nat. Rev. Neurosci. 2012, 13, 336–349. [Google Scholar] [CrossRef] [PubMed]

- Arino, J.; Van den Driessche, P. Disease spread in metapopulations. Fields Inst. Commun. 2006, 4, 1–13. [Google Scholar]

- Baleanu, D. Fractional Calculus: Models and Numerical Methods; World Scientific: Singapore, 2012; Volume 3. [Google Scholar]

- Polyanin, A.D.; Zaitsev, V.F. Handbook of Ordinary Differential Equations: Exact Solutions, Methods, and Problems; Chapman and Hall/CRC: Boca Raton, FL, USA, 2017. [Google Scholar]

- Diethelm, K.; Ford, N.J. Analysis of fractional differential equations. J. Math. Anal. Appl. 2002, 265, 229–248. [Google Scholar] [CrossRef]

- Gu, X.M.; Huang, T.-Z.; Zhao, Y.L.; Carpentieri, B. A fast implicit difference scheme for solving the generalized time–space fractional diffusion equations with variable coefficients. Numer. Methods Partial. Differ. Equ. 2021, 37, 1136–1162. [Google Scholar] [CrossRef]

- Gu, X.M.; Huang, T.Z.; Ji, C.C.; Carpentieri, B.; Alikhanov, A.A. Fast iterative method with a second-order implicit difference scheme for time-space fractional convection–diffusion equation. J. Sci. Comput. 2017, 72, 957–985.

- Huang, Y.Y.; Gu, X.M.; Gong, Y.; Li, H.; Zhao, Y.L.; Carpentieri, B. A fast preconditioned semi-implicit difference scheme for strongly nonlinear space-fractional diffusion equations. Fractal Fract. 2021, 5, 230.

- Karaagac, B. New exact solutions for some fractional order differential equations via improved sub-equation method. Discret. Contin. Dyn. Syst.-S 2019, 12, 447–454.

- Manafian, J.; Allahverdiyeva, N. An analytical analysis to solve the fractional differential equations. Adv. Math. Models Appl. 2021, 6, 128–161.

- Qazza, A.; Saadeh, R. On the analytical solution of fractional SIR epidemic model. Appl. Comput. Intell. Soft Comput. 2023, 2023, 6973734.

- Yépez-Martínez, H.; Rezazadeh, H.; Inc, M.; Akinlar, M.A.; Gomez-Aguilar, J.F. Analytical solutions to the fractional Lakshmanan–Porsezian–Daniel model. Opt. Quantum Electron. 2022, 54, 32.

- Reynolds, D.R.; Gardner, D.J.; Woodward, C.S.; Chinomona, R. ARKODE: A flexible IVP solver infrastructure for one-step methods. ACM Trans. Math. Softw. 2023, 49, 1–26.

- Ikhile, M.N.O. Coefficients for studying one-step rational schemes for IVPs in ODEs: III. Extrapolation methods. Comput. Math. Appl. 2004, 47, 1463–1475.

- Rufai, M.A.; Ramos, H. A variable step-size fourth-derivative hybrid block strategy for integrating third-order IVPs, with applications. Int. J. Comput. Math. 2022, 99, 292–308.

- Argyros, I.K.; Hilout, S. Weaker conditions for the convergence of Newton’s method. J. Complex. 2012, 28, 364–387.

- Gutierrez, J.M.; Hernández, M.A. An acceleration of Newton’s method: Super-Halley method. Appl. Math. Comput. 2001, 117, 223–239.

- Chun, C. A new iterative method for solving nonlinear equations. Appl. Math. Comput. 2006, 178, 415–422.

- Sharma, J.R.; Guha, R.K. A family of modified Ostrowski methods with accelerated sixth order convergence. Appl. Math. Comput. 2007, 190, 111–115.

- King, P.R. The use of field theoretic methods for the study of flow in a heterogeneous porous medium. J. Phys. A Math. Gen. 1987, 20, 3935.

- Shams, M.; Carpentieri, B. On highly efficient fractional numerical method for solving nonlinear engineering models. Mathematics 2023, 11, 4914.

- Cordero, A.; Hueso, J.L.; Martínez, E.; Torregrosa, J.R. Increasing the convergence order of an iterative method for nonlinear systems. Appl. Math. Lett. 2012, 25, 2369–2374.

- Mir, N.A.; Anwar, M.; Shams, M.; Rafiq, N.; Akram, S. On numerical schemes for determination of all roots simultaneously of non-linear equation. Mehran Univ. Res. J. Eng. Technol. 2022, 41, 208–218.

- Akram, S.; Shams, M.; Rafiq, N.; Mir, N.A. On the stability of Weierstrass type method with King’s correction for finding all roots of non-linear function with engineering application. Appl. Math. Sci. 2020, 14, 461–473.

- Neta, B. New third order nonlinear solvers for multiple roots. Appl. Math. Comput. 2008, 202, 162–170.

- Amat, S.; Busquier, S.; Gutiérrez, J.M. Third-order iterative methods with applications to Hammerstein equations: A unified approach. J. Comput. Appl. Math. 2011, 235, 2936–2943.

- Liu, Z.; Zheng, Q.; Zhao, P. A variant of Steffensen’s method of fourth-order convergence and its applications. Appl. Math. Comput. 2010, 216, 1978–1983.

- Torres-Hernandez, A.; Brambila-Paz, F. Sets of fractional operators and numerical estimation of the order of convergence of a family of fractional fixed-point methods. Fractal Fract. 2021, 4, 240.

- Akgül, A.; Cordero, A.; Torregrosa, J.R. A fractional Newton method with 2αth-order of convergence and its stability. Appl. Math. Lett. 2019, 98, 344–351.

- Cajori, F. Historical note on the Newton-Raphson method of approximation. Am. Math. Mon. 1911, 18, 29–32.

- Kumar, P.; Agrawal, O.P. An approximate method for numerical solution of fractional differential equations. Signal Process. 2006, 86, 2602–2610.

- Falcão, M.I.; Miranda, F.; Severino, R.; Soares, M.J. Weierstrass method for quaternionic polynomial root-finding. Math. Methods Appl. Sci. 2018, 41, 423–437.

- Nedzhibov, G.H. Inverse Weierstrass-Durand-Kerner Iterative Method. Int. J. Appl. Math. 2013, 28, 1258–1264.

- Shams, M.; Rafiq, N.; Ahmad, B.; Mir, N.A. Inverse numerical iterative technique for finding all roots of nonlinear equations with engineering applications. J. Math. 2021, 2021, 6643514.

- Iliev, A.I. A generalization of Obreshkoff-Ehrlich method for multiple roots of polynomial equations. arXiv 2001, arXiv:math/0104239.

- Petković, M.S.; Petković, L.D.; Džunić, J. On an efficient method for the simultaneous approximation of polynomial multiple roots. Appl. Anal. Discret. Math. 2014, 1, 73–94.

- Sebah, P.; Gourdon, X. Introduction to the gamma function. Am. J. Sci. Res. 2002, 1, 2–18.

- Almeida, R. A Caputo fractional derivative of a function with respect to another function. Commun. Nonlinear Sci. Numer. Simul. 2017, 44, 460–481.

- Odibat, Z.M.; Shawagfeh, N.T. Generalized Taylor’s formula. Appl. Math. Comput. 2007, 186, 286–293.

- Candelario, G.; Cordero, A.; Torregrosa, J.R.; Vassileva, M.P. An optimal and low computational cost fractional Newton-type method for solving nonlinear equations. Appl. Math. Lett. 2022, 124, 107650.

- Shams, M.; Kausar, N.; Agarwal, P.; Jain, S.; Salman, M.A.; Shah, M.A. On family of the Caputo-type fractional numerical scheme for solving polynomial equations. Appl. Math. Sci. Eng. 2023, 31, 2181959.

- Candelario, G.; Cordero, A.; Torregrosa, J.R. Multipoint fractional iterative methods with (2α+1)th-order of convergence for solving nonlinear problems. Mathematics 2020, 8, 452.

- Jarratt, P. Some fourth order multipoint iterative methods for solving equations. Math. Comput. 1966, 20, 434–437.

- Chicharro, F.I.; Cordero, A.; Garrido, N.; Torregrosa, J.R. Stability and applicability of iterative methods with memory. J. Math. Chem. 2019, 57, 1282–1300.

- Cordero, A.; Leonardo Sepúlveda, M.A.; Torregrosa, J.R. Dynamics and stability on a family of optimal fourth-order iterative methods. Algorithms 2022, 15, 387.

- Cordero, A.; Lotfi, T.; Khoshandi, A.; Torregrosa, J.R. An efficient Steffensen-like iterative method with memory. Bull. Math. Soc. Sci. Math. Roum. 2015, 1, 49–58.

- Shams, M.; Ahmad Mir, N.; Rafiq, N.; Almatroud, A.O.; Akram, S. On dynamics of iterative techniques for nonlinear equation with applications in engineering. Math. Probl. Eng. 2020, 2020, 5853296.

- Campos, B.; Canela, J.; Vindel, P. Dynamics of Newton-like root finding methods. Numer. Alg. 2023, 93, 1453–1480.

- Shams, M.; Carpentieri, B. Efficient Inverse Fractional Neural Network-Based Simultaneous Schemes for Nonlinear Engineering Applications. Fractal Fract. 2023, 7, 849.

- Anourein, A.W.M. An improvement on two iteration methods for simultaneous determination of the zeros of a polynomial. Int. J. Comput. Math. 1977, 6, 241–252.

- Zhang, X.; Peng, H.; Hu, G. A high order iteration formula for the simultaneous inclusion of polynomial zeros. Appl. Math. Comput. 2006, 179, 545–552.

- Wu, G.C. Adomian decomposition method for non-smooth initial value problems. Math. Comput. Model. 2018, 54, 2104–2108.

- Az-Zo’bi, E.A.; Qousini, M.M. Modified Adomian-Rach decomposition method for solving nonlinear time-dependent IVPs. Appl. Math. Sci. 2017, 11, 387–395.

- Khodabakhshi, N.; Mansour Vaezpour, S.; Baleanu, D. Numerical solutions of the initial value problem for fractional differential equations by modification of the Adomian decomposition method. Fract. Calc. Appl. 2014, 17, 382–400.

- Wazwaz, A.M.; Rach, R.; Bougoffa, L. Dual solutions for nonlinear boundary value problems by the Adomian decomposition method. Int. J. Numer. Methods Heat Fluid Flow 2016, 26, 2393–2409.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).