Abstract

This study presents an iterative method for approximating common fixed points of a finite family of G-nonexpansive mappings in a real Hilbert space endowed with a directed graph. We introduce the notions of left and right coordinate convexity and establish weak and strong convergence results under reasonable assumptions. Furthermore, we demonstrate the algorithm’s effectiveness in numerically solving the heat equation, an application relevant to energy optimization and sustainable development.

MSC:

65Y05; 68W10; 47H05; 47J25; 49M37

1. Introduction

Recent global challenges, particularly urbanization and climate change, have intensified the urban heat island (UHI) effect, in which cities experience significantly higher temperatures than surrounding rural areas because of factors such as extensive concrete surfaces and reduced vegetation. UHI increases energy consumption and pollution and harms public health, especially during heatwaves. The heat equation, which models temperature dynamics, provides a tool for addressing these issues; see [1]. In smart cities, it helps mitigate the UHI effect by optimizing energy use in buildings and improving infrastructure resilience. In agriculture, it supports precision farming by improving irrigation efficiency and managing soil temperature to enhance crop yields and conserve water; see [2,3]. These applications of heat transfer modeling are vital for climate resilience and sustainable development under increasing environmental pressure. Many algorithms for solving the heat equation rely on fixed point theory, which provides the framework for constructing iterative schemes and analyzing their convergence; see [4,5,6].

The study of fixed points is a rich field with applications not only in differential equations but also across other branches of mathematics, including geometry and algebra. In geometry, fixed point theorems have been instrumental in understanding the behavior of transformations such as automorphisms. For instance, Gamboa and Gromadzki [7] studied the sets of fixed points of automorphisms of bordered Klein surfaces, highlighting the role of automorphisms in the theory of such surfaces. In algebra, Cooper [8] proved that the fixed point set of an automorphism of a free group is finitely generated. These examples highlight the importance of fixed point theory for understanding transformations and algebraic structures across diverse mathematical fields.

Fixed point theory for metric spaces endowed with graphs was initiated by Jachymski [9] in 2008. Later, Aleomraninejad et al. [10] defined G-contractive and G-nonexpansive mappings on metric spaces endowed with directed graphs and established convergence results for these mappings. Subsequently, numerous researchers have proposed iterative methods for G-nonexpansive mappings in Hilbert spaces endowed with directed graphs; see, for instance, [11,12,13,14]. Developing iterative processes with faster convergence remains a significant challenge. Suparatulatorn et al. [11] proved the following lemma, which we will use in our analysis:

Lemma 1

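([11]). Let C be a nonempty closed convex subset of a Hilbert space $\mathcal{H}$. Suppose that $G = (V(G), E(G))$ is a directed graph with $V(G) = C$, and that $T$ is a G-nonexpansive self-mapping on C. Given that $\{x_n\}$ is a sequence in C satisfying $x_n \rightharpoonup x$ (weak convergence) and $x_n - Tx_n \to y$ (strong convergence), where $x \in C$ and $y \in \mathcal{H}$, if there is a subsequence $\{x_{n_k}\}$ of $\{x_n\}$ satisfying $(x_{n_k}, x) \in E(G)$ for all $k \in \mathbb{N}$, then $(I - T)x = y$.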
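Lemma 1 concerns G-nonexpansive mappings. Under the definition commonly used in this line of work (assumed here), a mapping T is G-nonexpansive if it preserves edges, that is, (x, y) ∈ E(G) implies (Tx, Ty) ∈ E(G), and satisfies ‖Tx − Ty‖ ≤ ‖x − y‖ whenever (x, y) ∈ E(G). The Python sketch below checks both conditions on a finite sample for a hypothetical graph on the real line and a hypothetical mapping `T`, illustrating that a G-nonexpansive mapping need not be nonexpansive. A sampled check can only refute these properties, not prove them.

```python
def is_edge(x: float, y: float) -> bool:
    """Hypothetical graph on R: the diagonal plus all pairs of nonnegative reals."""
    return x == y or (x >= 0 and y >= 0)

def T(x: float) -> float:
    """Hypothetical mapping: halves nonnegative inputs and triples negative ones."""
    return x / 2 if x >= 0 else 3 * x

def is_g_nonexpansive_on(samples) -> bool:
    """Check edge preservation and the Lipschitz-1 bound on sampled edges."""
    for x in samples:
        for y in samples:
            if not is_edge(x, y):
                continue
            if not is_edge(T(x), T(y)):                  # T must preserve edges
                return False
            if abs(T(x) - T(y)) > abs(x - y) + 1e-12:    # |Tx - Ty| <= |x - y|
                return False
    return True

def is_nonexpansive_on(samples) -> bool:
    """Check the Lipschitz-1 bound on all sampled pairs, edges or not."""
    return all(abs(T(x) - T(y)) <= abs(x - y) + 1e-12
               for x in samples for y in samples)

samples = [k / 4 for k in range(-12, 13)]   # grid on [-3, 3]
print(is_g_nonexpansive_on(samples))  # True
print(is_nonexpansive_on(samples))    # False, e.g. |T(-1) - T(-0.75)| = 0.75 > 0.25
```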

Moreover, Karahan and Ozdemir [15] introduced an iteration for nonexpansive mappings T in a Banach space, demonstrating that their iteration method outperforms the Picard, Mann, and S iterations in terms of speed. The iteration is as follows:

where , and are real sequences in . Recently, Yambangwai and Thianwan [16] introduced a parallel inertial SP-iteration monotone hybrid algorithm (PISPMHA) for which a weak convergence theorem has been established in Hilbert spaces endowed with graphs. Given initial points and , PISPMHA for G-nonexpansive mapping is presented as follows:

where for each and . Inspired by prior research, our study introduces Algorithm for approximating a common fixed point of a finite family of G-nonexpansive mappings in a real Hilbert space with a directed graph G.
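The comparison of the Picard, Mann, and S iterations mentioned above can be illustrated numerically. The sketch below uses the standard forms of these three iterations (it does not implement the Karahan–Ozdemir iteration itself) with constant parameters α = β = 0.5 and a hypothetical contraction T(x) = (x + 2)/3 with fixed point 1, and counts the steps each needs to reach a tolerance of 1e-10.

```python
def T(x: float) -> float:
    """Hypothetical contraction on R with fixed point 1 (Lipschitz constant 1/3)."""
    return (x + 2) / 3

def picard(x: float) -> float:
    return T(x)

def mann(x: float, alpha: float = 0.5) -> float:
    return (1 - alpha) * x + alpha * T(x)

def s_iteration(x: float, alpha: float = 0.5, beta: float = 0.5) -> float:
    # S-iteration: y = (1 - beta) x + beta T x,  x_next = (1 - alpha) T x + alpha T y
    y = (1 - beta) * x + beta * T(x)
    return (1 - alpha) * T(x) + alpha * T(y)

def count_steps(step, x0: float = 10.0, p: float = 1.0,
                tol: float = 1e-10, max_iter: int = 10_000) -> int:
    """Number of steps until |x_n - p| < tol."""
    x, n = x0, 0
    while abs(x - p) >= tol and n < max_iter:
        x, n = step(x), n + 1
    return n

counts = {name: count_steps(step) for name, step in
          [("Picard", picard), ("Mann", mann), ("S", s_iteration)]}
print(counts)
```

For this linear T the error is multiplied at each step by 1/3 (Picard), 2/3 (Mann), and 5/18 (S), so the S iteration needs the fewest steps and Mann the most; the ranking can differ for other mappings and parameter choices.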

This paper is organized as follows: Section 2 outlines the definitions of left and right coordinate convexity and their properties while also presenting the weak and strong convergence results of the proposed algorithm based on reasonable assumptions. The final section applies our algorithm to solve linear systems in order to obtain the numerical solutions of the heat equation.

2. Main Results

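Let $\mathcal{H}$ be a real Hilbert space with a directed graph $G = (V(G), E(G))$, where $V(G) = \mathcal{H}$. Assume that for each $i \in \{1, 2, \dots, M\}$, the mapping $T_i : \mathcal{H} \to \mathcal{H}$ is G-nonexpansive, and that the set of common fixed points $F := \bigcap_{i=1}^{M} F(T_i)$ is nonempty, where $F(T_i)$ denotes the fixed point set of $T_i$.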

We now introduce new definitions and their properties, and then recall key lemmas from previous studies.

Definition 1.

Let X be a vector space and E be a nonempty subset of . For all and for all , the set E is said to be left coordinate convex if

Definition 2.

Let X be a vector space and E be a nonempty subset of . For all and for all , the set E is said to be right coordinate convex if

Moreover, E is coordinate convex, as defined by Van Dung and Trung Hieu [17], if and only if it is both left and right coordinate convex.

Example 1.

Suppose . Let , and . From the convexity of and the fact that , we obtain , which implies that . Thus,

so we can conclude that E is left coordinate convex. On the other hand, if we let , and , then for any , we have , but

Therefore, E is not right coordinate convex, and thus, E is not coordinate convex. Likewise, if we set , it follows that E is right coordinate convex, while it is not left coordinate convex.

Example 1 shows that when E is generated by a convex set together with a nonconvex set, E is left or right coordinate convex, but not necessarily both. This observation leads to the following theorem:

Theorem 1.

Let and be nonempty subsets of a vector space, and let . Then, the following statements hold:

- (i)

- If is convex, then E is left coordinate convex.

- (ii)

- If is convex, then E is right coordinate convex.

- (iii)

- If and are convex, then E is coordinate convex.

Proof.

Assume that is convex. Let , and . Then, and . By assumption, we have , so

Therefore, E is left coordinate convex, which proves statement (i). Statement (ii) can be verified in a similar way. Finally, statement (iii) follows by combining statements (i) and (ii), since E is coordinate convex if and only if it is both left and right coordinate convex. □
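Theorem 1 can be checked numerically on a small example. The Python sketch below assumes, as the statement of Theorem 1 suggests, that E is a Cartesian product A × B, and takes hypothetical sets A = [0, 1] (convex) and B = [0, 1] ∪ [2, 3] (nonconvex). It tests, on a grid of sample points, the two one-sided conditions contained in the coordinate convexity of [17]: convex combinations in the first coordinate with the second fixed, and in the second coordinate with the first fixed. The sketch names the conditions by coordinate rather than as left or right; the point is that convexity of a factor yields the matching one-sided condition.

```python
from itertools import product

def in_A(a: float) -> bool:
    return 0.0 <= a <= 1.0                       # hypothetical convex set A = [0, 1]

def in_B(b: float) -> bool:
    return 0.0 <= b <= 1.0 or 2.0 <= b <= 3.0    # hypothetical nonconvex set B

def in_E(a: float, b: float) -> bool:
    return in_A(a) and in_B(b)                   # E = A x B

ts = [0.0, 0.25, 0.5, 0.75, 1.0]
samples_A = [k / 4 for k in range(5)]                                  # 0, 0.25, ..., 1
samples_B = [k / 4 for k in range(5)] + [2 + k / 4 for k in range(5)]  # both pieces of B

def first_coordinate_condition() -> bool:
    """(u, p), (v, p) in E implies (t*u + (1 - t)*v, p) in E, on the samples."""
    return all(in_E(t * u + (1 - t) * v, p)
               for u, v, p, t in product(samples_A, samples_A, samples_B, ts))

def second_coordinate_condition() -> bool:
    """(p, u), (p, v) in E implies (p, t*u + (1 - t)*v) in E, on the samples."""
    return all(in_E(p, t * u + (1 - t) * v)
               for p, u, v, t in product(samples_A, samples_B, samples_B, ts))

print(first_coordinate_condition())   # True: A is convex
print(second_coordinate_condition())  # False: u = 1, v = 2, t = 0.5 gives 1.5, not in B
```

A sampled check like this can exhibit a counterexample but cannot prove convexity; it is meant only to make the two one-sided conditions concrete.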

Lemma 2

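([18], Lemma 1). Let $\{a_n\}$ and $\{b_n\}$ be nonnegative sequences of real numbers satisfying $\sum_{n=1}^{\infty} b_n < \infty$ and $a_{n+1} \le a_n + b_n$ for all $n \in \mathbb{N}$. Then, the sequence $\{a_n\}$ converges.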

Lemma 3

([19], Opial). Let C be a nonempty subset of a Hilbert space $\mathcal{H}$, and let $\{x_n\}$ be a sequence in $\mathcal{H}$. Suppose that

- (i)

- the sequence $\{\|x_n - p\|\}$ converges for all $p \in C$,

- (ii)

- all weak sequential cluster points of $\{x_n\}$ belong to C.

Then, $\{x_n\}$ converges weakly to some point in C.

We now present our iterative method, as described below (Algorithm 1).

Algorithm 1 Inertial parallel algorithm
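The inertial and parallel ingredients of methods of this type can be sketched generically. The Python code below is not the authors' Algorithm 1; it is a hypothetical sketch under stated assumptions: an inertial extrapolation w_n = x_n + θ_n(x_n − x_{n−1}) with small summable weights θ_n = 1/(n+1)², a Mann-type candidate y_n^i = (1 − α)w_n + αT_i w_n computed independently for each mapping (the parallel step), and a selection rule that keeps the candidate farthest from x_n. The mappings `T1` (an averaged map) and `T2` (a rotation by 90 degrees) are hypothetical nonexpansive mappings with the common fixed point (1, 2).

```python
import math

P = (1.0, 2.0)  # common fixed point of the hypothetical mappings below

def T1(x):
    """Averaged map toward P (nonexpansive, fixed point P)."""
    return ((x[0] + P[0]) / 2, (x[1] + P[1]) / 2)

def T2(x):
    """Rotation by 90 degrees about P (an isometry whose only fixed point is P)."""
    dx, dy = x[0] - P[0], x[1] - P[1]
    return (P[0] - dy, P[1] + dx)

def dist(a, b):
    return math.hypot(a[0] - b[0], a[1] - b[1])

def inertial_parallel(maps, x0, x1, n_iter=200, alpha=0.5):
    """Generic inertial parallel Mann-type sketch (hypothetical, not Algorithm 1)."""
    x_prev, x = x0, x1
    for n in range(1, n_iter + 1):
        theta = 1.0 / (n + 1) ** 2                   # small summable inertial weights
        w = (x[0] + theta * (x[0] - x_prev[0]),
             x[1] + theta * (x[1] - x_prev[1]))      # inertial extrapolation
        candidates = []
        for T in maps:                               # independent, parallelizable step
            tw = T(w)
            candidates.append(((1 - alpha) * w[0] + alpha * tw[0],
                               (1 - alpha) * w[1] + alpha * tw[1]))
        # selection rule: keep the candidate farthest from the current iterate
        x_prev, x = x, max(candidates, key=lambda y: dist(y, x))
    return x

x = inertial_parallel([T1, T2], x0=(5.0, -3.0), x1=(4.0, 0.0))
print(x, dist(x, P))
```

Because each candidate depends only on w_n, the loop over the mappings can be distributed across processors; the farthest-candidate selection rule is one common design choice, assumed here for illustration.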

We now prove the lemmas that support our main results, assuming throughout that the sequence under consideration is generated by Algorithm 1.

Lemma 4.

Let be a real Hilbert space with a directed graph . Suppose that is a sequence generated by Algorithm 1, satisfying the following conditions:

- (i)

- ,

- (ii)

- is left coordinate convex, and .

Then, the sequence is bounded, and exists for all .

Proof.

Since is nonempty, we can let . Based on supposition and the fact that preserves edges, we have for every . The left coordinate convexity of ensures that . Similarly, we can conclude that , , , and , for all . Furthermore, we derive the following results by considering the fact that is G-nonexpansive:

for all . We can infer from the definition of that

From supposition and Lemma 2, it follows that exists, implying that the sequence is bounded. □

Lemma 5.

Let be a real Hilbert space with a directed graph . Suppose that is a sequence generated by Algorithm 1 satisfying the following conditions:

- (i)

- ,

- (ii)

- is right coordinate convex, and .

Then, the sequence is bounded, and exists for all .

Proof.

Since is nonempty, we can let . Based on supposition and the fact that preserves edges, we have for every . The right coordinate convexity of ensures that . Similarly, we can conclude that , , , and , for all . Using the same argument as in Lemma 4, we can determine that the sequence is bounded, and exists. □

Some helpful equalities and inequalities are presented below. For ,

for any .
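For reference, standard identities of this kind in a real Hilbert space $\mathcal{H}$ are recalled below; they are not necessarily the exact numbered equations used in the proofs.

```latex
\begin{aligned}
\|x \pm y\|^2 &= \|x\|^2 \pm 2\langle x, y\rangle + \|y\|^2,\\
\|x + y\|^2 &\le \|x\|^2 + 2\langle y, x + y\rangle,\\
\|t x + (1-t) y\|^2 &= t\|x\|^2 + (1-t)\|y\|^2 - t(1-t)\|x - y\|^2,
\end{aligned}
\qquad x, y \in \mathcal{H},\ t \in \mathbb{R}.
```

The second inequality follows from the first, since $\|x+y\|^2 = \|x\|^2 + 2\langle y, x+y\rangle - \|y\|^2$. For the third, the left-hand side expands to $t^2\|x\|^2 + 2t(1-t)\langle x, y\rangle + (1-t)^2\|y\|^2$, and substituting $\|x-y\|^2 = \|x\|^2 - 2\langle x, y\rangle + \|y\|^2$ into the right-hand side yields the same expression.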

Lemma 6.

For all , let be a real Hilbert space with a directed graph . Suppose that is a sequence generated by Algorithm 1, satisfying the following conditions:

- (i)

- ,

- (ii)

- is left coordinate convex, and for all ,

- (iii)

- ,

- (iv)

- , and ,

- (v)

- G is transitive, and for all .

Then, for all .

Proof.

Since is nonempty, we can let . By Lemma 4, exists and the sequence is bounded. Consequently, the sequence is also bounded for all , which yields the following:

For some , the following inequality can be obtained by rearranging the terms:

Thus, there exists an such that

As exists, by combining it with suppositions , , and , we obtain

From the proof of Lemma 4, we see that . Combining this with , we conclude that . Using Equation (2), we also obtain

which implies that

Lemma 7.

For all , let be a real Hilbert space with a directed graph . Suppose that is a sequence generated by Algorithm 1, satisfying the following conditions:

- (i)

- ,

- (ii)

- is right coordinate convex, and for all ,

- (iii)

- ,

- (iv)

- , and ,

- (v)

- G is transitive, and for all .

Then, for all .

Proof.

By applying supposition in the same way as in Lemma 6, there exists an such that

According to Lemma 5 and suppositions , , and , we have

From the proof of Lemma 5, we see that . Combining this with , we conclude that . Following the same steps as in Lemma 6, we obtain

for all . □

Lemma 8.

For all , let be a real Hilbert space with a directed graph . Suppose that is a sequence generated by Algorithm 1, satisfying the following conditions:

- (i)

- ,

- (ii)

- is left coordinate convex, and for all ,

- (iii)

- ,

- (iv)

- , and ,

- (v)

- for all .

Then, for all .

Lemma 9.

For all , let be a real Hilbert space with a directed graph . Suppose that is a sequence generated by Algorithm 1, satisfying the following conditions:

- (i)

- ,

- (ii)

- is right coordinate convex, and for all ,

- (iii)

- ,

- (iv)

- , and ,

- (v)

- for all .

Then, for all .

Next, we outline several weak convergence theorems pertaining to Algorithm 1.

Theorem 2.

Suppose all conditions in Lemma 6 and condition A,

hold. Then, the sequence converges weakly to an element in .

Proof.

By applying Lemmas 4 and 6, it follows that exists for every , and for each . We now show that all weak sequential cluster points of the sequence belong to . Let u be a weak sequential cluster point. This means that there exists a subsequence such that . From supposition , we know that as . Thus, . By condition A, we obtain that . Therefore, using Lemma 1, we conclude that . Consequently, by Lemma 3, the sequence converges weakly to an element in . □

By combining Lemmas 4 and 5 with Lemmas 7–9 and arguing as in the proof of Theorem 2, we obtain the following theorems:

Theorem 3.

Suppose all conditions in Lemma 7 and condition A hold. Then, the sequence converges weakly to an element in .

Theorem 4.

Suppose all conditions in Lemma 8 and condition A hold. Then, the sequence converges weakly to an element in .

Theorem 5.

Suppose all conditions in Lemma 9 and condition A hold. Then, the sequence converges weakly to an element in .

To reinforce our main theorems, we present the following example:

Example 2.

Let . Define a mapping as follows:

for all , and . Thus, is G-nonexpansive, and the set of common fixed points is . If we take and , for we have

which implies that is not nonexpansive. Set the initial values , and parameters for all and . It is straightforward to verify that all conditions of Lemma 6 hold. Now, suppose there exists a subsequence of such that for some . From this setting, it follows that . Therefore, , and condition A is satisfied. According to Theorem 2, the sequence converges to π in .

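Every nonexpansive mapping is G-nonexpansive with respect to the complete graph, whose edge set is the whole product of the space with itself; this graph is transitive and both left and right coordinate convex, and every subsequence is joined to every point by an edge. Hence, for a family of nonexpansive mappings on a real Hilbert space, we also obtain the following weak convergence theorem: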

Theorem 6.

Let be a family of nonexpansive mappings on a real Hilbert space such that , and let be a sequence generated by Algorithm 1. Suppose that:

- (i)

- ,

- (ii)

- ,

- (iii)

- , and .

Then, the sequence converges weakly to an element in .

Before presenting the strong convergence theorems, we first recall condition , as introduced in [14].

Definition 3

([14]). Let C be a nonempty subset of a metric space . For each , suppose that is a self-mapping on C. Then, the set is said to satisfy condition if there is a non-decreasing function with , and for such that for each ,

where and .

Theorem 7.

Let . Suppose all conditions in Lemma 6 hold, along with

and that satisfies condition , where is closed. Then, the sequence converges strongly to an element in .

Proof.

Based on conditions and in Lemma 6, it follows that either or . Thus, we conclude that

In accordance with Lemma 4, exists for every , which implies that exists. According to condition , there is a nondecreasing function , such that , for all , and

From Equation (6), we obtain . Utilizing the property of , we get . As a result, we can identify a subsequence from and a corresponding sequence in such that . We set for some . From the proof of Lemma 4, we recall that . Thus, we can conclude that

Additionally,

Condition in Lemma 6 indicates that the right-hand side of the inequality converges to zero as . This implies that the sequence is a Cauchy sequence in . Since is closed, there exists an such that . Furthermore, noting that , we obtain . Moreover, since exists, it follows that . Therefore, the sequence converges strongly to . □

Theorem 8.

Let . Suppose all conditions in Lemma 7 hold, along with

and that satisfies condition , where is closed. Then, the sequence converges strongly to an element in .

Theorem 9.

Let . Suppose all conditions in Lemma 8 hold, along with

and that satisfies condition , where is closed. Then, the sequence converges strongly to an element in .

Theorem 10.

Let . Suppose all conditions in Lemma 9 hold, along with

and that satisfies condition , where is closed. Then, the sequence converges strongly to an element in .

Finally, we establish the following strong convergence theorem for a family of nonexpansive mappings on a real Hilbert space.

Theorem 11.

Let be a family of nonexpansive mappings on a real Hilbert space such that , and let be a sequence generated by Algorithm 1. Suppose all conditions in Theorem 6 hold, and that satisfies condition . Then, the sequence converges strongly to an element in .

3. Application in Numerical Method

Efficient and accurate numerical methods for solving equations are essential tools in science and engineering. The heat equation is a fundamental partial differential equation (PDE) that describes the distribution and flow of heat in a material or system over time. To evaluate the efficacy of the proposed algorithm, we apply it to compute a numerical solution of the heat equation discretized by the Crank–Nicolson scheme [20]. The problem is given as follows:

where

- is the temperature distribution function over space x and time t,

- is the thermal diffusivity constant,

- are sufficiently smooth functions.

Because exact solutions of most PDEs are unavailable, numerical methods are employed to approximate them; here, the Crank–Nicolson scheme is used to approximate the solution of the heat equation. The space variable x is discretized with a uniform step size and the time variable t with a uniform step size, where N and T denote the total numbers of discretization steps in space and time, respectively. We write $u_i^j$, for short, for the approximate solution at the i-th spatial grid point and the j-th time level. For each grid point and time step, the Crank–Nicolson scheme can be written as

where

The initial conditions are defined as follows:

By rearranging terms, the scheme can be written in a tridiagonal matrix form as follows:

where

Therefore, advancing to the next time step requires solving this linear system. Iterative methods are often used for such systems, particularly when the matrices are large and sparse, because each iteration requires only inexpensive matrix-vector operations that exploit sparsity. The general form of the linear system can be written as

where A is an matrix, u is an unknown vector, and b is an constant vector. In particular, for each time step, our algorithm can alternatively be applied to solve the linear system with and .
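As an illustration of how such a tridiagonal system arises, consider the model problem $u_t = \beta u_{xx}$ with Dirichlet boundary values, grid points $x_i = ih$ and $t_j = jk$, and $r = \beta k / h^2$; the symbols $\beta$, $h$, $k$, and $r$ are assumed notation for this sketch. The standard Crank–Nicolson scheme averages the central differences at time levels $j$ and $j+1$:

```latex
\frac{u_i^{j+1} - u_i^{j}}{k}
  = \frac{\beta}{2}\left(
      \frac{u_{i+1}^{j+1} - 2u_i^{j+1} + u_{i-1}^{j+1}}{h^2}
    + \frac{u_{i+1}^{j} - 2u_i^{j} + u_{i-1}^{j}}{h^2}\right)
\;\Longleftrightarrow\;
-\tfrac{r}{2}\,u_{i-1}^{j+1} + (1+r)\,u_i^{j+1} - \tfrac{r}{2}\,u_{i+1}^{j+1}
  = \tfrac{r}{2}\,u_{i-1}^{j} + (1-r)\,u_i^{j} + \tfrac{r}{2}\,u_{i+1}^{j}.
```

Collecting the interior unknowns gives $Au^{j+1} = b$ with $A = \operatorname{tridiag}(-r/2,\ 1+r,\ -r/2)$. Since $1 + r > r/2 + r/2$, this matrix is strictly diagonally dominant for every $r > 0$, which guarantees convergence of the Jacobi and Gauss–Seidel iterations.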

Solving linear systems of the form $Au = b$ is a fundamental step in many scientific and engineering problems. Direct methods, such as Gaussian elimination and LU decomposition, are well known and efficient, but they can be computationally costly and impractical for large systems. Moreover, in time-dependent problems such as this one, a linear system must be solved at every time step. Iterative methods therefore provide an efficient alternative: they generate a sequence of approximations that converges to the solution. Many such methods are fixed point iterations, in which the system $Au = b$ is rewritten as $u = T(u)$ for a mapping $T$ whose fixed points are exactly the solutions of the system. Common choices of the fixed point mapping for solving linear systems are as follows:

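- Gauss–Seidel: $T(u) = u + (D + L)^{-1}(b - Au)$,

- Jacobi (weighted Jacobi, WJ): $T(u) = u + \omega D^{-1}(b - Au)$,

- Successive over-relaxation (SOR): $T(u) = u + \omega (D + \omega L)^{-1}(b - Au)$, where $\omega$ is a weight parameter, D is the diagonal part of matrix A, and L is the strictly lower triangular part of matrix A.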
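The three mappings above can be implemented directly. The Python sketch below (plain Python with dense matrices, intended only for small illustrative systems) builds each mapping T for given A and b and iterates u ← T(u) until successive iterates differ by less than a tolerance. It is tested on a hypothetical 5 × 5 system with the Crank–Nicolson-type matrix tridiag(−0.5, 2, −0.5) and a known solution; the weights ω = 1 for WJ and ω = 1.05 for SOR are arbitrary choices.

```python
def residual(A, u, b):
    """Return b - A u."""
    n = len(b)
    return [b[i] - sum(A[i][j] * u[j] for j in range(n)) for i in range(n)]

def forward_solve(M, rhs):
    """Solve M y = rhs for lower-triangular M by forward substitution."""
    n = len(rhs)
    y = [0.0] * n
    for i in range(n):
        y[i] = (rhs[i] - sum(M[i][j] * y[j] for j in range(i))) / M[i][i]
    return y

def jacobi_map(A, b, omega=1.0):
    """Weighted Jacobi: T(u) = u + omega * D^{-1} (b - A u)."""
    def T(u):
        r = residual(A, u, b)
        return [u[i] + omega * r[i] / A[i][i] for i in range(len(u))]
    return T

def sor_map(A, b, omega=1.0):
    """SOR: T(u) = u + omega * (D + omega L)^{-1} (b - A u); omega = 1 is Gauss-Seidel."""
    n = len(b)
    # M = D + omega * L, with L the strictly lower triangular part of A
    M = [[A[i][j] if i == j else (omega * A[i][j] if j < i else 0.0)
          for j in range(n)] for i in range(n)]
    def T(u):
        d = forward_solve(M, residual(A, u, b))
        return [u[i] + omega * d[i] for i in range(n)]
    return T

def gauss_seidel_map(A, b):
    return sor_map(A, b, omega=1.0)

def iterate(T, u0, tol=1e-12, max_iter=10_000):
    """Fixed point iteration u <- T(u); returns (approximation, iterations used)."""
    u = u0
    for k in range(1, max_iter + 1):
        v = T(u)
        if max(abs(vi - ui) for vi, ui in zip(v, u)) < tol:
            return v, k
        u = v
    return u, max_iter

# Hypothetical test system: A = tridiag(-0.5, 2, -0.5) with exact solution 1, ..., 5
n = 5
A = [[2.0 if i == j else (-0.5 if abs(i - j) == 1 else 0.0) for j in range(n)]
     for i in range(n)]
u_true = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [sum(A[i][j] * u_true[j] for j in range(n)) for i in range(n)]

results = {
    "WJ": iterate(jacobi_map(A, b, omega=1.0), [0.0] * n),
    "GS": iterate(gauss_seidel_map(A, b), [0.0] * n),
    "SOR": iterate(sor_map(A, b, omega=1.05), [0.0] * n),
}
for name, (u, its) in results.items():
    print(name, its, [round(x, 8) for x in u])
```

For this tridiagonal matrix, Gauss–Seidel needs fewer iterations than Jacobi because its asymptotic convergence factor is the square of Jacobi's.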

Following PISPMHA [16], we solve the linear system by combining these fixed point mappings within the proposed algorithm. Algorithm 2 applies the proposed fixed point iteration to the linear system, starting from two initial vectors and using a set of fixed point mappings. This set can be any nonempty subset of the three mappings above, which gives $2^3 - 1 = 7$ possible choices. The generated sequence is defined as follows. Note that the parameters in lines 6, 7, 8, and 9 can be any sequences that satisfy the conditions of our convergence theorems.
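The count of seven choices comes from enumerating the nonempty subsets of the three mappings. The short Python snippet below lists them, using the labels GS, WJ, and SOR from Figure 1.

```python
from itertools import combinations

# Labels for the three fixed point mappings, as used in Figure 1
mappings = ["GS", "WJ", "SOR"]

# All nonempty subsets that could serve as the set of mappings in Algorithm 2
subsets = [combo for size in range(1, len(mappings) + 1)
           for combo in combinations(mappings, size)]

print(len(subsets))  # 7
for combo in subsets:
    print(combo)
```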

Algorithm 2 Inertial parallel algorithm

To assess performance, we compare our algorithm with two algorithms from the literature, referred to as “Pd” and “Drs”, which correspond to [12] and [16], respectively. The parameter sequences in these algorithms have been adjusted and therefore differ from those originally proposed in the references. In addition, to facilitate comparison, Algorithms 3 and 4 restate these methods using our notation.

Algorithm 3 Pd

Algorithm 4 Drs

For this experiment, we consider the heat equation on the interval , and we aim to approximate the solution at time using a time step of . For a fair comparison, all algorithms use identical parameter sequences and settings. All algorithms in this study are implemented in the Julia programming language [21,22] and executed on an M2 processor. The parameters are set as follows: , and ,

where the system has the exact solution .
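The overall workflow, Crank–Nicolson time stepping with a fixed point iteration for the linear system at each step, can be sketched on a hypothetical test problem with a known exact solution: u_t = βu_xx on [0, 1] with β = 1, u(0, t) = u(1, t) = 0, and u(x, 0) = sin(πx), so that u(x, t) = e^{−π²t} sin(πx). This is not the problem or parameter setting used in the experiments above, and plain Gauss–Seidel sweeps stand in for the proposed algorithm.

```python
import math

def crank_nicolson_heat(beta=1.0, n_space=20, k=0.01, t_final=0.1, tol=1e-12):
    """Crank-Nicolson for u_t = beta*u_xx on [0, 1] with u = 0 at both ends and
    u(x, 0) = sin(pi*x); each implicit system is solved by Gauss-Seidel sweeps."""
    h = 1.0 / n_space
    r = beta * k / h**2
    x = [i * h for i in range(n_space + 1)]
    u = [math.sin(math.pi * xi) for xi in x]
    u[0] = u[-1] = 0.0                      # Dirichlet boundary values
    total_sweeps = 0
    for _ in range(round(t_final / k)):
        # Explicit half of the scheme gives the right-hand side at interior nodes
        rhs = [0.0] * (n_space + 1)
        for i in range(1, n_space):
            rhs[i] = (r / 2) * u[i - 1] + (1 - r) * u[i] + (r / 2) * u[i + 1]
        v = u[:]                            # initial guess: previous time level
        while True:
            change = 0.0
            for i in range(1, n_space):     # Gauss-Seidel sweep over interior nodes
                new = (rhs[i] + (r / 2) * (v[i - 1] + v[i + 1])) / (1 + r)
                change = max(change, abs(new - v[i]))
                v[i] = new
            total_sweeps += 1
            if change < tol:
                break
        u = v
    return x, u, total_sweeps

x, u, sweeps = crank_nicolson_heat()
exact = [math.exp(-math.pi**2 * 0.1) * math.sin(math.pi * xi) for xi in x]
max_err = max(abs(a - e) for a, e in zip(u, exact))
print(f"max error at t = 0.1: {max_err:.2e} ({sweeps} Gauss-Seidel sweeps)")
```

With a spatial step of 0.05 and a time step of 0.01, the maximum error at t = 0.1 stays below 10⁻³ and is dominated by the discretization rather than by the inner iteration, whose stopping tolerance is 10⁻¹².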

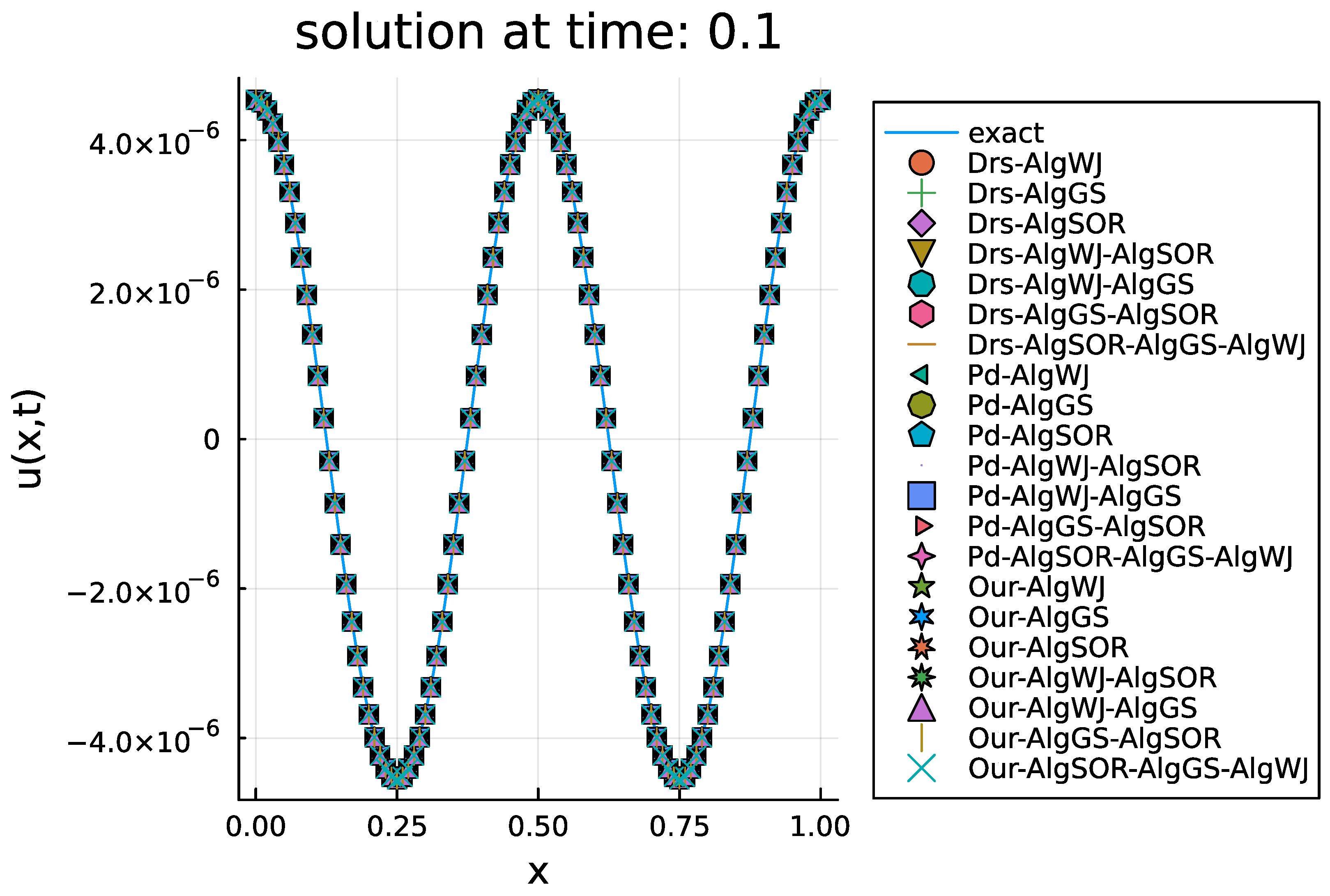

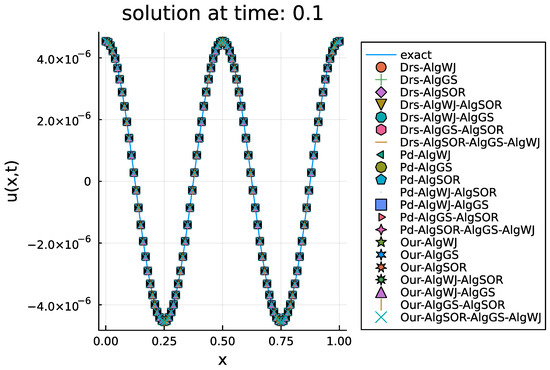

Figure 1 compares the exact solution with the numerical solutions. In the legend, “Our” denotes the proposed algorithm, and “Alg” followed by the name of a fixed point iteration, such as WJ, GS, or SOR, indicates the iteration used within the main algorithm to solve the linear system at each time step. All methods produce nearly identical solutions, which shows that the proposed algorithm solves the linear systems accurately.

Figure 1.

The exact and approximate solutions for the heat equation at time and .

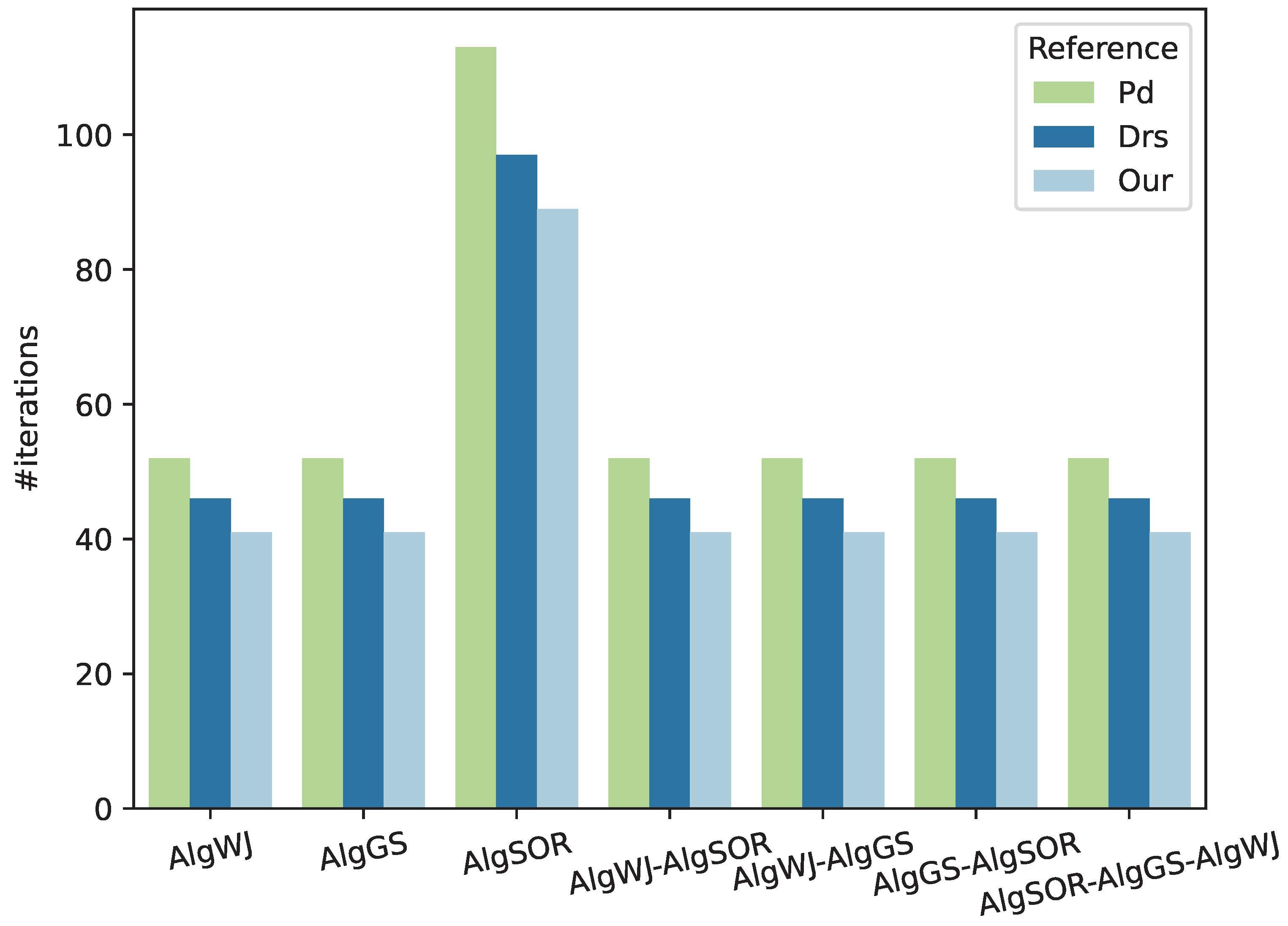

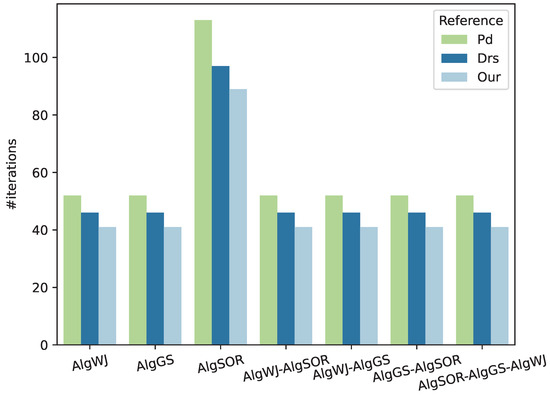

Figure 2 displays a bar plot of the average number of iterations required by the proposed and existing algorithms. Our algorithm requires fewer iterations to solve the linear systems than the existing algorithms, highlighting the efficiency of our approach. Furthermore, the performance of the different fixed point iterations is consistent across the algorithms, suggesting that lower-complexity fixed point iterations can yield the same results and thus reduce the overall computational time. The SOR iteration, when used alone, has the highest average number of iterations. However, when SOR is combined with another fixed point iteration, the number of iterations decreases to that of the other iteration, indicating that SOR is not efficient in this context. Moreover, SOR performs the worst even when used within the other algorithms.

Figure 2.

The comparison of the average number of iterations between the literature and our proposed algorithm.

4. Conclusions

This study introduces a novel iterative method for approximating common fixed points of a finite family of G-nonexpansive mappings in real Hilbert spaces endowed with directed graphs. The notions of left and right coordinate convexity proved crucial in establishing both weak and strong convergence under reasonable assumptions. In numerical experiments on the heat equation, where the Crank–Nicolson scheme leads to a linear system at each time step, the proposed algorithm required fewer iterations than the compared algorithms, suggesting potential applications in energy optimization. These results contribute to computational methods that can support sustainable development through energy-efficient solutions.

Author Contributions

Conceptualization, R.S.; methodology, R.S.; software, P.S. and T.S.; formal analysis, K.C.; writing—original draft preparation, K.C.; writing—review and editing, P.S. and T.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially supported by (1) Fundamental Fund 2025, Chiang Mai University, Chiang Mai, Thailand; (2) Chiang Mai University, Chiang Mai, Thailand; and (3) Centre of Excellence in Mathematics, MHESI, Bangkok 10400, Thailand.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- [1] Dudorova, N.V.; Belan, B.D. The Energy Model of Urban Heat Island. Atmosphere 2022, 13, 457.

- [2] Allahem, A.; Boulaaras, S.; Zennir, K.; Haiour, M. A new mathematical model of heat equations and its application on the agriculture soil. Eur. J. Pure Appl. Math. 2018, 11, 110–137.

- [3] Ruby, T.; Sutrisno, A. Mathematical modeling of heat transper in agricultural drying machine room (box dryer). J. Phys. Conf. Ser. 2021, 1751, 012029.

- [4] Emmanuel, E.C.; Chinelo, A. On the Numerical Fixed Point Iterative Methods of Solution for the Boundary Value Problems of Elliptic Partial Differential Equation Types. Asian J. Math. Sci. 2018, 2. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3812257 (accessed on 29 September 2024).

- [5] Atangana, A.; Araz, S.I. An Accurate Iterative Method for Ordinary Differential Equations with Classical and Caputo-Fabrizio Derivatives; Hindustan Aeronautics Limited: Bangalore, India, 2023.

- [6] Young, D. Iterative methods for solving partial difference equations of elliptic type. Trans. Am. Math. Soc. 1954, 76, 92–111.

- [7] Gamboa, J.M.; Gromadzki, G. On the set of fixed points of automorphisms of bordered Klein surfaces. Rev. Mat. Iberoam. 2012, 28, 113–126.

- [8] Cooper, D. Automorphisms of free groups have finitely generated fixed point sets. J. Algebra 1987, 111, 453–456.

- [9] Jachymski, J. The contraction principle for mappings on a metric space with a graph. Proc. Am. Math. Soc. 2008, 136, 1359–1373.

- [10] Aleomraninejad, S.M.A.; Rezapour, S.; Shahzad, N. Some fixed point results on a metric space with a graph. Topol. Its Appl. 2012, 159, 659–663.

- [11] Suparatulatorn, R.; Suantai, S.; Cholamjiak, W. Hybrid methods for a finite family of G-nonexpansive mappings in Hilbert spaces endowed with graphs. AKCE Int. J. Graphs Comb. 2017, 14, 101–111.

- [12] Charoensawan, P.; Yambangwai, D.; Cholamjiak, W.; Suparatulatorn, R. An inertial parallel algorithm for a finite family of G-nonexpansive mappings with application to the diffusion problem. Adv. Differ. Equ. 2021, 2021, 1–13.

- [13] Jun-On, N.; Suparatulatorn, R.; Gamal, M.; Cholamjiak, W. An inertial parallel algorithm for a finite family of G-nonexpansive mappings applied to signal recovery. AIMS Math. 2022, 7, 1775–1790.

- [14] Khemphet, A.; Suparatulatorn, R.; Varnakovida, P.; Charoensawan, P. A Modified Parallel Algorithm for a Common Fixed-Point Problem with Application to Signal Recovery. Symmetry 2023, 15, 1464.

- [15] Karahan, I.; Ozdemir, M. A general iterative method for approximation of fixed points and their applications. Adv. Fixed Point Theory 2013, 3, 510–526.

- [16] Yambangwai, D.; Thianwan, T. A parallel inertial SP-iteration monotone hybrid algorithm for a finite family of G-nonexpansive mappings and its application in linear system, differential, and signal recovery problems. Carpathian J. Math. 2024, 40, 535–557.

- [17] Van Dung, N.; Trung Hieu, N. Convergence of a new three-step iteration process to common fixed points of three G-nonexpansive mappings in Banach spaces with directed graphs. RACSAM 2020, 114, 140.

- [18] Auslender, A.; Teboulle, M.; Ben-Tiba, S. A Logarithmic-Quadratic Proximal Method for Variational Inequalities. Comput. Optim. Appl. 1999, 12, 31–40.

- [19] Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; CMS Books in Mathematics; Springer: New York, NY, USA, 2011.

- [20] Yambangwai, D.; Cholamjiak, W.; Thianwan, T.; Dutta, H. On a new weight tri-diagonal iterative method and its applications. Soft Comput. 2021, 25, 725–740.

- [21] Bezanson, J.; Karpinski, S.; Shah, V.B.; Edelman, A. Julia: A fast dynamic language for technical computing. arXiv 2012, arXiv:1209.5145.

- [22] Bezanson, J.; Edelman, A.; Karpinski, S.; Shah, V.B. Julia: A fresh approach to numerical computing. SIAM Rev. 2017, 59, 65–98.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).