Brain Connectivity Dynamics and Mittag–Leffler Synchronization in Asymmetric Complex Networks for a Class of Coupled Nonlinear Fractional-Order Memristive Neural Network System with Coupling Boundary Conditions

Abstract

1. Introduction and Mathematical Setting of the Problem

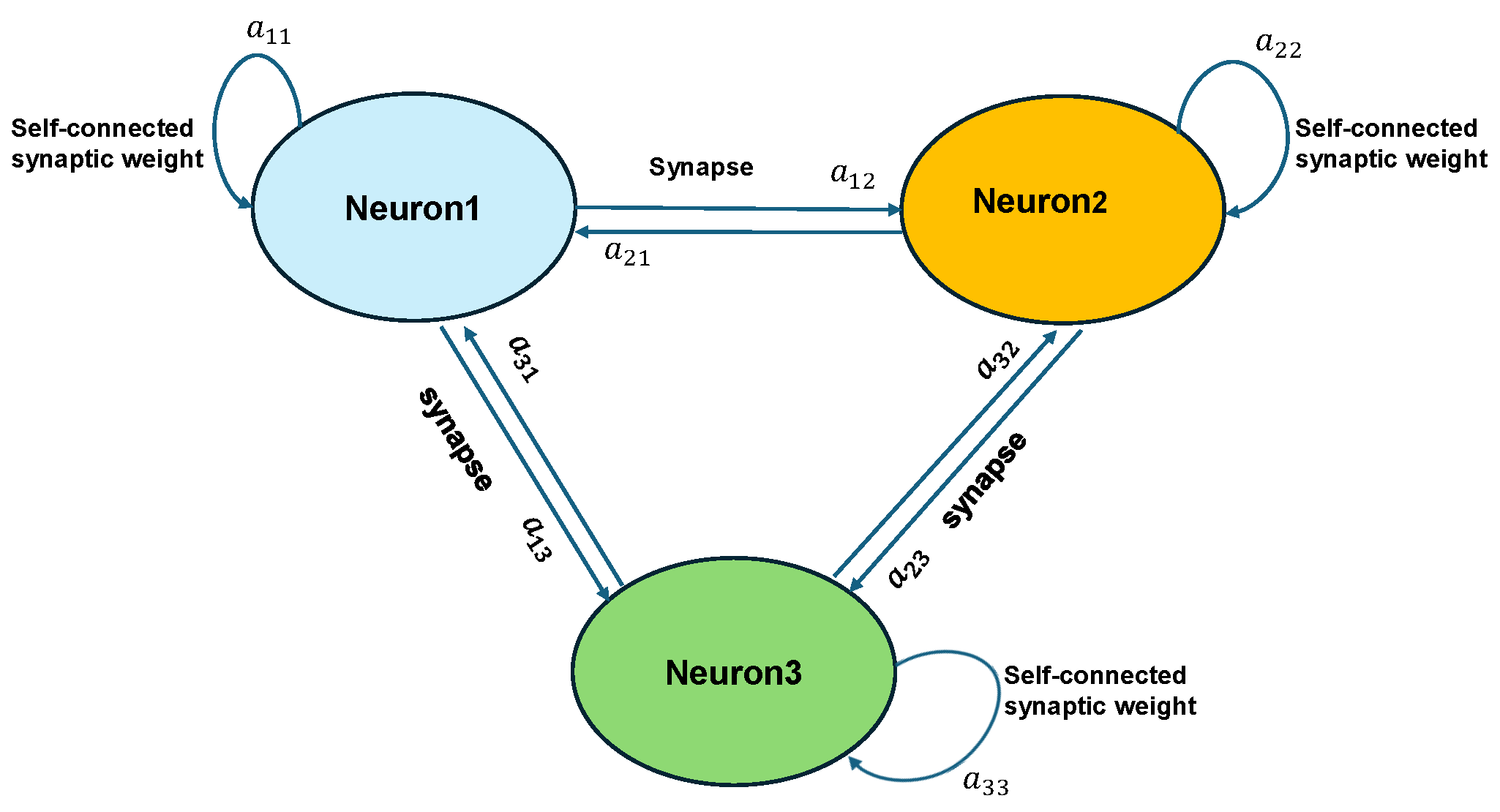

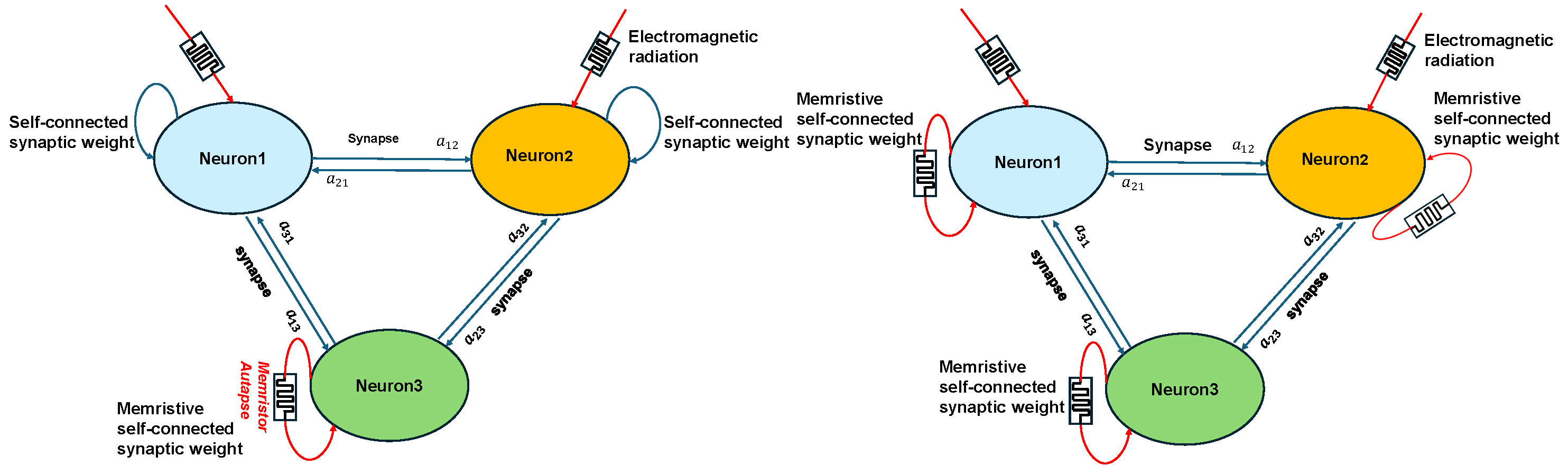

2. Formulation of Memristive-Based Neural Network Problem

- (HG) The matrix has non-negative off-diagonal elements and vanishing row and column sums.
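The structural condition in (HG) is easy to test for a concrete matrix. The following is a minimal sketch, assuming the matrix is given as a list of rows; the function name `satisfies_hg`, the tolerance `tol`, and the two example matrices are hypothetical and are not taken from the paper.

```python
from typing import Sequence


def satisfies_hg(G: Sequence[Sequence[float]], tol: float = 1e-12) -> bool:
    """Return True if G is square, has non-negative off-diagonal entries,
    and every row sum and every column sum vanishes (up to tol)."""
    n = len(G)
    if any(len(row) != n for row in G):
        return False
    # Off-diagonal entries must be non-negative.
    for i in range(n):
        for j in range(n):
            if i != j and G[i][j] < 0:
                return False
    rows_ok = all(abs(sum(G[i])) <= tol for i in range(n))
    cols_ok = all(abs(sum(G[i][j] for i in range(n))) <= tol for j in range(n))
    return rows_ok and cols_ok


# Directed 3-cycle: asymmetric, yet rows and columns both sum to zero.
G_cycle = [[-1.0, 1.0, 0.0],
           [0.0, -1.0, 1.0],
           [1.0, 0.0, -1.0]]

# Zero row sums only: the column sums are -1 and 1, so the check fails.
G_unbalanced = [[-1.0, 1.0],
                [0.0, 0.0]]

print(satisfies_hg(G_cycle), satisfies_hg(G_unbalanced))
```

The directed cycle illustrates that the condition does not force symmetry: the matrix only needs balanced rows and columns.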

3. Assumptions, Notations, and Some Fundamental Inequalities

- (i) Hölder’s inequality: , where

- (ii) Young’s inequality ( and ):

- (iii) Minkowski’s integral inequality ():
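The formulas attached to the three inequalities above are missing from this copy. For orientation, their standard textbook forms are recalled below; the symbols ($\Omega$, $\Omega_1$, $\Omega_2$, $p$, $q$, $\varepsilon$, $a$, $b$, $F$) are assumptions made for this sketch and may differ from the notation and the exact variants used in the paper.

```latex
% (i) Hoelder's inequality, for conjugate exponents p, q in [1, \infty]:
\int_{\Omega} |f(x)\,g(x)|\,dx \;\le\; \|f\|_{L^{p}(\Omega)}\,\|g\|_{L^{q}(\Omega)},
\qquad \text{where } \frac{1}{p}+\frac{1}{q}=1 .

% (ii) Young's inequality with a parameter, for a, b \ge 0, \varepsilon > 0,
%      1 < p < \infty and 1/p + 1/q = 1:
a\,b \;\le\; \frac{\varepsilon}{p}\,a^{p} \;+\; \frac{\varepsilon^{-q/p}}{q}\,b^{q} .

% (iii) Minkowski's integral inequality, for p \ge 1:
\left( \int_{\Omega_1} \left| \int_{\Omega_2} F(x,y)\,dy \right|^{p} dx \right)^{1/p}
\;\le\;
\int_{\Omega_2} \left( \int_{\Omega_1} |F(x,y)|^{p}\,dx \right)^{1/p} dy .
```

Form (ii) follows from the classical case $ab \le a^{p}/p + b^{q}/q$ by replacing $a$ with $\varepsilon^{1/p}a$ and $b$ with $\varepsilon^{-1/p}b$.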

- The nonlinear scalar activation function , which can be taken as with as a decreasing function of the second variable, satisfies ():

- (i)

- ;

- (ii)

- ;

- (iii)

- and ;

- (iv)

- ;

- (v)

- and .

, , and , for , are positive constants, , for , are given functions, and is the primitive function of .

- The nonlinear scalar activation functions are bounded with and satisfy -Lipschitz condition, i.e.,

- (vi)

- and , , with and .

4. Well-Posedness of the System

5. Dissipative Dynamics of the Solution

6. Synchronization Phenomena

6.1. Uniform Boundedness in

- (i)

- ;

- (ii)

6.2. Local Complete Synchronization

6.3. Master–Slave Synchronization via Pinning Control

6.3.1. Feedback Control

- First form:

- where (for ) and (for ) are arbitrary positive constants, and , for . It is easy to verify that satisfies condition (44) (for ).

- Second form:

- where if and otherwise, , (for ) and (for ) are arbitrary positive constants, and , for . For this example, we have if and then Consequently, we obtain that

6.3.2. Adaptive Control

7. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

- Step 1. We first show that, for every N, the system (A1) admits a local solution. The system (A1) is equivalent to an initial value problem for a system of nonlinear fractional differential equations for functions , in which the nonlinear term is a Carathéodory function. The existence of a local absolutely continuous solution on an interval , with , is ensured by the standard FODE theory (see, e.g., [69,70]). Thus, we have a local solution of (A1) on .

- Step 2. We next derive a priori estimates for functions , which entail that , by iteratively applying Step 1. For simplicity, in the next step, we omit the “ ” on T. Now, we set where , and are absolutely continuous coefficients.

- Step 3. We can now show the existence of weak solutions to (1). From results (A12), (A15), (A20) and (A21), Theorem A1 and a compactness argument, it follows that there exist and such that there exists a subsequence of , also denoted by , such that First, we show that exists in the weak sense and that . Indeed, we take and (then ).

Appendix B

- For , the forward (respectively, backward) γth-order Riemann–Liouville and Caputo fractional derivatives of f converge to the classical derivative (respectively, to ). Moreover, the γth-order Riemann–Liouville fractional derivative of a constant function (with k a constant) is not 0, since

- We can show that the difference between the Riemann–Liouville and Caputo fractional derivatives depends only on the values of f at the endpoints. More precisely, for , we have ( and )
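The two remarks above can be checked numerically in the simplest case. For $0<\gamma<1$ and lower limit $a$, the standard closed form of the forward Riemann–Liouville derivative of the constant function $f\equiv k$ is $k\,(t-a)^{-\gamma}/\Gamma(1-\gamma)$, whereas its Caputo derivative vanishes, so here the gap between the two derivatives is exactly the endpoint term $f(a)\,(t-a)^{-\gamma}/\Gamma(1-\gamma)$. The sketch below is not taken from the paper: the function names, the lower limit `a`, and the step count `n` are illustrative assumptions, and the Grünwald–Letnikov sum is used only as an independent numerical cross-check of the closed form.

```python
import math


def rl_derivative_of_constant(k: float, t: float, gamma: float, a: float = 0.0) -> float:
    """Closed form of the forward Riemann-Liouville derivative of f = k at t > a,
    for an order 0 < gamma < 1."""
    if not (0.0 < gamma < 1.0) or t <= a:
        raise ValueError("need 0 < gamma < 1 and t > a")
    return k * (t - a) ** (-gamma) / math.gamma(1.0 - gamma)


def gl_derivative_of_constant(k: float, t: float, gamma: float,
                              a: float = 0.0, n: int = 2000) -> float:
    """Gruenwald-Letnikov approximation of the same derivative with n uniform steps."""
    h = (t - a) / n
    weight = 1.0      # w_0 = 1
    total = weight
    for j in range(1, n + 1):
        # w_j = (-1)^j * binom(gamma, j), computed by the usual recurrence.
        weight *= 1.0 - (gamma + 1.0) / j
        total += weight
    # f is constant, so every sample f(t - j*h) equals k.
    return k * total / h ** gamma


exact = rl_derivative_of_constant(2.0, 1.0, 0.5)
approx = gl_derivative_of_constant(2.0, 1.0, 0.5)
print(exact, approx)
```

For $k=2$, $\gamma=1/2$, $t=1$, $a=0$ the closed form equals $2/\sqrt{\pi}$, which is nonzero, and the Grünwald–Letnikov sum approaches this value as `n` grows.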

- (i)

- into , for any ;

- (ii)

- into , for any and ;

- (iii)

- into , for any ;

- (iii)

- into , for any ;

- (iv)

- into .

- (i)

- if f is an -function on with values in X and g is an -function on with values in X, then

- (ii)

- if and , then

- (i)

- (ii)

- (iii)

References

1. Sporns, O.; Zwi, J.D. The small world of the cerebral cortex. Neuroinformatics 2004, 2, 145–162.

2. Barrat, A.; Barthelemy, M.; Vespignani, A. Dynamical Processes on Complex Networks; Cambridge University Press: Cambridge, UK, 2008.

3. Belmiloudi, A. Mathematical modeling and optimal control problems in brain tumor targeted drug delivery strategies. Int. J. Biomath. 2017, 10, 1750056.

4. Venkadesh, S.; Horn, J.D.V. Integrative Models of Brain Structure and Dynamics: Concepts, Challenges, and Methods. Front. Neurosci. 2021, 15, 752332.

5. Craddock, R.C.; Jbabdi, S.; Yan, C.G.; Vogelstein, J.T.; Castellanos, F.X.; Di Martino, A.; Kelly, C.; Heberlein, K.; Colcombe, S.; Milham, M.P. Imaging human connectomes at the macroscale. Nat. Methods 2013, 10, 524–539.

6. Deco, G.; McIntosh, A.R.; Shen, K.; Hutchison, R.M.; Menon, R.S.; Everling, S.; Hagmann, P.; Jirsa, V.K. Identification of optimal structural connectivity using functional connectivity and neural modeling. J. Neurosci. 2014, 34, 7910–7916.

7. Damascelli, M.; Woodward, T.S.; Sanford, N.; Zahid, H.B.; Lim, R.; Scott, A.; Kramer, J.K. Multiple functional brain networks related to pain perception revealed by fMRI. Neuroinformatics 2022, 20, 155–172.

8. Hipp, J.F.; Engel, A.K.; Siegel, M. Oscillatory synchronization in large-scale cortical networks predicts perception. Neuron 2011, 69, 387–396.

9. Varela, F.; Lachaux, J.P.; Rodriguez, E.; Martinerie, J. The brainweb: Phase synchronization and large-scale integration. Nat. Rev. Neurosci. 2001, 2, 229–239.

10. Brookes, M.J.; Woolrich, M.; Luckhoo, H.; Price, D.; Hale, J.R.; Stephenson, M.C.; Barnes, G.R.; Smith, S.M.; Morris, P.G. Investigating the electrophysiological basis of resting state networks using magnetoencephalography. Proc. Natl. Acad. Sci. USA 2011, 108, 16783–16788.

11. Das, S.; Maharatna, K. Fractional dynamical model for the generation of ECG like signals from filtered coupled Van-der Pol oscillators. Comput. Methods Programs Biomed. 2013, 122, 490–507.

12. Schirner, M.; Kong, X.; Yeo, B.T.; Deco, G.; Ritter, P. Dynamic primitives of brain network interaction. NeuroImage 2022, 250, 118928.

13. Axmacher, N.; Mormann, F.; Fernández, G.; Elger, C.E.; Fell, J. Memory formation by neuronal synchronization. Brain Res. Rev. 2006, 52, 170–182.

14. Breakspear, M. Dynamic models of large-scale brain activity. Nat. Neurosci. 2017, 20, 340–352.

15. Lundstrom, B.N.; Higgs, M.H.; Spain, W.J.; Fairhall, A.L. Fractional differentiation by neocortical pyramidal neurons. Nat. Neurosci. 2008, 11, 1335–1342.

16. Belmiloudi, A. Dynamical behavior of nonlinear impulsive abstract partial differential equations on networks with multiple time-varying delays and mixed boundary conditions involving time-varying delays. J. Dyn. Control Syst. 2015, 21, 95–146.

17. Gilding, B.H.; Kersner, R. Travelling Waves in Nonlinear Diffusion-Convection Reaction; Birkhäuser: Basel, Switzerland, 2012.

18. Kondo, S.; Miura, T. Reaction-diffusion model as a framework for understanding biological pattern formation. Science 2010, 329, 1616–1620.

19. Babiloni, C.; Lizio, R.; Marzano, N.; Capotosto, P.; Soricelli, A.; Triggiani, A.I.; Cordone, S.; Gesualdo, L.; Del Percio, C. Brain neural synchronization and functional coupling in Alzheimer’s disease as revealed by resting state EEG rhythms. Int. J. Psychophysiol. 2016, 103, 88–102.

20. Lehnertz, K.; Bialonski, S.; Horstmann, M.T.; Krug, D.; Rothkegel, A.; Staniek, M.; Wagner, T. Synchronization phenomena in human epileptic brain networks. J. Neurosci. Methods 2009, 183, 42–48.

21. Schnitzler, A.; Gross, J. Normal and pathological oscillatory communication in the brain. Nat. Rev. Neurosci. 2005, 6, 285–296.

22. Touboul, J.D.; Piette, C.; Venance, L.; Ermentrout, G.B. Noise-induced synchronization and antiresonance in interacting excitable systems: Applications to deep brain stimulation in Parkinson’s disease. Phys. Rev. X 2020, 10, 011073.

23. Uhlhaas, P.J.; Singer, W. Neural synchrony in brain disorders: Relevance for cognitive dysfunctions and pathophysiology. Neuron 2006, 52, 155–168.

24. Bestmann, S. (Ed.) Computational Neurostimulation; Elsevier: Amsterdam, The Netherlands, 2015.

25. Chua, L.O. Memristor-the missing circuit element. IEEE Trans. Circuit Theory 1971, 18, 507–519.

26. Lin, H.; Wang, C.; Tan, Y. Hidden extreme multistability with hyperchaos and transient chaos in a Hopfield neural network affected by electromagnetic radiation. Nonlinear Dyn. 2020, 99, 2369–2386.

27. Njitacke, Z.T.; Kengne, J.; Fotsin, H.B. A plethora of behaviors in a memristor based Hopfield neural networks (HNNs). Int. J. Dyn. Control 2019, 7, 36–52.

28. Farnood, M.B.; Shouraki, S.B. Memristor-based circuits for performing basic arithmetic operations. Procedia Comput. Sci. 2011, 3, 128–132.

29. Jo, S.H.; Chang, T.; Ebong, I.; Bhadviya, B.B.; Mazumder, P.; Lu, W. Nanoscale memristor device as synapse in neuromorphic systems. Nano Lett. 2010, 10, 1297–1301.

30. Snider, G.S. Cortical computing with memristive nanodevices. SciDAC Rev. 2008, 10, 58–65.

31. Anbalagan, P.; Ramachandran, R.; Alzabut, J.; Hincal, E.; Niezabitowski, M. Improved results on finite-time passivity and synchronization problem for fractional-order memristor-based competitive neural networks: Interval matrix approach. Fractal Fract. 2022, 6, 36.

32. Bao, G.; Zeng, Z.G.; Shen, Y.J. Region stability analysis and tracking control of memristive recurrent neural network. Neural Netw. 2018, 98, 51–58.

33. Chen, J.; Chen, B.; Zeng, Z. O(t-α)-synchronization and Mittag-Leffler synchronization for the fractional-order memristive neural networks with delays and discontinuous neuron activations. Neural Netw. 2018, 100, 10–24.

34. Rakkiyappan, R.; Sivaranjani, K.; Velmurugan, G. Passivity and passification of memristor-based complex-valued recurrent neural networks with interval time-varying delays. Neurocomputing 2014, 144, 391–407.

35. Takembo, C.N.; Mvogo, A.; Ekobena Fouda, H.P.; Kofané, T.C. Effect of electromagnetic radiation on the dynamics of spatiotemporal patterns in memristor-based neuronal network. Nonlinear Dyn. 2018, 95, 1067–1078.

36. Tu, Z.; Wang, D.; Yang, X.; Cao, J. Lagrange stability of memristive quaternion-valued neural networks with neutral items. Neurocomputing 2020, 399, 380–389.

37. Zhu, S.; Bao, H. Event-triggered synchronization of coupled memristive neural networks. Appl. Math. Comput. 2022, 415, 126715.

38. Baleanu, D.; Lopes, A.M. (Eds.) Applications in engineering, life and social sciences. In Handbook of Fractional Calculus with Applications; De Gruyter: Berlin, Germany, 2019.

39. Belmiloudi, A. Cardiac memory phenomenon, time-fractional order nonlinear system and bidomain-torso type model in electrocardiology. AIMS Math. 2021, 6, 821–867.

40. Hilfer, R. Applications of Fractional Calculus in Physics; World Scientific: Singapore, 2000.

41. Maheswari, M.L.; Shri, K.S.; Sajid, M. Analysis on existence of system of coupled multifractional nonlinear hybrid differential equations with coupled boundary conditions. AIMS Math. 2024, 9, 13642–13658.

42. Magin, R.L. Fractional calculus models of complex dynamics in biological tissues. Comput. Math. Appl. 2010, 59, 1586–1593.

43. West, B.J.; Turalska, M.; Grigolini, P. Networks of Echoes: Imitation, Innovation and Invisible Leaders; Springer: Berlin/Heidelberg, Germany, 2014.

44. Caputo, M. Linear models of dissipation whose Q is almost frequency independent. II. Fract. Calc. Appl. Anal. 2008, 11, 414. Reprinted from Geophys. J. R. Astr. Soc. 1967, 13, 529–539.

45. Ermentrout, G.B.; Terman, D.H. Mathematical Foundations of Neuroscience; Springer: Berlin/Heidelberg, Germany, 2010.

46. Izhikevich, E.M. Dynamical Systems in Neuroscience; The MIT Press: Cambridge, MA, USA, 2007.

47. Osipov, G.V.; Kurths, J.; Zhou, C. Synchronization in Oscillatory Networks; Springer: Berlin/Heidelberg, Germany, 2007.

48. Wu, C.W. Synchronization in Complex Networks of Nonlinear Dynamical Systems; World Scientific: Singapore, 2007.

49. Ambrosio, B.; Aziz-Alaoui, M.; Phan, V.L. Large time behaviour and synchronization of complex networks of reaction–diffusion systems of FitzHugh-Nagumo type. IMA J. Appl. Math. 2019, 84, 416–443.

50. Ding, K.; Han, Q.-L. Synchronization of two coupled Hindmarsh-Rose neurons. Kybernetika 2015, 51, 784–799.

51. Huang, Y.; Hou, J.; Yang, E. Passivity and synchronization of coupled reaction-diffusion complex-valued memristive neural networks. Appl. Math. Comput. 2020, 379, 125271.

52. Miranville, A.; Cantin, G.; Aziz-Alaoui, M.A. Bifurcations and synchronization in networks of unstable reaction–diffusion system. J. Nonlinear Sci. 2021, 6, 44.

53. Yang, X.; Cao, J.; Yang, Z. Synchronization of coupled reaction-diffusion neural networks with time-varying delays via pinning-impulsive controllers. SIAM J. Contr. Optim. 2013, 51, 3486–3510.

54. You, Y. Exponential synchronization of memristive Hindmarsh–Rose neural networks. Nonlinear Anal. Real World Appl. 2023, 73, 103909.

55. Hymavathi, M.; Ibrahim, T.F.; Ali, M.S.; Stamov, G.; Stamova, I.; Younis, B.A.; Osman, K.I. Synchronization of fractional-order neural networks with time delays and reaction-diffusion terms via pinning control. Mathematics 2022, 10, 3916.

56. Li, W.; Gao, X.; Li, R. Dissipativity and synchronization control of fractional-order memristive neural networks with reaction-diffusion terms. Math. Methods Appl. Sci. 2019, 42, 7494–7505.

57. Wu, X.; Liu, S.; Wang, H.; Wang, Y. Stability and pinning synchronization of delayed memristive neural networks with fractional-order and reaction–diffusion terms. ISA Trans. 2023, 136, 114–125.

58. Tonnesen, J.; Hrabetov, S.; Soria, F.N. Local diffusion in the extracellular space of the brain. Neurobiol. Dis. 2023, 177, 105981.

59. Adams, R.A. Sobolev Spaces; Academic Press: New York, NY, USA, 1975.

60. Ern, A.; Guermond, J.L. Finite Elements I: Approximation and Interpolation, Texts in Applied Mathematics; Springer: Cham, Switzerland, 2021.

61. Belmiloudi, A. Stabilization, Optimal and Robust Control: Theory and Applications in Biological and Physical Sciences; Springer: Berlin/Heidelberg, Germany, 2008.

62. Belykh, I.; Belykh, V.N.; Hasler, M. Synchronization in asymmetrically coupled networks with node balance. Chaos 2006, 16, 015102.

63. Ye, H.; Gao, J.; Ding, Y. A generalized Gronwall inequality and its application to a fractional differential equation. J. Math. Anal. Appl. 2007, 328, 1075–1081.

64. Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and Applications of Fractional Differential Equations; Elsevier: Amsterdam, The Netherlands, 2006.

65. Gorenflo, R.; Kilbas, A.A.; Mainardi, F.; Rogosin, S.V. Mittag-Leffler Functions: Related Topics and Applications; Springer: Berlin/Heidelberg, Germany, 2014.

66. Chueshov, I.D. Introduction to the Theory of Infinite-Dimensional Dissipative Systems; ACTA Scientific Publishing House: Kharkiv, Ukraine, 2002.

67. Bauer, F.; Atay, F.M.; Jost, J. Synchronization in time-discrete networks with general pairwise coupling. Nonlinearity 2009, 22, 2333–2351.

68. Li, L.; Liu, J.G. A generalized definition of Caputo derivatives and its application to fractional ODEs. SIAM J. Math. Anal. 2018, 50, 2867–2900.

69. Diethelm, K.; Ford, N.J. Analysis of fractional differential equations. J. Math. Anal. Appl. 2002, 265, 229–248.

70. Kubica, A.; Yamamoto, M. Initial-boundary value problems for fractional diffusion equations with time-dependent coefficients. Fract. Calc. Appl. Anal. 2018, 21, 276–311.

71. Hardy, G.H.; Littlewood, J.E. Some properties of fractional integrals I. Math. Z. 1928, 27, 565–606.

72. Zhou, Y. Basic Theory of Fractional Differential Equations; World Scientific: Singapore, 2014.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Belmiloudi, A. Brain Connectivity Dynamics and Mittag–Leffler Synchronization in Asymmetric Complex Networks for a Class of Coupled Nonlinear Fractional-Order Memristive Neural Network System with Coupling Boundary Conditions. Axioms 2024, 13, 440. https://doi.org/10.3390/axioms13070440
