Statistics for Continuous Time Markov Chains, a Short Review

Abstract

1. Introduction

- In Section 2, we mention the main concepts and results on continuous time Markov chains that provide a context and reference for the remainder of this text.

- Section 3, dealing with homogeneous Markov chains, is devoted to a brief presentation of the most important ideas on statistical inference for these chains, mainly for those having a finite state space.

- Section 4 addresses the case of nonhomogeneous Markov chains and, besides referencing several works on the subject, gives some details of two procedures that enable the determination of the parameters of the intensity matrix: the procedure termed calibration covers the case where the departure point is some family of discrete time transition probability matrices with numerical entries, and the procedure termed estimation considers data composed of the trajectories of a chain with an absorbing state and with intensities of arbitrary functional form depending on parameters.

- In Section 5, we comment on references dealing with alternative estimation or calibration procedures for Markov chains.

- In Section 6, we present some conclusions drawn from this review.

2. Definitions, Notations and Results on Continuous Time Markov Chains


3. Homogeneous Markov Chains

- 1. The trajectories of the chain are right continuous step functions; the transition probabilities satisfy Formula (3) and the intensities satisfy Formula (4) stated in Theorem 2.

- 2. The set does not depend on θ; moreover, the matrix has rank d for all θ.

- 3. For each θ, the Markov chain has only one ergodic set and there are no transient states.

- (i) With the limit being taken in probability,

- (ii) With the limit being taken in probability,

- (iii) With the limit being taken in law (denoted by ):
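The simplest case is the one in which each off-diagonal intensity is its own parameter. There, the maximum likelihood estimator for a finite-state homogeneous chain observed continuously on [0, T] has the well-known closed form q̂_ij = N_ij(T) / R_i(T) for i ≠ j. Here N_ij(T) counts the observed i → j transitions and R_i(T) is the total time spent in state i; the symbols are our notation. The following minimal pure-Python sketch simulates one long path and applies this estimator. The generator, horizon, seed and function names are illustrative assumptions.

```python
import random

def simulate_homogeneous(Q, x0, horizon, rng):
    """Simulate a finite-state homogeneous chain with generator Q (list of rows)
    on [0, horizon]; returns (state, sojourn) pairs, the last one censored."""
    path, x, t = [], x0, 0.0
    d = len(Q)
    while True:
        rate = -Q[x][x]
        hold = rng.expovariate(rate) if rate > 0 else float("inf")
        if t + hold >= horizon:              # censor the last sojourn at the horizon
            path.append((x, horizon - t))
            return path
        path.append((x, hold))
        t += hold
        # next state j != x is chosen with probability q_xj / q_x
        others = [j for j in range(d) if j != x]
        x = rng.choices(others, weights=[Q[x][j] for j in others])[0]

def mle_generator(path, d):
    """MLE q_ij = N_ij / R_i: observed i -> j transitions over total time in i."""
    N = [[0] * d for _ in range(d)]          # transition counts
    R = [0.0] * d                            # total sojourn time per state
    for k, (x, hold) in enumerate(path):
        R[x] += hold
        if k + 1 < len(path):                # the censored last sojourn has no jump
            N[x][path[k + 1][0]] += 1
    Q_hat = [[0.0] * d for _ in range(d)]
    for i in range(d):
        if R[i] > 0:
            for j in range(d):
                if j != i:
                    Q_hat[i][j] = N[i][j] / R[i]
            Q_hat[i][i] = -sum(Q_hat[i])     # diagonal: minus the off-diagonal sum
    return Q_hat

Q = [[-1.0, 0.6, 0.4], [0.5, -1.5, 1.0], [0.2, 0.3, -0.5]]
path = simulate_homogeneous(Q, 0, 5000.0, random.Random(1))
Q_hat = mle_generator(path, 3)
```

For a long observation window, Q_hat should be close to Q. A parametric family q_ij(θ) would instead maximise the same likelihood over θ.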

4. Nonhomogeneous Markov Chains

- Generally, we try to determine the intensities (see Definition 3) that generate the transition probabilities (see Definition 2), following the classical and very successful modelling approach that amounts to finding a differential equation model for the phenomena, in this case, the Kolmogorov equations. In actuarial mathematics, this methodology has been referred to as the Transition Intensity Approach (TIA) (see [48] and…).

- The intensities may have arbitrary functional forms depending on parameters that must be fitted to the data, either by estimation or by calibration: linear, piecewise constant (see [49] (p. 42)), polynomial, or exponential of Gompertz, Makeham or Gompertz–Makeham type (see [49] (pp. 24–25) or [50] (pp. 205–206)), as used in health insurance and long-term care modelling.

- The characteristics of the available observational data, together with the general (most often qualitative) properties expected from the model, determine both the choice of the modelling approach and, when modelling with the Kolmogorov equations, the choice of the functional form of the intensities and of the parameters to be estimated or calibrated.
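To make the Transition Intensity Approach concrete, the sketch below (ours, not the authors') does two things. First, it defines intensities of Gompertz form B c^x and Makeham form A + B c^x, and a GM(r, s) form in the convention we assume here: a polynomial of degree r − 1 plus the exponential of a polynomial of degree s − 1, after Forfar, McCutcheon and Wilkie [58]. Second, it computes transition probabilities by integrating the forward Kolmogorov equation ∂P(s,t)/∂t = P(s,t) Q(t), with P(s,s) = I, using a fixed-step fourth-order Runge–Kutta scheme. The two-state alive/dead model, the parameter values and all function names are hypothetical.

```python
import math
import numpy as np

def gompertz(x, B, c):
    """Gompertz intensity B * c**x."""
    return B * c ** x

def makeham(x, A, B, c):
    """Makeham intensity: a constant A added to a Gompertz term."""
    return A + B * c ** x

def gm(x, alphas, betas):
    """GM(r, s) intensity with r = len(alphas), s = len(betas):
    a polynomial part plus the exponential of a polynomial."""
    poly = sum(a * x ** k for k, a in enumerate(alphas))
    expo = math.exp(sum(b * x ** k for k, b in enumerate(betas))) if betas else 0.0
    return poly + expo

def forward_kolmogorov(Q, s, t, steps=1000):
    """Solve dP(s,u)/du = P(s,u) Q(u), P(s,s) = I, on [s, t] with classical RK4.
    Q is a callable returning the d x d intensity matrix at time u."""
    P = np.eye(Q(s).shape[0])
    h = (t - s) / steps
    u = s
    for _ in range(steps):
        k1 = P @ Q(u)
        k2 = (P + 0.5 * h * k1) @ Q(u + 0.5 * h)
        k3 = (P + 0.5 * h * k2) @ Q(u + 0.5 * h)
        k4 = (P + h * k3) @ Q(u + h)
        P = P + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        u += h
    return P

def healthy_dead(B=5e-5, c=1.1):
    """Hypothetical model: state 0 alive, state 1 dead (absorbing),
    with a Gompertz mortality intensity at age u."""
    def Q(u):
        mu = gompertz(u, B, c)
        return np.array([[-mu, mu], [0.0, 0.0]])
    return Q

P = forward_kolmogorov(healthy_dead(), 60.0, 70.0)
```

For this model, the survival probability has the closed form p_00(60, 70) = exp(−B (c^70 − c^60) / ln c), which gives a check on the numerical solution. For real use, an adaptive ODE solver would be a natural replacement for the fixed-step loop.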

4.1. Calibration of Intensities of a Nonhomogeneous Markov Chain

- 1. For every fixed λ, the functions are measurable as functions of u.

- 2. For every fixed u, the functions are continuous as functions of λ.

- 3. There exists a locally integrable function , such that for all , , and , the following conditions are verified:

- 1. We know that there exists a probability transition matrix, with entries absolutely continuous in s and t, such that the conditions in Definition 2 and the Chapman–Kolmogorov equations in Theorems 1 and 2 are verified.

- 2. For each fixed , we can consider the associated loss function; then, for the corresponding optimisation problem, there exists a minimiser, the minimum value being unique but possibly attained at several distinct points.
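A minimal calibration sketch follows, built on assumptions of our own:

- The data are one-year transition matrices P_k ≈ P(k, k+1).
- The loss is the sum of squared Frobenius distances between these matrices and the ones generated by a parametric intensity matrix Q_λ(t).
- The minimisation is a plain grid search, standing in for a proper optimiser.

The three-state healthy/ill/dead model, its fixed constants and the function names are hypothetical. Because the grid search returns the first minimiser found, it also illustrates that the minimum value may be attained at several points.

```python
import itertools
import numpy as np

GAMMA, Q12 = 0.1, 0.2   # hypothetical fixed constants of the illustrative model

def intensity(t, a, b):
    """Hypothetical healthy (0) / ill (1) / dead (2) model with lam = (a, b)."""
    g = np.exp(GAMMA * t)
    q01, q02 = a * g, b * g
    return np.array([[-(q01 + q02), q01, q02],
                     [0.0, -Q12, Q12],
                     [0.0, 0.0, 0.0]])

def forward(a, b, s, t, steps=50):
    """RK4 solution of the forward equation dP/du = P Q(u) on [s, t], P(s, s) = I."""
    P, h, u = np.eye(3), (t - s) / steps, s
    for _ in range(steps):
        k1 = P @ intensity(u, a, b)
        k2 = (P + 0.5 * h * k1) @ intensity(u + 0.5 * h, a, b)
        k3 = (P + 0.5 * h * k2) @ intensity(u + 0.5 * h, a, b)
        k4 = (P + h * k3) @ intensity(u + h, a, b)
        P = P + (h / 6) * (k1 + 2 * k2 + 2 * k3 + k4)
        u += h
    return P

def loss(params, observed):
    """Sum over the periods [k, k+1] of squared Frobenius distances."""
    a, b = params
    return sum(np.sum((Pk - forward(a, b, k, k + 1)) ** 2)
               for k, Pk in enumerate(observed))

def calibrate(observed, grid_a, grid_b):
    """Grid-search minimiser of the loss (a stand-in for a proper optimiser)."""
    return min(itertools.product(grid_a, grid_b), key=lambda p: loss(p, observed))

# synthetic "observed" matrices generated from known parameters (a, b) = (0.05, 0.02)
observed = [forward(0.05, 0.02, k, k + 1) for k in range(5)]
a_hat, b_hat = calibrate(observed, np.linspace(0.01, 0.10, 10), np.linspace(0.005, 0.05, 10))
```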

4.2. Estimation of Nonhomogeneous Markov Chains

- Initially, the intensities are assumed to be constant on each interval of a partition of the time interval under study; each interval may cover one year when the age of the subject matters, or a period of more than a year when the variation of the intensities over that period is assumed to be negligible. The estimation is then performed on each interval using the effective maximum likelihood methods for homogeneous continuous time Markov chains (see Section 3); for this first step, see [56] (pp. 683–690) for details and examples of applications.

- Using additional data on the population at each specific age, such as gender, smoking habits, weight and exercise habits, the intensity parameters are then fitted by means of generalised linear models; this complementary methodology is termed graduation of the intensities. See [57] (pp. 126–128) and [58,59] for detailed explanations of graduation and [60,61] for examples of applications.
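The two steps can be sketched as follows, assuming occurrences and exposures are available per age band.

1. Piecewise-constant maximum likelihood gives the crude rate occurrences / exposure on each band.
2. Graduation fits a Poisson generalised linear model with log link and offset log(exposure), here by iteratively reweighted least squares (IRLS).

Only age enters the linear predictor in this sketch. Covariates such as gender or smoking habits would be extra columns of the design matrix. Function names and the synthetic data are hypothetical.

```python
import numpy as np

def crude_rates(occurrences, exposures):
    """First step: piecewise-constant MLE per age band, occurrences / exposure."""
    return np.asarray(occurrences, float) / np.asarray(exposures, float)

def graduate_poisson_glm(ages, occurrences, exposures, iters=100, tol=1e-12):
    """Second step (graduation): Poisson GLM with log link and offset log(exposure),
    log mu(x) = b0 + b1 * x, fitted by IRLS; returns (b0, b1) on the age scale."""
    x = np.asarray(ages, float)
    y = np.asarray(occurrences, float)
    offset = np.log(np.asarray(exposures, float))
    xbar = x.mean()
    X = np.column_stack([np.ones_like(x), x - xbar])   # centred ages for stability
    beta = np.array([np.log(y.sum() / np.exp(offset).sum()), 0.0])
    for _ in range(iters):
        eta = X @ beta
        mu = np.exp(eta + offset)                      # expected occurrences
        z = eta + (y - mu) / mu                        # working response
        XtW = X.T * mu                                 # IRLS weights are mu for this link
        new = np.linalg.solve(XtW @ X, XtW @ z)
        done = np.max(np.abs(new - beta)) < tol
        beta = new
        if done:
            break
    return beta[0] - beta[1] * xbar, beta[1]           # undo the centring

ages = np.arange(60, 81)
E = np.full(ages.shape, 1000.0)                        # hypothetical exposures
D = E * np.exp(-10 + 0.1 * ages)                       # noise-free expected occurrences
b0, b1 = graduate_poisson_glm(ages, D, E)
```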

- 1. We define by induction the sequence of jump stopping times as follows; firstly, we set .

- 2. Then, the stopping time of the first jump, that is, the sojourn time in state i, has an exponential distribution function. This exponential distribution is a consequence of a general result on the distribution of the sojourn times of a continuous time Markov chain (see Theorem 2.3.15 in [26] (p. 221)).

- 3. Given that the process is in state i, at the first jump time it jumps to state j with the probability defined in Formula (5).

- 4. Given the first jump time and the state reached at that time, the stopping time of the second jump has, again, an exponential distribution function, and the state reached at the second jump is determined as in step 3.

- 5. We proceed inductively to define the stopping time of the third jump, and so on.
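The construction above can be sketched with the standard facts for a nonhomogeneous chain. Started in state i at time t, the sojourn T satisfies P(T > v) = exp(−∫_t^v q_i(u) du), with q_i = Σ_{j≠i} q_ij, and the next state is j with probability q_ij(T) / q_i(T). Several choices in this sketch are our own assumptions:

- Sojourns are drawn by inverting the integrated exit intensity on a time grid of step dt, which is an approximation.
- Intensities are supplied through a hypothetical callable rates(i, t).
- Trajectories are returned as alternating [state, sojourn, state, …] lists.

```python
import random

def simulate_path(rates, x0, horizon, rng, dt=1e-2):
    """Jump-chain construction of one trajectory started in state x0 at time 0.
    rates(i, t) -> {j: q_ij(t)} for j != i (an empty dict marks an absorbing state).
    Returns [state, sojourn, state, ...]: it ends with a state after absorption
    and with a sojourn when censored at the horizon."""
    path, x, t = [], x0, 0.0
    while True:
        if not rates(x, t):                       # absorbing state: trajectory ends
            path.append(x)
            return path
        target = rng.expovariate(1.0)             # invert v -> integral_t^v q_x(u) du
        acc, u = 0.0, t
        while acc < target:
            if u >= horizon:                      # censored before leaving x
                path.extend([x, horizon - t])
                return path
            acc += sum(rates(x, u + 0.5 * dt).values()) * dt   # midpoint rule
            u += dt
        q = rates(x, u)                           # jump at u: j w.p. q_xj(u) / q_x(u)
        targets = list(q)
        nxt = rng.choices(targets, weights=[q[j] for j in targets])[0]
        path.extend([x, u - t])
        x, t = nxt, u

# exit intensity q_01(t) = t (nonhomogeneous); state 1 absorbing
weibull = lambda i, t: {1: t} if i == 0 else {}
paths = [simulate_path(weibull, 0, 50.0, random.Random(k)) for k in range(1000)]
```

With q_01(t) = t, the first jump time has survival function exp(−t²/2), so the sample mean of the sojourns should be near √(π/2) ≈ 1.2533.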

- (i) Given a state i, we have to fit a distribution to the random times of the jumps. According to Formula (19), these times have an exponential distribution with the density given there.

- (ii) For every other state j, by using Formula (20), possibly with an approximation, we can obtain an approximation of .

- (iii) By using Formula (18) and the approximation obtained for , we can obtain an approximation for .

- (iv) Finally, we fit an intensity of a chosen functional form to .
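For reference, the standard identities behind steps (i)–(iv) are given below for a first sojourn that starts in state i at time s. The notation is assumed here and may differ from the one used elsewhere in the text: q_ij(t) denotes the intensities, q_i(t) = Σ_{j≠i} q_ij(t) the total intensity of leaving i, T the first jump time, and F_T, f_T its distribution function and density.

$$
\begin{aligned}
\mathbb{P}(T > t) &= \exp\Big(-\int_s^t q_i(u)\,du\Big), \qquad t \ge s,\\
f_T(t) &= q_i(t)\,\exp\Big(-\int_s^t q_i(u)\,du\Big),\\
q_i(t) &= \frac{f_T(t)}{1 - F_T(t)} = -\frac{d}{dt}\log\big(1 - F_T(t)\big),\\
\mathbb{P}(X_T = j \mid T = t) &= \frac{q_{ij}(t)}{q_i(t)}, \qquad\text{so}\qquad
q_{ij}(t) = \frac{f_T(t)}{1 - F_T(t)}\,\mathbb{P}(X_T = j \mid T = t).
\end{aligned}
$$

Estimating f_T and F_T (for instance, by kernel smoothing) together with the conditional jump probabilities therefore yields estimates of q_ij(t).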

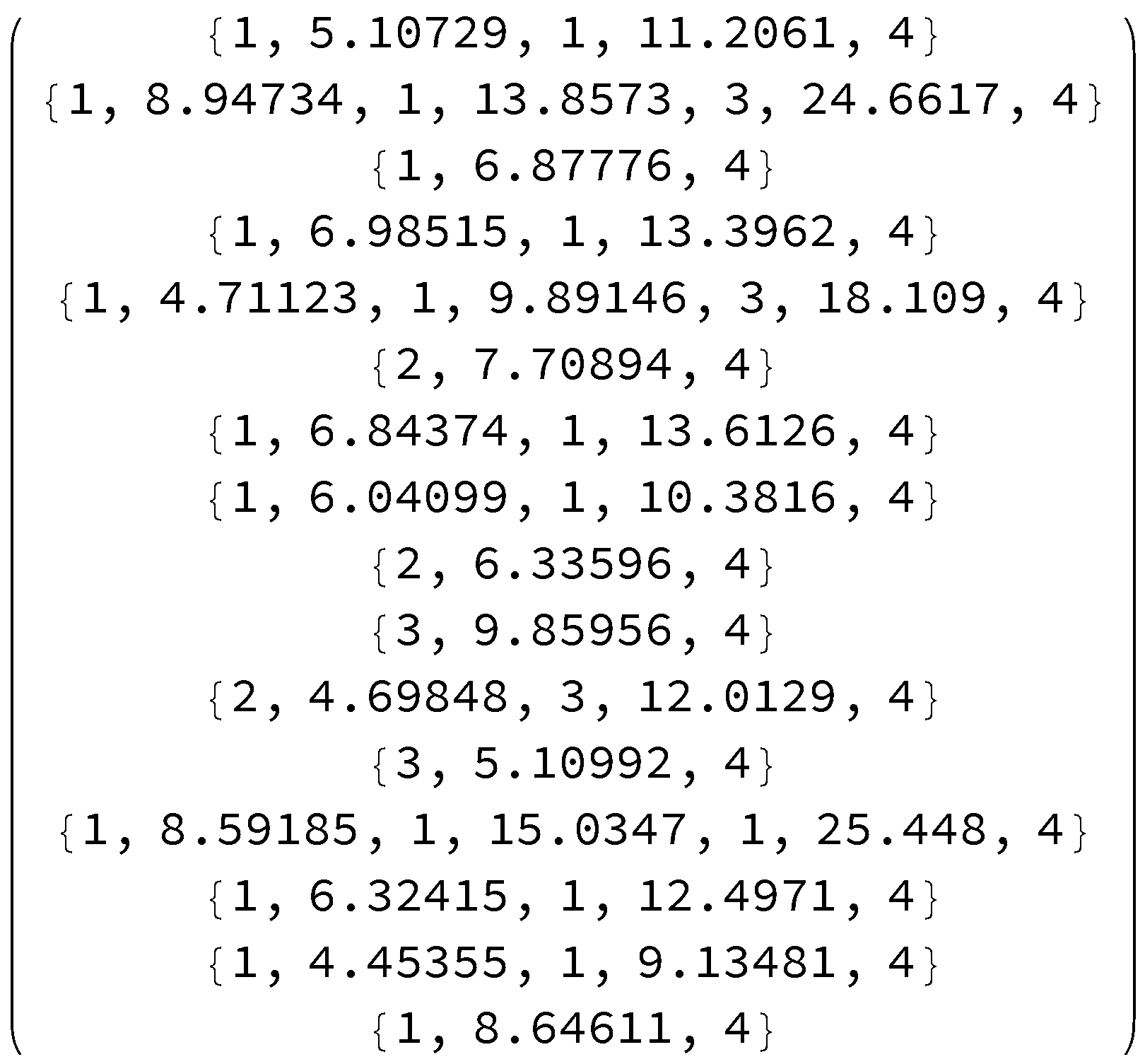

- Recall that an observed trajectory has the following structure: (first state, time spent in that state, second state, time spent in that state, third state…). In the finite set of trajectories available as real data, and under the hypothesis of an unbounded time horizon for the observations, the maximum length of the trajectories is finite. We select all the trajectories of length greater than 3 that start at state . If the next state is also , the time spent in the state (in this case, in state ) is the first part of the sample for obtaining . We then select all the trajectories of length greater than 5 for which the second state is ; this set of trajectories already contains the previously considered set of trajectories, and so, if the third state is also , the sum of the time spent in the first state and the time spent in the second state is the second part of the sample for obtaining . We repeat the procedure successively for all trajectories of length greater than 7, 9, 11, and so on, until the maximum length of the trajectories is attained, to obtain the full sample for the intensity .
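A compact way to collect such a sample is to scan each trajectory once. Each time an i → j jump occurs, we record the elapsed time since the start of observation, which is the sum of the preceding sojourn times. This sketch assumes that every trajectory starts at time 0 and is stored as an alternating list [state, sojourn, state, …]. The function name and the example data are hypothetical.

```python
def jump_times(trajectories, i, j):
    """Collect the absolute times of the observed jumps i -> j.
    Each trajectory is [state, sojourn, state, sojourn, ..., state] from time 0;
    the time of a jump is the sum of the sojourns preceding it."""
    sample = []
    for traj in trajectories:
        elapsed = 0.0
        # states sit at even positions, sojourn times at odd positions
        for k in range(0, len(traj) - 2, 2):
            elapsed += traj[k + 1]
            if traj[k] == i and traj[k + 2] == j:
                sample.append(elapsed)
    return sample

trajs = [[0, 1.5, 1, 2.0, 2], [1, 0.5, 0, 1.0, 1, 0.3, 2], [0, 4.0]]
sample_01 = jump_times(trajs, 0, 1)   # one jump 0 -> 1 at time 1.5 in each of the first two
```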

- Fit a smooth kernel distribution to the sample obtained for the intensity .
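A smooth kernel fit can be sketched with a Gaussian kernel and Silverman's rule-of-thumb bandwidth. Both are our own choices, since no kernel is specified here. The class provides the density, the distribution function and the ratio f(t) / (1 − F(t)), which estimates the exit intensity for a first sojourn. A Gaussian kernel leaks some mass below 0, so estimates near t = 0, and far in the right tail, should be read with care.

```python
import math
import statistics

class GaussianKDE:
    """Gaussian kernel estimate of a density and of its distribution function."""

    def __init__(self, sample, bandwidth=None):
        self.sample = list(sample)
        n = len(self.sample)
        if bandwidth is None:
            # Silverman's rule of thumb: 0.9 * min(sd, IQR / 1.34) * n^(-1/5)
            sd = statistics.stdev(self.sample)
            q1, _, q3 = statistics.quantiles(self.sample, n=4)
            spread = min(sd, (q3 - q1) / 1.34) or sd
            bandwidth = 0.9 * spread * n ** (-0.2)
        self.h = bandwidth

    def pdf(self, t):
        c = 1.0 / (len(self.sample) * self.h * math.sqrt(2 * math.pi))
        return c * sum(math.exp(-0.5 * ((t - x) / self.h) ** 2) for x in self.sample)

    def cdf(self, t):
        s = self.h * math.sqrt(2)
        return sum(0.5 * (1 + math.erf((t - x) / s)) for x in self.sample) / len(self.sample)

    def hazard(self, t):
        """Estimated intensity f(t) / (1 - F(t))."""
        return self.pdf(t) / max(1.0 - self.cdf(t), 1e-12)

# quantiles of an exponential law with rate 2 used as a deterministic "sample"
sample = [-math.log(1 - (k + 0.5) / 2000) / 2 for k in range(2000)]
kde = GaussianKDE(sample)
```

For this sample, kde.hazard(0.3) should be close to the constant rate 2.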

- Repeat the procedure used for getting the sample for , but this time selecting the transitions , that is, the transitions from state to state . Fit a smooth kernel distribution to this data.

- Now, we look for an estimate of given by Formulas (18) and (20). To that end, we round the sojourn times (say, to unity, in order to have enough observations) and then group all the observations of jumps from the first state according to this rounding. We then consider the observations of jumps towards state , which yields a relation whose left-most side is estimable from the observations by using the smooth kernel distributions.
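One reading of the grouping step can be sketched as follows. Jumps out of a fixed state i are recorded as (time, next state) pairs, and times are rounded to the nearest multiple of a unit. Within each group, the observed proportion of jumps going to j then estimates the conditional jump probability to j. Multiplied by an estimate of the exit intensity, this gives an estimate of q_ij at that time. The data layout and the function name are assumptions. Python's round uses round-half-to-even.

```python
from collections import Counter

def jump_proportions(jumps, j, unit=1.0):
    """jumps: (time, next_state) pairs for the jumps out of a fixed state i.
    Rounds the times to the nearest multiple of `unit` and returns, for each
    rounded time, the observed proportion of jumps that go to state j."""
    totals, hits = Counter(), Counter()
    for t, nxt in jumps:
        key = round(t / unit) * unit
        totals[key] += 1
        if nxt == j:
            hits[key] += 1
    return {k: hits[k] / totals[k] for k in sorted(totals)}

res = jump_proportions([(0.9, 1), (1.2, 2), (1.4, 1), (2.6, 1), (3.1, 2)], j=1)
```

In the example, the jumps at times 0.9, 1.2 and 1.4 fall in the group of time 1, two of them going to state 1.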

- Resorting to Formula (18), we can compute values for and fit a piecewise linear density. That is, using again Formula (18), since , we have that for an arbitrary time :

- We then consider a set of values of and fit the multidimensional parameter to these values, using a previously selected functional form.
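As an illustration of this last fitting step, suppose the selected functional form is Gompertz, q(t) = B e^{γt}, which is our assumption. The parameters can then be fitted to positive estimated values by ordinary least squares on the log scale. Nonlinear least squares on the original scale, or a likelihood-based fit, are reasonable alternatives. The function name and the data are hypothetical.

```python
import math

def fit_gompertz(times, values):
    """Least-squares fit of log q(t) = log B + gamma * t to positive intensity
    estimates; returns (B, gamma)."""
    n = len(times)
    ys = [math.log(v) for v in values]
    tbar, ybar = sum(times) / n, sum(ys) / n
    sxx = sum((t - tbar) ** 2 for t in times)
    sxy = sum((t - tbar) * (y - ybar) for t, y in zip(times, ys))
    gamma = sxy / sxx
    return math.exp(ybar - gamma * tbar), gamma

ts = list(range(10))
vals = [0.02 * math.exp(0.08 * t) for t in ts]   # noise-free illustrative values
B, g = fit_gompertz(ts, vals)
```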

- These procedures are to be repeated in order to obtain the parameters for and for .

- The intensities for are obtained in the usual way from the remaining intensities in the row with index i of the intensity matrix, the diagonal entry being minus their sum, so that each row sums to zero.
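This completion step is small but easy to get wrong in sign. A minimal sketch, with a hypothetical function name and values, sets each diagonal entry so that its row of the intensity matrix sums to zero.

```python
def complete_generator(off_diag):
    """Return a copy of the matrix with q_ii = -sum_{j != i} q_ij on the diagonal."""
    d = len(off_diag)
    Q = [row[:] for row in off_diag]
    for i in range(d):
        Q[i][i] = -sum(Q[i][j] for j in range(d) if j != i)
    return Q

Q = complete_generator([[0.0, 0.3, 0.1], [0.2, 0.0, 0.5], [0.0, 0.0, 0.0]])
```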

5. Comments on Some Additional References

- In [65], using the formalism of product integration (see [32,66] for expositions of the concept and [67] for an application to the integrated probability transition functions with fixed points of discontinuity), the authors propose an estimator of the transition probabilities of a nonhomogeneous Markov chain with a finite number of states in the presence of censoring, in particular when the processes are observed only part of the time. In a first step, an estimator of the integrated transition intensities is obtained using a multivariate counting process; in a second step, product integration is used to obtain the desired estimator of the transition probabilities, which has useful asymptotic properties. An application of this methodology, in which kernel smoothing is first used to estimate the transition intensities, is presented in [68]. An application of the product limit estimator to credit migration matrices is given in [4].
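The two-step construction can be sketched as the Aalen–Johansen estimator with s = 0. The first step forms the increments ΔÂ_ij(u) = ΔN_ij(u) / Y_i(u−) for i ≠ j and ΔÂ_ii(u) = −ΔN_i(u) / Y_i(u−), where Y_i(u−) is the number of subjects observed in state i just before u. The second step takes the product integral P̂(0, t) = Π_{u ≤ t} (I + ΔÂ(u)). The data conventions are our own assumptions:

- Trajectories are [state, sojourn, …] lists observed from time 0.
- An odd-length list ends in an absorbing state.
- An even-length list is right-censored at the end of its last sojourn.
- A subject censored at u still counts as at risk at u.

```python
from collections import defaultdict
import numpy as np

def aalen_johansen(trajectories, d, t_max):
    """Product-limit estimate of P(0, t_max) = prod_{u <= t_max} (I + dA(u))."""
    at_risk = np.zeros(d)                 # Y_i: subjects currently observed in state i
    jumps = defaultdict(list)             # time -> [(from, to), ...]
    censored = defaultdict(list)          # time -> [state, ...]
    for traj in trajectories:
        at_risk[traj[0]] += 1
        t = 0.0
        for k in range(1, len(traj), 2):
            t += traj[k]
            if k + 1 < len(traj):
                jumps[t].append((traj[k - 1], traj[k + 1]))
            else:
                censored[t].append(traj[k - 1])
    P = np.eye(d)
    for u in sorted(set(jumps) | set(censored)):
        if u > t_max:
            break
        if jumps[u]:
            dA = np.zeros((d, d))          # Nelson-Aalen increments at time u
            for i, j in jumps[u]:
                dA[i, j] += 1.0 / at_risk[i]
                dA[i, i] -= 1.0 / at_risk[i]
            P = P @ (np.eye(d) + dA)
        for i, j in jumps[u]:             # update the risk sets after time u
            at_risk[i] -= 1
            at_risk[j] += 1
        for i in censored[u]:
            at_risk[i] -= 1
    return P

trajs = [[0, 1.0, 1, 1.0, 2], [0, 2.5, 2], [0, 0.5, 1, 5.0], [0, 3.0, 1]]
P = aalen_johansen(trajs, 3, 2.2)
```

Without censoring before t_max, the first row reduces to the empirical proportions of subjects in each state at t_max, here (0.5, 0.25, 0.25).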

- The work [69] is an extension of Aalen’s work, referred to above, to the case where the state space has an arbitrary but finite number of both transient and absorbing states. A nonparametric product limit estimator is introduced and shown to be uniformly consistent.

- The Ph.D. thesis [70] develops a method, based on the Dynkin martingale, for estimating the parameters of a nonhomogeneous continuous time Markov chain discretely observed by Poisson sampling. The estimators are proven to be strongly consistent and asymptotically normal, and a simulation study compares their performance with that of the maximum likelihood estimators obtained from continuously observed trajectories.

- The authors of [71] propose a moment-type estimation procedure for the parameters of the infinitesimal generator of a time-homogeneous continuous time Markov process, a type of process that may serve, for instance, as a model of high frequency financial data. Several properties of the procedure are proved, such as strong consistency and asymptotic normality, and a characterisation of the standard errors is given.

- The article [72] also develops a calibration procedure: by solving a quadratic minimisation problem subject to linear constraints, it seeks a generator (that is, an intensity matrix) of a continuous time Markov chain whose transition probability matrix has a spectrum as close as possible to the spectrum of an estimated discrete time Markov chain transition probability matrix. If the chain is embeddable, the procedure returns the generator; if not, it returns a best approximation of the generator, in the sense of the minimisation problem solved. Besides tests with synthetic data, the procedure is applied to data from a time series generated by a model of large scale atmospheric flow.

- For the content of the work [73], the authors say: “In the present work, we focus on Bayesian inference for the partially observed CTMC without using latent variables; we use the likelihood function directly by evaluation of matrix exponentials and perform posterior inference via a Metropolis–Hastings approach, where the generator matrices are fully specified and not constrained”.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Christiansen, M.C. Multistate models in health insurance. AStA Adv. Stat. Anal. 2012, 96, 155–186.

- [2] Gill, R.D. On Estimating Transition Intensities of a Markov Process with Aggregate Data of a Certain Type: “Occurrences but No Exposures”. Scand. J. Stat. 1986, 13, 113–134.

- [3] Israel, R.B.; Rosenthal, J.S.; Wei, J.Z. Finding Generators for Markov Chains via Empirical Transition Matrices, with Applications to Credit Ratings. Math. Financ. 2001, 11, 245–265.

- [4] Lando, D.; Skødeberg, T.M. Analyzing rating transitions and rating drift with continuous observations. J. Bank. Financ. 2002, 26, 423–444.

- [5] Jafry, Y.; Schuermann, T. Measurement, estimation and comparison of credit migration matrices. J. Bank. Financ. 2004, 28, 2603–2639.

- [6] Inamura, Y. Estimating Continuous Time Transition Matrices from Discretely Observed Data; Bank of Japan Working Paper Series 06-E-7; Bank of Japan: Tokyo, Japan, 2006.

- [7] Möstel, L.; Pfeuffer, M.; Fischer, M. Statistical inference for Markov chains with applications to credit risk. Comput. Statist. 2020, 35, 1659–1684.

- [8] Bartholomew, D.J. Stochastic Models for Social Processes, 3rd ed.; Wiley Series in Probability and Mathematical Statistics; John Wiley & Sons, Ltd.: Chichester, UK, 1982; pp. xii+365.

- [9] Vassiliou, P.C.; Georgiou, A.C. Markov and Semi-Markov Chains, Processes, Systems, and Emerging Related Fields. Mathematics 2021, 9, 2490.

- [10] Iosifescu, M.; Tăutu, P. Stochastic Processes and Applications in Biology and Medicine. II: Models; Biomathematics, Editura Academiei, Bucharest; Springer: Berlin, Germany, 1973; Volume 4, p. 337.

- [11] Garg, L.; McClean, S.; Meenan, B.; Millard, P. Non-homogeneous Markov models for sequential pattern mining of healthcare data. IMA J. Manag. Math. 2009, 20, 327–344.

- [12] Wan, L.; Lou, W.; Abner, E.; Kryscio, R.J. A comparison of time-homogeneous Markov chain and Markov process multi-state models. Commun. Stat. Case Stud. Data Anal. Appl. 2016, 2, 92–100.

- [13] Chang, J.; Chan, H.K.; Lin, J.; Chan, W. Non-homogeneous continuous-time Markov chain with covariates: Applications to ambulatory hypertension monitoring. Stat. Med. 2023, 42, 1965–1980.

- [14] Norris, J.R. Markov Chains; Cambridge Series in Statistical and Probabilistic Mathematics; Cambridge University Press: Cambridge, UK, 1998; Volume 2, pp. xvi+237.

- [15] Resnick, S. Adventures in Stochastic Processes; Birkhäuser Boston, Inc.: Boston, MA, USA, 1992; pp. xii+626.

- [16] Suhov, Y.; Kelbert, M. Markov chains: A primer in random processes and their applications. In Probability and Statistics by Example. II; Cambridge University Press: Cambridge, UK, 2008; pp. x+487.

- [17] Liggett, T.M. Continuous Time Markov Processes: An Introduction; Graduate Studies in Mathematics; American Mathematical Society: Providence, RI, USA, 2010; Volume 113, pp. xii+271.

- [18] Levin, D.A.; Peres, Y. Markov Chains and Mixing Times, 2nd ed.; American Mathematical Society: Providence, RI, USA, 2017; pp. xvi+447.

- [19] Feller, W. An Introduction to Probability Theory and Its Applications. Vol. I, 3rd ed.; John Wiley & Sons, Inc.: New York, NY, USA, 1968; pp. xviii+509.

- [20] Feller, W. An Introduction to Probability Theory and Its Applications. Vol. II, 2nd ed.; John Wiley & Sons, Inc.: New York, NY, USA, 1971; pp. xxiv+669.

- [21] Dynkin, E.B. Theory of Markov Processes; Brown, D.E., Köváry, T., Eds.; Dover Publications, Inc.: Mineola, NY, USA, 2006; pp. xii+210.

- [22] Chung, K.L. Markov Chains with Stationary Transition Probabilities, 2nd ed.; Die Grundlehren der Mathematischen Wissenschaften; Springer, Inc.: New York, NY, USA, 1967; Volume 104, pp. xi+301.

- [23] Freedman, D. Markov Chains; Springer: New York, NY, USA, 1983; pp. xiv+382.

- [24] Stroock, D.W. An Introduction to Markov Processes; Graduate Texts in Mathematics; Springer: Berlin, Germany, 2005; Volume 230, pp. xiv+171.

- [25] Kallenberg, O. Foundations of Modern Probability, 3rd ed.; Probability Theory and Stochastic Modelling; Springer: Cham, Switzerland, 2021; Volume 99, pp. xii+946.

- [26] Iosifescu, M.; Tăutu, P. Stochastic Processes and Applications in Biology and Medicine. I: Theory; Biomathematics; Editura Academiei RSR: Bucharest, Romania; Springer: Berlin, Germany; New York, NY, USA, 1973; Volume 3, p. 331.

- [27] Iosifescu, M. Finite Markov Processes and Their Applications; Wiley Series in Probability and Mathematical Statistics; John Wiley & Sons, Ltd.: Chichester, UK; Editura Tehnică: Bucharest, Romania, 1980; p. 295.

- [28] Rolski, T.; Schmidli, H.; Schmidt, V.; Teugels, J. Stochastic Processes for Insurance and Finance; Wiley Series in Probability and Statistics; John Wiley & Sons Ltd.: Chichester, UK, 1999; pp. xviii+654.

- [29] Feinberg, E.A.; Mandava, M.; Shiryaev, A.N. On solutions of Kolmogorov’s equations for nonhomogeneous jump Markov processes. J. Math. Anal. Appl. 2014, 411, 261–270.

- [30] Feinberg, E.A.; Shiryaev, A.N. Kolmogorov’s equations for jump Markov processes and their applications to control problems. Theory Probab. Appl. 2022, 66, 582–600.

- [31] Feinberg, E.; Mandava, M.; Shiryaev, A.N. Kolmogorov’s equations for jump Markov processes with unbounded jump rates. Ann. Oper. Res. 2022, 317, 587–604.

- [32] Slavík, A. Product Integration, Its History and Applications; Nečas Center for Mathematical Modeling; Matfyzpress: Prague, Czech Republic, 2007; Volume 1, pp. iv+147.

- [33] Billingsley, P. Statistical Inference for Markov Processes; Statistical Research Monographs; University of Chicago Press: Chicago, IL, USA, 1961; Volume II, pp. vii+75.

- [34] Doob, J.L. Stochastic Processes; John Wiley & Sons, Inc.: New York, NY, USA, 1953; pp. viii+654.

- [35] Kingman, J.F.C. The imbedding problem for finite Markov chains. Z. Wahrscheinlichkeitstheorie Und Verw. Geb. 1962, 1, 14–24.

- [36] Johansen, S. Some results on the imbedding problem for finite Markov chains. J. Lond. Math. Soc. 1974, 8, 345–351.

- [37] Lencastre, P.; Raischel, F.; Rogers, T.; Lind, P.G. From empirical data to time-inhomogeneous continuous Markov processes. Phys. Rev. E 2016, 93, 032135.

- [38] Böttcher, B. Embedded Markov chain approximations in Skorokhod topologies. Probab. Math. Statist. 2019, 39, 259–277.

- [39] Billingsley, P. Statistical methods in Markov chains. Ann. Math. Statist. 1961, 32, 12–40.

- [40] Prakasa Rao, B.L.S. Maximum likelihood estimation for Markov processes. Ann. Inst. Statist. Math. 1972, 24, 333–345.

- [41] Prakasa Rao, B.L.S. Moderate deviation principle for maximum likelihood estimator for Markov processes. Statist. Probab. Lett. 2018, 132, 74–82.

- [42] Foutz, R.V.; Srivastava, R.C. Statistical inference for Markov processes when the model is incorrect. Adv. Appl. Probab. 1979, 11, 737–749.

- [43] Bladt, M.; Sørensen, M. Statistical inference for discretely observed Markov jump processes. J. R. Stat. Soc. Ser. B Stat. Methodol. 2005, 67, 395–410.

- [44] Mitrophanov, A.Y. Stability and exponential convergence of continuous-time Markov chains. J. Appl. Probab. 2003, 40, 970–979.

- [45] Mitrophanov, A.Y. The spectral gap and perturbation bounds for reversible continuous-time Markov chains. J. Appl. Probab. 2004, 41, 1219–1222.

- [46] Mitrofanov, A.Y. Stability estimates for continuous-time finite homogeneous Markov chains. Teor. Veroyatn. Primen. 2005, 50, 371–379.

- [47] Mitrophanov, A.Y. Ergodicity coefficient and perturbation bounds for continuous-time Markov chains. Math. Inequal. Appl. 2005, 8, 159–168.

- [48] Waters, H.R. An approach to the study of multiple state models. J. Inst. Actuar. 1984, 111, 363–374.

- [49] Haberman, S.; Pitacco, E. Actuarial Models for Disability Insurance; Chapman & Hall/CRC: Boca Raton, FL, USA, 1999; pp. xx+280.

- [50] Olivieri, A.; Pitacco, E. Introduction to Insurance Mathematics, 2nd ed.; European Actuarial Academy (EAA) Series; Springer: Cham, Switzerland, 2015; pp. xviii+508.

- [51] Mitrophanov, A.Y. The Arsenal of Perturbation Bounds for Finite Continuous-Time Markov Chains: A Perspective. Mathematics 2024, 12, 1608.

- [52] Esquível, M.L.; Krasii, N.P.; Guerreiro, G.R. Estimation–Calibration of Continuous-Time Non-Homogeneous Markov Chains with Finite State Space. Mathematics 2024, 12, 668.

- [53] Zhao, M.d.; Shi, Z.y.; Yang, W.g.; Wang, B. A class of strong deviation theorems for the sequence of real valued random variables with respect to continuous-state non-homogeneous Markov chains. Comm. Statist. Theory Methods 2021, 50, 5475–5487.

- [54] Esquível, M.L.; Guerreiro, G.R.; Oliveira, M.C.; Corte Real, P. Calibration of Transition Intensities for a Multistate Model: Application to Long-Term Care. Risks 2021, 9, 37.

- [55] Esquível, M.L.; Krasii, N.P.; Guerreiro, G.R. Open Markov Type Population Models: From Discrete to Continuous Time. Mathematics 2021, 9, 1496.

- [56] Dickson, D.C.M.; Hardy, M.R.; Waters, H.R. Actuarial Mathematics for Life Contingent Risks, 3rd ed.; International Series on Actuarial Science; Cambridge University Press: Cambridge, UK, 2020; pp. xxiv+759.

- [57] Wolthuis, H. Life Insurance Mathematics (The Markovian Model); CAIRE: Brussels, Belgium, 1994; pp. xi+288.

- [58] Forfar, D.O.; McCutcheon, J.J.; Wilkie, A.D. On graduation by mathematical formula. J. Inst. Actuar. 1988, 115, 1–149.

- [59] Haberman, S.; Renshaw, A.E. Generalized Linear Models and Actuarial Science. J. R. Stat. Society. Ser. D (The Stat.) 1996, 45, 407–436.

- [60] Renshaw, A.; Haberman, S. On the graduations associated with a multiple state model for permanent health insurance. Insur. Math. Econ. 1995, 17, 1–17.

- [61] Fong, J.H.; Shao, A.W.; Sherris, M. Multistate actuarial models of functional disability. N. Am. Actuar. J. 2015, 19, 41–59.

- [62] de Mol van Otterloo, S.; Alonso-García, J. A multi-state model for sick leave and its impact on partial early retirement incentives: The case of the Netherlands. Scand. Actuar. J. 2023, 2023, 244–268.

- [63] Naka, P.; Boado-Penas, M.d.C.; Lanot, G. A multiple state model for the working-age disabled population using cross-sectional data. Scand. Actuar. J. 2020, 2020, 700–717.

- [64] Brémaud, P. Markov Chains—Gibbs Fields, Monte Carlo Simulation and Queues, 2nd ed.; Texts in Applied Mathematics; Springer: Cham, Switzerland, 2020; Volume 31, pp. xvi+557.

- [65] Aalen, O.O.; Johansen, S. An empirical transition matrix for non-homogeneous Markov chains based on censored observations. Scand. J. Statist. 1978, 5, 141–150.

- [66] Friedman, C.N. Product integration and solution of ordinary differential equations. J. Math. Anal. Appl. 1984, 102, 509–518.

- [67] Johansen, S. The product limit estimator as maximum likelihood estimator. Scand. J. Stat. 1978, 5, 195–199.

- [68] Keiding, N.; Andersen, P.K. Nonparametric Estimation of Transition Intensities and Transition Probabilities: A Case Study of a Two-State Markov Process. J. R. Stat. Society. Ser. C (Appl. Stat.) 1989, 38, 319–329.

- [69] Fleming, T.R.; Harrington, D.P. Estimation for discrete time nonhomogeneous Markov chains. Stoch. Process. Appl. 1978, 7, 131–139.

- [70] Cramer, R.D. Parameter Estimation for Discretely Observed Continuous-Time Markov Chains. Ph.D. Thesis, Rice University, Houston, TX, USA, 2001.

- [71] Duffie, D.; Glynn, P. Estimation of continuous-time Markov processes sampled at random time intervals. Econometrica 2004, 72, 1773–1808.

- [72] Crommelin, D.; Vanden-Eijnden, E. Fitting timeseries by continuous-time Markov chains: A quadratic programming approach. J. Comput. Phys. 2006, 217, 782–805.

- [73] Riva-Palacio, A.; Mena, R.H.; Walker, S.G. On the estimation of partially observed continuous-time Markov chains. Comput. Statist. 2023, 38, 1357–1389.

- [74] Vassiliou, P.C.G. Non-Homogeneous Markov Chains and Systems—Theory and Applications; CRC Press: Boca Raton, FL, USA, 2023; pp. xxi+450.

- [75] Vassiliou, P.C.G. Laws of large numbers for non-homogeneous Markov systems with arbitrary transition probability matrices. J. Stat. Theory Pract. 2022, 16, 18.

| H or nH (a) | Articles | F/iF Dt/Ct (b) | TrI/Pr (c) | Est/Cal (d) | App (e) | Cmp/Sim (f) | Data (g) |
|---|---|---|---|---|---|---|---|
| nH | [65] | F & Ct | TrI | Cst & As | – | – | FtjCo |
| nH | [67] | F & Ct | TrI | MLE | – | – | FtjCo |
| nH | [69] | F & Dt | TrI | MLE & Cst & As | – | Cmp | TrjsDo |
| nH | [68] | F & Ct | TrI & Pr | K & K | H | Cmp | FtjCo |
| nH | [70] | iF | TrI | PSam & Cst & As | – | Sim | TrjsDo |
| nH | [55] | F & Ct | TrI | Cal | LTC | – | – |
| nH | [75] | F & Ct | TrI | MLE | H | – | TrjsDo |
| nH | [52] | F & Ct | TrI | nP & Cal | – | Sim | TrjsDo |
| H | [39] | F & iF & Dt & Ct | TrI & Pr | MLE & Cst & As | – | – | – |
| H | [33] | F & iF & Dt & Ct | TrI & Pr | MLE & Cst & As | – | – | – |
| H | [40] | iF & Dt | Pr | MLE & Cst & As | – | – | TrjsDo |
| H | [42] | iF & Dt | Pr | MLE & Cst & As | – | – | TrjsDo |
| H | [71] | F & iF & Ct & Dt | TrI & Df | ME & Cst & As | – | – | SmpDo |
| H | [73] | F & Ct | TrI | MLE & Bay | – | Sim | TrjsDo |
| H | [41] | iF & Dt | Pr | MLE & MD | – | – | TrjsDo |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Esquível, M.L.; Krasii, N.P. Statistics for Continuous Time Markov Chains, a Short Review. Axioms 2025, 14, 283. https://doi.org/10.3390/axioms14040283
