1. Introduction

With the recent advancements in autonomous mobility technology for mobile robots, multiple service robots have been proposed to give assistance in a variety of industries, including manufacturing, warehousing, health care, agriculture, and restaurants [1]. The rapid development of various technologies facilitates people’s lives. However, the urgent requirements caused by the COVID-19 pandemic brought many changes to people’s lives. In response, contact tracing systems were proposed to curb the spread of the epidemic [2]. In fact, people’s experience and acceptance of artificial intelligence (AI) are constantly improving with the emergence and application of AI products such as robotic entities [3]. In addition, staff shortages, the ongoing demand for productivity growth, and changes in the service sector have pushed the restaurant business to adopt robotics. In an effort to increase labor productivity, service robots, such as the automated guided vehicle (AGV) operation system, have been implemented in Japanese restaurants [4,5]. AGV systems have evolved into autonomous mobile robots (AMRs) to provide more operational flexibility and improved productivity as a result of the development of AI technologies, powerful onboard processors, ubiquitous sensors, and simultaneous localization and mapping (SLAM) technology. Traditional low-cost service robots move by following a fixed line. In recent years, radar-based autonomous navigation robots have been widely studied, but their prices can be as high as $17,000 [6]. Although the use of artificial intelligence and robots in restaurants is still in its infancy, restaurant managers are seeking assistance in using modern technologies to improve service [7]. COVID-19 has altered the restaurant industry because the contactless paradigm may provide customers and staff with comfort when dining and working [8].

Many restaurant managers are considering implementing service AMRs in order to achieve frictionless service and mitigate the staff shortage. Cheong et al. [6] developed a prototype of a Mecanum wheel-based mobile waiter robot for testing in a dining food outlet. Yu et al. [9] designed a restaurant service robot for ordering, fetching, and sending food. In response to the challenges presented by COVID-19, more and more restaurants utilizing robots have emerged in China and the U.S. [10]. Yang et al. [11] proposed a robotic delivery authentication system, which includes the client, the server, and the robot. Multiple robots for varied use cases, including hotpot, Chinese food, and coffee, have been developed to meet the needs of a range of restaurants [12]. Nonetheless, several institutions are attempting to build a universal, unified, and practical robot system that can be deployed to a variety of restaurant application situations. At the 2022 Winter Olympics in Beijing, one of the most successful applications of robots handling plates was implemented. This promising example is anticipated to raise demand for restaurant robots even more. However, the cost of robotics is essential for restaurant management to consider before adopting the technology.

As an alternative to the aforementioned development strategies, restaurant service robots could be built on a generic framework to save development expenses. Chitta et al. [13] provided a generic and straightforward control framework to implement and manage robot controllers to improve both real-time performance and sharing of controllers. Ladosz et al. [14] presented a robot operating system (ROS) to develop and test autonomous control functions. This ROS can support an extensive range of robotic systems and can be regarded as a low-cost, simple, highly flexible, and reconfigurable system. Chivarov et al. [15] aimed to develop a cost-oriented autonomous humanoid robot to assist humans in daily life tasks and studied the interaction and cooperation between robots and Internet of Things (IoT) devices. Tkáčik et al. [16] presented a prototype for a modular mobile robotic platform for universal use in both research and teaching activities. Noh et al. [17] introduced an autonomous navigation system for indoor mobile robots based on open source and implemented three main modules: mapping, localization, and planning. Based on the robot design, customer features, and service task characteristics, it is feasible to determine the optimal adaptation to different service tasks [18]. Silva et al. [19] proposed an embedded architecture to utilize cognitive agents in cooperation with the ROS. Wang et al. [20] integrated augmented reality in human–robot collaboration and suggested an advanced compensation mechanism for accurate robot control. Oliveira et al. [21] proposed a multi-modal ROS framework for multi-sensor fusion. They established a transformation model between multiple sensors and achieved interactive positioning of sensors and labeling of data to facilitate the calibration procedure. Fennel et al. [22] discussed a method of seamless and modular real-time control under the ROS system.

In addition to the restaurant business, more and more industries are contemplating the implementation of AMRs. Fragapane et al. [23] summarized five application scenarios of AMRs in hospital logistics, and they found that AMRs can increase the value-added time of hospital personnel for patient care. In order to suit the trend of Industry 4.0, manufacturing systems have effectively adopted AMRs for material feeding [24]. However, it is difficult to integrate robots into the smart environment [25]. Furthermore, service scenarios have an effect on the intention to adopt service robots [26]. da Rosa Tavares and Victória Barbosa [27] observed a trend of using robots to improve environmental and actionable intelligence. The development of a general mobile robot system based on open-source robot platforms is still necessary for handling a variety of difficult service scenarios. With such a system, developers can migrate the environment perception of a prior scenario in order to implement the related functionality.

In this study, we employ the iFLYTEK-provided UCAR intelligent car platform to create a ROS-based robot control architecture. In addition, we present the open-source platforms used to implement the robot’s functionalities, including path planning, image recognition, and voice interaction. In particular, we incorporate the feedback data of the lidar and acceleration sensors to adjust the settings of the Timed Elastic Band (TEB) algorithm to ensure positioning accuracy. Notably, we examine the viability of the proposed mobile robotic system by analyzing the outcomes of demonstration scenario tests conducted at the 16th Chinese University Student Intelligent Car race. In addition, we assemble a mobile robot as an alternative to iFLYTEK’s UCAR, and our robot offers a significant cost advantage.

The main contributions of our study are as follows:

We present a novel framework for the hardware and software development of mobile robotic systems. To achieve the required functionalities, we incorporate a Mecanum-wheeled chassis, mechanical damping suspension, sensors, a camera, and a microphone. In addition, we implement autonomous navigation, voice interaction, and image recognition using open-source platforms such as YOLOv4 and SIFT.

We assembled a mobile robot in accordance with the functional specifications outlined by the Chinese University Student Intelligent Car competition. The components were acquired from the website Taobao. We kept the cost of these components under close control and compiled a parts and cost list for other developers’ reference.

We test the viability of the introduced mobile robotic system using the demonstration test scenario. We also present the test results of the robot assembled in-house and show that it is capable of performing the necessary activities at a lower cost.

This paper’s intended scientific contribution focuses mostly on how to resolve the waste and expense issues generated by new technology in the smart restaurant industry. We replace the purchased full-featured robot with a self-assembled robot that retains the core functions in order to construct a digital twin system and alleviate the cost issue related to resource waste.

The rest of the paper is organized as follows: Section 2 provides the hardware architecture of the developed robot and introduces the implementation details of our self-assembled mobile robot. Section 3 provides a systematic framework for the developed robot in the software design. After that, Section 4 presents the experimental results for our framework. Finally, conclusions and future research directions are given in Section 5.

2. Robots Design

2.1. Hardware and Software Design of UCAR

We designed an autonomous mobile robot for accomplishing delivery jobs in smart restaurants. The mobile robot recognizes its surroundings in real time while traveling and autonomously avoids obstacles. According to its open-source schematic diagram, its hardware structure includes a master computer, used to deploy the model and analyze the comprehensive data, and a slave computer, used to operate the external circuit directly. The two exchange information over serial communication. The master computer is an x86 control board running the Ubuntu development environment, which is suited for development using C++, C, Python, and other programming languages. The slave computer is an STM32F407 control board, which controls the base of the car through the I/O circuit in interrupt response mode, collects pertinent information from sensors, and is responsible for motion control and environment perception, as shown in Table 1.

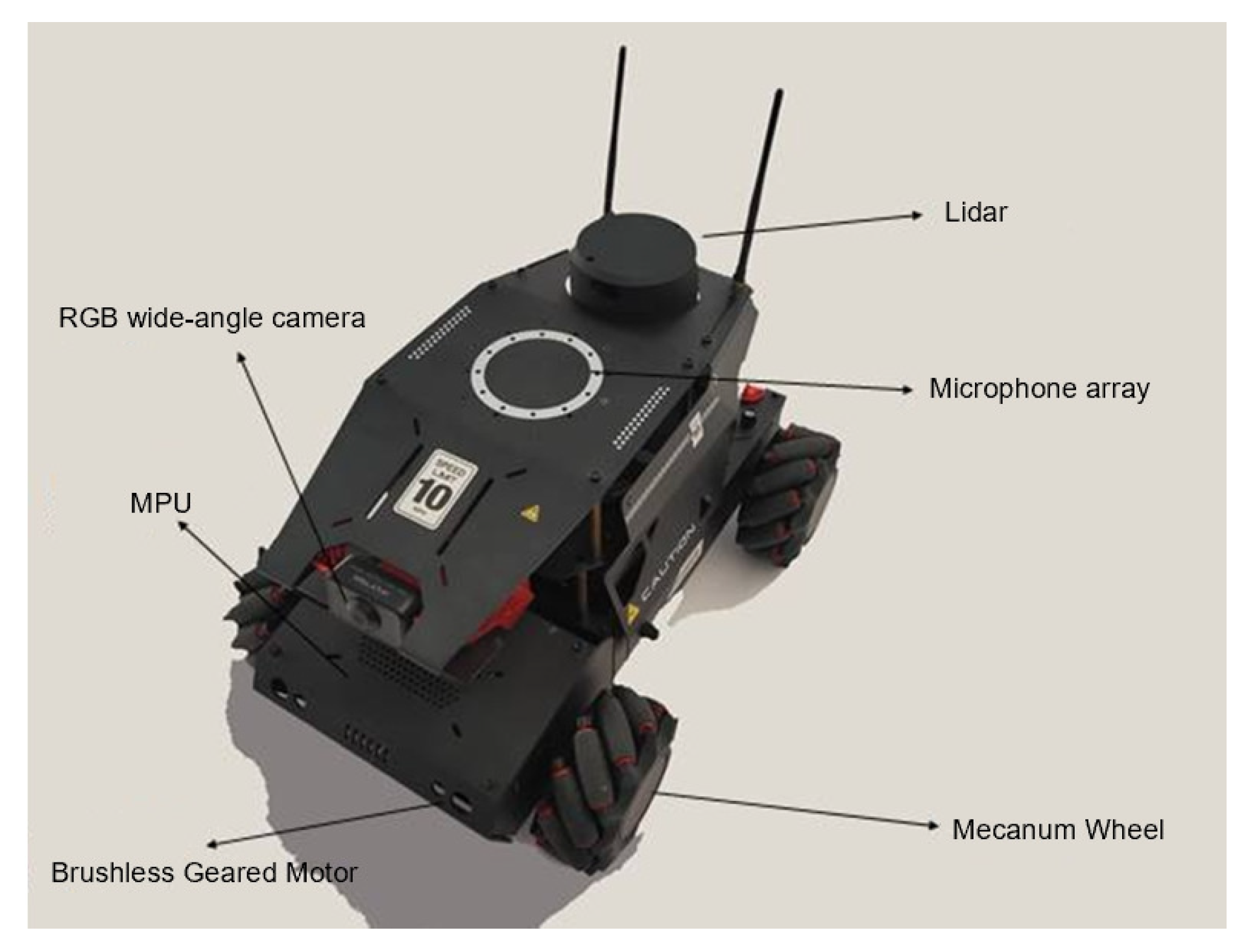

The structure of the UCAR platform upon which the robot is based is shown in Figure 1. The chassis of the mobile robot uses Mecanum wheels. A Mecanum wheel achieves omnidirectional motion by means of rollers mounted at an angle around its rim, enabling many forms of motion, including translation and rotation. In our project, the pose estimation approach is based on the motion model of the Mecanum wheel. The current pose is obtained by continuously iterating from the initial pose conditions and is employed for attitude correction during autonomous navigation.
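As an illustration of the iterative pose estimation described above, the following is a minimal sketch of the textbook forward kinematics of a four-wheel Mecanum chassis followed by one dead-reckoning step. It is not taken from the UCAR firmware: the function names, the wheel ordering (front-left, front-right, rear-left, rear-right), the sign convention (x forward, y to the left), and the geometry values are all assumptions made for the example.

```python
import math


def mecanum_body_velocity(w_fl, w_fr, w_rl, w_rr, r, lx, ly):
    """Body-frame velocity (vx, vy, wz) from wheel angular speeds in rad/s.

    r is the wheel radius; lx and ly are half the wheelbase and half the
    track width (all in metres). Standard X-configuration Mecanum model.
    """
    vx = r / 4.0 * (w_fl + w_fr + w_rl + w_rr)
    vy = r / 4.0 * (-w_fl + w_fr + w_rl - w_rr)
    wz = r / (4.0 * (lx + ly)) * (-w_fl + w_fr - w_rl + w_rr)
    return vx, vy, wz


def integrate_pose(pose, body_vel, dt):
    """Advance a planar pose (x, y, theta) by one time step of length dt."""
    x, y, theta = pose
    vx, vy, wz = body_vel
    # Rotate the body-frame velocity into the world frame, then integrate.
    x += (vx * math.cos(theta) - vy * math.sin(theta)) * dt
    y += (vx * math.sin(theta) + vy * math.cos(theta)) * dt
    theta += wz * dt
    return x, y, theta


if __name__ == "__main__":
    pose = (0.0, 0.0, 0.0)  # initial pose condition
    # Hypothetical geometry: 3 cm wheel radius, 10 cm half-length and half-width.
    vel = mecanum_body_velocity(10.0, 10.0, 10.0, 10.0, r=0.03, lx=0.1, ly=0.1)
    for _ in range(100):  # 1 s of motion at 100 Hz
        pose = integrate_pose(pose, vel, dt=0.01)
    print(pose)
```

Because each step builds on the previous estimate, any error in the wheel speeds accumulates over time, which is why such an estimate needs periodic correction from an external reference.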

We employed attitude sensors to measure the linear acceleration along the three coordinate axes and the angular acceleration about the three axes in the Cartesian coordinate system. The dynamic forces and torques sensed in the Cartesian coordinate system are converted into corresponding electrical quantities according to Newton’s laws through the force-to-electrical conversion module, from which the acceleration values are obtained. Treating UCAR as a rigid-body kinematics model, the displacement and velocity values in six directions can be obtained by integrating the six accelerations of its inertial measurement unit (IMU), and the current pose information is accumulated step by step from the starting point.
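The integration step can be sketched as follows. This is a simplified illustration rather than the UCAR implementation: it treats each of the six channels independently, uses a fixed sample interval, and ignores gravity compensation and frame rotation. The function name and the sample layout are assumptions made for the example.

```python
def integrate_accelerations(samples, dt):
    """Integrate acceleration samples twice with a fixed time step.

    samples is a sequence of tuples, one value per channel (for example
    three linear and three angular accelerations). Returns the final
    per-channel velocity and displacement, both starting from zero.
    """
    n = len(samples[0])
    velocity = [0.0] * n
    displacement = [0.0] * n
    for acc in samples:
        for i in range(n):
            velocity[i] += acc[i] * dt           # first integration
            displacement[i] += velocity[i] * dt  # second integration
    return velocity, displacement


if __name__ == "__main__":
    dt = 0.01
    # 1 s of a constant 1 m/s^2 acceleration on the first channel only.
    true_samples = [(1.0, 0.0, 0.0, 0.0, 0.0, 0.0)] * 100
    # The same motion measured with a small constant bias on that channel.
    biased_samples = [(1.05, 0.0, 0.0, 0.0, 0.0, 0.0)] * 100
    _, d_true = integrate_accelerations(true_samples, dt)
    _, d_biased = integrate_accelerations(biased_samples, dt)
    print(d_true[0], d_biased[0] - d_true[0])
```

The second printed value is the displacement error caused by the bias alone. Since the error passes through two integrations, it grows roughly with the square of the elapsed time, which motivates correcting the IMU estimate against an external measurement.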

UCAR is equipped with a triangulation-ranging lidar, which scans the environment at a frequency of 5–12 Hz at a height of 30 cm above the ground. The lidar generates point cloud information from the returned range values. It then uses the Simultaneous Localization and Mapping (SLAM) technique to build a real-time point cloud map of the surroundings, which is employed for dynamic local path planning. The specific parameters of the lidar are displayed in Table 2.
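To make the step from range returns to point cloud data concrete, the sketch below converts one planar scan into Cartesian points in the sensor frame. The parameter names mirror the fields of the ROS `sensor_msgs/LaserScan` message, but the function itself is an illustrative assumption and not part of the UCAR software; the range limits in the usage example are placeholders rather than the values in Table 2.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, range_min, range_max):
    """Convert a planar lidar scan into (x, y) points in the sensor frame.

    Returns outside [range_min, range_max], including inf and NaN,
    are treated as invalid and skipped.
    """
    points = []
    for i, r in enumerate(ranges):
        if not (range_min <= r <= range_max):
            continue  # also rejects NaN, since comparisons with NaN are False
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


if __name__ == "__main__":
    # Three beams at 0, 90, and 180 degrees; the middle beam has no return.
    scan = [1.0, float("inf"), 2.0]
    print(scan_to_points(scan, 0.0, math.pi / 2, 0.1, 8.0))
```

A SLAM front end would then register successive point sets against each other to build the map; that step is outside the scope of this sketch.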

Limited by the mechanical structure of the car body, UCAR is equipped with a small wide-angle camera to deal with computer vision tasks in complex situations. The camera captures images over a wide field of view, to which neural-network-based machine vision models are applied. It is of significant utility when robots need to assess real-world scenes.

Table 3 provides the specifications of the wide-angle camera.

With the advancement of human–computer interaction capabilities, more and more application settings are supporting voice-based human–computer interactions. Voice input has led to faster task performance and fewer errors than keyboard typing. UCAR provides a developer interface based on the iFLYTEK AI platform. In this study, we trained a voice recognition model by registering with the online voice module training tool; the resulting model can be used as the voice interface function package of an intelligent vehicle.

2.2. Robot Assembly

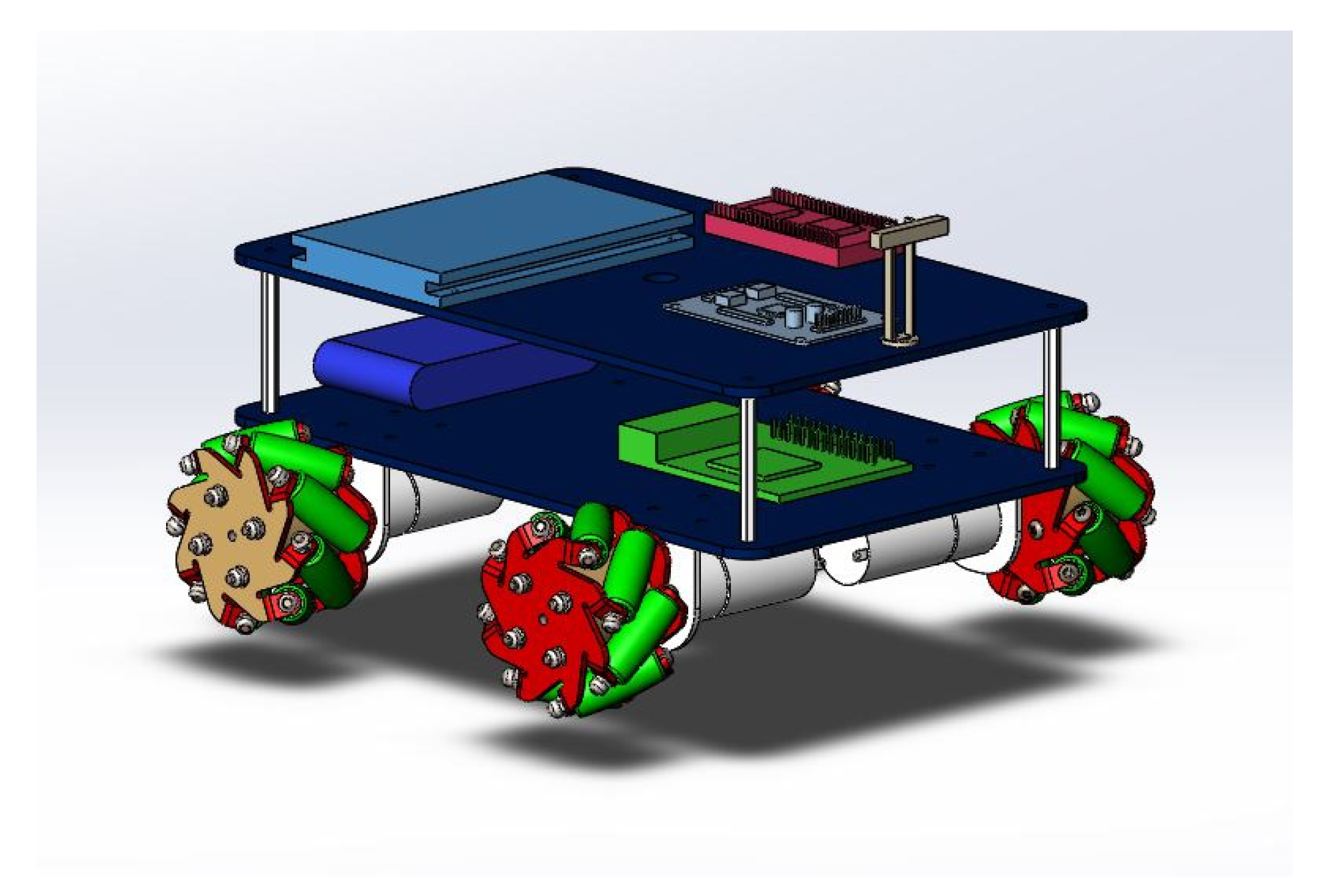

After establishing the basic functionalities of an autonomous navigation robot for restaurant delivery based on the UCAR platform provided by iFLYTEK, we wanted to construct an autonomous mobile robot with lower cost and guaranteed performance. The rationale for building a low-cost robot separately is that, as indicated before, developing a ROS-based system increases the cost of maintaining the system function packages and of learning to operate them. Mastering another robot operating system is unnecessary and out of step with the realities of company production. When altering algorithms, a high degree of encapsulation and modularity leads to challenges with compatibility and version commonality. In addition, underlying hardware interfaces may increase the equipment price. Many ROS functions are redundant for single-field researchers. Our team’s research focuses on cluster communication and path planning. Although we quickly learned the ROS development method in the UCAR-based development process, it is not particularly beneficial for non-ROS devices meant for real-world application. The robot we assembled in-house comprises the following parts, and the final built robot is illustrated in Figure 2.

The motion part realizes the omnidirectional movement of the robot on flat terrain for various mobile tasks. The Mecanum wheel is used in this mobile robot, although it has a large power loss in forward motion. A low-cost wheel with a 60 cm diameter, a plastic hub, and a rubber roller surface is used. The wheel coupling is a 3D-printed hexagonal column with an internal thread. In the current market, set screws are typically used to directly fix the D-shaped motor shaft in the boss hole of the wheel. In contrast, the matching method adopted in this study is more modular and separates the bushing, which bears a large amount of torque, from the wheel. This change has little value for metal wheels and materials with greater tensile strength, but it significantly improves the service life of plastic wheels, which are less rigid. It improves the fatigue strength of plastic wheels subjected to alternating loads, and the damage is concentrated on the low-cost printed hexagonal plastic column instead. The motion chassis is supported by four hexagonal copper columns. The battery and drive circuit are arranged on the lower chassis, and the motor brackets are installed symmetrically on both sides. Each motor bracket is fastened to a D-shaft DC motor by screws, and a through-hole thread connects the DC motor to the hexagonal coupling. The coupling in turn connects to a Mecanum wheel with a hexagonal connection hole. There are four Mecanum wheels for omnidirectional movement.

The robot is powered through a multi-channel voltage stabilizer and voltage division module, which accepts a 12 V, 2 A supply and divides it into 15 voltage channels to suit different needs, such as 3.3 V, 5 V, and 12 V (adjustable) for the sensor, main control board, and motor drive voltage, respectively. The battery pack provides the required output voltages and currents by connecting multiple 3 V lithium batteries in series and in parallel; this type of battery is used in most rechargeable consumer electronics designs in China. The milliampere-level output of the main control board cannot directly drive a high-current component such as a DC motor. Therefore, a TB6612N proportional current power amplifier is employed to generate sufficient driving current. By extending this amplifier stage, steady speed control of four 12 V, 0.6 A DC reduction motors is obtained to fulfill the needs of autonomous robot movement.

Multi-thread control, consisting of a central decision-making main thread and many unit sub-threads, is adopted for the robot. Each thread is responsible for an independent scanning task, which means that each output and input port can work separately. Each sub-thread reports a system state to the main thread by updating global variables. The main thread carries out sensor fusion and control by scanning this state table as it changes in real time. In motion control, navigation decisions are based on the real-time IMU data and camera image data provided by the sub-threads, as discussed in the next part.
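The threading pattern described above can be sketched in a few lines. This is an illustrative outline only, not the robot’s control code: the state keys, the stand-in sensor read functions, and the decision rule are hypothetical, and a lock is added around the shared table as a conservative choice that the text does not mention.

```python
import threading
import time

state_lock = threading.Lock()
# Shared state table written by the sub-threads and scanned by the main thread.
state_table = {"imu_yaw": None, "landmark_seen": None}


def sensor_worker(key, read_fn, period, stop_event):
    """Sub-thread: poll one input and publish its latest value."""
    while not stop_event.is_set():
        value = read_fn()
        with state_lock:
            state_table[key] = value
        stop_event.wait(period)  # sleep, but wake early when asked to stop


def main_loop(decide_fn, cycles, period):
    """Main thread: take a snapshot of the state table and decide."""
    decisions = []
    for _ in range(cycles):
        with state_lock:
            snapshot = dict(state_table)
        decisions.append(decide_fn(snapshot))
        time.sleep(period)
    return decisions


def run_demo():
    stop = threading.Event()
    workers = [
        # Stand-in read functions; real ones would query the IMU and camera.
        threading.Thread(target=sensor_worker,
                         args=("imu_yaw", lambda: 0.1, 0.005, stop)),
        threading.Thread(target=sensor_worker,
                         args=("landmark_seen", lambda: True, 0.005, stop)),
    ]
    for w in workers:
        w.start()
    time.sleep(0.05)  # let every worker publish at least once

    def decide(snapshot):
        return "correct_drift" if snapshot["landmark_seen"] else "dead_reckon"

    decisions = main_loop(decide, cycles=3, period=0.005)
    stop.set()
    for w in workers:
        w.join()
    return decisions


if __name__ == "__main__":
    print(run_demo())
```

Taking a snapshot under the lock keeps each decision consistent even if a sub-thread updates the table while the main thread is still evaluating it.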

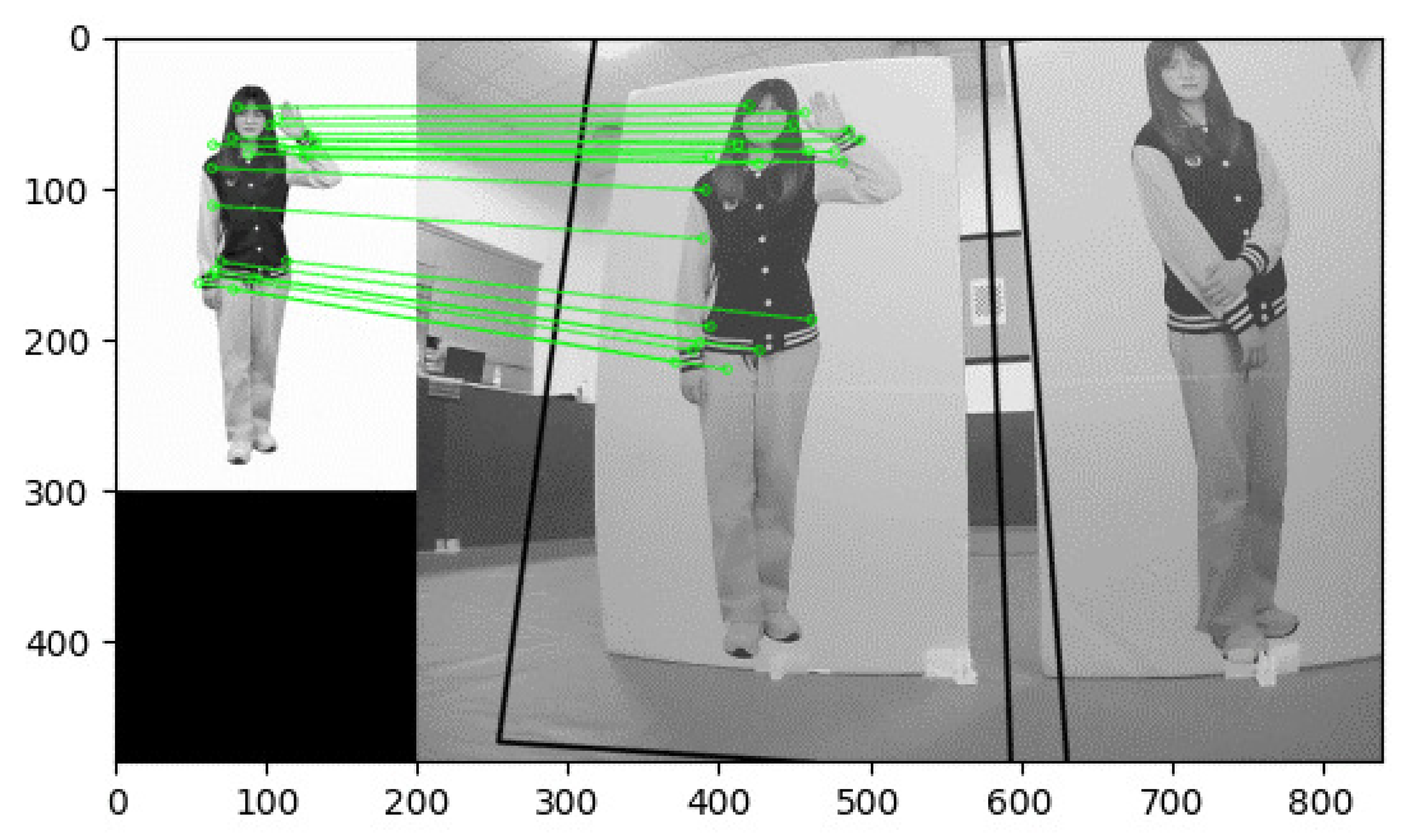

Most current navigation technologies are based on radar or cameras. Because the sampling frequency of time-varying signals is limited, inertial components such as gyroscopes and accelerometers always accumulate errors between the real state change and the measured state change. The longer the operation period, the less precise the measured displacement and angle changes become. Scanning the surrounding environment in real time allows the actual position to be known, so that sensor errors can be corrected frequently. The advantage of lidar calibration is that an accurate position value can be acquired thanks to the stability of the laser, so the correction is accurate. The downside is that the laser signal frequency is so high that it is particularly sensitive to tiny changes. When the radar equipment vibrates slightly and the scanning plane is briefly not parallel to the ground, substantial drift occurs. In the face of large drift, algorithms such as DWA or TEB often become completely unstable and crash. In order to further lower the development cost, we employed the camera for calibration and used a neural network approach to detect feature points in the image; this involved data set preparation, network design, model training, and model invocation. The position of the landmark pattern laid at the site is obtained from the camera image through convolution and deconvolution of the input image. The cumulative error of the IMU is then estimated and corrected. Target detection is still carried out by YOLOv4, introduced in the previous phase. The sole difference is that the detected object and the training data set are modified.
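The correction step can be illustrated with a small geometric sketch. It assumes that the landmark’s world position is known in advance, that the detector output has already been converted into an offset from the robot to the landmark in the robot frame, and that the heading estimate is trusted. None of these details are specified in the text, and the function name is hypothetical.

```python
import math


def correct_pose_with_landmark(est_pose, landmark_world, landmark_in_robot):
    """Replace a drifting position estimate using one landmark sighting.

    est_pose: dead-reckoned (x, y, theta).
    landmark_world: known (x, y) of the landmark in the world frame.
    landmark_in_robot: observed (dx, dy) offset in the robot frame.
    Returns the corrected pose and the estimated accumulated drift.
    """
    x, y, theta = est_pose
    lx, ly = landmark_world
    dx, dy = landmark_in_robot
    # Robot position implied by the observation: landmark minus rotated offset.
    cx = lx - (dx * math.cos(theta) - dy * math.sin(theta))
    cy = ly - (dx * math.sin(theta) + dy * math.cos(theta))
    drift = (x - cx, y - cy)
    return (cx, cy, theta), drift


if __name__ == "__main__":
    # Dead reckoning reports x = 1.1 m, but the landmark at x = 2.0 m is
    # observed 1.0 m straight ahead, so the robot is really at x = 1.0 m.
    pose, drift = correct_pose_with_landmark((1.1, 0.0, 0.0), (2.0, 0.0), (1.0, 0.0))
    print(pose, drift)
```

In practice the corrected position would typically be blended with the inertial estimate rather than substituted outright, since a single camera observation also carries noise.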

Figure 3 shows the architecture schematic of our built robot. Building the equipment according to this architecture diagram in the experimental phase kept the cost under control and shortened the production cycle.

5. Conclusions and Future Work

In this study, we participated in an intelligent car competition to test the performance of the open-source robot platform built by iFLYTEK. Furthermore, we deployed the sophisticated robot function package through the upper computer in the ROS general framework. We effectively implemented path planning, autonomous navigation, voice interaction, two-dimensional code identification, feature detection, and other functions on this new device. We showed that the UCAR mobile robot platform can promote robot research and project development. Through experiments, we obtained the optimal parameters for deploying the TEB algorithm on the device, determined the actual influence of various parameters on the robot’s operation through practical debugging, and achieved a run time of 20.58 s in the 16 square meter test site. In addition, we deployed two machine vision approaches, deep learning and image processing, to test the feasibility and practical benefits of image detection on UCAR robots, with the aim of finding a low-cost open robot platform based on ROS. Moreover, we tested the AI voice recognition tool built by iFLYTEK based on UCAR in terms of two functionalities: speech recognition and audio conversion. Furthermore, we designed and assembled a mobile robot using parts acquired from the Taobao website; this robot was cheaper than the UCAR offered by iFLYTEK.

In the future, we will develop our mobile robot design with reference to environmental sensing and aesthetics. In addition, more realistic tests will be developed to evaluate the performance of our assembled robots. Moreover, developing the mobile robot control software will be an important component of our future effort. We will also examine employing context histories for recording the data generated during the operation of robots in smart settings. Context prediction can be used to forecast better routes and future instances where the robot can act. For example, it could predict service priorities in a smart restaurant and anticipate crises in the restaurant.