Expanding the Frontiers of Industrial Robots beyond Factories: Design and in the Wild Validation

Abstract

1. Introduction

2. Related Work

3. Objectives and Contributions

4. Design and Implementation

4.1. Hardware

4.2. Software

4.2.1. Eye Camera Algorithms

4.2.2. Hand Cameras Algorithms

4.2.3. Sensors Algorithms

4.2.4. Control Algorithm

4.2.5. Human–Robot Graphical User Interface

5. Validation in the Wild

- Q1: What are the emotional reactions and perceptions of visitors towards the proposed interactive scenario?

- Q2: What is the attitude of visitors towards robots after direct interactions with an industrial robot with affective and cognitive skills?

- Q3: What are the potential expectations of visitors towards robots in their working and everyday environment?

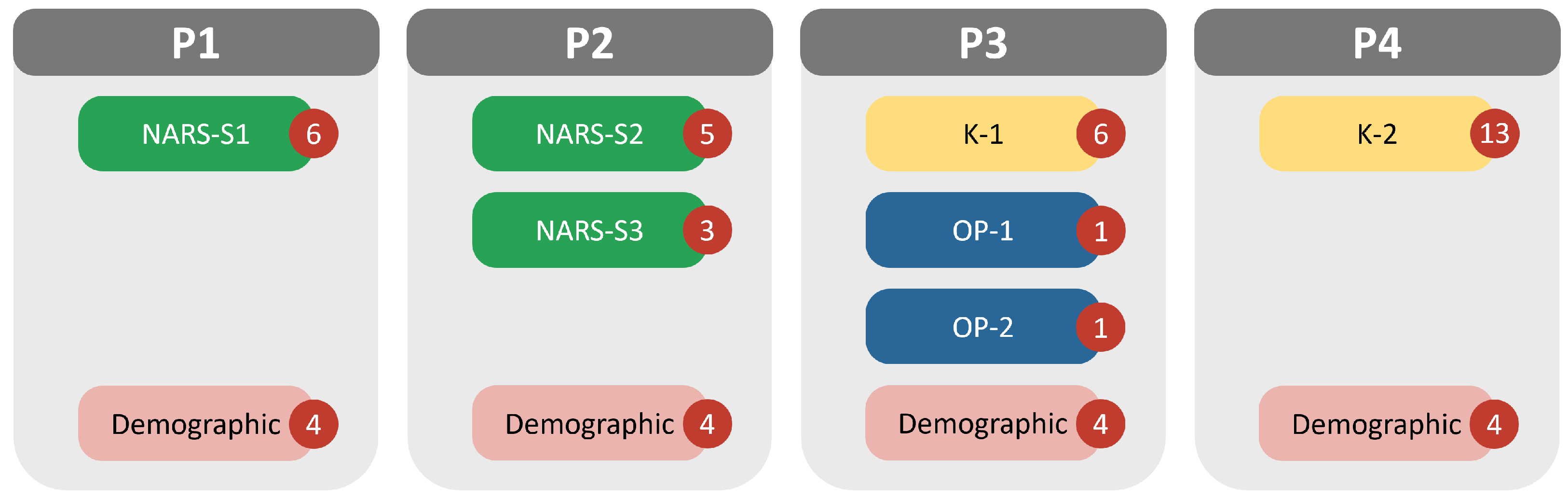

5.1. Subjective Validation of the Proposed System

- OP-1: If you had to work together with a robot, what would be the main characteristics you think the robot should have?

- OP-2: If you had to live with a robot, what would be the main characteristics you think the robot should have?

5.2. Participants

6. Results

6.1. User Perceptions and Emotional Reactions

6.2. Negative Attitudes Towards Robots

6.3. User Needs and Desires

6.4. Hypothesis

- H1. The cultural background (country of origin) of the participants has an influence on their perception of the robot.

- H2. The gender of the participants has an influence on their perception of the robot.

- H3. The participants' knowledge about robots has an influence on their perception of the robot.

7. Discussion

7.1. Regarding Q1 and Q2

7.2. Regarding Q3

7.3. Limitations

8. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BB | Bounding Box |
| CPU | Central Processing Unit |
| DoF | Degree of Freedom |
| EC | Eye Camera |
| GPU | Graphics Processing Unit |
| GUI | Graphical User Interface |
| HC | Hand Camera |
| HCI | Human–Computer Interaction |
| HRI | Human–Robot Interaction |
| IREX | International Robotics EXhibition |
| OP | OPen question |
| P1–4 | Questionnaire 1–4 |
| RAT | Robot Assisted Therapy |
| RCNN | Region-based Convolutional Neural Network |
| RS | RealSense |
| US | Ultrasonic Sensor |
| WLAN | Wireless Local Area Network |

References

- Darvish, K.; Wanderlingh, F.; Bruno, B.; Simetti, E.; Mastrogiovanni, F.; Casalino, G. Flexible human–robot cooperation models for assisted shop-floor tasks. Mechatronics 2018, 51, 97–114.

- Müller-Abdelrazeq, S.L.; Schönefeld, K.; Haberstroh, M.; Hees, F. Interacting with collaborative robots—A study on attitudes and acceptance in industrial contexts. In Social Robots: Technological, Societal and Ethical Aspects of Human-Robot Interaction; Springer: Berlin/Heidelberg, Germany, 2019; pp. 101–117.

- Naneva, S.; Gou, M.S.; Webb, T.L.; Prescott, T.J. A Systematic Review of Attitudes, Anxiety, Acceptance, and Trust Towards Social Robots. Int. J. Soc. Robot. 2020, 12, 1179–1201.

- Wendt, T.M.; Himmelsbach, U.B.; Lai, M.; Waßmer, M. Time-of-flight cameras enabling collaborative robots for improved safety in medical applications. Int. J. Interdiscip. Telecommun. Netw. IJITN 2017, 9, 10–17.

- Oron-Gilad, T.; Hancock, P.A. From ergonomics to hedonomics: Trends in human factors and technology—The role of hedonomics revisited. In Emotions and Affect in Human Factors and Human-Computer Interaction; Elsevier: Amsterdam, The Netherlands, 2017; pp. 185–194.

- Fukuyama, M. Society 5.0: Aiming for a new human-centered society. Jpn. Spotlight 2018, 27, 47–50.

- Kadir, B.A.; Broberg, O.; da Conceicao, C.S. Current research and future perspectives on human factors and ergonomics in Industry 4.0. Comput. Ind. Eng. 2019, 137, 106004.

- Kummer, T.F.; Schäfer, K.; Todorova, N. Acceptance of hospital nurses toward sensor-based medication systems: A questionnaire survey. Int. J. Nurs. Stud. 2013, 50, 508–517.

- Sundar, S.S.; Waddell, T.F.; Jung, E.H. The Hollywood robot syndrome media effects on older adults’ attitudes toward robots and adoption intentions. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; IEEE: New Jersey, NJ, USA, 2016; pp. 343–350.

- Beer, J.M.; Prakash, A.; Mitzner, T.L.; Rogers, W.A. Understanding Robot Acceptance; Technical Report; Georgia Institute of Technology: Atlanta, GA, USA, 2011.

- Bröhl, C.; Nelles, J.; Brandl, C.; Mertens, A.; Schlick, C.M. TAM reloaded: A technology acceptance model for human-robot cooperation in production systems. In Proceedings of the International Conference on Human-Computer Interaction, Paris, France, 14–16 September 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 97–103.

- Jung, M.; Hinds, P. Robots in the wild: A time for more robust theories of human-robot interaction. ACM Trans. Hum.-Robot Interact. THRI 2018, 7, 2.

- Šabanović, S.; Reeder, S.; Kechavarzi, B. Designing robots in the wild: In situ prototype evaluation for a break management robot. J. Hum.-Robot Interact. 2014, 3, 70–88.

- Coronado, E.; Indurkhya, X.; Venture, G. Robots Meet Children, Development of Semi-Autonomous Control Systems for Children-Robot Interaction in the Wild. In Proceedings of the 2019 IEEE 4th International Conference on Advanced Robotics and Mechatronics (ICARM), Toyonaka, Japan, 3–5 July 2019; pp. 360–365.

- Venture, G.; Indurkhya, B.; Izui, T. Dance with me! Child-robot interaction in the wild. In Proceedings of the International Conference on Social Robotics, Tsukuba, Japan, 22–24 November 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 375–382.

- Foster, M.E.; Alami, R.; Gestranius, O.; Lemon, O.; Niemelä, M.; Odobez, J.M.; Pandey, A.K. The MuMMER project: Engaging human-robot interaction in real-world public spaces. In Proceedings of the International Conference on Social Robotics, Kansas City, MO, USA, 1–3 November 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 753–763.

- Bremner, P.; Koschate, M.; Levine, M. Humanoid robot avatars: An ‘in the wild’ usability study. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; IEEE: New Jersey, NJ, USA, 2016; pp. 624–629.

- Björling, E.A.; Thomas, K.; Rose, E.J.; Cakmak, M. Exploring teens as robot operators, users and witnesses in the wild. Front. Robot. AI 2020, 7, 5.

- Zguda, P.; Kołota, A.; Venture, G.; Sniezynski, B.; Indurkhya, B. Exploring the Role of Trust and Expectations in CRI Using In-the-Wild Studies. Electronics 2021, 10, 347.

- Nomura, T.T.; Syrdal, D.S.; Dautenhahn, K. Differences on social acceptance of humanoid robots between Japan and the UK. In Proceedings of the Procs 4th Int Symposium on New Frontiers in Human-Robot Interaction, The Society for the Study of Artificial Intelligence and the Simulation of Behaviour, Canterbury, UK, 21–22 April 2015.

- Haring, K.S.; Mougenot, C.; Ono, F.; Watanabe, K. Cultural differences in perception and attitude towards robots. Int. J. Affect. Eng. 2014, 13, 149–157.

- Brondi, S.; Pivetti, M.; Di Battista, S.; Sarrica, M. What do we expect from robots? Social representations, attitudes and evaluations of robots in daily life. Technol. Soc. 2021, 66, 101663.

- Sinnema, L.; Alimardani, M. The Attitude of Elderly and Young Adults Towards a Humanoid Robot as a Facilitator for Social Interaction. In Proceedings of the International Conference on Social Robotics, Madrid, Spain, 26–29 November 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 24–33.

- Smakman, M.H.; Konijn, E.A.; Vogt, P.; Pankowska, P. Attitudes towards social robots in education: Enthusiast, practical, troubled, sceptic, and mindfully positive. Robotics 2021, 10, 24.

- Chen, S.C.; Jones, C.; Moyle, W. Health Professional and Workers Attitudes Towards the Use of Social Robots for Older Adults in Long-Term Care. Int. J. Soc. Robot. 2019, 12, 1–13.

- Elprama, S.A.; Jewell, C.I.; Jacobs, A.; El Makrini, I.; Vanderborght, B. Attitudes of factory workers towards industrial and collaborative robots. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 113–114.

- Aaltonen, I.; Salmi, T. Experiences and expectations of collaborative robots in industry and academia: Barriers and development needs. Procedia Manuf. 2019, 38, 1151–1158.

- Elprama, B.; El Makrini, I.; Jacobs, A. Acceptance of collaborative robots by factory workers: A pilot study on the importance of social cues of anthropomorphic robots. In Proceedings of the International Symposium on Robot and Human Interactive Communication, New York, NY, USA, 26–31 August 2016.

- Kim, M.G.; Lee, J.; Aichi, Y.; Morishita, H.; Makino, M. Effectiveness of robot exhibition through visitors experience: A case study of Nagoya Science Hiroba exhibition in Japan. In Proceedings of the 2016 International Symposium on Micro-NanoMechatronics and Human Science (MHS), Nagoya, Japan, 28–30 November 2016; IEEE: New Jersey, NJ, USA, 2016; pp. 1–5.

- Vidal, D.; Gaussier, P. Visitor or artefact! An experiment with a humanoid robot at the Musée du Quai Branly in Paris. In Wording Robotics; Springer: Berlin/Heidelberg, Germany, 2019; pp. 101–117.

- Karreman, D.; Ludden, G.; Evers, V. Visiting cultural heritage with a tour guide robot: A user evaluation study in-the-wild. In Proceedings of the International Conference on Social Robotics, Paris, France, 26–30 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 317–326.

- Joosse, M.; Evers, V. A guide robot at the airport: First impressions. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 149–150.

- Kanda, T.; Shiomi, M.; Miyashita, Z.; Ishiguro, H.; Hagita, N. A Communication Robot in a Shopping Mall. IEEE Trans. Robot. 2010, 26, 897–913.

- Drolshagen, S.; Pfingsthorn, M.; Gliesche, P.; Hein, A. Acceptance of Industrial Collaborative Robots by People With Disabilities in Sheltered Workshops. Front. Robot. AI 2021, 7, 173.

- Rossato, C.; Pluchino, P.; Cellini, N.; Jacucci, G.; Spagnolli, A.; Gamberini, L. Facing with Collaborative Robots: The Subjective Experience in Senior and Younger Workers. Cyberpsychology Behav. Soc. Netw. 2021, 24, 349–356.

- Kawada Robotics. 2022. Available online: http://nextage.kawada.jp/en/open/ (accessed on 2 December 2022).

- Coronado, E.; Venture, G. Towards IoT-Aided Human–Robot Interaction Using NEP and ROS: A Platform-Independent, Accessible and Distributed Approach. Sensors 2020, 20, 1500.

- Shi, Y.; Chen, Y.; Rincon Ardila, L.; Venture, G.; Bourguet, M.L. A Visual Sensing Platform for Robot Teachers. In Proceedings of the 7th International Conference on Human-Agent Interaction, Kyoto, Japan, 6–10 October 2019; pp. 200–201.

- Nagamachi, M.; Lokman, A.M. Innovations of Kansei Engineering; CRC Press: Boca Raton, FL, USA, 2016.

- Coronado, E.; Venture, G.; Yamanobe, N. Applying Kansei/Affective Engineering Methodologies in the Design of Social and Service Robots: A Systematic Review. Int. J. Soc. Robot. 2021, 13, 1161–1171.

- Nagamachi, M. Kansei/Affective Engineering; CRC Press: Boca Raton, FL, USA, 2016.

- Nomura, T.; Suzuki, T.; Kanda, T.; Kato, K. Measurement of negative attitudes toward robots. Interact. Stud. 2006, 7, 437–454.

- Nomura, T.; Suzuki, T.; Kanda, T.; Kato, K. Altered attitudes of people toward robots: Investigation through the Negative Attitudes toward Robots Scale. In Proceedings of the AAAI-06 Workshop on Human Implications of Human-Robot Interaction, Menlo Park, CA, USA, 17 July 2006; Volume 2006, pp. 29–35.

- Gliem, J.A.; Gliem, R.R. Calculating, interpreting, and reporting Cronbach’s alpha reliability coefficient for Likert-type scales. In Proceedings of the Midwest Research-to-Practice Conference in Adult, Continuing, and Community Education, Milwaukee, WI, USA, 13–15 October 2003.

- Drolet, A.L.; Morrison, D.G. Do we really need multiple-item measures in service research? J. Serv. Res. 2001, 3, 196–204.

- Diamantopoulos, A.; Sarstedt, M.; Fuchs, C.; Wilczynski, P.; Kaiser, S. Guidelines for choosing between multi-item and single-item scales for construct measurement: A predictive validity perspective. J. Acad. Mark. Sci. 2012, 40, 434–449.

- Bergkvist, L. Appropriate use of single-item measures is here to stay. Mark. Lett. 2015, 26, 245–255.

- Freed, L. Innovating Analytics: How the Next Generation of Net Promoter Can Increase Sales and Drive Business Results; John Wiley & Sons: Hoboken, NJ, USA, 2013.

- Sauro, J. Is a Single Item Enough to Measure a Construct? 2018. Available online: https://measuringu.com/single-multi-items/ (accessed on 2 December 2022).

- Martinez-Molina, A.; Boarin, P.; Tort-Ausina, I.; Vivancos, J.L. Assessing visitors’ thermal comfort in historic museum buildings: Results from a Post-Occupancy Evaluation on a case study. Build. Environ. 2018, 132, 291–302.

- Burchell, B.; Marsh, C. The effect of questionnaire length on survey response. Qual. Quant. 1992, 26, 233–244.

- Steyn, R. How many items are too many? An analysis of respondent disengagement when completing questionnaires. Afr. J. Hosp. Tour. Leis. 2017, 6, 41.

- Krosnick, J.A. Questionnaire design. In The Palgrave Handbook of Survey Research; Springer: Berlin/Heidelberg, Germany, 2018; pp. 439–455.

- Jiang, H.; Cheng, L. Public Perception and Reception of Robotic Applications in Public Health Emergencies Based on a Questionnaire Survey Conducted during COVID-19. Int. J. Environ. Res. Public Health 2021, 18, 10908.

- Bröhl, C.; Nelles, J.; Brandl, C.; Mertens, A.; Nitsch, V. Human–Robot Collaboration Acceptance Model: Development and Comparison for Germany, Japan, China and the USA. Int. J. Soc. Robot. 2019, 11, 709–726.

- Liang, Y.; Lee, S.A. Fear of Autonomous Robots and Artificial Intelligence: Evidence from National Representative Data with Probability Sampling. Int. J. Soc. Robot. 2017, 9, 379–384.

- Venture, G.; Kulić, D. Robot expressive motions: A survey of generation and evaluation methods. ACM Trans. Hum.-Robot Interact. THRI 2019, 8, 1–17.

- Nomura, T. Cultural differences in social acceptance of robots. In Proceedings of the 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28 August–1 September 2017; pp. 534–538.

- Romero, J.; Lado, N. Service robots and COVID-19: Exploring perceptions of prevention efficacy at hotels in generation Z. Int. J. Contemp. Hosp. Manag. 2021, 33, 4057–4078.

- Hoffman, G.; Ju, W. Designing robots with movement in mind. J. Hum.-Robot Interact. 2014, 3, 91–122.

- Helander, M.G.; Khalid, H.M. Underlying theories of hedonomics for affective and pleasurable design. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Orlando, FL, USA, 26–30 September 2005; SAGE Publications Sage CA: Los Angeles, CA, USA, 2005; Volume 49, pp. 1691–1695.

- Díaz, C.E.; Fernández, R.; Armada, M.; García, F. A research review on clinical needs, technical requirements, and normativity in the design of surgical robots. Int. J. Med. Robot. Comput. Assist. Surg. 2017, 13, e1801.

- Glende, S.; Conrad, I.; Krezdorn, L.; Klemcke, S.; Krätzel, C. Increasing the acceptance of assistive robots for older people through marketing strategies based on stakeholder needs. Int. J. Soc. Robot. 2016, 8, 355–369.

- Kirschgens, L.A.; Ugarte, I.Z.; Uriarte, E.G.; Rosas, A.M.; Vilches, V.M. Robot hazards: From safety to security. arXiv 2018, arXiv:1806.06681.

- Prassida, G.F.; Asfari, U. A conceptual model for the acceptance of collaborative robots in industry 5.0. Procedia Comput. Sci. 2022, 197, 61–67.

| Article | Robotic Platform | Setting | Task | Autonomy | Training Required | Participants |
|---|---|---|---|---|---|---|
| Muller et al. [2] | Universal Robot 5 robot arm | Laboratory | Assembly task | Fully autonomous | Yes | 90 subjects, mainly students from a technical university |
| Rossato et al. [35] | Universal Robot 10e robot arm | Laboratory | Collaborative task | Fully autonomous | Yes | 20 industrial senior and younger workers |
| Drolshagen et al. [34] | KUKA LBR iiwa 7 R800 (robot arm) | Closed room | The robot picks up wooden sticks to hand them over to the worker | Fully autonomous | No | 10 participants with mental or physical disabilities |
| Elprama et al. [26] | Baxter dual-arm robot | Closed room | Participants instruct the robot to put blocks inside boxes | Remote controlled | Yes | 11 car factory employees |
| This work | NEXTAGE Open dual-arm robot | Public space | The robot gives gifts to visitors according to their instructions and facial expression | Fully autonomous | No | Hundreds, but only 207 answered some questionnaires |

Semantic evaluation per dimension (values read as mean ± SD):

| Positive (1) | Negative (5) | Japanese | Non-J. | p | Male | Female | p | Novice | Expert | p | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Happy | Unhappy | 1.43 ± 0.68 | 1.14 ± 0.38 | 0.136 | 1.46 ± 0.71 | 1.29 ± 0.56 | 0.346 | 1.24 ± 0.60 | 1.55 ± 0.67 | 0.109 | 1.38 ± 0.64 |
| Interested | Boring | 1.28 ± 0.55 | 1.14 ± 0.38 | 0.447 | 1.27 ± 0.53 | 1.24 ± 0.54 | 0.844 | 1.24 ± 0.52 | 1.27 ± 0.55 | 0.836 | 1.26 ± 0.52 |
| Disappointed | Amused | 4.60 ± 0.63 | 5.00 ± 0.00 | 0.000 | 4.54 ± 0.65 | 4.81 ± 0.51 | 0.116 | 0.72 ± 0.61 | 4.59 ± 0.59 | 0.467 | 4.66 ± 0.59 |
| Relaxed | Anxious | 2.10 ± 1.22 | 1.86 ± 1.46 | 0.690 | 1.88 ± 0.99 | 2.29 ± 1.49 | 0.297 | 2.28 ± 1.43 | 1.82 ± 0.96 | 0.196 | 2.06 ± 1.23 |
| Safe | Danger | 1.25 ± 0.54 | 1.71 ± 1.50 | 0.447 | 1.23 ± 0.51 | 1.43 ± 0.98 | 0.409 | 1.28 ± 0.89 | 1.36 ± 0.58 | 0.702 | 1.32 ± 0.75 |
| Confused | Clear | 4.20 ± 1.14 | 3.71 ± 1.70 | 0.491 | 4.23 ± 1.18 | 4.00 ± 1.30 | 0.532 | 4.20 ± 1.29 | 4.05 ± 1.17 | 0.669 | 4.13 ± 1.21 |

Semantic evaluation per dimension (values read as mean ± SD):

| Positive (1) | Negative (5) | Japanese | Non-J. | p | Male | Female | p | Novice | Expert | p | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Smart | Stupid | 1.61 ± 0.72 | 1.33 ± 0.52 | 0.290 | 1.44 ± 0.58 | 1.76 ± 0.83 | 0.176 | 1.57 ± 0.66 | 1.57 ± 0.75 | 0.977 | 1.57 ± 0.69 |
| Simple | Complicated | 3.58 ± 1.00 | 3.17 ± 1.72 | 0.590 | 3.67 ± 1.11 | 3.29 ± 1.10 | 0.284 | 3.61 ± 1.12 | 3.43 ± 1.12 | 0.597 | 3.52 ± 1.10 |
| Dynamic | Static | 3.53 ± 1.20 | 2.20 ± 1.10 | 0.050 | 3.26 ± 1.10 | 3.56 ± 1.50 | 0.488 | 3.36 ± 1.33 | 3.38 ± 1.20 | 0.964 | 3.37 ± 1.24 |
| Responsive | Slow | 3.00 ± 1.23 | 2.50 ± 0.55 | 0.16 | 2.96 ± 1.26 | 2.88 ± 1.05 | 0.820 | 3.04 ± 1.26 | 2.81 ± 1.08 | 0.511 | 2.93 ± 1.16 |
| Lifelike | Artificial | 2.89 ± 1.29 | 2.17 ± 1.17 | 0.205 | 2.48 ± 1.19 | 3.29 ± 1.31 | 0.046 | 2.57 ± 1.27 | 3.05 ± 1.28 | 0.218 | 2.80 ± 1.27 |
| Emotional | Emotionless | 3.05 ± 1.11 | 2.83 ± 1.33 | 0.714 | 2.93 ± 1.11 | 3.18 ± 1.19 | 0.489 | 3.04 ± 1.15 | 3.00 ± 1.14 | 0.900 | 3.02 ± 1.12 |
| Useful | Useless | 1.89 ± 1.01 | 1.50 ± 0.84 | 0.330 | 1.81 ± 0.96 | 1.88 ± 1.05 | 0.832 | 1.65 ± 0.88 | 2.05 ± 1.07 | 0.192 | 1.84 ± 0.98 |
| Familiar | Unknown | 3.39 ± 1.35 | 2.50 ± 0.84 | 0.053 | 3.11 ± 1.45 | 3.53 ± 1.07 | 0.278 | 3.26 ± 1.39 | 3.29 ± 1.27 | 0.951 | 3.27 ± 1.30 |
| Desirable | Undesirable | 1.84 ± 0.89 | 1.20 ± 0.45 | 0.029 | 1.63 ± 0.84 | 2.00 ± 0.89 | 0.189 | 1.78 ± 1.04 | 1.75 ± 0.64 | 0.901 | 1.77 ± 0.86 |
| Cute | Ugly | 1.95 ± 1.09 | 1.33 ± 0.52 | 0.043 | 1.81 ± 0.96 | 1.94 ± 1.20 | 0.716 | 1.87 ± 0.97 | 1.86 ± 1.15 | 0.969 | 1.86 ± 1.04 |
| Modern | Old | 1.57 ± 0.83 | 1.33 ± 0.52 | 0.374 | 1.35 ± 0.69 | 1.82 ± 0.88 | 0.070 | 1.61 ± 0.78 | 1.45 ± 0.83 | 0.523 | 1.53 ± 0.79 |
| Attractive | Unattractive | 1.79 ± 1.04 | 1.33 ± 0.52 | 0.116 | 1.67 ± 1.00 | 1.82 ± 1.01 | 0.619 | 1.74 ± 1.01 | 1.71 ± 1.01 | 0.935 | 1.73 ± 0.99 |
| Like | Dislike | 1.68 ± 0.87 | 1.00 ± 0.00 | 0.000 | 1.44 ± 0.75 | 1.82 ± 0.95 | 0.175 | 1.65 ± 0.78 | 1.52 ± 0.93 | 0.623 | 1.59 ± 0.83 |

| Group | P1 (n) | P1 (%) | P2 (n) | P2 (%) | P3 (n) | P3 (%) | P4 (n) | P4 (%) |
|---|---|---|---|---|---|---|---|---|
| Considered answers | 78 | 100% | 67 | 100% | 47 | 100% | 44 | 100% |
| Japanese | 57 | 73% | 47 | 70% | 40 | 85% | 38 | 86% |
| Non Japanese | 22 | 28% | 20 | 30% | 7 | 15% | 6 | 14% |
| Male | 62 | 79% | 55 | 82% | 26 | 55% | 27 | 61% |
| Female | 15 | 19% | 12 | 18% | 21 | 45% | 17 | 39% |
| Novice | 21 | 27% | 22 | 33% | 25 | 53% | 23 | 52% |
| Expert | 57 | 73% | 45 | 67% | 22 | 47% | 20 | 46% |
| OP-1 | 18 | 38% | | | | | | |
| OP-2 | 21 | 45% | | | | | | |

| Type | Mean | SD |
|---|---|---|
| Interaction (S1) | 1.90 | 0.72 |
| Social (S2) | 2.60 | 0.96 |
| Emotion (S3) | 2.62 | 0.97 |

| Groups | Interaction (S1) mean | Interaction (S1) SD | Interaction (S1) p | Social (S2) mean | Social (S2) SD | Social (S2) p | Emotion (S3) mean | Emotion (S3) SD | Emotion (S3) p |
|---|---|---|---|---|---|---|---|---|---|
| Japanese | 1.90 | 0.64 | 0.890 | 2.47 | 0.97 | 0.084 | 2.73 | 1.01 | 0.130 |
| Non Japanese | 1.87 | 0.92 | | 2.90 | 0.88 | | 2.37 | 0.82 | |
| Male | 1.91 | 0.71 | 0.890 | 2.61 | 1.02 | 0.809 | 2.62 | 0.99 | 0.963 |
| Female | 1.88 | 0.78 | | 2.55 | 0.66 | | 2.61 | 0.86 | |
| Novice | 2.07 | 0.65 | 0.168 | 2.71 | 0.85 | 0.484 | 2.59 | 0.92 | 0.852 |
| Expert | 1.83 | 0.74 | | 2.54 | 1.01 | | 2.64 | 1.00 | |
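The group comparisons in the table above pair two means and standard deviations with one p-value per subscale. As a minimal sketch of how such a p-value could be recomputed from summary statistics alone, the Python below applies Welch's unequal-variance t-test. The choice of Welch's test is an assumption, since this extract does not say which test the authors used. The group sizes (47 Japanese, 20 non-Japanese) are also an assumption, borrowed from the P2 column of the participants table purely for illustration. All function names are hypothetical.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Return (t, df) for Welch's t-test computed from summary statistics."""
    v1 = sd1 ** 2 / n1  # squared standard error of group 1
    v2 = sd2 ** 2 / n2  # squared standard error of group 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value via Simpson integration of the density on [0, |t|]."""
    upper = abs(t)
    if upper == 0.0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_mass = total * h / 3  # probability mass between 0 and |t|
    return max(0.0, 1.0 - 2.0 * central_mass)


if __name__ == "__main__":
    # Social (S2) subscale: Japanese vs. non-Japanese (group sizes are assumed)
    t, df = welch_t(2.47, 0.97, 47, 2.90, 0.88, 20)
    print(f"t = {t:.3f}, df = {df:.1f}, p = {two_sided_p(t, df):.3f}")
```

The numerical integration keeps the sketch free of third-party dependencies; with SciPy available, `scipy.stats.ttest_ind_from_stats` with `equal_var=False` would be the more usual route.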

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Capy, S.; Rincon, L.; Coronado, E.; Hagane, S.; Yamaguchi, S.; Leve, V.; Kawasumi, Y.; Kudou, Y.; Venture, G. Expanding the Frontiers of Industrial Robots beyond Factories: Design and in the Wild Validation. Machines 2022, 10, 1179. https://doi.org/10.3390/machines10121179
