An Integrated Application of Motion Sensing and Eye Movement Tracking Techniques in Perceiving User Behaviors in a Large Display Interaction

Abstract

1. Introduction

2. Related Work

2.1. Large Display-Based Interaction

2.2. Parallel Use of Public Large Displays

2.3. Sensor-Supported Interaction Evaluation on Large Displays

3. Methods

3.1. Participants

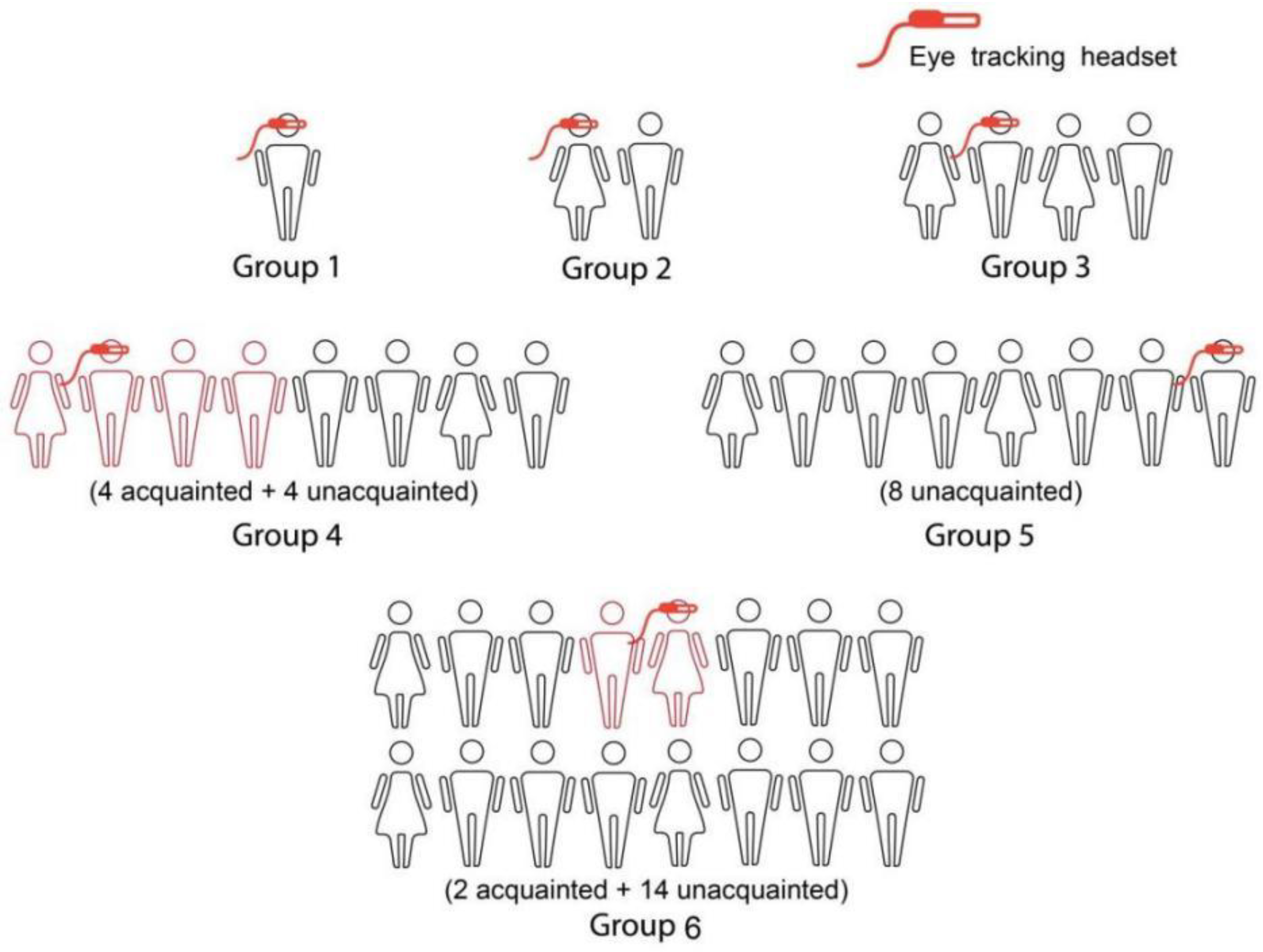

- (1) The 1st form was the smallest group, with only one participant, intended to reflect single-user use of the large display;

- (2) The 2nd form was a pair of 2 unacquainted participants, intended to reflect the simplest case of parallel use of the large display by two users;

- (3) The 3rd form was a mid-sized group of 4 unacquainted participants, intended to simulate parallel use of the large display by a small group;

- (4) The 4th form was a larger group of 8 participants, half of whom were acquainted and had close social connections with each other, e.g., couples, classmates, family members, or collaborators. This form was intended to simulate the most common composition of parallel interaction in real-world contexts, in which some users are strangers while others are friends or family members;

- (5) The 5th form also had 8 participants, but all of them were unacquainted with one another. This form was intended to simulate parallel use of the large display by a loosely coupled large group;

- (6) The 6th form was the largest group, with 16 participants including both acquainted and unacquainted members; the number of acquainted participants ranged from 2 to 8 across groups. This form was intended to simulate a crowd gathered in front of a specific information display.
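As a quick check on the scale of the study, the group sizes listed above can be combined with the ten groups per form given in the "Group Amount" row of the participant table. The sketch below assumes each group counts separately, so it tallies group memberships (participant slots); if some people took part in more than one group, the number of distinct participants would be smaller. The names `GROUP_SIZES` and `total_memberships` are illustrative only.

```python
# Group size for each of the six group forms, as listed in Section 3.1.
GROUP_SIZES: dict[str, int] = {
    "1st": 1,
    "2nd": 2,
    "3rd": 4,
    "4th": 8,   # half acquainted, half unacquainted
    "5th": 8,   # all unacquainted
    "6th": 16,  # mixed acquainted and unacquainted
}

# Each form was run with 10 groups ("Group Amount" row of the participant table).
GROUPS_PER_FORM = 10


def total_memberships(sizes: dict[str, int], groups_per_form: int) -> int:
    """Count group memberships (participant slots) across all group forms."""
    return sum(size * groups_per_form for size in sizes.values())


if __name__ == "__main__":
    print(total_memberships(GROUP_SIZES, GROUPS_PER_FORM))  # 390
```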

3.2. Apparatus

3.3. Procedures

3.4. Evaluation Metrics

- (1) Task completion time (efficiency): the interval from when the task interface was launched to when the participant reported having completed all tasks (i.e., having found the information required to answer all 10 questions). Each participant’s completion time was recorded, and a mean completion time was calculated for each group.

- (2) User concentration: This measures how intently the participants engaged in the information-searching task. In this study, user concentration refers specifically to the group’s overall concentration on the task; high concentration means that all participants are deeply engaged in the task. User-to-object proximity is widely taken to reflect a user’s attention to, or concentration on, the object [22]: closer proximity generally indicates deeper concentration, whereas a larger distance indicates reduced interest in the object. On this basis, researchers have developed ‘proxemic interface’ [22] and ‘ambient display’ [23] techniques, in which user-to-display proximity is detected and the user interface adapts in response. In the present study, each participant’s spatial distance from the center of the large display was measured and used as a quantitative metric of user concentration. However, because group size (i.e., the number of parallel users) may also influence where participants stand in front of the large display, user-to-display distance alone is not a sufficiently accurate measure of concentration on the task. To obtain a more objective measure, the participants’ eye movement data were also collected to check their visual attention on the large display.

- (3) User-perceived system usability: This provides an overall evaluation of the large display information-searching system in terms of convenience, ease of use, functional satisfaction, efficiency, and so on. It was measured with a System Usability Scale (SUS) questionnaire adapted from Brooke’s version [41]. The scale consists of 10 statements: 5 are worded positively, e.g., “the system interface is easy to use”, and the other 5 negatively, e.g., “the system is cumbersome to use”. Each statement was rated on a 5-point Likert scale from “absolutely disagree” to “absolutely agree”. The 10 statements of the SUS questionnaire are presented in Section 4.3;

- (4) Overall user experience: A user experience scale (UES) derived from the AttrakDiff scale [42] was used to measure the participants’ perceived user experience of the large display system and the entire task procedure. The UES contains 28 bipolar items, each rated on a 7-point scale from “−3” to “+3”. The 28 items are grouped into 4 dimensions: (1) pragmatic quality; (2) hedonic quality (stimulation); (3) hedonic quality (identity); and (4) attractiveness; see Section 4.3.
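The two concentration measures described in item (2) can be sketched as follows. This is a minimal illustration, not the study’s processing pipeline: it assumes the motion sensor reports a tracked body joint as an (x, y, z) position in millimetres in the same coordinate frame as the display center, and that gaze samples have already been mapped to display coordinates in pixels with the origin at the top-left corner. The function names and the 1920 × 1080 resolution in the usage example are hypothetical.

```python
import math
from typing import Iterable, Sequence

# (x, y, z) position in millimetres, in the sensor's coordinate frame (assumed).
Point3 = tuple[float, float, float]


def distance_to_display_center(joint: Point3, display_center: Point3) -> float:
    """Euclidean distance (mm) between a tracked joint and the display center."""
    return math.dist(joint, display_center)


def mean_distance(joints: Iterable[Point3], display_center: Point3) -> float:
    """Average user-to-display distance over a sequence of tracked frames."""
    distances = [distance_to_display_center(j, display_center) for j in joints]
    if not distances:
        raise ValueError("no tracked frames")
    return sum(distances) / len(distances)


def on_display_ratio(
    gaze: Sequence[tuple[float, float]], width: float, height: float
) -> float:
    """Fraction of gaze samples that land inside a width x height display area.

    Samples outside the area count as off-display.
    """
    if not gaze:
        raise ValueError("no gaze samples")
    inside = sum(1 for x, y in gaze if 0 <= x <= width and 0 <= y <= height)
    return inside / len(gaze)


if __name__ == "__main__":
    center = (0.0, 0.0, 0.0)
    frames = [(300.0, 0.0, 400.0), (0.0, 0.0, 500.0)]  # hypothetical joint positions
    print(mean_distance(frames, center))  # 500.0
    samples = [(10, 10), (-5, 20), (100, 50), (200, 1200)]  # hypothetical gaze points
    print(on_display_ratio(samples, 1920, 1080))  # 0.5
```

Averaging over frames smooths out sensor jitter; a real pipeline would also need to handle frames where the sensor loses the joint.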

4. Analyses and Results

4.1. How Participants Approach the Large Display

4.2. How Participants Participate in the Large Display Interaction

4.3. How Participants Interact with the Large Display

- (1) “Pragmatic quality” (PQ): measures whether the system functions and the interface display are appropriate for achieving the task;

- (2) “Hedonic quality–identity” (HQ-I): measures whether using the system and its interface is comfortable and satisfying;

- (3) “Hedonic quality–stimulation” (HQ-S): measures whether using the system helps develop personal skills and knowledge;

- (4) “Attractiveness” (ATT): gives an overall assessment of the appeal of, and subjective preference for, the system and its interfaces.
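Scoring the UES reduces to averaging item ratings within each of the four dimensions above. The sketch assumes the standard AttrakDiff layout of 7 bipolar items per dimension (4 × 7 = 28) and that ratings have already been recoded so that +3 is always the positive pole; the function name and the example ratings are illustrative, not taken from the study.

```python
from statistics import mean

# The four UES/AttrakDiff dimensions listed above.
DIMENSIONS = ("PQ", "HQ-I", "HQ-S", "ATT")
ITEMS_PER_DIMENSION = 7  # 4 dimensions x 7 bipolar items = 28 items


def dimension_scores(ratings: dict[str, list[int]]) -> dict[str, float]:
    """Mean rating per dimension on the -3..+3 scale.

    Assumes reversed-polarity items have already been recoded so that +3 is
    the positive pole for every item.
    """
    scores: dict[str, float] = {}
    for dim in DIMENSIONS:
        items = ratings[dim]
        if len(items) != ITEMS_PER_DIMENSION:
            raise ValueError(f"{dim}: expected {ITEMS_PER_DIMENSION} items")
        if any(r < -3 or r > 3 for r in items):
            raise ValueError(f"{dim}: ratings must lie between -3 and +3")
        scores[dim] = float(mean(items))
    return scores


if __name__ == "__main__":
    example = {  # one hypothetical participant
        "PQ": [2, 2, 2, 2, 2, 2, 2],
        "HQ-I": [1, 2, 3, 0, 1, 2, 0],
        "HQ-S": [0, 1, 0, 1, 0, 1, 0],
        "ATT": [3, 3, 2, 3, 2, 3, 3],
    }
    print(dimension_scores(example))
```

Group-level results would then be the mean of these per-participant dimension scores.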

5. Discussion and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Serial No. | Question Statement |
|---|---|
| Q1 | What is the departure time of the flight ‘CA147’? |
| Q2 | What is the estimated arrival time of the flight ‘AK542’? |
| Q3 | What is the earliest flight from Hangzhou to Chiengmai this month? |
| Q4 | What is the flight duration of ‘D5816’? |
| Q5 | What is the status of the flight ‘3K832’ on 17 August? |
| Q6 | What is the origin of the flight ‘FD497’? |
| Q7 | What is the operating company of the flight ‘CA1711’? |
| Q8 | What is the destination of the flight ‘DRA321’? |
| Q9 | What is the status of the flight ‘CA672’ at the end of August? |
| Q10 | What is the estimated arrival time of the first flight to Bali Island on 1 September? |
| Q11 | What is the last flight to Bali Island this month? |
| Q12 | What is the flight duration from Hangzhou to Bali Island on 1 September? |
| Q13 | How many Air China flights operate on 18 August? |
| Q14 | What is the earliest departure time of flights to Dubai next month? |
| Q15 | What is the operating company of the flight ‘4AS35’? |
| Q16 | What is the status of the last flight from the Spring company today? |
| Q17 | What is the status of the flight ‘FD497’ on 8 September? |
| Q18 | What is the departure time of the earliest flight to Cambodia? |
| Q19 | What is the flight duration of ‘D7303’ to Colombo? |
| Q20 | What is the latest flight to Chiengmai at the end of this month? |
| Q21 | How many flights operate from Hangzhou to Phuket? |
| Q22 | What is the estimated arrival time of the flight ‘AKP781’ to Beijing? |
| Q23 | What is the earliest flight No. from Hangzhou to Tokyo in the following days? |
| Q24 | How many hours will the flight ‘FD497’ take from Hangzhou to Chiengmai? |
| Q25 | What is the departure time of the first flight on 18 August? |
| Q26 | Which airline does the flight No. ‘FD497’ belong to? |
| Q27 | Does the flight No. ‘3K832’ take off before noon? |
| Q28 | What is the name of the airline that operates the flight No. ‘CA1711’? |
| Q29 | What is the fast flight No. from Hangzhou to Colombo? |
| Q30 | How long does it take to fly from Hangzhou to Chiengmai with the Thai Air company? |

References

- [1] Reipschlager, P.; Flemisch, T.; Dachselt, R. Personal Augmented Reality for Information Visualization on Large Interactive Displays. IEEE Trans. Vis. Comput. Graph. 2021, 27, 1182–1192.

- [2] Vetter, J. Tangible Signals—Prototyping Interactive Physical Sound Displays. In Proceedings of the 15th International Conference on Tangible, Embedded, and Embodied Interaction (TEI ’21), Salzburg, Austria, 14–17 February 2021; pp. 1–6.

- [3] Finke, M.; Tang, A.; Leung, R.; Blackstock, M. Lessons learned: Game design for large public displays. In Proceedings of the 3rd International Conference on Digital Interactive Media in Entertainment and Arts, Athens, Greece, 10–12 September 2008; pp. 26–33.

- [4] Ardito, C.; Lanzilotti, R.; Costabile, M.F.; Desolda, G. Integrating Traditional Learning and Games on Large Displays: An Experimental Study. J. Educ. Technol. Soc. 2013, 16, 44–56.

- [5] Chen, L.; Liang, H.-N.; Wang, J.; Qu, Y.; Yue, Y. On the Use of Large Interactive Displays to Support Collaborative Engagement and Visual Exploratory Tasks. Sensors 2021, 21, 8403.

- [6] Lischke, L.; Mayer, S.; Wolf, K.; Henze, N.; Schmidt, A. Screen arrangements and interaction areas for large display work places. In Proceedings of the 5th ACM International Symposium on Pervasive Displays, Oulu, Finland, 20–26 June 2016; pp. 228–234.

- [7] Rui, N.M.; Santos, P.A.; Correia, N. Using Personalisation to improve User Experience in Public Display Systems with Mobile Interaction. In Proceedings of the 17th International Conference on Advances in Mobile Computing & Multimedia, Munich, Germany, 2–4 December 2019; pp. 3–12.

- [8] Coutrix, C.; Kai, K.; Kurvinen, E.; Jacucci, G.; Mäkelä, R. FizzyVis: Designing for playful information browsing on a multi-touch public display. In Proceedings of the 2011 Conference on Designing Pleasurable Products and Interfaces, Milano, Italy, 22–25 June 2011; pp. 1–8.

- [9] Veriscimo, E.D.; Junior, J.; Digiampietri, L.A. Evaluating User Experience in 3D Interaction: A Systematic Review. In Proceedings of the XVI Brazilian Symposium on Information Systems, São Bernardo do Campo, Brazil, 3–6 November 2020; pp. 1–8.

- [10] Mateescu, M.; Pimmer, C.; Zahn, C.; Klinkhammer, D.; Reiterer, H. Collaboration on large interactive displays: A systematic review. Hum. Comput. Interact. 2019, 36, 243–277.

- [11] Wehbe, R.R.; Dickson, T.; Kuzminykh, A.; Nacke, L.E.; Lank, E. Personal Space in Play: Physical and Digital Boundaries in Large-Display Cooperative and Competitive Games. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–14.

- [12] Ghare, M.; Pafla, M.; Wong, C.; Wallace, J.R.; Scott, S.D. Increasing Passersby Engagement with Public Large Interactive Displays: A Study of Proxemics and Conation. In Proceedings of the 2018 ACM International Conference on Interactive Surfaces and Spaces, Tokyo, Japan, 25–28 November 2018; pp. 19–32.

- [13] Ardito, C.; Buono, P.; Costabile, M.F.; Desolda, G. Interaction with Large Displays: A Survey. ACM Comput. Surv. 2015, 47, 1–38.

- [14] Tan, D.; Czerwinski, M.; Robertson, G. Women go with the (optical) flow. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Ft. Lauderdale, FL, USA, 5–10 April 2003; pp. 209–215.

- [15] Peltonen, P.; Kurvinen, E.; Salovaara, A.; Jacucci, G.; Saarikko, P. It’s Mine, Don’t Touch!: Interactions at a large multi-touch display in a city centre. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008; pp. 1285–1294.

- [16] Müller, J.; Walter, R.; Bailly, G.; Nischt, M.; Alt, F. Looking glass: A field study on noticing interactivity of a shop window. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 297–306.

- [17] Nacenta, M.A.; Jakobsen, M.R.; Dautriche, R.; Hinrichs, U.; Carpendale, S. The LunchTable: A multi-user, multi-display system for information sharing in casual group interactions. In Proceedings of the 2012 International Symposium on Pervasive Displays, Porto, Portugal, 4–5 June 2012; pp. 1–6.

- [18] Dostal, J.; Hinrichs, U.; Kristensson, P.O.; Quigley, A. SpiderEyes: Designing attention- and proximity-aware collaborative interfaces for wall-sized displays. In Proceedings of the 19th International Conference on Intelligent User Interfaces, Haifa, Israel, 24–27 February 2014; pp. 143–152.

- [19] Marshall, P.; Hornecker, E.; Morris, R.; Dalton, N.S.; Rogers, Y. When the fingers do the talking: A study of group participation with varying constraints to a tabletop interface. In Proceedings of the 3rd IEEE International Workshop on Horizontal Interactive Human Computer Systems, Amsterdam, The Netherlands, 1–3 October 2008; pp. 33–40.

- [20] Sakakibara, Y.; Matsuda, Y.; Komuro, T.; Ogawa, K. Simultaneous interaction with a large display by many users. In Proceedings of the 8th ACM International Symposium on Pervasive Displays, Palermo, Italy, 12–14 June 2019; pp. 1–2.

- [21] Norman, D.A.; Nielsen, J. Gestural interfaces: A step backward in usability. Interactions 2010, 17, 46–49.

- [22] Greenberg, S.; Marquardt, N.; Ballendat, T.; Diaz-Marino, R.; Wang, M. Proxemic interactions: The new ubicomp? Interactions 2011, 18, 42–50.

- [23] Raudanjoki, Z.; Gen, A.; Hurtig, K.; Häkkilä, J. ShadowSparrow: An Ambient Display for Information Visualization and Notification. In Proceedings of the 19th International Conference on Mobile and Ubiquitous Multimedia, Essen, Germany, 22–25 November 2020; pp. 351–353.

- [24] Paul, S.A.; Morris, M.R. Sensemaking in Collaborative Web Search. Hum. Comput. Interact. 2011, 26, 72–122.

- [25] Jakobsen, M.; Hornbæk, K. Proximity and physical navigation in collaborative work with a multi-touch wall-display. In Proceedings of the CHI ’12 Extended Abstracts on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 2519–2524.

- [26] Shoemaker, G.; Tsukitani, T.; Kitamura, Y.; Booth, K.S. Body-centric interaction techniques for very large wall displays. In Proceedings of the 6th Nordic Conference on Human-Computer Interaction: Extending Boundaries, Reykjavik, Iceland, 16–20 October 2010; pp. 463–472.

- [27] Jakobsen, M.R.; Haile, Y.S.; Knudsen, S.; Hornbaek, K. Information Visualization and Proxemics: Design Opportunities and Empirical Findings. IEEE Trans. Vis. Comput. Graph. 2013, 19, 2386–2395.

- [28] Huang, E.M.; Koster, A.; Borchers, J. Overcoming Assumptions and Uncovering Practices: When Does the Public Really Look at Public Displays? In Proceedings of the International Conference on Pervasive Computing, Sydney, Australia, 19–22 May 2008; pp. 228–243.

- [29] Houben, S.; Weichel, C. Overcoming interaction blindness through curiosity objects. In Proceedings of the CHI ’13 Extended Abstracts on Human Factors in Computing Systems, Paris, France, 27 April 2013; pp. 1539–1544.

- [30] Ju, W.; Sirkin, D. Animate Objects: How Physical Motion Encourages Public Interaction. In Proceedings of the 5th International Conference on Persuasive Technology, Copenhagen, Denmark, 7–10 June 2010; pp. 40–51.

- [31] Alt, F.; Schneegaß, S.; Schmidt, A.; Müller, J.; Memarovic, N. How to evaluate public displays. In Proceedings of the 2012 International Symposium on Pervasive Displays, Porto, Portugal, 4–5 June 2012; pp. 1–6.

- [32] Williamson, J.R.; Hansen, L.K. Designing performative interactions in public spaces. In Proceedings of the ACM Conference on Designing Interactive Systems, Newcastle, UK, 11–15 June 2012; pp. 791–792.

- [33] Hansen, L.K.; Rico, J.; Jacucci, G.; Brewster, S.A.; Ashbrook, D. Performative interaction in public space. In Proceedings of the CHI ’11 Extended Abstracts on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; pp. 49–52.

- [34] Lou, X.; Fu, L.; Yan, L.; Li, X.; Hansen, P. Distance Effects on Visual Searching and Visually-Guided Free Hand Interaction on Large Displays. Int. J. Ind. Ergon. 2022, 90, 103318.

- [35] Faity, G.; Mottet, D.; Froger, J. Validity and Reliability of Kinect v2 for Quantifying Upper Body Kinematics during Seated Reaching. Sensors 2022, 22, 2735.

- [36] Lou, X.; Chen, Z.; Hansen, P.; Peng, R. Asymmetric Free-Hand Interaction on a Large Display and Inspirations for Designing Natural User Interfaces. Symmetry 2022, 14, 928.

- [37] Shehu, I.S.; Wang, Y.; Athuman, A.M.; Fu, X. Remote Eye Gaze Tracking Research: A Comparative Evaluation on Past and Recent Progress. Electronics 2021, 10, 3165.

- [38] Lee, H.C.; Lee, W.O.; Cho, C.W.; Gwon, S.Y.; Park, K.R.; Lee, H.; Cha, J. Remote Gaze Tracking System on a Large Display. Sensors 2013, 13, 13439–13463.

- [39] Bhatti, O.S.; Barz, M.; Sonntag, D. EyeLogin—Calibration-free Authentication Method for Public Displays Using Eye Gaze. In Proceedings of the ACM Symposium on Eye Tracking Research and Applications (ETRA ’21), Virtual Event, 25–27 May 2021; pp. 1–7.

- [40] Wang, Y.; Ding, X.; Yuan, G.; Fu, X. Dual-Cameras-Based Driver’s Eye Gaze Tracking System with Non-Linear Gaze Point Refinement. Sensors 2022, 22, 2326.

- [41] Brooke, J. SUS: A Quick and Dirty Usability Scale. In Usability Evaluation in Industry; CRC Press: Boca Raton, FL, USA, 1996; Volume 189, pp. 4–7.

- [42] Hassenzahl, M.; Burmester, M.; Koller, F. AttrakDiff: Ein Fragebogen zur Messung wahrgenommener hedonischer und pragmatischer Qualität. Mensch Comput. 2003, 57, 187–196.

| Group Form | 1st Form | 2nd Form | 3rd Form | 4th Form | 5th Form | 6th Form |
|---|---|---|---|---|---|---|
| Group Amount | 10 Groups | 10 Groups | 10 Groups | 10 Groups | 10 Groups | 10 Groups |
| Group Size | N = 1 | N = 2 | N = 4 | N = 8 | N = 8 | N = 16 |
| Group Composition (gender, age): Group 1 | Male, 22 | 2 Males, 20 + 21 | 2 Males + 2 Females, 20, 20, 28, 35 | (acquainted) 4 Males, all aged 22; (unacquainted) 1 Male + 3 Females, 22 × 2, 26, 30 | 5 Males + 3 Females, 20 × 2, 21 × 3, 24, 27, 30 | 10 Males + 6 Females, 19, 20 × 2, 21 × 4, 24 × 2, 26, 27, 30, 33 × 2, 37, 41 |
| Group 2 | Female, 21 | Male + Female, 21 + 21 | 3 Males + 1 Female, 22 × 4 | (acquainted) 1 Male + 3 Females, 23 × 2, 23, 44; (unacquainted) 2 Males + 2 Females, 20, 23, 30, 58 | 8 Males, all aged 27 | 7 Males + 9 Females, 22 × 5, 26 × 3, 30 × 2, 32 × 2, 34, 36, 40, 46 |
| Group 3 | Male, 23 | 2 Females, 21 + 21 | 2 Males + 2 Females, 20 × 2, 27, 40 | (acquainted) 2 Males + 2 Females, all aged 23; (unacquainted) 3 Males + 1 Female, 30 × 2, 33, 37 | 4 Males + 4 Females, 25 × 4, 27, 30, 43, 55 | 8 Males + 8 Females, 22 × 6, 23 × 6, 26 × 4 |
| Group 4 | Female, 23 | Male + Female, 22 + 24 | 1 Male + 3 Females, 24, 28, 30, 44 | (acquainted) 1 Male + 3 Females, 24 × 3, 40; (unacquainted) 4 Males, 20, 24, 25, 41 | 3 Males + 5 Females, 26 × 3, 27 × 2, 33, 37, 45 | 10 Males + 6 Females, 21 × 4, 23 × 4, 25 × 4, 28 × 4 |
| Group 5 | Male, 23 | 2 Females, 19 + 26 | 4 Females, 30 × 2, 36, 46 | (acquainted) 3 Males + 1 Female, 30 × 2, 33, 35; (unacquainted) 2 Males + 2 Females, 27 × 2, 30, 32 | 8 Males, 27 × 2, 30 × 3, 32 × 2, 40 | 4 Males + 12 Females, 24 × 4, 25 × 3, 27 × 3, 29 × 3, 33 × 2, 38 |
| Group 6 | Male, 25 | 2 Males, 23 + 30 | 2 Males + 2 Females, all aged 24 | (acquainted) 2 Males + 2 Females, 25 × 3, 35; (unacquainted) 3 Males + 1 Female, 23, 24, 25, 30 | 5 Males + 3 Females, 30 × 4, 37, 40, 41, 47 | 7 Males + 9 Females, 20 × 2, 21 × 4, 22 × 3, 27 × 2, 30 × 2, 34 × 2, 40 |
| Group 7 | Female, 30 | 2 Females, 27 + 33 | 3 Males + 1 Female, 30 × 3, 35 | (acquainted) 2 Males + 1 Female, 24 × 2, 30, 35; (unacquainted) 2 Males + 2 Females, 23 × 3, 42 | 4 Males + 4 Females, 25 × 4, 27 × 2, 33, 35 | 9 Males + 7 Females, 19 × 2, 21 × 3, 22 × 3, 25 × 2, 27 × 2, 29 × 2, 33, 35 |
| Group 8 | Male, 32 | 2 Males, 30 + 33 | 2 Males + 2 Females, 22 × 2, 33, 50 | (acquainted) 3 Males + 1 Female, all aged 24; (unacquainted) 4 Males, 26 × 2, 29, 30 | 4 Males + 4 Females, 30 × 3, 32 × 3, 41 | 6 Males + 10 Females, 23 × 3, 24 × 5, 25 × 3, 26 × 2, 35 × 2, 38 |
| Group 9 | Male, 40 | Male + Female, 38 + 46 | 1 Male + 3 Females, 23, 30, 37, 42 | (acquainted) 2 Males + 2 Females, all aged 23; (unacquainted) 2 Males + 2 Females, 20 × 2, 26, 49 | 6 Males + 2 Females, 27 × 2, 30 × 2, 31 × 2, 33, 38 | 8 Males + 8 Females, 30 × 5, 32 × 6, 37 × 2, 40, 42, 47 |
| Group 10 | Female, 47 | 2 Males, 40 + 52 | 2 Males + 2 Females, 35 × 2, 37, 39 | (acquainted) 2 Males + 2 Females, 23 × 3, 30; (unacquainted) 4 Males, all aged 25 | 5 Males + 3 Females, 23 × 3, 25 × 3, 30, 34 | 9 Males + 7 Females, 20 × 2, 25 × 4, 26 × 3, 30 × 2, 31 × 2, 32 × 2, 36 |

| Group Form | Sample Size | Mean Distance (mm) | Std. Dev. (mm) | F-Value | p-Value |
|---|---|---|---|---|---|
| 1st form | 50 | 637.52 | / | F(4, 196) = 69.38 | <0.001 |
| 2nd form | 100 | 729.76 | 189.26 | | |
| 3rd form | 200 | 1211.05 | 327.38 | | |
| 4th form | 200 (acquainted) + 200 (unacquainted) | 1506.27 | 296.53 | | |
| 5th form | 400 | 2000.12 | 388.18 | | |
| 6th form | 210 (acquainted) + 590 (unacquainted) | 2388.29 | 511.17 | | |
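The F values in the distance table appear to come from a one-way analysis of variance across group forms. The text does not state which software or data layout was used, so the following is only a pure-Python sketch of the standard one-way ANOVA statistic, F = MS_between / MS_within with degrees of freedom (k − 1, N − k), applied to toy numbers rather than the study’s data. The function name `one_way_anova` is illustrative.

```python
from statistics import mean


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA over `groups`."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or any(not g for g in groups) or n_total <= k:
        raise ValueError("need >= 2 non-empty groups and more observations than groups")
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]
    # Between-group variation: size-weighted squared deviations of group means.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group variation: squared deviations from each group's own mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, group_means))
    if ss_within == 0:
        raise ValueError("zero within-group variance; F is undefined")
    df_between, df_within = k - 1, n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


if __name__ == "__main__":
    # Toy example with two small groups, not the study's data.
    print(one_way_anova([[1, 2, 3], [4, 5, 6]]))  # (13.5, 1, 4)
```

A p-value would then come from the upper tail of the F distribution with those degrees of freedom, for instance `scipy.stats.f.sf(f_value, df_between, df_within)` if SciPy is available.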

| Group Form | Sample Size | Mean Proximity (mm) | Std. Dev. (mm) | F-Value | p-Value |
|---|---|---|---|---|---|
| 2nd form | 100 | 862.79 | 82.20 | F(3, 147) = 823.56 | <0.001 |
| 3rd form | 200 | 589.63 | 61.93 | | |
| 4th form | 200 (acquainted) + 200 (unacquainted) | 269.74 | 34.01 | | |
| 5th form | 400 | 340.05 | 54.43 | | |
| 6th form | 210 (acquainted) + 590 (unacquainted) | 149.86 | 29.28 | | |

Mean position frequencies of each participant in the group, by group form:

| Group Form | Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | Group 6 | Group 7 | Group 8 | Group 9 | Group 10 | Mean | Std. Dev. | F-Value | p-Value |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1st form | 5.20 | 4.40 | 4.20 | 4.00 | 4.60 | 3.80 | 5.00 | 4.80 | 4.80 | 4.40 | 4.52 | 0.68 | F(4, 196) = 19.93 | <0.001 |
| 2nd form | 7.60 | 7.20 | 8.00 | 7.80 | 7.40 | 8.40 | 8.20 | 8.00 | 7.40 | 7.20 | 7.72 | 0.86 | | |
| 3rd form | 12.20 | 11.40 | 11.20 | 10.60 | 11.60 | 12.40 | 12.00 | 11.80 | 11.40 | 10.80 | 11.54 | 1.16 | | |
| 4th form (acq.) | 8.80 | 8.60 | 7.60 | 9.60 | 10.40 | 10.00 | 9.80 | 9.60 | 10.40 | 9.80 | 11.80 | 2.59 | | |
| 4th form (unacq.) | 12.00 | 13.00 | 14.80 | 14.60 | 15.20 | 13.60 | 13.40 | 15.60 | 14.40 | 14.80 | | | | |
| 5th form | 14.80 | 21.80 | 19.80 | 17.00 | 18.60 | 20.40 | 17.40 | 15.20 | 16.00 | 16.40 | 17.74 | 2.34 | | |
| 6th form (acq.) | 11.00 | 13.40 | 10.80 | 7.80 | 9.40 | 11.20 | 12.00 | 13.20 | 12.80 | 11.60 | 17.63 | 6.81 | | |
| 6th form (unacq.) | 24.00 | 22.00 | 25.60 | 27.80 | 20.40 | 19.60 | 26.00 | 24.80 | 24.40 | 24.80 | | | | |
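In the position-frequency table, the 4th and 6th forms each report a single Mean and Std. Dev. covering their acquainted and unacquainted rows together. Taking the twenty subgroup values of each form as one sample, the arithmetic mean and the sample (n − 1) standard deviation reproduce the tabulated 11.80 and 2.59 (4th form) and 17.63 and 6.81 (6th form). The short check below shows this; the helper name `pooled_summary` is illustrative.

```python
from statistics import mean, stdev

# Mean position frequencies per group, copied from the table above.
FOURTH_ACQ = [8.80, 8.60, 7.60, 9.60, 10.40, 10.00, 9.80, 9.60, 10.40, 9.80]
FOURTH_UNACQ = [12.00, 13.00, 14.80, 14.60, 15.20, 13.60, 13.40, 15.60, 14.40, 14.80]
SIXTH_ACQ = [11.00, 13.40, 10.80, 7.80, 9.40, 11.20, 12.00, 13.20, 12.80, 11.60]
SIXTH_UNACQ = [24.00, 22.00, 25.60, 27.80, 20.40, 19.60, 26.00, 24.80, 24.40, 24.80]


def pooled_summary(*subgroups: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of all subgroup values taken together."""
    values = [v for group in subgroups for v in group]
    return mean(values), stdev(values)  # stdev uses the n - 1 denominator


if __name__ == "__main__":
    for label, acq, unacq in (("4th", FOURTH_ACQ, FOURTH_UNACQ), ("6th", SIXTH_ACQ, SIXTH_UNACQ)):
        m, s = pooled_summary(acq, unacq)
        # Prints 11.80 / 2.59 for the 4th form and 17.63 / 6.81 for the 6th form.
        print(f"{label} form: mean = {m:.2f}, SD = {s:.2f}")
```

The same pooling appears to apply to the shared Mean cells of the 4th and 6th forms in the task completion time table.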

Participants’ mean task completion time in different group forms:

| Group Form | Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | Group 6 | Group 7 | Group 8 | Group 9 | Group 10 | Mean | Std. Dev. | t-Test Value | p-Value |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1st form | 260.0 | 253.8 | 264.4 | 260.4 | 279.8 | 267.2 | 256.4 | 265.0 | 262.8 | 254.2 | 262.40 | 7.63 | | |
| 2nd form | 282.8 | 256.6 | 270.4 | 263.2 | 260.8 | 269.4 | 268.8 | 272.0 | 270.4 | 268.8 | 268.32 | 7.09 | | |
| 3rd form | 293.8 | 242.6 | 277.4 | 251.7 | 273.4 | 270.4 | 270.6 | 274.4 | 282.2 | 268.4 | 270.49 | 11.16 | | |
| 4th form (acq.) | 222.6 | 243.6 | 222.5 | 217.0 | 204.4 | 211.2 | 209.8 | 225.2 | 210.4 | 224.6 | 250.98 | 33.98 | t(49) = −4.31 | <0.001 |
| 4th form (unacq.) | 276.8 | 282.4 | 279.2 | 288.4 | 298.8 | 279.6 | 271.4 | 283.8 | 288.2 | 279.6 | | | | |
| 5th form | 266.4 | 260.5 | 276.5 | 268.8 | 282.2 | 266.8 | 285.5 | 276.6 | 270.8 | 262.0 | 271.61 | 9.66 | | |
| 6th form (acq.) | 225.4 | 215.6 | 209.8 | 186.8 | 200.5 | 220.4 | 213.8 | 204.6 | 226.0 | 214.4 | 255.55 | 47.00 | t(49) = −6.25 | <0.001 |
| 6th form (unacq.) | 325.5 | 294.5 | 290.6 | 274.8 | 290.2 | 311.4 | 295.5 | 304.6 | 286.2 | 320.4 | | | | |

System usability statements (5 positive + 5 negative) and mean rating scores by group form:

| No. | Statement | 1st Form | 2nd Form | 3rd Form | 4th Form (acq.) | 4th Form (unacq.) | 5th Form | 6th Form (acq.) | 6th Form (unacq.) |
|---|---|---|---|---|---|---|---|---|---|
| P1 | I would like to use this system frequently. | 5.00 | 4.50 | 4.00 | 4.50 | 4.00 | 3.88 | 4.00 | 3.57 |
| P2 | The system interface is easy to use. | 4.00 | 3.50 | 3.50 | 3.25 | 3.75 | 3.13 | 3.50 | 3.00 |
| P3 | Functions in the system are well designed. | 5.00 | 5.00 | 4.75 | 4.75 | 4.25 | 4.25 | 3.50 | 3.79 |
| P4 | Common users can learn to use this system very quickly. | 4.00 | 4.50 | 4.25 | 5.00 | 3.50 | 3.63 | 4.00 | 3.71 |
| P5 | I am confident to use this system. | 5.00 | 4.00 | 4.25 | 4.75 | 4.00 | 3.88 | 3.50 | 3.50 |
| N1 | This system is unnecessarily complex. | 1.00 | 1.00 | 1.00 | 1.25 | 1.50 | 1.50 | 1.00 | 1.29 |
| N2 | I need a technical person to help me while using this system. | 1.00 | 1.00 | 1.00 | 1.25 | 1.00 | 1.13 | 1.50 | 1.57 |
| N3 | The system is cumbersome to use. | 1.00 | 1.00 | 1.25 | 0.75 | 1.50 | 1.13 | 1.00 | 2.14 |
| N4 | There are too many inconsistent functions in the system. | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.25 | 1.25 |
| N5 | I need to learn a lot before I can operate this system. | 2.00 | 1.50 | 1.50 | 1.25 | 1.50 | 1.50 | 1.25 | 1.25 |
| | Overall usability rating score | 92.50 | 90.00 | 87.50 | 91.88 | 82.50 | 81.25 | 81.25 | 75.18 |
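The overall usability row follows the standard SUS scoring rule [41]: each positive statement contributes its rating minus 1, each negative statement contributes 5 minus its rating, and the sum (0 to 40) is multiplied by 2.5 to give a score from 0 to 100. Because the rule is linear, applying it to the mean item ratings gives the mean SUS score, and doing so reproduces the tabulated overall scores up to rounding of the mean ratings (for example, 92.50 for the 1st form and 87.50 for the 3rd form). A minimal sketch, with an illustrative function name:

```python
def sus_score(positive: list[float], negative: list[float]) -> float:
    """SUS score (0-100) from ratings on a 1-5 scale.

    `positive` holds the five positively worded statements (P1-P5) and
    `negative` the five negatively worded ones (N1-N5).
    """
    if len(positive) != 5 or len(negative) != 5:
        raise ValueError("SUS expects 5 positive and 5 negative statements")
    # Agreeing with a positive statement and disagreeing with a negative one both score high.
    raw = sum(r - 1 for r in positive) + sum(5 - r for r in negative)
    return raw * 2.5


if __name__ == "__main__":
    # Mean ratings for the 1st group form, taken from the table above.
    print(sus_score([5.00, 4.00, 5.00, 4.00, 5.00], [1.00, 1.00, 1.00, 1.00, 2.00]))  # 92.5
```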

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lou, X.; Fu, L.; Song, X.; Ma, M.; Hansen, P.; Zhao, Y.; Duan, Y. An Integrated Application of Motion Sensing and Eye Movement Tracking Techniques in Perceiving User Behaviors in a Large Display Interaction. Machines 2023, 11, 73. https://doi.org/10.3390/machines11010073

Lou X, Fu L, Song X, Ma M, Hansen P, Zhao Y, Duan Y. An Integrated Application of Motion Sensing and Eye Movement Tracking Techniques in Perceiving User Behaviors in a Large Display Interaction. Machines. 2023; 11(1):73. https://doi.org/10.3390/machines11010073

Chicago/Turabian Style: Lou, Xiaolong, Lili Fu, Xuanbai Song, Mengzhen Ma, Preben Hansen, Yaqin Zhao, and Yujie Duan. 2023. "An Integrated Application of Motion Sensing and Eye Movement Tracking Techniques in Perceiving User Behaviors in a Large Display Interaction" Machines 11, no. 1: 73. https://doi.org/10.3390/machines11010073

APA Style: Lou, X., Fu, L., Song, X., Ma, M., Hansen, P., Zhao, Y., & Duan, Y. (2023). An Integrated Application of Motion Sensing and Eye Movement Tracking Techniques in Perceiving User Behaviors in a Large Display Interaction. Machines, 11(1), 73. https://doi.org/10.3390/machines11010073