Anthropomorphic Design and Self-Reported Behavioral Trust: The Case of a Virtual Assistant in a Highly Automated Car

Abstract

1. Introduction

2. Materials and Methods

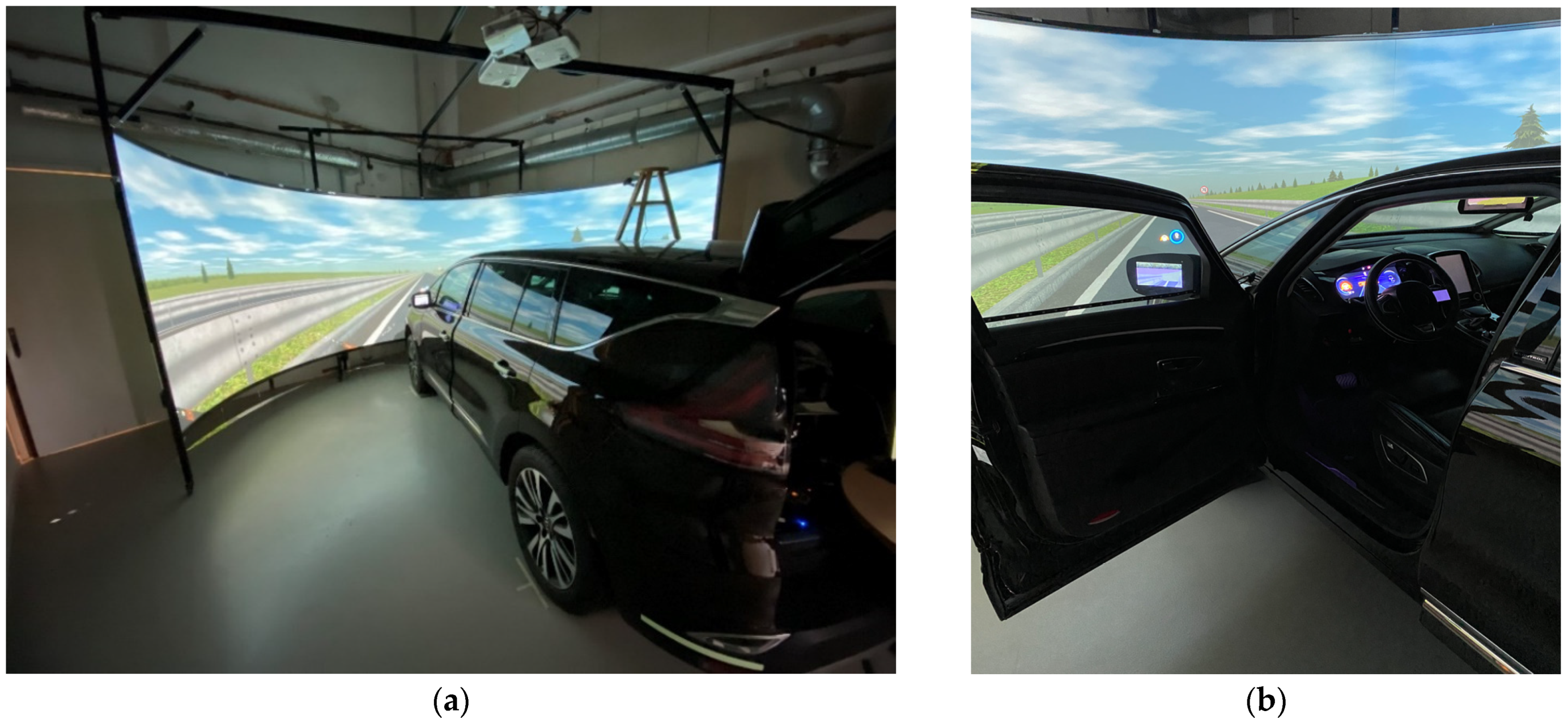

2.1. Driving Simulation and Automated Driving System (Environment)

2.2. Experimental Factors

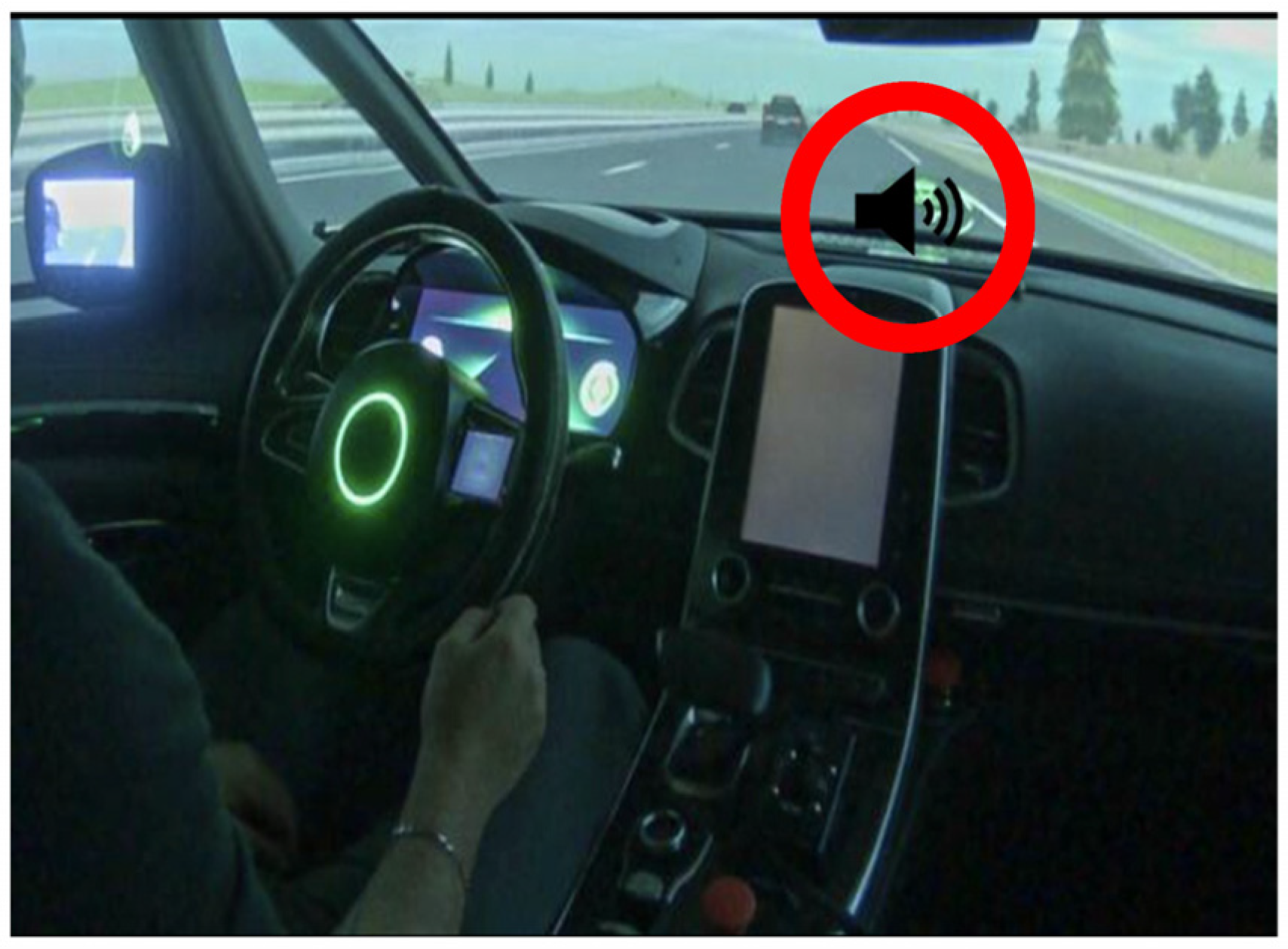

2.2.1. Baseline Interface

- Visual interface: the dashboard shows the speed, the availability of the driving modes, and the time available in automated mode. A small screen integrated into the steering wheel lets the driver switch driving modes (automated to/from manual) and displays the status of the autonomous mode (available, activated, or deactivated). The head-up display (HUD) replicates most of the dashboard information, and an icon in the middle of the windshield shows the status of the autonomous mode and the time remaining before the driver must take over.

- Sound interface: alert tones with different tonalities and rhythms, played locally through the driver’s headrest.

- Haptic interface: messages delivered by actuators integrated into the backrest and seat cushion of the driver’s seat.

2.2.2. The “Vocal Assistant” Interface

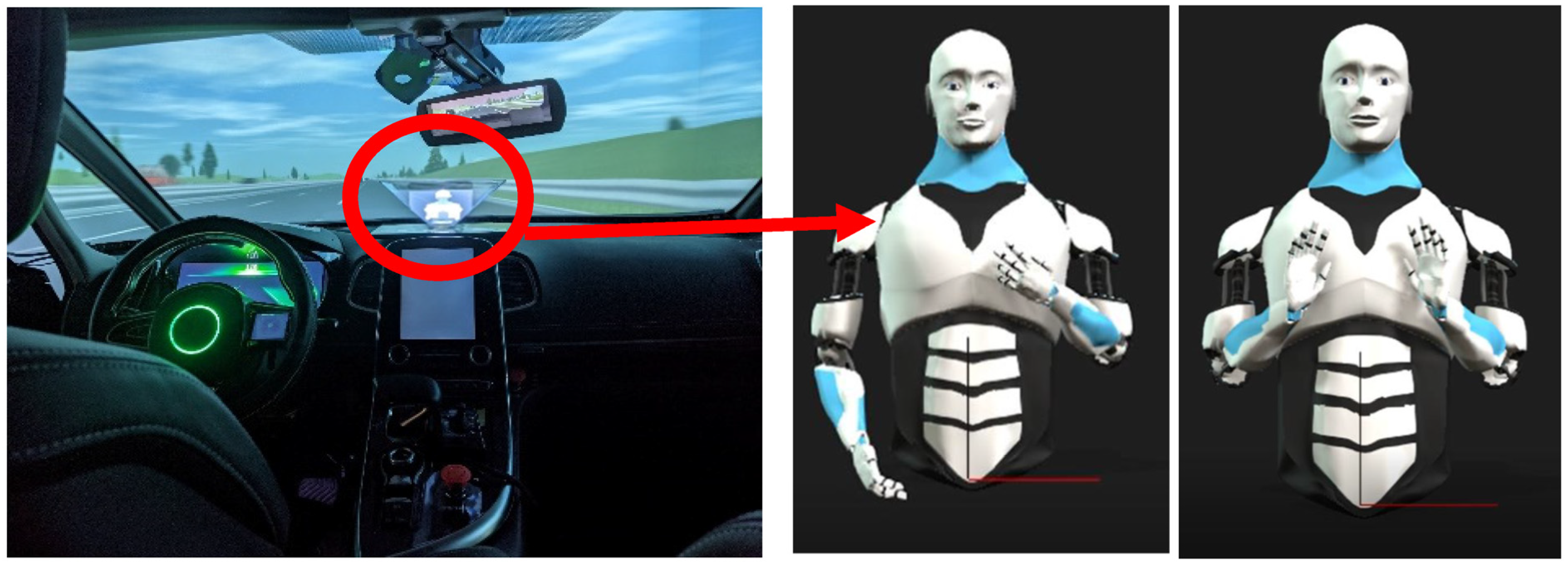

2.2.3. The “Visual Assistant” Interface

2.3. Participants

2.4. Procedure

2.5. Tasks

“The driving scenario starts on a highway ramp. I ask you to start the vehicle and merge onto the highway. Once on the highway, activate the autonomous mode as soon as it is proposed. When you have completed the activation, I will give you a smartphone that you will use to play the game 2048. If the takeover alert is triggered at any time during the scenario, you must stop the game and regain control of the vehicle as soon as possible.”

2.6. Data Collection and Analysis

3. Results

3.1. Perception of Anthropomorphism

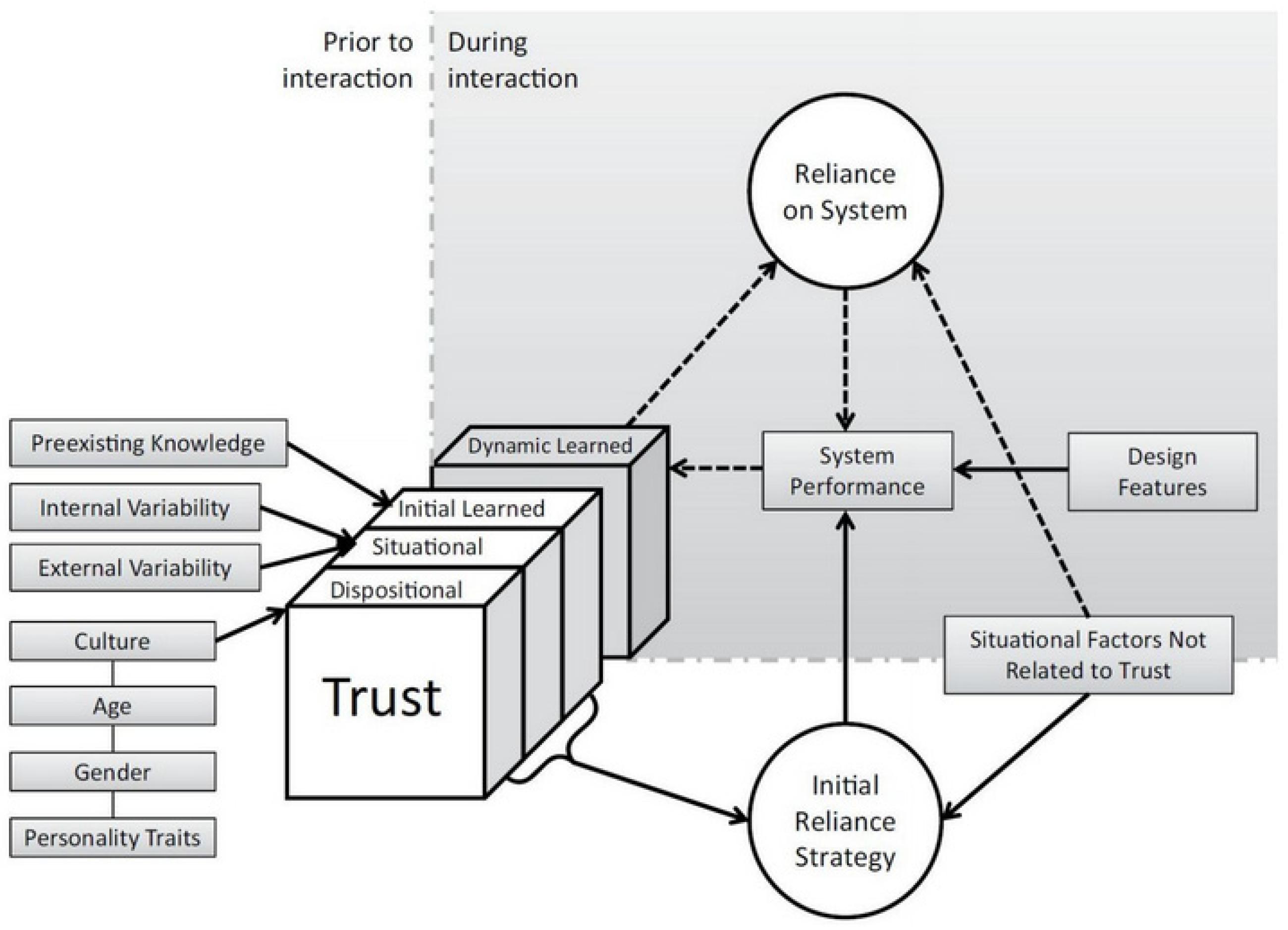

3.2. Trust

3.3. Correlation between Anthropomorphism and Trust

3.4. Performance

3.4.1. TOR 60 Scenario

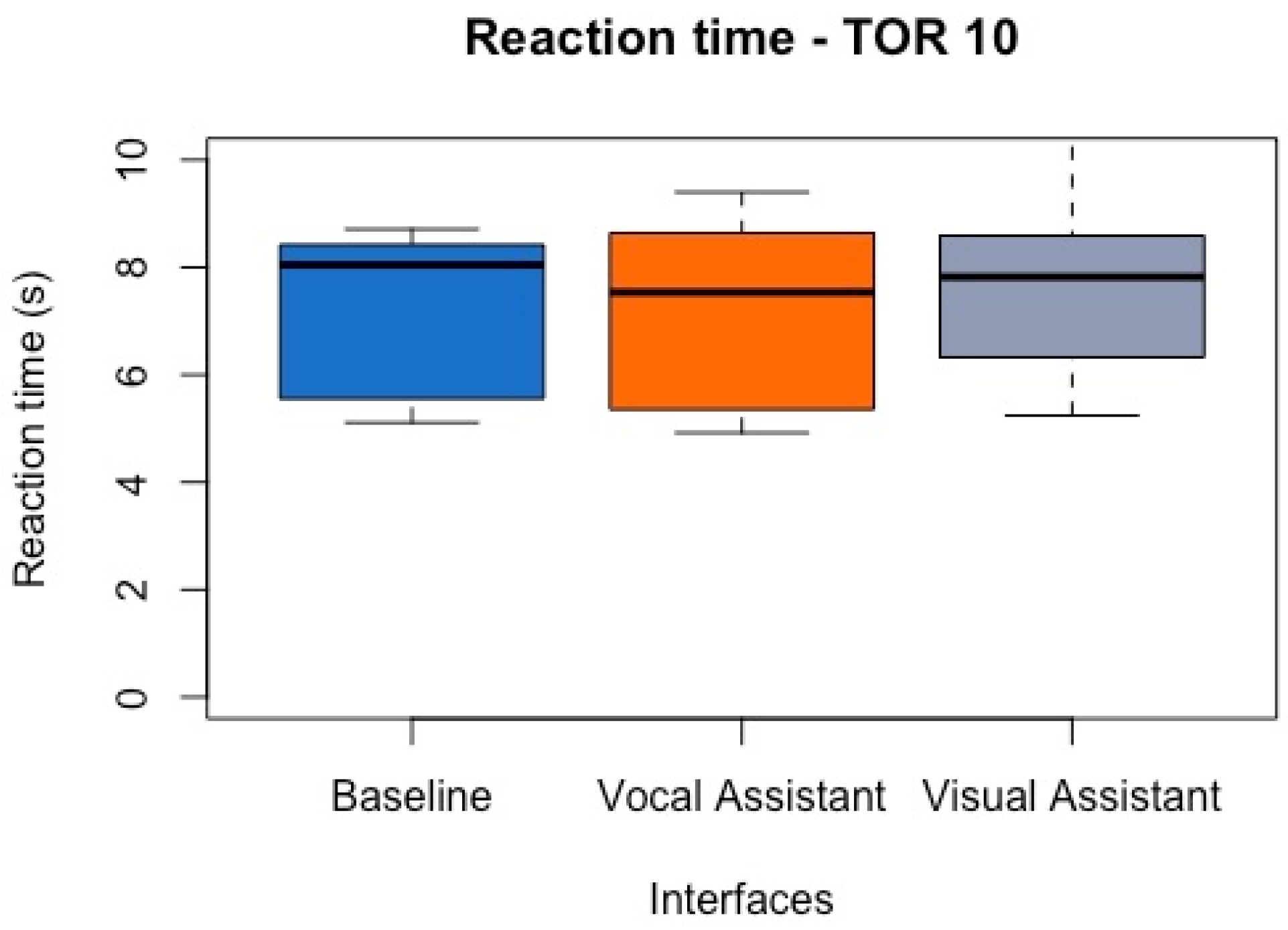

3.4.2. TOR 10 Scenario

4. Conclusions

4.1. Anthropomorphism

4.2. Trust

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

References

- Litman, T. Autonomous Vehicle Implementation Predictions: Implications for Transport Planning; Victoria Transport Policy Institute: Victoria, BC, Canada, 2018; Volume 28.

- Biondi, F.; Alvarez, I.; Jeong, K.-A. Human–Vehicle Cooperation in Automated Driving: A Multidisciplinary Review and Appraisal. Int. J. Hum.–Comput. Interact. 2019, 35, 932–946.

- Biondi, F.; Lohani, M.; Hopman, R.; Mills, S.; Cooper, J.; Strayer, D. 80 MPH and out-of-the-loop: Effects of real-world semi-automated driving on driver workload and arousal. In Proceedings of the Conference of the Human Factors and Ergonomics Society, Los Angeles, CA, USA, 1–5 October 2018.

- Stanton, N.A.; Young, M.S. Driver behaviour with adaptive cruise control. Ergonomics 2005, 48, 1294–1313.

- Vollrath, M.; Schleicher, S.; Gelau, C. The influence of cruise control and adaptive cruise control on driving behaviour—A driving simulator study. Accid. Anal. Prev. 2011, 43, 1134–1139.

- Liu, P.; Yang, R.; Xu, Z. Public Acceptance of Fully Automated Driving: Effects of Social Trust and Risk/Benefit Perceptions. Risk Anal. 2019, 39, 326–341.

- Knack, S. Trust, Associational Life and Economic Performance. In The Contribution of Human and Social Capital to Sustained Economic Growth and Well-Being; Helliwell, J.F., Ed.; Human Resources Development: Quebec, QC, Canada, 2001.

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An Integrative Model of Organizational Trust. Acad. Manag. Rev. 1995, 20, 709–734.

- Muir, B.M. Trust in automation: I. Theoretical issues in the study of trust and human intervention in automated systems. Ergonomics 1994, 37, 1905–1922.

- Rajaonah, B. Rôle de la Confiance de L’opérateur dans son Interaction avec une Machine Autonome sur la Coopération Humain-Machine. Doctoral Thesis, Paris 8, Paris, France, 2006.

- French, B.; Duenser, A.; Heathcote, A. Trust in Automation: A Literature Review; Commonwealth Scientific and Industrial Research Organisation (CSIRO): Canberra, Australia, 2018.

- Kohn, S.C.; de Visser, E.J.; Wiese, E.; Lee, Y.-C.; Shaw, T.H. Measurement of Trust in Automation: A Narrative Review and Reference Guide. Front. Psychol. 2021, 12, 604977.

- Jian, J.-Y.; Bisantz, A.M.; Drury, C.G. Foundations for an Empirically Determined Scale of Trust in Automated Systems. Int. J. Cogn. Ergon. 2000, 4, 53–71.

- Waytz, A.; Heafner, J.; Epley, N. The Mind in the Machine: Anthropomorphism Increases Trust in an Autonomous Vehicle. J. Exp. Soc. Psychol. 2014, 52, 113–117.

- Garcia, D.; Kreutzer, C.; Badillo-Urquiola, K.; Mouloua, M. Measuring Trust of Autonomous Vehicles: A Development and Validation Study. In HCI International 2015—Posters’ Extended Abstracts; Stephanidis, C., Ed.; Springer International Publishing: Cham, Switzerland, 2015; Volume 529, pp. 610–615.

- Yagoda, R.E.; Gillan, D.J. You want me to trust a ROBOT? The development of a human–robot interaction trust scale. Int. J. Soc. Robot. 2012, 4, 235–248.

- Hester, M.; Lee, K.; Dyre, B.P. “Driver Take Over”: A preliminary exploration of driver trust and performance in autonomous vehicles. In Human Factors and Ergonomics Society Annual Meeting; Sage: Los Angeles, CA, USA, 2017; Volume 61, pp. 1969–1973.

- Gaudiello, I.; Zibetti, E.; Lefort, S.; Chetouani, M.; Ivaldi, S. Trust as indicator of robot functional and social acceptance. An experimental study on user conformation to iCub answers. Comput. Hum. Behav. 2016, 61, 633–655.

- Hergeth, S.; Lorenz, L.; Vilimek, R.; Krems, J.F. Keep your scanners peeled: Gaze behavior as a measure of automation trust during highly automated driving. Hum. Factors J. Hum. Factors Ergon. Soc. 2016, 58, 509–519.

- Morris, D.M.; Erno, J.M.; Pilcher, J.J. Electrodermal response and automation trust during simulated self-driving car use. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2017, 61, 1759–1762.

- Miller, D.; Johns, M.; Mok, B.; Gowda, N.; Sirkin, D.; Lee, K.; Ju, W. Behavioral measurement of trust in automation. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2016, 60, 1849–1853.

- Lee, J.D.; See, K.A. Trust in Automation: Designing for Appropriate Reliance. Hum. Factors 2004, 46, 50–80.

- Parasuraman, R.; Riley, V. Humans and Automation: Use, Misuse, Disuse, Abuse. Hum. Factors 1997, 39, 230–253.

- Niu, D.; Terken, J.; Eggen, B. Anthropomorphizing information to enhance trust in autonomous vehicles. Hum. Factors Ergon. Manuf. Serv. Ind. 2017, 28, 352–359.

- Large, D.R.; Harrington, K.; Burnett, G.; Luton, J.; Thomas, P.; Bennett, P. To Please in a Pod: Employing an Anthropomorphic Agent-Interlocutor to Enhance Trust and User Experience in an Autonomous, Self-Driving Vehicle. In Proceedings of the Automotive UI ‘19: 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands, 21–25 September 2019; pp. 49–59.

- Reeves, B.; Nass, C. The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places. In Bibliovault OAI Repository; The University of Chicago Press: Chicago, IL, USA, 1996.

- Valette-Florence, R.; de Barnier, V. Les lecteurs sont-ils capables d’anthropomorphiser leur magazine? Une réponse par la méthode de triangulation. Manag. Avenir 2009, 27, 54–72.

- Admoni, H.; Scassellati, B. A Multi-Category Theory of Intention. CogSci, 2012. Available online: https://scazlab.yale.edu/sites/default/files/files/Admoni-Cogsci-12.pdf (accessed on 19 October 2023).

- Epley, N.; Waytz, A.; Cacioppo, J.T. On Seeing Human: A Three-Factor Theory of Anthropomorphism. Psychol. Rev. 2007, 114, 864–886.

- Złotowski, J.; Strasser, E.; Bartneck, C. Dimensions of anthropomorphism: From humanness to human likeness. In Proceedings of the HRI’14: ACM/IEEE International Conference on Human-Robot Interaction, Bielefeld, Germany, 3–6 March 2014; pp. 66–73.

- DiSalvo, C.F.; Gemperle, F.; Forlizzi, J.; Kiesler, S. All robots are not created equal: The design and perception of humanoid robot heads. In Proceedings of the DIS02: Designing Interactive Systems 2002, London, UK, 25–28 June 2002; pp. 321–326.

- Fink, J. Anthropomorphism and Human Likeness in the Design of Robots and Human-Robot Interaction. In Social Robotics; Ge, S.S., Khatib, O., Cabibihan, J.-J., Simmons, R., Williams, M.-A., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 199–208.

- Häuslschmid, R.; von Bülow, M.; Pfleging, B.; Butz, A. Supporting Trust in Autonomous Driving. In Proceedings of the IUI’17: 22nd International Conference on Intelligent User Interfaces, Limassol, Cyprus, 13–16 March 2017; pp. 319–329.

- Zihsler, J.; Hock, P.; Walch, M.; Dzuba, K.; Schwager, D.; Szauer, P.; Rukzio, E. Carvatar: Increasing Trust in Highly-Automated Driving Through Social Cues. In Proceedings of the AutomotiveUI’16: 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ann Arbor, MI, USA, 24–26 October 2016; pp. 9–14. [Google Scholar]

- Vermersch, P. L’entretien d’explicitation en Formation Initiale et Continue; ESF: Paris, France, 1994. [Google Scholar]

- Holthausen, B.E.; Wintersberger, P.; Walker, B.N.; Riener, A. Situational Trust Scale for Automated Driving (STS-AD): Development and Initial Validation. In Proceedings of the AutomotiveUI ‘20: 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Virtual Event, 21–22 September 2020. [Google Scholar]

- Cahour, B.; Forzy, J.-F. Does projection into use improve trust and exploration? An example with a cruise control system. Saf. Sci. 2009, 47, 1260–1270. [Google Scholar] [CrossRef]

- Hancock, P.A.; Billings, D.R.; Schaefer, K.E.; Chen, J.Y.C.; de Visser, E.J.; Parasuraman, R. A Meta-Analysis of Factors Affecting Trust in Human-Robot Interaction. Hum. Factors 2011, 53, 517–527. [Google Scholar] [CrossRef]

- Schaefer, K.E.; Billings, D.R.; Szalma, J.L.; Adams, J.K.; Sanders, T.L.; Chen, J.Y.; Hancock, P.A. A Meta-Analysis of Factors Influencing the Development of Trust in Automation: Implications for Human-Robot Interaction (ARL-TR-6984). Army Research Lab Aberdeen Proving Ground MD Human Research and Engineering Directorate. Available online: https://apps.dtic.mil/docs/citations/ADA607926 (accessed on 1 December 2020).

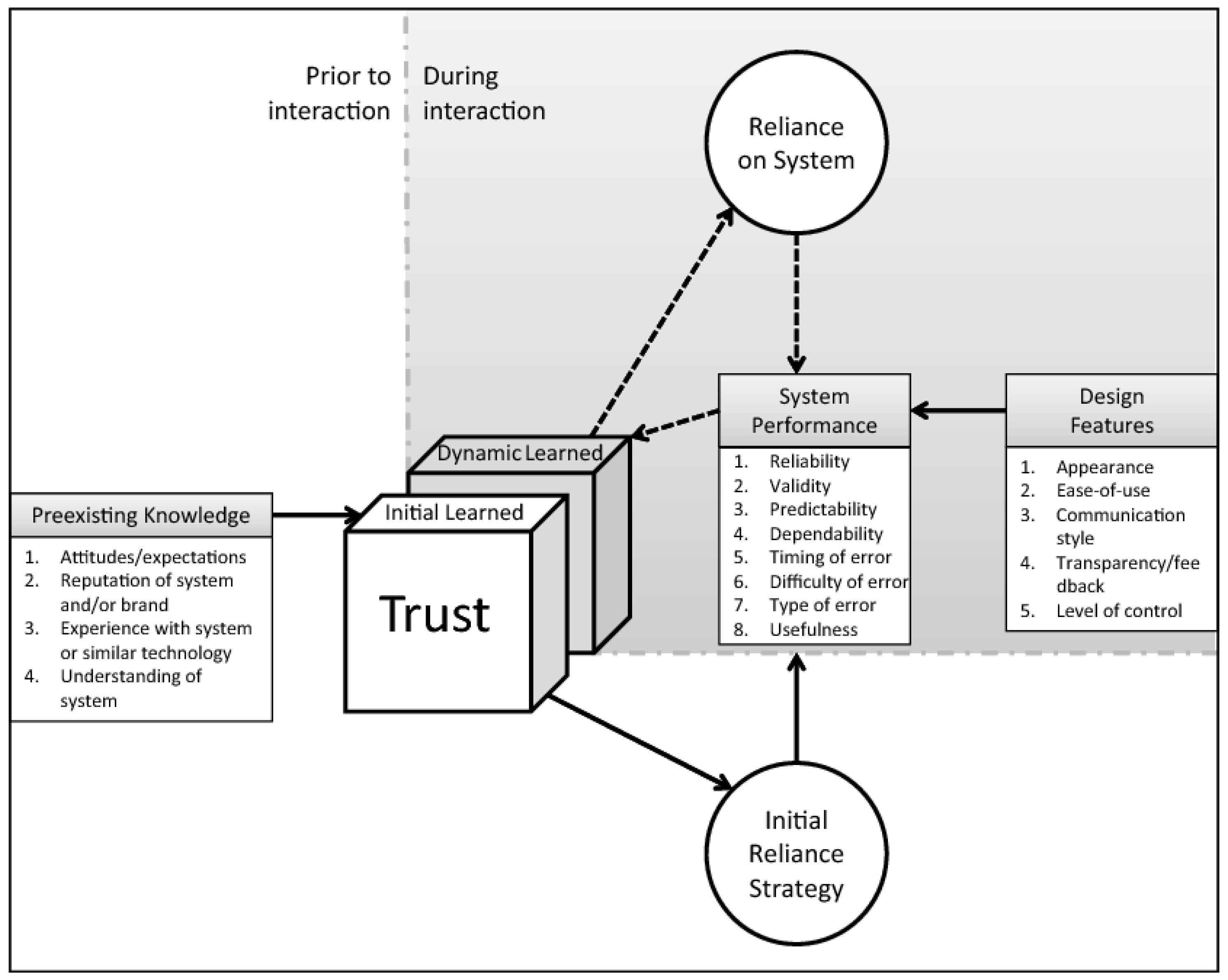

- Hoff, K.A.; Bashir, M. Trust in Automation: Integrating Empirical Evidence on Factors That Influence Trust. Hum. Factors J. Hum. Factors Ergon. Soc. 2015, 57, 407–434. [Google Scholar] [CrossRef]

- Haslam, N.; Loughnan, S.; Kashima, Y.; Bain, P. Attributing and denying humanness to others. Eur. Rev. Soc. Psychol. 2008, 19, 55–85. [Google Scholar] [CrossRef]

- Moussawi, S.; Koufaris, M. Perceived Intelligence and Perceived Anthropomorphism of Personal Intelligent Agents: Scale Development and Validation. In Proceedings of the Hawaii International Conference on System Sciences, Maui, HI, USA, 8–11 January 2019. [Google Scholar]

- Kiesler, S.; Powers, A.; Fussell, S.R.; Torrey, C. Anthropomorphic interactions with a robot and robot-like agent. Soc. Cogn. 2008, 26, 169–181. [Google Scholar] [CrossRef]

- Powers, A.; Kiesler, S. The advisor robot: Tracing people’s mental model from a robot’s physical attributes. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006. [Google Scholar]

- Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 2009, 1, 71–81. [Google Scholar] [CrossRef]

- Kim, Y.; Sundar, S.S. Anthropomorphism of computers: Is it mindful or mindless? Comput. Hum. Behav. 2012, 28, 241–250. [Google Scholar] [CrossRef]

- Esfahani, M.S.; Reynolds, N.; Ashleigh, M. Mindful and mindless anthropomorphism: How to facilitate consumer comprehension towards new products. Int. J. Innov. Technol. Manag. 2020, 17, 2050016. [Google Scholar] [CrossRef]

| Scenario | Description | Related Research Question and Aims |

|---|---|---|

| Training phase | ||

| Training scenario 1 | This scenario allows the participant to discover the different user interfaces of the cockpit and the activation/deactivation procedures for the autonomous mode. | |

| Training scenario 2 | This scenario allows the participant to discover the hand back procedure (takeover requested by the system). In this situation, the driver has 60 s to take over the driving. | |

| Experimental phase | ||

| Activation Scenario | This scenario is the first of the experimental phase. First, the driver must merge onto a highway in manual mode before activating the autonomous mode in light traffic. It aims to evaluate how increasing anthropomorphic attributes in the interface increases participants’ performance, specifically their ability to activate the autonomous mode. | Q1, Q2, Q3, and Q4. |

Takeover request in 60 s (TOR 60) | The takeover request in 60 s (TOR 60) scenario starts with 6 min of automated driving on the same road as in the activation scenario. It ends with a non-urgent takeover request during which the participant has 60 s to regain control of the vehicle. This request is triggered by the system and due to the presence of a highway exit on the path followed by the vehicle. | Q1, Q2, Q3, and Q4. It aims to evaluate how increasing anthropomorphic attributes in the interface impact participants’ performance, specifically their ability to handle a non-urgent takeover request, and also the variation in their trust and perception of anthropomorphism. |

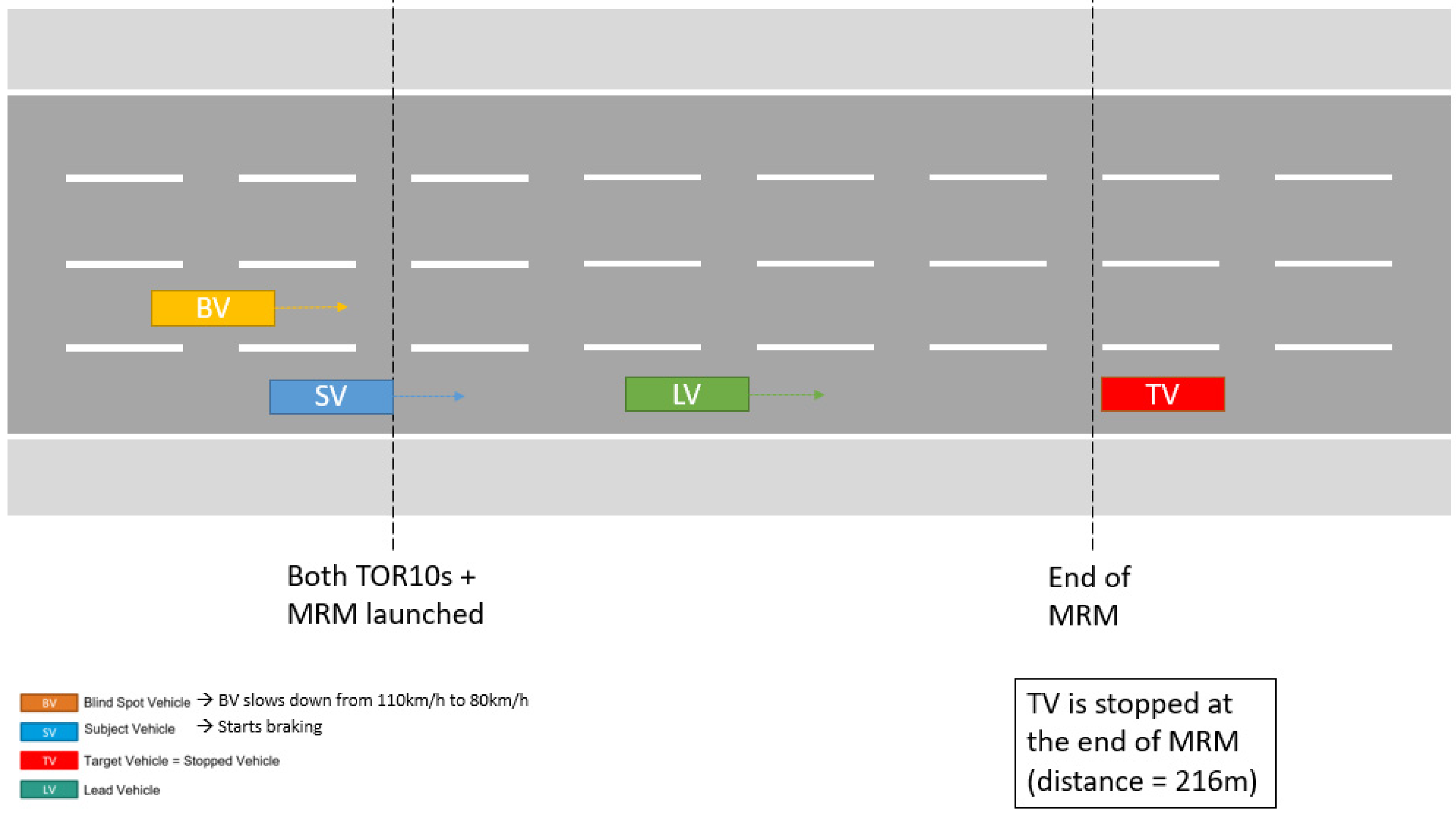

Takeover request in 10 s (TOR 10) | The takeover request in 10 s (TOR 10) scenario starts with 4 min of automated driving on a straight highway in moderately dense traffic. It ends with an urgent takeover request during which the participant has only 10 s to regain control of the vehicle in a complex situation (dense traffic, with a vehicle stopped in the participant’s vehicle lane vehicle lane and another in the left blind spot). This request is triggered by the system and due to a sensor failure. | Q1, Q2, Q3, and Q4. It aims to evaluate how increasing anthropomorphic attributes in the interface impact participants’ performance, specifically their ability to handle an urgent takeover request, and also the variation in their trust and perception of anthropomorphism. |

| Situation | Message Example | Message Type |
|---|---|---|
| AD activated | You can regain control at any time. This is only possible if your hands are on the steering wheel and your eyes are on the road. I wish you an excellent journey. | Information |
| TOR 60 at 60 s before takeover | You must take over control to take the next exit. I won’t be able to drive in 60 s. | Explanation |
| TOR 60 at 10 s before takeover | Take over control. | Explanation |
| TOR 10 | Sensor failure detected; vehicle stops; take over control! | Explanation |
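The message table can be read as a time-triggered schedule: in TOR 60 the driver hears an explanatory message when 60 s remain and a short reminder when 10 s remain, whereas TOR 10 issues a single urgent message. The Python sketch below encodes that reading under two assumptions not stated in the table: each message is played exactly once, and the TOR 10 message is played at the start of its 10 s window. The names `MESSAGES`, `BUDGET_S`, `CUES`, and `schedule` are hypothetical and do not describe the study's actual software.

```python
from __future__ import annotations

# Hypothetical encoding of the message-example table as a takeover-request (TOR)
# schedule. Message texts are quoted from the table; all identifiers are assumptions.
MESSAGES = {
    "tor60_first": "You must take over control to take the next exit. "
                   "I won't be able to drive in 60 s.",
    "tor60_final": "Take over control.",
    "tor10": "Sensor failure detected; vehicle stops; take over control!",
}

# Time budget (s) the driver has to regain control in each scenario.
BUDGET_S = {"TOR 60": 60, "TOR 10": 10}

# (seconds before the takeover deadline, message key) pairs for each scenario.
# Assumption: the TOR 10 message is issued when its 10 s window opens.
CUES = {
    "TOR 60": [(60, "tor60_first"), (10, "tor60_final")],
    "TOR 10": [(10, "tor10")],
}


def schedule(scenario: str) -> list[tuple[int, str]]:
    """Return (seconds since the request was issued, message) pairs in time order."""
    budget = BUDGET_S[scenario]
    # A cue due `before` seconds ahead of the deadline fires at budget - before.
    events = [(budget - before, MESSAGES[key]) for before, key in CUES[scenario]]
    return sorted(events)


if __name__ == "__main__":
    for scenario in BUDGET_S:
        for t, text in schedule(scenario):
            print(f"{scenario} +{t:>2} s: {text}")
```

Under this reading, TOR 60 plays its second message 50 s after the request, leaving the final 10 s for the terse "Take over control."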

| Trust Scale Used before Experiment [10] (Initial and Post-Training) | Trust Scale Used During Experiment [36] (after Each Driving Scenario) | Trust Scale Used at the End of Experiment [37] |
|---|---|---|
| 1—Do you think you know the automated car?<br>2—How much trust would you have in the automated car?<br>3—Do you think the automated car is useful?<br>4—Do you think the automated car is necessary?<br>5—Do you think that the automated car would interfere with your usual driving?<br>6—In everyday life, you tend to take risks<br>7—You tend to trust people<br>8—You believe that trusting someone means being able to trust them with something to do<br>9—You believe that it is necessary to know a person well to trust him/her<br>10—You think that the decision to trust someone depends on how you interact with them<br>11—You are generally suspicious of new technologies (cell phones, computers, internet, microwave ovens, etc.)<br>12—You think that new technologies are interesting<br>13—You think that new technologies are dangerous<br>What risks would you associate with using an automated car?<br>14—The risk of driving more dangerously<br>15—The risk of not knowing how to use the system<br>16—The risk of an accident with the car ahead<br>17—The risk of an accident with the car behind<br>18—The risk of being dependent on the system<br>19—The risk of losing the pleasure of driving<br>What benefits would you get from using an automated car?<br>20—Less stressful driving<br>21—Lightening the driving task (the automated car would allow me to perform a secondary activity such as reading or using my phone)<br>22—Easier driving task<br>23—Improved driving comfort<br>24—Safer driving | 1—I trust the automated car in this situation<br>2—I would have performed better than the automated vehicle in this situation<br>3—In this situation, the automated vehicle performs well enough for me to engage in other activities (using my smartphone)<br>4—The situation was risky<br>5—The automated vehicle made an unsafe judgement in this situation<br>6—The automated vehicle reacted appropriately to the environment<br>7—In this situation, the behavior of the automated car surprised me | Overall, how much do you trust the automated car? |

| | Initial | Post-Training | Activation | TOR 60 | TOR 10 | Post-Experiment |
|---|---|---|---|---|---|---|
| Baseline | 7 (1) | 8 (0) | 10 (1.25) | 9.5 (1.25) | 7 (3.5) | 8 (2) |
| Vocal Assistant | 6 (1) | 7 (1.25) | 8 (2.25) | 9 (1.25) | 8 (4.25) | 7.5 (1.25) |
| Visual Assistant | 6 (1) | 7 (2) | 8.5 (2) | 9 (1.5) | 7 (1.5) | 7.5 (2) |
| Kruskal–Wallis test | 0.0002 * | 0.0284 * | 0.0603 | 0.415 | 0.7895 | 0.6246 |
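The last row of the table above reports Kruskal–Wallis p-values comparing the three interfaces at each measurement point. To make explicit what that test computes, the sketch below ranks all scores together (averaging tied ranks), sums the ranks per group, and applies the standard tie correction to the H statistic. It assumes the usual chi-square approximation with k - 1 = 2 degrees of freedom for three groups, for which the upper-tail probability reduces exactly to exp(-H/2). The function names and example values are illustrative only; they are not the study's data or analysis code.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values from 1 to N, giving tied values the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j carry ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Tie-corrected Kruskal-Wallis H statistic for independent samples."""
    data = [x for g in groups for x in g]
    n = len(data)
    ranks = average_ranks(data)
    rank_term, start = 0.0, 0
    for g in groups:
        # Pooled ranks belonging to this group are contiguous in `ranks`.
        rank_sum = sum(ranks[start:start + len(g)])
        rank_term += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n).
    ties = sum(t ** 3 - t for t in Counter(data).values())
    if ties == n ** 3 - n:
        raise ValueError("all observations are identical; H is undefined")
    return h / (1 - ties / (n ** 3 - n))


def chi2_sf_df2(x):
    """Upper-tail chi-square probability for 2 degrees of freedom: exp(-x / 2)."""
    return math.exp(-x / 2)
```

With the toy groups [1, 2, 3], [4, 5, 6], and [7, 8, 9], `kruskal_wallis_h` gives H ≈ 7.2 and `chi2_sf_df2` gives p ≈ 0.027. `scipy.stats.kruskal` applies the same tie correction and chi-square approximation, so it can serve as a cross-check on real data.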

| | Visual Assistant | Vocal Assistant |
|---|---|---|
| Vocal Assistant | 1.00 | |
| Baseline | 0.0005 * | 0.0012 * |
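The pairwise table above reports p-values that reach 1.00, which suggests an adjustment for multiple comparisons. The post-hoc procedure is not named in this section, so the sketch below simply assumes one common choice: Dunn's z-test on the pooled ranks with a tie-corrected variance, followed by a Bonferroni correction (multiply by the number of pairs, cap at 1). The function name `dunn_bonferroni` and any labels or scores passed to it are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def average_ranks(values):
    """Rank values from 1 to N, giving tied values the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of ranks i+1..j+1
        i = j + 1
    return ranks


def dunn_bonferroni(groups):
    """Pairwise Dunn z-tests on pooled ranks with a Bonferroni adjustment.

    `groups` maps a label to its scores; returns {(label_a, label_b): adjusted p}.
    """
    labels = list(groups)
    data = [x for label in labels for x in groups[label]]
    n = len(data)
    ranks = average_ranks(data)
    mean_rank, start = {}, 0
    for label in labels:
        size = len(groups[label])
        mean_rank[label] = sum(ranks[start:start + size]) / size
        start += size
    # Rank variance term, reduced for tied observations.
    ties = sum(t ** 3 - t for t in Counter(data).values())
    base_var = n * (n + 1) / 12 - ties / (12 * (n - 1))
    if base_var <= 0:
        raise ValueError("all observations are identical; z is undefined")
    pairs = list(combinations(labels, 2))
    adjusted = {}
    for a, b in pairs:
        se = math.sqrt(base_var * (1 / len(groups[a]) + 1 / len(groups[b])))
        z = (mean_rank[a] - mean_rank[b]) / se
        p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
        adjusted[(a, b)] = min(1.0, p * len(pairs))  # Bonferroni, capped at 1
    return adjusted
```

Capping at 1 is why identical or near-identical groups show an adjusted p of exactly 1.00. Bonferroni is conservative; a Holm step-down adjustment would be a less conservative alternative if the study's method allowed it.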

| | Visual Assistant | Vocal Assistant |
|---|---|---|
| Vocal Assistant | 1.00 | |
| Baseline | 0.029 * | 0.031 * |

| | Initial | Post-Training | Activation | TOR 60 | TOR 10 |
|---|---|---|---|---|---|
| Baseline | 6.7 (1.67) | 8.03 (0.98) | 8.43 (1.14) | 9.07 (1.00) | 5.71 (3.64) |
| Vocal Assistant | 6.07 (1.68) | 6.78 (1.70) | 8.79 (1.64) | 9.29 (1.39) | 6.71 (2.96) |
| Visual Assistant | 5.74 (1.05) | 6.55 (1.54) | 8 (1.25) | 8.93 (1.11) | 5.29 (2.61) |
| Kruskal–Wallis test | 0.1271 | 0.0122 * | 0.3823 | 0.5545 | 0.7003 |

| | Visual Assistant | Vocal Assistant |
|---|---|---|
| Vocal Assistant | 1.00 | |
| Baseline | 0.0068 * | 0.0307 * |

| | Visual Assistant | Vocal Assistant |
|---|---|---|
| Vocal Assistant | 0.7706 | |
| Baseline | 0.0068 * | 0.0895 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lawson-Guidigbe, C.; Amokrane-Ferka, K.; Louveton, N.; Leblanc, B.; Rousseaux, V.; André, J.-M. Anthropomorphic Design and Self-Reported Behavioral Trust: The Case of a Virtual Assistant in a Highly Automated Car. Machines 2023, 11, 1087. https://doi.org/10.3390/machines11121087
