An Evaluation Method for Automated Vehicles Combining Subjective and Objective Factors

Abstract

1. Introduction

1.1. Motivation

1.2. Literature Review

1.2.1. Test Scenario Data and Test Scenario Construction Methods

1.2.2. Evaluation Methods for Automated Vehicle Testing

1.3. Contribution and Section Arrangement

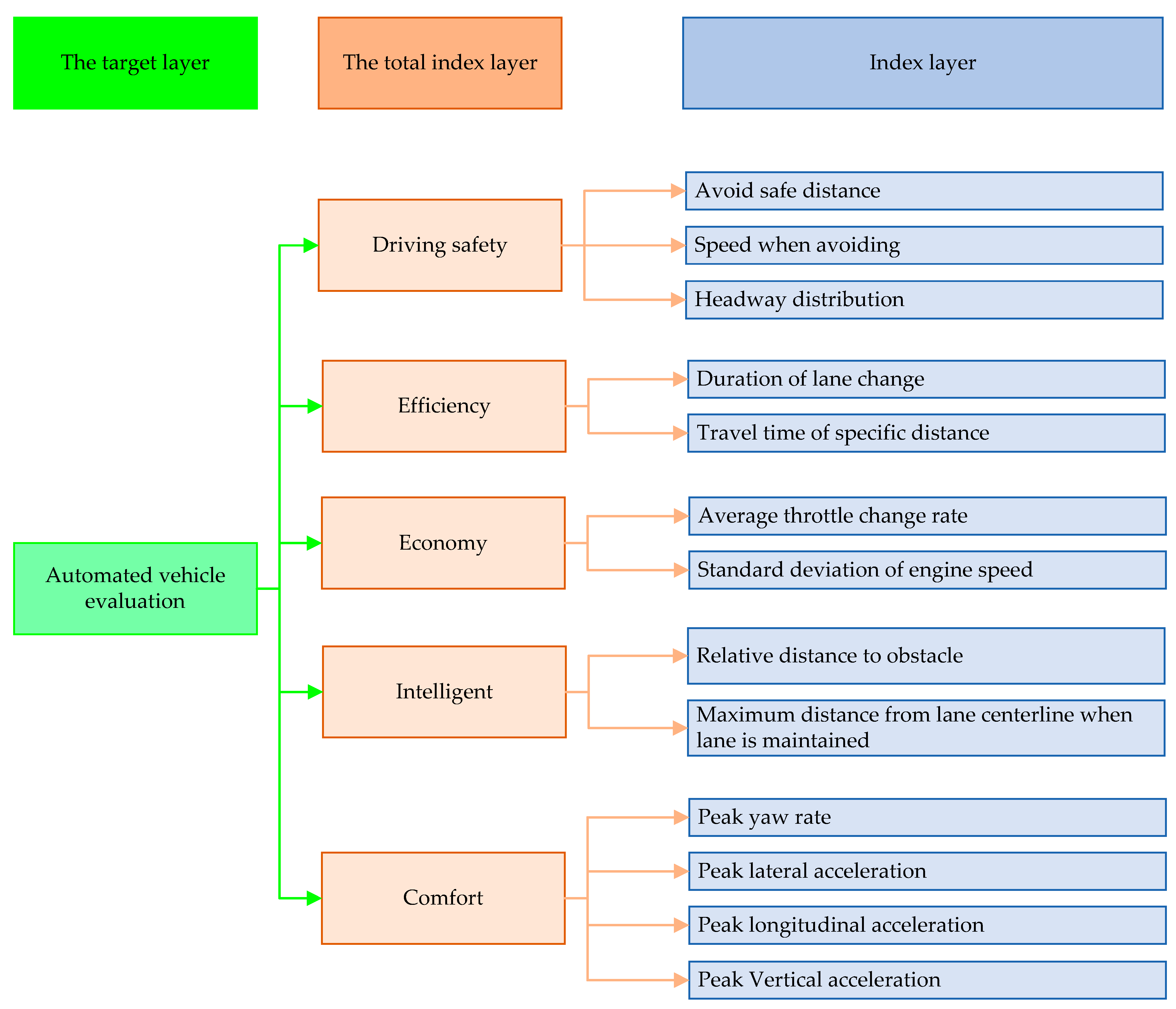

- A more comprehensive evaluation system for automated vehicles is established. It comprises five evaluation dimensions (safety, efficiency, economy, intelligence, and comfort) and 13 indicators.

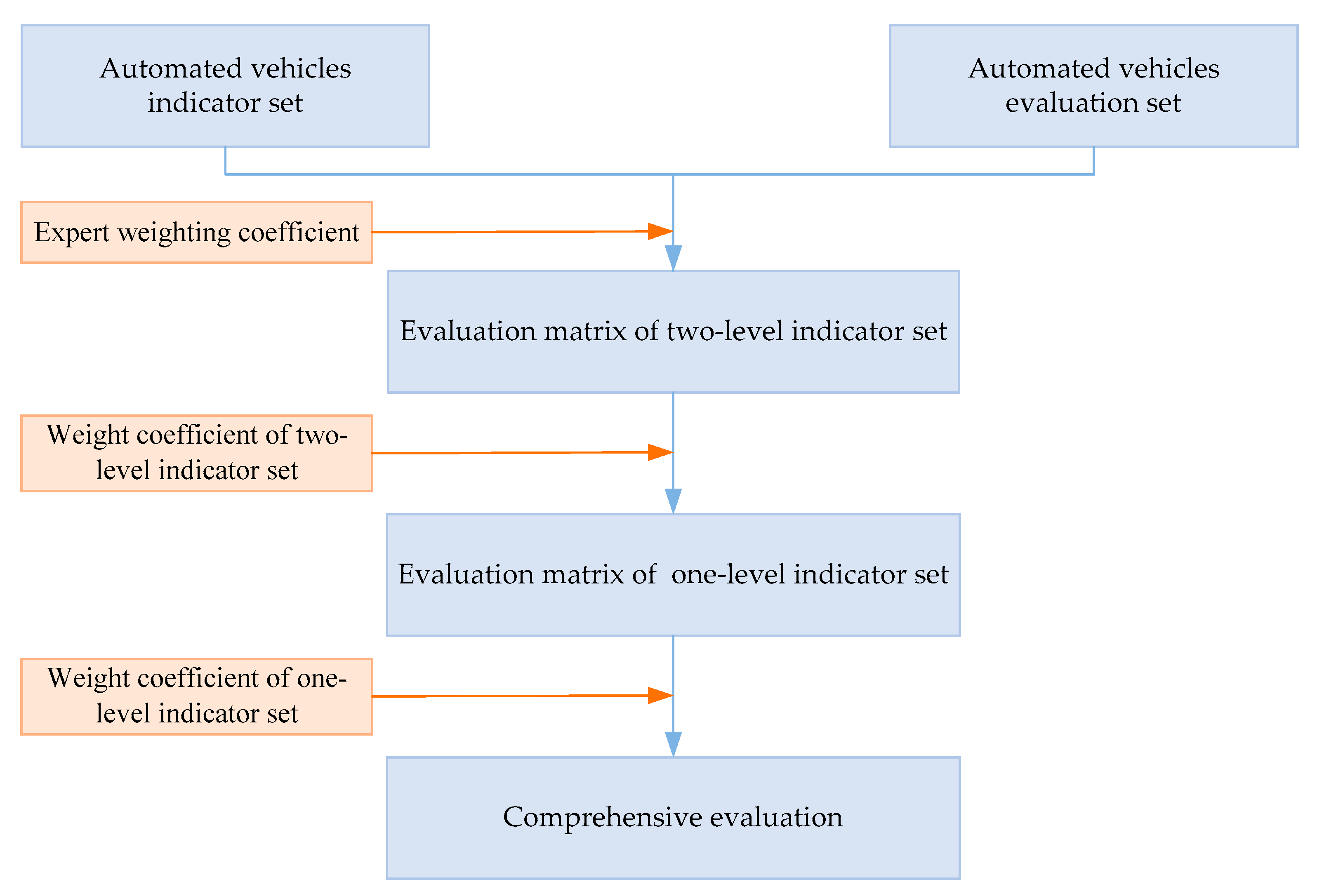

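- The subjective analytic hierarchy process (AHP) and an objective improved CRITIC (CRiteria Importance Through Intercriteria Correlation) method are combined to determine the indicator weights. AHP weights the total index layer (the five dimensions), and improved CRITIC weights the index layer (the 13 indicators). A two-level fuzzy comprehensive evaluation method that integrates these subjective and objective weights is then used to evaluate the overall performance of automated vehicles.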

- An evaluation method combining subjective and objective factors is proposed. It produces more reasonable vehicle performance test results and a more comprehensive and effective evaluation of automated vehicles.

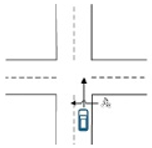

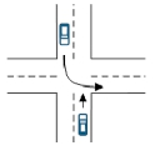

2. Automatic Generation of Test Scenarios

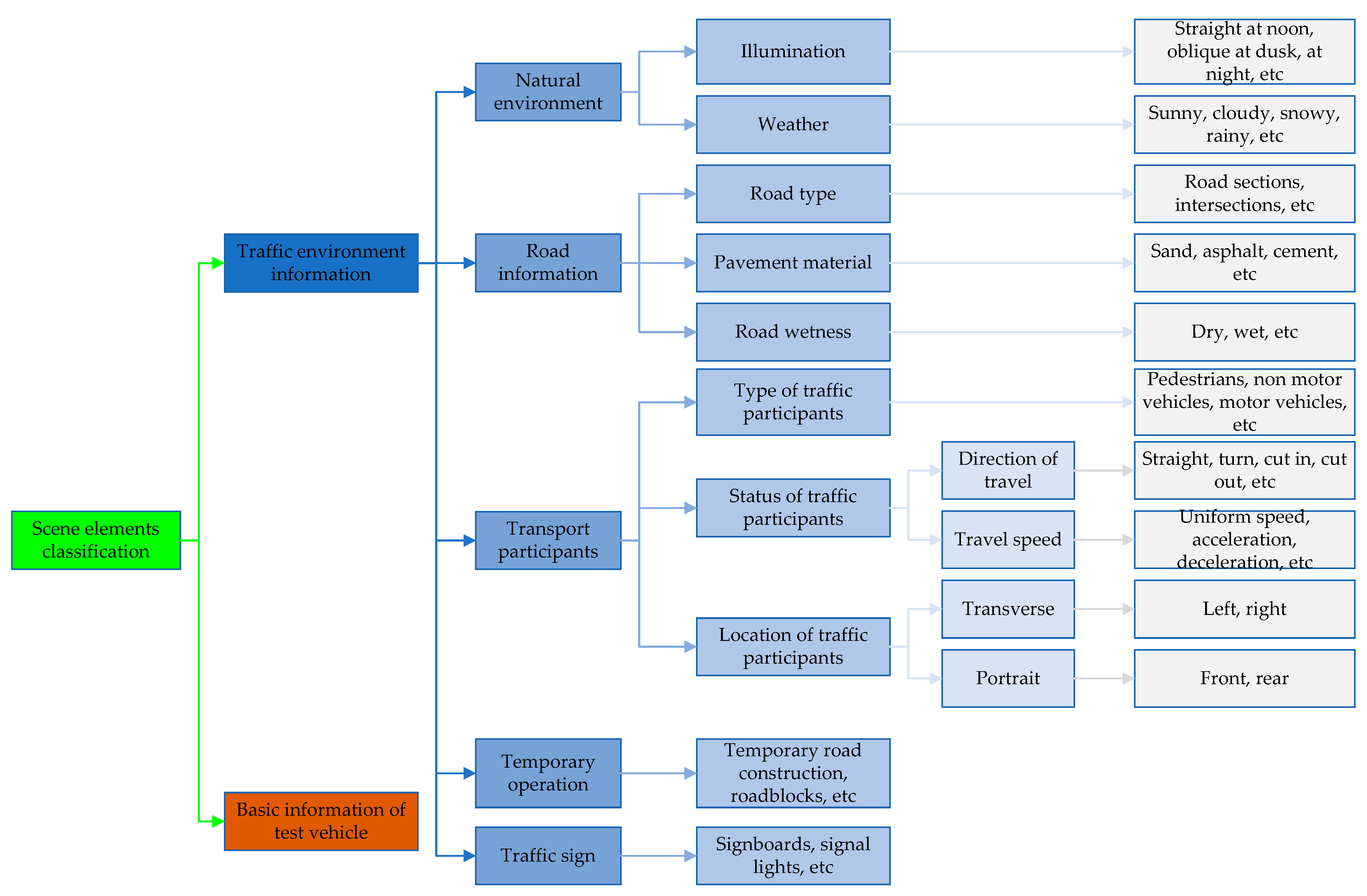

2.1. Scene Element Classification

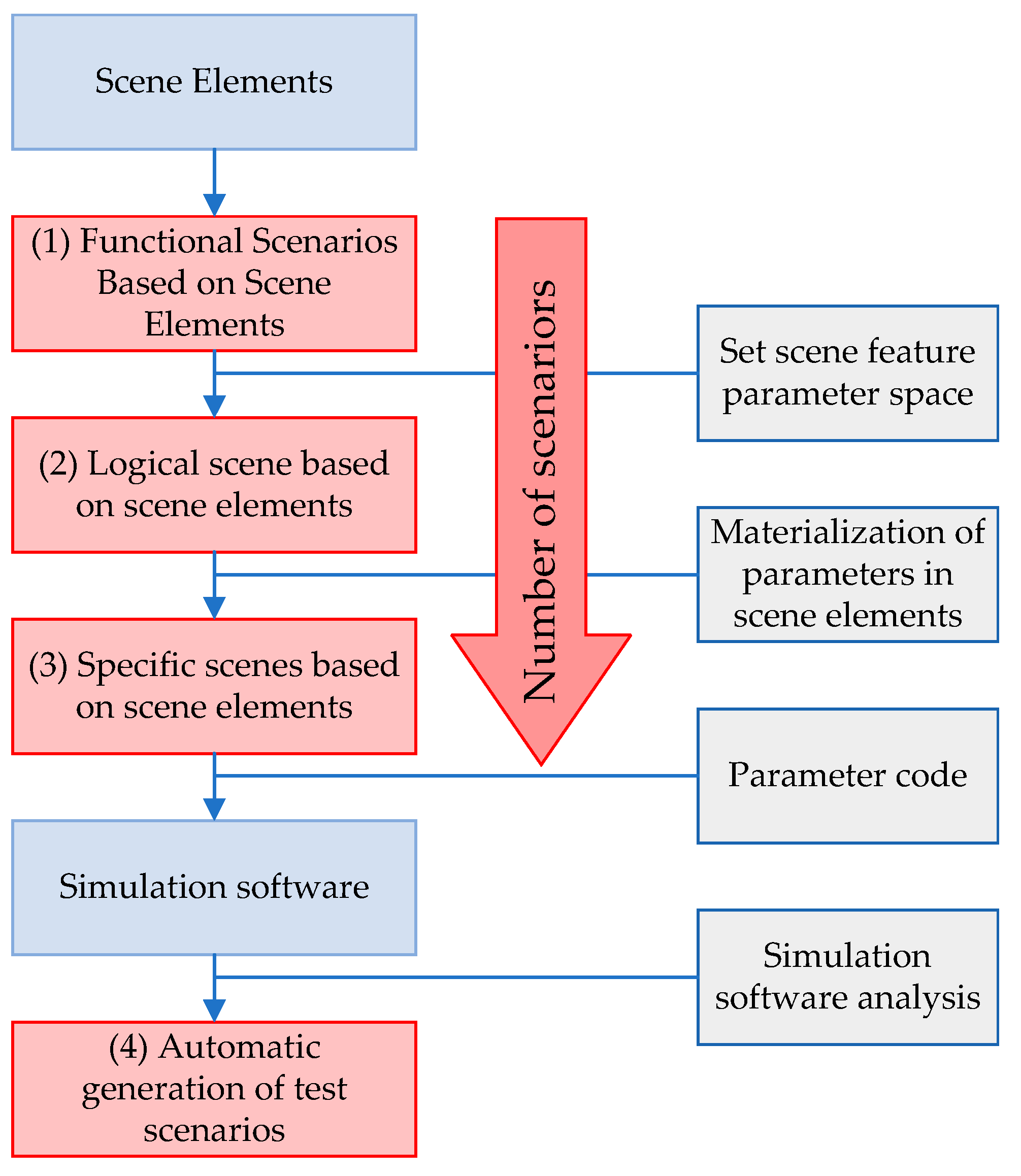

2.2. Automatic Generation of Test Scenarios

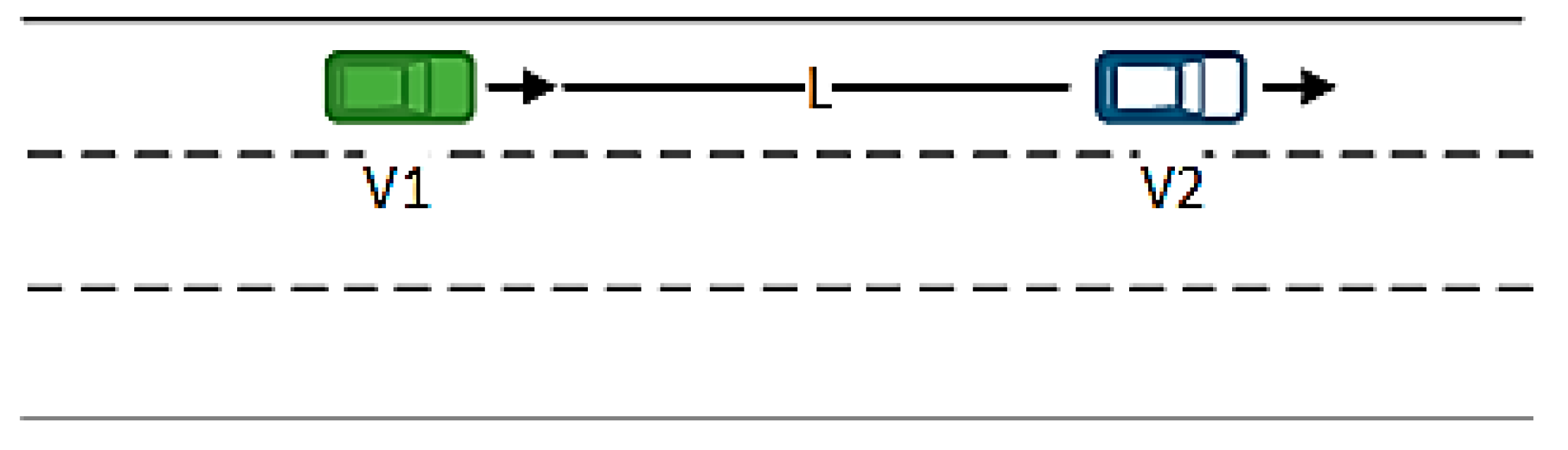

2.3. Batch Testing

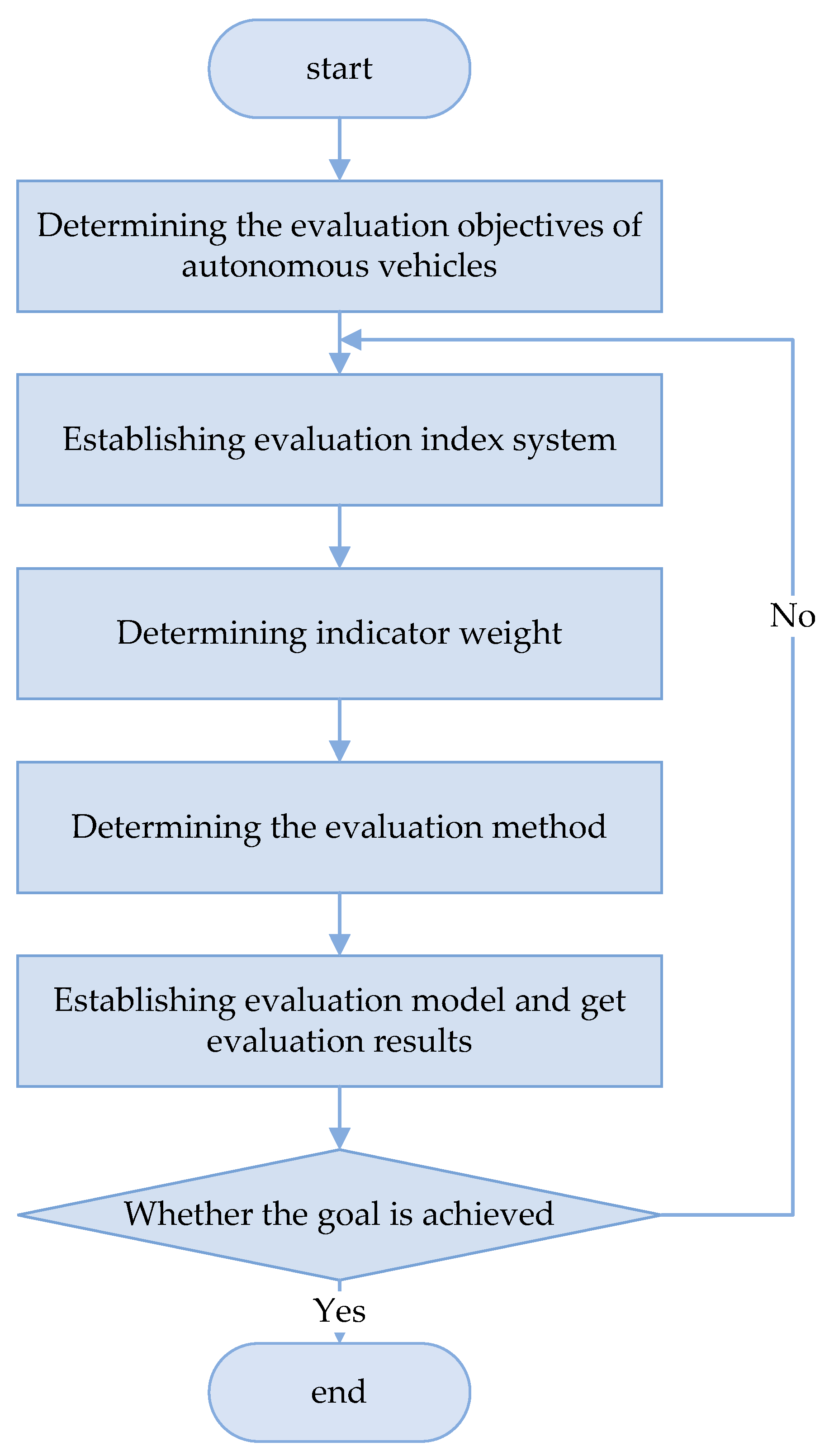

3. Comprehensive Evaluation Combining Subjective and Objective Methods

3.1. Establishment of an Evaluation Index System for Automated Vehicles

3.2. Determination of Index Weights

3.2.1. AHP Method for Determining the Weight of the Total Index Layer

3.2.2. Improved CRITIC Method for Determining the Weight of the Index Layer

3.3. Determination of Evaluation Methods

4. Examples of the Subjective and Objective Evaluation of Automated Vehicles

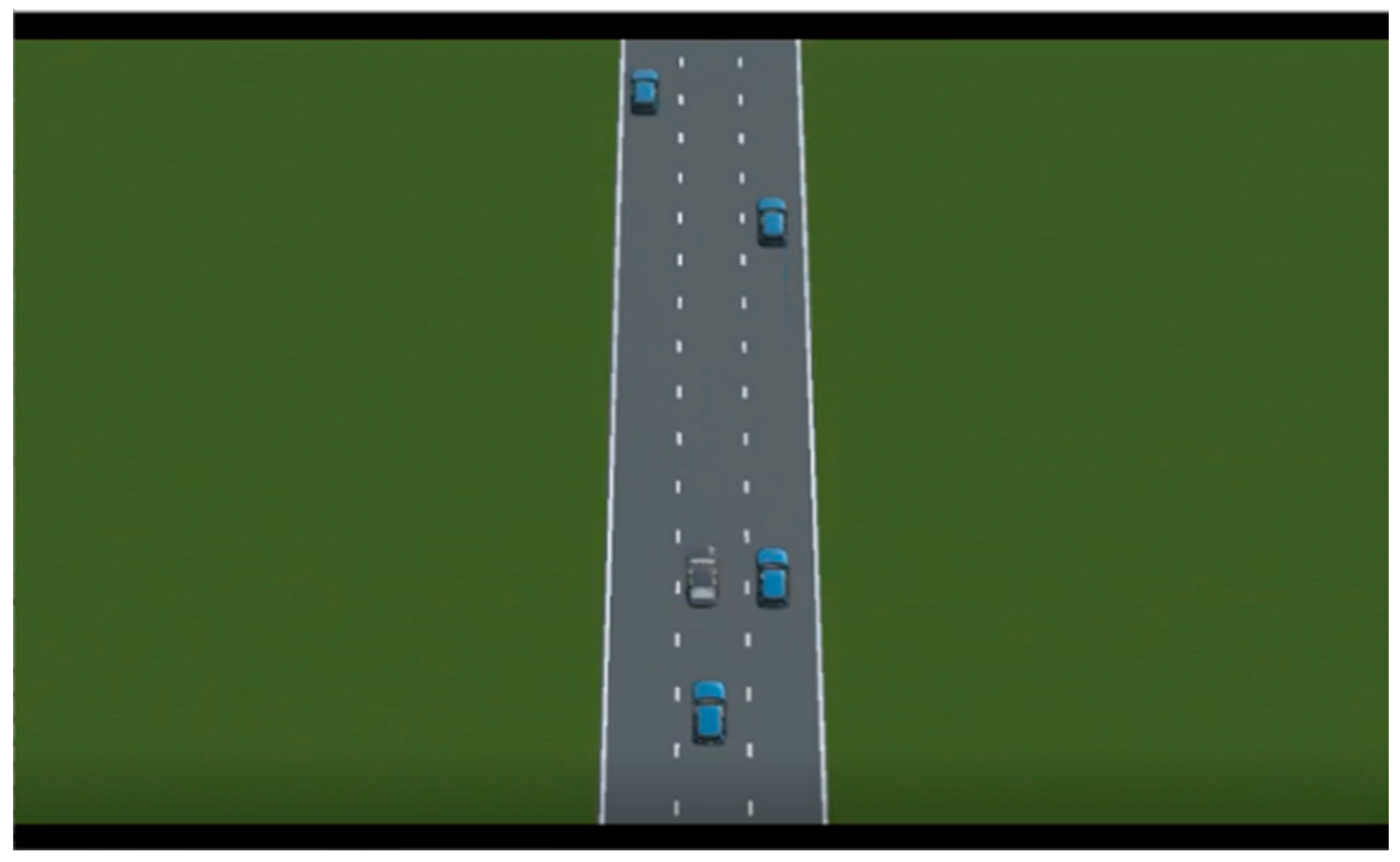

4.1. Virtual Testing and Evaluation of Automated Vehicles

4.1.1. Determination of Index Weights

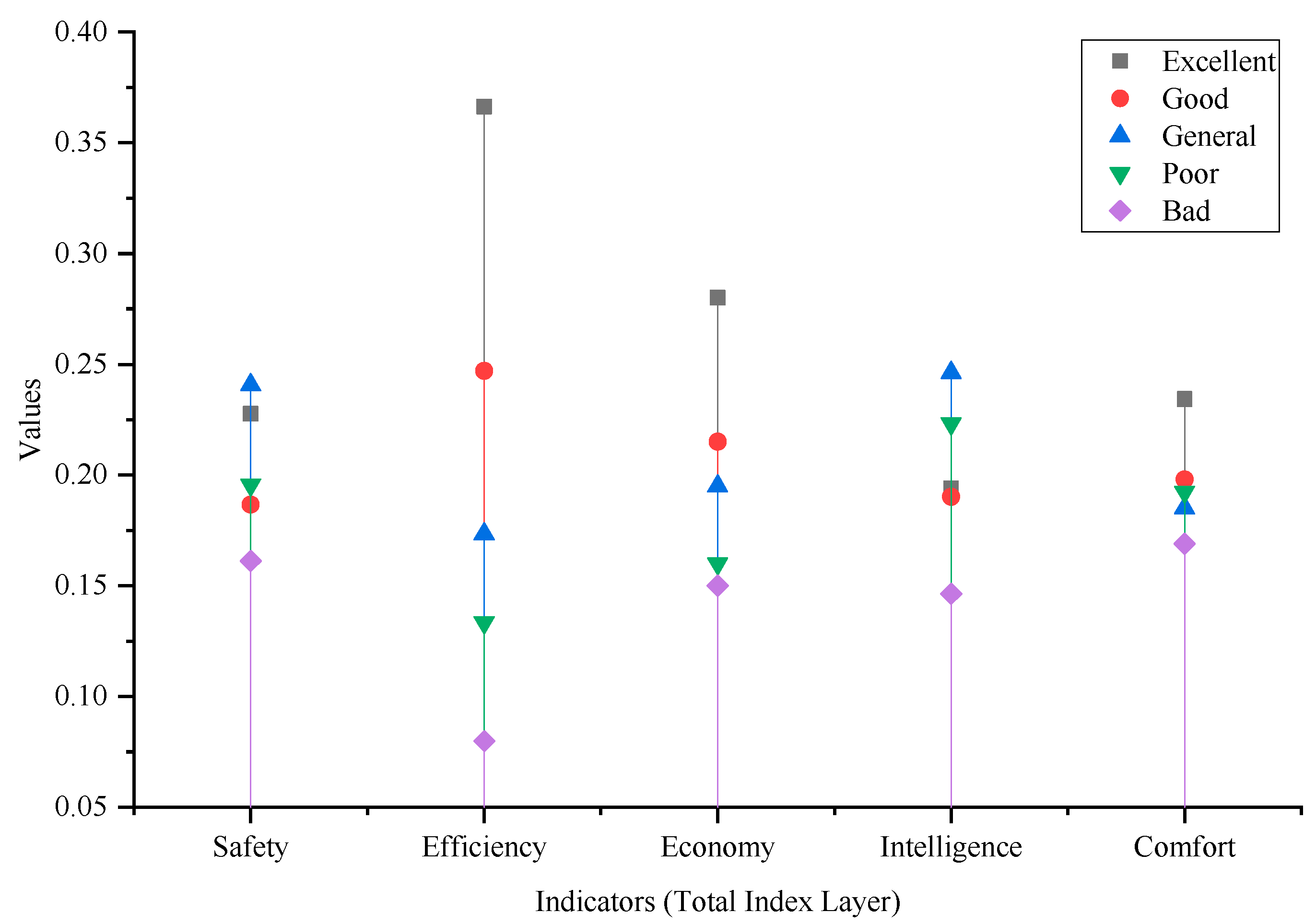

4.1.2. Evaluation by Two-Level Fuzzy Comprehensive Evaluation Method

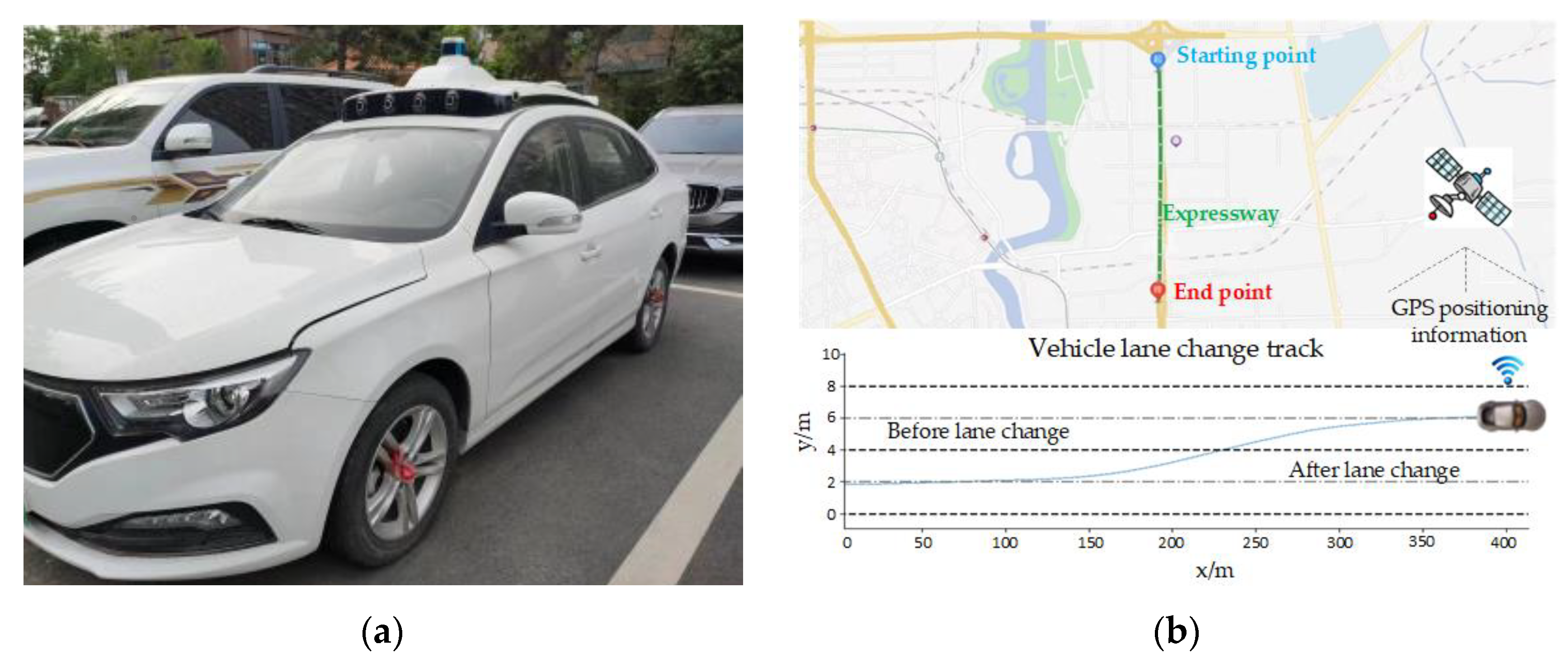

4.2. Real Testing and Evaluation of Automated Vehicles

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Number | A: Vehicle–Pedestrian Typical Scenarios | B: Vehicle–Non-Motor Vehicle Typical Scenarios | C: Vehicle–Motor Vehicle Typical Scenarios |
|---|---|---|---|
| 1 |  |  |  |
| 2 |  |  |  |
| 3 |  |  |  |

| Number of Tests | V1 (m/s) | V2 (m/s) | L (m) | Test Results |
|---|---|---|---|---|
| 1 | 15.0 | 14.0 | 6.0 | Pass |
|  | 15.0 | 13.9 | 6.0 | Pass |
|  | 15.0 | 13.8 | 6.0 | Pass |
|  | 15.0 | 13.7 | 6.0 | Fail |
| 2 | 15.0 | 13.7 | 6.1 | Fail |
|  | 15.0 | 13.7 | 6.2 | Pass |
| 3 | 14.1 | 13.7 | 6.1 | Pass |
|  | 14.2 | 13.7 | 6.1 | Pass |
|  | 14.3 | 13.7 | 6.1 | Pass |
|  | 14.4 | 13.7 | 6.1 | Fail |
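The test groups above appear to vary one parameter at a time (first V2, then L, then V1) in steps of 0.1 until the pass/fail result changes. The last two runs of each group then bracket the boundary. Below is a minimal sketch of that one-parameter sweep. The simulator hook `run_test` and the toy pass rule `toy_scenario` are hypothetical stand-ins for the batch-testing platform and are not part of the original method.

```python
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]


def sweep_until_flip(
    run_test: Callable[[Params], bool],
    start: Params,
    param: str,
    step: float,
    max_steps: int = 100,
) -> List[Tuple[Params, bool]]:
    """Step one parameter from `start` until the pass/fail outcome flips.

    Returns every (parameters, passed) pair that was run, so the last two
    entries bracket the boundary for `param` (unless max_steps is reached).
    """
    params = dict(start)
    first = run_test(params)
    history = [(dict(params), first)]
    for _ in range(max_steps):
        # Round to avoid accumulating floating-point drift (e.g. 13.700000000000001).
        params[param] = round(params[param] + step, 6)
        passed = run_test(params)
        history.append((dict(params), passed))
        if passed != first:
            break
    return history


def toy_scenario(p: Params) -> bool:
    """Hypothetical pass rule, used only for illustration."""
    return p["V2"] >= 13.75


if __name__ == "__main__":
    runs = sweep_until_flip(toy_scenario, {"V1": 15.0, "V2": 14.0, "L": 6.0}, "V2", -0.1)
    for params, passed in runs:
        print(params, "Pass" if passed else "Fail")
```

Under the toy rule, the sweep has the same shape as test group 1: three passes, then a fail at V2 = 13.7 m/s.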

| Index | Safety | Efficiency | Economy | Intelligence | Comfort |
|---|---|---|---|---|---|
| Safety | 1 | 4 | 3 | 6 | 2 |
| Efficiency | 1/4 | 1 | 1/2 | 7 | 1/3 |
| Economy | 1/3 | 2 | 1 | 3 | 1/2 |
| Intelligence | 1/6 | 1/7 | 1/3 | 1 | 1/4 |
| Comfort | 1/2 | 3 | 2 | 4 | 1 |
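The total-index-layer weights can be derived from this pairwise comparison matrix with the standard AHP eigenvector method. The sketch below finds the principal eigenvector by power iteration. It then computes the consistency ratio CR = CI / RI, with CI = (λmax − n)/(n − 1) and Saaty's random index (RI = 1.12 for n = 5). The paper may use a different eigenvector approximation or RI table, so treat both as assumptions.

```python
from typing import List, Tuple

CRITERIA = ["Safety", "Efficiency", "Economy", "Intelligence", "Comfort"]

# Pairwise comparison matrix from the table above (row criterion vs. column criterion).
MATRIX = [
    [1, 4, 3, 6, 2],
    [1 / 4, 1, 1 / 2, 7, 1 / 3],
    [1 / 3, 2, 1, 3, 1 / 2],
    [1 / 6, 1 / 7, 1 / 3, 1, 1 / 4],
    [1 / 2, 3, 2, 4, 1],
]

# Saaty's random consistency index (assumed; some texts list slightly different values).
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_weights(matrix: List[List[float]], iters: int = 1000,
                tol: float = 1e-12) -> Tuple[List[float], float, float]:
    """Return (weights, lambda_max, consistency_ratio) via power iteration."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iters):
        aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(aw)
        new_w = [x / total for x in aw]  # normalize so the weights sum to 1
        converged = max(abs(a - b) for a, b in zip(new_w, w)) < tol
        w = new_w
        if converged:
            break
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)
    cr = ci / RANDOM_INDEX[n] if RANDOM_INDEX[n] > 0 else 0.0
    return w, lambda_max, cr


if __name__ == "__main__":
    weights, lam, cr = ahp_weights(MATRIX)
    for name, wt in zip(CRITERIA, weights):
        print(f"{name:<12} {wt:.3f}")
    print(f"lambda_max = {lam:.3f}, CR = {cr:.3f}")
```

For this matrix, the sketch gives weights within about 0.01 of the published total-index-layer weights (0.41, 0.14, 0.15, 0.05, 0.25). The consistency ratio comes out at roughly 0.08, below the conventional 0.1 threshold.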

| Index (dimensionless value) | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| Avoidance safety distance (m) | 0.31 | 0 | 1.00 |
| Speed when avoiding (m/s) | 0.33 | 0 | 1.00 |
| Headway distribution effect | 0 | 1.00 | 0.55 |
| Duration of lane change (s) | 0.02 | 1.00 | 0 |
| Travel time for a specific distance (s) | 0 | 1.00 | 1.00 |
| Average rate of throttle change (%) | 0.50 | 1.00 | 0 |
| Standard deviation of engine speed (rpm) | 1.00 | 0 | 0.50 |
| Relative distance to obstacles (m) | 1.00 | 0 | 0.92 |
| Maximum distance from lane centerline during lane keeping (m) | 0.02 | 1.00 | 0 |
| Peak yaw rate (deg/s) | 1.00 | 0 | 1.00 |
| Peak lateral acceleration (m/s²) | 1.00 | 0 | 0.75 |
| Peak longitudinal acceleration (m/s²) | 0 | 1.00 | 0 |
| Peak vertical acceleration (m/s²) | 0 | 1.00 | 0.67 |
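Every row of the dimensionless table lies in [0, 1] and contains at least one 0 and one 1. This is consistent with min-max normalization across the three scenarios. A minimal sketch follows. This section does not say whether each indicator is benefit-type (larger is better) or cost-type (smaller is better), so the `benefit` flag is an assumption. Returning 1 for a row with no spread is also an arbitrary choice. The example input is the avoidance safety distance from the real-vehicle experiments, used only for illustration.

```python
from typing import List


def min_max(values: List[float], benefit: bool = True) -> List[float]:
    """Scale one indicator's values to [0, 1] across scenarios.

    benefit=True  -> the largest raw value maps to 1 (larger is better)
    benefit=False -> the smallest raw value maps to 1 (smaller is better)
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        # No spread across scenarios: treat every scenario as equally good (assumed convention).
        return [1.0 for _ in values]
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if benefit else [1.0 - s for s in scaled]


if __name__ == "__main__":
    raw = [36.53, 36.33, 36.61]  # avoidance safety distance (m), Experiments 1-3
    print([round(x, 2) for x in min_max(raw)])                 # treated as benefit-type
    print([round(x, 2) for x in min_max(raw, benefit=False)])  # treated as cost-type
```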

| Indicator | Discrete Coefficient (Coefficient of Variation) | Indicator | Discrete Coefficient (Coefficient of Variation) |
|---|---|---|---|
| Avoidance safety distance (m) | 1.170 | Relative distance to obstacles (m) | 0.868 |
| Speed when avoiding (m/s) | 1.150 | Maximum distance from lane centerline during lane keeping (m) | 1.680 |
| Headway distribution effect | 0.969 |  |  |
| Duration of lane change (s) | 0.970 | Peak yaw rate (deg/s) | 0.866 |
| Travel time for a specific distance (s) | 0.866 | Peak lateral acceleration (m/s²) | 0.892 |
| Average rate of throttle change (%) | 1.000 | Peak longitudinal acceleration (m/s²) | 1.735 |
| Standard deviation of engine speed (rpm) | 1.000 | Peak vertical acceleration (m/s²) | 0.917 |
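The discrete coefficients appear to be coefficients of variation of each indicator's dimensionless values across the three scenarios, that is, the sample standard deviation divided by the mean. For the Safety indicators this reproduces 1.170, 1.150, and 0.969. The sketch below computes these coefficients and then forms CRITIC-style weights, using the coefficient of variation as the contrast term. The conflict term is an assumption: it is the classic CRITIC sum of (1 − r) over Pearson correlations. The improved CRITIC method of Section 3.2.2 may define it differently, so the resulting weights are illustrative only and need not match the published index-layer weights.

```python
import statistics
from typing import Dict, List

# Dimensionless values for Scenarios 1-3 of the Safety indicators (from the earlier table).
SAFETY: Dict[str, List[float]] = {
    "Avoidance safety distance": [0.31, 0.00, 1.00],
    "Speed when avoiding": [0.33, 0.00, 1.00],
    "Headway distribution effect": [0.00, 1.00, 0.55],
}


def discrete_coefficient(values: List[float]) -> float:
    """Coefficient of variation: sample standard deviation divided by the mean."""
    return statistics.stdev(values) / statistics.mean(values)


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def critic_weights(indicators: Dict[str, List[float]]) -> Dict[str, float]:
    """CRITIC-style weights with the coefficient of variation as the contrast term.

    Conflict is the classic sum of (1 - r_jk) over all indicators in the group
    (the self term contributes 0); this is an assumption, not the paper's formula.
    """
    names = list(indicators)
    info = {}
    for j in names:
        contrast = discrete_coefficient(indicators[j])
        conflict = sum(1 - pearson(indicators[j], indicators[k]) for k in names)
        info[j] = contrast * conflict
    total = sum(info.values())
    return {j: c / total for j, c in info.items()}


if __name__ == "__main__":
    for name, values in SAFETY.items():
        print(f"{name:<28} CV = {discrete_coefficient(values):.3f}")
    print({k: round(v, 3) for k, v in critic_weights(SAFETY).items()})
```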

| Total Index Layer | Weight | Index Layer | Weight |
|---|---|---|---|
| Safety | 0.41 | Avoidance safety distance | 0.39 |
|  |  | Speed when avoiding | 0.39 |
|  |  | Headway distribution effect | 0.22 |
| Efficiency | 0.14 | Duration of lane change | 0.66 |
|  |  | Travel time for a specific distance | 0.34 |
| Economy | 0.15 | Average rate of throttle change | 0.50 |
|  |  | Standard deviation of engine speed | 0.50 |
| Intelligence | 0.05 | Relative distance to obstacles | 0.34 |
|  |  | Maximum distance from lane centerline during lane keeping | 0.66 |
| Comfort | 0.25 | Peak yaw rate | 0.25 |
|  |  | Peak lateral acceleration | 0.21 |
|  |  | Peak longitudinal acceleration | 0.35 |
|  |  | Peak vertical acceleration | 0.19 |

| Scoring Standard | Excellent | Good | General | Poor | Bad |
|---|---|---|---|---|---|
| Score | 9 | 7 | 5 | 3 | 1 |

| Comprehensive Ability | Scoring Standard | Score |
|---|---|---|
| Driving experience | More than 8 years | 10 |
|  | 5 to 8 years | 8 |
|  | 3 to 5 years | 6 |
|  | Less than 3 years | 4 |
| Driving level | Skilled | 10 |
|  | Familiar | 6 |
|  | Unfamiliar | 2 |
| Professional skill | Familiar with the working principles of vehicle systems | 10 |
|  | Understands the working principles of vehicle systems | 8 |
|  | Has a cursory understanding of the working principles of vehicle systems | 6 |
|  | Not familiar with the working principles of vehicle systems | 2 |
| Driving style | Stable type | 10 |
|  | Radical type | 6 |
|  | Rough driving type | 2 |
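The scoring standards above turn each expert's background into numerical ability scores. One simple way to turn those scores into expert weights is to sum the four ability scores for each expert and normalize over the panel. This proportional scheme, the short dictionary keys, and the example experts are all hypothetical. The exact normalization behind the expert weight table is not described here.

```python
from typing import Dict, List

# Scores from the comprehensive-ability table; the short level keys are hypothetical labels.
ABILITY_SCORES: Dict[str, Dict[str, int]] = {
    "driving_experience": {">8 years": 10, "5-8 years": 8, "3-5 years": 6, "<3 years": 4},
    "driving_level": {"skilled": 10, "familiar": 6, "unfamiliar": 2},
    "professional_skill": {"familiar": 10, "understands": 8, "cursory": 6, "not familiar": 2},
    "driving_style": {"stable": 10, "radical": 6, "rough": 2},
}


def expert_score(profile: Dict[str, str]) -> int:
    """Sum the four ability scores for one expert."""
    return sum(ABILITY_SCORES[ability][level] for ability, level in profile.items())


def expert_weights(panel: List[Dict[str, str]]) -> List[float]:
    """Assumed scheme: weight proportional to total ability score, summing to 1."""
    scores = [expert_score(p) for p in panel]
    total = sum(scores)
    return [s / total for s in scores]


if __name__ == "__main__":
    panel = [  # hypothetical experts
        {"driving_experience": ">8 years", "driving_level": "skilled",
         "professional_skill": "familiar", "driving_style": "stable"},
        {"driving_experience": "3-5 years", "driving_level": "familiar",
         "professional_skill": "understands", "driving_style": "radical"},
        {"driving_experience": "<3 years", "driving_level": "unfamiliar",
         "professional_skill": "cursory", "driving_style": "stable"},
    ]
    print([expert_score(p) for p in panel])
    print([round(w, 3) for w in expert_weights(panel)])
```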

| Expert | Weight | Expert | Weight |
|---|---|---|---|
| 1 | 0.0145 | 11 | 0.0064 |
| 2 | 0.0097 | 12 | 0.0097 |
| 3 | 0.0137 | 13 | 0.0121 |
| 4 | 0.0153 | 14 | 0.0088 |
| 5 | 0.0080 | 15 | 0.0113 |
| 6 | 0.0105 | 16 | 0.0105 |
| 7 | 0.0064 | 17 | 0.0056 |
| 8 | 0.0097 | 18 | 0.0064 |
| 9 | 0.0072 | 19 | 0.0072 |
| 10 | 0.0137 | 20 | 0.0080 |

|  | Indicator (Index Layer) | Excellent | Good | General | Poor | Bad |
|---|---|---|---|---|---|---|
| A | Avoidance safety distance (m) | 0.13 | 0.20 | 0.26 | 0.23 | 0.21 |
| B | Speed when avoiding (m/s) | 0.29 | 0.16 | 0.25 | 0.17 | 0.13 |
| C | Headway distribution effect | 0.29 | 0.21 | 0.19 | 0.18 | 0.13 |
| D | Duration of lane change (s) | 0.38 | 0.23 | 0.17 | 0.13 | 0.09 |
| E | Travel time for a specific distance (s) | 0.34 | 0.28 | 0.18 | 0.14 | 0.06 |
| F | Average rate of throttle change (%) | 0.32 | 0.21 | 0.20 | 0.13 | 0.14 |
| G | Standard deviation of engine speed (rpm) | 0.16 | 0.14 | 0.16 | 0.14 | 0.28 |
| H | Relative distance to obstacles (m) | 0.26 | 0.21 | 0.20 | 0.21 | 0.12 |
| I | Maximum distance from lane centerline during lane keeping (m) | 0.16 | 0.18 | 0.27 | 0.23 | 0.16 |
| J | Peak yaw rate (deg/s) | 0.29 | 0.23 | 0.20 | 0.17 | 0.11 |
| K | Peak lateral acceleration (m/s²) | 0.12 | 0.17 | 0.12 | 0.21 | 0.28 |
| L | Peak longitudinal acceleration (m/s²) | 0.26 | 0.18 | 0.20 | 0.21 | 0.15 |
| M | Peak vertical acceleration (m/s²) | 0.24 | 0.22 | 0.21 | 0.17 | 0.16 |

| Indicator (Total Index Layer) | Excellent | Good | General | Poor | Bad |
|---|---|---|---|---|---|
| Safety | 0.2276 | 0.1866 | 0.2407 | 0.1956 | 0.1612 |
| Efficiency | 0.3664 | 0.2470 | 0.1734 | 0.1334 | 0.0798 |
| Economy | 0.2800 | 0.2150 | 0.1950 | 0.1600 | 0.1500 |
| Intelligence | 0.1940 | 0.1902 | 0.2462 | 0.2232 | 0.1464 |
| Comfort | 0.2343 | 0.1980 | 0.1851 | 0.1924 | 0.1690 |

|  | Excellent | Good | General | Poor | Bad |
|---|---|---|---|---|---|
| Membership | 0.26 | 0.20 | 0.21 | 0.18 | 0.15 |
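The total-index-layer memberships are consistent with the weighted-average fuzzy operator b_k = Σ_i w_i·r_ik. For example, the Safety row equals 0.39·A + 0.39·B + 0.22·C computed from the index-layer memberships. The sketch below applies the same operator at both levels. It first combines the Safety indicators, then combines the five published dimension rows using the AHP weights. That the paper uses exactly this operator is inferred from the numbers, not stated in this section.

```python
from typing import List

GRADES = ["Excellent", "Good", "General", "Poor", "Bad"]


def fuzzy_compose(weights: List[float], membership: List[List[float]]) -> List[float]:
    """Weighted-average fuzzy operator: b_k = sum_i w_i * r_ik."""
    n_grades = len(membership[0])
    return [sum(w * row[k] for w, row in zip(weights, membership)) for k in range(n_grades)]


# First level: Safety indicators A-C (index-layer weights and memberships from the tables).
SAFETY_WEIGHTS = [0.39, 0.39, 0.22]
SAFETY_R = [
    [0.13, 0.20, 0.26, 0.23, 0.21],  # A: avoidance safety distance
    [0.29, 0.16, 0.25, 0.17, 0.13],  # B: speed when avoiding
    [0.29, 0.21, 0.19, 0.18, 0.13],  # C: headway distribution effect
]

# Second level: published dimension rows combined with the AHP weights.
DIMENSION_WEIGHTS = [0.41, 0.14, 0.15, 0.05, 0.25]
DIMENSION_R = [
    [0.2276, 0.1866, 0.2407, 0.1956, 0.1612],  # Safety
    [0.3664, 0.2470, 0.1734, 0.1334, 0.0798],  # Efficiency
    [0.2800, 0.2150, 0.1950, 0.1600, 0.1500],  # Economy
    [0.1940, 0.1902, 0.2462, 0.2232, 0.1464],  # Intelligence
    [0.2343, 0.1980, 0.1851, 0.1924, 0.1690],  # Comfort
]

if __name__ == "__main__":
    safety = fuzzy_compose(SAFETY_WEIGHTS, SAFETY_R)
    overall = fuzzy_compose(DIMENSION_WEIGHTS, DIMENSION_R)
    print(dict(zip(GRADES, (round(x, 4) for x in safety))))
    print(dict(zip(GRADES, (round(x, 2) for x in overall))))
```

The first-level result reproduces the published Safety row to four decimal places. The second-level vector agrees with the published membership vector to within about 0.01.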

| Layer | Indicator | Score |
|---|---|---|
| Total Index Layer | Safety | 54.9 |
|  | Efficiency | 66.2 |
|  | Economy | 58.5 |
|  | Intelligence | 53.1 |
|  | Comfort | 54.0 |
| Target Layer | Comprehensive | 56.7 |
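The target-layer score is consistent with a weighted sum of the five dimension scores using the AHP weights: 0.41 × 54.9 + 0.14 × 66.2 + 0.15 × 58.5 + 0.05 × 53.1 + 0.25 × 54.0 ≈ 56.7. This section does not spell out how each dimension score is derived from its membership vector. The sketch therefore starts from the published dimension scores.

```python
from typing import Dict

# AHP weights of the total index layer and the published dimension scores.
WEIGHTS: Dict[str, float] = {
    "Safety": 0.41, "Efficiency": 0.14, "Economy": 0.15, "Intelligence": 0.05, "Comfort": 0.25,
}
SCORES: Dict[str, float] = {
    "Safety": 54.9, "Efficiency": 66.2, "Economy": 58.5, "Intelligence": 53.1, "Comfort": 54.0,
}


def comprehensive_score(weights: Dict[str, float], scores: Dict[str, float]) -> float:
    """Weighted sum of dimension scores (weights are assumed to sum to 1)."""
    return sum(weights[d] * scores[d] for d in weights)


if __name__ == "__main__":
    print(round(comprehensive_score(WEIGHTS, SCORES), 1))
```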

| Index (measured value) | Experiment 1 | Experiment 2 | Experiment 3 |
|---|---|---|---|
| Avoidance safety distance (m) | 36.53 | 36.33 | 36.61 |
| Speed when avoiding (m/s) | 25.64 | 25.73 | 25.43 |
| Headway distribution effect | 0.091 | 0.094 | 0.103 |
| Duration of lane change (s) | 3.78 | 3.73 | 3.62 |
| Travel time for a specific distance (s) | 78.43 | 78.91 | 78.53 |
| Average rate of throttle change (%) | 5.59 | 5.56 | 5.61 |
| Standard deviation of engine speed (rpm) | 865 | 858 | 862 |
| Relative distance to obstacles (m) | 94.56 | 98.65 | 97.32 |
| Maximum distance from lane centerline during lane keeping (m) | 0.26 | 0.45 | 0.36 |
| Peak yaw rate (deg/s) | 4.36 | 4.35 | 4.26 |
| Peak lateral acceleration (m/s²) | 2.16 | 2.18 | 2.15 |
| Peak longitudinal acceleration (m/s²) | 4.752 | 4.756 | 4.757 |
| Peak vertical acceleration (m/s²) | 0.051 | 0.052 | 0.052 |

| Layer | Indicator | Score |
|---|---|---|
| Total Index Layer | Safety | 54.9 |
|  | Efficiency | 66.2 |
|  | Economy | 58.5 |
|  | Intelligence | 53.1 |
|  | Comfort | 54.0 |
| Target Layer | Comprehensive | 56.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, W.; Wu, L.; Li, X.; Qu, F.; Li, W.; Ma, Y.; Ma, D. An Evaluation Method for Automated Vehicles Combining Subjective and Objective Factors. Machines 2023, 11, 597. https://doi.org/10.3390/machines11060597

Wang W, Wu L, Li X, Qu F, Li W, Ma Y, Ma D. An Evaluation Method for Automated Vehicles Combining Subjective and Objective Factors. Machines. 2023; 11(6):597. https://doi.org/10.3390/machines11060597

Chicago/Turabian Style: Wang, Wei, Liguang Wu, Xin Li, Fufan Qu, Wenbo Li, Yangyang Ma, and Denghui Ma. 2023. "An Evaluation Method for Automated Vehicles Combining Subjective and Objective Factors" Machines 11, no. 6: 597. https://doi.org/10.3390/machines11060597

APA Style: Wang, W., Wu, L., Li, X., Qu, F., Li, W., Ma, Y., & Ma, D. (2023). An Evaluation Method for Automated Vehicles Combining Subjective and Objective Factors. Machines, 11(6), 597. https://doi.org/10.3390/machines11060597