A Reconfigurable Architecture for Industrial Control Systems: Overview and Challenges

Abstract

1. Introduction

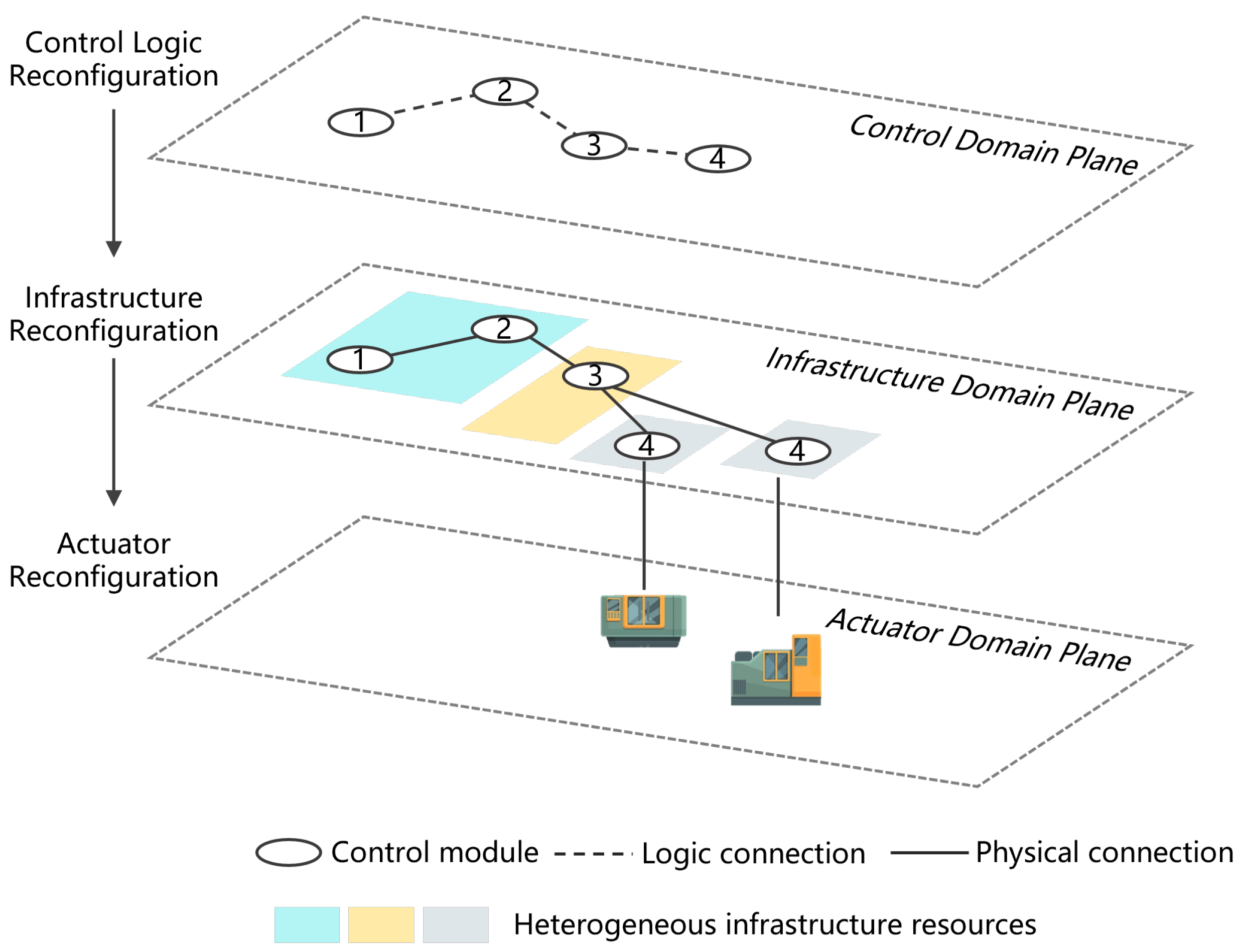

- Control domain plane: CNC functionality is reconfigured based on specific machining requirements, enabling flexible adaptation to various tasks.

- Infrastructure domain plane: CNC modules implementing various CNC functions are distributed across heterogeneous infrastructure resources, ensuring high portability and resource efficiency.

- Actuator domain plane: CNC systems are dynamically assigned to machine tools on demand by connecting infrastructure resources to the machine tools.
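The following Python sketch makes the three planes concrete by modeling one reconfiguration step. The control plane selects the CNC modules a task needs, the infrastructure plane places them on available resources, and the actuator plane binds the result to a machine tool. All class names and resource figures are illustrative assumptions, as is the placement rule (first fit, with time-critical modules restricted to edge resources). None of this is the mechanism defined by the architecture itself.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CncModule:
    """A CNC function packaged as an independent module (control domain plane)."""
    name: str
    cpu_percent: int        # hypothetical resource demand, % of one core
    time_critical: bool     # must run close to the machine tool


@dataclass
class Infrastructure:
    """A compute resource such as an edge device or cloud node (infrastructure domain plane)."""
    name: str
    capacity_percent: int
    is_edge: bool
    hosted: list = field(default_factory=list)

    def free(self) -> int:
        return self.capacity_percent - sum(m.cpu_percent for m in self.hosted)


def reconfigure(required, catalog, infrastructures, machine_tool):
    """Compose a CNC system for one task and bind it to one machine tool.
    This sketch does not roll back earlier placements if a later one fails."""
    placement = {}
    for name in required:
        # Control plane: select the module that implements the required function.
        module = catalog[name]
        # Infrastructure plane: time-critical modules may only run on edge resources;
        # the first candidate with enough spare capacity hosts the module (first fit).
        candidates = [i for i in infrastructures if i.is_edge or not module.time_critical]
        host = next((i for i in candidates if i.free() >= module.cpu_percent), None)
        if host is None:
            raise RuntimeError(f"no infrastructure can host {name}")
        host.hosted.append(module)
        placement[name] = host.name
    # Actuator plane: the composed system is bound to the target machine tool.
    return {"machine_tool": machine_tool, "placement": placement}


catalog = {m.name: m for m in [
    CncModule("interpretation", 20, time_critical=False),
    CncModule("look_ahead", 30, time_critical=True),
    CncModule("interpolation", 40, time_critical=True),
]}
resources = [Infrastructure("cloud-1", 400, is_edge=False),
             Infrastructure("edge-1", 80, is_edge=True)]
system = reconfigure(["interpretation", "look_ahead", "interpolation"],
                     catalog, resources, machine_tool="lathe-07")
```

Placement is first fit in list order. Listing the cloud resource first therefore keeps the non-critical interpretation module off the edge and leaves edge capacity for the time-critical modules.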

2. Brief Review of Industrial Control System Architectures

2.1. A Brief Introduction to CNC Systems

2.2. Open Architecture

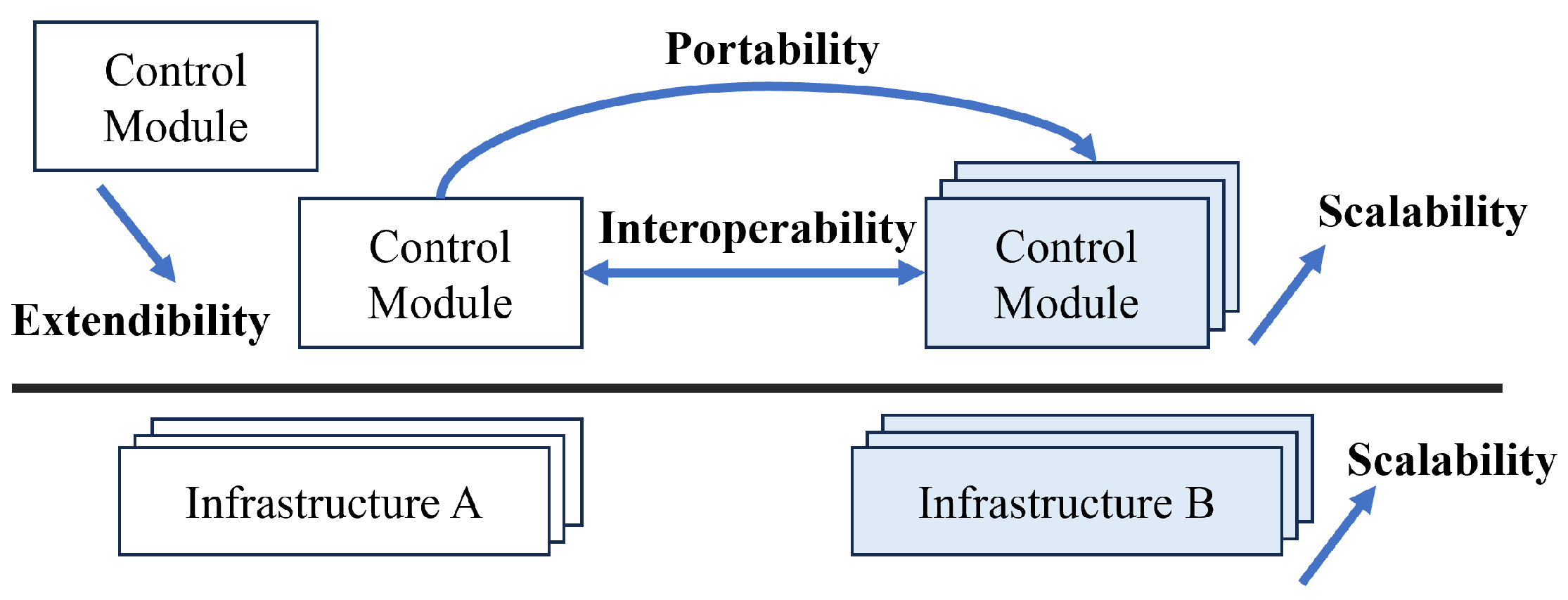

- Extendibility and interoperability: Various control modules running on diverse infrastructures can interact through standard interfaces to compose control software.

- Portability: Control modules can run on different infrastructures.

- Scalability: Control modules and infrastructures can scale on demand.
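Extendibility, interoperability, and portability depend on a common module interface. The minimal Python sketch below assumes a hypothetical `ControlModule` interface with a single `execute` method. It does not reproduce any standard such as OMAC or IEC 61499. It shows how modules from different vendors can be composed without knowing each other's internals:

```python
from abc import ABC, abstractmethod


class ControlModule(ABC):
    """Hypothetical standard interface shared by all control modules."""

    @abstractmethod
    def execute(self, data: dict) -> dict:
        """Consume upstream data and return the data for the next module."""


class InchToMetric(ControlModule):      # e.g. supplied by vendor A
    def execute(self, data):
        return {**data, "x": data["x"] * 25.4}


class FeedOverride(ControlModule):      # e.g. supplied by vendor B
    def __init__(self, percent):
        self.percent = percent

    def execute(self, data):
        return {**data, "feed": data["feed"] * self.percent / 100}


class ControlSoftware:
    """Composes any modules that honour the interface, regardless of their origin."""

    def __init__(self, modules):
        self.modules = list(modules)

    def run(self, data):
        for module in self.modules:
            data = module.execute(data)
        return data


software = ControlSoftware([InchToMetric(), FeedOverride(percent=120)])
result = software.run({"x": 2.0, "feed": 1000.0})
```

To add a function, a vendor adds another class that implements `execute`. Nothing in `ControlSoftware` depends on which vendor wrote a module or on which infrastructure it runs.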

2.3. Networked Architecture

- CNC modules with very low time sensitivity (e.g., management and maintenance functions) are deployed on the cloud (or other remote infrastructures) and interact with traditional CNC systems via general-purpose networks. The traditional CNC systems, in turn, connect to actuators (i.e., machine tools) via conventional real-time communication technologies (e.g., fieldbus and real-time Ethernet). This approach extends traditional CNC systems with intelligence and remote operation capabilities [6].

- CNC software is deployed on edge infrastructures near the machine tools and scales out to serve multiple machine tools simultaneously. However, this configuration compromises real-time performance, because multiple processes must share the limited, statically provisioned resources of the edge infrastructure.

- Highly time-sensitive CNC modules remain on edge-level infrastructures that connect to machine tools via conventional real-time communication technologies, while all other CNC modules are migrated to remote infrastructures. To mitigate potential latency issues, an additional cache is often deployed at the edge to buffer data [20].
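The trade-offs in these three patterns can be quantified. The Python sketch below rests on three simple, hypothetical rules:

- A module may move to a remote infrastructure only if its deadline exceeds the network round-trip time.
- The edge cache is sized to cover the worst-case network delay, with one item consumed per control cycle.
- The shared-edge case is checked with the Liu–Layland utilization bound for rate-monotonic scheduling. This is a standard real-time result swapped in for illustration, not a method taken from the cited works.

```python
import math


def choose_tier(deadline_ms, round_trip_ms, margin_ms=0.0):
    """Place a module remotely only if its deadline tolerates the network round trip."""
    return "remote" if deadline_ms > round_trip_ms + margin_ms else "edge"


def edge_buffer_size(worst_latency_ms, cycle_ms):
    """Items the edge cache must hold so that a consumer taking one item per
    control cycle never starves during the worst-case network delay."""
    return math.ceil(worst_latency_ms / cycle_ms)


def rm_schedulable(tasks):
    """Liu-Layland sufficient test for rate-monotonic scheduling on one core.

    tasks: (execution_time, period) pairs of independent periodic tasks whose
    deadlines equal their periods. False means "not guaranteed", not "will fail".
    """
    if not tasks:
        return True
    n = len(tasks)
    utilization = sum(c / t for c, t in tasks)
    return utilization <= n * (2 ** (1 / n) - 1)
```

For example, `edge_buffer_size(50, 4)` returns 13. Two CNC instances, each running a 0.3 ms task every 1 ms, pass the bound (utilization 0.6 against about 0.83). Three such instances (utilization 0.9 against about 0.78) are no longer guaranteed to be schedulable. This reflects the real-time penalty of sharing static edge resources.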

3. Overview of Reconfigurable Architecture

3.1. Functional Framework of Reconfigurable Architecture

3.2. Case Study

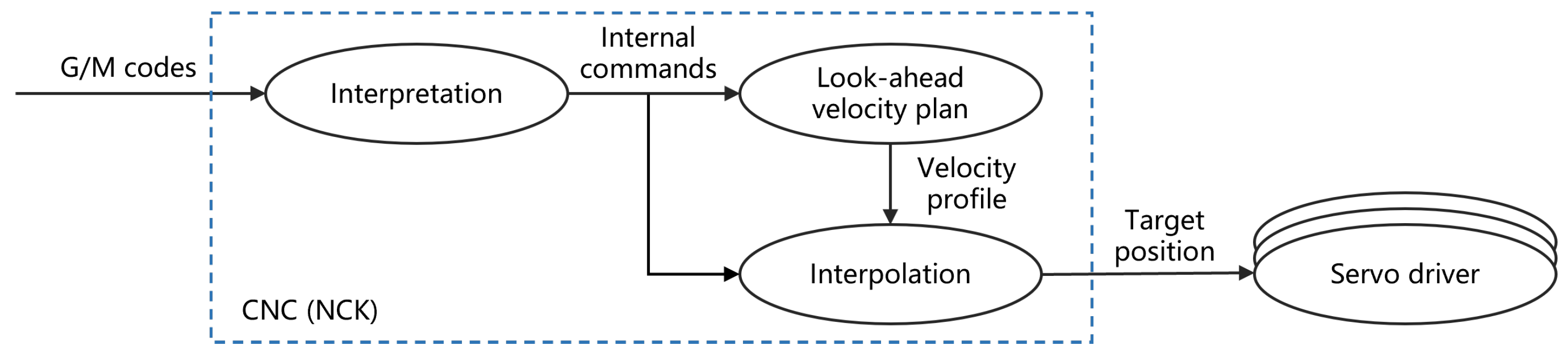

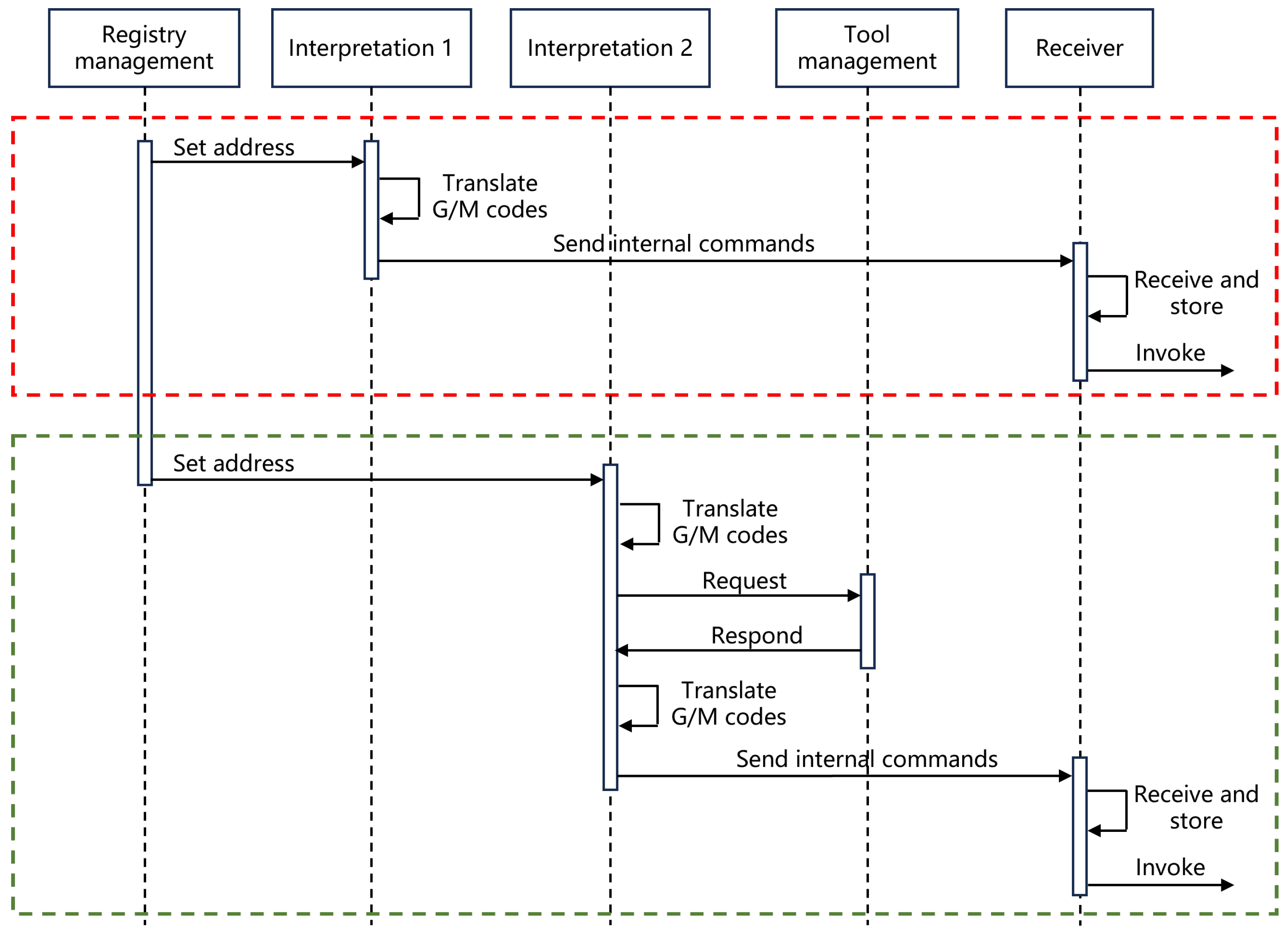

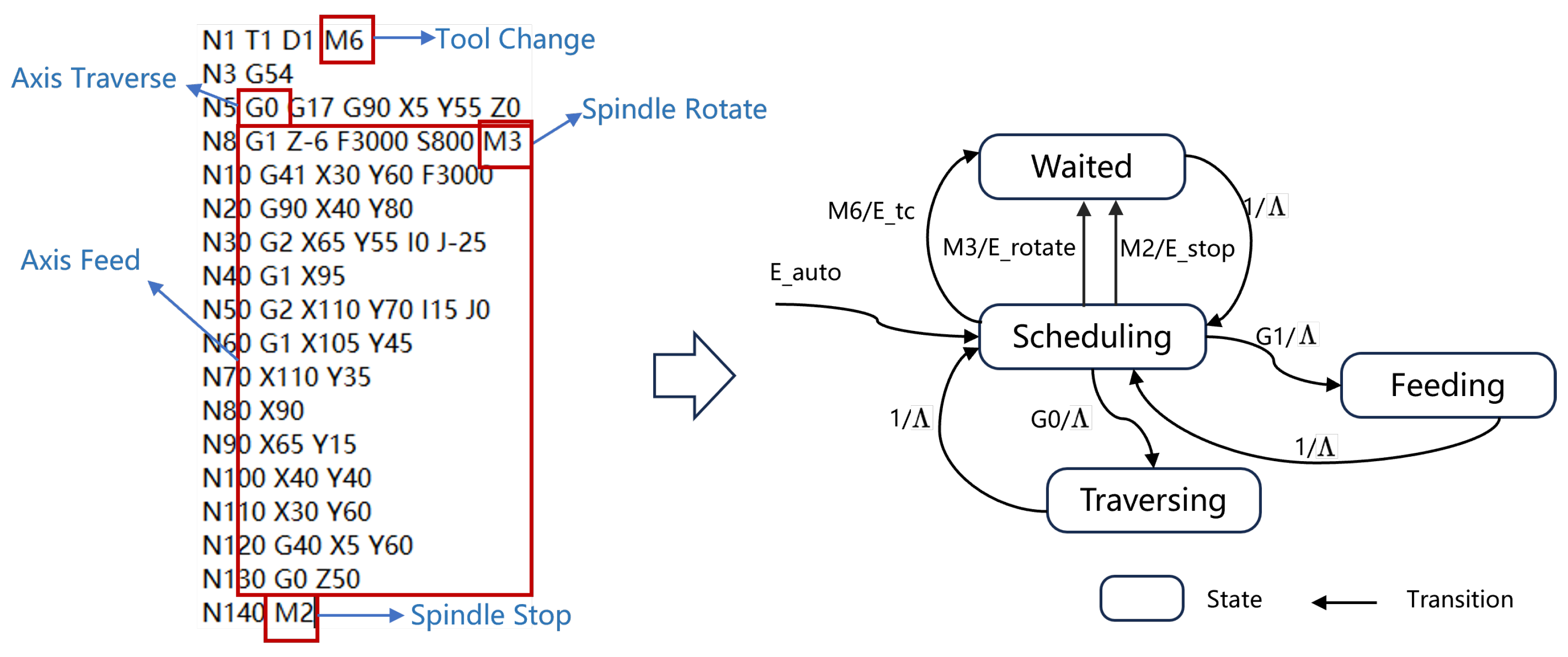

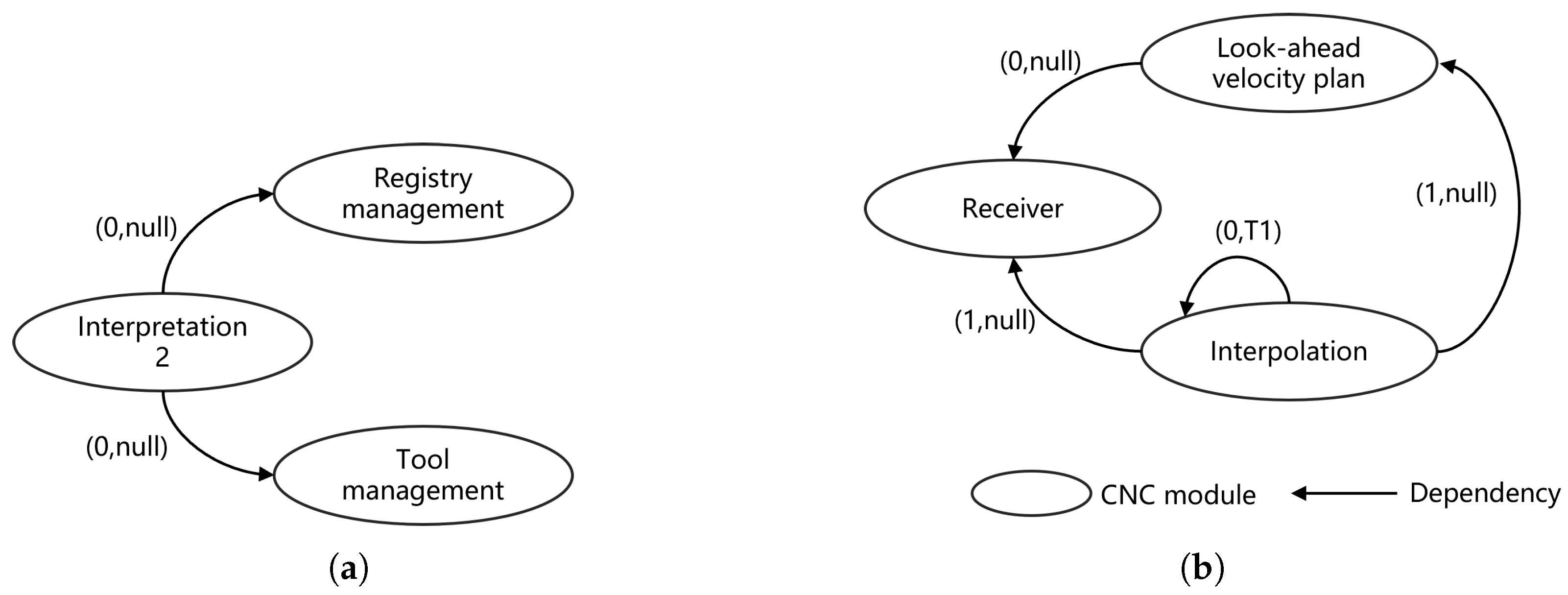

- Interpretation: This module translates G/M codes into internal commands, ensuring that the high-level instructions from the part program are converted into NCK-readable formats.

- Look-ahead velocity planning: This module plans the speed of the feed axes in advance. It considers the kinematic constraints of the machine tool (e.g., acceleration and jerk limits) and the sharp corners in the tool trajectory. On that basis, it ensures smooth and efficient transitions between movements and prevents sudden speed changes that could cause mechanical stress or degrade machining quality.

- Interpolation: This module discretizes the planned tool trajectory into a series of small, precise target positions. These positions are sent to the servo drivers at regular intervals. This module ensures that the tool follows the programmed trajectory accurately by calculating the exact position of each axis at every time step.
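The three NCK modules can be illustrated with a compact Python sketch, built on the following assumptions:

- The program uses a tiny G-code subset: G00/G01 with X, Y, and F words only.
- A deliberately simple corner rule scales the feed by the cosine of the direction change.
- An acceleration limit `a_max` is applied through backward and forward passes over the segment junctions, a common look-ahead scheme.

The sketch ignores jerk limits and the acceleration profile inside each segment, and its interpolator simply moves at a constant speed. All function names are hypothetical.

```python
import math


def interpret(program):
    """Interpretation: turn G00/G01 lines into internal (x, y, feed) commands.
    Words are modal: an omitted X, Y or F keeps its previous value."""
    x = y = feed = 0.0
    commands = []
    for line in program:
        words = {w[0].upper(): float(w[1:]) for w in line.split()}
        if words.get("G") not in (0.0, 1.0):
            continue                          # this sketch only handles linear moves
        x, y = words.get("X", x), words.get("Y", y)
        feed = words.get("F", feed)           # mm/min
        commands.append((x, y, feed))
    return commands


def look_ahead(start, commands, a_max):
    """Look-ahead velocity planning: speed (mm/s) at the end of each segment."""
    pts = [start] + [(x, y) for x, y, _ in commands]
    feeds = [f / 60.0 for _, _, f in commands]
    lengths = [math.dist(pts[i], pts[i + 1]) for i in range(len(commands))]
    n = len(commands)
    v = [0.0] * n                             # the last segment ends at rest
    for i in range(n - 1):                    # corner limit (simple heuristic)
        if lengths[i] == 0 or lengths[i + 1] == 0:
            continue
        (ax, ay), (bx, by), (cx, cy) = pts[i], pts[i + 1], pts[i + 2]
        cos_t = ((bx - ax) * (cx - bx) + (by - ay) * (cy - by)) / (lengths[i] * lengths[i + 1])
        v[i] = min(feeds[i], feeds[i + 1]) * max(0.0, cos_t)
    for i in range(n - 2, -1, -1):            # backward pass: able to brake in time
        v[i] = min(v[i], math.sqrt(v[i + 1] ** 2 + 2 * a_max * lengths[i + 1]))
    prev = 0.0
    for i in range(n):                        # forward pass: able to accelerate in time
        v[i] = min(v[i], math.sqrt(prev ** 2 + 2 * a_max * lengths[i]))
        prev = v[i]
    return v


def interpolate(p0, p1, speed, dt):
    """Interpolation: split a straight segment into setpoints, one per period dt.
    Assumes speed > 0 and a constant speed along the segment."""
    steps = max(1, math.ceil(math.dist(p0, p1) / (speed * dt)))
    return [(p0[0] + (p1[0] - p0[0]) * k / steps,
             p0[1] + (p1[1] - p0[1]) * k / steps) for k in range(1, steps + 1)]


program = ["G01 X10 Y0 F600", "G01 X20 Y0", "G01 X20 Y10"]
commands = interpret(program)
speeds = look_ahead((0.0, 0.0), commands, a_max=100.0)   # [10.0, 0.0, 0.0]
```

The first junction keeps the full 10 mm/s because the path continues straight. The 90° corner and the end of the program force the speed to zero. Lowering `a_max` to 1 mm/s² caps the first junction at √20 ≈ 4.47 mm/s, because the axis must still be able to brake to zero within the next 10 mm segment.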

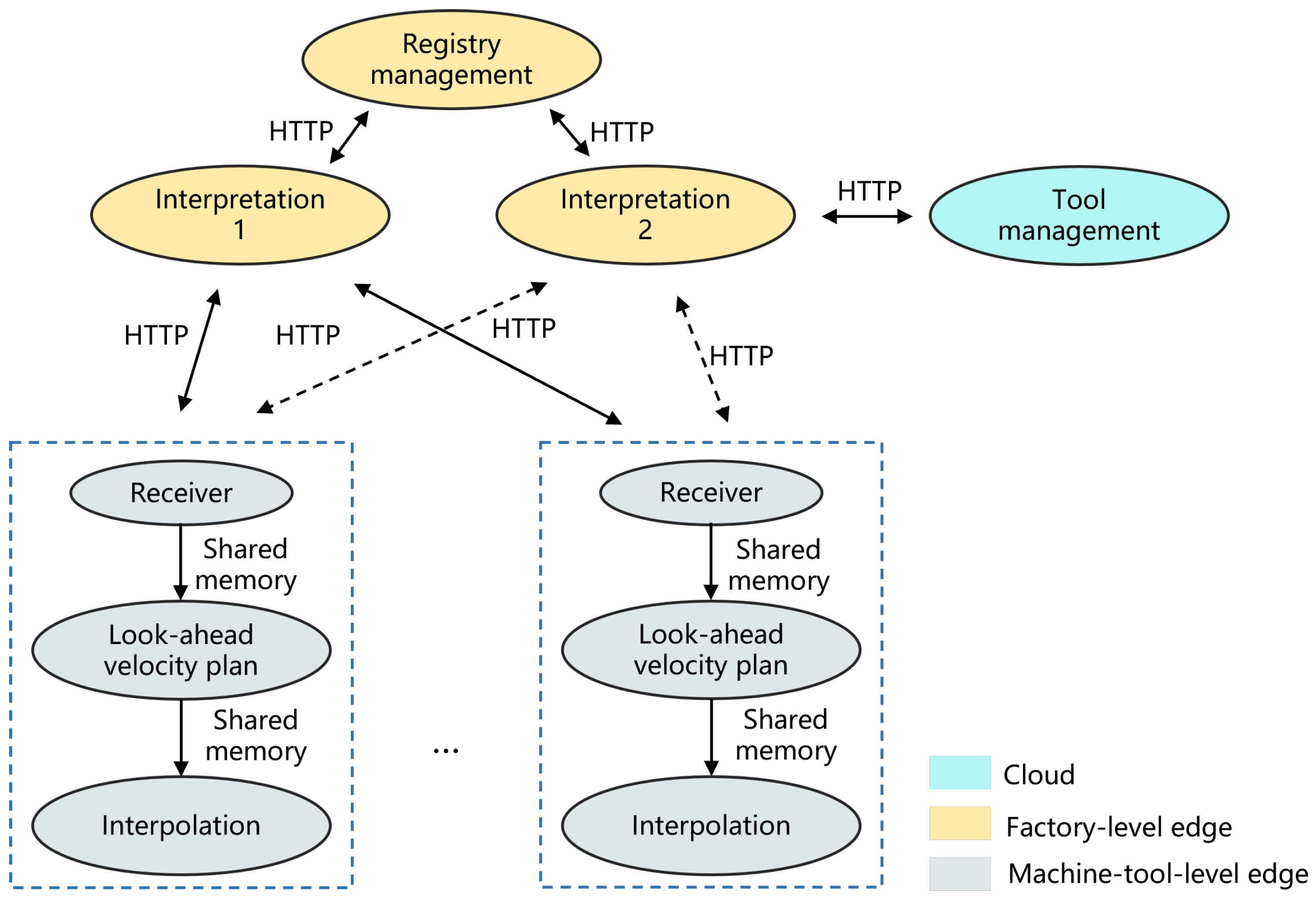

- Tool management: This module oversees the lifecycle of all tools within the factory. The interpretation module (2) establishes a logical connection with this module to retrieve necessary tool information.

- Registry management: This module manages the registration of machine tools and their associated edge devices (e.g., network addresses of machine-tool-level edges). Every machine tool and its associated edge must be registered within this module. It connects to the interpretation modules to configure network addresses.

- Receiver: This module receives the internal commands generated by the interpretation module and stores them at the edge. Subsequently, it invokes the look-ahead velocity planning and interpolation modules. As such, it also connects to the interpretation modules.
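The following minimal Python sketch shows how the registry management and receiver modules could cooperate with the interpretation module. An in-memory dictionary stands in for the network, and the address uses the documentation-only range 192.0.2.0/24. The class and function names are hypothetical, and the downstream callable is a placeholder for the look-ahead velocity planning and interpolation modules.

```python
from collections import deque


class RegistryManagement:
    """Maps each registered machine tool to the network address of its edge."""

    def __init__(self):
        self._edges = {}

    def register(self, machine_tool, edge_address):
        self._edges[machine_tool] = edge_address

    def resolve(self, machine_tool):
        if machine_tool not in self._edges:
            raise LookupError(f"{machine_tool} is not registered")
        return self._edges[machine_tool]


class Receiver:
    """Runs on the edge: stores internal commands, then invokes the downstream
    stages (look-ahead velocity planning and interpolation) one by one."""

    def __init__(self, downstream):
        self.buffer = deque()
        self.downstream = downstream

    def receive(self, commands):
        self.buffer.extend(commands)

    def drain(self):
        results = []
        while self.buffer:
            results.append(self.downstream(self.buffer.popleft()))
        return results


def dispatch(registry, edges, machine_tool, commands):
    """Interpretation side: resolve the target edge and hand over the commands.
    `edges` stands in for the network and maps address -> Receiver."""
    edges[registry.resolve(machine_tool)].receive(commands)


registry = RegistryManagement()
registry.register("mill-01", "192.0.2.10:5000")
edge = Receiver(downstream=lambda cmd: ("planned",) + cmd)
dispatch(registry, {"192.0.2.10:5000": edge}, "mill-01",
         [("line", 10.0, 0.0), ("line", 10.0, 5.0)])
```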

3.3. Characteristics of Reconfigurable Architecture

- First, it enables a dynamic, many-to-many interaction among CNC modules, infrastructure resources, and machine tools, optimizing their utilization and allowing for adaptation to diverse production tasks in the mass customization market.

- Second, it establishes a dynamic, many-to-many relationship between users and vendors. In this context, users, who can be either end-users of machine tools or machine tool vendors, consume CNC modules. Vendors, on the other hand, supply these CNC modules. This flexible arrangement allows users to assemble CNC systems by integrating modules from multiple vendors, while a single vendor’s CNC modules can serve multiple users.
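The many-to-many relationship between users and vendors can be sketched as selecting one module per required CNC function from a shared multi-vendor catalog. The catalog entries, vendor names, and preference-order rule in this Python example are hypothetical illustrations:

```python
from collections import defaultdict


def assemble(required_functions, offers, preferred_vendors=()):
    """Pick one module per required CNC function from a multi-vendor catalog.

    offers: (vendor, function, module) triples. Vendors listed in
    preferred_vendors are tried first, in that order; ties keep catalog order.
    """
    by_function = defaultdict(list)
    for vendor, function, module in offers:
        by_function[function].append((vendor, module))
    rank = {vendor: i for i, vendor in enumerate(preferred_vendors)}
    system = {}
    for function in required_functions:
        candidates = by_function.get(function)
        if not candidates:
            raise LookupError(f"no vendor offers {function!r}")
        system[function] = min(candidates, key=lambda c: rank.get(c[0], len(rank)))
    return system


catalog = [
    ("VendorA", "interpretation", "interp-a"),
    ("VendorB", "interpretation", "interp-b"),
    ("VendorB", "look_ahead", "look-ahead-b"),
    ("VendorC", "interpolation", "ipo-c"),
]
functions = ["interpretation", "look_ahead", "interpolation"]
user_1 = assemble(functions, catalog, preferred_vendors=["VendorA"])
user_2 = assemble(functions, catalog, preferred_vendors=["VendorB"])
```

With these offers, a user who prefers VendorA and a user who prefers VendorB end up with different interpretation modules. VendorB's look-ahead module and VendorC's interpolation module serve both users.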

4. Challenges and Methods of the Reconfigurable Architecture

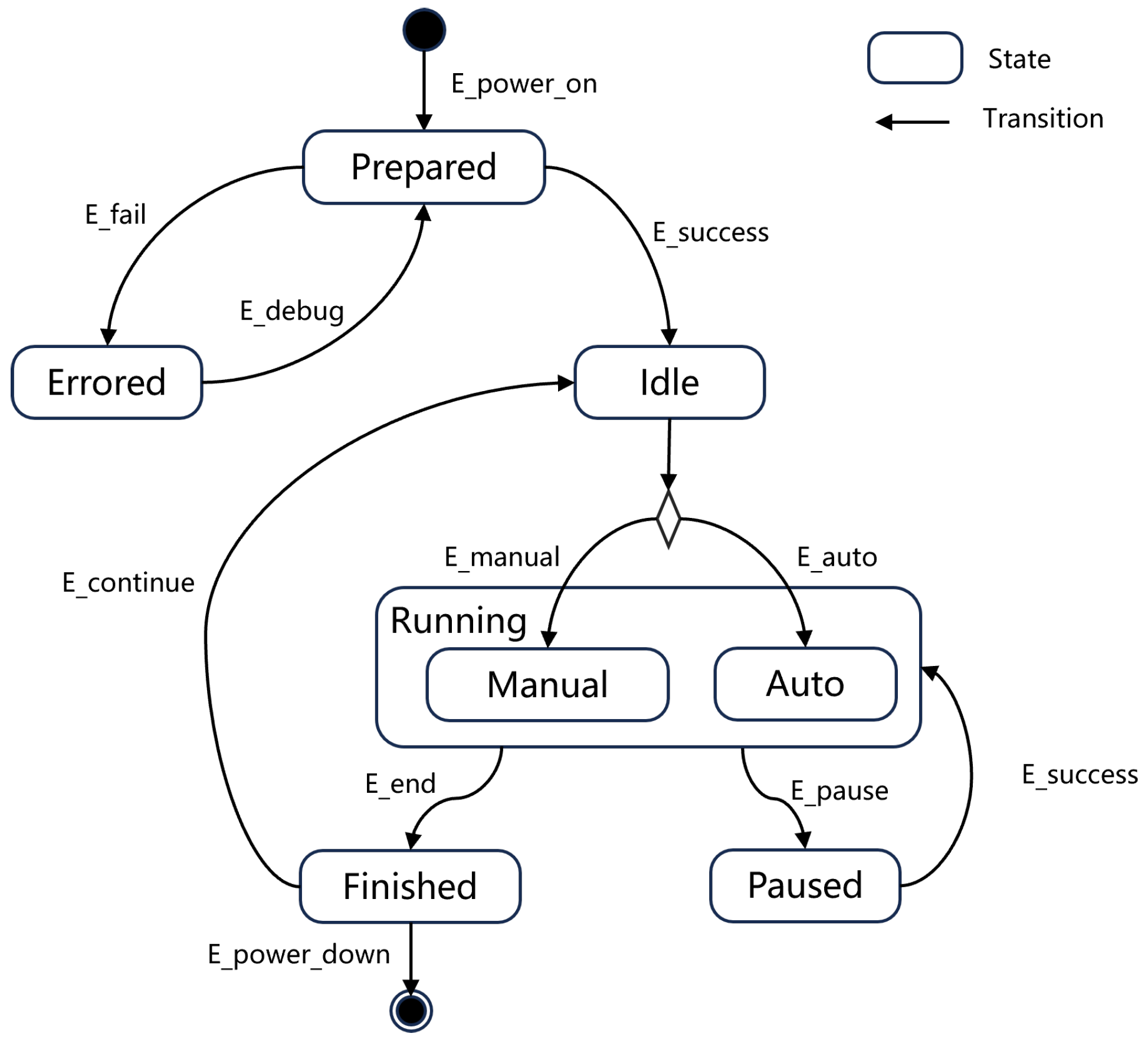

4.1. Deterministic Description of CNC Functionality

4.2. Decoupling of CNC Modules and Infrastructures

4.3. Management of CNC Modules, Infrastructures, and Machine Tools

5. Conclusions and Future Work

- Deterministic description of control functionality: Defining a standard granularity for control modules is challenging. In addition, the commonly used behavior model based on finite-state machines (FSMs) is better suited to discrete behaviors. To address this, a hybrid semantic model combining the FSM and the DNM is proposed. The DNM describes continuous behaviors, which enables a C-DSL that emphasizes the dependency relationships among control modules rather than a fixed granularity.

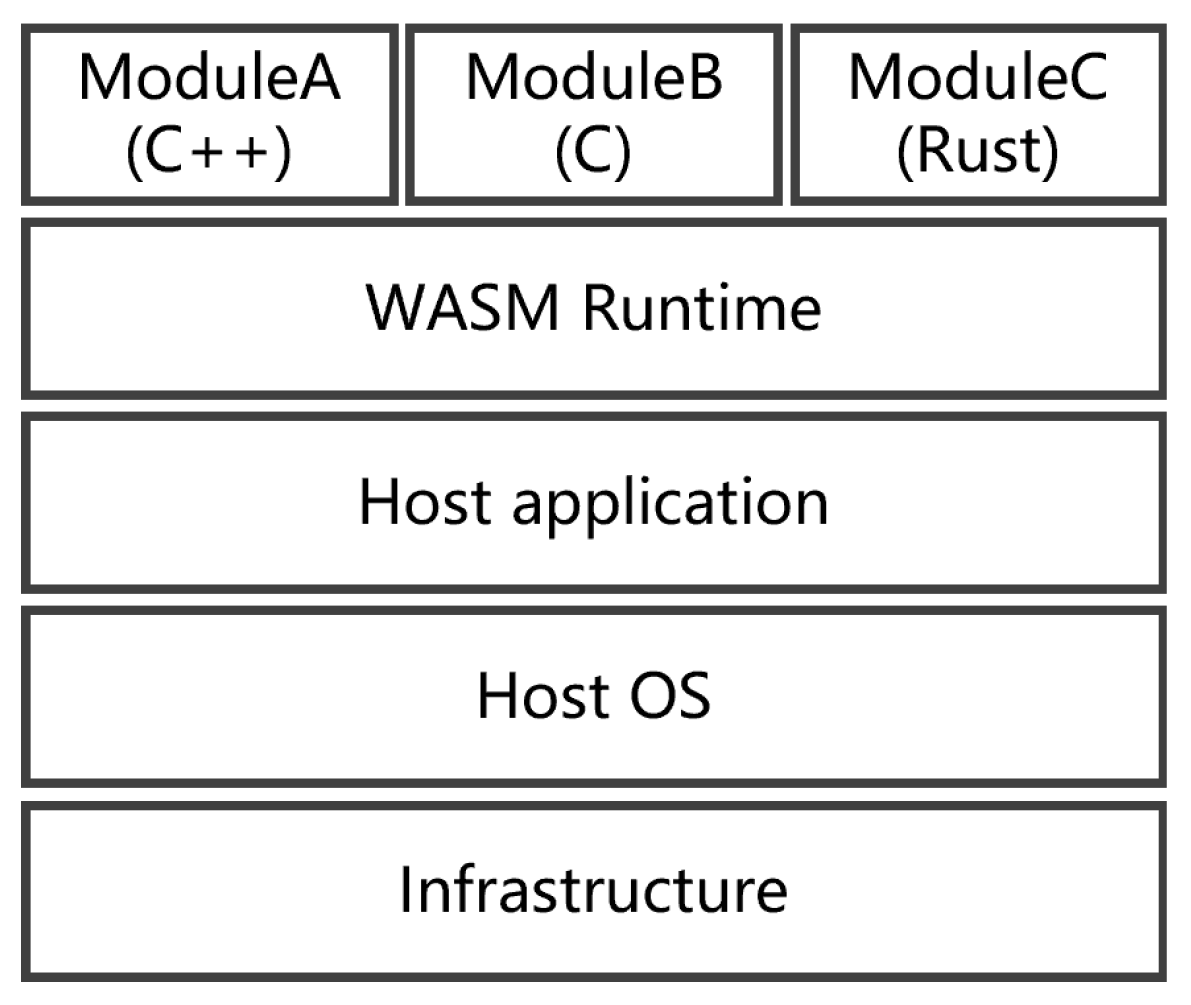
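The split between discrete and continuous behavior in the hybrid model can be illustrated with a small Python sketch. It does not reproduce the authors' FSM/DNM formalism. Instead, an FSM with hypothetical modes and events governs the discrete behavior of an axis module, and a first-order lag integrated by explicit Euler stands in for the continuous behavior. The current mode selects the target of the continuous part.

```python
from enum import Enum, auto


class Mode(Enum):
    IDLE = auto()
    RUNNING = auto()
    HOLD = auto()
    FAULT = auto()


# Discrete behaviour: which event moves the module from one mode to the next.
TRANSITIONS = {
    Mode.IDLE: {"start": Mode.RUNNING},
    Mode.RUNNING: {"feed_hold": Mode.HOLD, "stop": Mode.IDLE, "alarm": Mode.FAULT},
    Mode.HOLD: {"resume": Mode.RUNNING, "stop": Mode.IDLE, "alarm": Mode.FAULT},
    Mode.FAULT: {"reset": Mode.IDLE},
}


class HybridAxisModule:
    def __init__(self, tau):
        self.mode = Mode.IDLE
        self.tau = tau            # hypothetical time constant of the axis response (s)
        self.velocity = 0.0

    def handle(self, event):
        """Discrete part: take one FSM transition or reject the event."""
        next_mode = TRANSITIONS[self.mode].get(event)
        if next_mode is None:
            raise ValueError(f"{event!r} is not allowed in mode {self.mode.name}")
        self.mode = next_mode

    def step(self, v_cmd, dt):
        """Continuous part: first-order lag dv/dt = (target - v) / tau, advanced
        by one explicit Euler step; only RUNNING follows the commanded velocity."""
        target = v_cmd if self.mode is Mode.RUNNING else 0.0
        self.velocity += (target - self.velocity) * dt / self.tau
        return self.velocity
```

The explicit Euler step is stable for `dt < 2 * tau` and does not overshoot for `dt <= tau`. A real module would use the dynamics of the actual axis rather than an assumed time constant.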

- Decoupling of control modules and infrastructures: Making control modules independent requires isolating them from the underlying infrastructures. Virtualization technologies provide a viable solution, and OS-level virtualization is better suited to this reconfigurable architecture. WebAssembly complements container-based virtualization for virtualizing control modules. However, not all control modules can currently be fully decoupled from their infrastructures.

- Management of control modules, infrastructures, and actuators: A platform-oriented operation model is proposed, which extends the reconfigurable architecture beyond pure control functionality. Addressing this challenge is closely tied to the solutions to the first two challenges.

- The proposed methods for the first two challenges primarily focus on core functional issues while overlooking non-functional aspects. For instance, the construction of a C-DSL as a declarative language requires the definition of its lexical and syntactic specifications. Moreover, the feasibility of the control functionality described by the C-DSL must be verified according to these specifications.

- Backward compatibility poses a challenge for traditional control systems with closed architectures and stand-alone operation models. Such systems may be difficult to use as the reconfigurable architecture intends, that is, by decoupling the control software into independent control modules and decoupling those modules from the underlying infrastructures. Instead, these legacy systems could be integrated as a whole, as a single independent resource. This would serve as a transitional step toward a user-centric operation model.

- Finally, the development of the platform itself is crucial. Solutions to all the aforementioned challenges need to be integrated into the platform as its core functional properties, making platform development a vital aspect of the reconfigurable architecture.
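The C-DSL's focus on dependency relationships, and the requirement that a described control functionality be verified as feasible, can be illustrated with a dependency-based check. In this Python sketch, each module declares the signals it provides and the signals it requires. This is an assumed, simplified stand-in for a C-DSL description, not its actual syntax. The check rejects missing or duplicate providers and cyclic dependencies, then derives an execution order with Kahn's topological sort:

```python
from collections import deque


def verify_and_order(modules):
    """modules: {name: {"provides": set_of_signals, "requires": set_of_signals}}.

    Checks that every required signal has exactly one provider and that the
    dependency graph is acyclic; returns an execution order (Kahn's algorithm).
    """
    provider = {}
    for name, spec in modules.items():
        for sig in spec["provides"]:
            if sig in provider:
                raise ValueError(f"{sig!r} provided by both {provider[sig]} and {name}")
            provider[sig] = name
    deps = {name: set() for name in modules}
    for name, spec in modules.items():
        for sig in spec["requires"]:
            if sig not in provider:
                raise ValueError(f"{name} requires {sig!r}, which no module provides")
            deps[name].add(provider[sig])
    dependents = {name: [] for name in modules}
    for name, ds in deps.items():
        for d in ds:
            dependents[d].append(name)
    indegree = {name: len(ds) for name, ds in deps.items()}
    ready = deque(sorted(n for n, k in indegree.items() if k == 0))
    order = []
    while ready:
        current = ready.popleft()
        order.append(current)
        for nxt in sorted(dependents[current]):
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)
    if len(order) != len(modules):
        stuck = sorted(set(modules) - set(order))
        raise ValueError("cyclic dependency among: " + ", ".join(stuck))
    return order


description = {
    "interpolation": {"provides": {"setpoints"}, "requires": {"velocity_profile"}},
    "interpretation": {"provides": {"commands"}, "requires": set()},
    "look_ahead": {"provides": {"velocity_profile"}, "requires": {"commands"}},
}
order = verify_and_order(description)
```

Only dependencies are declared, so a module can be split or merged without changes to the others, provided the signals it exposes stay the same.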

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Mantravadi, S.; Møller, C.; Chen, L.I.; Schnyder, R. Design Choices for Next-Generation IIoT-Connected MES/MOM: An Empirical Study on Smart Factories. Robot. Comput.-Integr. Manuf. 2022, 73, 102225.

- Givehchi, M.; Liu, Y.; Wang, X.V.; Wang, L. Function Block-enabled Operation Planning And Machine Control in Cloud-DPP. Int. J. Prod. Res. 2023, 61, 1168–1184.

- Liu, C.; Su, Z.; Xu, X.; Lu, Y. Service-Oriented Industrial Internet of Things Gateway for Cloud Manufacturing. Robot. Comput.-Integr. Manuf. 2022, 73, 102217.

- Wang, L.-C.; Chen, C.-C.; Liu, J.-L.; Chu, P.-C. Framework and Deployment of a Cloud-based Advanced Planning and Scheduling System. Robot. Comput.-Integr. Manuf. 2021, 70, 102088.

- Yang, H.; Ong, S.K.; Nee, C.; Jiang, G.; Mei, X. Microservices-based Cloud-edge Collaborative Condition Monitoring Platform for Smart Manufacturing Systems. Int. J. Prod. Res. 2022, 60, 7492–7501.

- Yu, H.; Yu, D.; Wang, C.; Hu, Y.; Li, Y. Edge Intelligence-driven Digital Twin of CNC System: Architecture and Deployment. Robot. Comput.-Integr. Manuf. 2023, 79, 102418.

- Kalyvas, M. An Innovative Industrial Control System Architecture for Real-time Response, Fault-tolerant Operation and Seamless Plant Integration. J. Eng. 2021, 2021, 569–581.

- Suh, S.-H.; Kang, S.K.; Chung, D.-H.; Stroud, I. Theory and Design of CNC Systems; Springer: London, UK, 2008.

- Pritschow, G.; Altintas, Y.; Jovane, F.; Koren, Y.; Mitsuishi, M.; Takata, S.; Van Brussel, H.; Weck, M.; Yamazaki, K. Open Controller Architecture – Past, Present and Future. CIRP Ann. 2001, 50, 463–470.

- Michaloski, J.; Birla, S.; Yen, C.J.; Igou, R.; Weinert, G. An Open System Framework for Component-Based CNC Machines. ACM Comput. Surv. 2000, 32, 23.

- Michaloski, J. Analysis of Module Interaction in an OMAC Controller. In Proceedings of the World Automation Congress Conference, Maui, HI, USA, 11–16 June 2000.

- Wei, H.; Duan, X.; Chen, Y.; Zhang, X. Research on Open CNC System Based on CORBA. In Proceedings of the Fifth IEEE International Symposium on Embedded Computing, Beijing, China, 6–8 October 2008.

- Ma, X.; Han, Z.; Wang, Y.; Fu, H. Development of a PC-based Open Architecture Software-CNC System. Chin. J. Aeronaut. 2007, 20, 272–281.

- Minhat, M.; Vyatkin, V.; Xu, X.; Wong, S.; Al-Bayaa, Z. A Novel Open CNC Architecture Based on STEP-NC Data Model and IEC 61499 Function Blocks. Robot. Comput.-Integr. Manuf. 2009, 25, 560–569.

- Harbs, E.; Negri, G.H.; Jarentchuk, G.; Hasegawa, A.Y.; Rosso, R.S.U., Jr.; da Silva Hounsell, M.; Lafratta, F.H.; Ferreira, J.C. CNC-C2: An ISO14649 and IEC61499 Compliant Controller. Int. J. Comput. Integr. Manuf. 2021, 34, 621–640.

- Park, S.; Kim, S.-H.; Cho, H. Kernel Software for Efficiently Building, Re-configuring, and Distributing an Open CNC Controller. Int. J. Adv. Manuf. Technol. 2005, 27, 788–796.

- Wang, T.; Wang, L.; Liu, Q. A Three-ply Reconfigurable CNC System Based on FPGA and Field-bus. Int. J. Adv. Manuf. Technol. 2011, 57, 671–682.

- Liu, L.; Yao, Y.; Li, J. A Review of the Application of Component-based Software Development in Open CNC Systems. Int. J. Adv. Manuf. Technol. 2020, 107, 3727–3753.

- Givehchi, O.; Imtiaz, J.; Trsek, H.; Jasperneite, J. Control-as-a-Service from the Cloud: A Case Study for Using Virtualized PLCs. In Proceedings of the 10th IEEE Workshop on Factory Communication Systems, Toulouse, France, 5–7 May 2014.

- Sang, Z.; Xu, X. The Framework of a Cloud-based CNC System. Procedia CIRP 2017, 63, 82–88.

- Bigheti, J.A.; Fernandes, M.M.; Godoy, E.P. Control as a Service: A Microservice Approach to Industry 4.0. In Proceedings of the II Workshop on Metrology for Industry 4.0 and IoT, Naples, Italy, 4–6 June 2019.

- Cruz, T.; Simoes, P.; Monteiro, E. Virtualizing Programmable Logic Controllers: Toward a Convergent Approach. IEEE Embed. Syst. Lett. 2016, 8, 69–72.

- Gupta, R.A.; Chow, M.-Y. Networked Control System: Overview and Research Trends. IEEE Trans. Ind. Electron. 2010, 57, 2527–2535.

- Zhang, X.-M.; Han, Q.-L.; Ge, X.; Ding, D.; Ding, L.; Yue, D.; Peng, C. Networked Control Systems: A Survey of Trends and Techniques. IEEE/CAA J. Autom. Sin. 2020, 7, 1–17.

- Morabito, R.; Kjällman, J.; Komu, M. Hypervisors vs. Lightweight Virtualization: A Performance Comparison. In Proceedings of the IEEE International Conference on Cloud Engineering, Tempe, AZ, USA, 9–13 March 2015.

- Queiroz, R.; Cruz, T.; Mendes, J.; Sousa, P.; Simões, P. Container-based Virtualization for Real-Time Industrial Systems: A Systematic Review. ACM Comput. Surv. 2023, 56, 1–38.

- Struhár, V.; Behnam, M.; Ashjaei, M.; Papadopoulos, A.V. Real-time Containers: A Survey. Open Access Ser. Inform. 2020, 80, 7:1–7:9.

- Mansouri, Y.; Babar, M.A. A Review of Edge Computing: Features and Resource Virtualization. J. Parallel Distrib. Comput. 2021, 150, 155–183.

- Haas, A.; Rossberg, A.; Schuff, D.L.; Titzer, B.L.; Holman, M.; Gohman, D.; Wagner, L.; Zakai, A.; Bastien, J.F. Bringing the Web Up to Speed with WebAssembly. In Proceedings of the 38th ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI 2017), Barcelona, Spain, 18–23 June 2017.

- Ray, P.P. An Overview of WebAssembly for IoT: Background, Tools, State-of-the-Art, Challenges, and Future Directions. Future Internet 2023, 15, 275.

- Wallentowitz, S.; Kersting, B.; Dumitriu, D.M. Potential of WebAssembly for Embedded Systems. In Proceedings of the 11th Mediterranean Conference on Embedded Computing (MECO), Budva, Montenegro, 7–10 June 2022.

- LinuxCNC. Available online: https://linuxcnc.org/ (accessed on 23 September 2024).

- Liu, L.; Yao, Y.; Du, J. A Universal and Scalable CNC Interpreter for CNC Systems. Int. J. Adv. Manuf. Technol. 2019, 103, 4453–4466.

- Liu, L.; Yao, Y. Development of a CNC Interpretation Service with Good Performance and Variable Functionality. Int. J. Comput. Integr. Manuf. 2022, 35, 725–742.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, L.; Xu, Z.; Qu, X. A Reconfigurable Architecture for Industrial Control Systems: Overview and Challenges. Machines 2024, 12, 793. https://doi.org/10.3390/machines12110793
