In-Depth Evaluation of Automated Fruit Harvesting in Unstructured Environment for Improved Robot Design

Abstract

1. Introduction

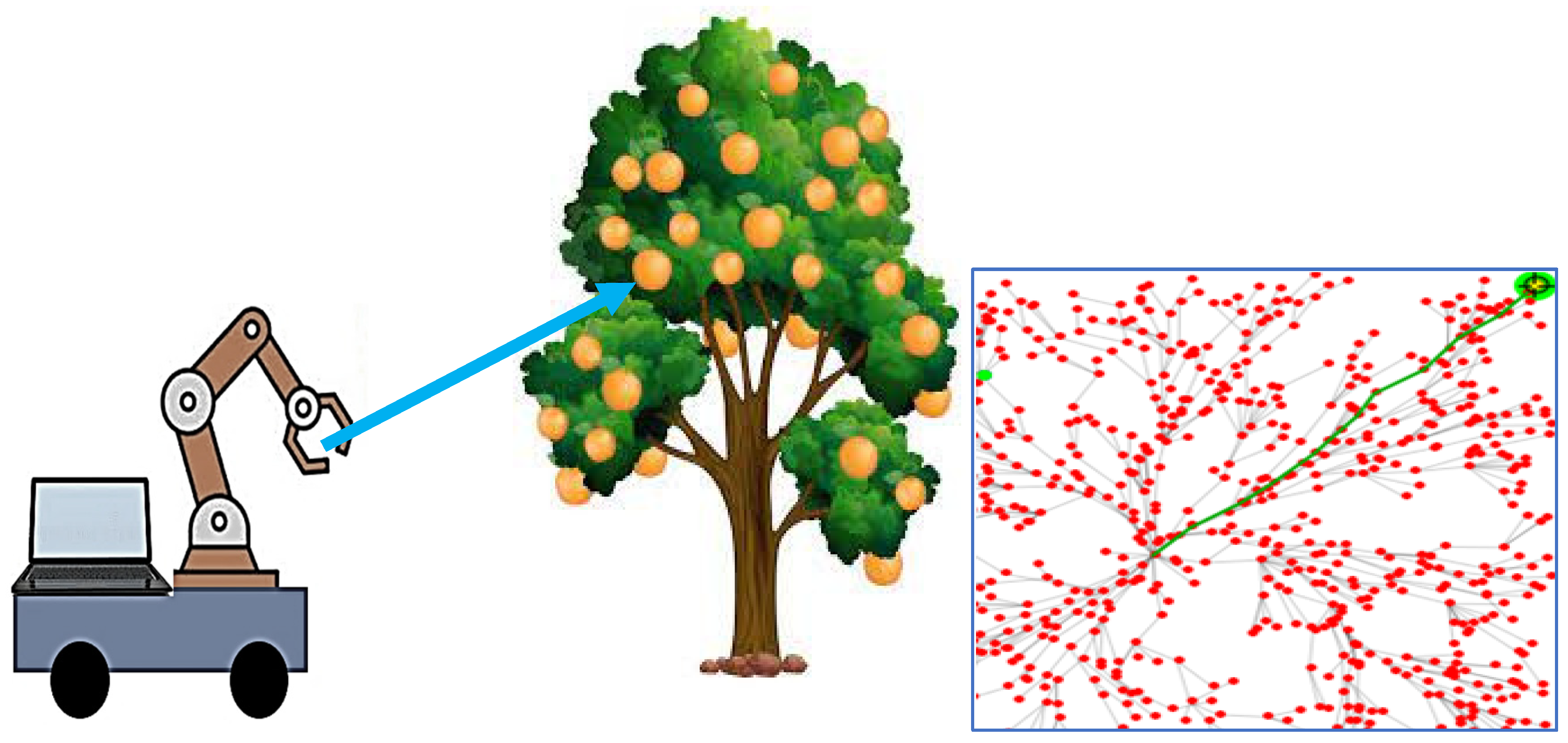

- Developing an efficient orange-harvesting robot whose path planner uses an enhanced RRT* algorithm to reduce fruit pick-up time, and testing it in a real-time scenario.

- Creating a fine-tuned CNN-based fruit detection model, integrated with position-based visual servoing, to improve the precision with which the robot identifies high-quality oranges.

- Conducting a comprehensive performance evaluation of the harvesting machine across five domains: fruit detection accuracy, fruit-picking time, success rate of fruit picking, damage rate of picked fruit, and consistency of robot performance under varying illumination and occlusion conditions.
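The five evaluation domains listed above can be made concrete with a small bookkeeping sketch. The record fields, the function names (`summarize`, `consistency`), and the exact metric definitions below are illustrative assumptions, not the definitions used in this study; in particular, success rate is taken here as picked fruit over all attempts, and consistency as the spread of success rates across test conditions.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class PickAttempt:
    """One harvesting attempt (hypothetical log record)."""
    detected: bool        # the vision model found the fruit
    picked: bool          # the fruit was detached and collected
    damaged: bool         # the picked fruit showed visible damage
    cycle_time_s: float   # time for the full pick cycle, in seconds
    condition: str        # e.g. "bright", "dim", "occluded"


def summarize(attempts):
    """Aggregate detection, success, damage, and cycle-time figures."""
    picked = [a for a in attempts if a.picked]
    return {
        "detection_rate": sum(a.detected for a in attempts) / len(attempts),
        "success_rate": len(picked) / len(attempts),
        # Damage rate is computed over picked fruit only (assumption).
        "damage_rate": sum(a.damaged for a in picked) / len(picked) if picked else 0.0,
        "mean_cycle_time_s": mean(a.cycle_time_s for a in picked) if picked else float("nan"),
    }


def consistency(attempts):
    """Success rate per condition and its population standard deviation.

    A small spread suggests performance is stable across illumination
    and occlusion conditions.
    """
    by_condition = {}
    for a in attempts:
        by_condition.setdefault(a.condition, []).append(a.picked)
    rates = {c: sum(v) / len(v) for c, v in by_condition.items()}
    return rates, pstdev(list(rates.values()))
```

Calling `summarize(log)` and `consistency(log)` on a list of `PickAttempt` records gives one number per domain, which keeps comparisons between test runs uniform.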

2. Literature Analysis

3. Design and Methodology

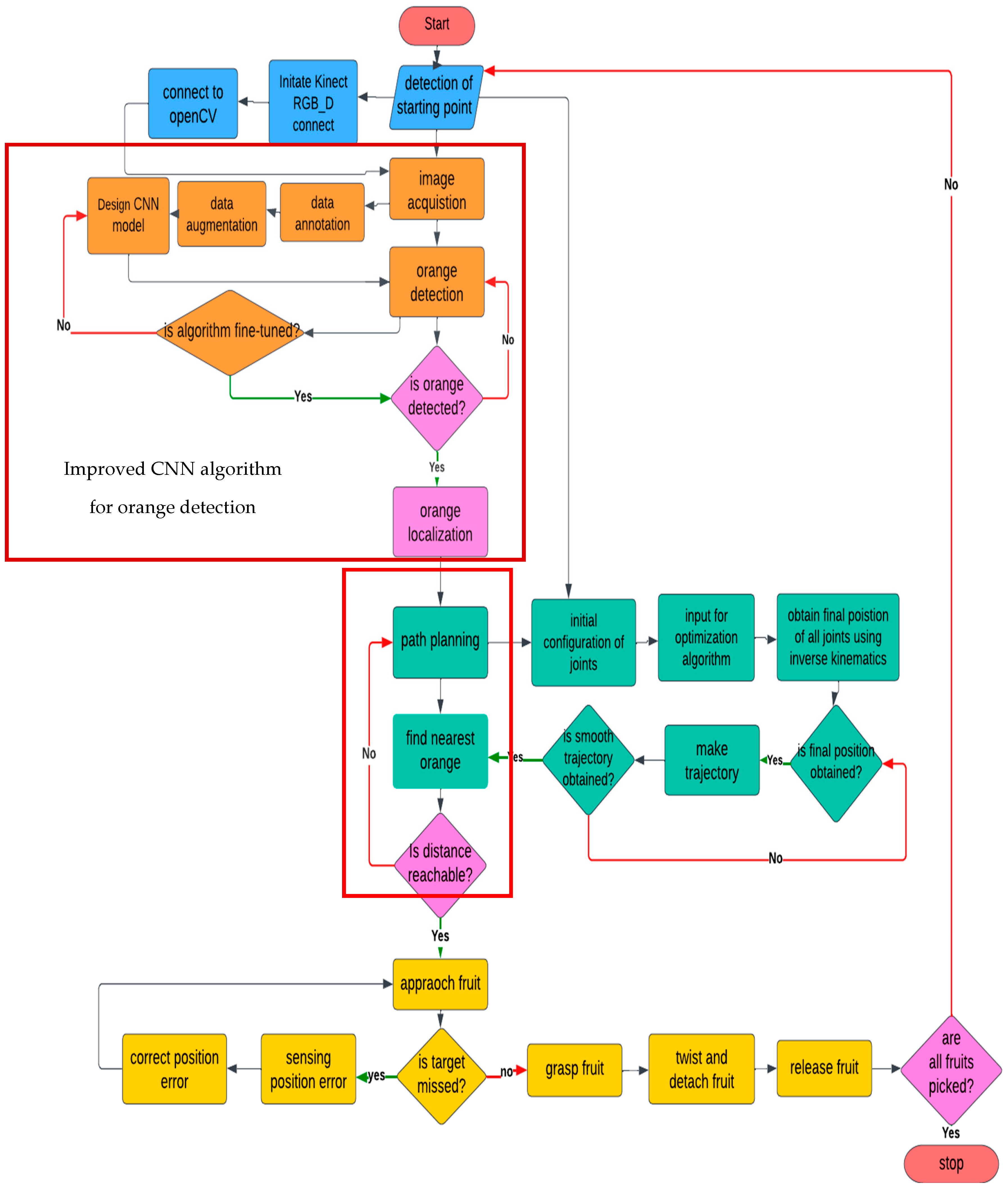

3.1. Methodology of Development of Fruit Harvesting Robot

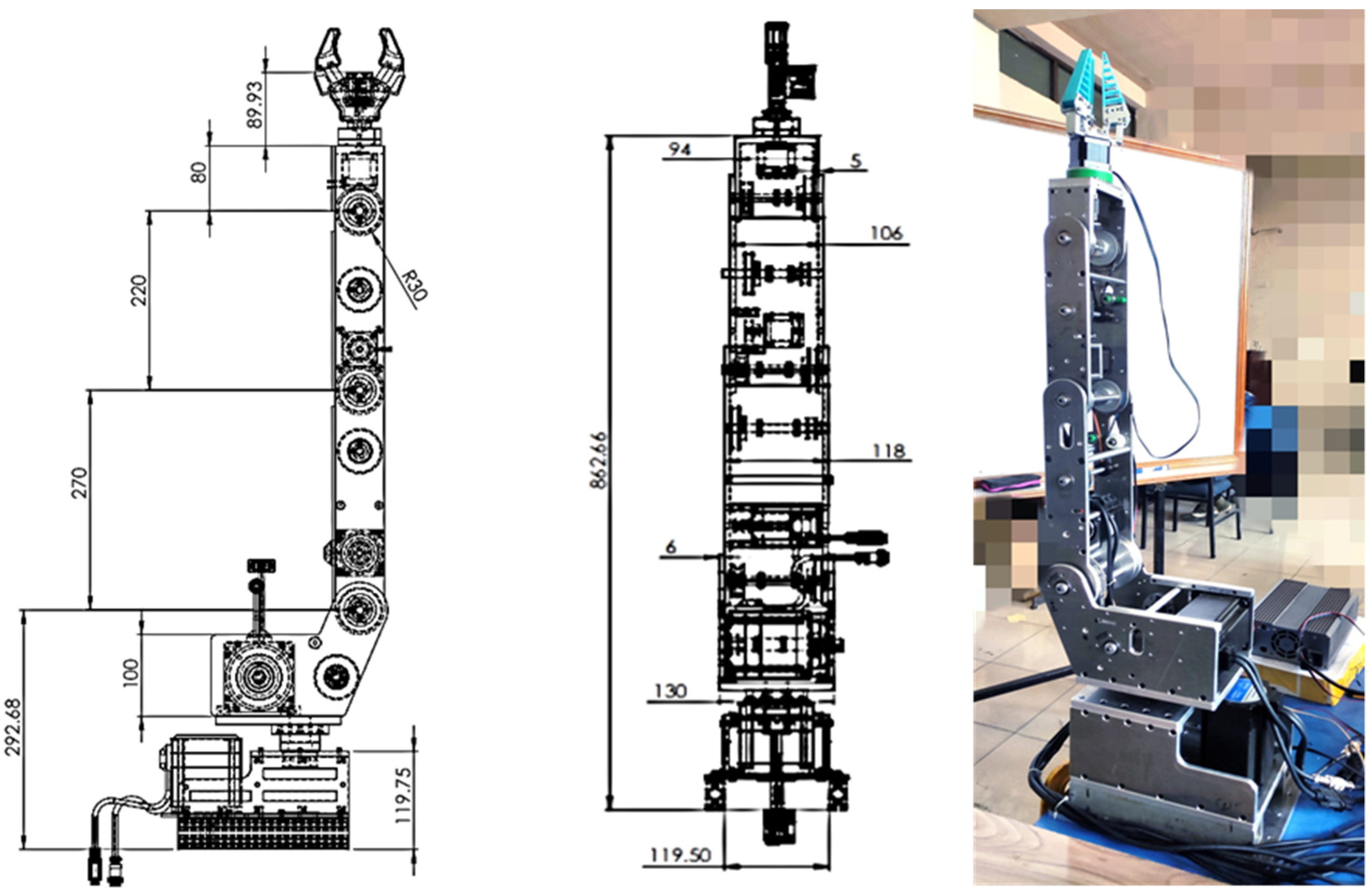

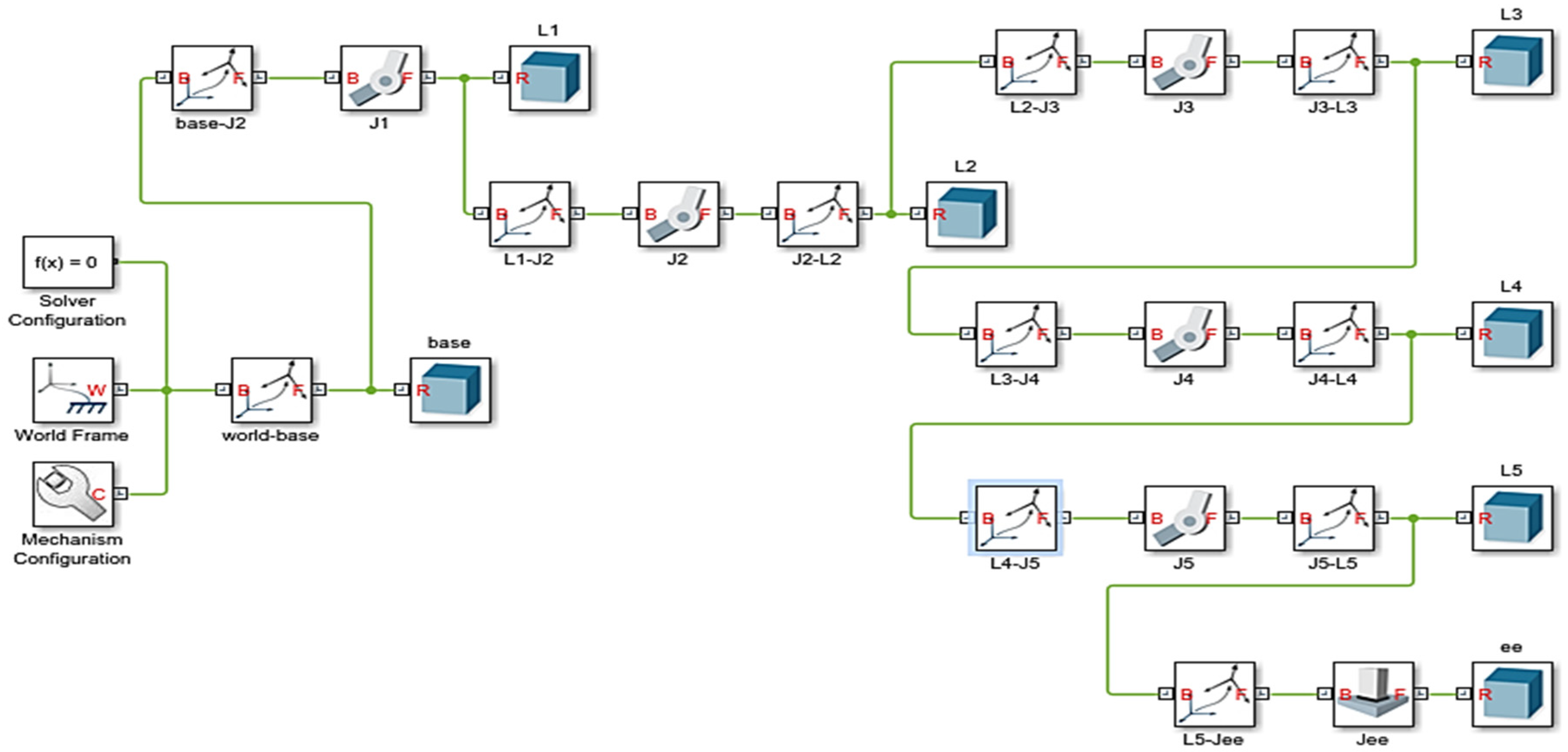

3.2. Design of Fruit Harvesting Robot

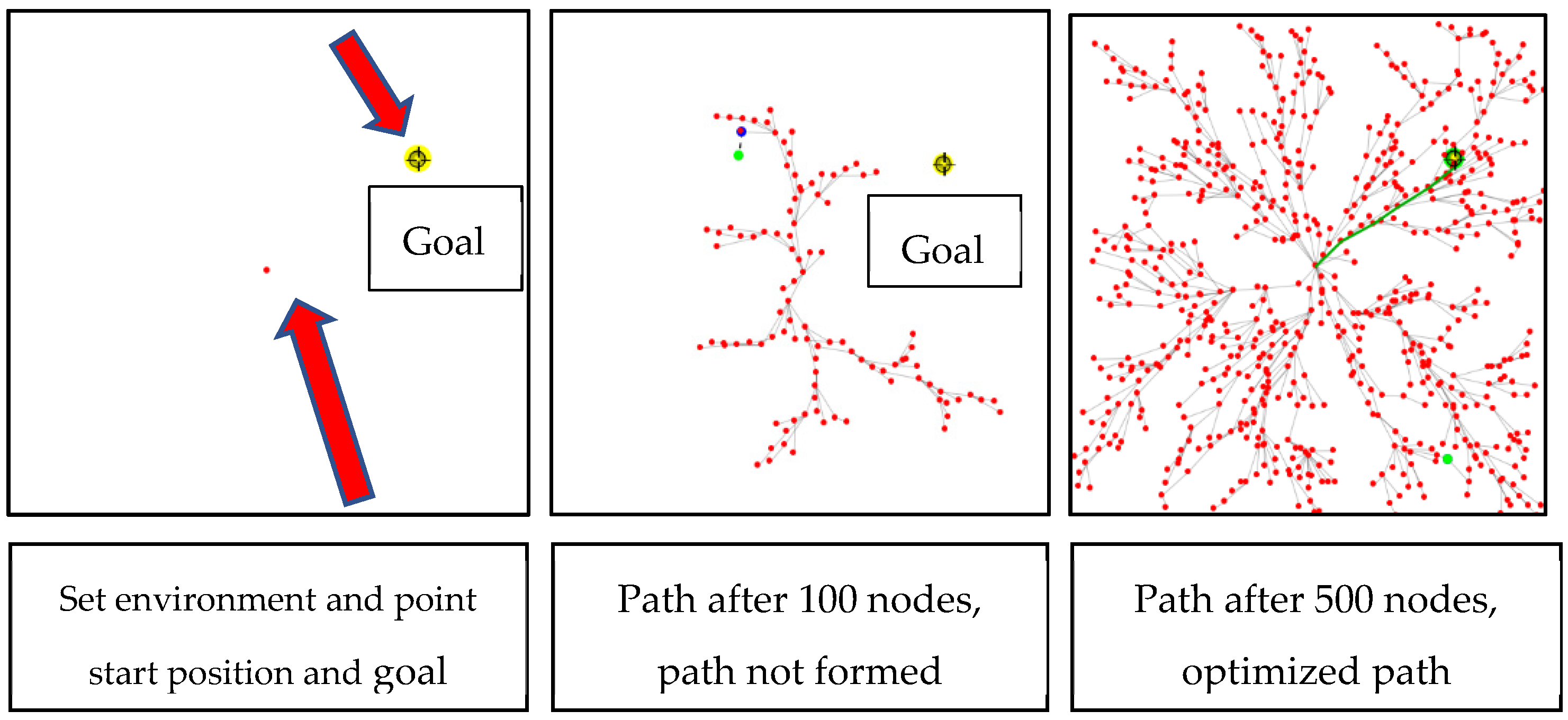

3.3. Path Planning of Robot Using RRT*

| Algorithm 1 RRT*: R = (V, E) |
|---|
| Set up a_init, a_goal |
| R ← InitializeTree() |
| for i = 1, …, n do |
| a_rand ← Sample(i) |
| a_near ← Nearest(R, a_rand) |
| a_new ← Steer(a_near, a_rand) |
| if ObstacleFree(a_new) then |
| a_close ← Near(R, a_new) |
| a_min ← ChooseParent(a_close, a_near, a_new) |
| R.addNodeEdge(a_min, a_new) |
| R.rewire() |
| end if |
| end for |
| return R |
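The steps of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration of textbook RRT* in a 2D configuration space, not the enhanced variant developed in this work. The function names (`rrt_star`, `extract_path`), the `is_free` callback, the goal bias, and the `step`, `radius`, and `bounds` values are all assumptions made for the example. As in Algorithm 1, only the new node is collision-checked (not the connecting edge), and costs of descendants are not propagated after rewiring.

```python
import math
import random


def rrt_star(a_init, a_goal, is_free, n=500, step=0.5, radius=1.5,
             bounds=(0.0, 10.0), seed=0):
    """Grow a tree R = (V, E) rooted at a_init; return (nodes, parent, cost)."""
    rng = random.Random(seed)
    nodes = [a_init]      # V: node positions
    parent = {0: None}    # E: child index -> parent index
    cost = {0: 0.0}       # stored path length from a_init to each node

    for i in range(n):
        # Sample: every 10th sample is the goal itself (assumed goal bias).
        if i % 10 == 0:
            a_rand = a_goal
        else:
            a_rand = (rng.uniform(*bounds), rng.uniform(*bounds))

        # Nearest: closest existing node to the sample.
        near_idx = min(range(len(nodes)),
                       key=lambda j: math.dist(nodes[j], a_rand))
        a_near = nodes[near_idx]

        # Steer: move from a_near toward a_rand by at most `step`.
        d = math.dist(a_near, a_rand)
        if d == 0.0:
            continue
        t = min(1.0, step / d)
        a_new = (a_near[0] + t * (a_rand[0] - a_near[0]),
                 a_near[1] + t * (a_rand[1] - a_near[1]))

        # ObstacleFree: point-wise check only, as in Algorithm 1.
        if not is_free(a_new):
            continue

        # Near: all nodes within `radius` of the new node.
        close = [j for j in range(len(nodes))
                 if math.dist(nodes[j], a_new) <= radius]

        # ChooseParent: candidate giving the lowest cost-to-come.
        min_idx = min(close + [near_idx],
                      key=lambda j: cost[j] + math.dist(nodes[j], a_new))

        # addNodeEdge: insert a_new with its chosen parent.
        new_idx = len(nodes)
        nodes.append(a_new)
        parent[new_idx] = min_idx
        cost[new_idx] = cost[min_idx] + math.dist(nodes[min_idx], a_new)

        # Rewire: reroute neighbours through a_new when that is cheaper.
        for j in close:
            c = cost[new_idx] + math.dist(a_new, nodes[j])
            if c < cost[j]:
                parent[j] = new_idx
                cost[j] = c

    return nodes, parent, cost


def extract_path(nodes, parent, a_goal):
    """Follow parent links back from the node closest to the goal."""
    idx = min(range(len(nodes)), key=lambda j: math.dist(nodes[j], a_goal))
    path = []
    while idx is not None:
        path.append(nodes[idx])
        idx = parent[idx]
    return path[::-1]
```

A call such as `rrt_star((1.0, 1.0), (9.0, 9.0), lambda p: math.dist(p, (5.0, 5.0)) > 1.5)` treats a disc at the centre of the workspace as the only obstacle; a real manipulator planner would sample joint space and check the whole arm against branches and fruit.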

3.4. Fruit Detection

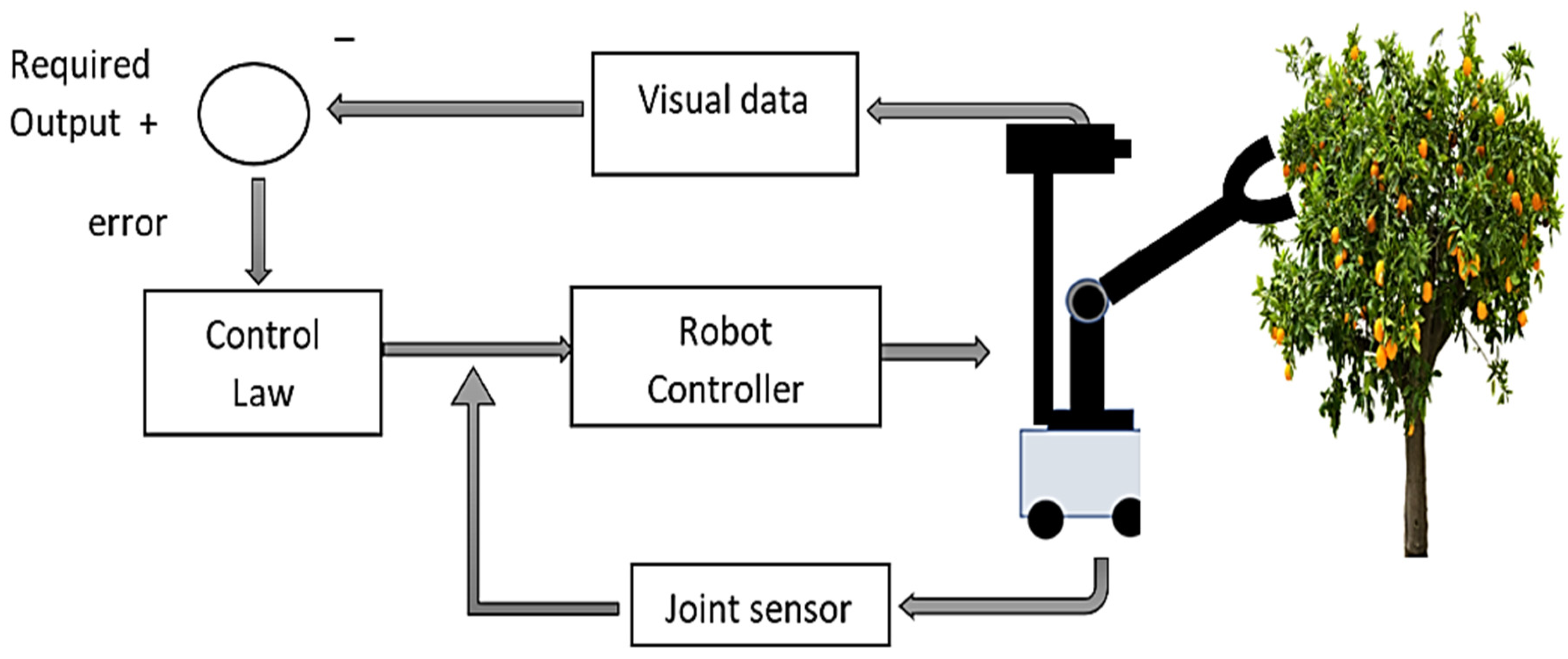

3.5. Visual Servoing

3.6. Experimental Setup and Testing in Laboratory before Real-Time Evaluation

4. Results and Discussion

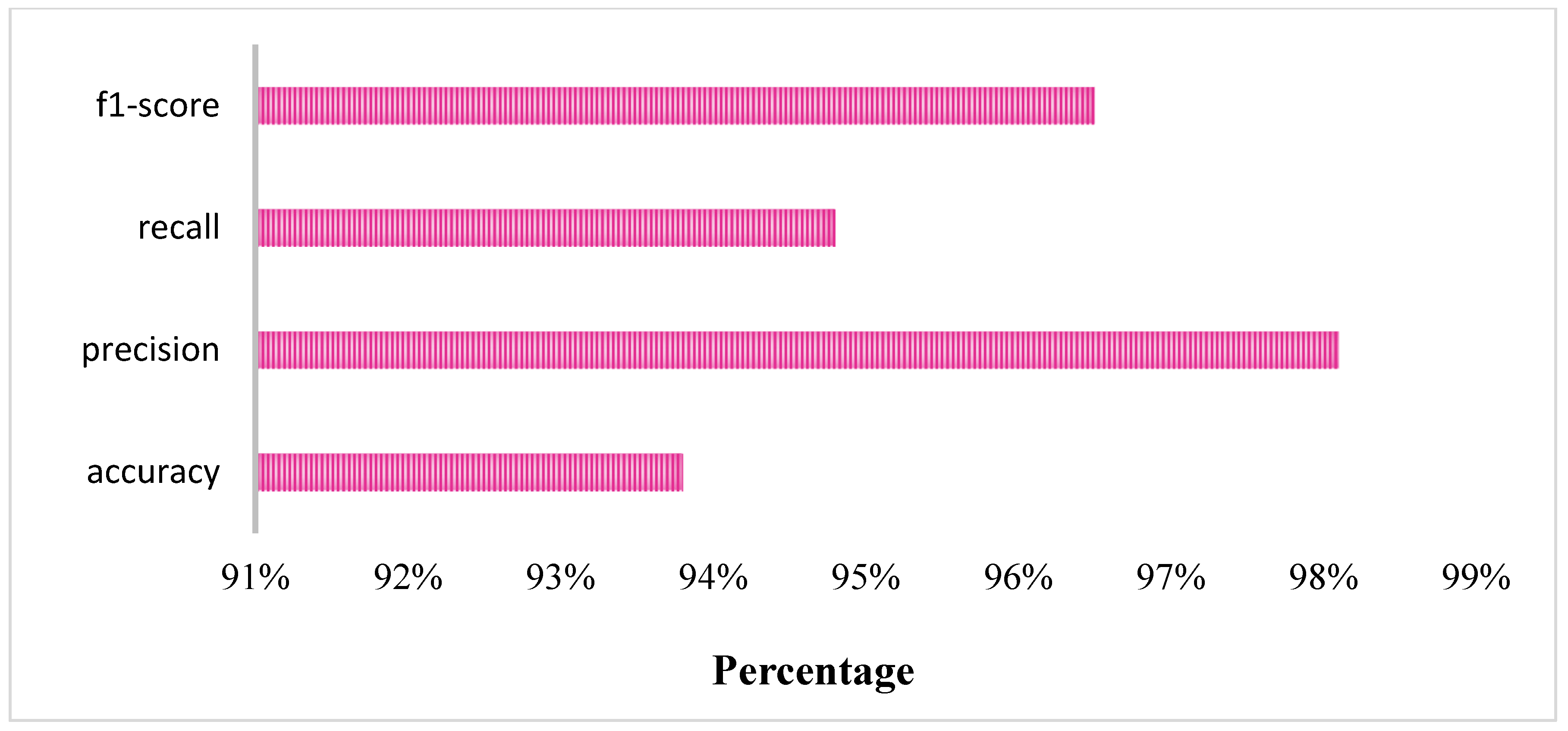

4.1. Fruit Detection in Real-Time Testing

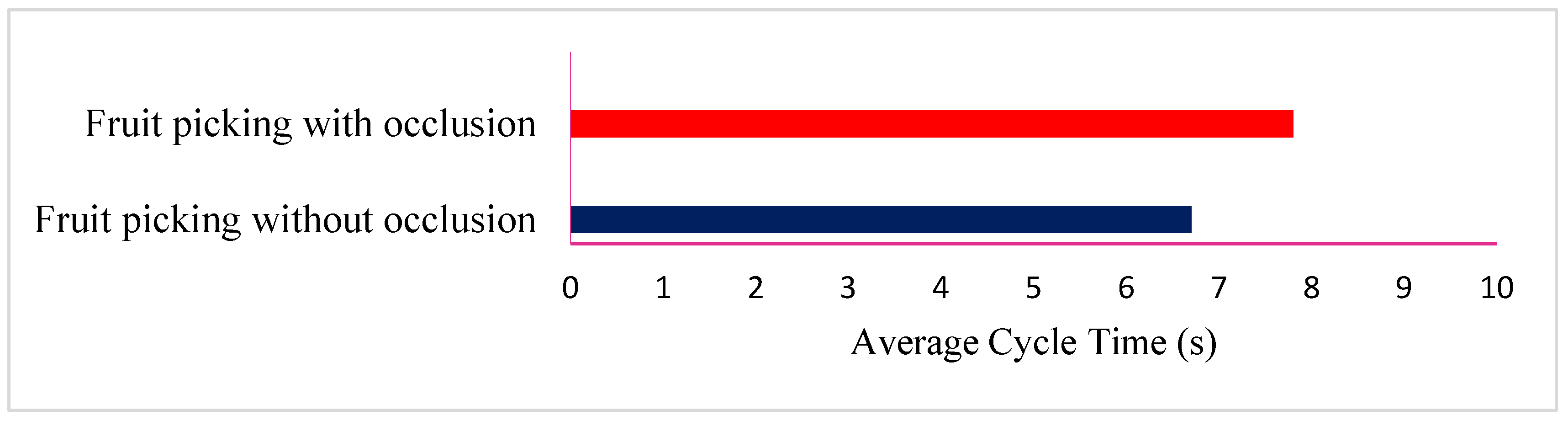

4.2. Cycle Time for Fruit Picking in Real-Time Testing

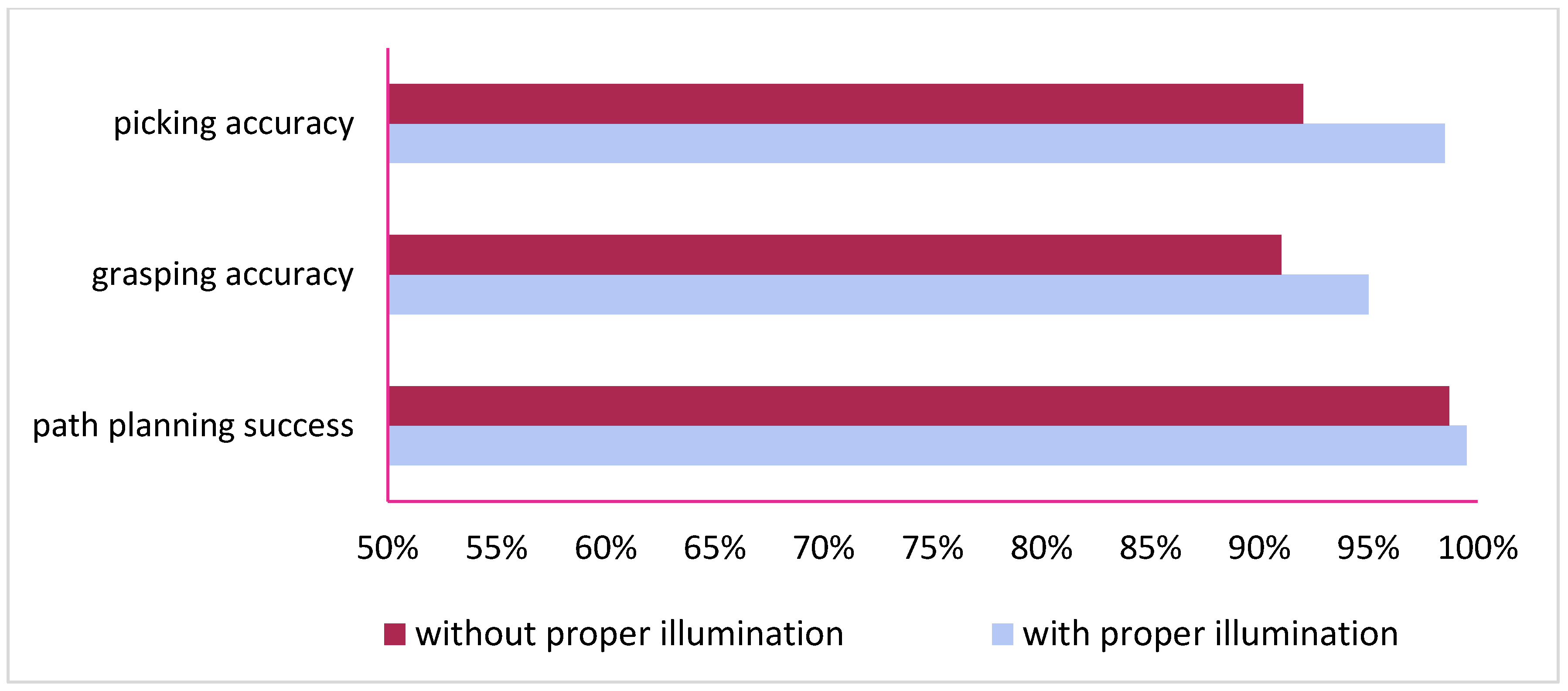

4.3. Success Rate in Real-Time Testing

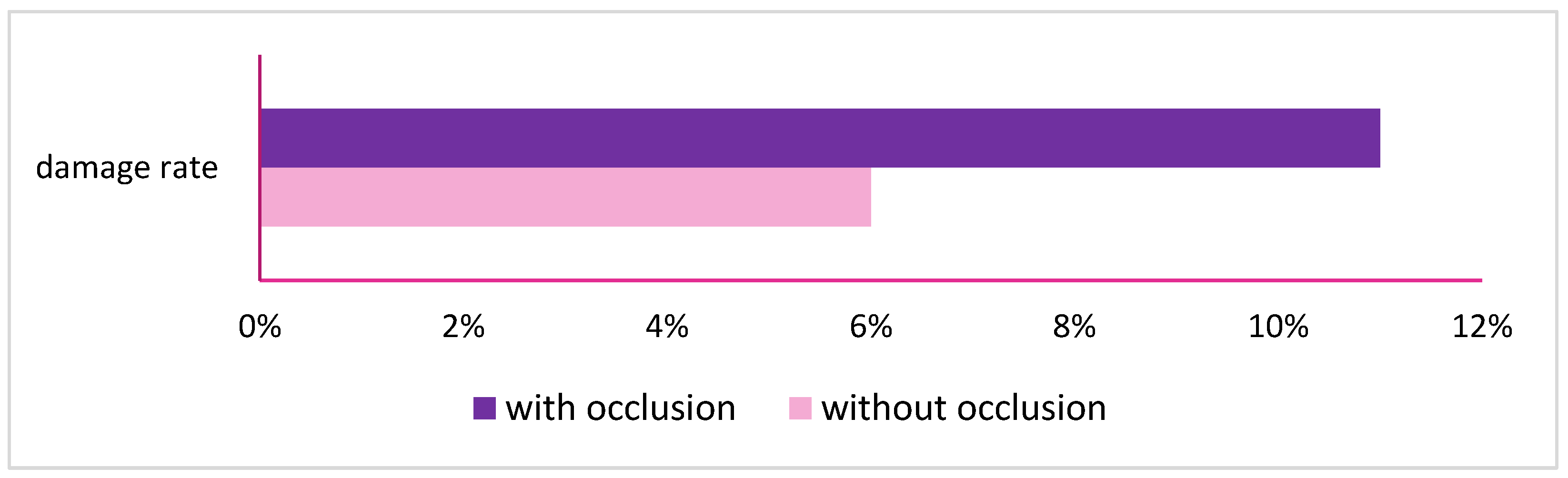

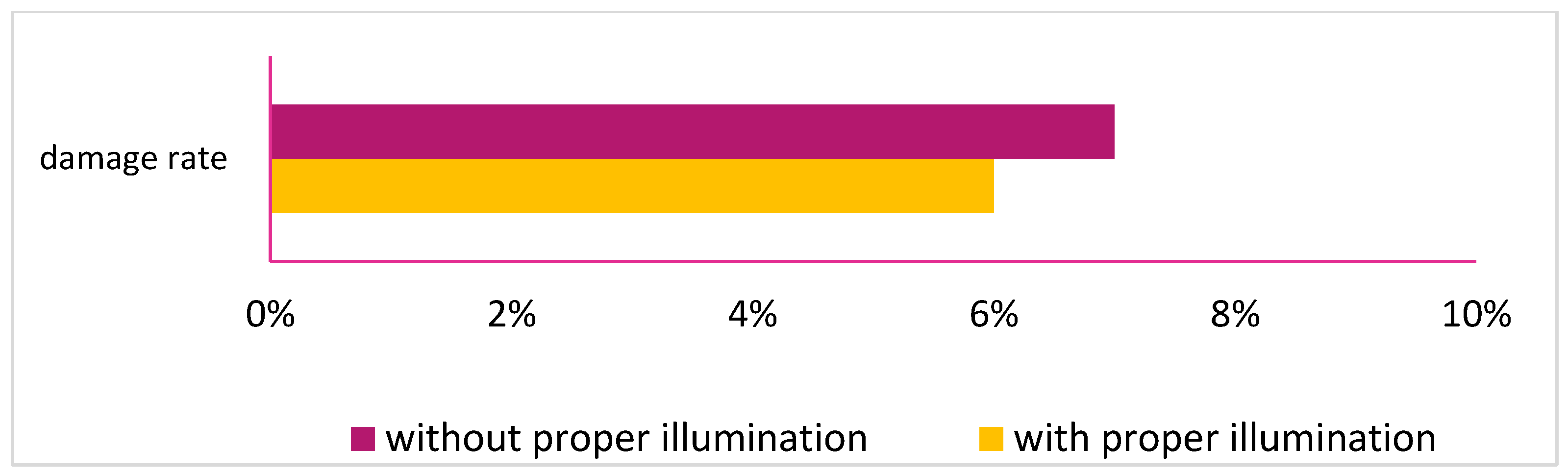

4.4. Damage Rate in Real-Time Testing

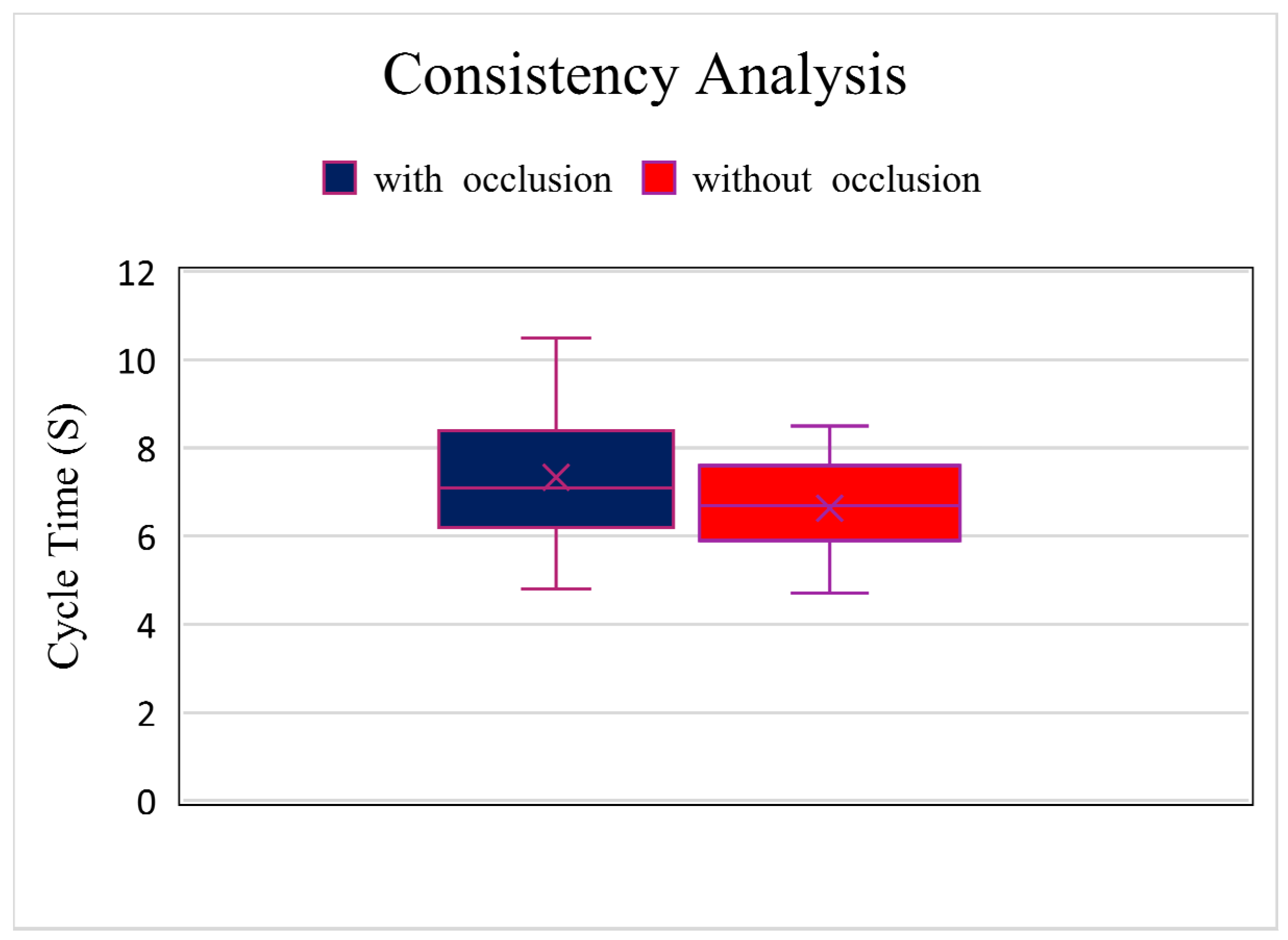

4.5. Consistency of Fruit Pick-Up in Real-Time Testing

4.6. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Kootstra, G.; Wang, X.; Blok, P.M.; Hemming, J.; Henten, E.v. Selective Harvesting Robotics: Current Research, Trends, and Future Directions. Curr. Robot. Rep. 2021, 2, 95–104.

- Wu, X.; Bai, J.; Hao, F.; Cheng, G.; Tang, Y.; Li, X. Field Complete Coverage Path Planning Based on Improved Genetic Algorithm for Transplanting Robot. Machines 2023, 11, 659.

- Zeeshan, S.; Aized, T. Performance Analysis of Path Planning Algorithms for Fruit Harvesting Robot. J. Biosyst. Eng. 2023, 48, 178–197.

- Zhang, Q.; Liu, F.; Li, B. A heuristic tomato-bunch harvest manipulator path planning method based on a 3D-CNN-based position posture map and rapidly-exploring random tree. Comput. Electron. Agric. 2023, 213, 108183.

- Wang, Y.; Liu, D.; Zhao, H.; Li, Y.; Song, W.; Liu, M.; Tian, L.; Yan, X. Rapid citrus harvesting motion planning with pre-harvesting point and quad-tree. Comput. Electron. Agric. 2022, 202, 107348.

- Lehnert, C.; McCool, C.; Sa, I.; Perez, T. Performance improvements of a sweet pepper harvesting robot in protected cropping environments. J. Field Robot. 2020, 37, 1197–1223.

- Wei, K.; Ren, B. A Method on Dynamic Path Planning for Robotic Manipulator Autonomous Obstacle Avoidance Based on an Improved RRT Algorithm. Sensors 2018, 18, 571.

- Wan, S.; Goudos, S. Faster R-CNN for multi-class fruit detection using a robotic vision system. Comput. Netw. 2020, 168, 107036.

- Zhang, H.; Tang, C.; Sun, X.; Fu, L. A Refined Apple Binocular Positioning Method with Segmentation-Based Deep Learning for Robotic Picking. Agronomy 2023, 13, 1469.

- Gao, M.; Ma, S.; Zhang, Y.; Xue, Y. Detection and counting of overlapped apples based on convolutional neural networks. J. Intell. Fuzzy Syst. 2023, 44, 2019–2029.

- Liu, S.; Zhai, B.; Zhang, J.; Yang, L.; Wang, J.; Huang, K.; Liu, M. Tomato detection based on convolutional neural network for robotic application. J. Food Process Eng. 2022, 46, e14239.

- Momeny, M.; Jahanbakhshi, A.; Jafarnezhad, K.; Zhang, Y.-D. Accurate classification of cherry fruit using deep CNN based on hybrid pooling approach. Postharvest Biol. Technol. 2020, 166, 111204.

- Williams, H.A.; Jones, M.H.; Nejati, M.; Seabright, M.J.; Bell, J.; Penhall, N.D.; Barnett, J.J.; Duke, M.D.; Scarfe, A.J.; Ahn, H.S.; et al. Robotic kiwifruit harvesting using machine vision, convolutional neural networks, and robotic arms. Biosyst. Eng. 2019, 181, 140–156.

- Zeeshan, S.; Aized, T.; Riaz, F. The Design and Evaluation of an Orange-Fruit Detection Model in a Dynamic Environment Using a Convolutional Neural Network. Sustainability 2023, 15, 4329.

- Yin, W.; Wen, H.; Ning, Z.; Ye, J.; Dong, Z.; Luo, L. Fruit Detection and Pose Estimation for Grape Cluster–Harvesting Robot Using Binocular Imagery Based on Deep Neural Networks. Front. Robot. AI 2021, 8, 626989.

- Liu, Y.-P.; Yang, C.-H.; Ling, H.; Mabu, S.; Kuremoto, T. A Visual System of Citrus Picking Robot Using Convolutional Neural Networks. In Proceedings of the 5th International Conference on Systems and Informatics (ICSAI), Nanjing, China, 10–12 November 2018.

- Park, Y.; Seol, J.; Pak, J.; Jo, Y.; Kim, C.; Son, H.I. Human-centered approach for an efficient cucumber harvesting robot system: Harvest ordering, visual servoing, and end-effector. Comput. Electron. Agric. 2023, 212, 108116.

- Li, Y.-R.; Lien, W.-Y.; Huang, Z.-H.; Chen, C.-T. Hybrid Visual Servo Control of a Robotic Manipulator for Cherry Tomato Harvesting. Actuators 2023, 12, 253.

- Shi, Y.; Jin, S.; Zhao, Y.; Huo, Y.; Liu, L.; Cui, Y. Lightweight force-sensing tomato picking robotic arm with a “global-local” visual servo. Comput. Electron. Agric. 2023, 204, 107549.

- Liu, J.; Liang, J.; Zhao, S.; Jiang, Y.; Wang, J.; Jin, Y. Design of a Virtual Multi-Interaction Operation System for Hand–Eye Coordination of Grape Harvesting Robots. Agronomy 2023, 13, 829.

- Xiong, Y.; Ge, Y.; Grimstad, L.; From, P.J. An autonomous strawberry-harvesting robot: Design, development, integration, and field evaluation. J. Field Robot. 2019, 37, 202–224.

- Wang, X.; Kang, H.; Zhou, H.; Au, W.; Wang, M.Y.; Chen, C. Development and evaluation of a robust soft robotic gripper for apple harvesting. Comput. Electron. Agric. 2023, 204, 107552.

- Yin, H.; Sun, Q.; Ren, X.; Guo, J.; Yang, Y.; Wei, Y.; Huang, B.; Chai, X.; Zhong, M. Development, integration, and field evaluation of an autonomous citrus-harvesting robot. J. Field Robot. 2023, 40, 1363–1387.

- Williams, H.; Ting, C.; Nejati, M.; Jones, M.H.; Penhall, N.; Lim, J.; Seabright, M.; Bell, J.; Ahn, H.S.; Scarfe, A.; et al. Improvements to and large-scale evaluation of a robotic kiwifruit harvester. J. Field Robot. 2019, 37, 187–201.

- Lili, W.; Bo, Z.; Jinwei, F.; Xiaoan, H.; Shu, W.; Yashuo, L.; Zhou, Q.; Chongfeng, W. Development of a tomato harvesting robot used in greenhouse. Int. J. Agric. Biol. Eng. 2017, 10, 140–149.

- Yoshida, T.; Fukao, T.; Hasegawa, T. Fast Detection of Tomato Peduncle Using Point Cloud with a Harvesting Robot. J. Robot. Mechatron. 2018, 30, 180–186.

- Li, T.; Feng, Q.; Qiu, Q.; Xie, F.; Zhao, C. Occluded Apple Fruit Detection and Localization with a Frustum-Based Point-Cloud-Processing Approach for Robotic Harvesting. Remote Sens. 2022, 14, 482.

- Mirhaji, H.; Soleymani, M.; Asakereh, A.; Mehdizadeh, S.A. Fruit detection and load estimation of an orange orchard using the YOLO models through simple approaches in different imaging and illumination conditions. Comput. Electron. Agric. 2021, 191, 106533.

- Kang, H.; Zhou, H.; Wang, X.; Chen, C. Real-Time Fruit Recognition and Grasping Estimation for Robotic Apple Harvesting. Sensors 2020, 20, 5670.

- Wang, Z.; Ling, Y.; Wang, X.; Meng, D.; Nie, L.; An, G.; Wang, X. An improved Faster R-CNN model for multi-object tomato maturity detection in complex scenarios. Ecol. Inform. 2022, 72, 101886.

- Sekharamantry, P.K.; Melgani, F.; Malacarne, J. Deep Learning-Based Apple Detection with Attention Module and Improved Loss Function in YOLO. Remote Sens. 2023, 15, 1516.

- Huang, L.; Chen, Y.; Wang, J.; Cheng, Z.; Tao, L.; Zhou, H.; Xu, J.; Yao, M.; Liu, M.; Chen, T. Online identification and classification of Gannan navel oranges with Cu contamination by LIBS with IGA-optimized SVM. Anal. Methods 2023, 15, 738–745.

- Hu, T.; Wang, W.; Gu, J.; Xia, Z.; Zhang, J.; Wan, B. Research on Apple Object Detection and Localization Method Based on Improved YOLOX and RGB-D Images. Agronomy 2023, 13, 1816.

- Farisqi, B.A.; Prahara, A. Guava Fruit Detection and Classification Using Mask Region-Based Convolutional Neural Network. Bul. Ilm. Sarj. Tek. Elektro 2022, 4, 186–193.

- Xie, J.; Peng, J.; Wang, J.; Chen, B.; Jing, T.; Sun, D.; Gao, P.; Wang, W.; Lu, J.; Yetan, R.; et al. Litchi Detection in a Complex Natural Environment Using the YOLOv5-Litchi Model. Agronomy 2022, 12, 3054.

- Yuan, T.; Lv, L.; Zhang, F.; Fu, J.; Gao, J.; Zhang, J.; Li, W.; Zhang, C. Robust Cherry Tomatoes Detection Algorithm in Greenhouse Scene Based on SSD. Agriculture 2020, 10, 160.

- Coll-Ribes, G.; Torres-Rodríguez, I.J.; Grau, A.; Guerra, E.; Sanfeliu, A. Accurate detection and depth estimation of table grapes and peduncles for robot harvesting, combining monocular depth estimation and CNN methods. Comput. Electron. Agric. 2023, 215, 108362.

- Qin, Z.; Xiaoliang, Y.; Bin, L.; Xianping, J.; Zheng, X.; Can, X.U. Motion planning of picking manipulator based CTB_RRT* algorithm. Trans. Chin. Soc. Agric. Mach. 2021, 52, 129–136.

- Lin, G.; Zhu, L.; Li, J.; Zou, X.; Tang, Y. Collision-free path planning for guava-harvesting robot based on recurrent deep reinforcement learning. Comput. Electron. Agric. Eng. 2017, 33, 55–62.

- Kothiyal, S. Perception Based UAV Path Planning for Fruit Harvesting; Johns Hopkins University: Baltimore, MD, USA, 2021.

- Wecheng, W.; Gege, Z.; Xinlin, C.; Weixian, W. Research on path planning of orchard spraying robot based on improved RRT algorithm. In Proceedings of the 2nd International Conference on Big Data and Artificial Intelligence, Manchester, UK, 15–17 October 2020.

- Kurtser, P.; Edan, Y. Planning the sequence of tasks for harvesting robots. Robot. Auton. Syst. 2020, 131, 103591.

- Liu, Y.; Qingyong, Z.; Yu, L. Picking robot path planning based on improved ant colony algorithm. In Proceedings of the 34th Youth Academic Annual Conference of Chinese Association of Automation, Jinzhou, China, 6–8 June 2019.

- Sarabu, H.; Ahlin, K.; Hu, A.-P. Graph-based cooperative robot path planning in agricultural environments. In Proceedings of the International Conference on Advanced Intelligent Mechatronics, Hong Kong, China, 8–12 July 2019.

- Wang, L.; Wu, Q.; Lin, F.; Li, S.; Chen, D. A new trajectory-planning beetle swarm optimization algorithm for trajectory planning of robotic manipulators. IEEE Access 2019, 7, 154332–154645.

- Magalhaes, S.A.; Santos, F.N.D.; Martins, R.; Rocha, L.; Brito, J. Path planning algorithms benchmarking for grapevines pruning and monitoring. Prog. Artif. Intell. 2019, 11805, 295–306.

- Zhang, K.; Lammers, K.; Chu, P.; Li, Z.; Lu, R. An automated apple harvesting robot—From system design to field evaluation. J. Field Robot. 2023, 40, 1–17.

- Hu, X.; Yu, H.; Lv, S.; Wu, J. Design and experiment of a new citrus harvesting robot. In Proceedings of the 2021 International Conference on Control Science and Electric Power Systems (CSEPS), Shanghai, China, 28–30 May 2021.

| Reference | Fruit | Year | Classification Algorithm | Results |
|---|---|---|---|---|
| Takeshi Yoshida [26] | tomatoes | 2018 | SVM | Success rate: 95% |
| Tao Li [27] | apples | 2022 | CNN | Errors reduced by 59% |
| Hamzeh Mirhaji [28] | oranges | 2021 | YOLO | Accuracy: 90.8%; Precision: 91.23%; Recall: 92.8% |
| Hanwen Kang [29] | apples | 2020 | DasNet v-1 | Accuracy: 90%; Recall: 82.6%; F1 score: 0.85 |
| Zan Wang [30] | tomatoes | 2022 | CNN | mAP: 96.14% |
| P.K. Sekharamantry [31] | apples | 2023 | YOLO | Precision: 87%; F1 score: 0.98 |
| Lin Huang [32] | oranges | 2023 | IGA-SVM | Accuracy: 98% |
| T. Hu [33] | apples | 2023 | YOLO | mAP: 94%; F1 score: 0.93 |
| Bayu Alif Farisqi [34] | guava | 2022 | CNN | mAP: 88%; F1 score: 0.89 |
| Jiaxing Xie [35] | litchi | 2022 | YOLO | Precision: 87.1% |
| T. Yuan [36] | tomatoes | 2020 | SSD | Precision: 98.85% |
| G. Coll-Ribes [37] | grapes | 2023 | CNN | mAP: 94.9% |
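The table above mixes precision, recall, and F1 score, which makes rows hard to compare directly. The sketch below shows the standard relationship between these metrics; the helper names (`precision_recall`, `f1_score`) and the counts in the usage note are hypothetical and do not come from any of the cited studies.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from detection counts.

    tp: correctly detected fruit, fp: false detections, fn: missed fruit.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both given as fractions)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

For instance, `precision_recall(90, 10, 30)` describes a detector that finds 90 of 120 fruit with 10 false alarms. Applying `f1_score(0.9123, 0.928)` to the precision and recall reported in the Mirhaji row gives an implied F1 score of about 0.92, a value that the table itself does not report.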

| Author | Year | Fruit | Algorithm | Salient Features |
|---|---|---|---|---|
| Z. Qin [38] | 2021 | grapes | RRT* | Rewiring, optimized path |
| G. Lin [39] | 2021 | guava | DDPG | Versatile, efficient |
| S. Kothiyal [40] | 2021 | orange | DDPG | Sample efficiency, off-policy algorithm |
| W. Wencheng [41] | 2021 | grapes | RRT | Easy implementation, non-optimal |
| A. Zahid [42] | 2020 | apple | RRT | Random samples, suitable for real-time |
| C. Lehnert [6] | 2020 | sweet pepper | RRT* | Efficient, optimal path |
| P. Kurtser [43] | 2020 | sweet pepper | RRT | Suitable for real-time, non-optimal |
| P. Kurtser [43] | 2020 | sweet pepper | GA | Optimal path, flexible, adaptive |
| Y. Liu [23] | 2019 | tomato | ACO | Optimal path, robust |
| H. Sarabu [44] | 2019 | apple | RRT | Efficient, suitable for real-time |
| L. Wang [45] | 2019 | apple | BSO | Global optimization, robust |
| Magalhães [46] | 2019 | grapes | BiT RRT | Efficient, complex implementation |

| Hyperparameter | Value |
|---|---|
| Batch size | 64 |
| Neurons per layer | 128 |
| Number of hidden layers | 4 |
| Optimization method | RMSprop |
| Loss function | Cross-entropy |
| Activation function | ReLU |
| Filter kernel size | 3 × 3 |
| Number of filters | 64 |
| Number of epochs | 100 |
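The hyperparameters listed above can be collected into a single configuration object so that training scripts read them from one place. The sketch below is only an illustration: the class name `CNNConfig`, the helper functions, the RGB input assumption, and the dataset size in the usage note are hypothetical, and the layer arithmetic is the standard formula for a convolution with bias rather than a description of the authors' exact network.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CNNConfig:
    """Training hyperparameters as listed in the table (names are assumed)."""
    batch_size: int = 64
    neurons_per_layer: int = 128
    hidden_layers: int = 4
    optimizer: str = "RMSprop"
    loss: str = "cross-entropy"
    activation: str = "ReLU"
    kernel_size: tuple = (3, 3)
    num_filters: int = 64
    epochs: int = 100


def conv_params(cfg, in_channels):
    """Trainable weights in one convolutional layer, including biases."""
    kh, kw = cfg.kernel_size
    return (kh * kw * in_channels + 1) * cfg.num_filters


def steps_per_epoch(cfg, num_images):
    """Number of mini-batches needed to see every image once."""
    return math.ceil(num_images / cfg.batch_size)
```

With the defaults, `conv_params(CNNConfig(), in_channels=3)` counts the weights of a first 3 × 3 layer on RGB input, and `steps_per_epoch(CNNConfig(), 1000)` gives the batches per epoch for a hypothetical 1000-image training set.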

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zeeshan, S.; Aized, T.; Riaz, F. In-Depth Evaluation of Automated Fruit Harvesting in Unstructured Environment for Improved Robot Design. Machines 2024, 12, 151. https://doi.org/10.3390/machines12030151