A Review of Time-Series Forecasting Algorithms for Industrial Manufacturing Systems

Abstract

1. Introduction

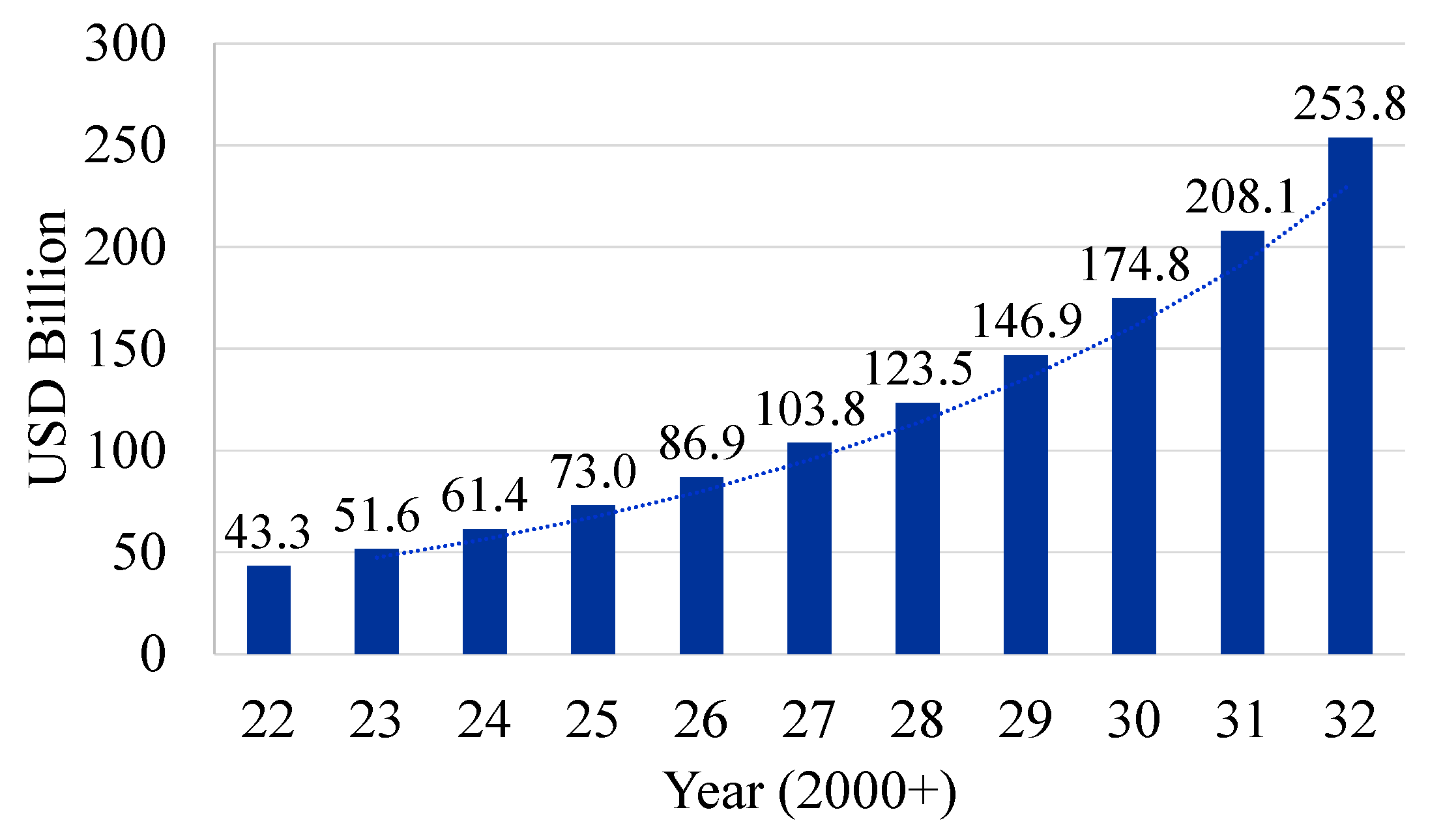

1.1. Significance of Time-Series Forecasting in Industrial Manufacturing Systems

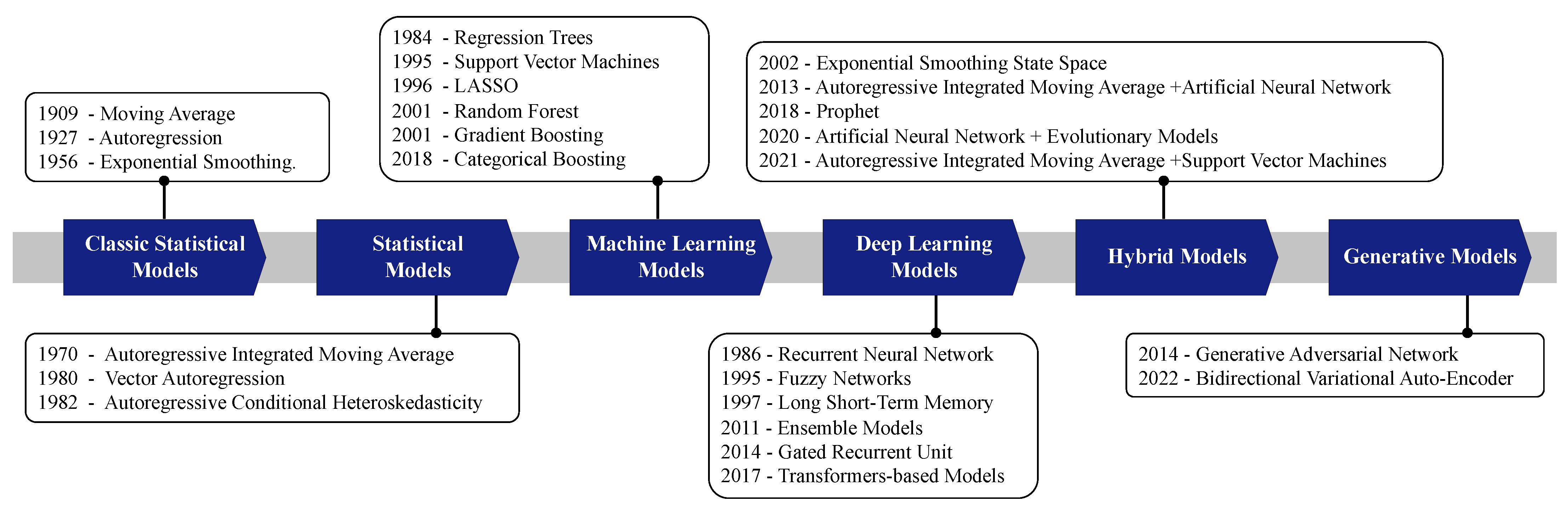

1.2. Evolution of Time-Series Forecasting Algorithms

| Category | Method | Year | Origin Paper | Application Paper |
|---|---|---|---|---|
| Classical Statistical Methods (Before 1970) | MA | 1909 | [11] | [12,13,14,15] |
| | AR | 1927 | [16] | [12,17,18] |
| | ES | 1956 | [19] | [20,21,22,23] |
| Classical Statistical Methods (1970s to 2000s) | ARIMA | 1970 | [24] | [12,25,26,27,28,29,30,31,32,33,34,35] |
| | Seasonal Autoregressive Integrated Moving Average (SARIMA) | 1970 | [24] | [25,36,37,38,39,40] |
| | Vector Autoregression (VAR) | 1980 | [41] | [42] |
| | Autoregressive Conditional Heteroskedasticity | 1982 | [35] | [43] |
| | Generalized AutoRegressive Conditional Heteroskedasticity (GARCH) | 1986 | [44] | [45] |
| | State-Space Models | 1994 | [46] | [47] |
| Machine Learning Methods (1960s to 2010s) | Decision Trees | 1963 | [48] | [49] |
| | Regression Trees | 1984 | [50] | [49,51] |
| | SVM | 1995 | [52] | [53,54,55,56,57,58,59] |
| | LASSO Regression | 1996 | [60] | [61,62,63] |
| | k-Nearest Neighbors (KNN) | Various | [64] | [6,65,66,67] |
| | Random Forests | 2001 | [68] | [59,69,70,71,72] |
| | Gradient Boosting Machines | 2001 | [73] | [74,75,76,77,78] |
| | Categorical Boosting | 2018 | [79] | [80] |
| | Prophet | 2018 | [81] | [82,83,84,85,86] |
| Deep Learning Methods (1950s to 2010s) | ANN | 1958 | [87] | [12,34,88,89,90,91,92] |
| | Recurrent Neural Network (RNN) | 1986 | [93] | [94,95,96] |
| | Bayesian Models | 1995 | [97] | [98] |
| | Fuzzy Networks | 1995 | [99] | [100,101,102,103,104] |
| | Long Short-Term Memory (LSTM) | 1997 | [105] | [84,106,107] |
| | Ensemble Models | 2011 | [108] | [109] |
| | Gated Recurrent Unit (GRU) | 2014 | [110] | [111] |
| | Transformer-based Models | 2017 | [112] | [113] |
| Hybrid Models (2000s to 2020s) | Exponential Smoothing State-Space Model (ETS) | 2002 | [114] | [114,115,116] |
| | ARIMA + ANN | 2013 | [29] | [29,90,117,118] |
| | ANN + Evolutionary models | 2020 | [119] | [119,120,121,122] |
| | ARIMA + SVM | 2021 | [123] | [123,124,125] |
| Generative Models (2010s to 2020s) | GAN | 2014 | [126] | [127,128] |
| | Bidirectional Variational Auto-Encoder | 2022 | [129] | [130,131] |

1.3. Objectives of the Review

- To provide a comprehensive review of the classical statistical, ML, DL, and hybrid models used in manufacturing systems.

- To conduct a comparative study encompassing both the qualitative and quantitative dimensions of these methods.

- To explore the different hybrid model combinations, evaluating their effectiveness and performance.

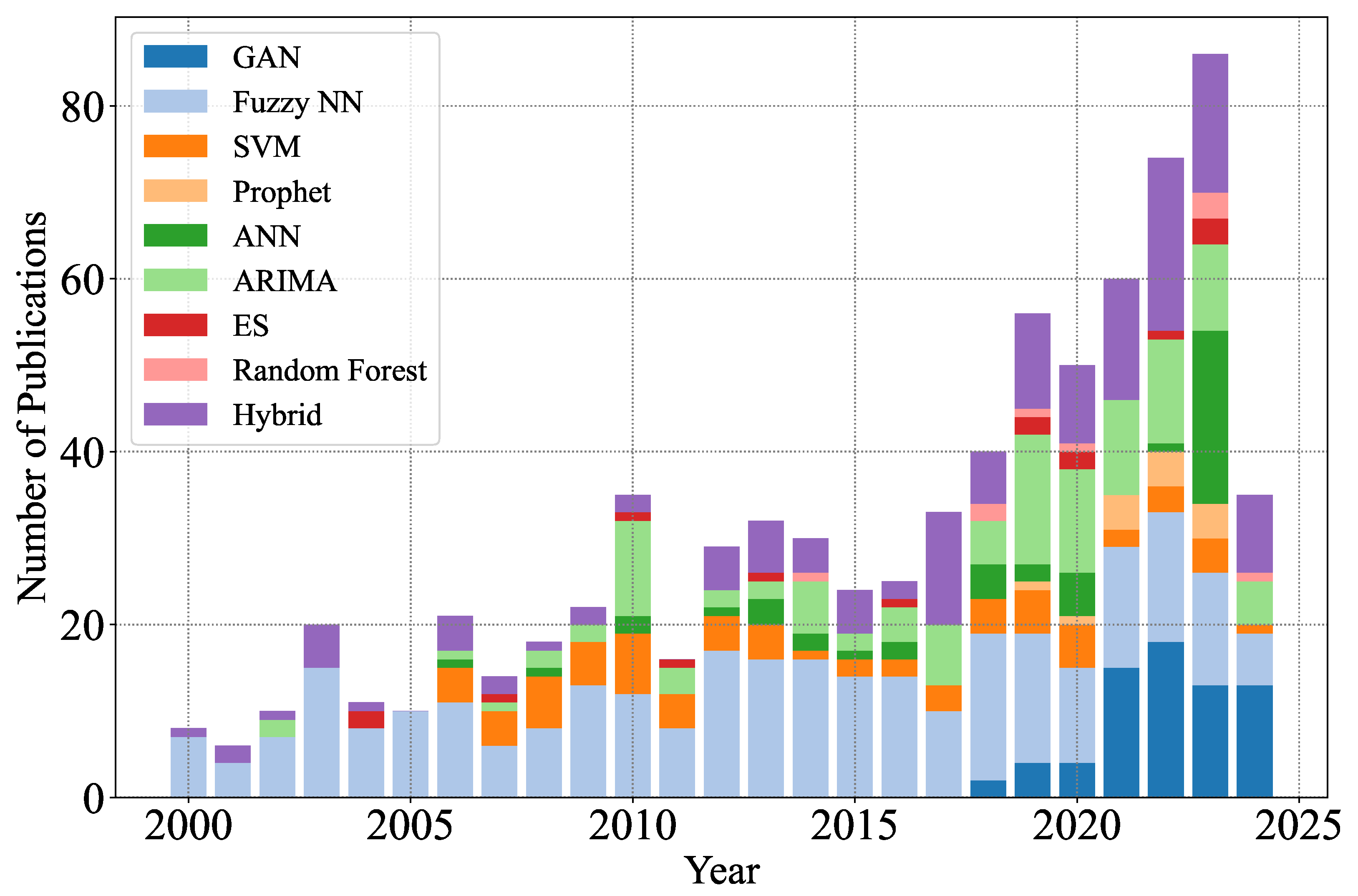

1.4. Summary of Reviewed Papers

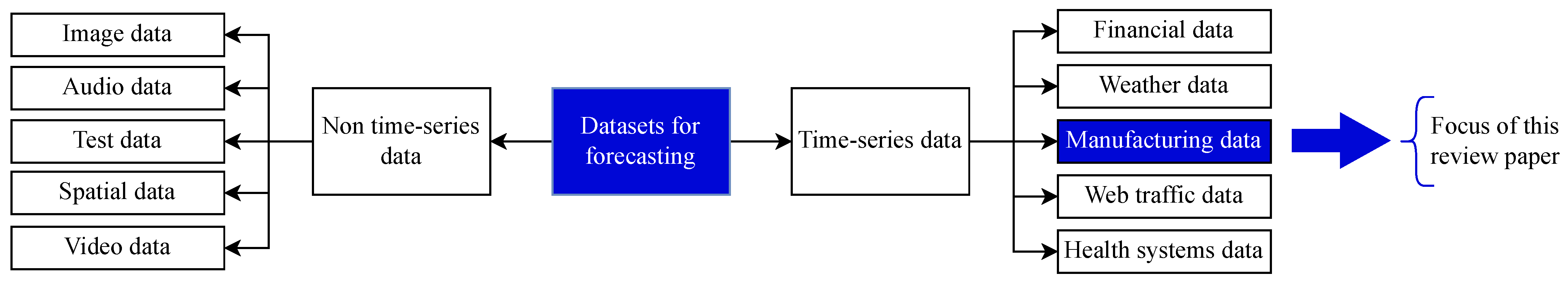

2. Time-Series Data

- Missing data: It is common to encounter gaps in time-series data due to issues such as sensor malfunctions or data collection errors. Common strategies to address this include imputing the missing values or omitting the affected records [136].

- Outliers: Time-series data can sometimes contain anomalies or outliers. To handle these, one can either remove them using robust statistical methods or incorporate them into the model [137].

- Irregular intervals: Data observed at inconsistent intervals are termed data streams when the volume is large or are simply termed unevenly spaced time-series. Models must account for this irregularity [132].
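The three issues above can be handled in many ways; what follows is a minimal sketch in plain Python, not a method prescribed by the cited works. The function names are hypothetical, and the specific choices (linear interpolation for gaps, a median/MAD modified z-score with a cutoff of 3.5 for outliers, linear interpolation onto a regular grid for uneven sampling) are illustrative assumptions.

```python
from statistics import median


def interpolate_missing(values):
    """Fill None gaps by linear interpolation; edge gaps copy the nearest value."""
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled[i] = values[right]
        elif right is None:
            filled[i] = values[left]
        else:
            w = (i - left) / (right - left)
            filled[i] = values[left] + w * (values[right] - values[left])
    return filled


def flag_outliers(values, threshold=3.5):
    """Flag points whose modified z-score (median and MAD based) exceeds threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [False] * len(values)
    return [abs(0.6745 * (v - med) / mad) > threshold for v in values]


def resample_regular(times, values, step):
    """Linearly interpolate irregular samples onto a regular time grid.

    Assumes at least two samples and strictly increasing `times`.
    """
    grid = []
    t = times[0]
    while t <= times[-1]:
        grid.append(t)
        t += step
    out = []
    j = 0
    for g in grid:
        # Advance to the segment [times[j], times[j + 1]] that contains g.
        while j < len(times) - 2 and times[j + 1] < g:
            j += 1
        w = (g - times[j]) / (times[j + 1] - times[j])
        out.append(values[j] + w * (values[j + 1] - values[j]))
    return grid, out
```

Whether a flagged point should be dropped, replaced, or kept as a model input depends on the application; the sketch only identifies candidates.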

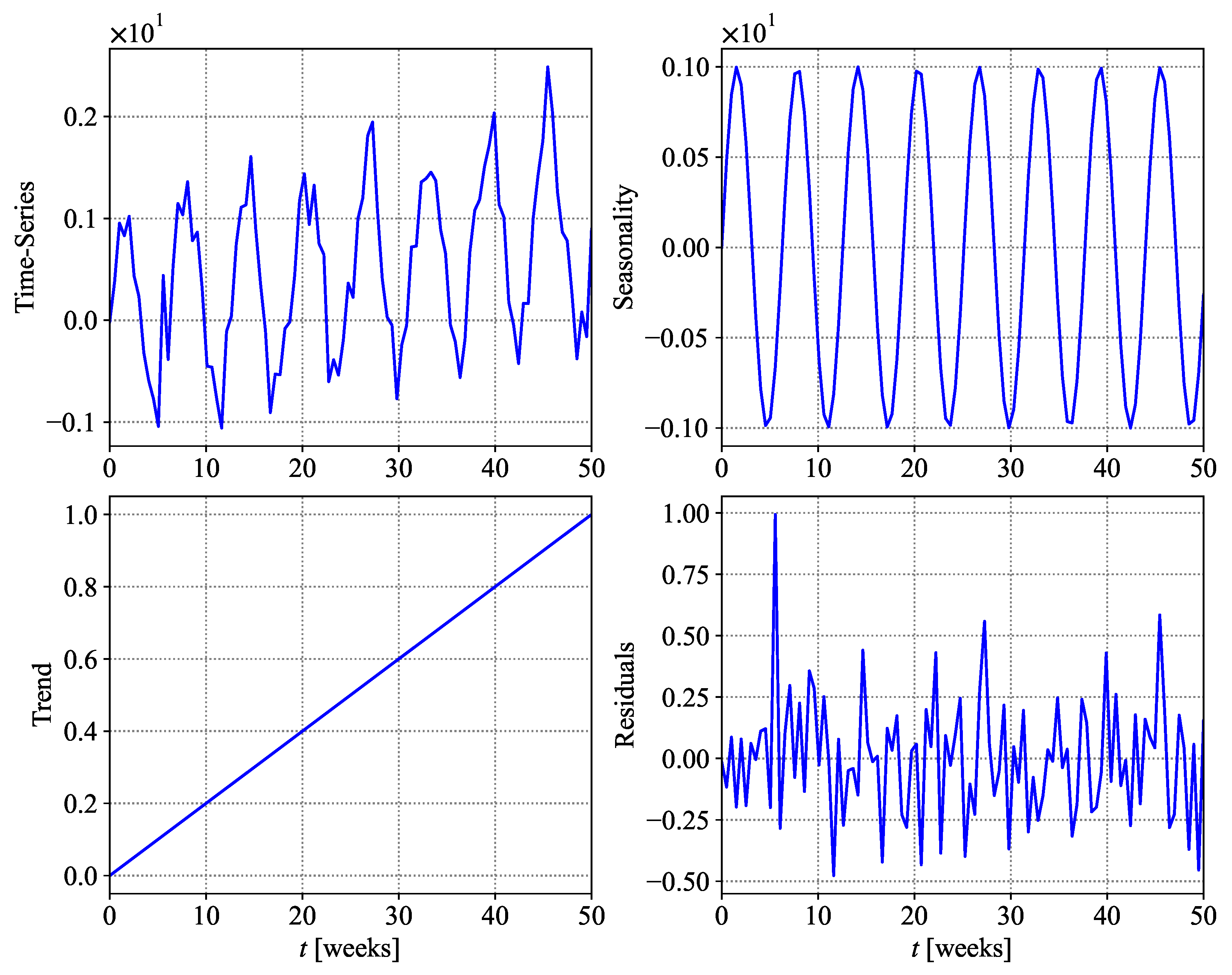

- Trend: This represents the direction in which the data move over time, excluding seasonal effects and irregularities. Trends can be linear, exponential, or parabolic.

- Seasonality: Patterns that recur at regular intervals fall under this component. Weather patterns, economic cycles, or holidays can induce seasonality.

- Residuals: After accounting for trend and seasonality, residuals remain. When these residuals are significant, they can overshadow the trend and seasonality. If the cause of these fluctuations can be identified, they can potentially signal upcoming changes in the trend.
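As a rough illustration of how the three components can be separated, the sketch below performs a classical additive decomposition (series = trend + seasonality + residual) with a centered moving average. It assumes the seasonal period is known in advance and that the components add rather than multiply; the function names are hypothetical.

```python
def moving_average_trend(series, period):
    """Centered moving average; None where the window does not fit."""
    n = len(series)
    half = period // 2
    trend = [None] * n
    for t in range(half, n - half):
        window = series[t - half:t + half + 1]
        if period % 2:
            # Odd period: plain average over `period` points.
            trend[t] = sum(window) / period
        else:
            # Even period: half weights on both ends (a 2 x period average).
            inner = sum(window[1:-1])
            trend[t] = (0.5 * window[0] + inner + 0.5 * window[-1]) / period
    return trend


def decompose_additive(series, period):
    """Split a series into trend, seasonal, and residual lists."""
    trend = moving_average_trend(series, period)
    detrended = [y - tr if tr is not None else None
                 for y, tr in zip(series, trend)]
    # Average the detrended values at each position within the period.
    means = []
    for phase in range(period):
        vals = [d for i, d in enumerate(detrended)
                if d is not None and i % period == phase]
        means.append(sum(vals) / len(vals))
    # Center the seasonal effects so they sum to zero over one period.
    offset = sum(means) / period
    means = [m - offset for m in means]
    seasonal = [means[i % period] for i in range(len(series))]
    residual = [y - tr - s if tr is not None else None
                for y, tr, s in zip(series, trend, seasonal)]
    return trend, seasonal, residual
```

For a synthetic series built as a straight line plus a repeating four-step pattern, the residuals returned by this sketch are zero wherever the trend is defined; on real data, large residuals are the ones worth inspecting.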

2.1. Mathematical Representation

- Univariate time-series: Let $y_{t-L+1:t} = (y_{t-L+1}, \dots, y_t)$ be a univariate time-series. It has $L$ historical values, and $y_{t-k}$ are the values of $y$ for time $t-k$, where $k = 0, \dots, L-1$. The output of forecasting models is an estimated value of $y_{t+1}$, often shown by $\hat{y}_{t+1}$. The objective function is to minimize the error $y_{t+1} - \hat{y}_{t+1}$.

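- Multivariate time-series: The multivariate time-series can be represented in a matrix form as:

  $$Y = \begin{bmatrix} y^{(1)}_{t-L+1} & \cdots & y^{(1)}_{t} \\ \vdots & \ddots & \vdots \\ y^{(M)}_{t-L+1} & \cdots & y^{(M)}_{t} \end{bmatrix}$$

  where $y^{(j)}_{t-k}$ is time-series data for variable $j = 1, \dots, M$ at time $t-k$. Meanwhile, $k = 0, \dots, L-1$ for historical data, and $k = -1, \dots, -h$ represents the $h$ future values.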
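In practice, the representation above is usually turned into supervised training pairs by sliding a window over the series: each input holds L historical values and each target holds the h values that follow. The sketch below shows one way to do this for the univariate case, together with a naive persistence forecast and a mean absolute error to make the error objective concrete. The function names and the choice of persistence as a baseline are assumptions for illustration.

```python
def make_windows(series, history, horizon=1):
    """Return (inputs, targets) built with a sliding window.

    Each input holds `history` consecutive past values; each target holds
    the `horizon` values that immediately follow that input.
    """
    if history < 1 or horizon < 1:
        raise ValueError("history and horizon must be positive")
    inputs, targets = [], []
    for end in range(history, len(series) - horizon + 1):
        inputs.append(series[end - history:end])
        targets.append(series[end:end + horizon])
    return inputs, targets


def persistence_forecast(window, horizon):
    """Naive baseline: repeat the last observed value for every future step."""
    return [window[-1]] * horizon


def mean_absolute_error(actual, predicted):
    """Average absolute difference between true and estimated values."""
    pairs = [(a, p) for row_a, row_p in zip(actual, predicted)
             for a, p in zip(row_a, row_p)]
    return sum(abs(a - p) for a, p in pairs) / len(pairs)


# Usage: L = 3 historical values, h = 2 future values.
inputs, targets = make_windows([1, 2, 3, 4, 5, 6], history=3, horizon=2)
predictions = [persistence_forecast(w, 2) for w in inputs]
baseline_error = mean_absolute_error(targets, predictions)
```

Any forecasting model can replace `persistence_forecast` here; the windowing and the error computation stay the same.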

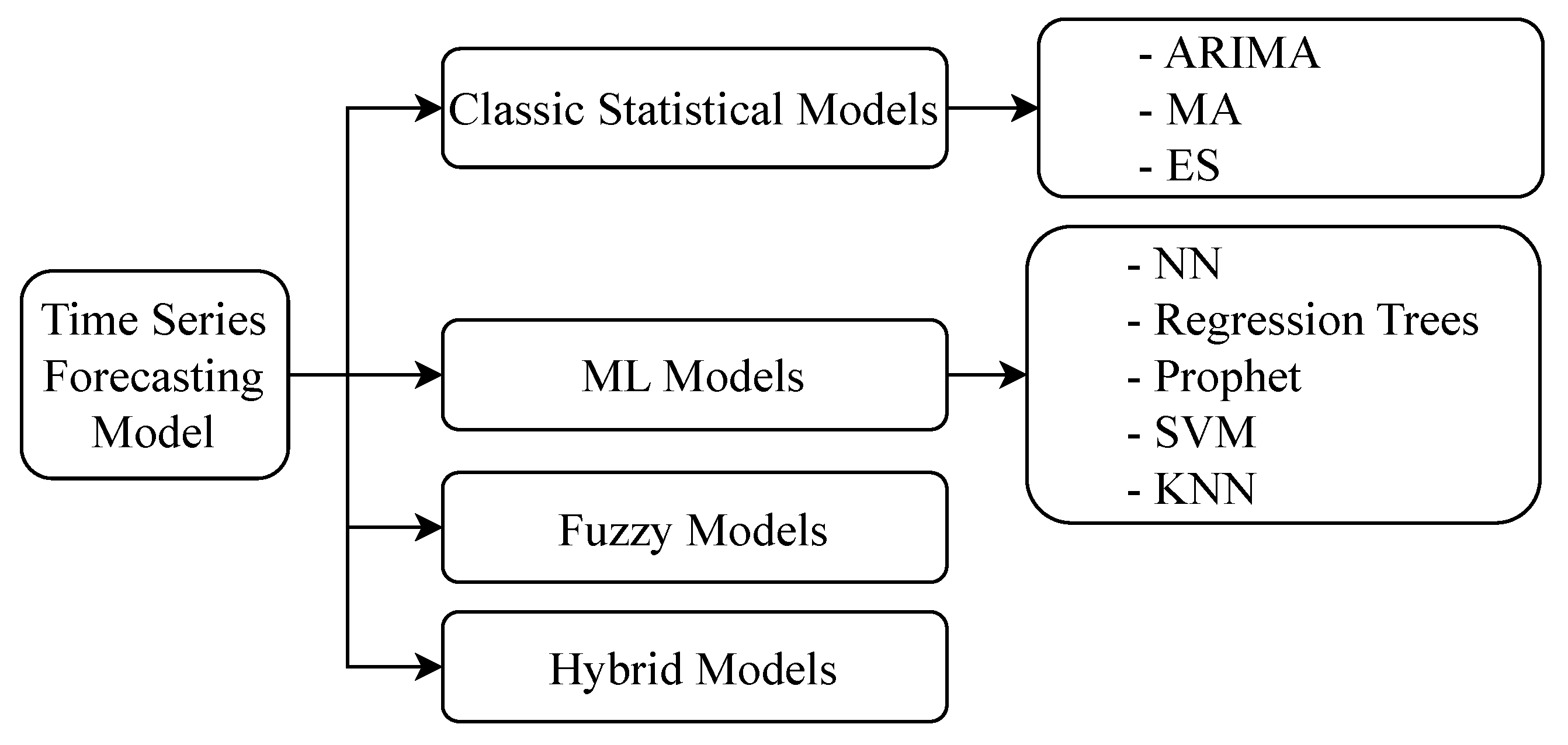

2.2. Time-Series Forecasting Models

3. Time-Series Algorithms

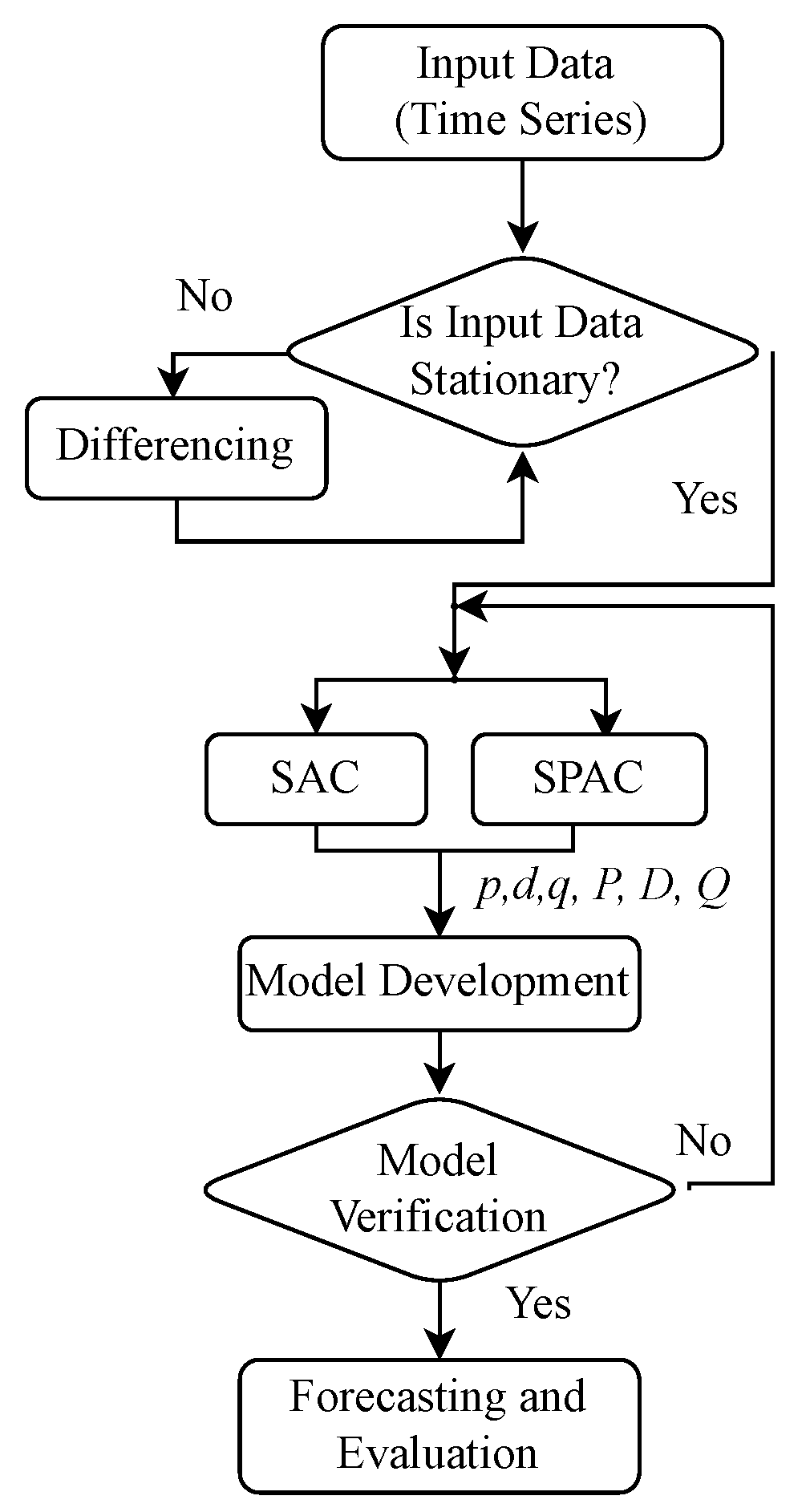

3.1. Autoregressive Integrated Moving Average

- AR component (order p): This component captures the dependency between an observation and several lagged observations: $y_t = \alpha + \sum_{i=1}^{p} \phi_i y_{t-i} + \epsilon_t$. Here, $y_{t-i}$ is the $i$-th lag of the time-series data, $\phi_i$ is the coefficient of lag $i$ that is estimated by the model, $\alpha$ is the intercept value or a constant, $\epsilon_t$ is the error term, and $p$ is the lag order.

- I component (order d): This component concerns differencing the time-series to make it stationary.

- MA component (order q): This component applies a moving average model to lagged observations using the observation $y_t$ and its error term $\epsilon_t$: $y_t = \alpha + \epsilon_t + \sum_{j=1}^{q} \theta_j \epsilon_{t-j}$, where $\theta_j$ is the coefficient of the $j$-th lagged error. The terms $\epsilon_{t-j}$ are the errors from the AR models of lagged observations.
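To make the interplay of the components concrete, the sketch below implements a deliberately reduced case, ARIMA(1,1,0): the series is differenced once (I component), an AR(1) model with intercept is fitted to the differences, and forecasts are integrated back to the original scale. It omits the MA component and estimates coefficients by ordinary least squares rather than the maximum-likelihood procedures used by full ARIMA software, so it is an illustration under those assumptions, with hypothetical function names.

```python
def difference(series, d=1):
    """Apply first-order differencing d times."""
    for _ in range(d):
        series = [b - a for a, b in zip(series, series[1:])]
    return series


def fit_ar1(series):
    """Least-squares estimate of (c, phi) in x_t = c + phi * x_{t-1} + e_t."""
    x, y = series[:-1], series[1:]
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("lagged values are constant; phi is not identifiable")
    phi = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / sxx
    return mean_y - phi * mean_x, phi


def forecast_arima_110(series, steps):
    """Forecast `steps` values ahead with a differenced AR(1) model."""
    diffs = difference(series, 1)
    c, phi = fit_ar1(diffs)
    level, last_diff = series[-1], diffs[-1]
    forecasts = []
    for _ in range(steps):
        last_diff = c + phi * last_diff   # AR(1) step on the differences
        level += last_diff                # undo the differencing
        forecasts.append(level)
    return forecasts
```

On a series whose first differences follow x_t = 1 + 0.5 x_{t-1} exactly, the fit recovers those two coefficients; with noisy industrial data the estimates are only approximate and the order (p, d, q) has to be selected, for example with information criteria.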

Applications of ARIMA in Manufacturing Systems

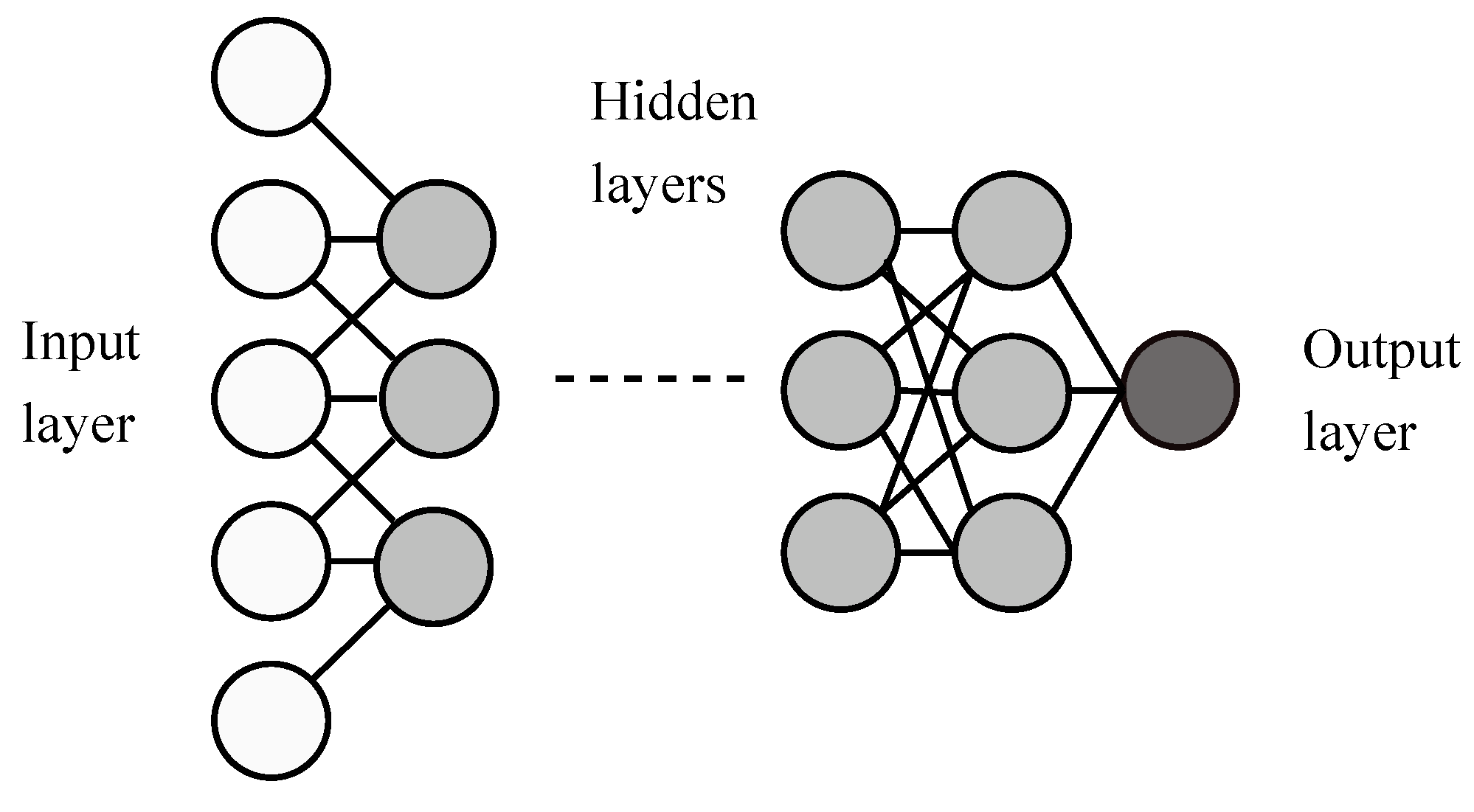

3.2. Artificial Neural Network

3.2.1. Feed-Forward Neural Networks

3.2.2. Recurrent Neural Networks

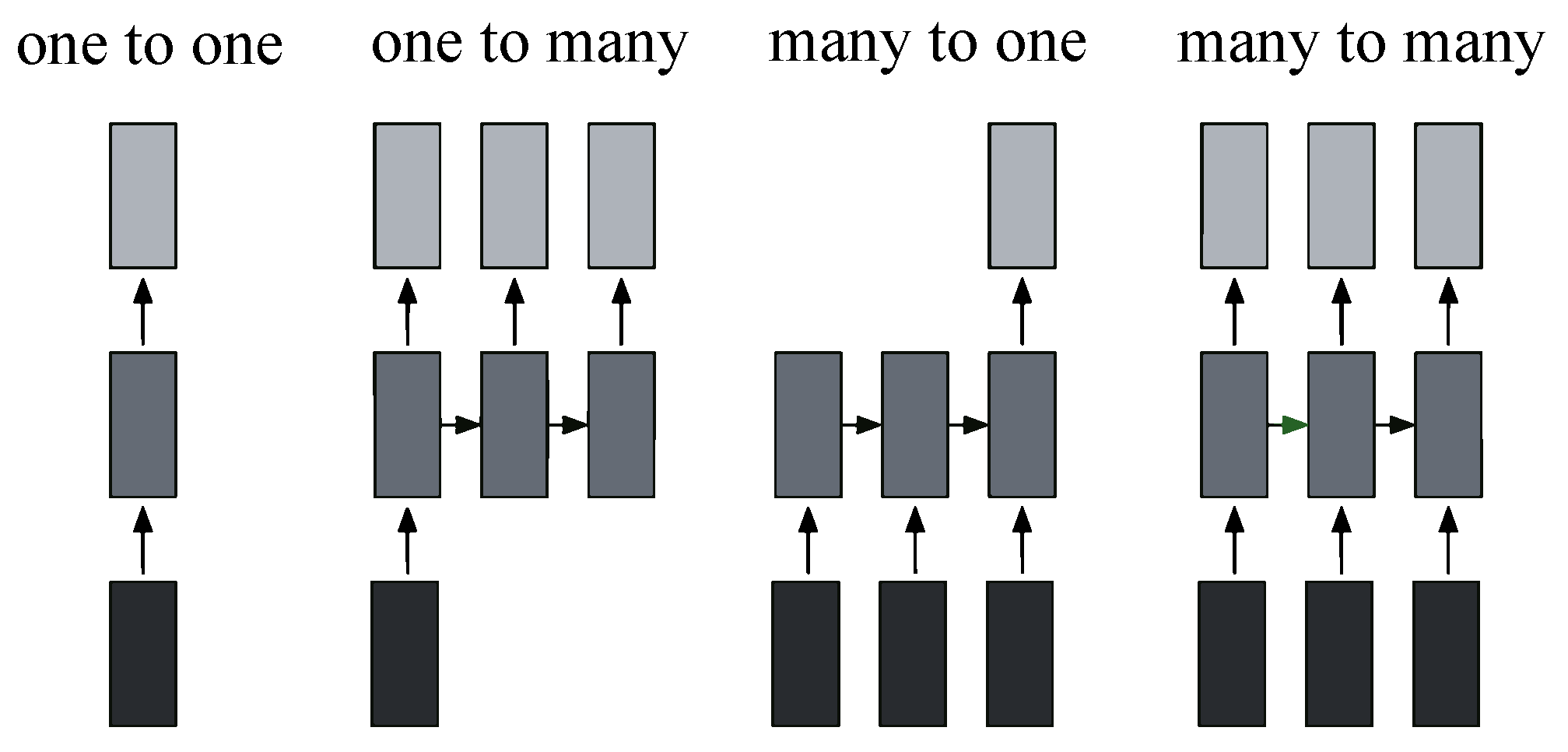

- Singular input–Singular output configuration (one-to-one): This configuration represents the classic feed-forward neural network structure, characterized by a solitary input and the expectation of a single output.

- Singular input–Multiple output configuration (one-to-many): Image captioning illustrates this configuration. A single image serves as a fixed-size input, while the output comprises words or sentences of varying lengths.

- Multiple input–Singular output configuration (many-to-one): This configuration is used in sentiment classification tasks. Here, the input is a sequence of words or even paragraphs, while the output is a continuous value reflecting the likelihood of a positive sentiment.

- Multiple input–Multiple output configuration (many-to-many): This configuration suits tasks such as machine translation, as in services like Google Translate. It handles inputs of varying lengths, such as English sentences, and produces corresponding sentences in other languages. It is also applicable to video classification at the frame level, where the neural network must process each frame individually. Because frames depend on one another, recurrent neural networks are needed to propagate hidden states from one frame to the next.
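The configurations above differ mainly in which inputs are fed and which outputs are read from the same recurrent loop. The sketch below uses a single-unit Elman-style recurrent cell with scalar weights to show this; the weights, function names, and the convention of feeding zeros after the first step in the one-to-many case are illustrative assumptions, and no training is performed.

```python
import math


def rnn_forward(inputs, w_in, w_rec, w_out, h0=0.0):
    """Run a single-unit recurrent cell and return the output at every step."""
    h = h0
    outputs = []
    for x in inputs:
        # The hidden state carries information from earlier steps forward.
        h = math.tanh(w_in * x + w_rec * h)
        outputs.append(w_out * h)
    return outputs


def many_to_many(inputs, w_in, w_rec, w_out):
    """Sequence in, sequence out: keep the output of every step."""
    return rnn_forward(inputs, w_in, w_rec, w_out)


def many_to_one(inputs, w_in, w_rec, w_out):
    """Sequence in, single value out: keep only the final output."""
    return rnn_forward(inputs, w_in, w_rec, w_out)[-1]


def one_to_many(x, steps, w_in, w_rec, w_out):
    """Single value in, sequence out: feed x once, then zeros."""
    return rnn_forward([x] + [0.0] * (steps - 1), w_in, w_rec, w_out)
```

For forecasting, many-to-one corresponds to predicting the next value from a window of past values, and many-to-many to producing a multi-step horizon.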

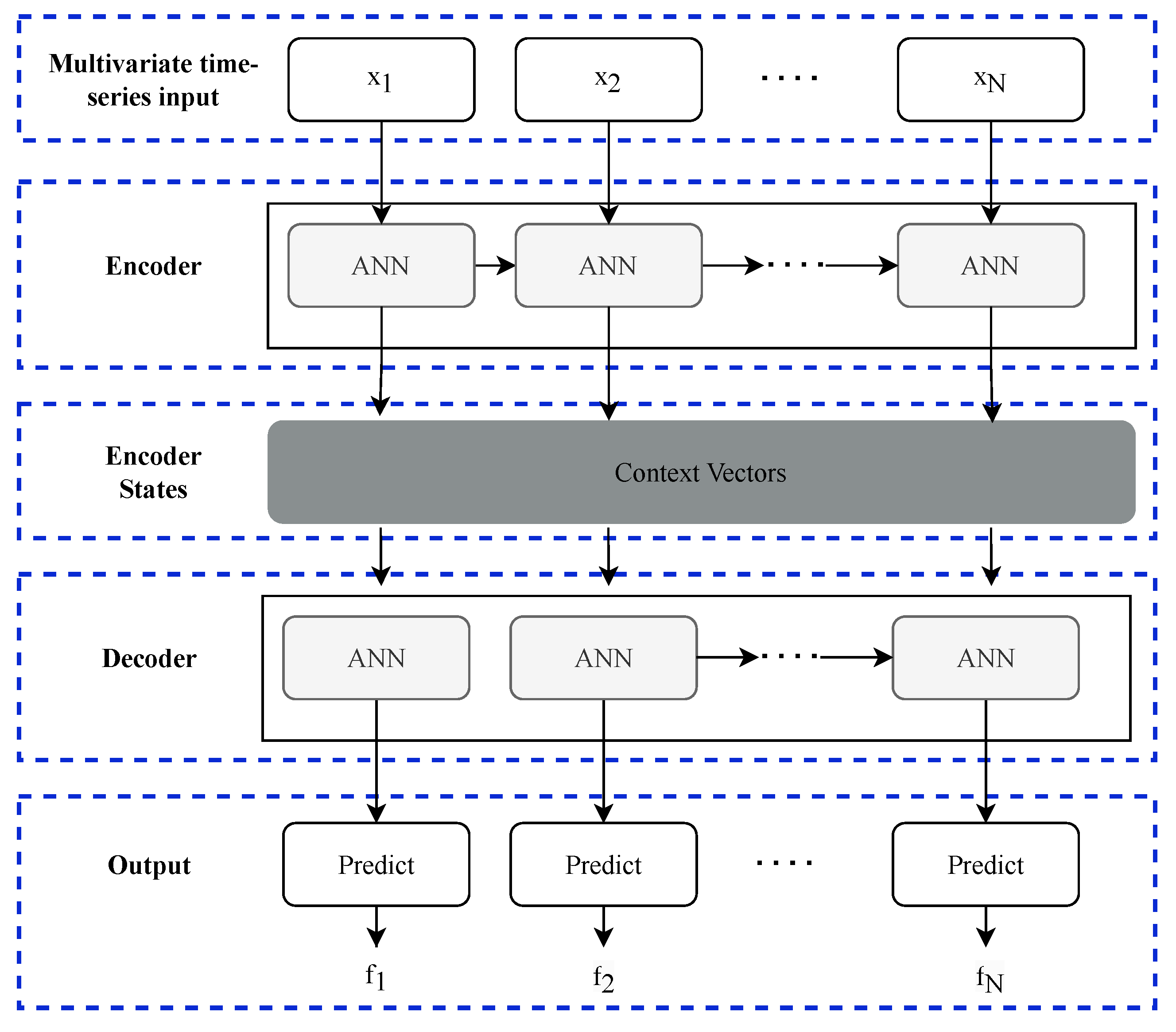

3.2.3. Encoder-Decoder Architecture

3.2.4. Applications of ANNs in Manufacturing Systems

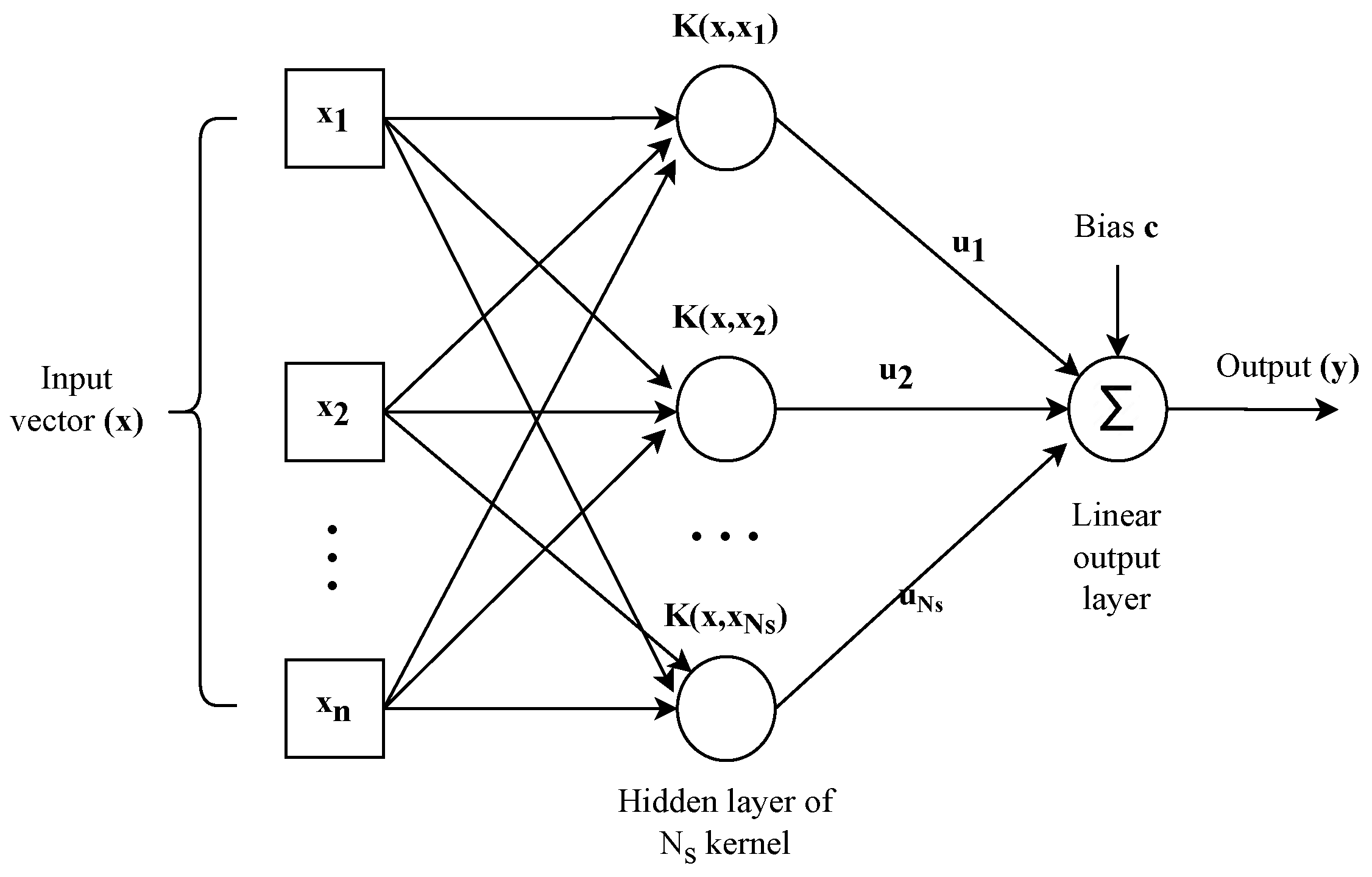

3.3. Support Vector Machines Models

Applications of SVMs in Manufacturing Systems

3.4. Fuzzy Network Models

Applications of Fuzzy Networks in Manufacturing Systems

3.5. Prophet

3.6. k-Nearest Neighbors Models

3.7. Generative Adversarial Network

- Generating synthetic time-series data: The generator of a GAN can learn the underlying data distribution of a time-series dataset and generate new data that mimics the real data. This can be particularly useful when there is a lack of data or when data augmentation is needed [188].

- Anomaly detection: GANs can be trained to reconstruct normal time-series data. If the network fails to reconstruct a data point properly, that point can be considered an anomaly. This is particularly useful in cybersecurity or preventive maintenance [189].

- Direct predictions: GANs have also been directly used for time-series forecasting by training the generator to predict future data points based on previous ones. The discriminator is then trained to evaluate the difference between true and predicted future values. The generator tries to trick the discriminator into believing that the predictions are real future values [190].
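The anomaly-detection use described above reduces, at decision time, to thresholding a reconstruction error. The sketch below shows only that decision step. Training a GAN is out of scope here, so a neighbor-median smoother stands in for the trained network; it is explicitly not a GAN, and the function names and threshold are hypothetical.

```python
from statistics import median


def neighbor_median_reconstruct(series, radius=2):
    """Stand-in reconstructor (not a GAN).

    Each point is rebuilt as the median of its neighbors within `radius`,
    excluding the point itself. Assumes the series has at least two points.
    """
    out = []
    for i in range(len(series)):
        lo, hi = max(0, i - radius), min(len(series), i + radius + 1)
        neighbors = series[lo:i] + series[i + 1:hi]
        out.append(median(neighbors))
    return out


def reconstruction_anomalies(series, reconstruct, threshold):
    """Return indices whose absolute reconstruction error exceeds threshold."""
    rebuilt = reconstruct(series)
    errors = [abs(a - b) for a, b in zip(series, rebuilt)]
    return [i for i, e in enumerate(errors) if e > threshold]


# Usage: the spike at index 4 cannot be rebuilt from its neighbors.
readings = [1.0, 1.1, 0.9, 1.0, 5.0, 1.0, 1.1, 0.9]
anomalies = reconstruction_anomalies(readings, neighbor_median_reconstruct, 1.0)
```

With a trained generative model, `reconstruct` would be replaced by the model's reconstruction of each window, and the threshold would typically be calibrated on data known to be normal.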

3.8. Hybrid Models

4. Discussion of Time-Series Forecasting Models for Industrial Systems

5. Challenges and Research Directions

5.1. Challenges

- Data quality and pre-processing:

  - Inconsistent data: industrial data often come from various sources with different formats and frequencies, requiring significant pre-processing to ensure consistency.

  - Missing data: handling gaps in data and ensuring accurate imputation methods can be complex but is crucial for reliable forecasting.

  - Noise and outliers: industrial data can be noisy and can contain outliers that can distort model predictions, necessitating robust cleaning techniques.

- Computational complexity:

  - Scalability: advanced forecasting models, especially those involving Deep Learning, can be computationally intensive and may not scale efficiently with large datasets common in industrial settings.

  - Resource requirements: high computational power and memory requirements can be a barrier for many companies, particularly for small- and medium-sized enterprises.

- Model interpretability:

  - Black box nature: many advanced models, such as ANNs and other Deep Learning techniques, are often criticized for their lack of transparency, making it difficult for practitioners to trust and adopt them.

  - Explainability tools: developing and integrating tools that can provide insights into model decisions is essential to increase user trust and model acceptance.

- Integration with existing systems:

  - Compatibility issues: integrating new forecasting models with legacy systems and existing infrastructure can be challenging and requires careful planning and execution.

  - Maintenance and updates: ensuring that the models remain up to date with the latest data and perform well over time involves ongoing maintenance efforts.

- Real-time processing:

  - Latency: for many industrial applications, real-time or near-real-time forecasting is essential, requiring models and systems that can process data and generate predictions with minimal delay.

  - Streaming data: handling continuous data streams and updating models dynamically can be technically challenging but is crucial for timely decision making.
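One way to see what "updating models dynamically" means for streaming data is a forecaster whose state is updated in constant time per observation, with no stored history. The sketch below uses simple exponential smoothing for this purpose; the class name and the smoothing factor are assumptions, and far richer online models exist.

```python
class OnlineExponentialSmoother:
    """Simple exponential smoothing with an O(1) update per observation."""

    def __init__(self, alpha):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.level = None  # no observation seen yet

    def update(self, value):
        """Fold one new observation into the running level."""
        if self.level is None:
            self.level = value
        else:
            self.level = self.alpha * value + (1 - self.alpha) * self.level
        return self.level

    def forecast(self):
        """One-step-ahead forecast: the current smoothed level."""
        if self.level is None:
            raise ValueError("no data observed yet")
        return self.level


# Usage: feed readings as they arrive from a (hypothetical) sensor stream.
smoother = OnlineExponentialSmoother(alpha=0.5)
levels = [smoother.update(v) for v in [10.0, 20.0, 30.0]]
```

Because each update touches only one number, latency is independent of how long the stream has been running, which is the property the latency and streaming items above call for.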

5.2. Future Directions

- Advanced Deep Learning models: The development and application of advanced Deep Learning models, such as transformers and attention mechanisms, can potentially improve the performance of time-series forecasting in industrial systems by capturing complex, high-dimensional, and long-term dependencies in data.

- Ensemble and hybrid approaches: Combining multiple forecasting models, including ensemble techniques and hybrid approaches, can lead to more robust and accurate predictions by leveraging the strengths of different methods and mitigating individual model weaknesses.

- Transfer Learning and Meta-Learning: Applying Transfer Learning and Meta-Learning techniques can potentially improve the generalization of time-series forecasting models across different industries and tasks, enabling the reuse of knowledge learned from one domain to another, thus reducing the need for extensive domain-specific training data.

- Explainable AI: The need for explainable and interpretable models will grow as industries increasingly adopt AI-based forecasting models; developing explainable time-series forecasting models can help decision makers understand the patterns and underlying features in data, leading to more informed decisions and better model trust.

- Real-time and adaptive forecasting: With the increasing availability of real-time data in industrial systems, developing time-series forecasting models that can adapt to changing patterns and trends in real-time will become increasingly important; these models can enable industries to respond quickly to unforeseen events and dynamically optimize operations.

- Integration with other data sources: Incorporating auxiliary information, such as external factors and contextual data, can potentially improve the performance of time-series forecasting models; future research may focus on integrating multiple data sources and modalities to enhance prediction accuracy in industrial settings.

- Domain-specific models: As time-series forecasting techniques evolve, future research may focus on developing domain-specific models tailored to specific industries’ unique requirements and challenges, such as energy, finance, manufacturing, and transportation.
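As a small illustration of the ensemble direction listed above, the sketch below combines forecasts from several models using weights inversely proportional to each model's validation error. This weighting rule is one common heuristic chosen here as an assumption; the function names and the numbers in the usage example are hypothetical.

```python
def inverse_error_weights(errors):
    """Weights proportional to 1 / error, normalized to sum to one.

    Assumes every validation error is strictly positive.
    """
    if any(e <= 0 for e in errors):
        raise ValueError("errors must be strictly positive")
    inverse = [1.0 / e for e in errors]
    total = sum(inverse)
    return [v / total for v in inverse]


def combine_forecasts(forecasts, weights):
    """Weighted average of several equally long forecast sequences."""
    horizon = len(forecasts[0])
    return [sum(w * f[i] for w, f in zip(weights, forecasts))
            for i in range(horizon)]


# Usage: model A had validation error 1.0, model B had 3.0.
weights = inverse_error_weights([1.0, 3.0])
combined = combine_forecasts([[10.0, 20.0], [14.0, 24.0]], weights)
```

The more accurate model dominates the combination, while the weaker one still contributes, which is the sense in which ensembles can mitigate individual model weaknesses.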

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Mendoza Valencia, J.; Hurtado Moreno, J.J.; Nieto Sánchez, F.d.J. Artificial Intelligence as a Competitive Advantage in the Manufacturing Area. In Telematics and Computing; Springer International Publishing: Cham, Switzerland, 2019; pp. 171–180.

2. Susto, G.A.; Schirru, A.; Pampuri, S.; McLoone, S.; Beghi, A. Machine Learning for Predictive Maintenance: A Multiple Classifier Approach. IEEE Trans. Ind. Inform. 2015, 11, 812–820.

3. Wang, B.; Tao, F.; Fang, X.; Liu, C.; Liu, Y.; Freiheit, T. Smart Manufacturing and Intelligent Manufacturing: A Comparative Review. Engineering 2021, 7, 738–757.

4. Zhou, X.; Zhai, N.; Li, S.; Shi, H. Time Series Prediction Method of Industrial Process with Limited Data Based on Transfer Learning. IEEE Trans. Ind. Inform. 2023, 19, 6872–6882.

5. Chen, B.; Liu, Y.; Zhang, C.; Wang, Z. Time Series Data for Equipment Reliability Analysis with Deep Learning. IEEE Access 2020, 8, 105484–105493.

6. Deb, C.; Zhang, F.; Yang, J.; Lee, S.E.; Shah, K.W. A review on time series forecasting techniques for building energy consumption. Renew. Sustain. Energy Rev. 2017, 74, 902–924.

7. Rivera-Castro, R.; Nazarov, I.; Xiang, Y.; Pletneev, A.; Maksimov, I.; Burnaev, E. Demand Forecasting Techniques for Build-to-Order Lean Manufacturing Supply Chains. In Advances in Neural Networks—ISNN 2019; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; pp. 213–222.

8. Market.us. AI in Manufacturing Market. 2024. Available online: https://market.us/report/ai-in-manufacturing-market/ (accessed on 14 May 2024).

9. Precedence Research. Artificial Intelligence (AI) Market (By Offering: Hardware, Software, Services; By Technology: Machine Learning, Natural Language Processing, Context-Aware Computing, Computer Vision; By Deployment: On-premise, Cloud; By Organization Size: Large enterprises, Small & medium enterprises; By Business Function: Marketing and Sales, Security, Finance, Law, Human Resource, Other; By End-Use:)—Global Industry Analysis, Size, Share, Growth, Trends, Regional Outlook, and Forecast 2023–2032. 2023; Available online: https://www.precedenceresearch.com/artificial-intelligence-market (accessed on 20 September 2023).

10. Panigrahi, S.; Behera, H. A hybrid ETS–ANN model for time series forecasting. Eng. Appl. Artif. Intell. 2017, 66, 49–59.

11. Yule, G.U. The Applications of the Method of Correlation to Social and Economic Statistics. J. R. Stat. Soc. 1909, 72, 721.

12. Fradinata, E.; Suthummanon, S.; Sirivongpaisal, N.; Suntiamorntuthq, W. ANN, ARIMA and MA timeseries model for forecasting in cement manufacturing industry: Case study at lafarge cement Indonesia—Aceh. In Proceedings of the 2014 International Conference of Advanced Informatics: Concept, Theory and Application (ICAICTA), Bandung, Indonesia, 20–21 August 2014; pp. 39–44.

13. Hansun, S. A new approach of moving average method in time series analysis. In Proceedings of the 2013 Conference on New Media Studies (CoNMedia), Tangerang, Indonesia, 27–28 November 2013; pp. 1–4.

14. Ivanovski, Z.; Milenkovski, A.; Narasanov, Z. Time series forecasting using a moving average model for extrapolation of number of tourist. UTMS J. Econ. 2018, 9, 121–132.

15. Nau, R. Forecasting with Moving Averages; Lecture notes in statistical forecasting; Fuqua School of Business, Duke University: Durham, NC, USA, 2014.

16. Yule, G.U. On a method of investigating periodicities in disturbed series, with special reference to Wolfer’s sunspot numbers. Philos. Trans. R. Soc. London. Ser. A Contain. Pap. A Math. Phys. Character 1927, 226, 267–298.

17. Besse, P.C.; Cardot, H.; Stephenson, D.B. Autoregressive Forecasting of Some Functional Climatic Variations. Scand. J. Stat. 2000, 27, 673–687.

18. Owen, J.; Eccles, B.; Choo, B.; Woodings, M. The application of auto–regressive time series modelling for the time–frequency analysis of civil engineering structures. Eng. Struct. 2001, 23, 521–536.

19. Holt, C.C. Forecasting seasonals and trends by exponentially weighted moving averages. Int. J. Forecast. 2004, 20, 5–10.

20. Ostertagová, E.; Ostertag, O. Forecasting using simple exponential smoothing method. Acta Electrotech. Inform. 2012, 12.

21. De Livera, A.M.; Hyndman, R.J.; Snyder, R.D. Forecasting Time Series with Complex Seasonal Patterns Using Exponential Smoothing. J. Am. Stat. Assoc. 2011, 106, 1513–1527.

22. Cipra, T.; Hanzák, T. Exponential smoothing for time series with outliers. Kybernetika 2011, 47, 165–178.

23. Cipra, T.; Hanzák, T. Exponential smoothing for irregular time series. Kybernetika 2008, 44, 385–399.

24. Box, G.E.P.; Jenkins, G.M.; Reinsel, G.C. Time Series Analysis; Wiley: Hoboken, NJ, USA, 2008.

25. Valipour, M. Long-term runoff study using SARIMA and ARIMA models in the United States: Runoff forecasting using SARIMA. Meteorol. Appl. 2015, 22, 592–598.

26. Contreras, J.; Espinola, R.; Nogales, F.; Conejo, A. ARIMA models to predict next-day electricity prices. IEEE Trans. Power Syst. 2003, 18, 1014–1020.

27. De Felice, M.; Alessandri, A.; Ruti, P.M. Electricity demand forecasting over Italy: Potential benefits using numerical weather prediction models. Electr. Power Syst. Res. 2013, 104, 71–79.

28. Torres, J.; García, A.; De Blas, M.; De Francisco, A. Forecast of hourly average wind speed with ARMA models in Navarre (Spain). Sol. Energy 2005, 79, 65–77.

29. Wang, L.; Zou, H.; Su, J.; Li, L.; Chaudhry, S. An ARIMA-ANN Hybrid Model for Time Series Forecasting. Syst. Res. Behav. Sci. 2013, 30, 244–259.

30. Huang, J.; Ma, S.; Zhang, C.H. Adaptive Lasso for Sparse High-Dimensional Regression Models. Stat. Sin. 2008, 18, 1603–1618.

31. Yu, R.; Xu, Y.; Zhou, T.; Li, J. Relation between rainfall duration and diurnal variation in the warm season precipitation over central eastern China. Geophys. Res. Lett. 2007, 34.

32. Williams, B.M.; Hoel, L.A. Modeling and Forecasting Vehicular Traffic Flow as a Seasonal ARIMA Process: Theoretical Basis and Empirical Results. J. Transp. Eng. 2003, 129, 664–672.

33. Vlahogianni, E.I.; Golias, J.C.; Karlaftis, M.G. Short-term traffic forecasting: Overview of objectives and methods. Transp. Rev. 2004, 24, 533–557.

34. Khashei, M.; Bijari, M. An artificial neural network (p, d, q) model for timeseries forecasting. Expert Syst. Appl. 2010, 37, 479–489.

35. Hamilton, J.D.; Susmel, R. Autoregressive conditional heteroskedasticity and changes in regime. J. Econom. 1994, 64, 307–333.

36. Vagropoulos, S.I.; Chouliaras, G.I.; Kardakos, E.G.; Simoglou, C.K.; Bakirtzis, A.G. Comparison of SARIMAX, SARIMA, modified SARIMA and ANN-based models for short-term PV generation forecasting. In Proceedings of the 2016 IEEE International Energy Conference (ENERGYCON), Leuven, Belgium, 4–8 April 2016; pp. 1–6.

37. Dabral, P.P.; Murry, M.Z. Modelling and Forecasting of Rainfall Time Series Using SARIMA. Environ. Process. 2017, 4, 399–419.

38. Chen, P.; Niu, A.; Liu, D.; Jiang, W.; Ma, B. Time series forecasting of temperatures using SARIMA: An example from Nanjing. IOP Conf. Ser. Mater. Sci. Eng. 2018, 394, 052024.

39. Divisekara, R.W.; Jayasinghe, G.J.M.S.R.; Kumari, K.W.S.N. Forecasting the red lentils commodity market price using SARIMA models. SN Bus. Econ. 2020, 1, 20.

40. Milenković, M.; Švadlenka, L.; Melichar, V.; Bojović, N.; Avramović, Z. SARIMA modelling approach for railway passenger flow forecasting. Transport 2016, 33, 1–8.

41. Sims, C.A. Macroeconomics and Reality. Econometrica 1980, 48, 1.

42. Schorfheide, F.; Song, D. Real-Time Forecasting with a Mixed-Frequency VAR. J. Bus. Econ. Stat. 2015, 33, 366–380.

43. Dhamija, A.K.; Bhalla, V.K. Financial time series forecasting: Comparison of neural networks and ARCH models. Int. Res. J. Financ. Econ. 2010, 49, 185–202.

44. Bollerslev, T. Generalized autoregressive conditional heteroskedasticity. J. Econom. 1986, 31, 307–327.

45. Garcia, R.; Contreras, J.; van Akkeren, M.; Garcia, J. A GARCH forecasting model to predict day-ahead electricity prices. IEEE Trans. Power Syst. 2005, 20, 867–874.

46. Hamilton, J.D. Chapter 50 State-space models. In Handbook of Econometrics; Elsevier: Amsterdam, The Netherlands, 1994; pp. 3039–3080.

47. Akram-Isa, M. State Space Models for Time Series Forecasting. J. Comput. Stat. Data Anal. 1990, 6, 119–121.

48. Zhang, H.; Crowley, J.; Sox, H.C.; Olshen, R.A., Jr. Tree-Structured Statistical Methods; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2014.

49. Gocheva-Ilieva, S.G.; Voynikova, D.S.; Stoimenova, M.P.; Ivanov, A.V.; Iliev, I.P. Regression trees modeling of time series for air pollution analysis and forecasting. Neural Comput. Appl. 2019, 31, 9023–9039.

50. Loh, W. Classification and regression trees. WIREs Data Min. Knowl. Discov. 2011, 1, 14–23.

51. Rady, E.; Fawzy, H.; Fattah, A.M.A. Time Series Forecasting Using Tree Based Methods. J. Stat. Appl. Probab. 2021, 10, 229–244.

52. Joachims, T. Learning to Classify Text Using Support Vector Machines; Springer: Boston, MA, USA, 2002.

53. Thissen, U.; van Brakel, R.; de Weijer, A.; Melssen, W.; Buydens, L. Using support vector machines for time series prediction. Chemom. Intell. Lab. Syst. 2003, 69, 35–49.

54. Cao, L.; Tay, F. Support vector machine with adaptive parameters in financial time series forecasting. IEEE Trans. Neural Networks 2003, 14, 1506–1518.

55. Smola, A.J.; Schölkopf, B. A tutorial on support vector regression. Stat. Comput. 2004, 14, 199–222.

56. Tay, F.E.; Cao, L. Application of support vector machines in financial time series forecasting. Omega 2001, 29, 309–317.

57. Huang, W.; Nakamori, Y.; Wang, S.Y. Forecasting stock market movement direction with support vector machine. Comput. Oper. Res. 2005, 32, 2513–2522.

58. Guo, B.; Wang, Z.; Yu, Z.; Wang, Y.; Yen, N.Y.; Huang, R.; Zhou, X. Mobile Crowd Sensing and Computing: The Review of an Emerging Human-Powered Sensing Paradigm. ACM Comput. Surv. 2015, 48, 1–31.

59. Kumar, M.; Thenmozhi, M. Forecasting Stock Index Movement: A Comparison of Support Vector Machines and Random Forest. SSRN Electron. J. 2006.

60. Tibshirani, R. Regression Shrinkage and Selection Via the Lasso. J. R. Stat. Soc. Ser. B Stat. Methodol. 1996, 58, 267–288.

61. Yang, D.; Ye, Z.; Lim, L.H.I.; Dong, Z. Very short term irradiance forecasting using the lasso. Sol. Energy 2015, 114, 314–326.

62. Li, J.; Chen, W. Forecasting macroeconomic time series: LASSO-based approaches and their forecast combinations with dynamic factor models. Int. J. Forecast. 2014, 30, 996–1015.

63. Roy, S.S.; Mittal, D.; Basu, A.; Abraham, A. Stock Market Forecasting Using LASSO Linear Regression Model. In Afro-European Conference for Industrial Advancement; Springer International Publishing: Cham, Switzerland, 2015; pp. 371–381.

64. Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

65. Martínez, F.; Frías, M.P.; Pérez, M.D.; Rivera, A.J. A methodology for applying k-nearest neighbor to time series forecasting. Artif. Intell. Rev. 2017, 52, 2019–2037.

66. Martínez, F.; Frías, M.P.; Pérez-Godoy, M.D.; Rivera, A.J. Dealing with seasonality by narrowing the training set in time series forecasting with k NN. Expert Syst. Appl. 2018, 103, 38–48.

67. Kohli, S.; Godwin, G.T.; Urolagin, S. Sales Prediction Using Linear and KNN Regression. In Advances in Machine Learning and Computational Intelligence; Springer: Singapore, 2020; pp. 321–329.

68. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

69. Seçkin, M.; Seçkin, A.u.; Coşkun, A. Production fault simulation and forecasting from time series data with machine learning in glove textile industry. J. Eng. Fibers Fabr. 2019, 14, 155892501988346.

70. Dudek, G. Short-Term Load Forecasting Using Random Forests. In Intelligent Systems’2014; Springer International Publishing: Cham, Switzerland, 2015; pp. 821–828.

71. Khaidem, L.; Saha, S.; Dey, S.R. Predicting the direction of stock market prices using random forest. arXiv 2016, arXiv:1605.00003.

72. Lahouar, A.; Ben Hadj Slama, J. Hour-ahead wind power forecast based on random forests. Renew. Energy 2017, 109, 529–541.

73. Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 1189–1232.

74. Zhang, L.; Bian, W.; Qu, W.; Tuo, L.; Wang, Y. Time series forecast of sales volume based on XGBoost. J. Phys. Conf. Ser. 2021, 1873, 012067.

75. Ben Taieb, S.; Hyndman, R.J. A gradient boosting approach to the Kaggle load forecasting competition. Int. J. Forecast. 2014, 30, 382–394.

76. Barros, F.S.; Cerqueira, V.; Soares, C. Empirical Study on the Impact of Different Sets of Parameters of Gradient Boosting Algorithms for Time-Series Forecasting with LightGBM. In PRICAI 2021: Trends in Artificial Intelligence. PRICAI 2021; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2021; pp. 454–465.

77. Cinto, T.; Gradvohl, A.L.S.; Coelho, G.P.; da Silva, A.E.A. Solar Flare Forecasting Using Time Series and Extreme Gradient Boosting Ensembles. Sol. Phys. 2020, 295, 93.

78. Lainder, A.D.; Wolfinger, R.D. Forecasting with gradient boosted trees: Augmentation, tuning, and cross-validation strategies. Int. J. Forecast. 2022, 38, 1426–1433.

79. Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased boosting with categorical features. In Proceedings of the Advances in Neural Information Processing Systems; Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31.

80. Ding, J.; Chen, Z.; Xiaolong, L.; Lai, B. Sales Forecasting Based on CatBoost. In Proceedings of the 2020 2nd International Conference on Information Technology and Computer Application (ITCA), Guangzhou, China, 18–20 December 2020; pp. 636–639.

81. Taylor, S.J.; Letham, B. Forecasting at Scale. Am. Stat. 2018, 72, 37–45.

82. Yusof, U.K.; Khalid, M.N.A.; Hussain, A.; Shamsudin, H. Financial Time Series Forecasting Using Prophet. In Innovative Systems for Intelligent Health Informatics; Springer International Publishing: Cham, Switzerland, 2021; pp. 485–495.

83. Triebe, O.; Hewamalage, H.; Pilyugina, P.; Laptev, N.; Bergmeir, C.; Rajagopal, R. NeuralProphet: Explainable Forecasting at Scale. arXiv 2021, arXiv:2111.15397.

84. Ensafi, Y.; Amin, S.H.; Zhang, G.; Shah, B. Time-series forecasting of seasonal items sales using machine learning—A comparative analysis. Int. J. Inf. Manag. Data Insights 2022, 2, 100058.

85. Kong, Y.H.; Lim, K.Y.; Chin, W.Y. Time Series Forecasting Using a Hybrid Prophet and Long Short-Term Memory Model. In Soft Computing in Data Science; Springer: Singapore, 2021; pp. 183–196.

86. Hasan Shawon, M.M.; Akter, S.; Islam, M.K.; Ahmed, S.; Rahman, M.M. Forecasting PV Panel Output Using Prophet Time Series Machine Learning Model. In Proceedings of the 2020 IEEE REGION 10 CONFERENCE (TENCON), Osaka, Japan, 16–19 November 2020; pp. 1141–1144.

87. Rosenblatt, F. The perceptron: A probabilistic model for information storage and organization in the brain. Psychol. Rev. 1958, 65, 386–408.

88. Tealab, A. Time series forecasting using artificial neural networks methodologies: A systematic review. Future Comput. Inform. J. 2018, 3, 334–340.

89. Zhang, C.; Sjarif, N.N.A.; Ibrahim, R. Deep learning models for price forecasting of financial time series: A review of recent advancements: 2020–2022. WIREs Data Min. Knowl. Discov. 2023, 14, e1519.

90. Zhang, G. Time series forecasting using a hybrid ARIMA and neural network model. Neurocomputing 2003, 50, 159–175.

91. Sako, K.; Mpinda, B.N.; Rodrigues, P.C. Neural Networks for Financial Time Series Forecasting. Entropy 2022, 24, 657.

92. Ratna Prakarsha, K.; Sharma, G. Time series signal forecasting using artificial neural networks: An application on ECG signal. Biomed. Signal Process. Control 2022, 76, 103705.

93. Ivakhnenko, A.G.; Lapa, V.G. Cybernetics and Forecasting Techniques; Elsevier Science: London, UK, 1968.

94. Morariu, C.; Borangiu, T. Time series forecasting for dynamic scheduling of manufacturing processes. In Proceedings of the 2018 IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR), Cluj-Napoca, Romania, 24–26 May 2018; pp. 1–6.

95. Malhotra, P.; Vig, L.; Shroff, G.; Agarwal, P. Long short term memory networks for anomaly detection in time series. In Proceedings of the European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning, Bruges, Belgium, 22–24 April 2015; pp. 89–94.

96. Huh, D.; Todorov, E. Real-time motor control using recurrent neural networks. In Proceedings of the 2009 IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning, Nashville, TN, USA, 30 March–2 April 2009; pp. 42–49.

97. Raftery, A.E. Bayesian Model Selection in Social Research. Sociol. Methodol. 1995, 25, 111.

98. Ghosh, B.; Basu, B.; O’Mahony, M. Bayesian Time-Series Model for Short-Term Traffic Flow Forecasting. J. Transp. Eng. 2007, 133, 180–189.

99. Hayashi, Y.; Buckley, J. Implementation of fuzzy max-min neural controller. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 6, pp. 3113–3117.

100. Wang, C.H.; Liu, H.L.; Lin, T.C. Direct adaptive fuzzy-neural control with state observer and supervisory controller for unknown nonlinear dynamical systems. IEEE Trans. Fuzzy Syst. 2002, 10, 39–49.

101. Jang, J.S. ANFIS: Adaptive-network-based fuzzy inference system. IEEE Trans. Syst. Man Cybern. 1993, 23, 665–685.

102. Aladag, C.H.; Egrioglu, E.; Kadilar, C. Forecasting nonlinear time series with a hybrid methodology. Appl. Math. Lett. 2009, 22, 1467–1470.

103. Song, Q.; Chissom, B.S. Fuzzy time series and its models. Fuzzy Sets Syst. 1993, 54, 269–277.

104. Lee, W.J.; Hong, J. A hybrid dynamic and fuzzy time series model for mid-term power load forecasting. Int. J. Electr. Power Energy Syst. 2015, 64, 1057–1062.

105. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

106. Sagheer, A.; Kotb, M. Time series forecasting of petroleum production using deep LSTM recurrent networks. Neurocomputing 2019, 323, 203–213.

107. Punia, S.; Nikolopoulos, K.; Singh, S.P.; Madaan, J.K.; Litsiou, K. Deep learning with long short-term memory networks and random forests for demand forecasting in multi-channel retail. Int. J. Prod. Res. 2020, 58, 4964–4979.

108. Ormándi, R.; Hegedűs, I.; Jelasity, M. Gossip learning with linear models on fully distributed data. Concurr. Comput. Pract. Exp. 2012, 25, 556–571.

- Zhang, G.P.; Berardi, V.L. Time series forecasting with neural network ensembles: An application for exchange rate prediction. J. Oper. Res. Soc. 2001, 52, 652–664. [Google Scholar] [CrossRef]

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014. [Google Scholar] [CrossRef]

- Li, X.; Ma, X.; Xiao, F.; Wang, F.; Zhang, S. Application of Gated Recurrent Unit (GRU) Neural Network for Smart Batch Production Prediction. Energies 2020, 13, 6121. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30. [Google Scholar]

- Wu, N.; Green, B.; Ben, X.; O’Banion, S. Deep Transformer Models for Time Series Forecasting: The Influenza Prevalence Case. arXiv 2020, arXiv:2001.08317. [Google Scholar] [CrossRef]

- Hyndman, R.J.; Koehler, A.B.; Snyder, R.D.; Grose, S. A state space framework for automatic forecasting using exponential smoothing methods. Int. J. Forecast. 2002, 18, 439–454. [Google Scholar] [CrossRef]

- Dong, Z.; Yang, D.; Reindl, T.; Walsh, W.M. Short-term solar irradiance forecasting using exponential smoothing state space model. Energy 2013, 55, 1104–1113. [Google Scholar] [CrossRef]

- Corberán-Vallet, A.; Bermúdez, J.D.; Vercher, E. Forecasting correlated time series with exponential smoothing models. Int. J. Forecast. 2011, 27, 252–265. [Google Scholar] [CrossRef]

- Büyükşahin, U.; Ertekin, Ş. Improving forecasting accuracy of time series data using a new ARIMA-ANN hybrid method and empirical mode decomposition. Neurocomputing 2019, 361, 151–163. [Google Scholar] [CrossRef]

- Wahedi, H.; Wrona, K.; Heltoft, M.; Saleh, S.; Knudsen, T.R.; Bendixen, U.; Nielsen, I.; Saha, S.; Gregers Sandager, B.; Duck Young, K.; et al. Improving Accuracy of Time Series Forecasting by Applying an ARIMA-ANN Hybrid Model; Springer Nature: Cham, Switzerland, 2022. [Google Scholar]

- Panigrahi, S.; Behera, H.S. Time Series Forecasting Using Differential Evolution-Based ANN Modelling Scheme. Arab. J. Sci. Eng. 2020, 45, 11129–11146. [Google Scholar] [CrossRef]

- Flores, J.J.; Graff, M.; Rodriguez, H. Evolutive design of ARMA and ANN models for time series forecasting. Renew. Energy 2012, 44, 225–230. [Google Scholar] [CrossRef]

- Donate, J.P.; Li, X.; Sánchez, G.G.; de Miguel, A.S. Time series forecasting by evolving artificial neural networks with genetic algorithms, differential evolution and estimation of distribution algorithm. Neural Comput. Appl. 2011, 22, 11–20. [Google Scholar] [CrossRef]

- Son, N.N.; Van Cuong, N. Neuro-evolutionary for time series forecasting and its application in hourly energy consumption prediction. Neural Comput. Appl. 2023, 35, 21697–21707. [Google Scholar] [CrossRef]

- Tiwari, S.K.; Kumaraswamidhas, L.A.; Garg, N. Comparison of SVM and ARIMA Model in Time-Series Forecasting of Ambient Noise Levels; Springer: Singapore, 2021; pp. 777–786. [Google Scholar] [CrossRef]

- Khairalla, M.A.; Ning, X. Financial Time Series Forecasting Using Hybridized Support Vector Machines and ARIMA Models. In Proceedings of the 2017 International Conference on Wireless Communications, Networking and Applications, WCNA 2017, Shenzhen, China, 20–22 October 2017. [Google Scholar] [CrossRef]

- Aradhye, G.; Rao, A.C.S.; Mastan Mohammed, M.D. A Novel Hybrid Approach for Time Series Data Forecasting Using Moving Average Filter and ARIMA-SVM. In Emerging Technologies in Data Mining and Information Security; Springer: Singapore, 2018; pp. 369–381. [Google Scholar] [CrossRef]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Advances in Neural Information Processing Systems; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2014; Volume 27. [Google Scholar]

- Ahmed, N.; Schmidt-Thieme, L. Sparse self-attention guided generative adversarial networks for time-series generation. Int. J. Data Sci. Anal. 2023, 16, 421–434. [Google Scholar] [CrossRef]

- Vuletić, M.; Prenzel, F.; Cucuringu, M. Fin-GAN: Forecasting and classifying financial time series via generative adversarial networks. Quant. Financ. 2024, 24, 175–199. [Google Scholar] [CrossRef]

- Li, Y.; Lu, X.; Wang, Y.; Dou, D. Generative Time Series Forecasting with Diffusion, Denoise, and Disentanglement. In Proceedings of the Advances in Neural Information Processing Systems; Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K., Oh, A., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 23009–23022. [Google Scholar]

- Cai, B.; Yang, S.; Gao, L.; Xiang, Y. Hybrid variational autoencoder for time series forecasting. Knowl.-Based Syst. 2023, 281, 111079. [Google Scholar] [CrossRef]

- Yoon, J.; Jarrett, D.; van der Schaar, M. Time-series Generative Adversarial Networks. In Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019. [Google Scholar]

- Torres, J.F.; Hadjout, D.; Sebaa, A.; Martínez-Álvarez, F.; Troncoso, A. Deep Learning for Time Series Forecasting: A Survey. Big Data 2021, 9, 3–21. [Google Scholar] [CrossRef]

- Lara-Benítez, P.; Carranza-García, M.; Riquelme, J.C. An Experimental Review on Deep Learning Architectures for Time Series Forecasting. Int. J. Neural Syst. 2021, 31, 2130001. [Google Scholar] [CrossRef]

- Masini, R.P.; Medeiros, M.C.; Mendes, E.F. Machine learning advances for time series forecasting. J. Econ. Surv. 2021, 37, 76–111. [Google Scholar] [CrossRef]

- Gasparin, A.; Lukovic, S.; Alippi, C. Deep learning for time series forecasting: The electric load case. CAAI Trans. Intell. Technol. 2021, 7, 1–25. [Google Scholar] [CrossRef]

- Demirtas, H. Flexible Imputation of Missing Data. J. Stat. Softw. 2018, 85, 1–5. [Google Scholar] [CrossRef]

- Tyler, D.E. Robust Statistics: Theory and Methods. J. Am. Stat. Assoc. 2008, 103, 888–889. [Google Scholar] [CrossRef]

- Pruengkarn, R.; Wong, K.W.; Fung, C.C. A Review of Data Mining Techniques and Applications. J. Adv. Comput. Intell. Intell. Inform. 2017, 21, 31–48. [Google Scholar] [CrossRef]

- Shumway, R.H.; Stoffer, D.S. Time Series Analysis and Its Applications: With R Examples; Springer: Cham, Switzerland, 2017. [Google Scholar] [CrossRef]

- Zhang, G.; Patuwo, B.E.; Hu, M.Y. Forecasting with artificial neural networks: The state of the art. Int. J. Forecast. 1998, 14, 35–62. [Google Scholar] [CrossRef]

- Taylor, J.W.; Buizza, R. Density forecasting for weather derivative pricing. Int. J. Forecast. 2006, 22, 29–42. [Google Scholar] [CrossRef]

- Guerard, J.B.; Clemen, R.T. Collinearity and the use of latent root regression for combining GNP forecasts. J. Forecast. 1989, 8, 231–238. [Google Scholar] [CrossRef]

- Hyndman, R.J.; Athanasopoulos, G. Forecasting: Principles and Practice; Otexts: Hoboken, NJ, USA, 2018. [Google Scholar]

- Box, G. Box and Jenkins: Time Series Analysis, Forecasting and Control; Palgrave Macmillan: London, UK, 2013; pp. 161–215. [Google Scholar] [CrossRef]

- Lutkepohl, H. New Introduction to Multiple Time Series Analysis, 1st ed.; Springer: Berlin, Germany, 2005. [Google Scholar]

- Scott, S.L.; Varian, H.R. Predicting the present with Bayesian structural time series. Int. J. Math. Model. Numer. Optim. 2014, 5, 4. [Google Scholar] [CrossRef]

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106. [Google Scholar] [CrossRef]

- Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22. [Google Scholar]

- De Gooijer, J.G.; Hyndman, R.J. 25 years of time series forecasting. Int. J. Forecast. 2006, 22, 443–473. [Google Scholar] [CrossRef]

- Cho, K.; van Merrienboer, B.; Bahdanau, D.; Bengio, Y. On the Properties of Neural Machine Translation: Encoder-Decoder Approaches. arXiv 2014, arXiv:1409.1259. [Google Scholar] [CrossRef]

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Lim, B.; Zohren, S. Time-series forecasting with deep learning: A survey. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2021, 379, 20200209. [Google Scholar] [CrossRef]

- Brockwell, P.J.; Davis, R.A. (Eds.) State-space models. In Introduction to Time Series and Forecasting; Springer: New York, NY, USA, 2002; pp. 259–316. [Google Scholar] [CrossRef]

- Pankratz, A. Forecasting with Univariate Box-Jenkins Models; Wiley: Hoboken, NJ, USA, 1983. [Google Scholar]

- Tsay, R.S. Analysis of Financial Time Series, 2nd ed.; Wiley Series in Probability and Statistics; Wiley-Blackwell: Chichester, UK, 2005. [Google Scholar]

- Chen, P.; Pedersen, T.; Bak-Jensen, B.; Chen, Z. ARIMA-Based Time Series Model of Stochastic Wind Power Generation. IEEE Trans. Power Syst. 2010, 25, 667–676. [Google Scholar] [CrossRef]

- Akaike, H. A new look at the statistical model identification. IEEE Trans. Autom. Control 1974, 19, 716–723. [Google Scholar] [CrossRef]

- Schwarz, G. Estimating the dimension of a model. Ann. Stat. 1978, 6, 461–464. [Google Scholar] [CrossRef]

- Anderson, D.; Burnham, K. Model Selection and Multimodel Inference; Springer: New York, NY, USA, 2004. [Google Scholar] [CrossRef]

- Yamak, P.T.; Yujian, L.; Gadosey, P.K. A Comparison between ARIMA, LSTM, and GRU for Time Series Forecasting. In Proceedings of the 2019 2nd International Conference on Algorithms, Computing and Artificial Intelligence. ACM, ACAI 2019, Sanya, China, 20–22 December 2019. [Google Scholar] [CrossRef]

- Hayes, A. Autoregressive Integrated Moving Average (ARIMA) Prediction Model. 2023. Available online: https://www.investopedia.com/terms/a/autoregressive-integrated-moving-average-arima.asp (accessed on 20 September 2023).

- Çınar, Z.M.; Abdussalam Nuhu, A.; Zeeshan, Q.; Korhan, O.; Asmael, M.; Safaei, B. Machine Learning in Predictive Maintenance towards Sustainable Smart Manufacturing in Industry 4.0. Sustainability 2020, 12, 8211. [Google Scholar] [CrossRef]

- Haykin, S.O. Neural Networks and Learning Machines, 3rd ed.; Pearson: Upper Saddle River, NJ, USA, 2008. [Google Scholar]

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536. [Google Scholar] [CrossRef]

- Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Chen, B.J.; Chang, M.W.; Lin, C.J. Load forecasting using support vector Machines: A study on EUNITE competition 2001. IEEE Trans. Power Syst. 2004, 19, 1821–1830. [Google Scholar] [CrossRef]

- Elman, J.L. Finding Structure in Time. Cogn. Sci. 1990, 14, 179–211. [Google Scholar] [CrossRef]

- Mandic, D.P.; Chambers, J.A. Recurrent Neural Networks for Prediction; Wiley: Hoboken, NJ, USA, 2001. [Google Scholar] [CrossRef]

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks. arXiv 2014, arXiv:1409.3215. [Google Scholar] [CrossRef]

- Cho, K.; van Merrienboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. arXiv 2014, arXiv:1406.1078. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762. [Google Scholar] [CrossRef]

- Ni, Z.; Zhang, C.; Karlsson, M.; Gong, S. A study of deep learning-based multi-horizon building energy forecasting. Energy Build. 2024, 303, 113810. [Google Scholar] [CrossRef]

- Kumbala, B.R. Predictive Maintenance of NOx Sensor Using Deep Learning: Time Series Prediction with Encoder-Decoder LSTM. Master's Thesis, Blekinge Institute of Technology, Karlskrona, Sweden, 2019. [Google Scholar]

- Wang, Y.; Li, T.; Lu, W.; Cao, Q. Attention-inspired RNN Encoder-Decoder for Sensory Time Series Forecasting. Procedia Comput. Sci. 2022, 209, 103–111. [Google Scholar] [CrossRef]

- Song, W.; Chandramitasari, W.; Weng, W.; Fujimura, S. Short-Term Electricity Consumption Forecasting Based on the Attentive Encoder-Decoder Model. IEEJ Trans. Electron. Inf. Syst. 2020, 140, 846–855. [Google Scholar] [CrossRef]

- Guo, J.; Lin, P.; Zhang, L.; Pan, Y.; Xiao, Z. Dynamic adaptive encoder-decoder deep learning networks for multivariate time series forecasting of building energy consumption. Appl. Energy 2023, 350, 121803. [Google Scholar] [CrossRef]

- Cho, Y.; Go, B.G.; Sung, J.H.; Cho, Y.S. Double Encoder-Decoder Model for Improving the Accuracy of the Electricity Consumption Prediction in Manufacturing. KIPS Trans. Softw. Data Eng. 2020, 9, 419–430. [Google Scholar] [CrossRef]

- Laubscher, R. Time-series forecasting of coal-fired power plant reheater metal temperatures using encoder-decoder recurrent neural networks. Energy 2019, 189, 116187. [Google Scholar] [CrossRef]

- Vapnik, V.; Chapelle, O. Bounds on Error Expectation for Support Vector Machines. Neural Comput. 2000, 12, 2013–2036. [Google Scholar] [CrossRef] [PubMed]

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Jang, J.S.; Sun, C.T. Neuro-fuzzy modeling and control. Proc. IEEE 1995, 83, 378–406. [Google Scholar] [CrossRef]

- Yager, R.R.; Filev, D.P. Essentials of Fuzzy Modeling and Control; Wiley: New York, NY, USA, 1994. [Google Scholar]

- Tajmouati, S.; Wahbi, B.E.; Bedoui, A.; Abarda, A.; Dakkoun, M. Applying k-nearest neighbors to time series forecasting: Two new approaches. arXiv 2021, arXiv:2103.14200. [Google Scholar] [CrossRef]

- Mahaseth, R.; Kumar, N.; Aggarwal, A.; Tayal, A.; Kumar, A.; Gupta, R. Short term wind power forecasting using k-nearest neighbour (KNN). J. Inf. Optim. Sci. 2022, 43, 251–259. [Google Scholar] [CrossRef]

- Arroyo, J.; Espínola, R.; Maté, C. Different Approaches to Forecast Interval Time Series: A Comparison in Finance. Comput. Econ. 2010, 37, 169–191. [Google Scholar] [CrossRef]

- Al-Garadi, M.A.; Mohamed, A.; Al-Ali, A.K.; Du, X.; Ali, I.; Guizani, M. A Survey of Machine and Deep Learning Methods for Internet of Things (IoT) Security. IEEE Commun. Surv. Tutorials 2020, 22, 1646–1685. [Google Scholar] [CrossRef]

- Borovykh, A.; Bohte, S.; Oosterlee, C.W. Conditional Time Series Forecasting with Convolutional Neural Networks. arXiv 2017, arXiv:1703.04691. [Google Scholar] [CrossRef]

- Schlegl, T.; Seeböck, P.; Waldstein, S.M.; Schmidt-Erfurth, U.; Langs, G. Unsupervised Anomaly Detection with Generative Adversarial Networks to Guide Marker Discovery. In Information Processing in Medical Imaging; Springer International Publishing: Cham, Switzerland, 2017; pp. 146–157. [Google Scholar] [CrossRef]

- Gui, T.; Zhang, Q.; Zhao, L.; Lin, Y.; Peng, M.; Gong, J.; Huang, X. Long Short-Term Memory with Dynamic Skip Connections. Proc. AAAI Conf. Artif. Intell. 2019, 33, 6481–6488. [Google Scholar] [CrossRef]

- Mandal, P.; Senjyu, T.; Urasaki, N.; Funabashi, T. A neural network based several-hour-ahead electric load forecasting using similar days approach. Int. J. Electr. Power Energy Syst. 2006, 28, 367–373. [Google Scholar] [CrossRef]

- Zhang, G.; Qi, M. Neural network forecasting for seasonal and trend time series. Eur. J. Oper. Res. 2005, 160, 501–514. [Google Scholar] [CrossRef]

- Kamarianakis, Y.; Shen, W.; Wynter, L. Real-time road traffic forecasting using regime-switching space-time models and adaptive LASSO. Appl. Stoch. Model. Bus. Ind. 2012, 28, 297–315. [Google Scholar] [CrossRef]

- Baruah, P.; Chinnam, R.B. HMMs for diagnostics and prognostics in machining processes. Int. J. Prod. Res. 2005, 43, 1275–1293. [Google Scholar] [CrossRef]

- Wahid, A.; Breslin, J.G.; Intizar, M.A. Prediction of Machine Failure in Industry 4.0: A Hybrid CNN-LSTM Framework. Appl. Sci. 2022, 12, 4221. [Google Scholar] [CrossRef]

- Liu, C.; Zhu, H.; Tang, D.; Nie, Q.; Li, S.; Zhang, Y.; Liu, X. A transfer learning CNN-LSTM network-based production progress prediction approach in IIoT-enabled manufacturing. Int. J. Prod. Res. 2022, 61, 4045–4068. [Google Scholar] [CrossRef]

- Canizo, M.; Triguero, I.; Conde, A.; Onieva, E. Multi-head CNN–RNN for multi-time series anomaly detection: An industrial case study. Neurocomputing 2019, 363, 246–260. [Google Scholar] [CrossRef]

- Zhang, X.; Lei, Y.; Chen, H.; Zhang, L.; Zhou, Y. Multivariate Time-Series Modeling for Forecasting Sintering Temperature in Rotary Kilns Using DCGNet. IEEE Trans. Ind. Inform. 2021, 17, 4635–4645. [Google Scholar] [CrossRef]

- Gardner, E.S. Exponential smoothing: The state of the art. J. Forecast. 1985, 4, 1–28. [Google Scholar] [CrossRef]

- Sapankevych, N.I.; Sankar, R. Time Series Prediction Using Support Vector Machines: A Survey. IEEE Comput. Intell. Mag. 2009, 4, 24–38. [Google Scholar] [CrossRef]

- Ghayvat, H.; Pandya, S.; Patel, A. Deep Learning Model for Acoustics Signal Based Preventive Healthcare Monitoring and Activity of Daily Living. In Proceedings of the 2nd International Conference on Data, Engineering and Applications (IDEA), Bhopal, India, 28–29 February 2020; pp. 1–7. [Google Scholar] [CrossRef]

| Hybrid Models | Novelty |

|---|---|

| ARIMA + ANN | ARIMA captures the periodic and linear components of the training data, and the ANN captures the non-linear features. Combining the two covers both linear and non-linear structure, which has proven more useful for complex datasets. |

| Dynamic model + Fuzzy | The dynamic model captures the temporal features of the dataset and, when it incorporates AR components, the linear dependencies as well. The fuzzy model captures the uncertainty in the dataset. This combination is robust to changing patterns in the underlying data. |

| ANN + Evolutionary | ANN architectures such as RNN and LSTM capture the complex patterns and dependencies in the data. The evolutionary algorithm optimizes the hyperparameters of the underlying ANN. This combination can improve the ANN's forecasting accuracy and reduce overfitting. |

| ARIMA + SVM | ARIMA is effective at capturing the linear features in the data along with seasonality patterns. SVM captures the non-linear features in the residuals that are missed by ARIMA. |

| Ensemble + hybrid ANNs | The Ensemble ANN model combines the forecasts from multiple ANN models, which results in better accuracy. It is particularly useful for capturing the non-linear features in the training data. |

| Kernel-based SVRs | The kernel function allows SVR to perform non-linear regression, and using only a subset of the training data as support vectors reduces the risk of overfitting. SVR also follows Vapnik’s structural risk minimization principle, which reduces the overall error by minimizing an upper bound on the generalization error. |

| EMD + AR + SVR | SVR hybridized with AR and different empirical mode decomposition (EMD) methods. |

| Fuzzy Neuro | Fuzzy logic incorporates the uncertainty in the data, while the neural network is able to capture the non-linear relationships in the data. It is particularly useful when the dataset is complex and an understanding of the underlying processes is as important as the accuracy of the forecasts. |

| Fuzzy + SVM + Evolutionary | Fuzzy logic handles data uncertainty, and the pattern recognition ability of SVM is used for accurate forecasting. The evolutionary algorithm provides global optimization to fine-tune the system. It is very useful in cases where the data can be highly volatile and ambiguous. |
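
The residual-based hybrids in the table (ARIMA + ANN, ARIMA + SVM) share one pattern: a linear model is fitted first, and a non-linear model is then fitted to its residuals. The following is a minimal sketch of that pattern only, not the implementation used in any of the cited studies. To stay dependency-free it makes two loudly stated substitutions: a least-squares AR(p) model stands in for ARIMA, and a k-nearest-neighbour regressor stands in for the ANN or SVM stage. The function names (`lag_matrix`, `fit_ar`, `knn_predict`, `hybrid_one_step`), the lag orders, and the synthetic series are all illustrative assumptions.

```python
import numpy as np


def lag_matrix(series, p):
    """Rows are [y[t-1], ..., y[t-p]] for t = p .. len(series) - 1."""
    n = len(series)
    return np.column_stack([series[p - k : n - k] for k in range(1, p + 1)])


def fit_ar(series, p):
    """Least-squares AR(p) with intercept (assumed stand-in for the ARIMA stage)."""
    X = np.column_stack([np.ones(len(series) - p), lag_matrix(series, p)])
    y = series[p:]
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef, y - X @ coef  # coefficients and in-sample residuals


def knn_predict(train_X, train_y, query, k):
    """Average the targets of the k nearest training rows (Euclidean distance).

    Assumed stand-in for the non-linear ANN/SVM stage.
    """
    dist = np.linalg.norm(train_X - query, axis=1)
    nearest = np.argsort(dist)[:k]
    return train_y[nearest].mean()


def hybrid_one_step(series, p=2, q=2, k=5):
    """One-step-ahead forecast: linear part plus a non-linear residual correction."""
    series = np.asarray(series, dtype=float)
    coef, resid = fit_ar(series, p)
    # Linear forecast from the last p observations, most recent first.
    linear = coef[0] + coef[1:] @ series[-1 : -p - 1 : -1]
    # Non-linear stage: predict the next residual from the last q residuals.
    R = lag_matrix(resid, q)
    correction = knn_predict(R, resid[q:], resid[-1 : -q - 1 : -1], k)
    return linear, linear + correction


# Synthetic series with a linear-looking oscillation plus a non-linear term.
rng = np.random.default_rng(0)
t = np.arange(200)
y = np.sin(0.2 * t) + 0.3 * np.sin(0.05 * t) ** 2 + 0.05 * rng.standard_normal(200)

linear_only, hybrid = hybrid_one_step(y, p=3, q=3, k=5)
print(f"AR-only forecast: {linear_only:.3f}, hybrid forecast: {hybrid:.3f}")
```

Whether the residual correction helps depends on how much structure the linear stage leaves behind; on a series that the linear model already fits exactly, the correction is close to zero and the two forecasts coincide.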

| Model | Advantages | Disadvantages |

|---|---|---|

| ARIMA | Handles linear relationships; can model stationary time-series data | Requires data to be stationary; struggles with non-linear patterns |

| ANN | Can model complex non-linear relationships; flexible architecture | Requires large datasets; black box nature |

| RNN | Can capture sequences and patterns over time; suitable for time-series data | Can struggle with long-term dependencies |

| LSTM | Addresses vanishing gradient problem of RNNs; can model long-term dependencies | Computationally intensive; requires careful tuning |

| KNN | Non-parametric; simple to understand | Computationally expensive; sensitive to irrelevant features |

| Fuzzy Networks | Can handle uncertainty and vagueness; flexible modeling | Requires expert knowledge; can be computationally intensive |

| Regression Models | Interpretable; can handle multiple predictors | Assumes linear relationship; sensitive to outliers |

| Prophet | Handles seasonality and holidays; robust to missing data | Assumes additive model; might require domain-specific adjustments |

| MeanAbsolute | Simple to compute; provides a baseline measure | Does not capture complex patterns |
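
The stationarity requirement listed for ARIMA is usually addressed by differencing, which is the "integrated" part of the model. As a minimal sketch under stated assumptions, the snippet below differences a trending series and then inverts the operation so that forecasts made on the differenced scale can be mapped back. The helper names `difference` and `undifference` and the synthetic data are hypothetical and serve illustration only.

```python
import numpy as np


def difference(series, d=1):
    """Apply d rounds of first differencing.

    Also returns the leading value dropped at each round, which is
    needed to undo the operation later.
    """
    out = np.asarray(series, dtype=float)
    heads = []
    for _ in range(d):
        heads.append(out[0])
        out = np.diff(out)
    return out, heads


def undifference(diffed, heads):
    """Invert `difference` by cumulative summation, innermost round first."""
    out = np.asarray(diffed, dtype=float)
    for head in reversed(heads):
        out = np.concatenate([[head], head + np.cumsum(out)])
    return out


# A series with a linear trend is not stationary in the mean.
rng = np.random.default_rng(1)
t = np.arange(100)
y = 0.5 * t + rng.standard_normal(100)

dy, heads = difference(y, d=1)
print("mean of first half / second half before:", y[:50].mean(), y[50:].mean())
print("mean of first half / second half after: ", dy[:49].mean(), dy[49:].mean())

# Round trip: differencing followed by its inverse recovers the original.
print("round trip ok:", np.allclose(undifference(dy, heads), y))
```

One round of differencing removes a linear trend; a quadratic trend would need `d=2`. Differencing does not by itself address the second limitation in the table, namely non-linear patterns.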

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fatima, S.S.W.; Rahimi, A. A Review of Time-Series Forecasting Algorithms for Industrial Manufacturing Systems. Machines 2024, 12, 380. https://doi.org/10.3390/machines12060380
