VR Co-Lab: A Virtual Reality Platform for Human–Robot Disassembly Training and Synthetic Data Generation

Abstract

1. Introduction

- Development of an integrated VR-based training environment that combines full-body tracking using the Quest Pro HMD with a real-time ROS–Unity bridge, enabling precise human motion capture and dynamic control of robotic models.

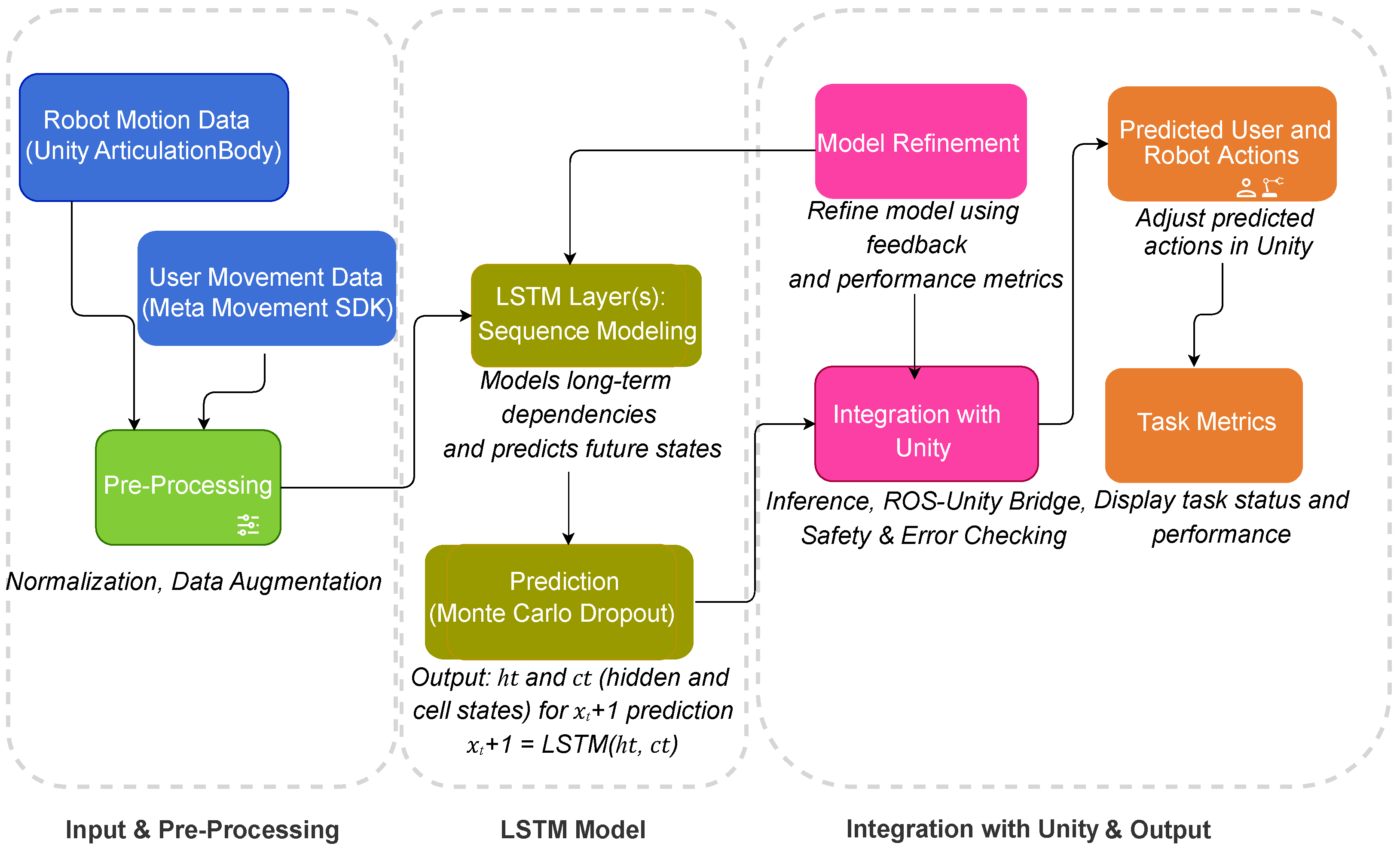

- Implementation of an LSTM-based motion prediction model with Monte Carlo dropout for uncertainty estimation, which continuously refines robotic motion trajectories by drawing on past interaction data for real-time task adaptation. The model runs in Unity’s Barracuda engine, which avoids the latency of an external inference server and keeps interactions responsive. This builds upon the work of Liu et al. [13] and extends it to immersive VR environments with real-time feedback loops.

- Creation of a synthetic data generation pipeline that captures complex human–robot interactions to refine disassembly sequence planning and optimize collaborative task execution. Our approach specifically targets human–robot collaboration in disassembly tasks, addressing a critical gap in the literature identified by Jacob et al. [14].

2. Related Work

2.1. VR/AR Training Systems for Industrial Applications

2.2. Human Motion Prediction in HRC

2.3. Inverse and Forward Kinematics in Robotic Training

2.4. Digital Twins and Industry 4.0 Integration

2.5. Comparison with Existing Approaches

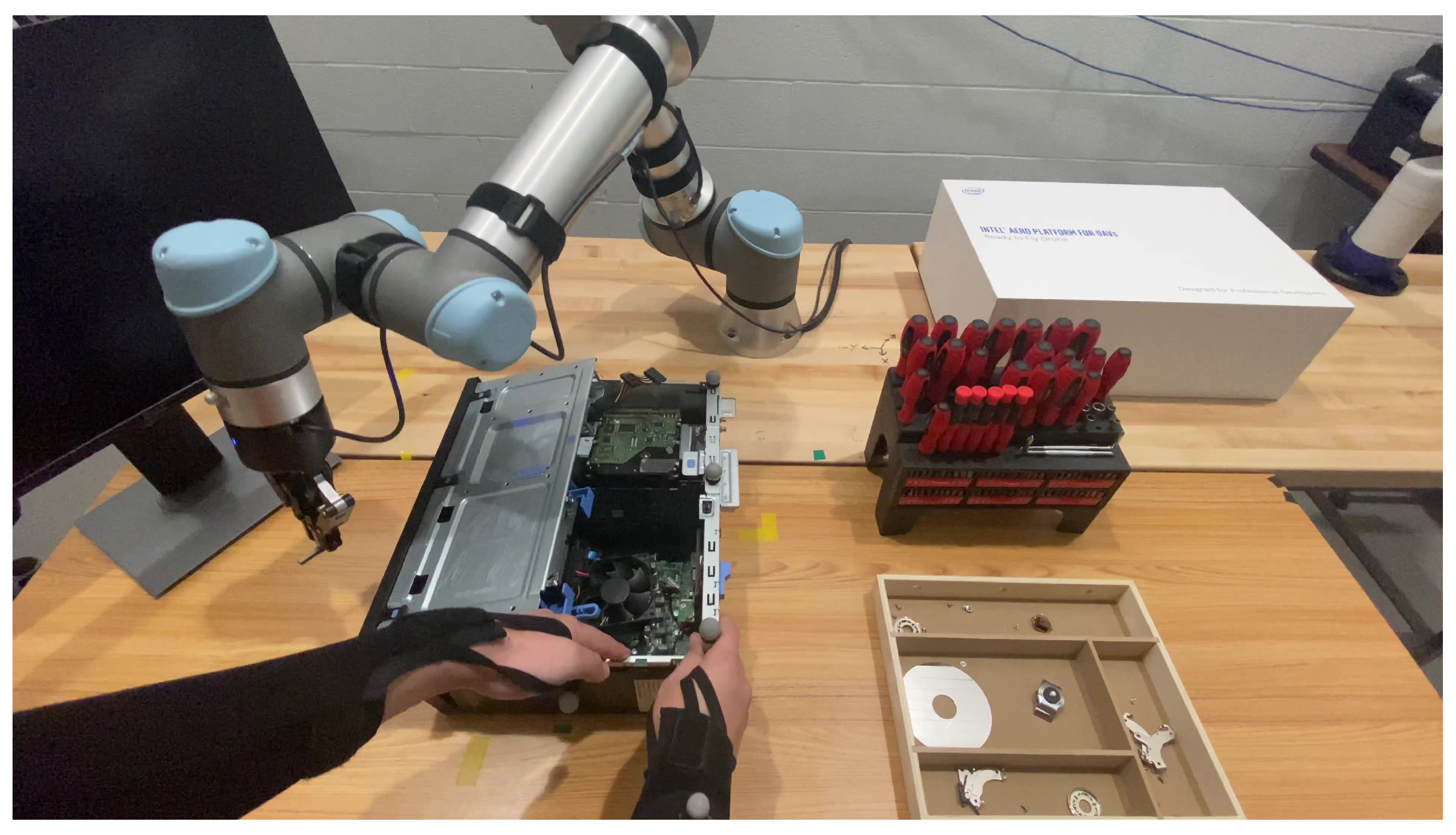

3. VR Training System Implementation

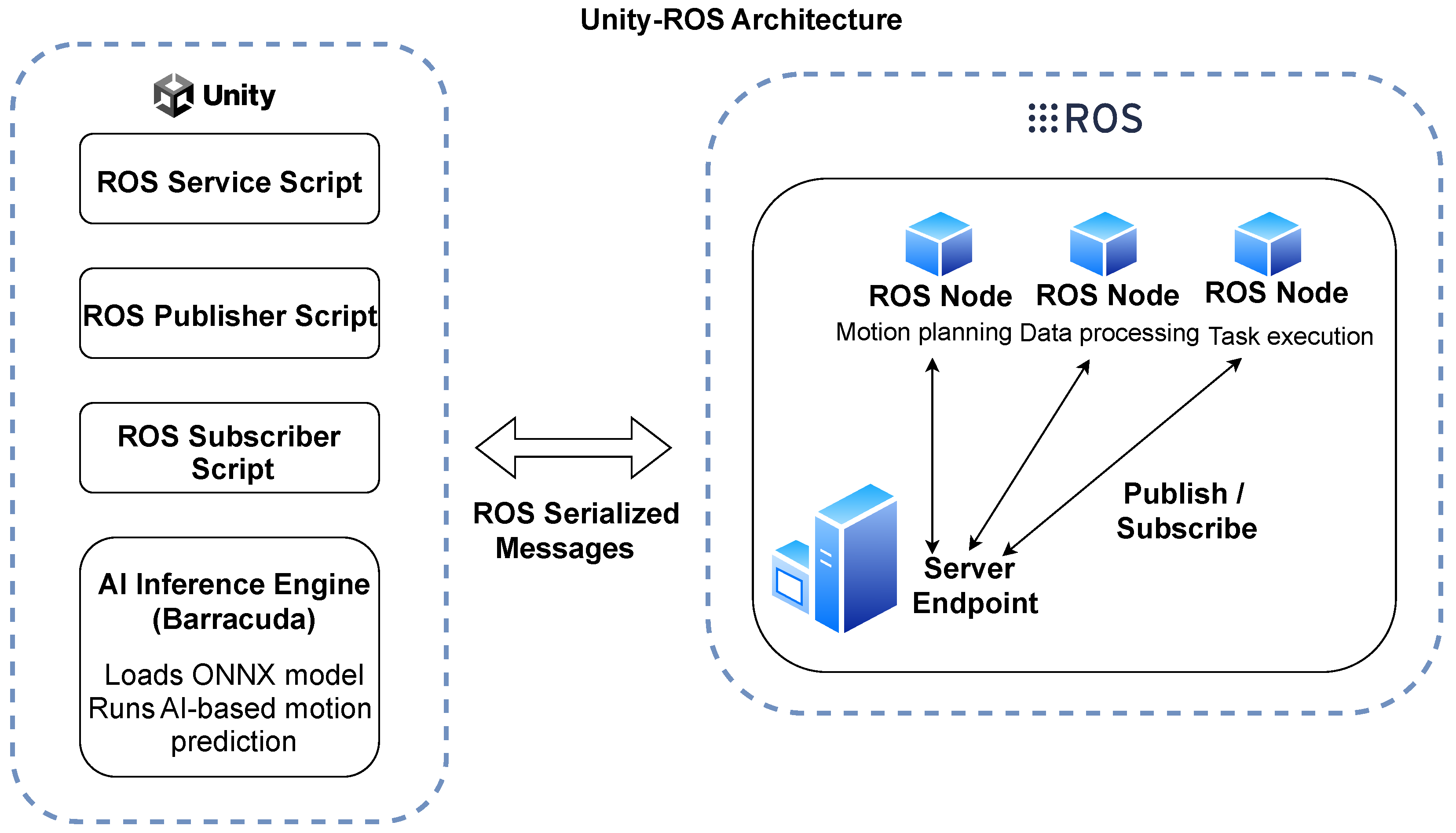

3.1. Technology Stack

3.2. VR Training System Architecture

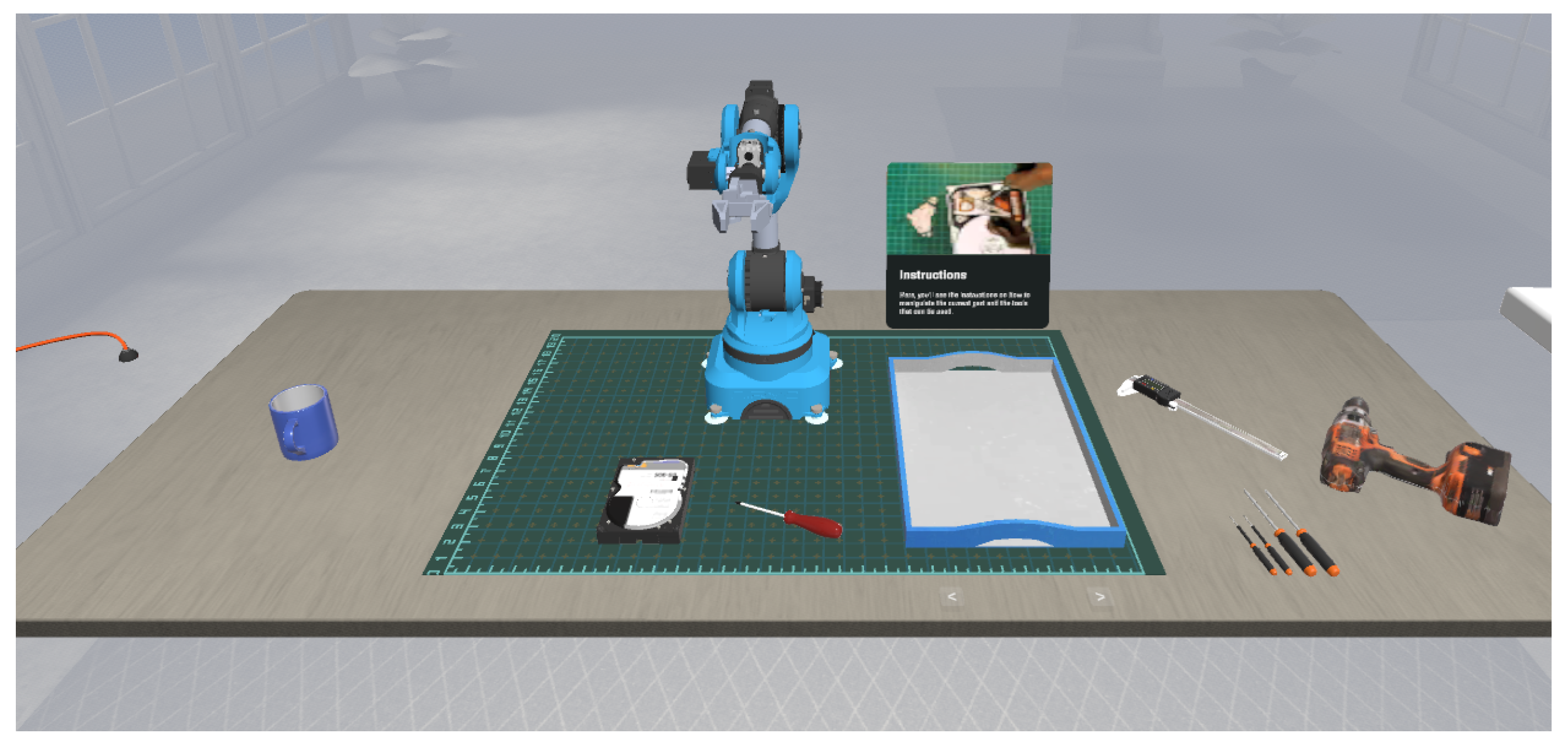

3.2.1. VR Environment

3.2.2. Robotic Control System

- Loads a pre-trained ONNX LSTM model optimized for human motion forecasting.

- Processes real-time user movement data to anticipate the next actions.

- Feeds the predicted motion trajectory into the ROS network for adaptive robot response.

- Enables low-latency inference directly in Unity’s runtime, reducing dependence on external servers.

- Supports GPU acceleration when available, improving the efficiency of AI inference in VR.

- Adaptive task scheduling, reducing wait times in collaborative workflows.

- Enhanced safety mechanisms, as the robot can proactively adjust to user movements.

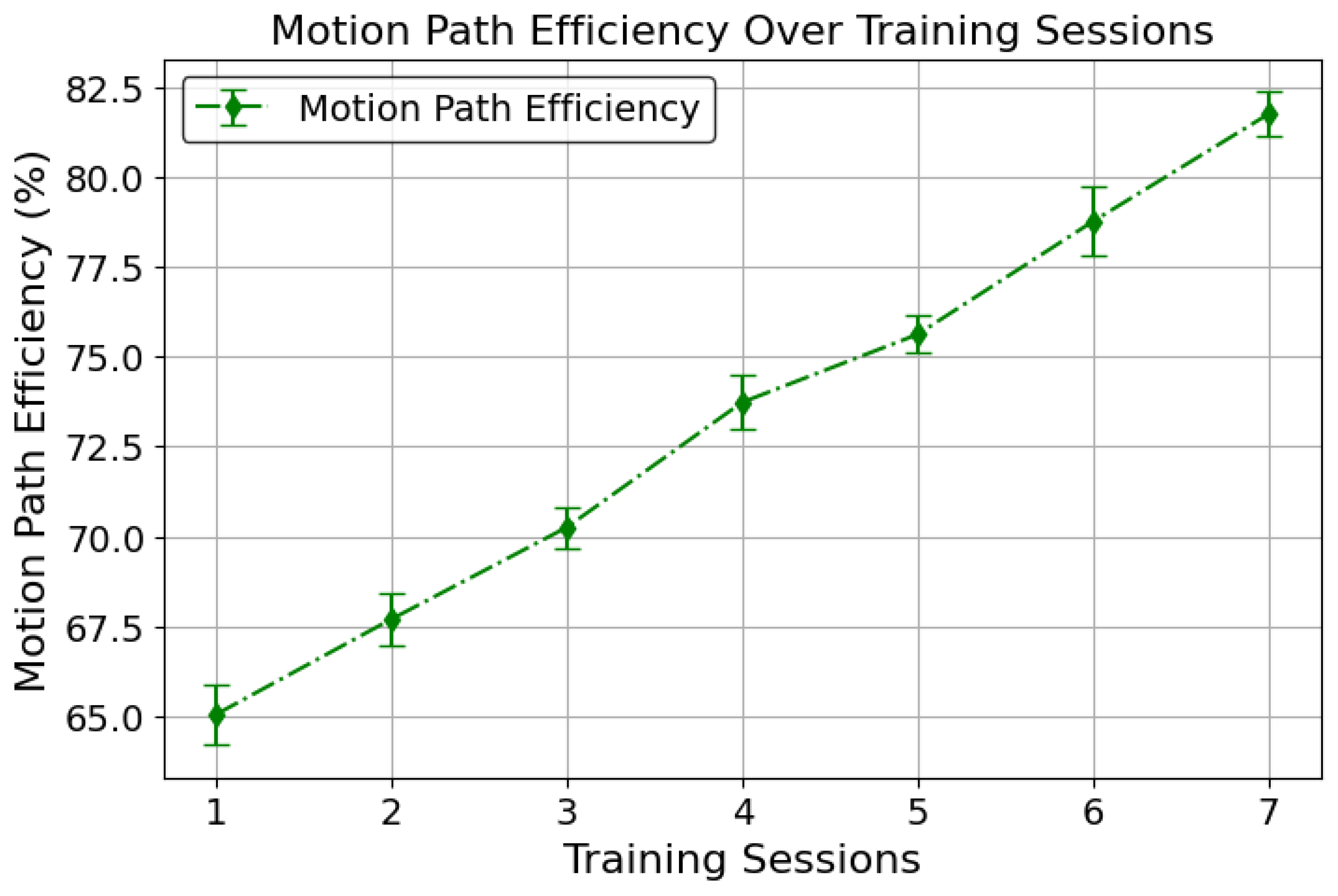

- Increased task efficiency, with optimized movement paths informed by AI predictions.

3.2.3. Data Collection and Feedback Mechanisms

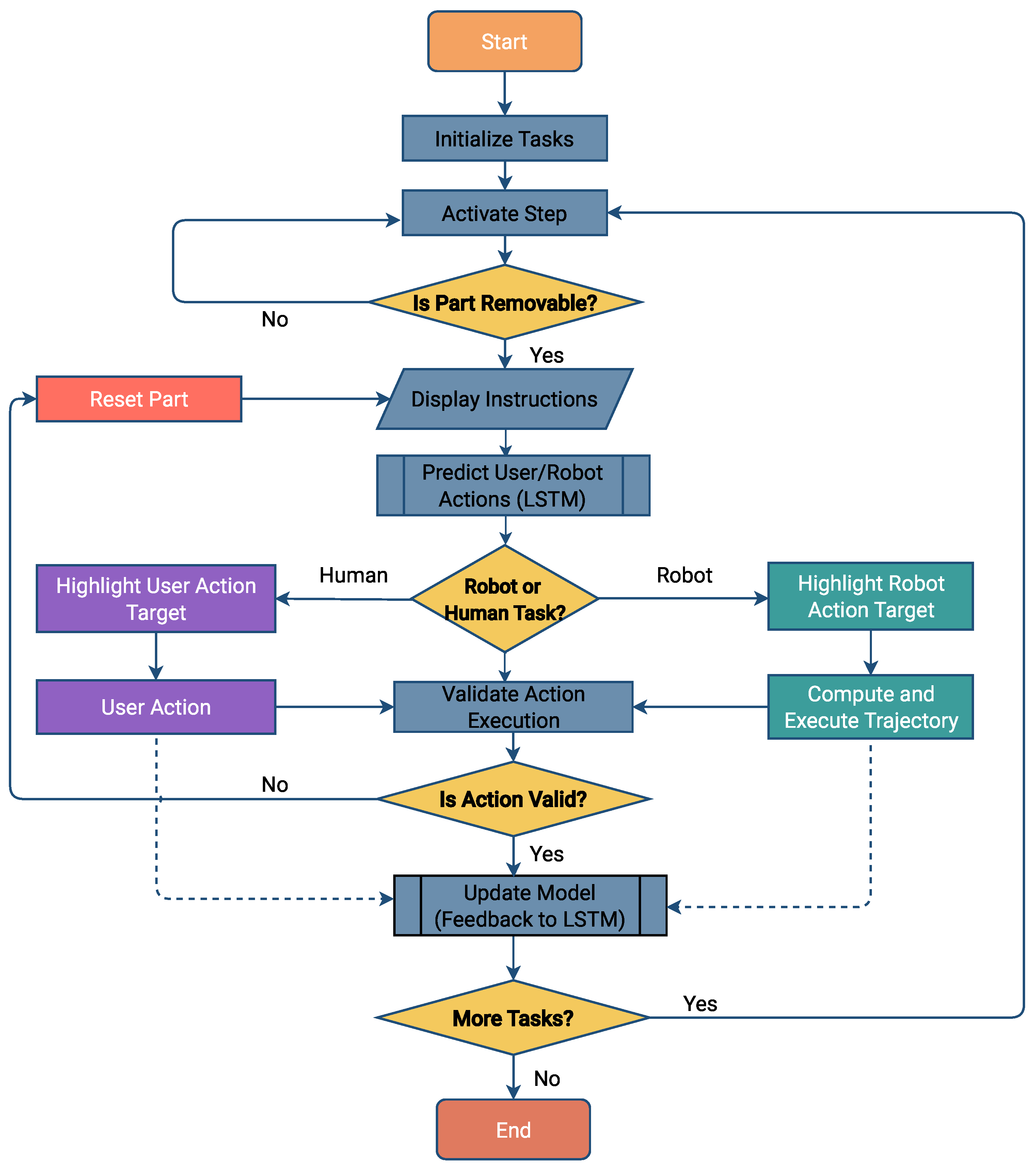

3.2.4. Task Flow and User Actions

- Initialize tasks: the VR environment is set up with all necessary tools, components, and the initial system configuration.

- Activate step: the system prompts the user to begin the task, initiating the first step in the disassembly process.

- Is part removable?: the system checks whether the targeted part can be safely removed. If not, the part is reset, and the process loops back to prompt the user again.

- Display instructions: the VR system provides detailed instructions specific to the current step in the task, guiding the user through the disassembly process.

- Predict user/robot actions (LSTM): the LSTM model predicts both the user’s and the robot’s next actions based on the current task state and previous interactions.

- Human or robot task?: the system determines whether the predicted action should be executed by the user (human task) or by the robot (robot task).

- Apply shader and execute actions:

- Human task: a purple shader highlights the part that the user needs to interact with, and the system waits for the user’s action.

- Robot task: a green shader is applied to the part to be manipulated by the robot, followed by the computation and execution of the robot’s trajectory.

- Validate action execution: the system validates whether the action performed by the user or robot was correct and executed as planned.

- Update model (feedback to LSTM): the results of the action validation are fed back into the LSTM model to update its predictions and improve future interactions.

- Is action valid?: the system checks whether the action taken was valid. If not, it resets the part and loops back to display instructions.

- More tasks?: the system checks whether there are additional tasks to be performed. If yes, it returns to the “Activate Step” phase; if not, it proceeds to calculate the final metrics.

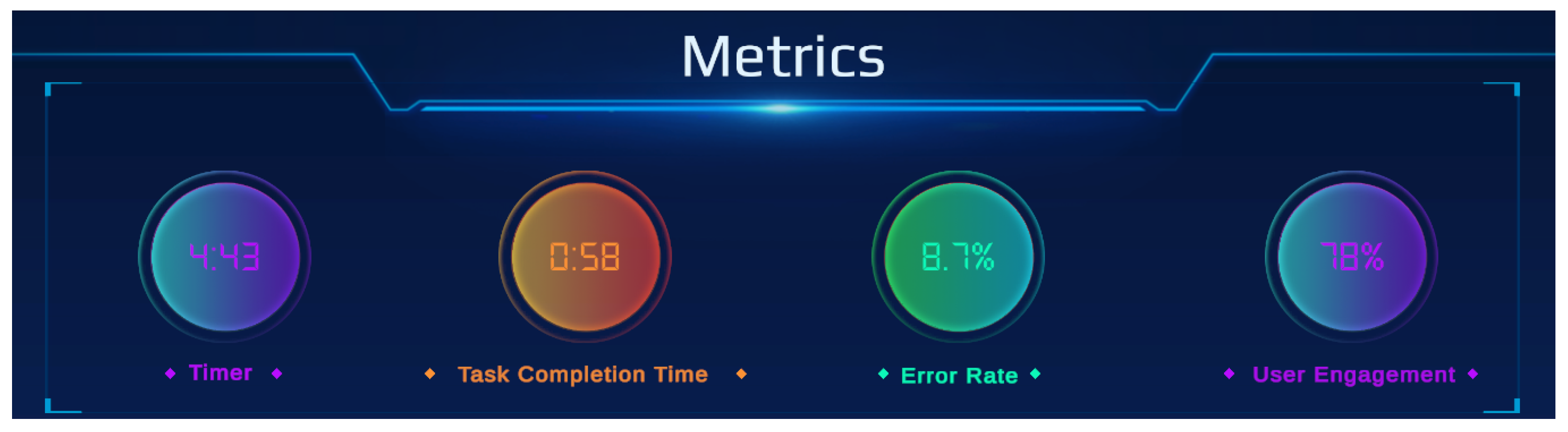

- Metrics calculation: at the end of the session, the system computes and displays performance metrics, providing immediate feedback to the user on the effectiveness and efficiency of their interactions.
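The control flow described by these steps can be sketched as a simple loop. The Python below is an illustrative sketch only: the `Step` fields, the `assign` and `execute` callbacks, the part names, and the retry cap are hypothetical stand-ins for the LSTM-based allocation, shader logic, and validation used in the system, and the feedback update to the LSTM is not modelled.

```python
from dataclasses import dataclass

# Highlight colours taken from the task flow: purple for human, green for robot.
SHADER = {"human": "purple", "robot": "green"}

@dataclass
class Step:
    part: str
    removable: bool = True  # outcome of the "Is part removable?" check

def run_session(steps, assign, execute, max_attempts=3):
    """Run each step until its action validates or the retry cap is reached.

    assign(step) returns "human" or "robot"; execute(step, actor) returns
    True when the action validates. The retry cap is an assumption that
    keeps the sketch from looping forever.
    """
    events = []
    for step in steps:                          # "More tasks?"
        for _ in range(max_attempts):
            if not step.removable:              # reset the part and prompt again
                events.append((step.part, "reset", None, False))
                continue
            actor = assign(step)                # "Human or robot task?"
            valid = execute(step, actor)        # execute, then validate
            events.append((step.part, actor, SHADER[actor], valid))
            if valid:
                break                           # move on to the next step
    return events

# Hypothetical two-step session: the first human action fails once.
outcomes = iter([False, True, True])
events = run_session(
    [Step("cover"), Step("platter")],
    assign=lambda s: "robot" if s.part == "platter" else "human",
    execute=lambda s, a: next(outcomes),
)
```

The returned event list is the kind of record from which end-of-session metrics, such as the share of invalid actions, could be computed.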

3.3. Body Tracking and Interaction Through Meta SDKs

3.4. Performance Monitoring and Synthetic Data Generation

3.4.1. Performance Metrics

Synthetic Data for Machine Learning

3.5. Mathematical Foundations and Implementation of Forward Kinematics

- $a_i$: link length, or the distance between two joint axes along the x-axis.

- $\alpha_i$: link twist, or the angle between two joint axes around the x-axis.

- $d_i$: link offset, or the displacement along the z-axis.

- $\theta_i$: joint angle, or the rotation about the z-axis.
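For reference, writing $a_i$ for the link length, $\alpha_i$ for the link twist, $d_i$ for the link offset, and $\theta_i$ for the joint angle, the four parameters define the homogeneous transform between consecutive link frames. The form below is the standard textbook matrix for the classic Denavit–Hartenberg convention [50]; that the system uses the classic rather than the modified convention is an assumption, and the frame index $i$ is introduced here for illustration.

```latex
\[
T_i^{\,i-1} =
\begin{bmatrix}
\cos\theta_i & -\sin\theta_i\cos\alpha_i & \sin\theta_i\sin\alpha_i & a_i\cos\theta_i \\
\sin\theta_i & \cos\theta_i\cos\alpha_i & -\cos\theta_i\sin\alpha_i & a_i\sin\theta_i \\
0 & \sin\alpha_i & \cos\alpha_i & d_i \\
0 & 0 & 0 & 1
\end{bmatrix}
\]
```

The upper-left $3 \times 3$ block is the rotation between frames and the last column is the translation.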

3.5.1. Implementation in Unity

- DH parameter extraction: the joint parameters, such as angles or displacements, are extracted dynamically from Unity’s articulation bodies.

- Transformation matrix calculation: each joint’s transformation matrix is computed using the Denavit–Hartenberg (DH) parameters.

- Composite transformation: the transformation matrices are multiplied sequentially to compute the end-effector’s position and orientation relative to the robot’s base frame.

- Simulation output: the computed position is used for visualization and task execution in Unity’s 3D environment.

3.5.2. Mathematical Workflow

- Input: joint parameters (joint angles $\theta_i$ or displacements $d_i$, depending on the joint type).

- Transformation matrix calculation: for each joint, the transformation matrix is computed using the DH parameter set $(a_i, \alpha_i, d_i, \theta_i)$.

- Cumulative transformation: the transformation matrices are multiplied sequentially to form the composite matrix T, representing the end-effector’s pose.

- Output: the position and orientation (rotation matrix) of the end-effector in the global frame.

- Example: Calculating the End-Effector Pose
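A minimal Python sketch of such a calculation follows, using a hypothetical two-link planar arm rather than the robot used in the system. The link lengths, joint angles, and function names are illustrative assumptions, and the classic DH matrix form is assumed.

```python
import math

def dh_matrix(a, alpha, d, theta):
    """Homogeneous transform for one joint (classic DH convention)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]

def matmul(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def forward_kinematics(dh_rows):
    """Multiply the joint transforms in order, base to end-effector."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for a, alpha, d, theta in dh_rows:
        T = matmul(T, dh_matrix(a, alpha, d, theta))
    return T

# Hypothetical arm: two unit-length links, joints at +90 and -90 degrees.
arm = [
    (1.0, 0.0, 0.0, math.pi / 2),
    (1.0, 0.0, 0.0, -math.pi / 2),
]
T = forward_kinematics(arm)
position = (T[0][3], T[1][3], T[2][3])      # approximately (1, 1, 0)
rotation = [row[:3] for row in T[:3]]       # approximately the identity
```

The first link points along the base y-axis and the second along the base x-axis, so the end-effector sits at roughly (1, 1, 0) with no net rotation, which matches the geometry of the arm.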

3.5.3. Applications in Robotic Training

3.6. Integrated Pipeline for Predictive Analytics

3.6.1. Synthetic Data Generation and Augmentation

3.6.2. Data Cleaning and Preprocessing

3.6.3. Data Augmentation

- Motion perturbations: Gaussian noise injection (0.005) combined with a small scaling factor (0.05) simulates real-world sensor noise and variations in movement execution.

- Temporal warping: slight shifts in the motion sequence timing help simulate variations in human and robotic reaction times.
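The two augmentations can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the pipeline's code: it treats 0.005 as the noise standard deviation and 0.05 as the half-width of a uniform scaling range, implements temporal warping as a small frame shift with edge padding, and uses a hypothetical `max_shift` of two frames.

```python
import random

def perturb(seq, noise_std=0.005, scale_range=0.05, rng=random):
    """Scale the whole sequence by one random factor, then add Gaussian noise.

    seq is a list of frames; each frame is a list of floats.
    """
    scale = 1.0 + rng.uniform(-scale_range, scale_range)
    return [[v * scale + rng.gauss(0.0, noise_std) for v in frame]
            for frame in seq]

def time_shift(seq, max_shift=2, rng=random):
    """Shift the sequence by a few frames, repeating the edge frame as padding."""
    shift = rng.randint(-max_shift, max_shift)
    last = len(seq) - 1
    return [seq[min(max(i - shift, 0), last)] for i in range(len(seq))]

# Hypothetical 10-frame, 2-channel motion sequence.
rng = random.Random(0)
original = [[float(i), 0.5 * i] for i in range(10)]
augmented = time_shift(perturb(original, rng=rng), rng=rng)
```

Passing a seeded `random.Random` instance keeps the augmentation reproducible, which is useful when comparing training runs.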

3.6.4. Augmented Data for Training

3.6.5. LSTM Model Architecture and Training

- User movement data captured from the Meta Movement SDK.

- Robotic motion data captured through Unity’s ArticulationBody components [51].

- $x_t$ is the input motion data at time $t$.

- $h_t$ is the updated hidden state, encoding learned temporal dependencies.

- $c_t$ is the updated cell state, retaining long-term memory across sequences.
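For readers unfamiliar with the cell update, the standard LSTM equations are given below, with $x_t$ the input at time $t$, $h_t$ the hidden state, and $c_t$ the cell state. This is the conventional textbook formulation; the weight matrices $W$, biases $b$, and gate symbols $f_t$, $i_t$, $o_t$ are notation introduced here, and the exact variant used in the trained model is not specified.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

The forget gate $f_t$ controls how much of $c_{t-1}$ is retained, which is what lets the cell state carry information across long motion sequences.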

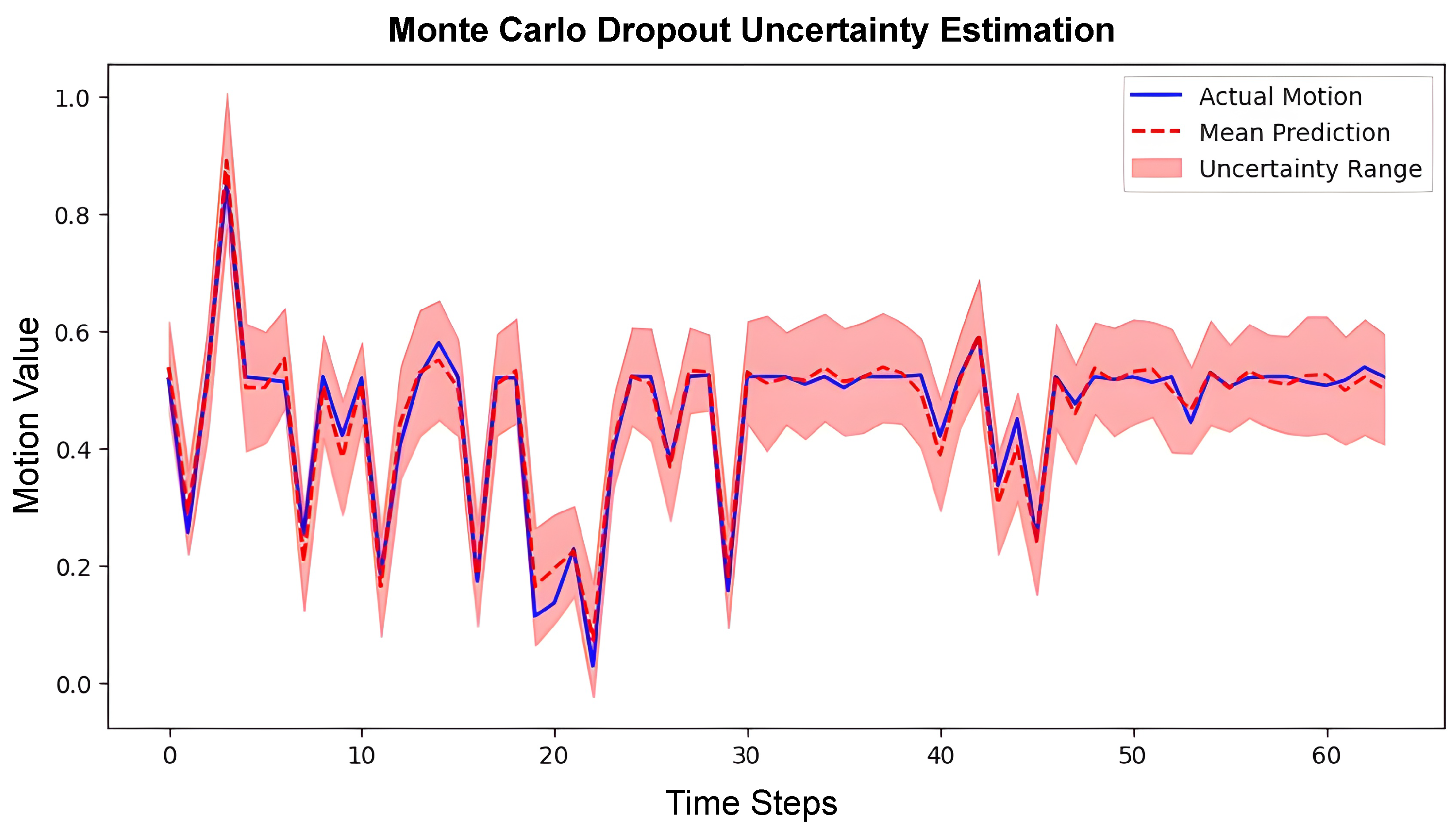

Monte Carlo Dropout for Uncertainty Estimation

Loss Function and Training

3.6.6. Model Training and Evaluation

Training Setup

- Sequence length: 50 time steps per input sequence.

- Batch size: 128.

- Optimizer: AdamW with weight decay.

- Learning rate: 0.001, decayed using a cosine annealing schedule.
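As an illustration of the learning-rate schedule, the sketch below evaluates the usual closed form of cosine annealing without restarts, starting from the stated rate of 0.001. The total step count and the minimum rate of zero are assumptions, since neither is given above.

```python
import math

def cosine_annealed_lr(step, total_steps, base_lr=0.001, min_lr=0.0):
    """Learning rate after `step` of `total_steps`, following a half cosine."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# Hypothetical 100-step run: the rate falls from 0.001 to 0 along a half cosine.
schedule = [cosine_annealed_lr(s, 100) for s in range(101)]
```

The rate changes slowly near the start and end of training and fastest in the middle, where it passes through half of the base value.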

Evaluation Metrics

- Root Mean Squared Error (RMSE): 0.0179

- Mean Absolute Error (MAE): 0.0060

- $R^2$ score: 0.4965
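For clarity on how these three metrics are defined, a minimal Python sketch using their standard formulas is shown below. The function name and the toy values are illustrative and unrelated to the reported results.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return RMSE, MAE, and the coefficient of determination (R^2)."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)              # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    return {
        "rmse": math.sqrt(ss_res / n),
        "mae": sum(abs(e) for e in errors) / n,
        "r2": 1.0 - ss_res / ss_tot,
    }

# Toy example: one prediction is off by 1.
metrics = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0])
# metrics == {"rmse": 0.5, "mae": 0.25, "r2": 0.8}
```

Because $R^2$ compares the residual error with the variance of the targets, small absolute errors can coexist with a moderate $R^2$ when the target signal itself varies little.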

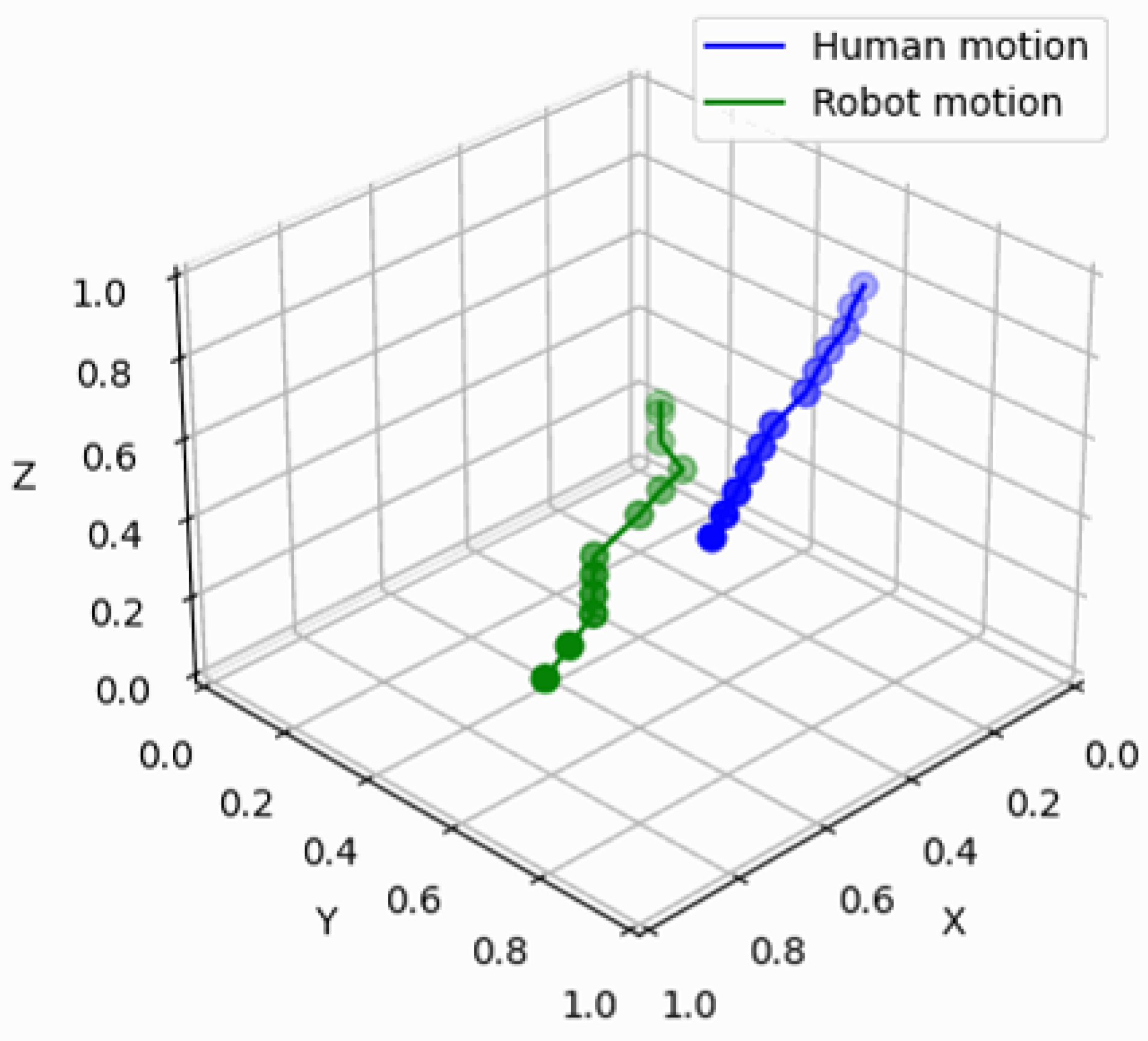

Visualization and Results

- LSTM predictions with Monte Carlo dropout: a time-series plot comparing predicted and actual motion sequences, including uncertainty estimation using Monte Carlo dropout (Figure 7).

- True vs. predicted values: scatter plot comparing predicted and actual values, demonstrating model fit (Figure 8).

- Performance metrics table: summarizing model accuracy (Table 1).

3.6.7. Docker-Based Deployment

3.6.8. Integration into Unity Environment

- The ONNX model is imported into Unity using the Unity Barracuda library, a lightweight inference engine optimized for neural networks [45].

- The ROS–Unity bridge is established using ROS#, enabling real-time communication between Unity and ROS [44].

- User and robotic motion data are streamed to the ONNX model for inference, generating movement predictions. These predictions guide robotic trajectory adjustments, optimizing collaborative task allocation in disassembly.
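One common way to stream frames to a sequence model is a rolling window that holds the most recent frames and triggers inference once it is full. The Python sketch below illustrates the idea with the 50-step sequence length from the training setup; whether the Unity integration buffers frames this way is an assumption, and `predict` is a placeholder callback rather than the Barracuda API.

```python
from collections import deque

class MotionWindow:
    """Fixed-length buffer holding the most recent motion frames."""

    def __init__(self, length=50):
        self.frames = deque(maxlen=length)   # oldest frame drops out automatically

    def push(self, frame):
        self.frames.append(list(frame))

    def ready(self):
        return len(self.frames) == self.frames.maxlen

    def as_sequence(self):
        return list(self.frames)

def stream(frames, predict, length=50):
    """Feed frames one by one and call `predict` on every full window."""
    window = MotionWindow(length)
    predictions = []
    for frame in frames:
        window.push(frame)
        if window.ready():
            predictions.append(predict(window.as_sequence()))
    return predictions

# Placeholder predictor: repeat the last observed frame (persistence baseline).
frames = [[float(i)] for i in range(60)]
predictions = stream(frames, predict=lambda seq: seq[-1])
```

With 60 input frames and a 50-frame window, the first prediction is produced at frame 50 and one more follows for each later frame.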

3.6.9. Real-Time Inference and Monte Carlo Dropout

- More reliable robotic trajectory adjustments by considering uncertainty estimates.

- Enhanced user experience by adapting training difficulty based on confidence levels in motion predictions.

- Identification of high-variance cases where additional data collection or model fine-tuning is needed.
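The principle behind these uncertainty estimates can be shown with a toy example: dropout stays active at inference time, the same input is passed through the model several times, and the spread of the outputs serves as the uncertainty. The sketch below uses a hypothetical linear model in place of the LSTM; the dropout rate, number of passes, and variance threshold are illustrative assumptions.

```python
import random
import statistics

def dropout_forward(x, weights, p=0.2, rng=random):
    """One stochastic pass of a toy linear model with inverted dropout on its inputs."""
    kept = [xi / (1.0 - p) if rng.random() >= p else 0.0 for xi in x]
    return sum(w * k for w, k in zip(weights, kept))

def mc_dropout_predict(x, weights, passes=50, p=0.2, rng=random):
    """Mean and standard deviation over repeated stochastic passes."""
    samples = [dropout_forward(x, weights, p, rng) for _ in range(passes)]
    return statistics.mean(samples), statistics.pstdev(samples)

def is_high_variance(std, threshold=0.5):
    """Flag predictions whose spread exceeds a chosen (hypothetical) threshold."""
    return std > threshold

# Hypothetical input and weights, with a seeded generator for reproducibility.
rng = random.Random(0)
mean, std = mc_dropout_predict([1.0, 2.0, 3.0], [0.5, 0.5, 0.5], rng=rng)
flagged = is_high_variance(std)
```

With dropout disabled (`p=0.0`) every pass returns the same value and the standard deviation collapses to zero, which is a quick sanity check for the sampling loop.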

3.6.10. Validation and Refinement

3.6.11. Benefits of the Integration

- Efficiency: ONNX provides fast and lightweight inference suitable for real-time VR applications [55].

- Cross-platform compatibility: the ONNX format enables deployment across different devices and hardware configurations [55].

- Reproducibility: encapsulation in a Docker container ensures that all components (ROS, Unity, and model dependencies) remain consistent across various development and deployment environments [58].

- Uncertainty-aware predictions: MC dropout improves prediction reliability by incorporating uncertainty into robotic motion planning.

Deployment and Future Considerations

4. VR Training and Discussion

4.1. Hard Disk Disassembly Task

4.2. Training Setup

4.3. Demonstration of a Short Disassembly Task

4.4. Discussion

4.4.1. Training Performance Analysis

- Timer: displays the session duration in minutes.

- Task completion time: shows the time to complete the last disassembly step.

- Error rate: tracks the percentage of incorrect actions performed by the user.

- User engagement: represents an engagement score based on interaction frequency and consistency.
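The first three indicators follow directly from logged timestamps and validation outcomes, as the Python sketch below illustrates. The log format and function names are hypothetical, and the engagement score is omitted because its formula is not given above.

```python
def session_minutes(start_s, now_s):
    """Session duration in minutes from two timestamps in seconds."""
    return (now_s - start_s) / 60.0

def step_completion_times(step_end_times_s):
    """Durations between consecutive step-completion timestamps, in seconds."""
    return [b - a for a, b in zip(step_end_times_s, step_end_times_s[1:])]

def error_rate(action_results):
    """Percentage of incorrect actions; True marks a correct action."""
    if not action_results:
        return 0.0
    wrong = sum(1 for ok in action_results if not ok)
    return 100.0 * wrong / len(action_results)

# Hypothetical log: session start at 0 s, steps finished at 12.5 s and 30 s.
durations = step_completion_times([0.0, 12.5, 30.0])
last_step_time = durations[-1]                      # 17.5 s
minutes = session_minutes(0.0, 30.0)                # 0.5 min
errors = error_rate([True, False, True, True])      # 25.0 %
```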

4.4.2. Effectiveness

4.4.3. Comparison with Traditional Training Methods

4.5. Implications for VR Training

4.6. Limitations of the System

5. Conclusions and Future Work

5.1. Conclusions

- Enhanced engagement: the interactive VR environment increases user engagement through realistic simulation of industrial tasks.

- Accelerated learning: immediate feedback and risk-free repetition enable faster skill acquisition without material costs.

- Improved precision: advanced tracking technology ensures proper technique execution, particularly for fine motor skills.

- Data-driven insights: the system collects comprehensive performance metrics that inform both training optimization and motion prediction models.

5.2. Future Work

5.3. Implications for Industry

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AR | Augmented reality |
| VR | Virtual reality |
| MR | Mixed reality |
| HRC | Human–robot collaboration |
| ROS | Robot Operating System |
| URDF | Unified Robot Description Format |
| LSTM | Long Short-Term Memory |
| RNN | Recurrent Neural Network |
| TCT | Task completion time |
| ONNX | Open Neural Network Exchange |

References

1. Mourtzis, D.; Zogopoulos, V.; Vlachou, E. Augmented Reality Application to Support Remote Maintenance as a Service in the Robotics Industry. Procedia CIRP 2017, 63, 46–51.

2. Webel, S.; Bockholt, U.; Engelke, T.; Gavish, N.; Olbrich, M.; Preusche, C. An augmented reality training platform for assembly and maintenance skills. Robot. Auton. Syst. 2013, 61, 398–403.

3. Gavish, N.; Gutierrez, T.; Webel, S.; Rodriguez, J.; Tecchia, F. Design Guidelines for the Development of Virtual Reality and Augmented Reality Training Systems for Maintenance and Assembly Tasks. Bio Web Conf. 2011, 1, 00029.

4. Li, J.R.; Khoo, L.P.; Tor, S.B. Desktop virtual reality for maintenance training: An object oriented prototype system (V-REALISM). Comput. Ind. 2003, 52, 109–125.

5. Tao, F.; Zhang, H.; Liu, A.; Nee, A.Y.C. Digital Twin in Industry: State-of-the-Art. IEEE Trans. Ind. Inform. 2019, 15, 2405–2415.

6. Werrlich, S.; Nitsche, K.; Notni, G. Demand Analysis for an Augmented Reality based Assembly Training. In Proceedings of the PETRA ’17: 10th International Conference on PErvasive Technologies Related to Assistive Environments, Island of Rhodes, Greece, 21–23 June 2017; pp. 416–422.

7. Hořejší, P. Augmented Reality System for Virtual Training of Parts Assembly. Procedia Eng. 2015, 100, 699–706.

8. Schwarz, S.; Regal, G.; Kempf, M.; Schatz, R. Learning Success in Immersive Virtual Reality Training Environments: Practical Evidence from Automotive Assembly. In Proceedings of the NordiCHI ’20: 11th Nordic Conference on Human-Computer Interaction, Tallinn, Estonia, 25–29 October 2020; pp. 1–11.

9. Lerner, M.A.; Ayalew, M.; Peine, W.J.; Sundaram, C.P. Does Training on a Virtual Reality Robotic Simulator Improve Performance on the da Vinci® Surgical System? J. Endourol. 2010, 24, 467–472.

10. Dwivedi, P.; Cline, D.; Jose, C.; Etemadpour, R. Manual Assembly Training in Virtual Environments. In Proceedings of the 2018 IEEE 18th International Conference on Advanced Learning Technologies, Mumbai, India, 9–13 July 2018; pp. 395–401.

11. Bortolini, M.; Botti, L.; Galizia, F.G.; Mora, C. Ergonomic Design of an Adaptive Automation Assembly System. Machines 2023, 11, 898.

12. Carlson, R.; Gonzalez, R.; Geary, D. Enhancing Training Effectiveness with Synthetic Data and Feedback in VR Environments. J. Appl. Ergon. 2015, 46, 56–65.

13. Liu, W.; Liang, X.; Zheng, M. Task-Constrained Motion Planning Considering Uncertainty-Informed Human Motion Prediction for Human–Robot Collaborative Disassembly. IEEE/ASME Trans. Mechatron. 2023, 28, 2056–2071.

14. Jacob, S.; Klement, N.; Bearee, R.; Pacaux-Lemoine, M.P. Human-Robot Cooperation in Disassembly: A Rapid Review. In Proceedings of the 21st International Conference on Informatics in Control, Automation and Robotics, Porto, Portugal, 18–20 November 2024; SCITEPRESS-Science and Technology Publications: Setubal, Portugal, 2024; Volume 2, pp. 212–219.

15. Lee, Y.S.; Rashidi, A.; Talei, A.; Beh, H.J.; Rashidi, S. A Comparison Study on the Learning Effectiveness of Construction Training Scenarios in a Virtual Reality Environment. Virtual Worlds 2023, 2, 36–52.

16. Schwarz, M.; Weser, J.; Martinetz, T.; Pawelzik, K. Immersive Virtual Reality for Cognitive Training: A Pilot Study on Spatial Navigation in the Cave Automatic Virtual Environment. Front. Psychol. 2020, 11, 579993.

17. Rocca, R.; Rosa, P.; Fumagalli, L.; Terzi, S. Integrating Virtual Reality and Digital Twin in Circular Economy Practices: A Laboratory Application Case. Sustainability 2020, 12, 2286.

18. Boud, A.C.; Baber, C.; Steiner, S.J. Virtual Reality: A Tool for Assembly? Presence 2000, 9, 486–496.

19. Al-Ahmari, A.M.; Abidi, M.H.; Ahmad, A.; Darmoul, S. Development of a virtual manufacturing assembly simulation system. Adv. Mech. Eng. 2016, 8, 1687814016639824.

20. Numfu, M.; Riel, A.; Noel, F. Virtual Reality Technology for Maintenance Training. Appl. Sci. Eng. Prog. 2020, 13, 274–282.

21. Gutiérrez, T.; Rodríguez, J.; Vélaz, Y.; Casado, S.; Suescun, A.; Sánchez, E.J. IMA-VR: A multimodal virtual training system for skills transfer in Industrial Maintenance and Assembly tasks. In Proceedings of the 19th International Symposium in Robot and Human Interactive Communication, Viareggio, Italy, 13–15 September 2010; pp. 428–433, ISSN 1944–9437.

22. Li, Z.; Wang, J.; Yan, Z.; Wang, X.; Anwar, M.S. An Interactive Virtual Training System for Assembly and Disassembly Based on Precedence Constraints. In Advances in Computer Graphics, Proceedings of the 36th Computer Graphics International Conference, CGI 2019, Calgary, AB, Canada, 17–20 June 2019; Gavrilova, M., Chang, J., Thalmann, N.M., Hitzer, E., Ishikawa, H., Eds.; Springer: Cham, Switzerland, 2019; pp. 81–93.

23. Westerfield, G.; Mitrovic, A.; Billinghurst, M. Intelligent Augmented Reality Training for Industrial Operations. IEEE Trans. Learn. Technol. 2014, 7, 331–344.

24. Pan, X.; Cui, X.; Huo, H.; Jiang, Y.; Zhao, H.; Li, D. Virtual Assembly of Educational Robot Parts Based on VR Technology. In Proceedings of the 2019 IEEE 11th International Conference on Engineering Education (ICEED), Kanazawa, Japan, 6–7 November 2019; pp. 1–5.

25. Seth, A.; Vance, J.M.; Oliver, J.H. Virtual reality for assembly methods prototyping: A review. Virtual Real. 2011, 15, 5–20.

26. Devagiri, J.S.; Paheding, S.; Niyaz, Q.; Yang, X.; Smith, S. Augmented Reality and Artificial Intelligence in industry: Trends, tools, and future challenges. Expert Syst. Appl. 2022, 207, 118002.

27. Zhao, X. Extended Reality for Safe and Effective Construction Management: State-of-the-Art, Challenges, and Future Directions. Buildings 2023, 13, 155.

28. Lee, M.L.; Liu, W.; Behdad, S.; Liang, X.; Zheng, M. Robot-Assisted Disassembly Sequence Planning with Real-Time Human Motion Prediction. IEEE Trans. Syst. Man Cybern. Syst. 2022, 53, 438–450.

29. Vongbunyong, S.; Vongseela, P.; Sreerattana-aporn, J. A Process Demonstration Platform for Product Disassembly Skills Transfer. Procedia CIRP 2017, 61, 281–286.

30. Hjorth, S.; Chrysostomou, D. Human–robot collaboration in industrial environments: A literature review on non-destructive disassembly. Robot. Comput. Integr. Manuf. 2022, 73, 102208.

31. Ottogalli, K.; Rosquete, D.; Rojo, J.; Amundarain, A.; María Rodríguez, J.; Borro, D. Virtual reality simulation of human-robot coexistence for an aircraft final assembly line: Process evaluation and ergonomics assessment. Int. J. Comput. Integr. Manuf. 2021, 34, 975–995.

32. Kothari, A. Real-Time Motion Prediction for Efficient Human-Robot Collaboration. Master’s Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2023. Available online: https://dspace.mit.edu/handle/1721.1/152639 (accessed on 16 March 2025).

33. Liu, W.; Liang, X.; Zheng, M. Dynamic Model Informed Human Motion Prediction Based on Unscented Kalman Filter. IEEE/ASME Trans. Mechatron. 2022, 27, 5287–5295.

34. Daneshmand, M.; Noroozi, F.; Corneanu, C.; Mafakheri, F.; Fiorini, P. Industry 4.0 and prospects of circular economy: A survey of robotic assembly and disassembly. Int. J. Adv. Manuf. Technol. 2023, 124, 2973–3000.

35. Kuts, V.; Cherezova, N.; Sarkans, M.; Otto, T. Digital Twin: Industrial robot kinematic model integration to the virtual reality environment. J. Mach. Eng. 2020, 20, 53–64.

36. Xu, X.; Guo, P.; Zhai, J.; Zeng, X. Robotic kinematics teaching system with virtual reality, remote control and an on–site laboratory. Int. J. Mech. Eng. Educ. 2020, 48, 197–220.

37. Pérez, L.; Rodríguez-Jiménez, S.; Rodríguez, N.; Usamentiaga, R.; García, D.F. Digital Twin and Virtual Reality Based Methodology for Multi-Robot Manufacturing Cell Commissioning. Appl. Sci. 2020, 10, 3633.

38. Sassanelli, C.; Rosa, P.; Terzi, S. Supporting disassembly processes through simulation tools: A systematic literature review with a focus on printed circuit boards. J. Manuf. Syst. 2021, 60, 429–448.

39. Zhao, F.; Deng, W.; Pham, D.T. A Robotic Teleoperation System with Integrated Augmented Reality and Digital Twin Technologies for Disassembling End-of-Life Batteries. Batteries 2024, 10, 382.

40. Zhao, G.; Wang, Y. Development of Machine Tool Disassembly and Assembly Training and Digital Twin Model Building System based on Virtual Simulation. Int. J. Mech. Electr. Eng. 2024, 2, 50–59.

41. Pérez, L.; Diez, E.; Usamentiaga, R.; García, D.F. Industrial robot control and operator training using virtual reality interfaces. Comput. Ind. 2019, 109, 114–120.

42. Zhao, F.; Pham, D.T. Integration of Augmented Reality and Digital Twins in a Teleoperated Disassembly System. In Advances in Remanufacturing, Proceedings of the VII International Workshop on Autonomous Remanufacturing, IWAR 2023, Detroit, MI, USA, 16–18 October 2023; Fera, M., Caterino, M., Macchiaroli, R., Pham, D.T., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 93–105.

43. Lewczuk, K. Virtual Reality Application for the Safety Improvement of Intralogistics Systems. Sustainability 2024, 16, 6024.

44. Siemens AG. ROS#: Integration of Unity3D with ROS. 2019. Available online: https://github.com/siemens/ros-sharp (accessed on 16 March 2025).

45. Unity Technologies. Unity Barracuda Documentation. 2024. Available online: https://docs.unity3d.com/Packages/com.unity.barracuda%401.0/manual/index.html (accessed on 16 March 2025).

46. Meta Platforms Inc. Inside-Out Body Tracking and Generative Legs; Meta Platforms Inc.: Menlo Park, CA, USA, 2023; Available online: https://developers.meta.com/horizon/blog/inside-out-body-tracking-and-generative-legs/ (accessed on 16 March 2025).

47. Meta Platforms Inc. Movement Overview; Meta Platforms Inc.: Menlo Park, CA, USA, 2023; Available online: https://developers.meta.com/horizon/documentation/unity/move-overview (accessed on 16 March 2025).

48. Meta Platforms Inc. Learn About Hand and Body Tracking on Meta Quest; Meta Platforms Inc.: Menlo Park, CA, USA, 2024; Available online: https://www.meta.com/help/quest/290147772643252/ (accessed on 16 March 2025).

49. Behdad, S.; Berg, L.P.; Thurston, D.; Vance, J. Leveraging Virtual Reality Experiences with Mixed-Integer Nonlinear Programming Visualization of Disassembly Sequence Planning Under Uncertainty. J. Mech. Des. 2014, 136, 041005.

50. Denavit, J.; Hartenberg, R.S. A kinematic notation for lower-pair mechanisms based on matrices. J. Appl. Mech. 1955, 22, 215–221.

51. Unity Technologies. Articulation Body Component Reference. 2021. Available online: https://docs.unity3d.com/2021.3/Documentation/Manual/class-ArticulationBody.html (accessed on 13 March 2025).

52. Yang, Y.; Yang, P.; Li, J.; Zeng, F.; Yang, M.; Wang, R.; Bai, Q. Research on virtual haptic disassembly platform considering disassembly process. Neurocomputing 2019, 348, 74–81.

53. Tahriri, F.; Mousavi, M.; Yap, I.D.H.J. Optimizing the Robot Arm Movement Time Using Virtual Reality Robotic Teaching System. Int. J. Simul. Model. 2015, 14, 28–38.

54. Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3, p. 5.

55. Microsoft Corporation. ONNX Runtime: A High-Performance Inference Engine for Machine Learning Models. 2024. Available online: https://onnxruntime.ai (accessed on 13 March 2025).

56. Liu, Q.; Liu, Z.; Xu, W.; Tang, Q.; Zhou, Z.; Pham, D.T. Human-robot collaboration in disassembly for sustainable manufacturing. Int. J. Prod. Res. 2019, 57, 4027–4044.

57. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 10761–10771.

58. Merkel, D. Docker: Lightweight Linux containers for consistent development and deployment. Linux J. 2014, 239, 2.

59. Shamsuzzoha, A.; Toshev, R.; Vu Tuan, V.; Kankaanpaa, T.; Helo, P. Digital factory—Virtual reality environments for industrial training and maintenance. Interact. Learn. Environ. 2021, 29, 1339–1362.

60. Chen, J.; Mitrouchev, P.; Coquillart, S.; Quaine, F. Disassembly task evaluation by muscle fatigue estimation in a virtual reality environment. Int. J. Adv. Manuf. Technol. 2017, 88, 1523–1533.

61. Qu, M.; Wang, Y.; Pham, D.T. Robotic Disassembly Task Training and Skill Transfer Using Reinforcement Learning. IEEE Trans. Ind. Inform. 2023, 19, 10934–10943.

| Metric | Value |
|---|---|
| Root Mean Squared Error (RMSE) | 0.0179 |
| Mean Absolute Error (MAE) | 0.0060 |
| $R^2$ score | 0.4965 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Maddipatla, Y.; Tian, S.; Liang, X.; Zheng, M.; Li, B. VR Co-Lab: A Virtual Reality Platform for Human–Robot Disassembly Training and Synthetic Data Generation. Machines 2025, 13, 239. https://doi.org/10.3390/machines13030239
