Intelligent Casting Quality Inspection Method Integrating Anomaly Detection and Semantic Segmentation

Abstract

1. Introduction

1.1. Key Benefits of AI in Quality Control

1.2. Defect Detection in Metal Castings Using AI and Imaging Technologies

1.3. Impact of AI on Casting Quality Inspection Methods

2. Materials and Methods

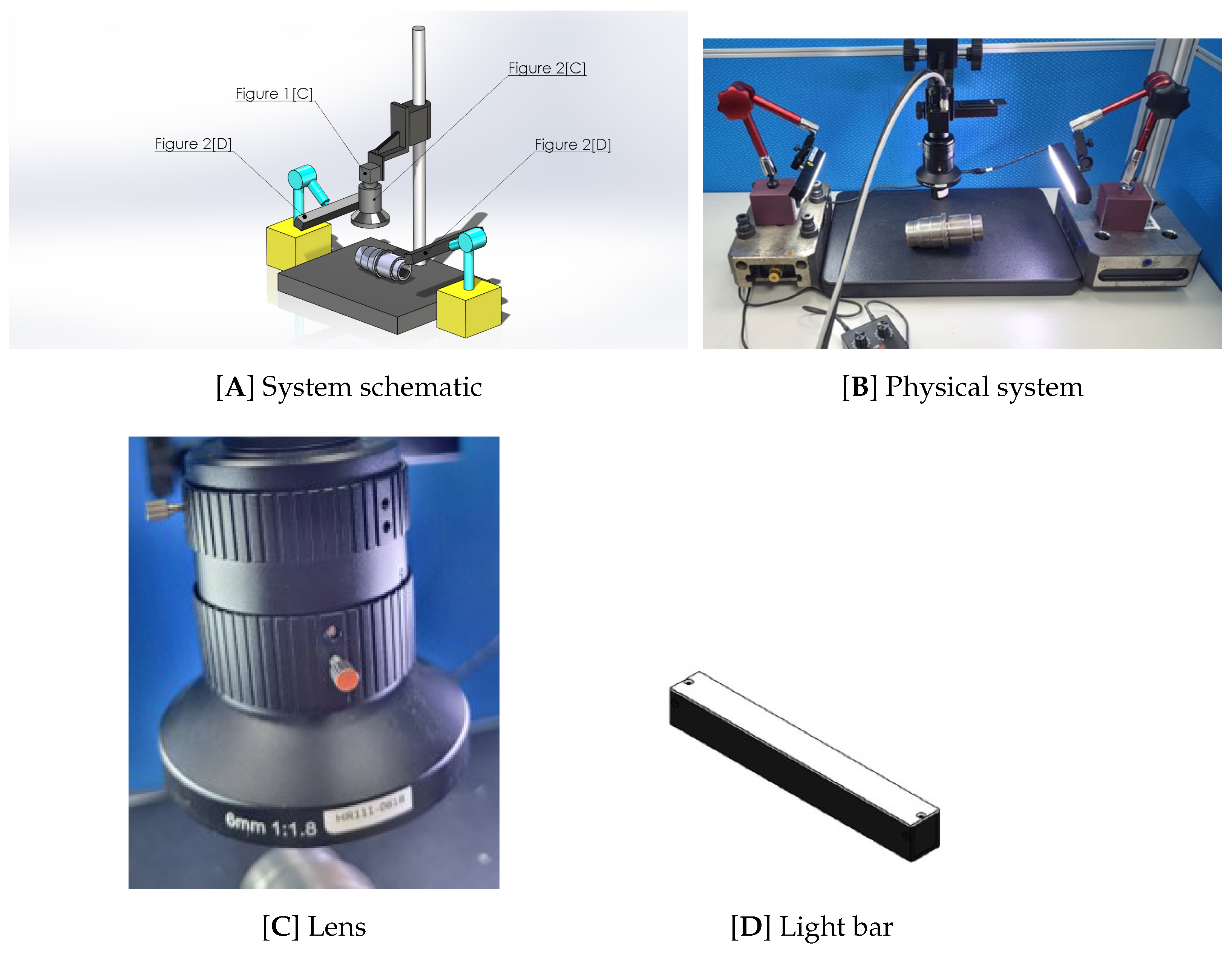

2.1. Hardware System for Internal Defect Detection in Castings Based on Machine Vision

2.1.1. System Overview

2.1.2. Hardware Configuration

- Camera module: A PGE-200S6M-C industrial-grade camera is used for high-resolution image capture. Its high resolution and light sensitivity allow it to capture the internal details of the casting, improving the reliability of image recognition (see Figure 1C).

- Endoscope module: A PRHI230-82 industrial endoscope is used. Its slender body and high imaging capability allow it to reach into complex cavities for locally magnified inspection, making it suitable for observing subtle defects (see Figure 1D).

- Light source module: An LDR_9050_W30 ring-type white LED light source provides uniform, stable illumination. It reduces shadow and reflection interference and improves image contrast and the clarity of defect boundaries (see Figure 1E).

- Platform and dimming control: The inspection platform has position fine-tuning and angle-correction mechanisms. Together with the dimming module, it allows the lighting intensity to be adjusted to the brightness requirements of the inspection area, maintaining image quality across different materials and surface conditions.

- PC computing system: The core control system is a high-performance computer running 64-bit Windows 10. It integrates image acquisition control, data processing, defect classification, and reporting modules, and works with the downstream algorithm modules for real-time interpretation and report generation.

2.2. Machine Vision-Based External Defect Detection System for Castings

2.2.1. System Overview

2.2.2. Hardware Configuration

- Industrial camera: The system uses a BES-PGE-200S6M-C industrial camera with a resolution of 5472 × 3468 pixels, which can capture small surface defects and texture details, thereby improving overall inspection accuracy (see Figure 1C).

- Lens module: An HR111-0618 high-precision lens provides high imaging clarity and edge contrast, so that defect information is clearly presented in the captured image (see Figure 2C).

- Lighting module: A CHD-BA1510 15 W strip-type white LED light source provides stable, uniform illumination, which reduces shadow and reflection interference, increases the contrast of defective areas, and improves overall image quality (see Figure 2D).

- PC control system: The integrated control platform runs 64-bit Windows 10 and performs image acquisition, image preprocessing, defect localization, and feature classification. It can also adjust model parameters and output report records for different types of castings.

2.3. System Flow

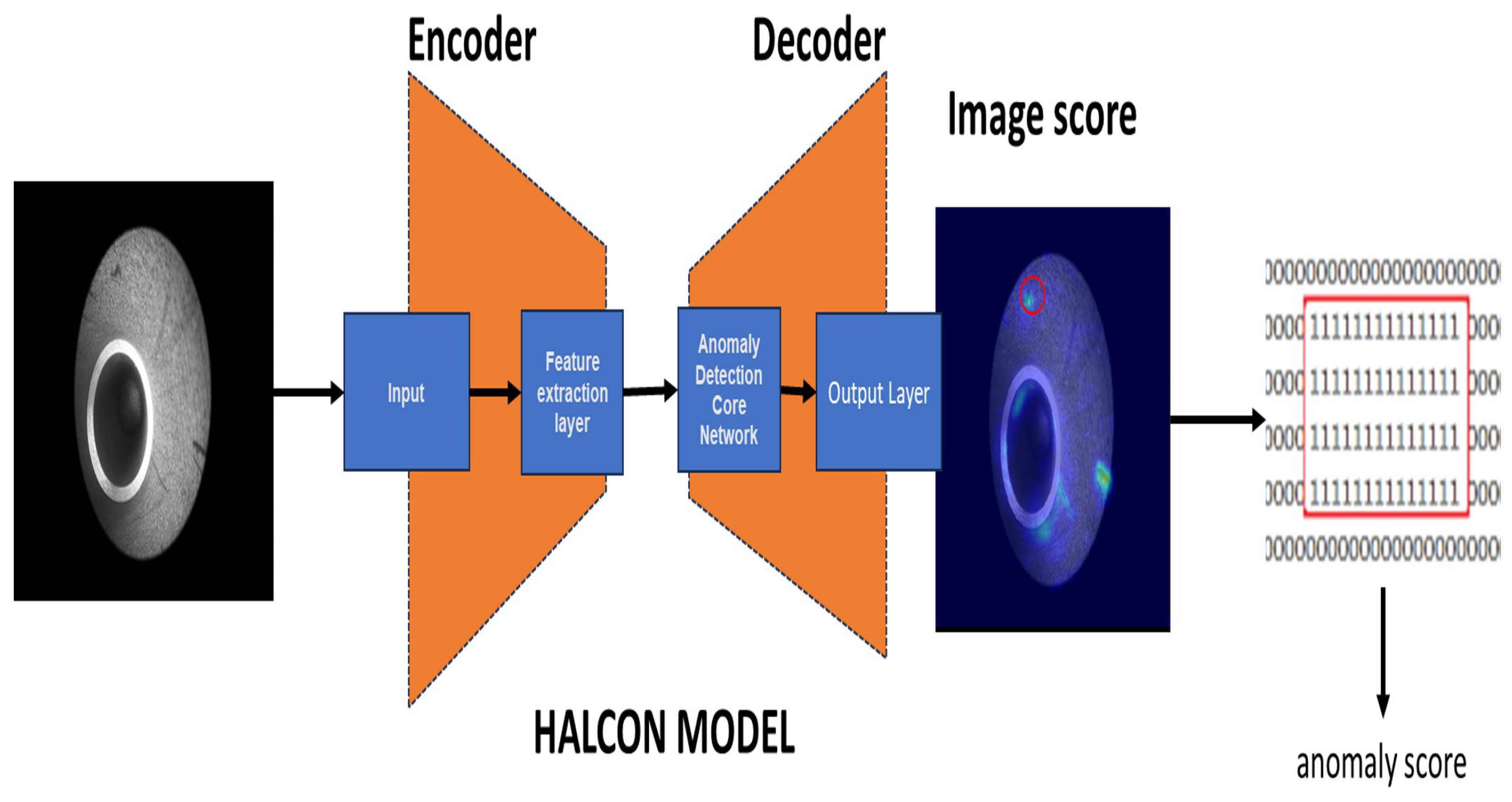

2.4. HALCON-Based Anomaly Detection Architecture Design

2.4.1. Input Layer

2.4.2. Feature Extraction Layer

2.4.3. Anomaly Detection Core Network

2.4.4. Output Layer and Evaluation Metrics

- Anomaly Mask: Presents the abnormal region as a heatmap or binary mask to visualize the location and shape of the defect;

- Anomaly Score: Assigns an anomaly score to each image or region as a basis for judging the severity of the defect.
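The relationship between the two outputs can be illustrated with a minimal sketch. It assumes a per-pixel anomaly heatmap with values in [0, 1], a fixed threshold of 0.5, and the maximum pixel value as the image-level score; the function name `anomaly_outputs`, the threshold, and the aggregation rule are illustrative assumptions and do not reproduce the HALCON implementation.

```python
from typing import List, Tuple

Heatmap = List[List[float]]


def anomaly_outputs(heatmap: Heatmap, threshold: float = 0.5) -> Tuple[List[List[int]], float]:
    """Return (binary anomaly mask, image-level anomaly score) for a heatmap.

    Assumptions (not from the source): pixels at or above `threshold` are
    marked anomalous, and the image-level score is the maximum pixel score.
    """
    # Binary mask: 1 marks a pixel whose score reaches the threshold.
    mask = [[1 if value >= threshold else 0 for value in row] for row in heatmap]
    # Image-level score: the strongest per-pixel response in the image.
    score = max(value for row in heatmap for value in row)
    return mask, score


# Toy 3 x 3 heatmap with two high-scoring pixels in the middle row.
heatmap = [
    [0.05, 0.10, 0.08],
    [0.07, 0.82, 0.64],
    [0.06, 0.12, 0.09],
]
mask, score = anomaly_outputs(heatmap, threshold=0.5)
print(mask)   # [[0, 0, 0], [0, 1, 1], [0, 0, 0]]
print(score)  # 0.82
```

In practice the threshold would be tuned on validation images, since it trades missed defects against false alarms.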

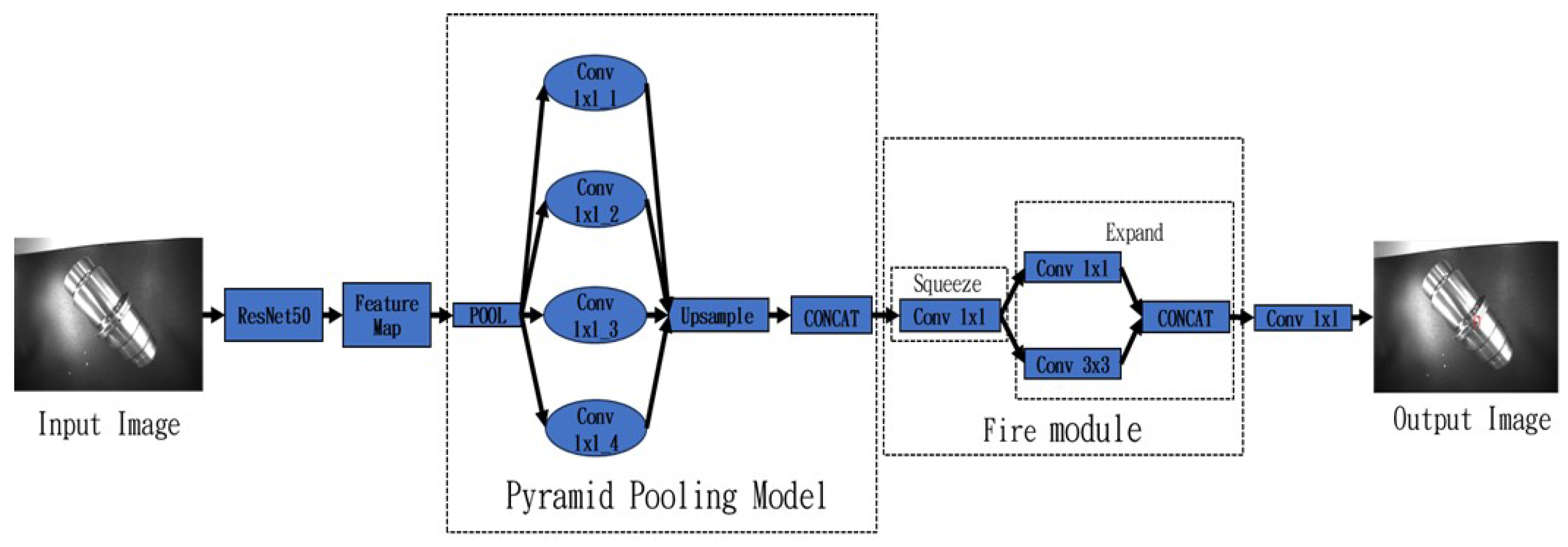

2.5. Semantic Segmentation Model for Industrial Inspection

2.5.1. Feature Extraction Phase

2.5.2. Pretrained Deep Learning Models

- The Compact model is based on the SqueezeNet architecture. It has low memory usage and high computational efficiency, making it particularly suitable for resource-limited embedded devices and classification tasks that require real-time responses.

- AlexNet uses a larger convolution kernel in its first layer, which can improve feature extraction but requires more computing resources.

- The Enhanced model has a deeper structure and stronger classification capability. It is suitable for complex or diverse classification scenarios, but it comes with higher training and inference costs. For large input images, the model can automatically adjust the weight initialization method of the fully connected layer, which extends its versatility.

- ResNet-50 uses a residual network architecture, offers high training stability and good generalization, and is suitable for industrial inspection and medical image analysis tasks with high-precision requirements. Notably, when the input image size is changed, the weights of the fully connected layer are not affected, which makes the model adaptable to a wide range of application conditions.
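The ResNet-50 property noted above, that the fully connected layer is unaffected by the input size, can be illustrated with a small sketch. It assumes the usual ResNet design, in which global average pooling precedes the fully connected layer; the source does not state the mechanism, so this is an assumption, and the helper names `global_average_pool` and `make_channels` are hypothetical.

```python
from typing import List

FeatureMap = List[List[float]]  # one channel, height x width


def global_average_pool(channels: List[FeatureMap]) -> List[float]:
    """Collapse each channel to its mean, giving one value per channel."""
    pooled = []
    for fmap in channels:
        values = [value for row in fmap for value in row]
        pooled.append(sum(values) / len(values))
    return pooled


def make_channels(n_channels: int, height: int, width: int) -> List[FeatureMap]:
    """Build toy feature maps in which channel c is filled with the value c."""
    return [
        [[float(c) for _ in range(width)] for _ in range(height)]
        for c in range(n_channels)
    ]


# Feature maps from a small and a large input differ in spatial size...
small = global_average_pool(make_channels(4, 7, 7))
large = global_average_pool(make_channels(4, 16, 16))

# ...but the pooled vector always has one entry per channel, so a fully
# connected layer that consumes it needs the same weight shape in both cases.
print(len(small), len(large))  # 4 4
```

The spatial size of the feature maps changes with the input, while the number of channels is fixed by the architecture, which is why the layer after pooling does not need to be re-initialized.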

2.5.3. Model Selection and Application

2.6. Model Performance Evaluation

2.6.1. Precision

2.6.2. Sensitivity/Recall

2.6.3. F1 Score

2.6.4. Mean Pixel Accuracy (mPA)

2.6.5. Intersection over Union (IoU)
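The metrics named in Sections 2.6.1 to 2.6.5 can be sketched using their standard definitions. The example assumes that precision, recall, and F1 are computed from true-positive, false-positive, and false-negative counts, and that mPA and IoU are computed per class from pixel label masks; the function names and toy data are illustrative and are not taken from the paper.

```python
from typing import Dict, List, Sequence, Tuple

Mask = List[List[int]]  # per-pixel class labels


def precision_recall_f1(tp: int, fp: int, fn: int) -> Dict[str, float]:
    """Standard precision, recall, and F1 from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def segmentation_metrics(truth: Mask, pred: Mask, classes: Sequence[int]) -> Tuple[Dict[int, float], float]:
    """Return (per-class IoU, mean pixel accuracy) for two label masks."""
    pairs = [(t, p) for t_row, p_row in zip(truth, pred) for t, p in zip(t_row, p_row)]
    iou: Dict[int, float] = {}
    accuracy: Dict[int, float] = {}
    for c in classes:
        intersection = sum(1 for t, p in pairs if t == c and p == c)
        truth_count = sum(1 for t, _ in pairs if t == c)
        pred_count = sum(1 for _, p in pairs if p == c)
        union = truth_count + pred_count - intersection
        # IoU: overlap divided by the union of predicted and true regions.
        iou[c] = intersection / union if union else 0.0
        # Pixel accuracy of class c: correctly labeled pixels / true pixels.
        accuracy[c] = intersection / truth_count if truth_count else 0.0
    # mPA: pixel accuracy averaged over classes.
    mpa = sum(accuracy.values()) / len(classes)
    return iou, mpa


counts = precision_recall_f1(tp=8, fp=2, fn=2)
print({name: round(value, 4) for name, value in counts.items()})

# Toy 2 x 3 masks: class 0 is background, class 1 is defect.
truth = [[0, 0, 1], [0, 1, 1]]
pred = [[0, 1, 1], [0, 1, 0]]
iou, mpa = segmentation_metrics(truth, pred, classes=[0, 1])
print(iou, round(mpa, 4))
```

Averaging pixel accuracy per class rather than over all pixels keeps a large background class from hiding poor performance on small defect regions.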

3. Discussion

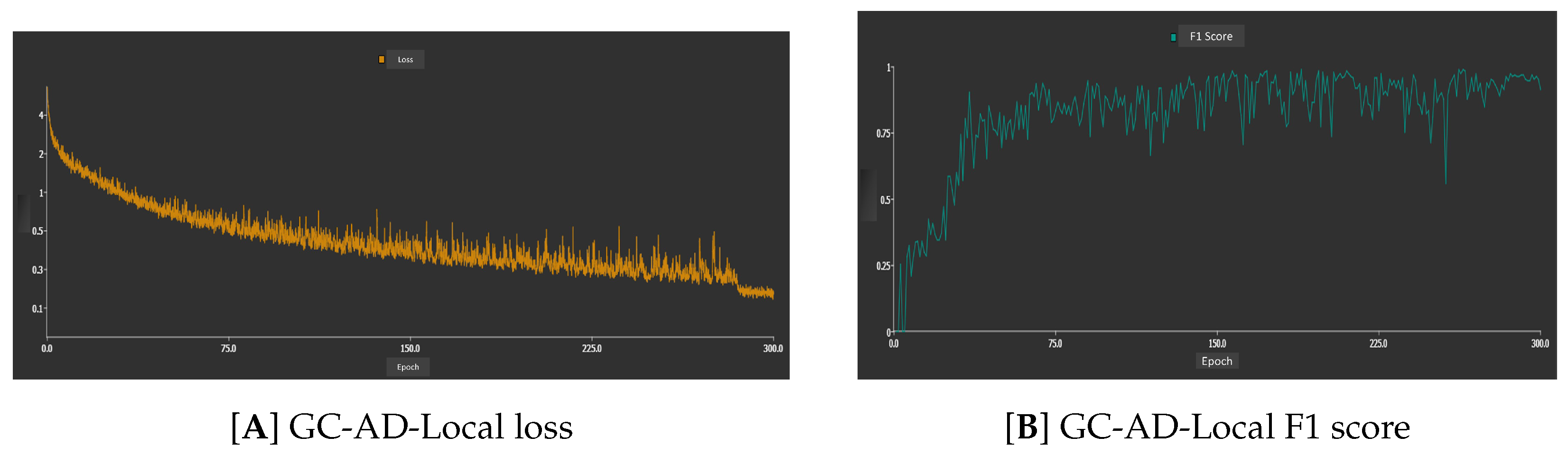

3.1. Internal Anomaly Detection

3.1.1. Training Process

3.1.2. Loss Curve Analysis

3.2. Experimental Comparison of Anomaly Detection Models

3.3. Detected Anomalies and Classification

3.4. Normal Object Detection Case

3.5. Performance Analysis of the Appearance Inspection Anomaly Detection Model

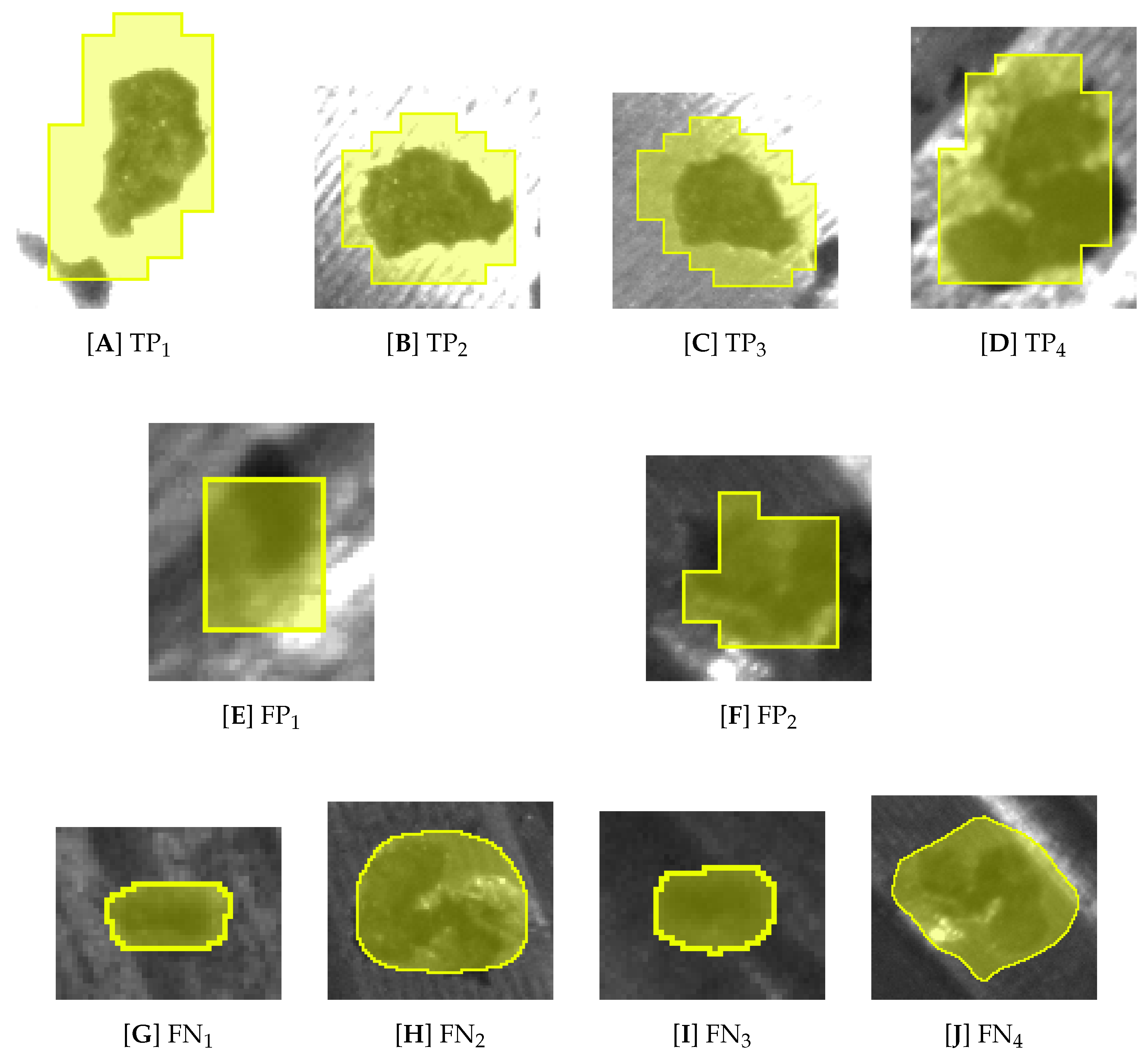

3.6. Performance Analysis of the Semantic Segmentation Model for Appearance Inspection

3.7. Performance Evaluation of Semantic Segmentation Models

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Model | Loss Value | F1 Score | Accuracy (%) | Recall |
|---|---|---|---|---|
| GC-AD-Combined | 0.539846 | 96.15 | 96.3 | 52 |
| GC-AD-Global | 0.193084 | 96.15 | 83.33 | 90 |
| GC-AD-Local | 0.410715 | 97.96 | 100 | 96 |

| Model | Precision (%) | Recall (%) | F1 Score (%) | Mean Precision (%) |
|---|---|---|---|---|
| Compact | 69.23 | 60.00 | 64.29 | 47.97 |
| Enhanced | 83.33 | 66.67 | 74.07 | 59.31 |
| MobileNetV2 | 83.33 | 66.67 | 74.07 | 65.80 |
| ResNet-18 | 66.67 | 53.33 | 59.26 | 49.97 |
| ResNet-50 | 69.23 | 60.00 | 64.29 | 55.05 |
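As a consistency check on the table above, the F1 scores can be recomputed from the reported precision and recall using the standard harmonic-mean formula, and the models can then be ranked. The values below are copied from the table; ranking by F1 with mean precision as a tie-breaker is an illustrative assumption, not a selection rule stated in the paper.

```python
# Reported values: (precision %, recall %, F1 %, mean precision %).
rows = {
    "Compact": (69.23, 60.00, 64.29, 47.97),
    "Enhanced": (83.33, 66.67, 74.07, 59.31),
    "MobileNetV2": (83.33, 66.67, 74.07, 65.80),
    "ResNet-18": (66.67, 53.33, 59.26, 49.97),
    "ResNet-50": (69.23, 60.00, 64.29, 55.05),
}


def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# Every reported F1 matches the recomputed value to within rounding.
for name, (precision, recall, reported_f1, _) in rows.items():
    recomputed = f1_from(precision, recall)
    assert abs(recomputed - reported_f1) < 0.02, name

# Rank by F1, breaking ties with mean precision (assumed tie-break rule).
ranking = sorted(rows, key=lambda name: (rows[name][2], rows[name][3]), reverse=True)
print(ranking)
# ['MobileNetV2', 'Enhanced', 'ResNet-50', 'Compact', 'ResNet-18']
```

Under this rule MobileNetV2 ranks first: it ties with the Enhanced model on F1 and has the higher mean precision.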

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, M.-C.; Yen, S.-Y.; Lin, Y.-F.; Tsai, M.-Y.; Chuang, T.-H. Intelligent Casting Quality Inspection Method Integrating Anomaly Detection and Semantic Segmentation. Machines 2025, 13, 317. https://doi.org/10.3390/machines13040317
