Development of a Convolutional Neural Network Based Skull Segmentation in MRI Using Standard Tesselation Language Models

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

- CPTAC-GBM [31]: a collection from the National Cancer Institute’s Clinical Proteomic Tumor Analysis Consortium Glioblastoma Multiforme cohort. It contains CR, CT, MR, and SC imaging modalities from 66 participants, totaling 164 studies;

- TCGA-HNSC [35]: The Cancer Genome Atlas Head-Neck Squamous Cell Carcinoma data collection, which contains 479 studies from 227 participants across the CT, MR, PET, RTDOSE, RTPLAN, and RTSTRUCT modalities.

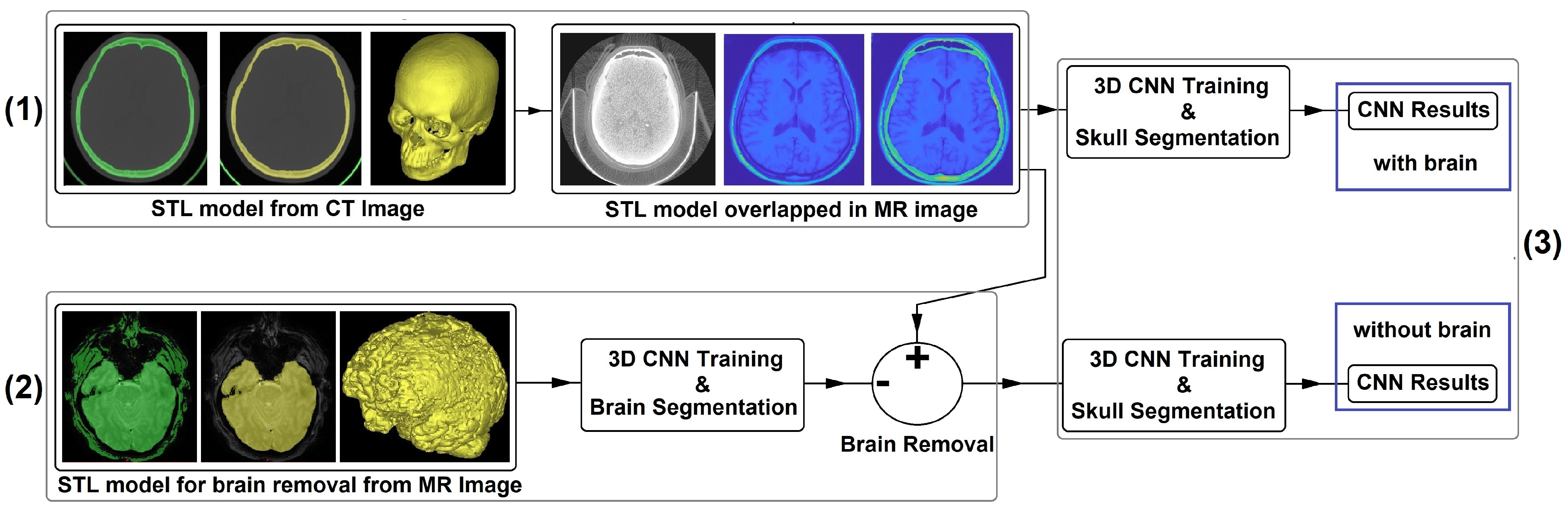

2.2. Data Processing I

2.3. Data Processing II

2.4. CNN Architecture and Implementation Details

2.5. Model Performance Evaluation and Statistical Analysis

3. Results and Discussion

3.1. Performance Analysis

3.2. Comparison between UNet, UNet++, and UNet3+

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclosure Statement

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | convolutional neural network |
| CR | computed radiography |
| cRBM | convolutional restricted Boltzmann machine |
| CSF | cerebrospinal fluid |
| CT | computed tomography |
| DSC | Dice similarity coefficient |
| FN | false negatives |
| FP | false positives |
| GB | gigabyte |
| HD | Hausdorff distance |
| JSC | Jaccard similarity coefficient |
| MRI | magnetic resonance imaging |
| PT or PET | positron emission tomography |
| ROI | region of interest |
| RT | radiotherapy |
| RTDOSE | radiotherapy dose |
| RTPLAN | radiotherapy plan |
| RTSTRUCT | radiotherapy structure set |
| SC | secondary capture |
| SD | standard deviation |
| SPM8 | statistical parametric mapping 8 |
| STL | standard tessellation language |
| SVD | symmetric volume difference |
| SVM | support vector machine |
| TP | true positives |
| VOE | volumetric overlap error |
| VRAM | video random access memory |

References

- Meulepas, J.M.; Ronckers, C.M.; Smets, A.M.; Nievelstein, R.A.; Gradowska, P.; Lee, C.; Jahnen, A.; van Straten, M.; de Wit, M.C.Y.; Zonnenberg, B.; et al. Radiation Exposure From Pediatric CT Scans and Subsequent Cancer Risk in the Netherlands. JNCI J. Natl. Cancer Inst. 2018, 111, 256–263.

- Migimatsu, T.; Wetzstein, G. Automatic MRI Bone Segmentation. 2015; unpublished.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Kolařík, M.; Burget, R.; Uher, V.; Říha, K.; Dutta, M.K. Optimized High Resolution 3D Dense-U-Net Network for Brain and Spine Segmentation. Appl. Sci. 2019, 9, 404.

- Ker, J.; Singh, S.P.; Bai, Y.; Rao, J.; Lim, T.; Wang, L. Image Thresholding Improves 3-Dimensional Convolutional Neural Network Diagnosis of Different Acute Brain Hemorrhages on Computed Tomography Scans. Sensors 2019, 19, 2167.

- Livne, M.; Rieger, J.; Aydin, O.U.; Taha, A.A.; Akay, E.M.; Kossen, T.; Sobesky, J.; Kelleher, J.D.; Hildebrand, K.; Frey, D.; et al. A U-Net Deep Learning Framework for High Performance Vessel Segmentation in Patients with Cerebrovascular Disease. Front. Neurosci. 2019, 13.

- Hwang, H.; Rehman, H.Z.U.; Lee, S. 3D U-Net for Skull Stripping in Brain MRI. Appl. Sci. 2019, 9, 569.

- Ambellan, F.; Tack, A.; Ehlke, M.; Zachow, S. Automated segmentation of knee bone and cartilage combining statistical shape knowledge and convolutional neural networks. Med. Image Anal. 2019, 52, 109–118.

- Dong, N.; Li, W.; Roger, T.; Jianfu, L.; Peng, Y.; James, X.; Dinggang, S.; Qian, W.; Yinghuan, S.; Heung-Il, S.; et al. Segmentation of Craniomaxillofacial Bony Structures from MRI with a 3D Deep-Learning Based Cascade Framework. Mach. Learn. Med. Imaging 2017, 10541, 266–273.

- Deniz, C.; Siyuan, X.; Hallyburton, S.; Welbeck, A.; Babb, J.; Honig, S.; Cho, K.; Chang, G. Segmentation of the Proximal Femur from MR Images Using Deep Convolutional Neural Networks. Sci. Rep. 2018, 8.

- Chen, C.; Qin, C.; Qiu, H.; Tarroni, G.; Duan, J.; Bai, W.; Rueckert, D. Deep Learning for Cardiac Image Segmentation: A Review. Front. Cardiovasc. Med. 2020, 7.

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Garcia-Rodriguez, J. A review on deep learning techniques applied to semantic segmentation. arXiv 2017, arXiv:1704.06857.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Dogdas, B.; Shattuck, D.; Leahy, R. Segmentation of skull and scalp in 3-D human MRI using mathematical morphology. Hum. Brain Mapp. 2005, 26, 273–285.

- Wang, D.; Shi, L.; Chu, W.; Cheng, J.; Heng, P. Segmentation of human skull in MRI using statistical shape information from CT data. J. Magn. Reson. Imaging 2009, 30, 490–498.

- Sjölund, J.; Järlideni, A.; Andersson, M.; Knutsson, H.; Nordström, H. Skull Segmentation in MRI by a Support Vector Machine Combining Local and Global Features. In Proceedings of the 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 3274–3279.

- Puonti, O.; Leemput, K.; Nielsen, J.; Bauer, C.; Siebner, H.; Madsen, K.; Thielscher, A. Skull segmentation from MR scans using a higher-order shape model based on convolutional restricted Boltzmann machines. In Proceedings of the Medical Imaging 2018: Image Processing; International Society for Optics and Photonics: Bellingham, WA, USA, 2018; Volume 10574.

- Ashburner, J.; Friston, K.J. Unified Segmentation. NeuroImage 2005, 26, 839–851.

- UCL Queen Square Institute of Neurology. Statistical Parametric Mapping. Available online: https://www.fil.ion.ucl.ac.uk/spm/software/spm8/ (accessed on 17 September 2020).

- Nielsen, J.D.; Madsen, K.H.; Puonti, O.; Siebner, H.R.; Bauer, C.; Madsen, C.G.; Saturnino, G.B.; Thielscher, A. Automatic skull segmentation from MR images for realistic volume conductor models of the head: Assessment of the state-of-the-art. NeuroImage 2018, 174, 587–598.

- Smith, S.M.; Jenkinson, M.; Woolrich, M.W.; Beckmann, C.F.; Behrens, T.E.; Johansen-Berg, H.; Bannister, P.R.; De Luca, M.; Drobnjak, I.; Flitney, D.E.; et al. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage 2004, 23, S208–S219.

- UCL Queen Square Institute of Neurology. Statistical Parametric Mapping. Available online: https://www.fil.ion.ucl.ac.uk/spm/software/spm12/ (accessed on 17 February 2021).

- Yamashita, R.; Nishio, M.; Do, R.; Togashi, K. Convolutional Neural Networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629.

- Minnema, J.; Eijnatten, M.; Kouw, W.; Diblen, F.; Mendrik, A.; Wolff, J. CT Image Segmentation of Bone for Medical Additive Manufacturing using a Convolutional Neural Network. Comput. Biol. Med. 2018, 103, 130–139.

- Ferraiuoli, P.; Taylor, J.C.; Martin, E.; Fenner, J.W.; Narracott, A.J. The Accuracy of 3D Optical Reconstruction and Additive Manufacturing Processes in Reproducing Detailed Subject-Specific Anatomy. J. Imaging 2017, 3.

- Im, C.H.; Park, J.M.; Kim, J.H.; Kang, Y.J.; Kim, J.H. Assessment of Compatibility between Various Intraoral Scanners and 3D Printers through an Accuracy Analysis of 3D Printed Models. Materials 2020, 13, 4419.

- Di Fiore, A.; Stellini, E.; Savio, G.; Rosso, S.; Graiff, L.; Granata, S.; Monaco, C.; Meneghello, R. Assessment of the Different Types of Failure on Anterior Cantilever Resin-Bonded Fixed Dental Prostheses Fabricated with Three Different Materials: An In Vitro Study. Appl. Sci. 2020, 10.

- Zubizarreta-Macho, Á; Triduo, M.; Pérez-Barquero, J.A.; Guinot Barona, C.; Albaladejo Martínez, A. Novel Digital Technique to Quantify the Area and Volume of Cement Remaining and Enamel Removed after Fixed Multibracket Appliance Therapy Debonding: An In Vitro Study. J. Clin. Med. 2020, 9, 1098.

- Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and Operating a Public Information Repository. J. Digit. Imaging 2013, 26, 1045–1057.

- National Cancer Institute Clinical Proteomic Tumor Analysis Consortium (CPTAC). Radiology Data from the Clinical Proteomic Tumor Analysis Consortium Glioblastoma Multiforme [CPTAC-GBM] Collection [Dataset]. Cancer Imaging Arch. 2018.

- Grossberg, A.; Elhalawani, H.; Mohamed, A.; Mulder, S.; Williams, B.; White, A.L.; Zafereo, J.; Wong, A.J.; Berends, J.E.; AboHashem, S.; et al. Anderson Cancer Center Head and Neck Quantitative Imaging Working Group HNSCC [Dataset]. Cancer Imaging Arch. 2020.

- Grossberg, A.; Mohamed, A.; Elhalawani, H.; Bennett, W.; Smith, K.; Nolan, T.; Williams, B.; Chamchod, S.; Heukelom, J.; Kantor, M.; et al. Imaging and Clinical Data Archive for Head and Neck Squamous Cell Carcinoma Patients Treated with Radiotherapy. Sci. Data 2018, 5, 180173.

- Elhalawani, H.; Mohamed, A.S.; White, A.L.; Zafereo, J.; Wong, A.J.; Berends, J.E.; AboHashem, S.; Williams, B.; Aymard, J.M.; Kanwar, A.; et al. Matched computed tomography segmentation and demographic data for oropharyngeal cancer radiomics challenges. Sci. Data 2017, 4, 170077.

- Zuley, M.L.; Jarosz, R.; Kirk, S.; Lee, Y.; Colen, R.; Garcia, K.; Aredes, N.D. Radiology Data from The Cancer Genome Atlas Head-Neck Squamous Cell Carcinoma [TCGA-HNSC] collection. Cancer Imaging Arch. 2016.

- Kinahan, P.; Muzi, M.; Bialecki, B.; Coombs, L. Data from ACRIN-FMISO-Brain. Cancer Imaging Arch. 2018.

- Gerstner, E.R.; Zhang, Z.; Fink, J.R.; Muzi, M.; Hanna, L.; Greco, E.; Prah, M.; Schmainda, K.M.; Mintz, A.; Kostakoglu, L.; et al. ACRIN 6684: Assessment of Tumor Hypoxia in Newly Diagnosed Glioblastoma Using 18F-FMISO PET and MRI. Clin. Cancer Res. 2016, 22, 5079–5086.

- Ratai, E.M.; Zhang, Z.; Fink, J.; Muzi, M.; Hanna, L.; Greco, E.; Richards, T.; Kim, D.; Andronesi, O.C.; Mintz, A.; et al. ACRIN 6684: Multicenter, phase II assessment of tumor hypoxia in newly diagnosed glioblastoma using magnetic resonance spectroscopy. PLoS ONE 2018, 13.

- Pati, S.; Ravi, B. Voxel-based representation, display and thickness analysis of intricate shapes. In Proceedings of the Ninth International Conference on Computer Aided Design and Computer Graphics (CAD-CG’05), Hong Kong, China, 7–10 December 2005; Volume 6.

- Dalvit Carvalho da Silva, R.; Jenkyn, T.R.; Carranza, V.A. Convolutional Neural Network and Geometric Moments to Identify the Bilateral Symmetric Midplane in Facial Skeletons from CT Scans. Biology 2021, 10, 182.

- Çiçek, Ö.; Abdulkadir, A.; Lienkamp, S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2016, Lecture Notes in Computer Science, Athens, Greece, 17–21 October 2016; Volume 9901.

- Sudre, C.; Li, W.; Vercauteren, T.; Ourselin, S.; Cardoso, M. Generalised Dice Overlap as a Deep Learning Loss Function for Highly Unbalanced Segmentations. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: Third International Workshop, Quebec City, QC, Canada, 14 September 2017; pp. 240–248.

- Dice, L. Measures of the Amount of Ecologic Association Between Species. Ecology 1945, 26, 297–302.

- Schenk, A.; Prause, G.; Peitgen, H.O. Efficient semiautomatic segmentation of 3D objects in medical images. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2000, Lecture Notes in Computer Science, Pittsburgh, PA, USA, 11–14 October 2000; Volume 1935, pp. 186–195.

- Jaccard, P. Distribution de la flore alpine dans le bassin des Dranses et dans quelques regions voisines. Bull. Soc. Vaudoise Des Sci. Nat. 1901, 37, 241–272.

- Rusko, L.; Bekes, G.; Fidrich, M. Automatic segmentation of the liver from multi- and single-phase contrast-enhanced CT images. Med. Image Anal. 2009, 13, 871–882.

- Karimi, D.; Salcudean, S. Reducing the Hausdorff Distance in Medical Image Segmentation With Convolutional Neural Networks. IEEE Trans. Med. Imaging 2020, 39, 499–513.

- Zou, K.H.; Warfield, S.K.; Bharatha, A.; Tempany, C.M.; Kaus, M.R.; Haker, S.J.; Wells, W.M., III; Jolesz, F.A.; Kikinis, R. Statistical validation of image segmentation quality based on a spatial overlap index. Acad. Radiol. 2004, 11, 178–189.

- Wang, G. Paint on an BW Image (Updated Version), MATLAB Central File Exchange. Available online: https://www.mathworks.com/matlabcentral/fileexchange/32786-paint-on-an-bw-image-updated-version (accessed on 10 September 2020).

- Kodym, O.; Španěl, M.; Herout, A. Segmentation of defective skulls from CT data for tissue modelling. arXiv 2019, arXiv:1911.08805.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. DLMIA 2018, ML-CDS 2018. Lecture Notes in Computer Science; Stoyanov, D., Ed.; Springer: Cham, Switzerland, 2018; Volume 11045.

- Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.W.; Wu, J. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1055–1059.

| Parameter | Value |
|---|---|
| Optimizer | Adam |
| Encoder Depth | 4 |
| Filter Size | 3 |
| Number of First Encoder Filters | 15 |
| Patch Per Image | 1 |
| Mini Batch Size | 128 |
| Initial Learning Rate | 5 × |

| DSC (Skull) | DSC (Background) | SVD (Skull) | JSC (Skull) | JSC (Background) | VOE (Skull) | HD (Skull) |
|---|---|---|---|---|---|---|

| Parameter | Value |
|---|---|
| Optimizer | Adam |
| Encoder Depth | 3 |
| Filter Size | 5 |
| Number of First Encoder Filters | 7 |
| Patch Per Image | 2 |
| Mini Batch Size | 128 |
| Initial Learning Rate | |
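The two hyperparameter tables differ mainly in encoder depth, filter size, and the number of first-stage filters. As a rough illustration of what those settings imply, the sketch below assumes the common U-Net convention that the filter count doubles at each encoder stage; the tables themselves do not state this. `UNetConfig` and `encoder_filters` are hypothetical names introduced only for this example, and the initial learning rates are omitted because they are incomplete in the tables.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class UNetConfig:
    """Hyperparameters copied from the tables; field names are illustrative."""
    optimizer: str
    encoder_depth: int
    filter_size: int
    first_encoder_filters: int
    patches_per_image: int
    mini_batch_size: int


def encoder_filters(cfg: UNetConfig) -> List[int]:
    """Filters per encoder stage, ASSUMING the count doubles at every stage."""
    return [cfg.first_encoder_filters * 2 ** stage
            for stage in range(cfg.encoder_depth)]


# Values taken from the first and second hyperparameter tables, respectively.
config_a = UNetConfig("Adam", 4, 3, 15, 1, 128)
config_b = UNetConfig("Adam", 3, 5, 7, 2, 128)

print(encoder_filters(config_a))  # [15, 30, 60, 120]
print(encoder_filters(config_b))  # [7, 14, 28]
```

Under that assumption, the first configuration is both deeper and substantially wider than the second, which gives a sense of the relative capacity of the two networks.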

| DSC (Brain) | DSC (Background) | SVD (Brain) | JSC (Brain) | JSC (Background) | VOE (Brain) | HD (Brain) |
|---|---|---|---|---|---|---|

| DSC (Skull) | DSC (Background) | SVD (Skull) | JSC (Skull) | JSC (Background) | VOE (Skull) | HD (Skull) |
|---|---|---|---|---|---|---|

| DSC (Skull) | DSC (Background) | SVD (Skull) | JSC (Skull) | JSC (Background) | VOE (Skull) | HD (Skull) |
|---|---|---|---|---|---|---|
| 0.0231 | 0.0024 | −0.0231 | 0.0046 | 0.0331 | −0.0331 | −08.36 |
| 0.0390 | 0.0040 | −0.0390 | 0.0077 | 0.0533 | −0.0533 | −12.43 |
| 0.0371 | 0.0038 | −0.0371 | 0.0074 | 0.0503 | −0.0503 | −03.09 |
| 0.0686 | 0.0060 | −0.0686 | 0.0116 | 0.0909 | −0.0909 | −08.96 |
| 0.0727 | 0.0081 | −0.0727 | 0.0155 | 0.0937 | −0.0937 | −21.68 |
| 0.0573 | 0.0086 | −0.0573 | 0.0162 | 0.0711 | −0.0711 | −05.29 |
| 0.0687 | 0.0083 | −0.0687 | 0.0157 | 0.0850 | −0.0850 | −13.91 |
| 0.0616 | 0.0099 | −0.0616 | 0.0187 | 0.0755 | −0.0755 | −15.94 |
| 0.0463 | 0.0065 | −0.0463 | 0.0124 | 0.0555 | −0.0555 | −09.94 |
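In every row of the table above, the SVD entry is the exact negative of the DSC (Skull) entry, and the VOE entry is the exact negative of one of the JSC entries. That pattern is consistent with the complementary definitions SVD = 1 − DSC and VOE = 1 − JSC, under which any gain in overlap appears as an equal and opposite change in the error measure. The sketch below assumes those definitions together with the usual count-based forms of DSC and JSC in terms of TP, FP, and FN. The function names and toy masks are hypothetical, and the Hausdorff distance is a brute-force version meant only for small point sets.

```python
from math import dist
from typing import Dict, Sequence, Set, Tuple

Point = Tuple[int, ...]


def overlap_metrics(pred: Sequence[int], truth: Sequence[int]) -> Dict[str, float]:
    """DSC, JSC, SVD and VOE for two flat binary masks of equal length."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    if tp + fp + fn == 0:
        # Both masks are empty; treat this as perfect agreement.
        dsc = jsc = 1.0
    else:
        dsc = 2 * tp / (2 * tp + fp + fn)
        jsc = tp / (tp + fp + fn)
    # Assumed complementary error measures: SVD = 1 - DSC, VOE = 1 - JSC.
    return {"DSC": dsc, "JSC": jsc, "SVD": 1 - dsc, "VOE": 1 - jsc}


def hausdorff(a: Set[Point], b: Set[Point]) -> float:
    """Symmetric Hausdorff distance between two non-empty point sets."""
    def directed(src: Set[Point], dst: Set[Point]) -> float:
        # Largest distance from any source point to its nearest target point.
        return max(min(dist(p, q) for q in dst) for p in src)
    return max(directed(a, b), directed(b, a))


# Toy masks (hypothetical data): two predictions scored against one reference.
truth = [0, 1, 1, 1, 1, 0, 0, 0]
before = overlap_metrics([0, 1, 1, 1, 0, 0, 0, 0], truth)
after = overlap_metrics([0, 1, 1, 1, 1, 1, 0, 0], truth)

# Differences have opposite signs for the overlap and error measures.
delta = {name: after[name] - before[name] for name in before}
```

With these toy masks, `delta["SVD"]` equals `-delta["DSC"]` and `delta["VOE"]` equals `-delta["JSC"]` up to floating-point rounding, mirroring the sign pattern in the table.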

| Samples | Dataset 1: UNet | Dataset 1: UNet++ | Dataset 1: UNet3+ | Dataset 2: UNet | Dataset 2: UNet++ | Dataset 2: UNet3+ |
|---|---|---|---|---|---|---|
| A | | | | | | |
| B | | | | | | |
| C | | | | | | |
| D | | | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dalvit Carvalho da Silva, R.; Jenkyn, T.R.; Carranza, V.A. Development of a Convolutional Neural Network Based Skull Segmentation in MRI Using Standard Tesselation Language Models. J. Pers. Med. 2021, 11, 310. https://doi.org/10.3390/jpm11040310
