Impact of the Volume and Distribution of Training Datasets in the Development of Deep-Learning Models for the Diagnosis of Colorectal Polyps in Endoscopy Images

Abstract

1. Introduction

2. Methods

2.1. Input Datasets

2.2. Labeling of the Training Dataset

2.3. Establishment of an Artificial Intelligence Model

2.4. Training and Data Preprocessing

2.5. Primary Outcome and Statistics

3. Results

3.1. Diagnostic Performances of the Deep-Learning Models According to Various Data Volume and Class Distributions

3.2. Gradient-Weighted Class Activation Mapping

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

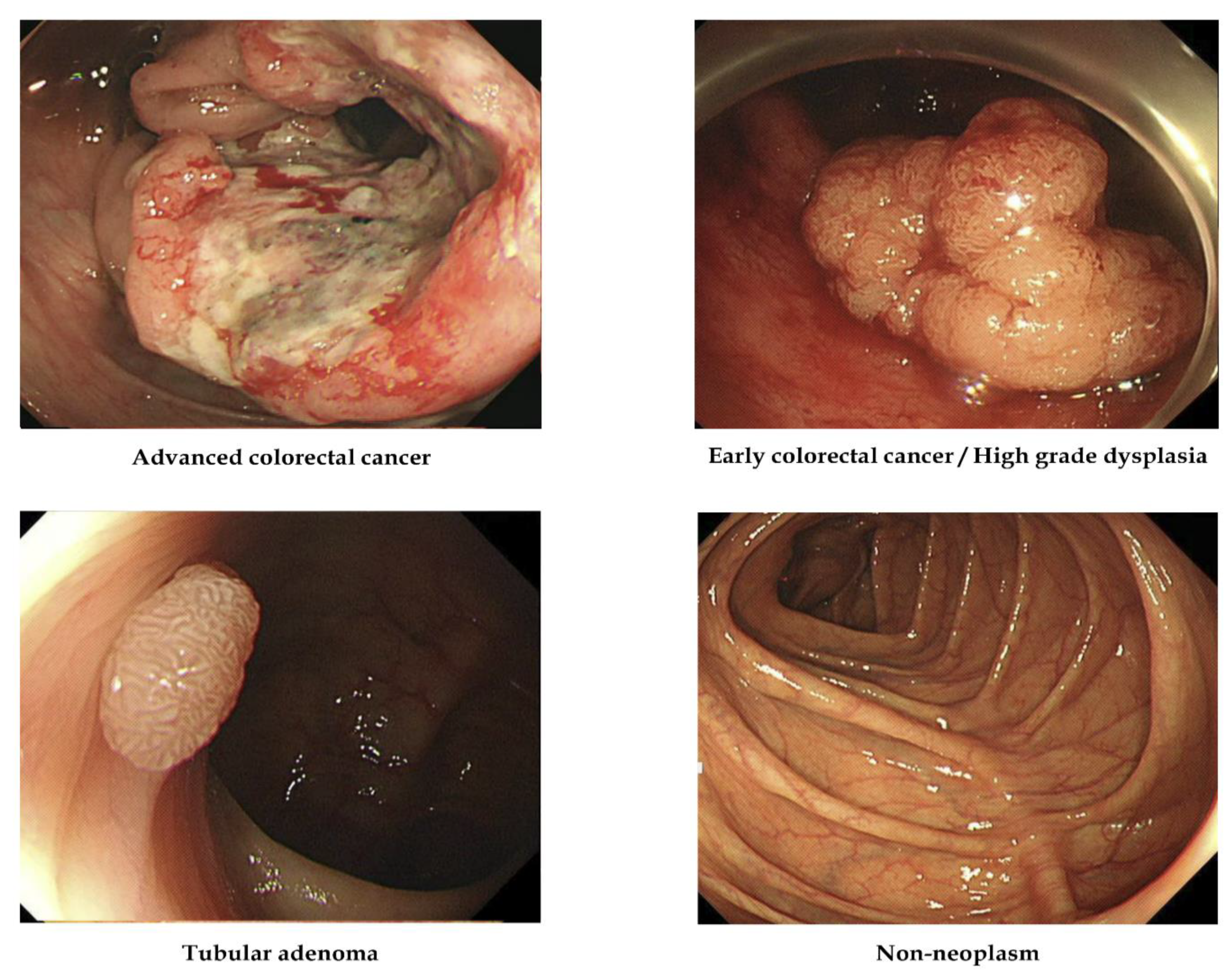

| Abbreviation | Definition |
|---|---|
| ACC | advanced colorectal cancer |
| ECC/HGD | early colorectal cancer/high-grade dysplasia |
| TA | tubular adenoma |
| LGD | low-grade dysplasia |


| Class | Original Dataset | Original: Even Distribution (1:1:1:1) | Original: Doubling Fewer Categories (2:2:1:1) | Original: Doubling Less Accurate Categories (1:2:2:1) | Doubled Total (3828 Original + 3964 New Images) | Doubled: Even Distribution (1:1:1:1) | Doubled: Doubling Fewer Categories (2:2:1:1) | Doubled: Doubling Less Accurate Categories (1:2:2:1) | Tripled Total (3828 Original + 6838 New Images) | Tripled: Even Distribution (1:1:1:1) | Tripled: Doubling Fewer Categories (2:2:1:1) | Tripled: Doubling Less Accurate Categories (1:2:2:1) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Overall | 3828 | 3224 | 2418 | 2418 | 7792 | 3540 | 2656 | 2656 | 10,666 | 3980 | 2986 | 2986 |
| Advanced colorectal cancer | 810 | 806 | 806 | 403 | 994 | 885 | 885 | 443 | 1356 | 995 | 995 | 498 |
| Early colorectal cancer/high-grade dysplasia | 806 | 806 | 806 | 806 | 885 | 885 | 885 | 885 | 995 | 995 | 995 | 995 |
| Tubular adenoma with or without low-grade dysplasia | 1316 | 806 | 403 | 806 | 3634 | 885 | 443 | 885 | 4902 | 995 | 498 | 995 |
| Nonneoplasm | 896 | 806 | 403 | 403 | 2279 | 885 | 443 | 443 | 3413 | 995 | 498 | 498 |
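The per-class counts in the table above follow one simple pattern. This is our reading of the numbers, not a procedure the text states. The smallest class in each pool sets the base count: 806, 885, or 995. Classes given weight 2 keep that many images, and classes given weight 1 keep half of it, rounded half up (for example, 885 / 2 → 443). The sketch below reproduces the table's counts under that assumption. The dictionary keys, the constants `EVEN`, `FEWER`, and `LESS_ACCURATE`, and the functions `target_counts` and `subsample` are hypothetical names chosen for illustration.

```python
import random

# Hypothetical class keys; ratios are ACC:ECC/HGD:TA:Nonneoplasm.
EVEN = {"ACC": 1, "ECC/HGD": 1, "TA": 1, "Nonneoplasm": 1}           # 1:1:1:1
FEWER = {"ACC": 2, "ECC/HGD": 2, "TA": 1, "Nonneoplasm": 1}          # 2:2:1:1
LESS_ACCURATE = {"ACC": 1, "ECC/HGD": 2, "TA": 2, "Nonneoplasm": 1}  # 1:2:2:1


def target_counts(class_sizes, ratio):
    """Return how many images to keep per class for a given ratio.

    Assumption: classes with the largest weight keep as many images as the
    smallest class has; other classes are scaled down in proportion,
    rounded half up.
    """
    base = min(class_sizes.values())
    top = max(ratio.values())
    # Integer round-half-up of base * weight / top (avoids float rounding).
    return {
        name: (2 * base * ratio[name] + top) // (2 * top)
        for name in class_sizes
    }


def subsample(images_by_class, ratio, seed=0):
    """Draw the target number of images per class without replacement."""
    rng = random.Random(seed)
    sizes = {name: len(images) for name, images in images_by_class.items()}
    counts = target_counts(sizes, ratio)
    return {
        name: rng.sample(images_by_class[name], counts[name])
        for name in images_by_class
    }


if __name__ == "__main__":
    original = {"ACC": 810, "ECC/HGD": 806, "TA": 1316, "Nonneoplasm": 896}
    print(target_counts(original, FEWER))
    # {'ACC': 806, 'ECC/HGD': 806, 'TA': 403, 'Nonneoplasm': 403}
```

Anchoring every ratio on the smallest class means no class ever has to be oversampled. The cost is that the rebalanced subsets are smaller than the raw pools they are drawn from.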

| Subset | Original Dataset | Original: Even Distribution (1:1:1:1) | Original: Doubling Fewer Categories (2:2:1:1) | Original: Doubling Less Accurate Categories (1:2:2:1) | Doubled Total Data | Doubled: Even Distribution (1:1:1:1) | Doubled: Doubling Fewer Categories (2:2:1:1) | Doubled: Doubling Less Accurate Categories (1:2:2:1) | Tripled Total Data | Tripled: Even Distribution (1:1:1:1) | Tripled: Doubling Fewer Categories (2:2:1:1) | Tripled: Doubling Less Accurate Categories (1:2:2:1) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Overall | 3828 | 3224 | 2418 | 2418 | 7792 | 3540 | 2656 | 2656 | 10,666 | 3980 | 2986 | 2986 |
| Training dataset | 3444 | 2900 | 2176 | 2176 | 7013 | 3184 | 2258 | 2258 | 9599 | 3582 | 2688 | 2688 |
| Internal-test dataset | 384 | 324 | 242 | 242 | 779 | 356 | 398 | 398 | 1067 | 398 | 298 | 298 |
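The training and internal-test sizes above are close to a 90:10 split. One rule reproduces several columns exactly. It holds out 10% of each class separately and rounds half up: 81 + 81 + 132 + 90 = 384 internal-test images for the original dataset, and 779 and 1067 for the raw doubled and tripled pools. The same rule does not match every column. For example, it gives 400 test images for the tripled even-distribution subset, whereas the table lists 398, so the authors' actual procedure may differ. The function `stratified_split` and its `test_pct` parameter are hypothetical names. The per-class rounding is our assumption.

```python
import random


def stratified_split(images_by_class, test_pct=10, seed=0):
    """Split each class into training and internal-test parts.

    Assumption: test_pct percent of every class (rounded half up) is held
    out, so class proportions in the internal-test set match the pool.
    """
    rng = random.Random(seed)
    train, test = {}, {}
    for name, images in images_by_class.items():
        shuffled = list(images)
        rng.shuffle(shuffled)
        # Integer round-half-up of len * test_pct / 100.
        n_test = (len(shuffled) * test_pct + 50) // 100
        test[name] = shuffled[:n_test]
        train[name] = shuffled[n_test:]
    return train, test


if __name__ == "__main__":
    sizes = {"ACC": 810, "ECC/HGD": 806, "TA": 1316, "Nonneoplasm": 896}
    pool = {name: [f"{name}_{i}" for i in range(n)] for name, n in sizes.items()}
    train, test = stratified_split(pool)
    print(sum(map(len, train.values())), sum(map(len, test.values())))  # 3444 384
```

Splitting within each class keeps the 4-class ratio of the internal-test set the same as that of the subset it comes from. This matters when comparing accuracy across the rebalanced subsets.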

| Data Distribution (ACC:ECC/HGD:TA:Nonneoplasm) | Original Dataset (n = 3828) | Doubled Total Data; Combined Dataset (n = 7792) | Tripled Total Data (n = 10,666) |
|---|---|---|---|
| Raw data | 75.3% | 67.5% | 72.4% |
| Even distribution (1:1:1:1) | 72.8% (n = 3224) | 75.6% (n = 3540) | 74.0% (n = 3980) |
| Doubling the proportion of data for fewer categories (2:2:1:1) | 86.4% (n = 2418) | 78.9% (n = 2656) | 82.4% (n = 2986) |
| Doubling the proportion of data for less accurate categories (1:2:2:1) | 81.5% (n = 2418) | 74.9% (n = 2656) | 79.2% (n = 2986) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gong, E.J.; Bang, C.S.; Lee, J.J.; Yang, Y.J.; Baik, G.H. Impact of the Volume and Distribution of Training Datasets in the Development of Deep-Learning Models for the Diagnosis of Colorectal Polyps in Endoscopy Images. J. Pers. Med. 2022, 12, 1361. https://doi.org/10.3390/jpm12091361
