The Role of Artificial Intelligence in Endocrine Management: Assessing ChatGPT’s Responses to Prolactinoma Queries

Abstract

1. Introduction

2. Materials and Methods

Statistical Analyses

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Questions | Accuracy Score b | Adequacy Score c |

|---|---|---|

| What is prolactin? | 6 | 5 |

| What is the physiological role of prolactin in the body? | 6 | 5 |

| How is prolactin measured? | 6 | 3 |

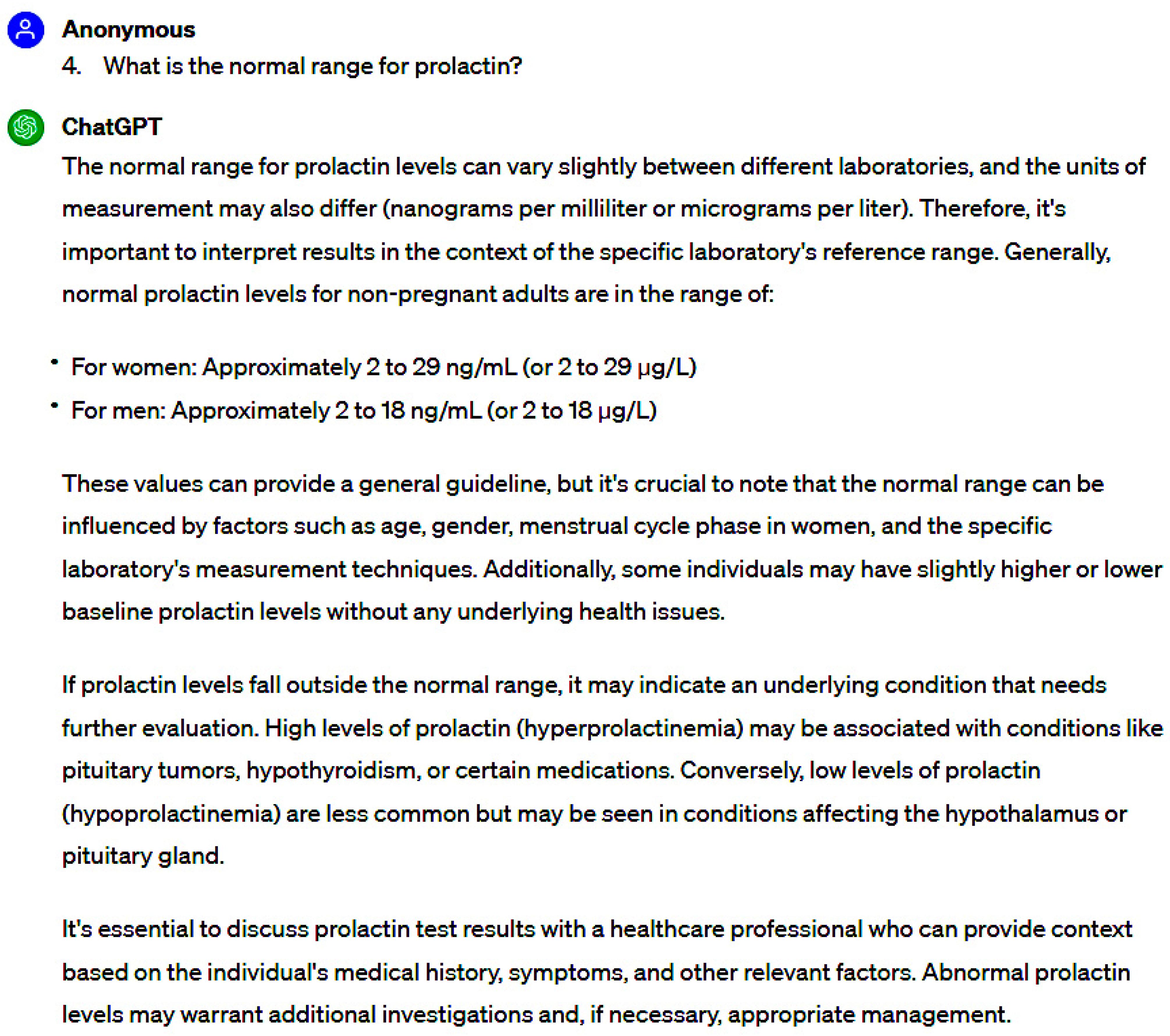

| What is the normal range for prolactin? | 6 | 5 |

| What precautions should be taken before blood sampling for prolactin measurement? | 5.5 | 4 |

| What factors contribute to elevated levels of prolactin? | 6 | 5 |

| Which medications can increase prolactin levels? | 6 | 5 |

| What are the symptoms of hyperprolactinemia? | 6 | 4.5 |

| What is macroprolactinemia? | 6 | 4 |

| What is prolactinoma? | 6 | 5 |

| What are the symptoms of prolactinoma? | 6 | 4 |

| How is the diagnosis of prolactinoma established? | 5.5 | 3 |

| In which gender is prolactinoma more commonly observed? | 6 | 5 |

| How can it be determined whether the elevated prolactin is due to a pituitary adenoma? | 6 | 4 |

| What is macroprolactinoma? | 6 | 5 |

| What is the treatment for prolactinoma? | 6 | 5 |

| Should every patient be treated, or is it possible to opt for a conservative approach with regular monitoring without intervention? | 5 | 4.5 |

| How is cabergoline used in the treatment of prolactinoma? | 6 | 5 |

| What are the side effects of cabergoline? | 6 | 3 |

| How is bromocriptine used in the treatment of prolactinoma? | 5.5 | 4 |

| What are the side effects of bromocriptine? | 5 | 3 |

| In what situations is surgery required for prolactinoma? | 6 | 5 |

| Is radiotherapy administered in the treatment of prolactinoma? | 6 | 5 |

| What should be the target prolactin level with treatment? | 5 | 4.5 |

| How long should medication be administered in the treatment of prolactinoma? | 6 | 3 |

| Do symptoms completely resolve after treatment? | 5.5 | 3 |

| Can prolactinoma shrink with medical treatment? | 6 | 5 |

| Is it possible to achieve complete recovery from prolactinoma after treatment? | 6 | 4 |

| How often should a patient diagnosed with prolactinoma consult a doctor? | 5 | 2 |

| How often should an MRI be performed in prolactinoma cases? | 5 | 2.5 |

| Can there be a recurrence after discontinuing medication for prolactinoma? | 5.5 | 3.5 |

| Can patients with prolactinoma conceive? | 5 | 3 |

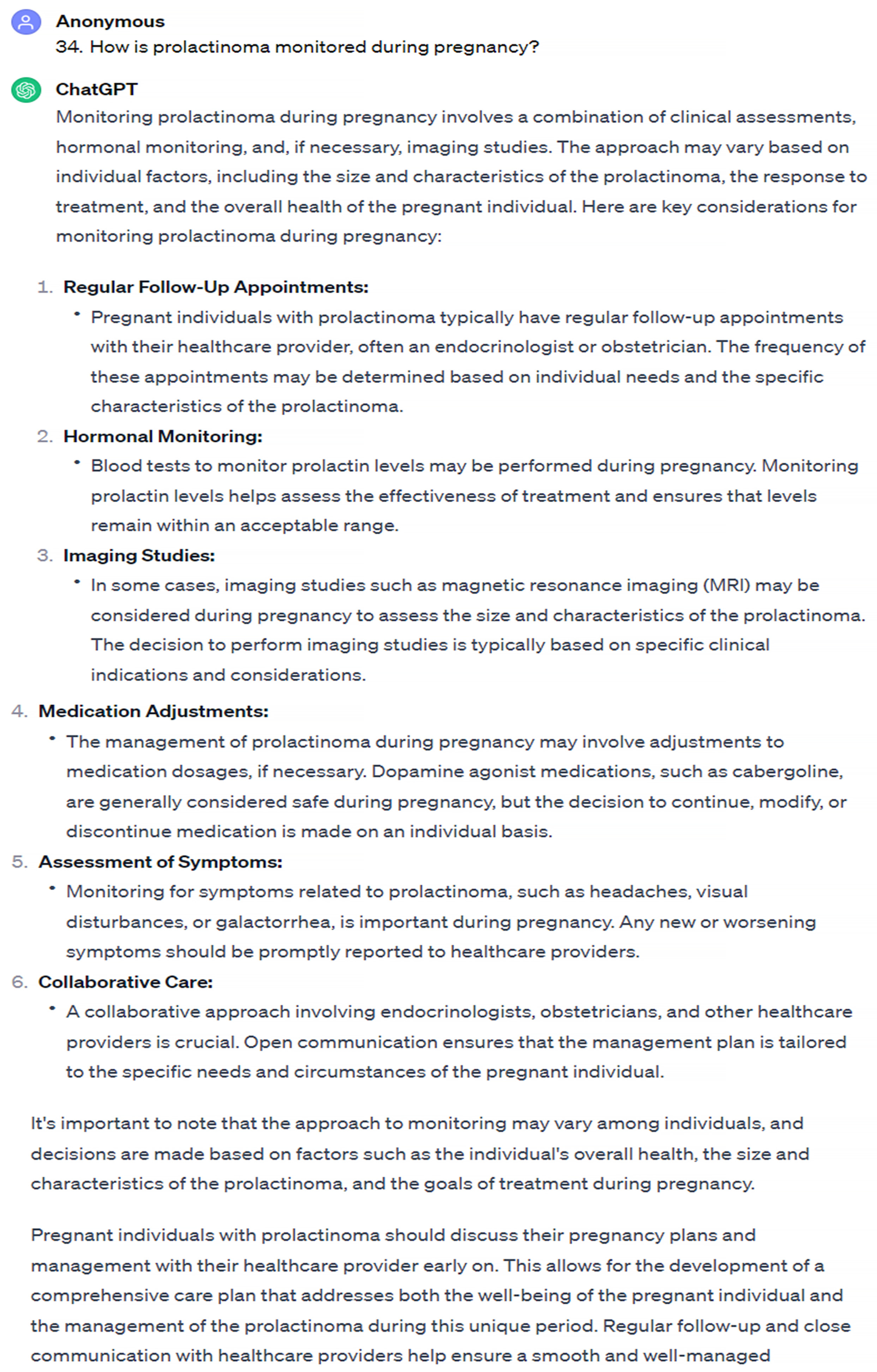

| How is prolactinoma monitored during pregnancy? | 2 | 3 |

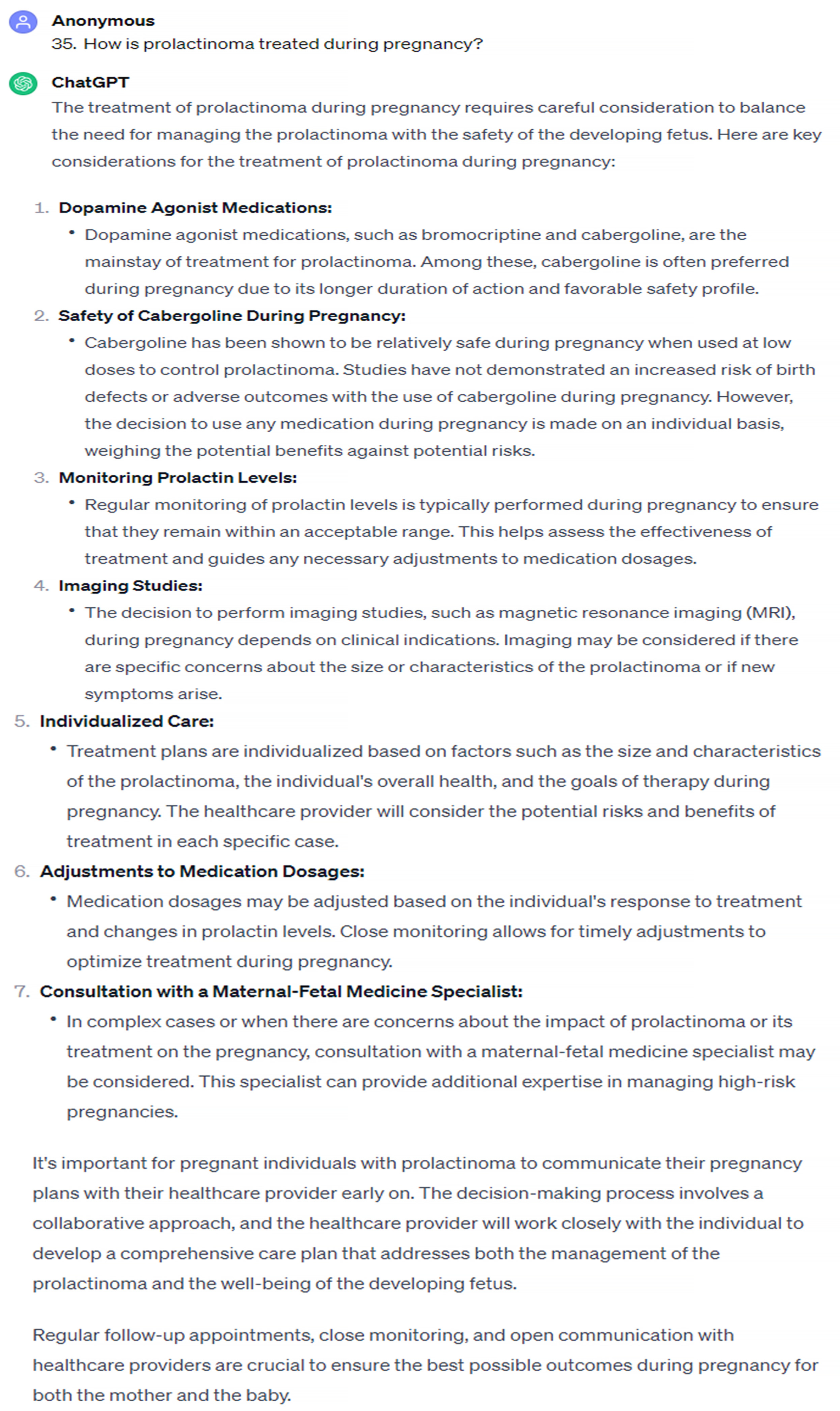

| How is prolactinoma treated during pregnancy? | 2 | 3 |

| In a pregnant patient with prolactinoma, when should medical treatment be discontinued? | 5 | 3.5 |

| Can women with prolactinoma use medication after childbirth? | 5 | 3.5 |

| Is breastfeeding allowed for a patient with prolactinoma after childbirth? | 5 | 4 |

| Should postmenopausal prolactinoma be treated? | 5.5 | 4 |

| What are the complications of elevated prolactin levels? | 6 | 4.5 |

| Which other hormones are affected by hyperprolactinemia? | 5.5 | 5 |

| What ocular manifestations are associated with prolactinoma? | 6 | 5 |

| Does prolactinoma cause headaches? | 6 | 5 |

| Does elevated prolactin affect sexual function in men? | 6 | 5 |

| Does hyperprolactinemia cause menstrual irregularities? | 6 | 5 |

| Is it possible to conceive while having elevated prolactin levels? | 6 | 5 |
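
The per-question scores above can be tied back to the Total column of the summary tables with a short calculation. The following is a minimal sketch, not the authors' analysis code: the helper name `summarize` is hypothetical, the score lists are transcribed from the question table in row order, and the use of the sample standard deviation is an assumption, since the method used for the reported SD is not stated here.

```python
from statistics import mean, median, stdev

# Scores transcribed from the question table, in row order (45 questions).
accuracy = [
    6, 6, 6, 6, 5.5, 6, 6, 6, 6, 6, 6, 5.5, 6, 6, 6,
    6, 5, 6, 6, 5.5, 5, 6, 6, 5, 6, 5.5, 6, 6, 5, 5,
    5.5, 5, 2, 2, 5, 5, 5, 5.5, 6, 5.5, 6, 6, 6, 6, 6,
]
adequacy = [
    5, 5, 3, 5, 4, 5, 5, 4.5, 4, 5, 4, 3, 5, 4, 5,
    5, 4.5, 5, 3, 4, 3, 5, 5, 4.5, 3, 3, 5, 4, 2, 2.5,
    3.5, 3, 3, 3, 3.5, 3.5, 4, 4, 4.5, 5, 5, 5, 5, 5, 5,
]


def summarize(scores):
    """Return basic descriptive statistics, rounded to one decimal place.

    Hypothetical helper for illustration; stdev() is the sample standard
    deviation, which is an assumption about how the SD was reported.
    """
    return {
        "n": len(scores),
        "mean": round(mean(scores), 1),
        "median": median(scores),
        "sd": round(stdev(scores), 1),
    }


accuracy_summary = summarize(accuracy)
adequacy_summary = summarize(adequacy)

print("accuracy:", accuracy_summary)
print("adequacy:", adequacy_summary)
```

Under these assumptions, the accuracy scores give a mean of 5.5 and a median of 6, and the adequacy scores give a mean of 4.2 and a median of 4.5, in line with the Total values reported for the average scores in the summary tables.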

| | General Information | Diagnostic Process | Treatment Process | Follow-Up | Pregnancy Period | Total | p Value |
|---|---|---|---|---|---|---|---|
| Rater 1 | | | | | | | |
| Median (IQR) | 6.0 (6.0–6.0) | 6.0 (6.0–6.0) | 6.0 (5.5–6.0) | 6.0 (5.0–6.0) | 5.0 (2.5–5.7) | 6.0 (5.8–6.0) | 0.001 |
| Mean (SD) | 6.0 (0.0) | 6.0 (0.0) | 5.8 (0.4) | 5.7 (0.5) | 4.4 (1.6) | 5.6 (0.9) | |
| Rater 2 | | | | | | | |
| Median (IQR) | 6.0 (6.0–6.0) | 6.0 (6.0–6.0) | 6.0 (5.0–6.0) | 5.0 (5.0–6.0) | 5.0 (2.5–5.7) | 6.0 (5.0–6.0) | 0.015 |
| Mean (SD) | 5.8 (0.3) | 5.8 (0.4) | 5.5 (0.5) | 5.4 (0.5) | 4.4 (1.6) | 5.5 (0.9) | |
| Average Score | | | | | | | |
| Median (IQR) | 6.0 (6.0–6.0) | 6.0 (6.0–6.0) | 6.0 (5.2–6.0) | 5.5 (5.0–6.0) | 5.0 (2.5–5.7) | 6.0 (5.4–6.0) | 0.005 |
| Mean (SD) | 5.9 (0.2) | 5.9 (0.2) | 5.7 (0.4) | 5.5 (0.5) | 4.4 (1.6) | 5.5 (0.9) | |

| | General Information | Diagnostic Process | Treatment Process | Follow-Up | Pregnancy Period | Total | p Value |
|---|---|---|---|---|---|---|---|
| Rater 1 | | | | | | | |
| Median (IQR) | 5.0 (4.5–5.0) | 5.0 (4.0–5.0) | 5.0 (3.5–5.0) | 4.0 (3.0–4.5) | 3.0 (3.0–4.8) | 5.0 (3.0–5.0) | 0.018 |
| Mean (SD) | 4.8 (0.4) | 4.6 (0.8) | 4.4 (0.9) | 3.6 (1.0) | 3.6 (0.9) | 4.3 (0.9) | |
| Rater 2 | | | | | | | |
| Median (IQR) | 5.0 (4.0–5.0) | 5.0 (4.0–5.0) | 4.0 (3.5–5.0) | 3.0 (2.5–4.5) | 4.0 (3.0–4.8) | 4.0 (3.0–5.0) | 0.059 |
| Mean (SD) | 4.7 (0.5) | 4.4 (0.8) | 4.2 (0.8) | 3.0 (1.1) | 3.9 (0.8) | 4.1 (0.9) | |
| Average Score | | | | | | | |
| Median (IQR) | 5.0 (4.2–5.0) | 5.0 (4.0–5.0) | 4.5 (3.5–5.0) | 3.5 (2.7–4.5) | 3.5 (3.0–4.8) | 4.5 (3.5–5.0) | 0.023 |
| Mean (SD) | 4.9 (1.1) | 4.5 (0.8) | 4.3 (0.8) | 3.5 (1.1) | 3.8 (0.8) | 4.2 (0.9) | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Şenoymak, M.C.; Erbatur, N.H.; Şenoymak, İ.; Fırat, S.N. The Role of Artificial Intelligence in Endocrine Management: Assessing ChatGPT’s Responses to Prolactinoma Queries. J. Pers. Med. 2024, 14, 330. https://doi.org/10.3390/jpm14040330
