Assessment of Transfer Learning Capabilities for Fatigue Damage Classification and Detection in Aluminum Specimens with Different Notch Geometries

Abstract

1. Introduction

2. Experimental Method

2.1. Fatigue Testing

2.2. Binary Classification of the Ultrasonic Time-Series Data Using a Confocal Microscope

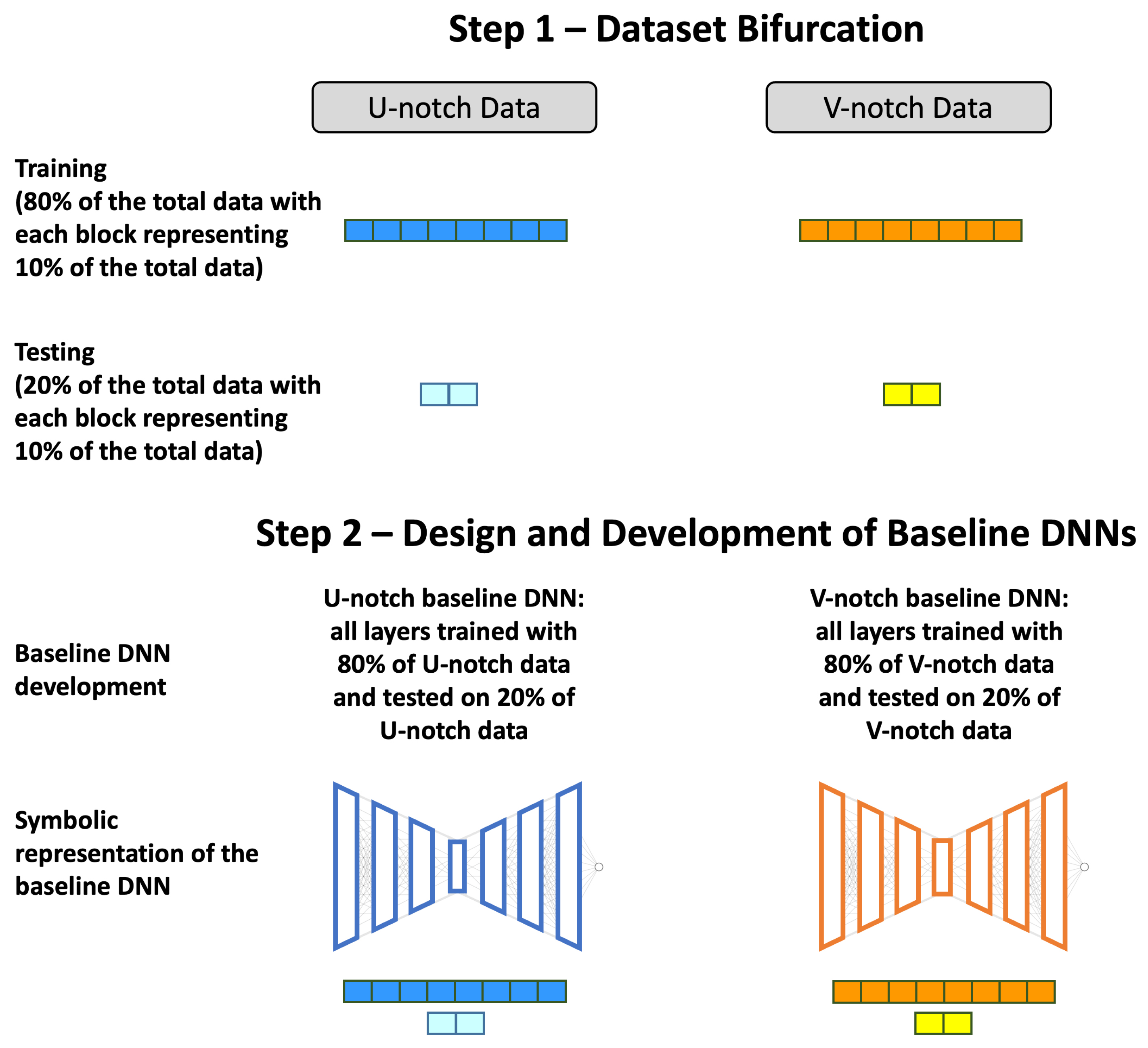

3. Design of Deep Neural Networks (DNNs) and Transfer Learning Methodology

4. Results and Discussion

4.1. Performance of the Baseline DNNs

4.2. Transductive Results

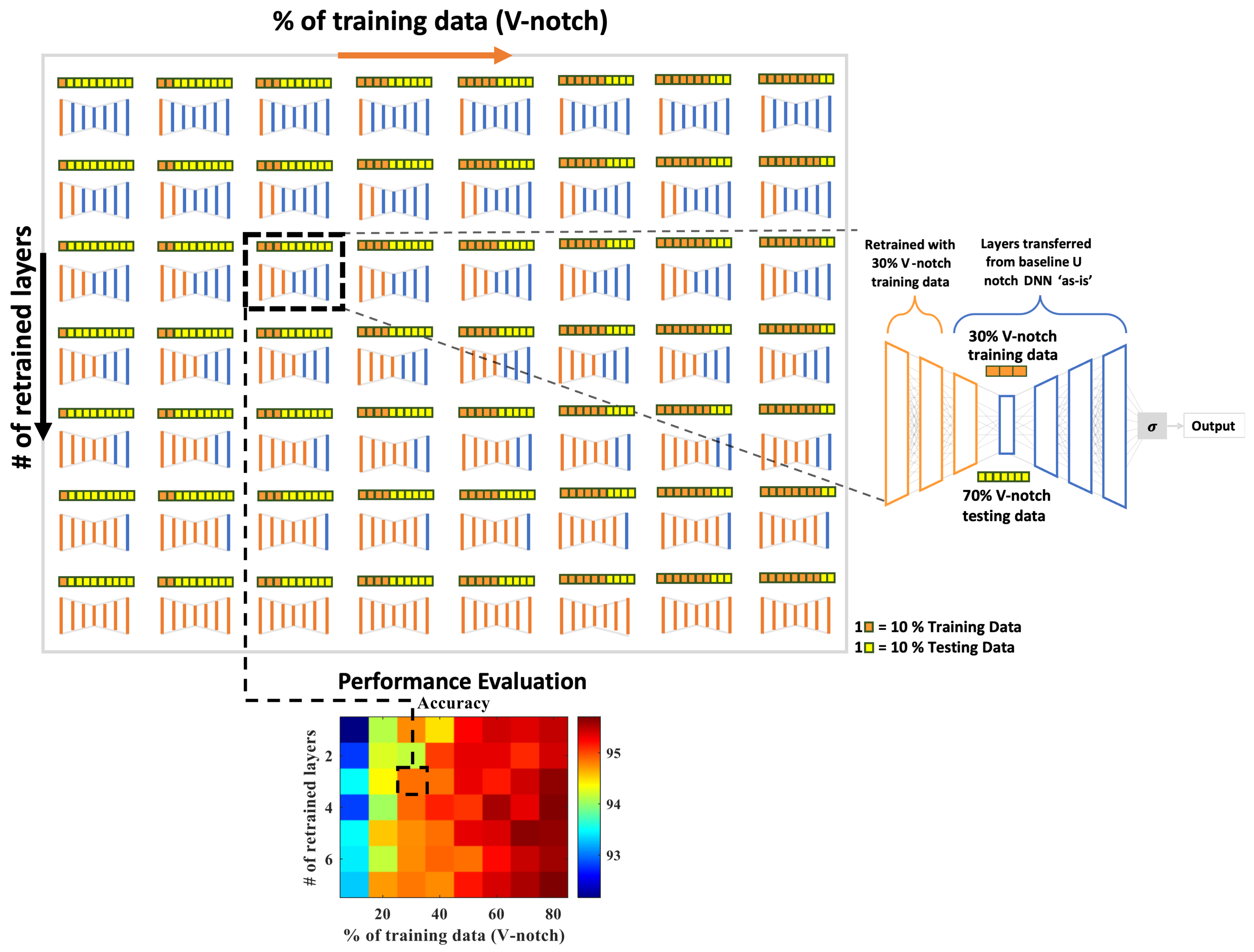

4.3. Effect of Transfer Learning

4.3.1. Impact of the Percentage of Training Data

4.3.2. Impact of the Number of Retrained Layers

5. Conclusions and Future Work

- Verification of the proposed method with additional experimental data in which the emergence of micro-cracks is established through independent measurement methods.

- Integration of the current framework with physics-informed neural networks to enable a mechanistic interpretation of fatigue failure.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dharmadhikari, S.; Raut, R.; Bhattacharya, C.; Ray, A.; Basak, A. Assessment of Transfer Learning Capabilities for Fatigue Damage Classification and Detection in Aluminum Specimens with Different Notch Geometries. Metals 2022, 12, 1849. https://doi.org/10.3390/met12111849
