Microstructure Image Segmentation of 23CrNi3Mo Steel Carburized Layer Based on a Deep Neural Network

Abstract

1. Introduction

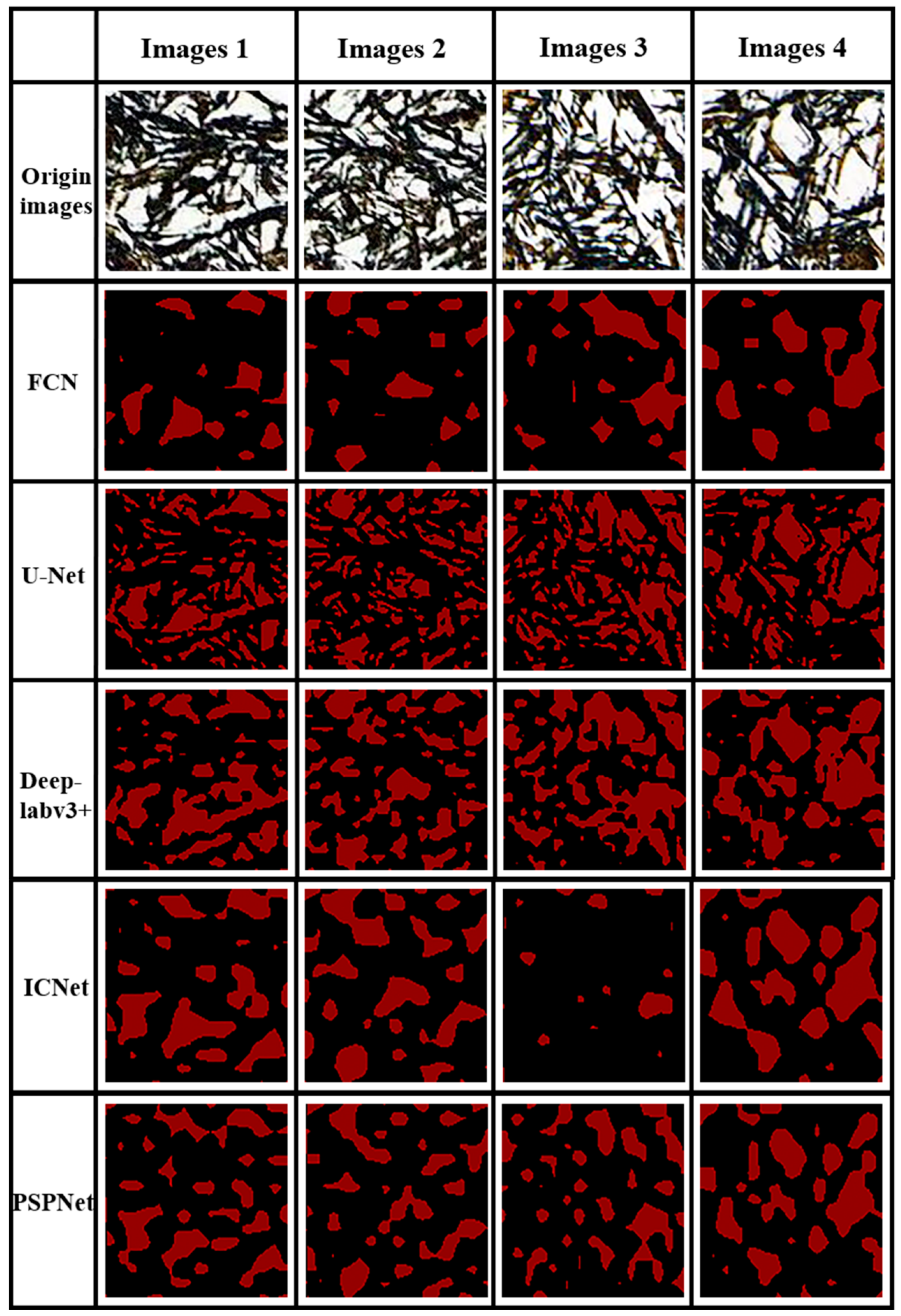

- (1) Establish five high-precision neural network models, namely FCN, U-Net, DeepLabv3+, PSPNet, and ICNet, and train them on a self-built 23CrNi3Mo steel carburized layer microstructure dataset (MCLD) to determine the best neural network model for carburized layer microstructure segmentation.

- (2) Improve the segmentation accuracy of the deep neural network model for the microstructure of the carburized layer by optimizing the neural network model.

2. Experiment and Model Building

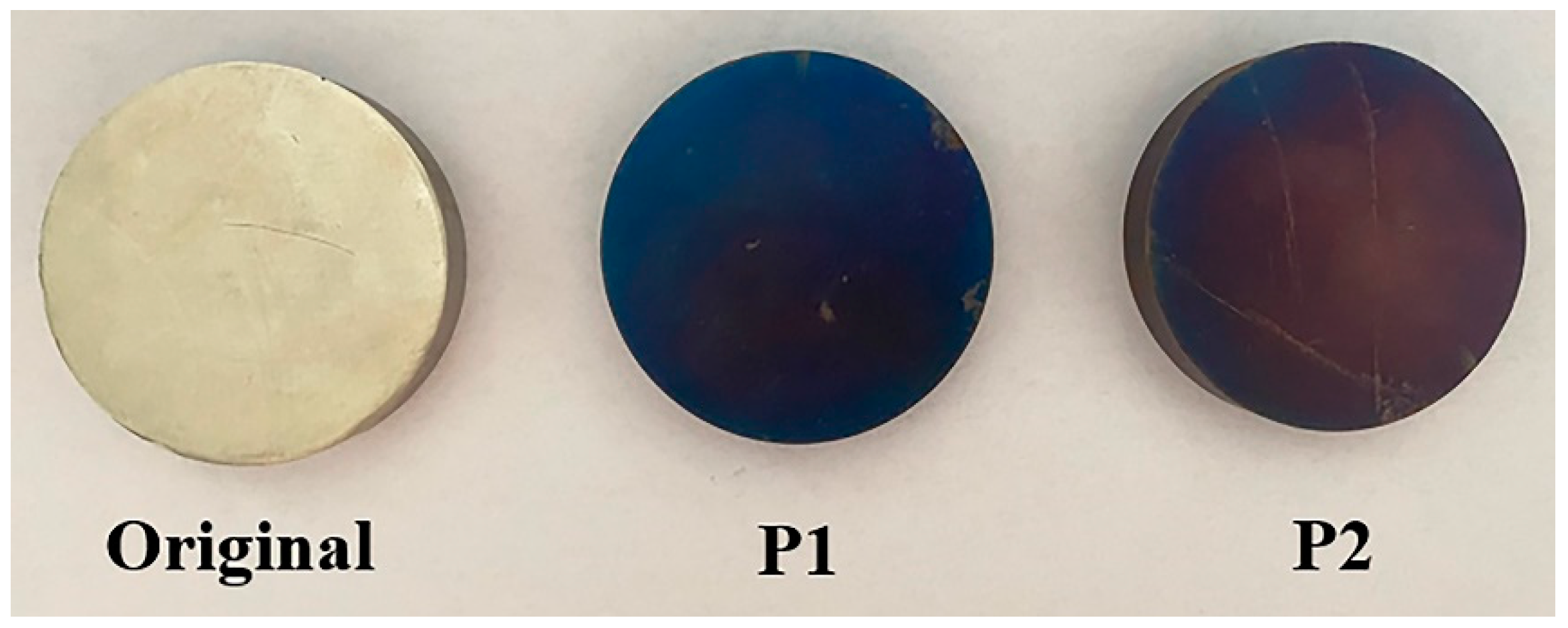

2.1. Experiment

2.1.1. Heat Treatment Process

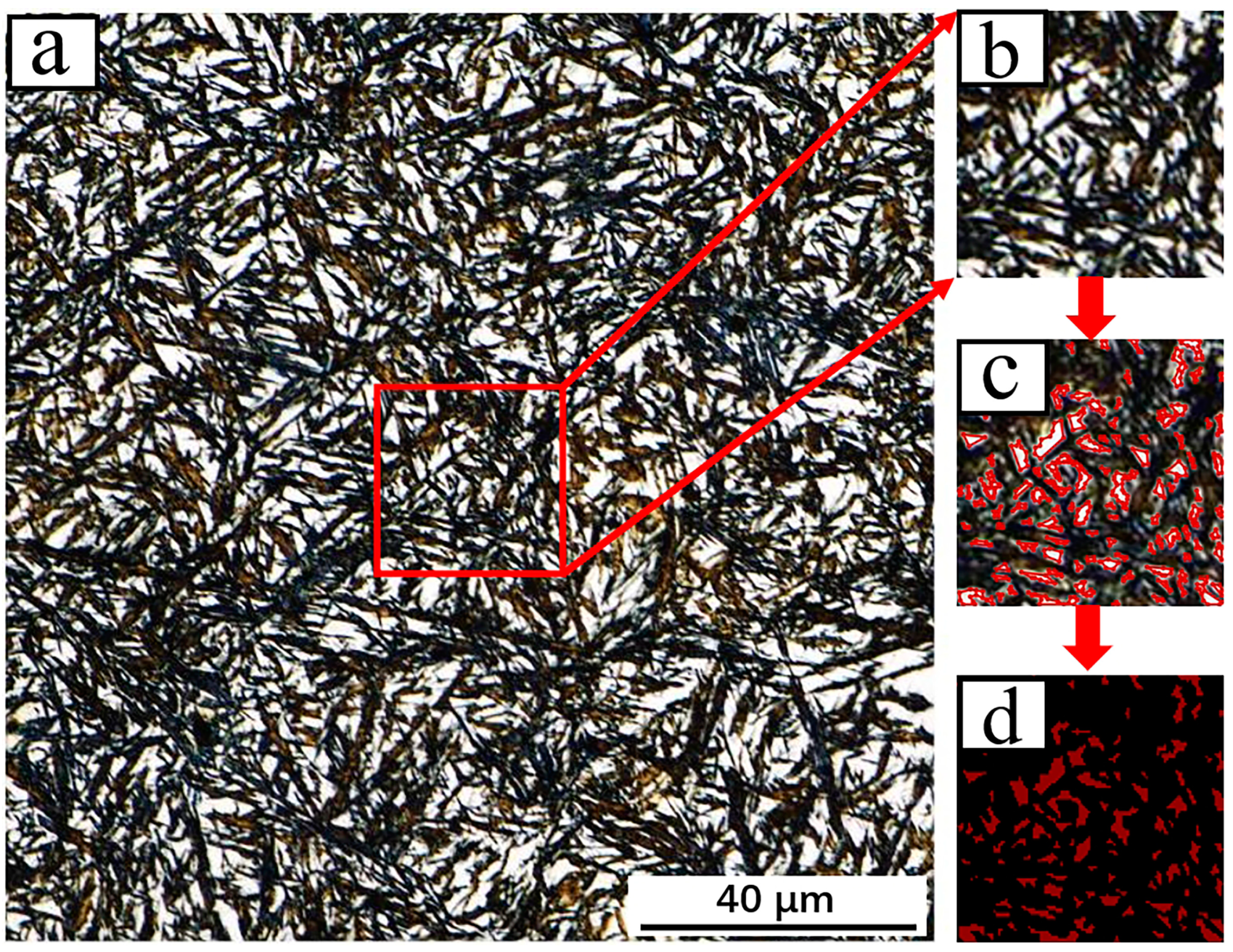

2.1.2. Microstructure and Electron Backscatter Diffraction (EBSD)

2.2. Construction of a Microstructure Segmentation Model of a Carburized Layer

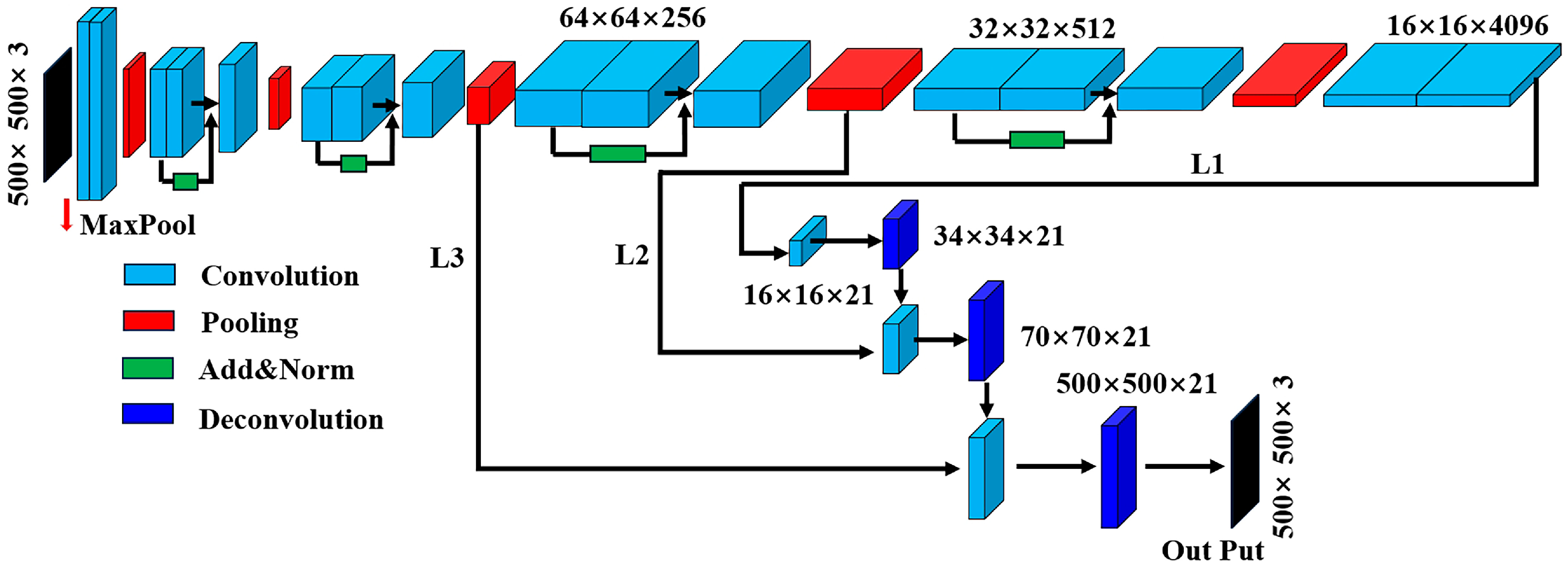

2.2.1. Fully Convolutional Network (FCN) Segmentation Model

2.2.2. U-Net Segmentation Model

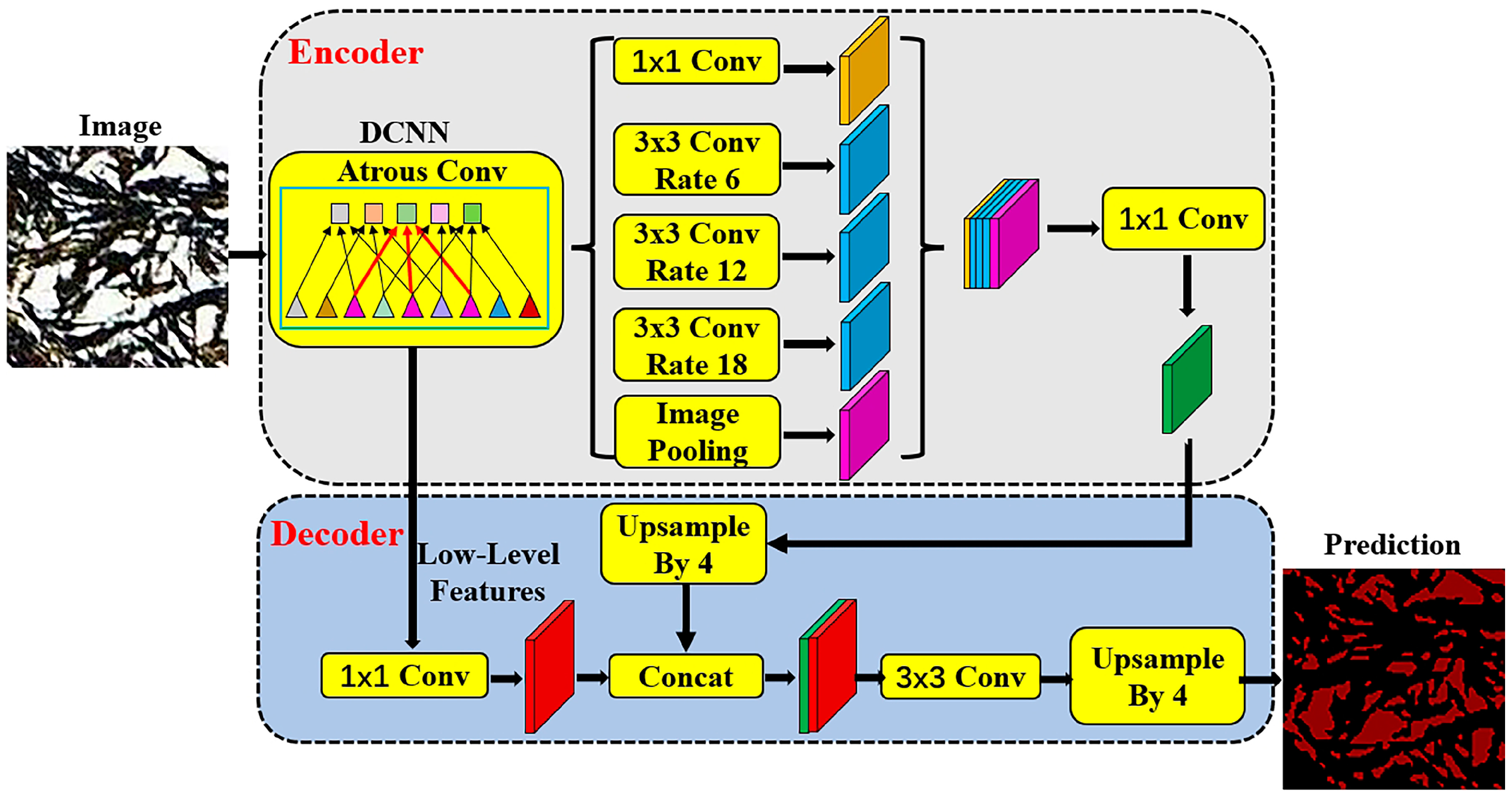

2.2.3. DeepLabv3+ Segmentation Model

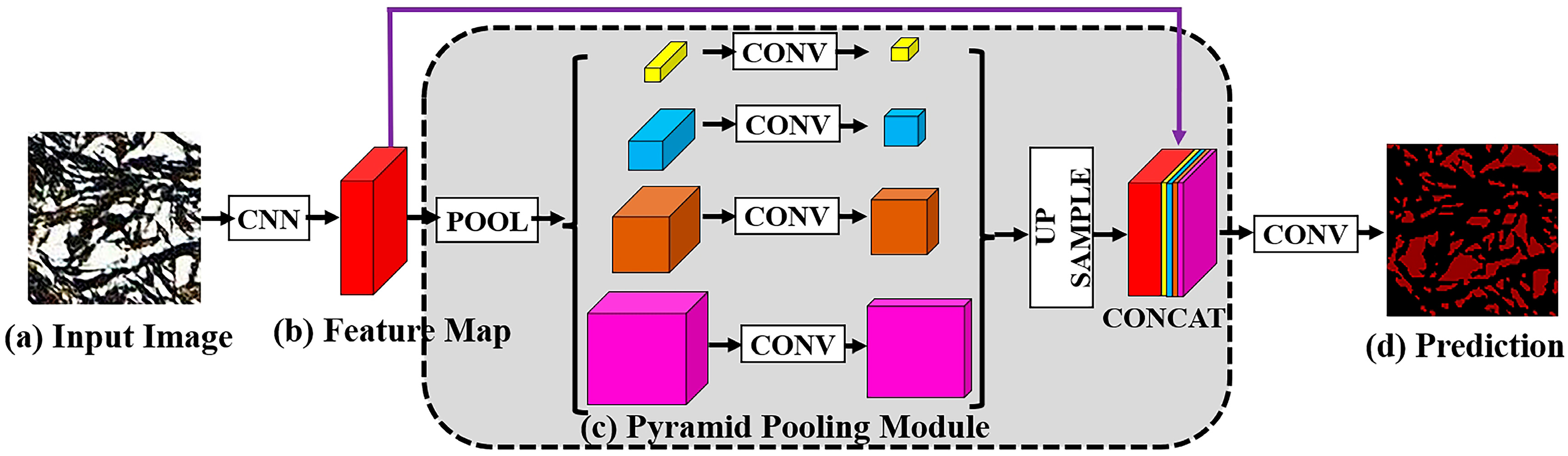

2.2.4. Pyramid Scene Parsing Network (PSPNet) Segmentation Model

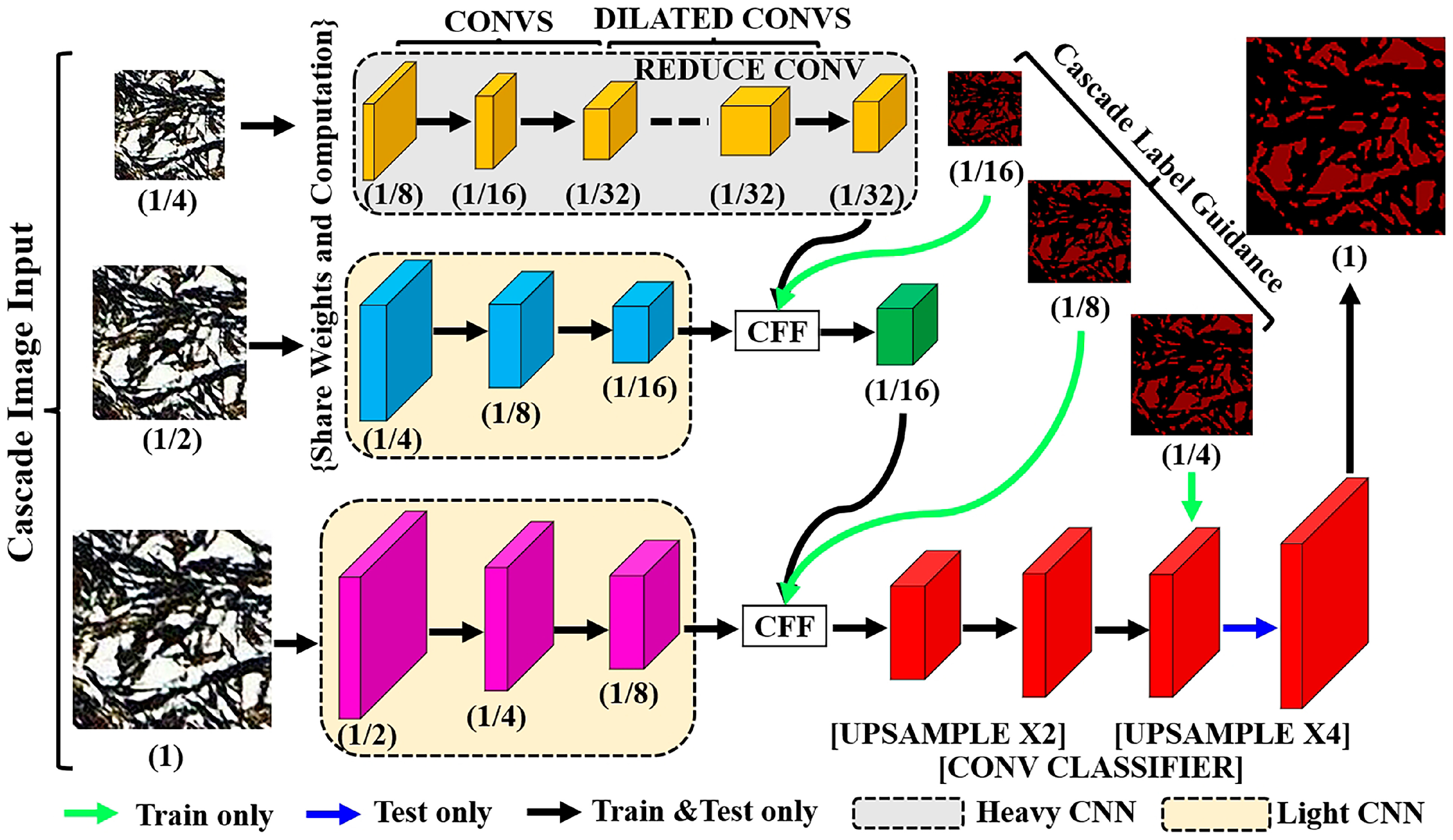

2.2.5. Image Cascade Network (ICNet) Segmentation Model

3. Experimental Results and Analysis

3.1. Data Processing

3.2. Model Segmentation Evaluation Metrics

3.3. DeepLabv3+ Model Segmentation Results of Microstructure Images

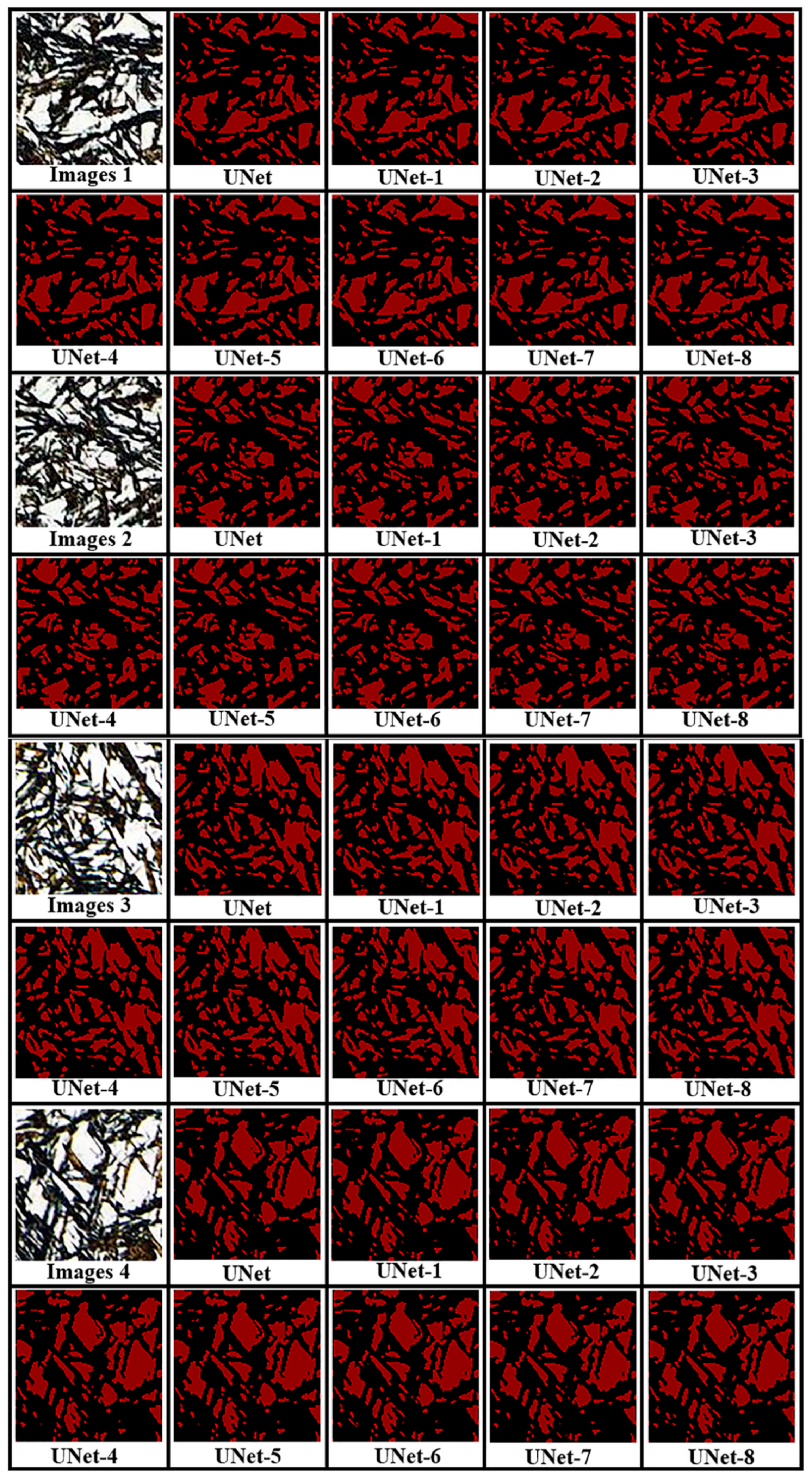

3.4. Optimization of the U-Net Network Model

- (1) U-Net-1: replace the ReLU activation function of the U-Net model with the GELU activation function;

- (2) U-Net-2: replace the backbone network of the U-Net model with EfficientNet-B0;

- (3) U-Net-3: set the batch size of the training phase of the U-Net model to 16;

- (4) U-Net-4: add the ECA attention mechanism and the DropBlock regularization method to the U-Net model;

- (5) U-Net-5: replace the ReLU activation function of the U-Net model with the Mish activation function and add the ECA attention mechanism;

- (6) U-Net-6: replace the ReLU activation function of the U-Net model with the Mish activation function, add the ECA attention mechanism, and add the DropBlock regularization method;

- (7) U-Net-7: add network skip-layer components to the U-Net model to form the U-Net++ model;

- (8) U-Net-8: add the residual network ResNet50 to the U-Net model.
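Several of the variants above swap ReLU for GELU or Mish and add ECA channel attention. The following is a minimal pure-Python sketch of those building blocks, not the authors' implementation: the function names, the list-based feature-map layout, and the fixed (rather than learned) 1D convolution weights passed to `eca` are illustrative assumptions. DropBlock is not shown.

```python
import math


def relu(x: float) -> float:
    return max(0.0, x)


def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    # Mish: x * tanh(softplus(x)); smooth, slightly negative for x < 0.
    return x * math.tanh(softplus(x))


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    # Adaptive kernel size from the ECA formulation:
    # nearest odd integer derived from (log2(C) + b) / gamma.
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def eca(feature_map, weights):
    """Apply ECA-style channel attention.

    feature_map: list of channels, each a 2D list [H][W] (assumed layout).
    weights: odd-length 1D kernel shared across channels. In a real
    network these weights are learned; here they are supplied by hand.
    """
    pad = len(weights) // 2
    # Global average pooling: one scalar per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_map]
    padded = [0.0] * pad + pooled + [0.0] * pad
    # 1D convolution across the channel axis, then a sigmoid gate.
    gates = [
        sigmoid(sum(w * padded[c + j] for j, w in enumerate(weights)))
        for c in range(len(pooled))
    ]
    # Rescale every pixel of each channel by that channel's gate.
    return [[[v * g for v in row] for row in ch]
            for ch, g in zip(feature_map, gates)]
```

Unlike ReLU, both GELU and Mish let small negative values through, which is the property the U-Net-1, U-Net-5, and U-Net-6 variants exploit; the ECA gate only reweights channels and leaves the spatial layout untouched.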

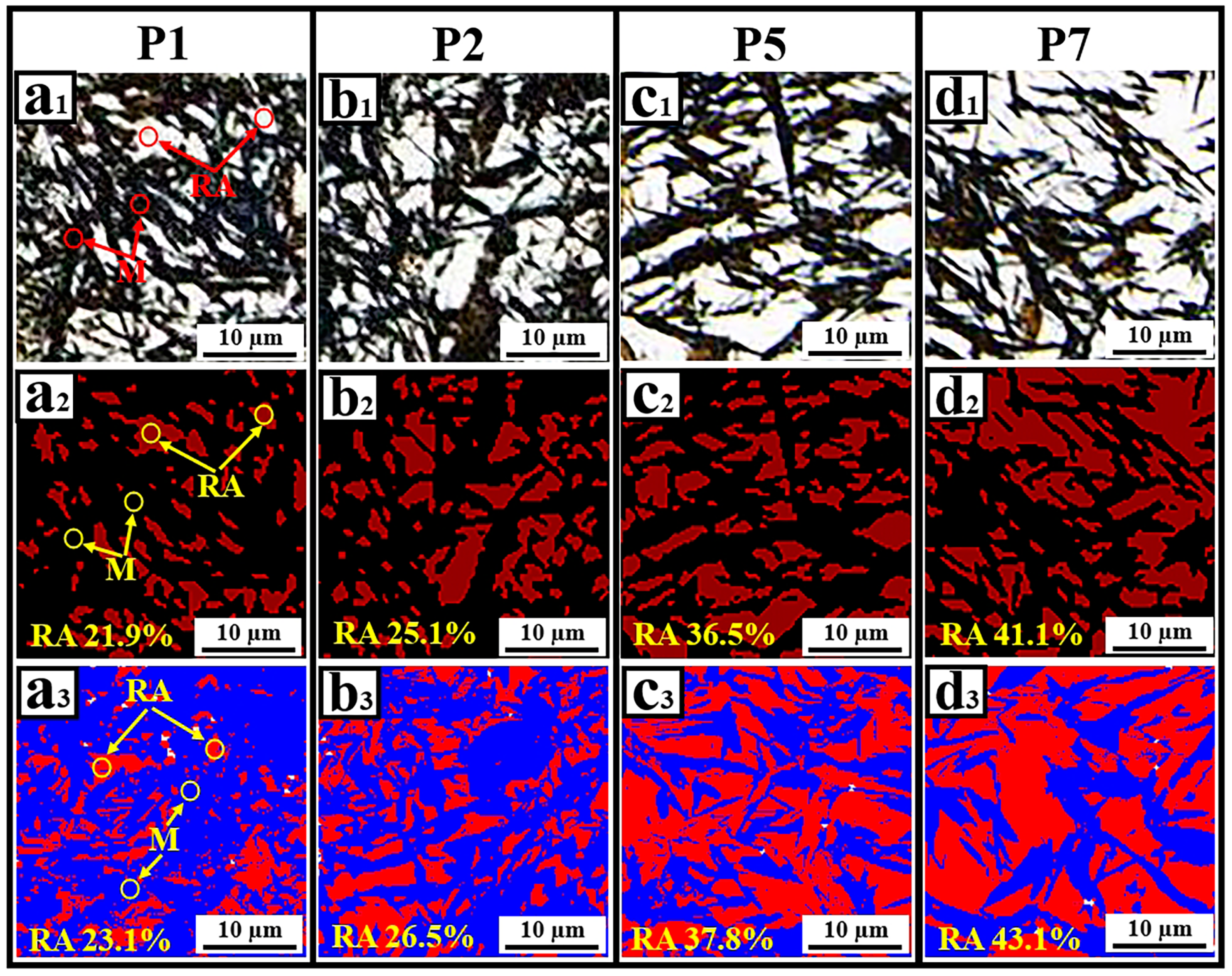

3.5. U-Net-6 Model for Residual Austenite Segmentation in the Carburized Layer Compared to EBSD

4. Conclusions

- (1)

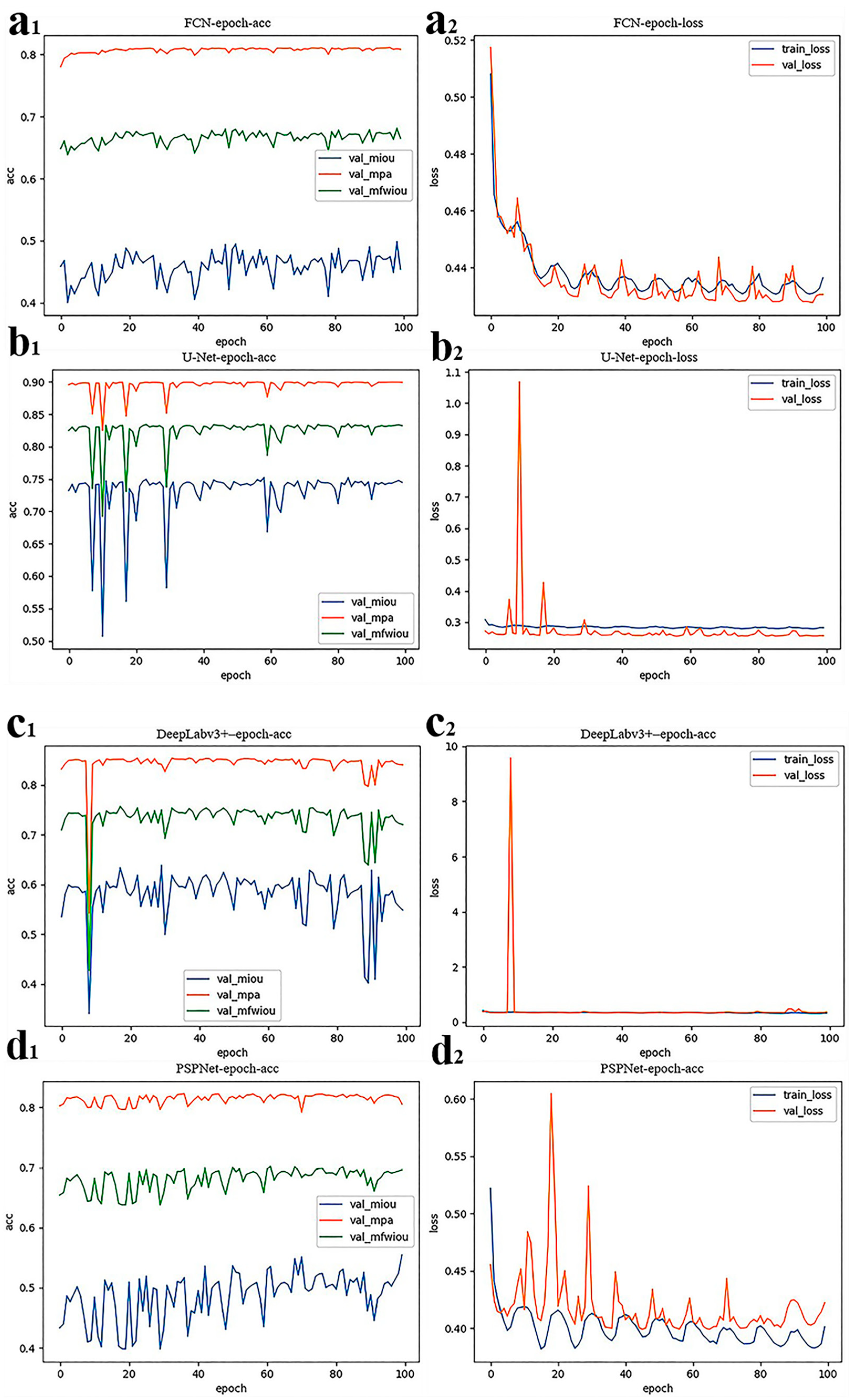

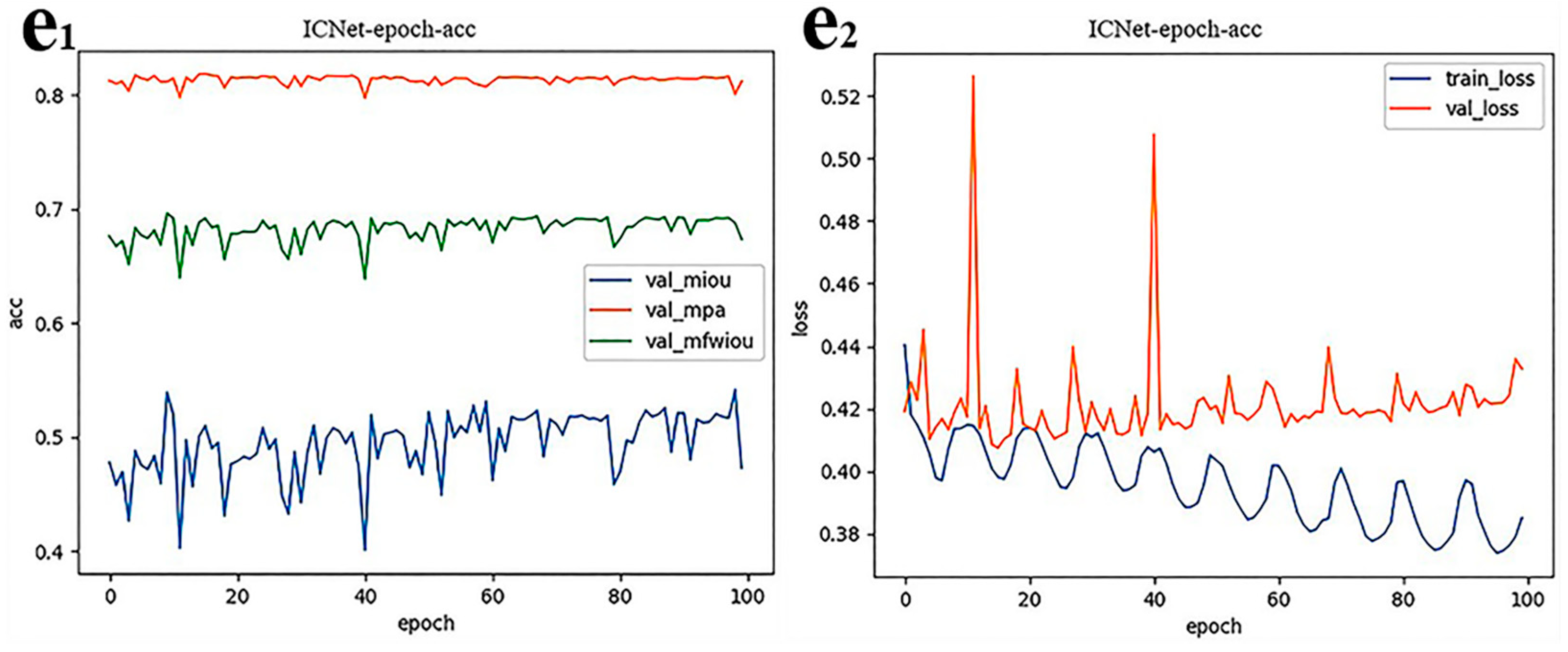

- Five neural network models based on deep learning—an FCN, U-Net, DeepLabv3+, PSPNet, and ICNet—were trained on the self-built 23CrNi3Mo steel carburized layer microstructure dataset (MCLD). The experimental results show that the U-Net model has the best segmentation effect on the microstructure of the carburized layer, with three evaluation indicators: MIOU of 0.75, MPA of 0.89, and MFWIOU of 0.83.

- (2)

- After optimizing the U-Net model, it is found that the U-Net-6 model, after replacing the activation function RELU with Mish and adding an attention mechanism and regularization treatment, has more advantages for the metallographic structure segmentation of the carburized layer. The evaluation indexes MIOU, MPA, and MFWIOU of the U-Net-6 model for microstructure segmentation are 0.76, 0.92, and 0.85, respectively.

- (3)

- Segmentation recognition and EBSD comparative analysis were performed on the metallographic images of the carburized surface treated with four different carburizing processes—P1, P2, P5, and P7—using the U-Net-6 algorithm. The segmentation results of the U-Net-6 model for residual austenite on the carburized surface of processes P1, P2, P5, and P7 were compared with the EBSD recognition results, with errors of 5.2%, 5.3%, 3.4%, and 4.6%, respectively.
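The comparison in conclusion (3) amounts to measuring the area fraction of one phase in a segmentation mask and comparing it with a reference value. A minimal sketch follows, under stated assumptions: the mask is a 2D list of integer class labels, the label `1` used for residual austenite is hypothetical, and because the error definition used in the paper is not given here, both an absolute and a relative difference are returned.

```python
def phase_fraction(mask, phase_label):
    """Fraction of pixels in a 2D label mask that carry phase_label."""
    total = sum(len(row) for row in mask)
    hits = sum(row.count(phase_label) for row in mask)
    return hits / total


def compare_with_reference(predicted_fraction, reference_fraction):
    """Return (absolute error in percentage points, relative error in %).

    Which of the two corresponds to the reported errors is an assumption
    left open; the source does not define it in this section.
    """
    diff = abs(predicted_fraction - reference_fraction)
    return diff * 100.0, diff / reference_fraction * 100.0


# Toy mask with hypothetical labels: 1 = residual austenite.
mask = [
    [0, 1, 1, 0],
    [2, 1, 0, 0],
]
fraction = phase_fraction(mask, phase_label=1)
abs_err, rel_err = compare_with_reference(fraction, reference_fraction=0.30)
```

The reference fraction here is a made-up number for illustration, not an EBSD measurement from the paper.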

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Carburization Process | Carburizing Temperature (°C) | Boost Stage Time (h) | Diffusion Stage Time (h) | Oil Quenching Temperature (°C) | Tempering Temperature (°C) | Tempering Time (h) |
|---|---|---|---|---|---|---|
| P1 | 930 | 1 | 2 | 860 | 200 | 2 |
| P2 | 930 | 1 | 3 | 860 | 200 | 2 |
| P3 | 930 | 1 | 4 | 860 | 200 | 2 |
| P4 | 930 | 2 | 4 | 860 | 200 | 2 |
| P5 | 930 | 2 | 5 | 860 | 200 | 2 |
| P6 | 930 | 2 | 6 | 860 | 200 | 2 |
| P7 | 930 | 3 | 5 | 860 | 200 | 2 |

| Model | MIOU | MPA | MFWIOU |
|---|---|---|---|
| FCN | 0.50 | 0.81 | 0.68 |
| U-Net | 0.75 | 0.89 | 0.83 |
| DeepLabv3+ | 0.64 | 0.84 | 0.75 |
| PSPNet | 0.55 | 0.81 | 0.70 |
| ICNet | 0.54 | 0.80 | 0.69 |

| Model | MIOU | MPA | MFWIOU |
|---|---|---|---|
| U-Net | 0.75 | 0.89 | 0.83 |
| U-Net-1 | 0.74 | 0.89 | 0.83 |
| U-Net-2 | 0.74 | 0.89 | 0.82 |
| U-Net-3 | 0.75 | 0.90 | 0.83 |
| U-Net-4 | 0.75 | 0.90 | 0.83 |
| U-Net-5 | 0.75 | 0.90 | 0.83 |
| U-Net-6 | 0.76 | 0.92 | 0.85 |
| U-Net-7 | 0.75 | 0.90 | 0.83 |
| U-Net-8 | 0.75 | 0.89 | 0.83 |
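The MIOU, MPA, and MFWIOU columns in the tables above are typically derived from a pixel-level confusion matrix. The sketch below uses the standard definitions of mean IoU, mean pixel accuracy, and frequency-weighted IoU; it assumes that MFWIOU in this paper denotes the usual frequency-weighted IoU and that classes absent from the ground truth are skipped, neither of which is confirmed by the source. Function names are illustrative.

```python
def confusion_matrix(true_labels, pred_labels, num_classes):
    """cm[t][p] counts pixels of true class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(true_labels, pred_labels):
        cm[t][p] += 1
    return cm


def segmentation_metrics(cm):
    """Return (mean IoU, mean pixel accuracy, frequency-weighted IoU)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    ious, accs, fw_iou = [], [], 0.0
    for i in range(n):
        tp = cm[i][i]
        gt = sum(cm[i])                          # pixels truly in class i
        pred = sum(cm[r][i] for r in range(n))   # pixels predicted as class i
        if gt == 0:
            continue  # assumption: ignore classes absent from ground truth
        iou = tp / (gt + pred - tp)
        ious.append(iou)
        accs.append(tp / gt)
        fw_iou += (gt / total) * iou             # weight IoU by class frequency
    return sum(ious) / len(ious), sum(accs) / len(accs), fw_iou


# Flattened toy masks with two classes.
truth = [0, 0, 1, 1]
pred = [0, 1, 1, 1]
miou, mpa, fwiou = segmentation_metrics(confusion_matrix(truth, pred, 2))
```

Because the frequency-weighted variant weights each class by its pixel share, it tends to sit between MIOU and MPA when large classes are segmented well, which is consistent with the ordering of the three columns in both tables.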


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gong, B.; Zhu, Z. Microstructure Image Segmentation of 23CrNi3Mo Steel Carburized Layer Based on a Deep Neural Network. Metals 2024, 14, 761. https://doi.org/10.3390/met14070761
