Robust Lane Detection Algorithm for Autonomous Trucks in Container Terminals

Abstract

1. Introduction

2. Related Work

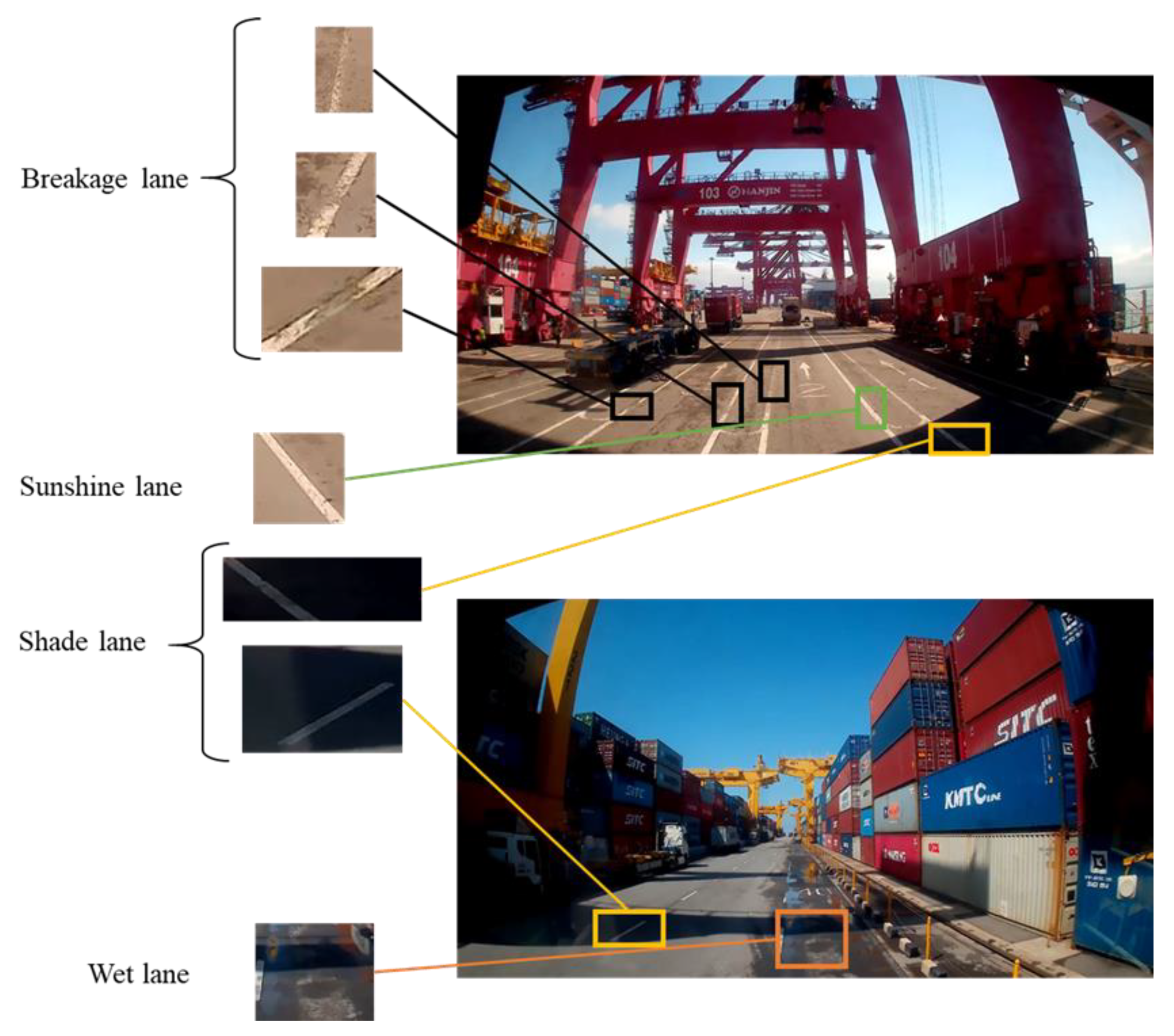

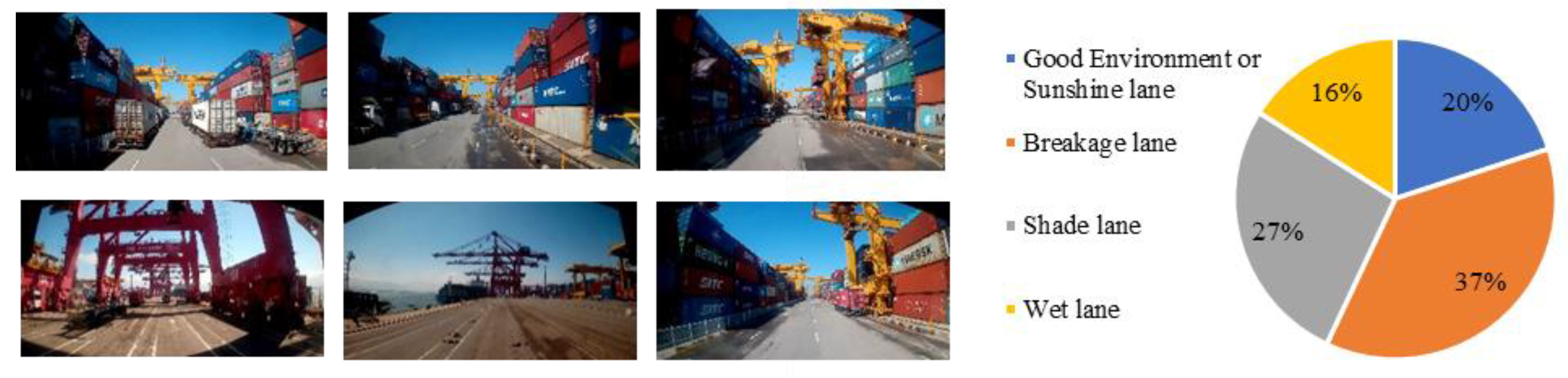

3. Problem Description

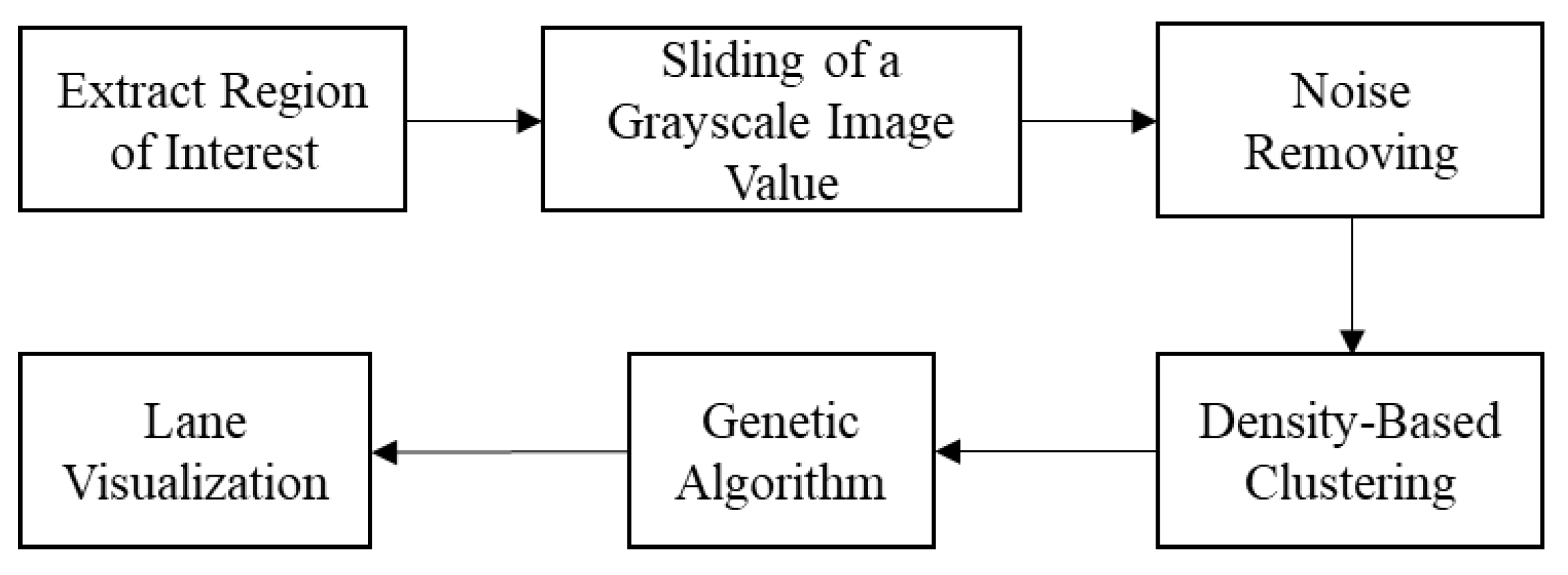

4. Robust Lane Detection Model

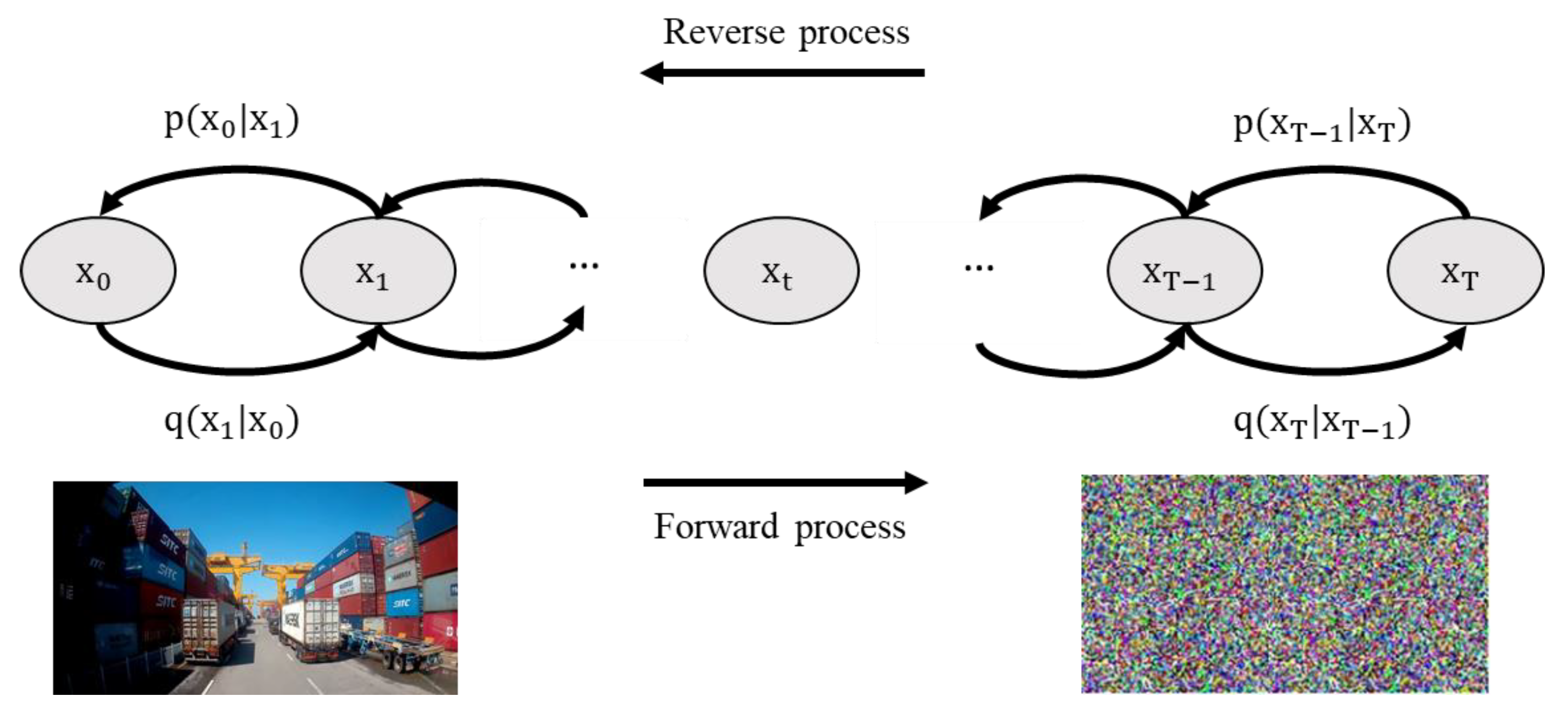

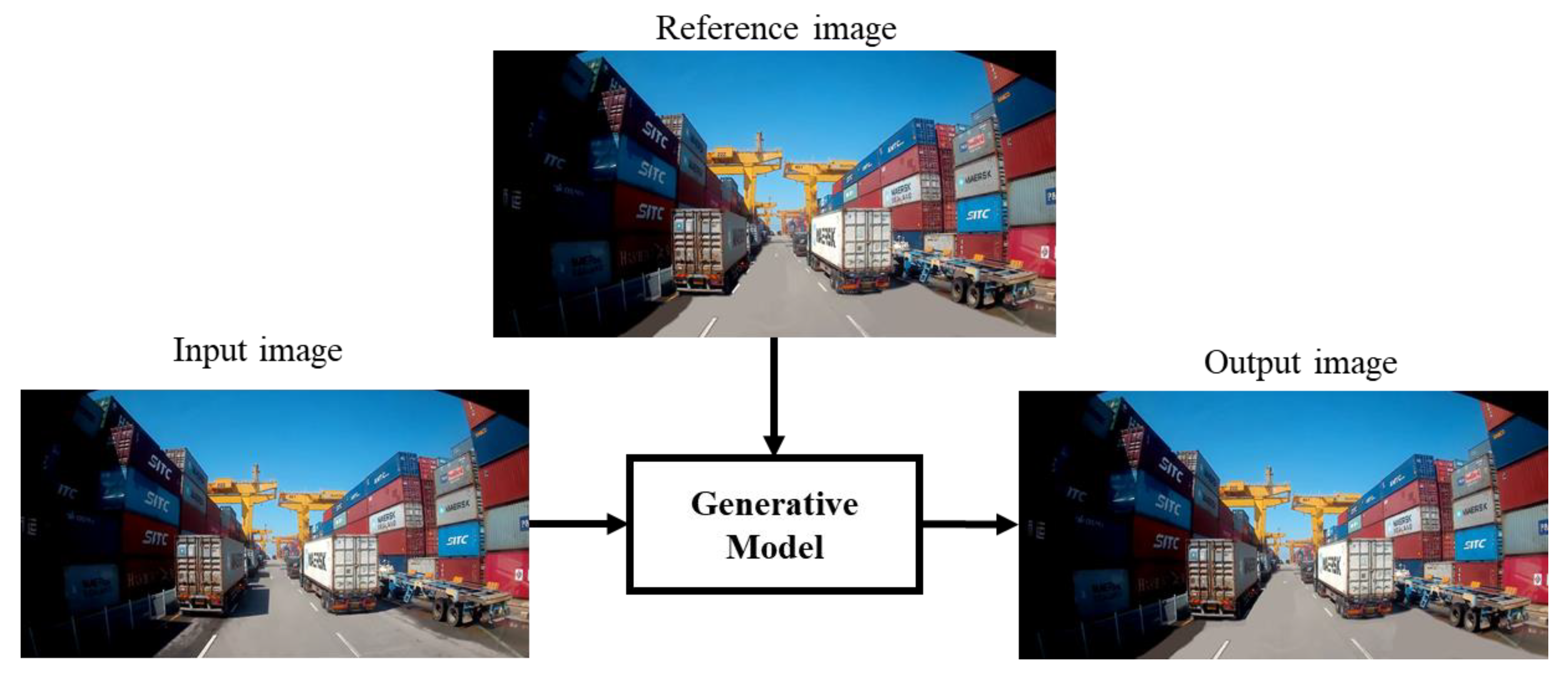

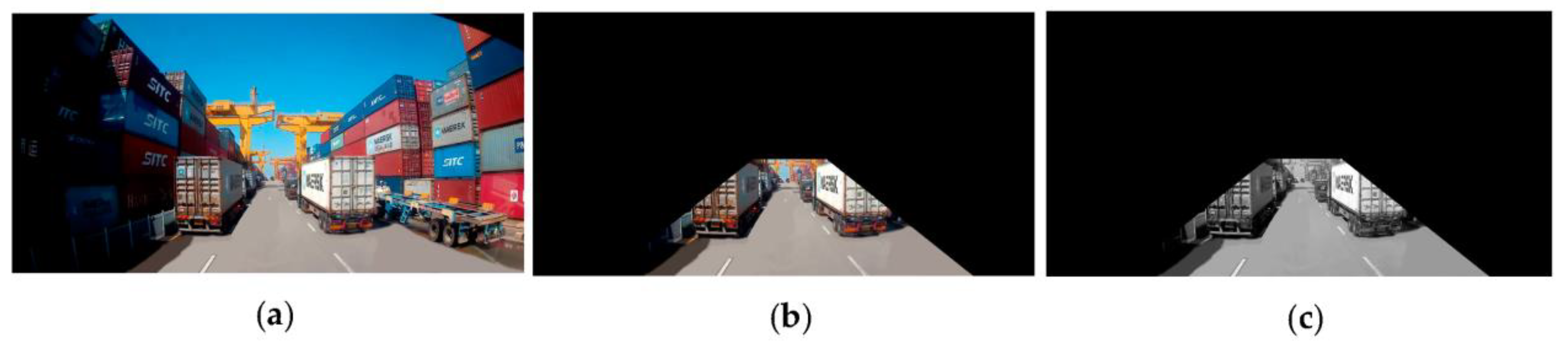

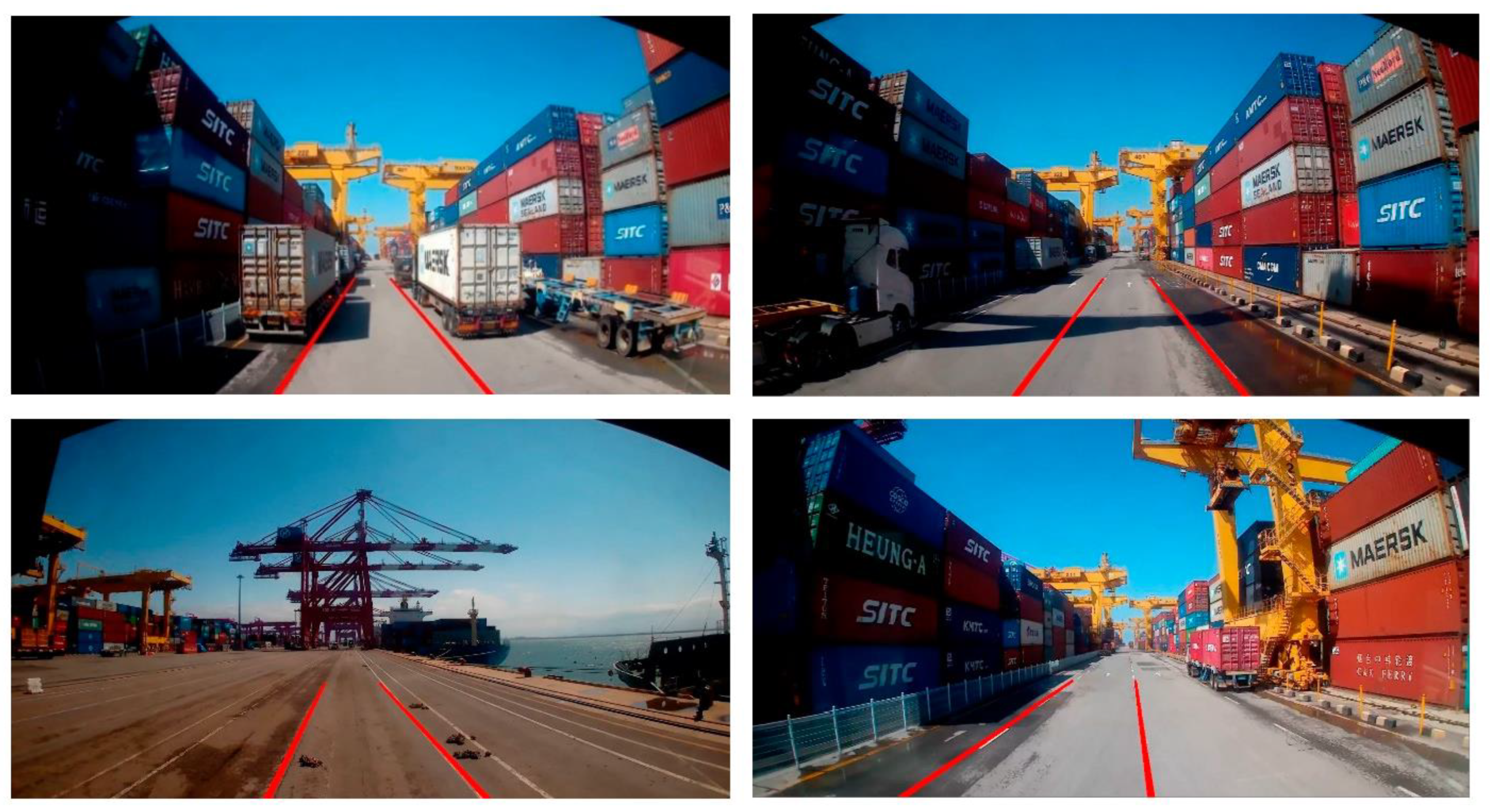

4.1. Transforming Images

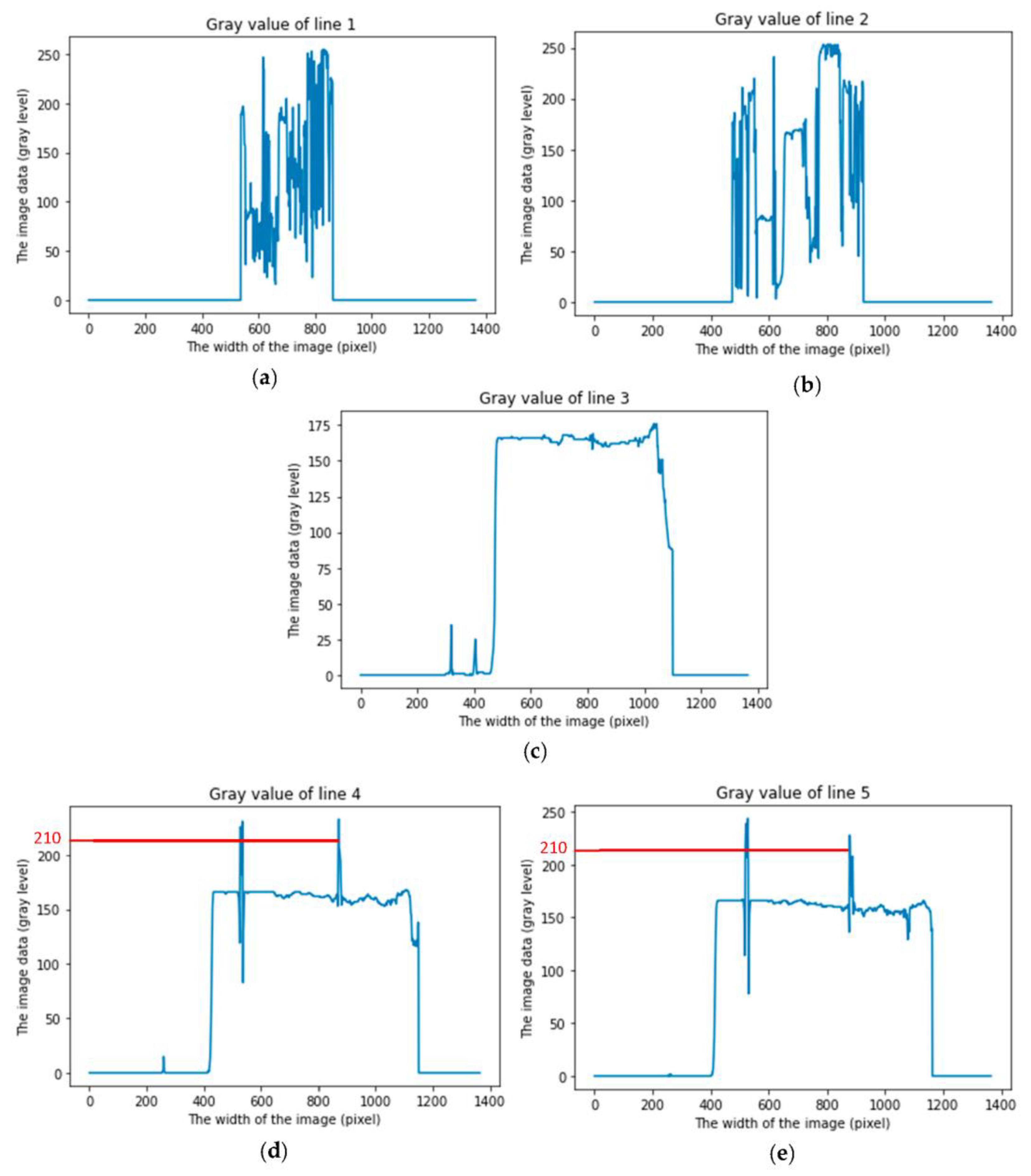

4.2. Lane Positioning

4.2.1. Extract the Region of Interest and Slide of a Grayscale Image

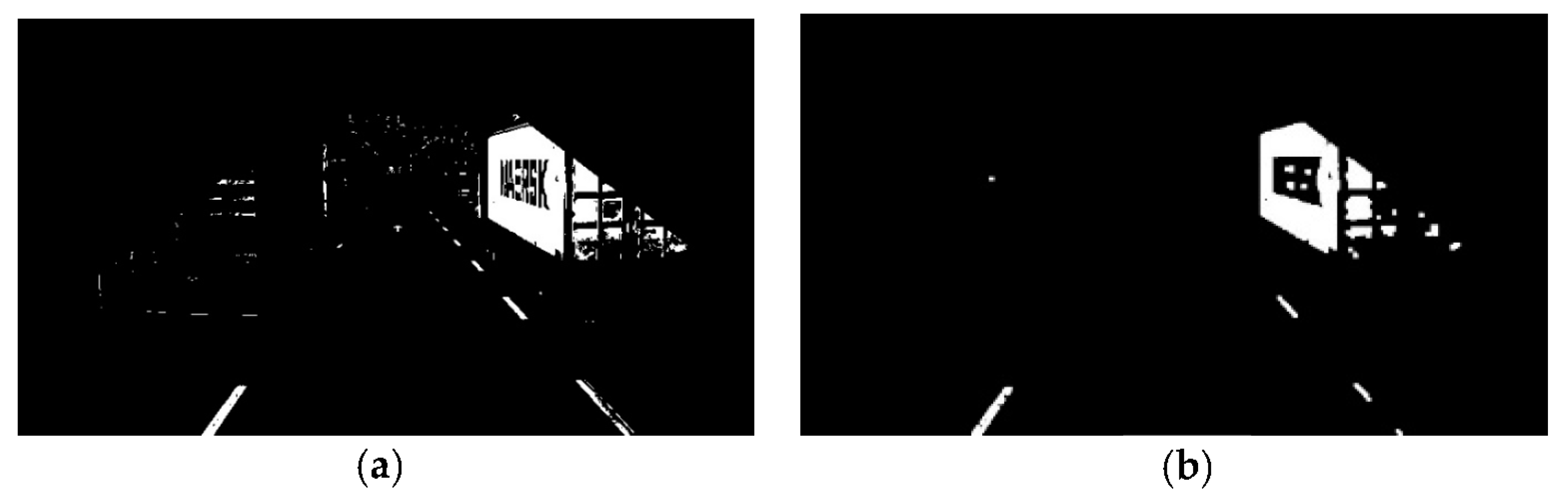

4.2.2. Noise Removal

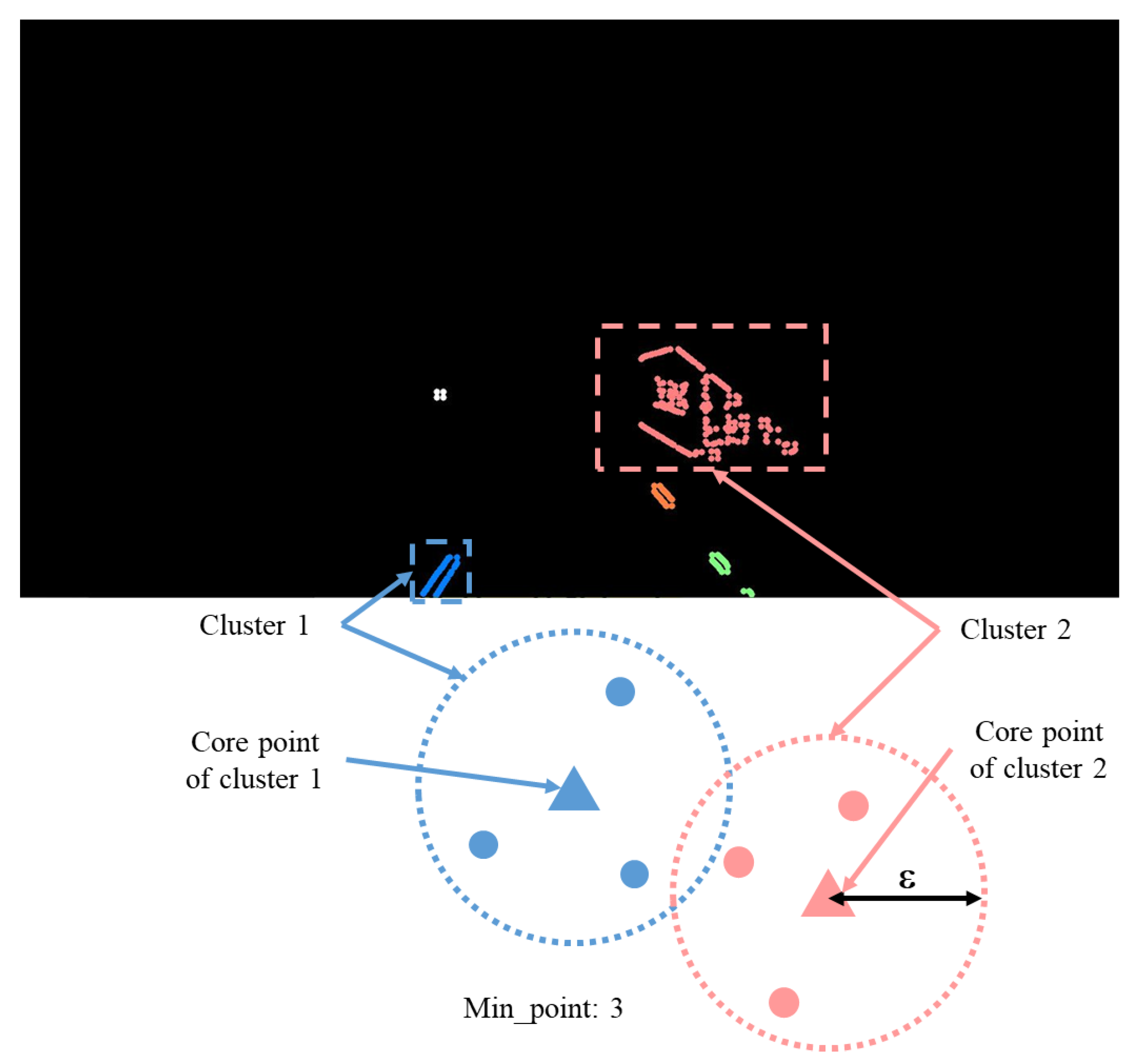

4.2.3. DBSCAN Clustering

4.2.4. Genetic Algorithm

Algorithm 1. Lane positioning based on Genetic Algorithm

Input: Cluster data from the DBSCAN clustering.

- For each cluster in range (Num_clusters):
  - Select four random points in the cluster.
  - Based on the four random points, find two gene sequences (a, b, c).
  - For each iteration in range (Max_iteration):
    - Select a pair of parent genes (Roulette Wheel Selection).
    - Cross over the two genes.
    - Mutate.
    - Evaluate the objective function using Formula (12).

Output: Optimal gene (a, b, c) for each cluster.
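The steps of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes each gene (a, b, c) describes a lane curve x = a·y² + b·y + c in image coordinates, that the two initial genes come from exact fits through overlapping triples of the four sampled points, and that a mean squared residual stands in for Formula (12), which is not reproduced in this text. The population size, arithmetic crossover, Gaussian mutation, and all function names are likewise hypothetical.

```python
import random


def parabola_through(p1, p2, p3):
    """Coefficients (a, b, c) of x = a*y**2 + b*y + c through three (x, y) points."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = (y1 - y2) * (y1 - y3) * (y2 - y3)
    if d == 0:
        return None  # two points share a y value, so no unique parabola exists
    a = (y3 * (x2 - x1) + y2 * (x1 - x3) + y1 * (x3 - x2)) / d
    b = (y3**2 * (x1 - x2) + y2**2 * (x3 - x1) + y1**2 * (x2 - x3)) / d
    c = (y2 * y3 * (y2 - y3) * x1 + y3 * y1 * (y3 - y1) * x2
         + y1 * y2 * (y1 - y2) * x3) / d
    return (a, b, c)


def cost(gene, points):
    """Assumed objective (stand-in for Formula (12)): mean squared x-residual."""
    a, b, c = gene
    return sum((a * y * y + b * y + c - x) ** 2 for x, y in points) / len(points)


def seed_genes(points, rng, max_tries=100):
    """Select four random points and derive two genes from overlapping triples."""
    for _ in range(max_tries):
        p = rng.sample(points, 4)
        genes = [parabola_through(*p[:3]), parabola_through(*p[1:])]
        if None not in genes:
            return genes
    raise ValueError("could not sample points with distinct y values")


def fit_lane(points, max_iteration=200, pop_size=20, mutation_scale=0.05, seed=0):
    """Run the genetic search for one cluster and return the best gene found."""
    rng = random.Random(seed)
    population = []
    while len(population) < pop_size:
        population.extend(seed_genes(points, rng))
    best = min(population, key=lambda g: cost(g, points))
    for _ in range(max_iteration):
        # Roulette wheel selection: lower cost gives a larger slice of the wheel.
        weights = [1.0 / (cost(g, points) + 1e-12) for g in population]
        mother, father = rng.choices(population, weights=weights, k=2)
        # Arithmetic crossover followed by a small Gaussian mutation.
        t = rng.random()
        child = tuple(t * m + (1 - t) * f for m, f in zip(mother, father))
        child = tuple(v + rng.gauss(0.0, mutation_scale * (abs(v) + 1e-3))
                      for v in child)
        # Evaluation: the child replaces the worst gene if it improves on it.
        worst = max(range(len(population)), key=lambda i: cost(population[i], points))
        if cost(child, points) < cost(population[worst], points):
            population[worst] = child
        best = min(best, child, key=lambda g: cost(g, points))
    return best


# Usage on a synthetic cluster of (x, y) points lying on a known curve.
points = [(0.01 * y * y + 0.5 * y + 100.0, float(y)) for y in range(50)]
a, b, c = fit_lane(points)
print(a, b, c)
```

In a full pipeline, `fit_lane` would be called once per DBSCAN cluster, matching the outer loop over `Num_clusters` in Algorithm 1.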

5. Test Results and Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Lane Type | No. Images | Failed | Proportion of Lane Detection (%) | Average Processing Time (s) |
|---|---|---|---|---|
| Sunshine | 220 | 8 | 96.4 | 0.063 |
| Breakage | 407 | 28 | 93.1 | 0.058 |
| Shade | 297 | 23 | 92.3 | 0.061 |
| Wet | 176 | 15 | 91.5 | 0.082 |
| Average | | | 93.3 | 0.066 |
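The figures in the results table can be checked with a few lines of arithmetic. The sketch below assumes that the detection proportion is (images − failed) / images and that the "Average" row is the unweighted mean of the four per-condition values. The table does not state either definition, so both are assumptions, and the variable names are illustrative.

```python
# (number of images, failed detections, average processing time in seconds)
results = {
    "Sunshine": (220, 8, 0.063),
    "Breakage": (407, 28, 0.058),
    "Shade": (297, 23, 0.061),
    "Wet": (176, 15, 0.082),
}


def detection_rate(n_images, n_failed):
    """Percentage of images in which the lane was detected (assumed definition)."""
    return 100.0 * (n_images - n_failed) / n_images


rates = {name: round(detection_rate(n, f), 1) for name, (n, f, _) in results.items()}
mean_rate = round(sum(rates.values()) / len(rates), 1)
mean_time = round(sum(t for _, _, t in results.values()) / len(results), 3)

# Pooled rate over all images, for comparison with the unweighted mean.
total_images = sum(n for n, _, _ in results.values())
total_failed = sum(f for _, f, _ in results.values())
pooled_rate = round(detection_rate(total_images, total_failed), 1)

print(rates, mean_rate, pooled_rate, mean_time)
```

Under these assumptions the per-condition values match the table, and the unweighted mean and the pooled rate over all 1100 images both round to 93.3%.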

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Vinh, N.Q.; Kim, H.-S.; Long, L.N.B.; You, S.-S. Robust Lane Detection Algorithm for Autonomous Trucks in Container Terminals. J. Mar. Sci. Eng. 2023, 11, 731. https://doi.org/10.3390/jmse11040731
