1. Introduction

Starting with the definition of a Maritime Autonomous Surface Ship (MASS) at the IMO MSC 98 meeting [1] as ‘a ship which, to a varying degree, can operate independent of human interaction’, the technologies of the 4th Industrial Revolution have also been applied to the marine field. Breakthrough technologies using artificial intelligence and big data are under development [2,3,4]. Because most marine accidents are caused by human error, autonomous ships can reduce such accidents by reducing the number of crew members on board [5,6,7]. Therefore, systems are being developed that can remotely perform and manage work that seafarers currently carry out manually [8,9,10,11].

One such task that requires automation is the management of a ship’s material resource inventory. Ships carry a wide variety of material resources. For example, a single ship plant contains more than 240 machines, for which more than 3000 types of inventory items are listed; including consumables, the number exceeds 4000 [12,13]. Although the consumption and supply of ship material resources are tracked on each shipowner’s resource management platform, some discrepancy from the actual inventory is inevitable. To reduce this discrepancy, crew members periodically take stock of the ship’s inventory [13,14]. Inventory management must be especially strict for spare parts that are not readily available and for statutory spare parts, because they are necessary for the safe operation of the ship. However, holding more materials than necessary increases maintenance costs and takes up limited storage space. Conversely, a shortage can make it impossible to repair machines needed for navigation, which may leave the ship unable to navigate [15].

In the logistics industry, various studies have addressed the importance of inventory management and the technologies for it, including inventory control for spare parts in aviation logistics [16], inventory control systems using the Markov model [17], RFID-based inventory control systems [18,19,20], and QR-code-based inventory control systems [21,22]. However, these methods are not well suited to actual ship spare parts. Because ship spare parts are supplied by many different manufacturers, it is impractical to attach RFID tags or QR codes to each part individually. In object recognition, research and development have been conducted using various models and libraries (YOLO, OpenCV, etc.) [23,24,25,26], and devices based on embedded systems are also being developed [27,28,29,30].

Recently, studies have been conducted to recognise ship parts using transfer-learning models [31]. However, these studies address the accuracy of the recognition models and do not cover algorithms for counting the number of spare parts.

In image processing, numerous algorithms have been developed for object recognition. However, achieving proper performance requires optimizing or customizing their parameters for the specific environment in which they are used. This study proposes an image processing algorithm optimized for counting spare parts in the ship environment. Furthermore, because a predictive model that guarantees high performance requires significant time and memory to run, it is crucial to select a learning model with low memory usage but high accuracy. By comparing four transfer-learning models (ResNet50, VGG-19, ShuffleNet, and SqueezeNet) that have shown excellent performance in object classification, a model was selected for the device that balances prediction accuracy and training time.

The hardware for device development was selected based on Table 1. The Raspberry Pi microcomputer was chosen over microcontrollers such as the Arduino because of its higher hardware performance, which makes it more suitable for testing the generalization performance of the selected prediction model and the proposed image processing algorithm.

This study achieved automated ship spare-part management by running a transfer-learning model that balances accuracy and training time, together with the proposed image processing algorithm, on the selected hardware. This demonstrates the possibility of automating spare-part management on actual operating ships, which was tested via a remote network.

2. Image Identification and Prediction Algorithm

By inputting an image of the spare parts, the class of the spare part is predicted, and the number of spare parts of that class is identified. The spare-part class is predicted using a DCNN model, covered in Section 3.

This section describes an algorithm that back-projects an image using its image histogram and then applies OpenCV’s contour function to the back-projected image to count the spare parts. The Open-Source Computer Vision Library (OpenCV) is one of the most widely used libraries for image processing and computer vision.

2.1. Proposed Image-Processing Algorithm

There are several algorithms for counting objects in an image, including template matching, blob detection, cascade classifiers, and contour detection. Among them, contour detection, a computer vision technique that detects the boundaries of objects in an image, is one of the most commonly used.

The cv2.findContours() function is used for contour detection, which takes a binary image as input and detects all contours in the image. The quality and accuracy of the contours depend on various factors, such as the quality of the input image, the threshold or edge detection algorithm used, and the contour approximation method used.
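cv2.findContours() returns the detected contours as a list, so with the cv2.RETR_EXTERNAL retrieval mode (outermost contours only) the object count is simply len(contours). Because OpenCV may not be installed everywhere, the following minimal sketch reproduces that counting idea in plain Python by labelling 8-connected foreground (non-zero) regions of a binary image, which is how OpenCV treats foreground pixels. It is an illustrative stand-in rather than OpenCV's border-following algorithm; for objects that do not lie inside a hole of another object, the two counts coincide. The function name count_objects is hypothetical.

```python
from collections import deque


def count_objects(binary):
    """Count 8-connected foreground regions in a binary image.

    binary: 2D list where non-zero pixels are foreground (object) and 0 is
    background. Hypothetical helper mirroring len(contours) from
    cv2.findContours(binary, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE).
    """
    rows = len(binary)
    cols = len(binary[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not binary[r][c] or seen[r][c]:
                continue
            count += 1  # an unvisited foreground pixel starts a new object
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill over the 8 neighbours
                y, x = queue.popleft()
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and binary[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


# Three separate blobs in a small binary mask (255 = object, 0 = background).
mask = [
    [0, 255, 255, 0, 0, 0],
    [0, 255, 255, 0, 0, 255],
    [0, 0, 0, 0, 0, 255],
    [255, 0, 0, 0, 0, 0],
]
print(count_objects(mask))  # 3
```

Flood fill was chosen because it needs no external library; in the actual pipeline the OpenCV call does this work.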

Figure 1 shows the result of applying the contour detection algorithm to the spindle, one of the ship’s spare parts. The contour detection algorithm determines boundaries between regions of similar colour or intensity based on a threshold value. Because it relies on binarizing a greyscale image, its result can be affected by the type of object and its surroundings.

Therefore, this section introduces a new object detection algorithm that combines the histogram technique, back-projection of the image, and the contour technique to remove the background, keeping only pixels that match the colour characteristics of the object, so that the object’s surroundings have less influence on its detected contour [32,33].

Figure 2 shows 2D histograms of RGB images of the Spindle and Atomizer spare parts, both of the same pixel dimensions. The histograms show the joint distribution of blue and green pixel values (A), green and red (B), and blue and red (C). Each histogram uses 32 × 32 bins over the intensity range 0–255, and the two spare parts have different distributions. The 2D histogram data of each spare part, excluding the background, is stored in a Python list variable and later used as input to the back-projection function. The spare-part class predicted by the learning model is used to retrieve the corresponding stored 2D histogram.
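A minimal sketch of such a 2D histogram with 32 × 32 bins over 0–255, in plain Python. In OpenCV the equivalent call would be along the lines of cv2.calcHist([image], [0, 1], mask, [32, 32], [0, 256, 0, 256]), where the mask excludes the background; here, as an assumption, background pixels are simply left out of the input list. Pixels are taken as (B, G, R) tuples because OpenCV stores colour images in BGR order. The name hist2d is hypothetical.

```python
def hist2d(pixels, ch_a, ch_b, bins=32):
    """2D histogram of two colour channels of 8-bit pixels.

    pixels: iterable of (B, G, R) tuples with values 0-255 (background removed).
    ch_a, ch_b: channel indices, e.g. 0 and 1 for the blue-green histogram.
    bins: bins per axis; 256 must be divisible by it (8 levels per bin for 32).
    """
    width = 256 // bins
    hist = [[0] * bins for _ in range(bins)]
    for px in pixels:
        hist[px[ch_a] // width][px[ch_b] // width] += 1
    return hist


object_pixels = [(0, 0, 0), (7, 7, 0), (8, 0, 0), (255, 255, 255)]
h = hist2d(object_pixels, 0, 1)  # blue vs green, as in panel (A)
print(h[0][0], h[1][0], h[31][31])  # 2 1 1
```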

2.1.1. Image-Histogram

An image histogram is a graphical representation of the pixel-value distribution of the image.

The histogram of a greyscale image is obtained by counting the number of pixels at each grey level in the image and plotting the counts as a bar graph. The horizontal axis of the histogram is divided into bins. Generally, a greyscale image is represented as a histogram with brightness bins ranging from 0 to 255. A colour image can be represented with a histogram of brightness bins 0–255 for each of the three primary colours of light (red, green, and blue) [32,33].
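The counting described above can be sketched in a few lines of plain Python; the same function covers a greyscale image and one channel of a colour image. OpenCV's cv2.calcHist([image], [0], None, [256], [0, 256]) computes the 256-bin case. The function name and the (B, G, R) tuple layout are assumptions for illustration.

```python
def histogram(image, channel=None, bins=256):
    """Pixel counts per brightness bin of an 8-bit image.

    image: 2D list of grey values (channel=None) or of (B, G, R) tuples
    (channel=0, 1 or 2). With bins=256 each grey level has its own bin.
    """
    width = 256 // bins
    hist = [0] * bins
    for row in image:
        for px in row:
            value = px if channel is None else px[channel]
            hist[value // width] += 1
    return hist


grey = [[0, 0, 255], [128, 128, 128]]
g_hist = histogram(grey)
print(g_hist[0], g_hist[128], g_hist[255])  # 2 3 1

colour = [[(10, 20, 30), (10, 0, 0)]]
print(histogram(colour, channel=0)[10])  # 2: both pixels have blue = 10
```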

2.1.2. Back-Projection Function

The back-projection function provided by OpenCV extracts the regions of an image that match an input histogram. If the back-projected image is then used as a mask together with the bitwise_not operator, only the part of the image corresponding to the object is extracted [33].

First, the stored histogram data described in Section 2.1 is input to the cv2.calcBackProject function to back-project the image. Next, the threshold function is applied to the back-projected image to remove ambiguous values that could be confused with the histogram data. The threshold function binarizes the pixels in the image, dividing the input image into two values based on a set value (the threshold value) [33,34,35].
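Back-projection replaces every pixel with the value of the model histogram at that pixel's bin, so pixels whose colours are common in the stored spare-part histogram become bright; thresholding then binarizes the result. The plain-Python sketch below assumes a 32 × 32-bin blue-green histogram like the one in Figure 2 and scales it to 0–255 by its peak value (in OpenCV this scaling is often done with cv2.normalize before cv2.calcBackProject). The binarization follows cv2.THRESH_BINARY semantics: values above the threshold become 255, the rest 0. The helper names and the threshold value of 100 are illustrative assumptions, not the paper's settings.

```python
def back_project(image, hist, ch_a, ch_b, bins=32):
    """Replace each (B, G, R) pixel by its bin value in hist, scaled to 0-255."""
    width = 256 // bins
    peak = max(max(row) for row in hist) or 1  # avoid dividing by zero
    return [[round(255 * hist[px[ch_a] // width][px[ch_b] // width] / peak)
             for px in row] for row in image]


def threshold(grey, thresh, maxval=255):
    """Binary threshold like cv2.THRESH_BINARY: > thresh -> maxval, else 0."""
    return [[maxval if v > thresh else 0 for v in row] for row in grey]


# Hypothetical model histogram: one strong object colour, one weak (ambiguous) one.
model = [[0] * 32 for _ in range(32)]
model[12][3] = 5   # blue 96-103, green 24-31: typical object colour
model[0][0] = 1    # rare dark colour, easily confused with the background

image = [[(100, 30, 0), (0, 0, 0), (255, 255, 255)]]
bp = back_project(image, model, 0, 1)
print(bp)                   # [[255, 51, 0]]
print(threshold(bp, 100))   # [[255, 0, 0]]
```

The weak match (51) is exactly the kind of ambiguous value that the threshold step discards.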

Subsequently, the required objects are extracted from the input image through the bitwise_not operation with the thresholded mask image. In the bitwise_not operation, the operation between a white image and the thresholded image changes the background, excluding the required objects, to white (pixel value 255). If the background were black (pixel value 0), the entire picture would be recognised as a single object during the contour process; the background is therefore made white so that the objects can be recognised.
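The net effect of this step, keeping object pixels and forcing the background to white, can be written per pixel with bitwise operations as (image AND mask) OR (NOT mask), where NOT on an 8-bit value is what cv2.bitwise_not computes (0 becomes 255 and vice versa). The plain-Python sketch below shows this on a greyscale image; it assumes a mask containing only 0 and 255, and it is one way to obtain the described result, not necessarily the exact sequence of OpenCV calls used in the study. The helper names are hypothetical.

```python
def bitwise_not(v):
    """8-bit NOT, as cv2.bitwise_not computes per pixel: 0 <-> 255."""
    return ~v & 0xFF


def object_on_white(grey, mask):
    """Keep pixels where mask == 255; paint pixels where mask == 0 white.

    Per pixel: (grey AND mask) OR (NOT mask). Assumes mask values are 0 or 255.
    """
    return [[(g & m) | bitwise_not(m) for g, m in zip(g_row, m_row)]
            for g_row, m_row in zip(grey, mask)]


grey = [[100, 30, 200]]
mask = [[255, 0, 255]]   # thresholded back-projection: 255 = object
print(object_on_white(grey, mask))  # [[100, 255, 200]]
```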

2.1.3. Contour Function

The contour function traces the boundaries of regions of adjacent pixels with the same value across the entire image, joining them into curves. This makes it useful for recognising the objects of interest in an image [36]. However, the contour technique has several limitations. For example, if the histogram of an object contains many white pixels and the background is white, the boundary between the object and the background becomes blurred. Therefore, in the back-projection process, an appropriate background should be selected based on the histogram data of the object to be contoured.

2.1.4. Combined Image Processing Algorithm

The proposed image processing algorithm is illustrated in Figure 3.

This process is implemented in Python version 3.7 with OpenCV library version 4.5.1.48. First, images of the ship’s spare parts are captured using a camera, and the trained model outputs the spare-part classes, which are stored in a result list. Next, the pre-stored histogram data for the predicted spare-part class is retrieved and used as input. A process called ‘back-projection’ then converts the spare-part image (A) into image (B) with a white background by removing all pixels that do not match the histogram data.

Next, the contour function is applied to image (B) to obtain the outermost contours, as shown in image (C). Finally, the number of objects is estimated by applying the len() function to the list of contours found in image (C).

A transfer learning model based on a CNN was used to predict the type of ship spare part. A Convolutional Neural Network (CNN) is a type of ANN that uses convolutional operations; because it can process multidimensional array data, it is specialized for multidimensional inputs such as colour images. A CNN extracts and classifies the features of image data through several layers that perform convolutional operations. Since the algorithm was first introduced by LeCun in 1995, CNNs have been in the limelight in image recognition [37]. In particular, significant advances have been made in image feature recognition and classification using deep convolutional neural networks (DCNN), which have more operations and layers, especially in computer vision [38,39,40].

3. Deep Convolutional Neural Networks (DCNN)

Figure 4 shows a simple structure of a DCNN.

Deep Convolutional Neural Networks (DCNN) are a type of deep learning model used to tackle problems in image recognition, classification, and processing. A DCNN has a structure similar to a basic Artificial Neural Network (ANN) but includes distinctive components, such as convolutional and pooling layers, that allow it to process image data more effectively. A DCNN consists of repeated convolution and pooling (subsampling) layers followed by fully connected layers.

Figure 5 illustrates the process of convolutional layers and max pooling layers.

The convolution layer extracts features from an image. In this layer, filters called ‘kernels’ are applied, and each kernel produces its own feature map.

Figure 5A illustrates an example of the convolution process [41]. A 3 × 3 input passed through a 2 × 2 kernel in the form of the identity (unit) matrix produces the features [[14, 0], [16, 11]]. A feature map is formed by sliding the kernel over the entire input matrix and repeating this operation.
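The operation described above can be sketched in plain Python as a ‘valid’ convolution with stride 1; like most CNN frameworks, it slides the kernel without flipping it (strictly a cross-correlation). Because the input values of Figure 5 are not reproduced in the text, the 3 × 3 input below is a hypothetical example; it shows how a 2 × 2 identity kernel adds each value to its lower-right neighbour and yields a 2 × 2 feature map.

```python
def conv2d_valid(x, k):
    """Slide kernel k over x (stride 1, no padding) and sum element-wise products."""
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1  # 3 - 2 + 1 = 2
    return [[sum(x[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


x = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]          # hypothetical input, not the values of Figure 5
identity = [[1, 0],
            [0, 1]]      # 2 x 2 unit matrix kernel
print(conv2d_valid(x, identity))  # [[6, 8], [12, 14]]
```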

The pooling layer down-samples the feature map produced by the convolution layer, which reduces the number of operations and extracts feature vectors so that the model can learn effectively; it also reduces the number of parameters in the subsequent layers. The two main types are max pooling, which takes the largest value in each window, and average pooling, which takes the average value. Max pooling was used for feature extraction [42].

Figure 5B illustrates an example of the max-pooling process. First, a 2 × 2 region of the feature map produced by the convolution layer is taken, and its largest value is selected. Then, by repeating this process over the entire matrix, the max-pooled feature map is formed.
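A plain-Python sketch of 2 × 2 max pooling follows; the stride of 2 (non-overlapping windows) is an assumption, as the text does not state it. Applied to the 2 × 2 feature map [[14, 0], [16, 11]] from the convolution example, a single 2 × 2 window keeps only the largest value, 16.

```python
def max_pool2d(x, size=2, stride=2):
    """Keep the largest value in each size x size window, moving by stride."""
    out_h = (len(x) - size) // stride + 1
    out_w = (len(x[0]) - size) // stride + 1
    return [[max(x[i * stride + a][j * stride + b]
                 for a in range(size) for b in range(size))
             for j in range(out_w)]
            for i in range(out_h)]


print(max_pool2d([[14, 0], [16, 11]]))  # [[16]]

fmap = [[1, 3, 2, 1],
        [4, 6, 5, 0],
        [7, 2, 9, 8],
        [1, 0, 3, 4]]
print(max_pool2d(fmap))  # [[6, 5], [7, 9]]
```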

Finally, the fully connected layer derives the prediction result by connecting the reduced-resolution feature maps, produced by the convolution and pooling layers, to a multilayer perceptron [43].

In addition, a dropout layer can be added to avoid overfitting. Dropout is a training method that randomly deactivates a fraction of the neurons at each training step, so that only part of the network is active. It was developed to address overfitting, which becomes more likely as the neural network grows more complex. For example, if the weights or bias of a particular neuron become large during training, the other neurons may learn more slowly or fail to learn properly. Adding a dropout layer reduces the influence of such neurons and helps avoid overfitting [44].
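The following plain-Python sketch shows the common ‘inverted’ form of dropout: during training each activation is zeroed with probability p and the survivors are scaled by 1/(1 − p), so the expected activation is unchanged and nothing needs rescaling at inference time. The drop probability and helper name are illustrative; the paper does not state a dropout rate.

```python
import random


def dropout(activations, p, training=True, rng=random):
    """Inverted dropout over a list of activations.

    Training: zero each unit with probability p, scale the rest by 1/(1-p).
    Inference (training=False): return the activations unchanged.
    """
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)              # seeded for a repeatable example
out = dropout([1.0] * 10, p=0.5, rng=rng)
print(out)                          # each entry is 0.0 or 2.0
print(dropout([1.0, 2.0], p=0.5, training=False))  # [1.0, 2.0]
```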

The feature maps extracted through these processes are flattened into a one-dimensional array and passed to the fully connected layer. Finally, the image is classified by the multilayer perceptron using its learned weights and biases.
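A plain-Python sketch of this final stage: the pooled feature maps are flattened into one vector, passed through a fully connected layer (a weighted sum plus a bias for each output), and converted into class probabilities. The softmax output is an assumption, being the usual choice for multi-class classification, and the weights below are hypothetical rather than learned values.

```python
import math


def flatten(feature_maps):
    """Flatten channel x row x column feature maps into one list."""
    return [v for fmap in feature_maps for row in fmap for v in row]


def dense(x, weights, biases):
    """Fully connected layer: output k = sum_i weights[k][i] * x[i] + biases[k]."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b
            for w_row, b in zip(weights, biases)]


def softmax(scores):
    """Turn scores into probabilities (max subtracted for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


features = flatten([[[16]], [[3]]])          # two pooled 1 x 1 maps -> [16, 3]
scores = dense(features, [[0.1, 0.0], [0.0, 0.1]], [0.0, 0.0])
probs = softmax(scores)
print(features, probs.index(max(probs)))     # [16, 3] 0
```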

4. Transfer-Learning

Transfer learning is a technique for efficiently training machine learning and deep learning models by applying the knowledge of an already-trained model to a new, related problem. The main objectives of transfer learning are to reduce training time, alleviate data scarcity, and improve model performance on new problems [45]. Transfer learning is useful when there is insufficient training data for a new problem, when similar patterns exist between the new and original problems, or when computational resources or training time for the new problem are limited [46].

This technique is primarily used in deep learning fields such as image classification, natural language processing, and speech recognition. The transfer learning process consists of the following steps [45]. First, in the pre-training phase, the model is trained on the original problem, using large datasets to learn the model’s weights effectively. Second, in the fine-tuning phase, the weights of the pre-trained model are used as initial values, and the model is trained further on data for the new problem. In this phase, the learning rate is lowered to fine-tune the weights, allowing the model to adapt to the new data. Third, in the application phase, the fine-tuned model is applied to the new problem to perform prediction and classification.

Prominent pre-trained models used for transfer learning include AlexNet, GoogLeNet, VGG, and ResNet. This paper considers the high-performing VGG19 and ResNet-50, as well as SqueezeNet and ShuffleNet, which emphasise computational efficiency whilst maintaining performance. Using the Deep Network Designer application provided by MATLAB, we train and compare these models for ship spare-part prediction. The following sections identify the most suitable model for the automated ship spare-part management (ASSM) application through this comparative analysis.

4.1. VGG19

VGG19 is a convolutional neural network (CNN) that performs very well in image classification tasks. It has 19 weight layers (16 convolutional and 3 fully connected) and extracts complex features through small (3 × 3) filters and a deep architecture. Models pre-trained on the ImageNet dataset are widely available, which makes it suitable for transfer learning. VGG19 requires large datasets and considerable computational resources to reach high accuracy, but its performance is outstanding [47].

4.2. ResNet-50

ResNet-50 is a convolutional neural network (CNN) widely used in image recognition tasks. It has 50 layers, including residual blocks with skip connections that allow deep neural networks to be trained efficiently. ResNet-50 achieved state-of-the-art performance on many benchmark datasets owing to its ability to capture complex features through its deep architecture. Models pre-trained on the ImageNet dataset are available, making it well suited for transfer learning. Despite its high computational requirements, ResNet-50 is known for its high accuracy in image classification tasks [48].

4.3. ShuffleNet

ShuffleNet is a lightweight CNN that achieves high accuracy despite its small model size and low computational cost. It reduces the number of parameters and computational bottlenecks by using pointwise group convolutions together with a channel shuffle operation that exchanges information between channel groups. The model is suitable for mobile devices and embedded systems and can be applied in autonomous systems such as cars and drones for object detection and tracking [49].
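The channel shuffle operation can be sketched as a reshape of the channel list into (groups, channels per group), a transpose, and a flatten, so that the next group convolution receives channels from every group. The plain-Python version below shuffles channel indices only; in a real network the same permutation is applied to the feature maps. The function name is illustrative.

```python
def channel_shuffle(channels, groups):
    """Reorder channels: reshape to (groups, n), transpose to (n, groups), flatten."""
    n, rem = divmod(len(channels), groups)
    if rem:
        raise ValueError("number of channels must be divisible by groups")
    return [channels[g * n + i] for i in range(n) for g in range(groups)]


# Six channels produced by a group convolution with 3 groups of 2:
# group 0 = [0, 1], group 1 = [2, 3], group 2 = [4, 5]
print(channel_shuffle([0, 1, 2, 3, 4, 5], groups=3))  # [0, 2, 4, 1, 3, 5]
```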

4.4. SqueezeNet

SqueezeNet is a CNN architecture designed to reduce model size and computational cost without sacrificing accuracy. It achieves this by combining 1 × 1 and 3 × 3 filters with a small number of channels. The network structure also includes a ‘squeeze’ layer of 1 × 1 filters that reduces the number of input channels before the 3 × 3 convolutions are applied, further reducing the number of parameters in the model. SqueezeNet can be trained on large datasets such as ImageNet and has been shown to perform similarly to larger models with far fewer parameters [50].
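The saving can be checked with a short weight count (biases ignored). A fire module applies a 1 × 1 squeeze convolution and then parallel 1 × 1 and 3 × 3 expand convolutions whose outputs are concatenated. With illustrative channel sizes of 96 input channels, 16 squeeze filters, and 64 filters in each expand branch (similar to an early SqueezeNet fire module), it needs 96 × 16 + 16 × 64 + 9 × 16 × 64 = 11,776 weights, whereas a plain 3 × 3 convolution from 96 to 128 channels needs 9 × 96 × 128 = 110,592, about 9.4 times as many.

```python
def conv_weights(in_ch, out_ch, k):
    """Weights of a k x k convolution from in_ch to out_ch channels (no biases)."""
    return in_ch * out_ch * k * k


def fire_weights(in_ch, squeeze, expand1, expand3):
    """Weights of a fire module: 1x1 squeeze, then 1x1 and 3x3 expand branches."""
    return (conv_weights(in_ch, squeeze, 1)       # squeeze: reduce channels
            + conv_weights(squeeze, expand1, 1)   # expand, 1x1 branch
            + conv_weights(squeeze, expand3, 3))  # expand, 3x3 branch


fire = fire_weights(96, 16, 64, 64)     # illustrative channel sizes
plain = conv_weights(96, 64 + 64, 3)    # same 128 output channels, 3x3 only
print(fire, plain, round(plain / fire, 1))  # 11776 110592 9.4
```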

5. Ship Spare-Part Recognition Deep Learning Model

5.1. Dataset and Split

Training data are required to train the transfer-learning predictive model. Spare parts of machines used on ships were selected as training data. Because an operating ship is a special environment far from the manufacturers’ repair service centres, shipowners must keep at least the legally designated number of spare parts on board to ensure the ship’s safety. This legally designated number is called the ‘Demanded quantity in law’.

Six spare parts of a ship’s internal combustion engines that are strictly managed on board were selected for the dataset, as shown in Table 2.

For generalization verification, the images of each object were collected at a consistent location, the “spare warehouse”, as shown in Figure 6, using an experimental device. A CMOS (Complementary Metal-Oxide-Semiconductor) OV7670 camera module collected RGB images at 640 × 480 pixels, which were then resized to 64 × 64 pixels and stored. The images were captured at different angles on a white background because they were also used to extract histogram information.

The image of each spare part is larger than the minimum size of the 3 × 3 convolution kernel (9 pixels), and a total of 660 images was prepared by collecting 110 images of each spare part.

Next, for model training and validation, the data were divided into training and validation sets in the ratio of 80:20, as shown in Figure 7.
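An 80:20 split can be sketched as below. Splitting each class separately is an assumption (the text gives only the overall ratio), but with 110 images per class it yields 88 training and 22 validation images per class, i.e. 528 and 132 overall. In MATLAB, splitEachLabel applied to an imageDatastore performs this kind of per-label split. Class and file names here are placeholders.

```python
import random


def stratified_split(items_by_class, train_ratio=0.8, seed=0):
    """Shuffle and split each class separately so both subsets keep equal class shares."""
    rng = random.Random(seed)
    train, val = [], []
    for label, items in items_by_class.items():
        items = list(items)
        rng.shuffle(items)
        n_train = round(len(items) * train_ratio)  # 110 * 0.8 -> 88
        train += [(item, label) for item in items[:n_train]]
        val += [(item, label) for item in items[n_train:]]
    return train, val


# Six placeholder classes with 110 placeholder image names each.
data = {f"class_{c}": [f"img_{c}_{i}.png" for i in range(110)] for c in range(6)}
train, val = stratified_split(data)
print(len(train), len(val))  # 528 132
```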

5.2. Selection of Ship Parts Recognition Model Based on Transfer Learning

This paper trains representative transfer-learning models, namely VGG19, ResNet-50, SqueezeNet, and ShuffleNet. Training was performed with the Deep Network Designer provided by MATLAB.

5.2.1. Hyperparameter Optimization

To increase the model’s prediction accuracy and avoid overfitting, it is essential to properly select hyperparameters such as the number of epochs, batch size, learning rate, and optimization algorithms.

The dataset is divided into two subsets, training and validation, containing 80% and 20% of the images, respectively. Therefore, in each stage, 80% of the images were used for network training and 20% for validation.

Because the number of training samples is 528 (660 × 0.8), the step_per_epoch value and batch size were set to 52 and 10, respectively, according to Equation (1), since ⌊528/10⌋ = 52. The number of epochs was set to 12, the optimal value found through several tests [51].
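The arithmetic behind these settings can be checked with a short helper. It assumes that a final incomplete mini-batch is discarded (the behaviour described for MATLAB's trainNetwork), so the number of iterations per epoch is the integer quotient of the training-set size and the batch size; over 12 epochs this gives 624 iterations in total. The function name is hypothetical.

```python
def training_schedule(n_images, train_ratio, batch_size, epochs):
    """Return (training samples, iterations per epoch, total iterations)."""
    n_train = round(n_images * train_ratio)   # 660 * 0.8 -> 528
    steps_per_epoch = n_train // batch_size   # incomplete last batch dropped
    return n_train, steps_per_epoch, steps_per_epoch * epochs


print(training_schedule(660, 0.8, 10, 12))  # (528, 52, 624)
```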

The learning rate is a hyperparameter that controls how much the network’s weights are updated at each training step. If the learning rate is too high, the weights may change too quickly, resulting in unstable training. If it is too low, the weights update too slowly, which slows convergence or leaves the model stuck in suboptimal solutions. The optimal learning rate depends on the problem and can be determined through experimentation and monitoring of model performance. In transfer learning, the initial parameters are obtained through pre-training, and the learning rate is then tuned while fine-tuning the weights of the pre-trained model. In this study, to select the optimal transfer model, the initial learning rate was set to 0.0001, and a step-decay schedule was applied that lowered the learning rate by a factor of 0.1 every two iterations.
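The schedule described above can be sketched as a step-decay function. Whether the drop counter runs over iterations or epochs matters: in MATLAB's trainingOptions, the 'piecewise' schedule with 'LearnRateDropFactor' and 'LearnRateDropPeriod' counts the period in epochs. The helper below takes a generic step counter and is illustrative only.

```python
def step_decay_lr(initial_lr, drop_factor, drop_period, step):
    """Learning rate after `step` steps: multiply by drop_factor every drop_period steps."""
    return initial_lr * drop_factor ** (step // drop_period)


for step in range(6):
    print(step, step_decay_lr(1e-4, 0.1, 2, step))
# steps 0-1: 1e-4, steps 2-3: about 1e-5, steps 4-5: about 1e-6
```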

Optimization algorithms in deep learning adjust the weights and biases of a model during training to improve prediction performance and speed up convergence. In the experiment, the accuracy after training was compared to select the optimal optimization algorithm. SGD (Stochastic Gradient Descent), RMSProp (Root Mean Square Propagation), and Adam (Adaptive Moment Estimation) were compared.

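SGD is one of the most basic optimization algorithms. Instead of computing the gradient over the entire dataset, SGD computes it on a randomly selected subset (mini-batch) of the data, reducing the computational cost. SGDm adds a momentum term that accumulates past gradients to smooth and accelerate the updates.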

RMSProp addresses a limitation of SGD: applying one uniform learning rate to all parameters causes them to be updated at imbalanced rates. RMSProp adapts the learning rate of each parameter to stabilise training, using an exponentially weighted moving average of past squared gradients that gives more weight to recent gradients and helps it converge more quickly.

Adam combines the advantages of momentum (as in SGDm) and RMSProp. It uses an exponentially weighted moving average of past gradients together with an exponentially weighted moving average of past squared gradients (as in RMSProp) to adapt the learning rate automatically and help find optimal weights and biases.
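The three update rules can be compared on a toy problem. The plain-Python sketch below minimises f(x) = x², whose gradient is 2x, starting from x = 5; the learning rates and decay factors are illustrative assumptions (β1 = 0.9 and β2 = 0.999 are the commonly quoted Adam defaults), not the settings used in this study.

```python
import math


def minimise(grad, x0, method, lr, steps=200,
             momentum=0.9, rho=0.9, beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimise a 1D function with SGDm, RMSProp or Adam."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        if method == "sgdm":
            m = momentum * m - lr * g              # velocity accumulates past gradients
            x += m
        elif method == "rmsprop":
            v = rho * v + (1 - rho) * g * g        # moving average of squared gradients
            x -= lr * g / (math.sqrt(v) + eps)     # per-parameter scaled step
        elif method == "adam":
            m = beta1 * m + (1 - beta1) * g        # moving average of gradients
            v = beta2 * v + (1 - beta2) * g * g    # moving average of squared gradients
            m_hat = m / (1 - beta1 ** t)           # bias corrections for the
            v_hat = v / (1 - beta2 ** t)           # zero-initialised averages
            x -= lr * m_hat / (math.sqrt(v_hat) + eps)
        else:
            raise ValueError(f"unknown method: {method}")
    return x


def grad_sq(x):
    """Gradient of f(x) = x**2."""
    return 2 * x


for method, lr in [("sgdm", 0.05), ("rmsprop", 0.1), ("adam", 0.1)]:
    print(method, minimise(grad_sq, 5.0, method, lr))  # each ends close to 0
```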

5.2.2. Model Evaluation

The confusion matrix, which shows how accurately the model predicted each object in the validation dataset, indicates excellent accuracy for every object, as shown in Figure 8.

Accuracy is the number of correct predictions divided by the total number of observations. It is a good metric when the numbers of positive and negative samples in the dataset are balanced and the costs of false positives and false negatives are similar. Accuracy is calculated as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Accuracy is the most straightforward metric for evaluating model performance, but if the class distribution is imbalanced, accuracy can be misleading. In such cases, other metrics, such as precision, recall, and F1-score, can be used to evaluate the model’s performance.

Each prediction of a classification model falls into one of four outcomes: TP and TN denote the numbers of true positive and true negative results, respectively, whereas FP and FN denote the numbers of false positive and false negative results. Accuracy is appropriate when the dataset is balanced. Precision is useful when false positives are costly, and recall is useful when false negatives are costly. The F1-score, the harmonic mean of precision and recall, is used when both FP and FN matter.
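For a multi-class problem such as the six spare-part classes, these quantities are computed one class at a time (one-vs-rest) from the confusion matrix, and overall accuracy is the sum of the diagonal divided by the total. The plain-Python sketch below uses a hypothetical 3-class confusion matrix, not the values in Figure 8; rows are taken as true classes and columns as predicted classes, which is an assumed layout.

```python
def classification_metrics(cm):
    """Overall accuracy and per-class (precision, recall, F1) from a confusion matrix.

    cm[i][j] = number of samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, actually another class
        fn = sum(cm[k]) - tp                        # actually k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)       # harmonic mean
        per_class.append((precision, recall, f1))
    return accuracy, per_class


cm = [[22, 0, 0],
      [1, 20, 1],
      [0, 0, 22]]
acc, per_class = classification_metrics(cm)
print(round(acc, 4))                            # 0.9697
print([round(v, 3) for v in per_class[1]])      # [1.0, 0.909, 0.952]
```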

Figure 8 shows the distribution of each instance. Every class in the present dataset has the same number of samples (110). In addition, since the proportions of TP and TN are high and balanced, accuracy is more useful here than the other evaluation metrics. Furthermore, as the model size increases, the computational resources required for training increase, resulting in slower training and possibly lower accuracy. Therefore, to select a model with optimized memory usage, training speed was also considered.

Accuracy loss is defined as the difference between 1 and the accuracy value (1 − accuracy). It quantifies the discrepancy between the model’s predicted values and the actual values and takes a value between 0 and 1.

The lower the accuracy loss, the more accurate the model’s predictions; the higher the accuracy loss, the less accurate they are.

5.3. Learning and Results

This section presents the training process of the ship spare-part recognition model using transfer learning.

Table 3 shows the results of training the model using three different optimization algorithms.

Figure 9 illustrates the training process of the four transfer learning models using the SGDm algorithm, which gave the shortest training time. The training accuracy ranged from 99.8% for ResNet-50 to 95.4% for SqueezeNet, and the training time differed by a factor of approximately 14 between the fastest model, SqueezeNet, and the slowest, VGG-19.

While ResNet-50 and VGG-19 seem suitable in terms of accuracy, SqueezeNet and ShuffleNet, which have faster training speeds, are more appropriate for embedded devices such as Raspberry Pi.

Comparing training processes (C) and (D) in Figure 9 shows that SqueezeNet overfitted more than ShuffleNet. When overfitting occurs, the model may perform well on the training data but have lower stability and accuracy on test or new data. Therefore, ShuffleNet, which showed balanced performance in training speed, accuracy, and stability, was adopted as the learning model for the embedded device.

The model trained in MATLAB is saved in MAT format. It must then be converted to a format compatible with TensorFlow (HDF5, .h5) and finally stored on the Raspberry Pi integrated into the automated ship spare-part management (ASSM) device.

6. Proposal of Automated Ship Spare-Part Management Device

The proposed device works as shown in Figure 10.

The laptop and the controller of the automated ship spare-part management (ASSM) device communicate remotely, and the device is placed in the warehouse where the spare parts are stocked. So that the device moves along a designated path, the path was marked with a black outline on a white background, and a black horizontal line was marked in the middle of the path to indicate each point where an object was placed.

The spare parts to be recognised are placed in front of the warehouse wall, so that no other objects obstruct the image, at the position where the device’s camera points after turning.

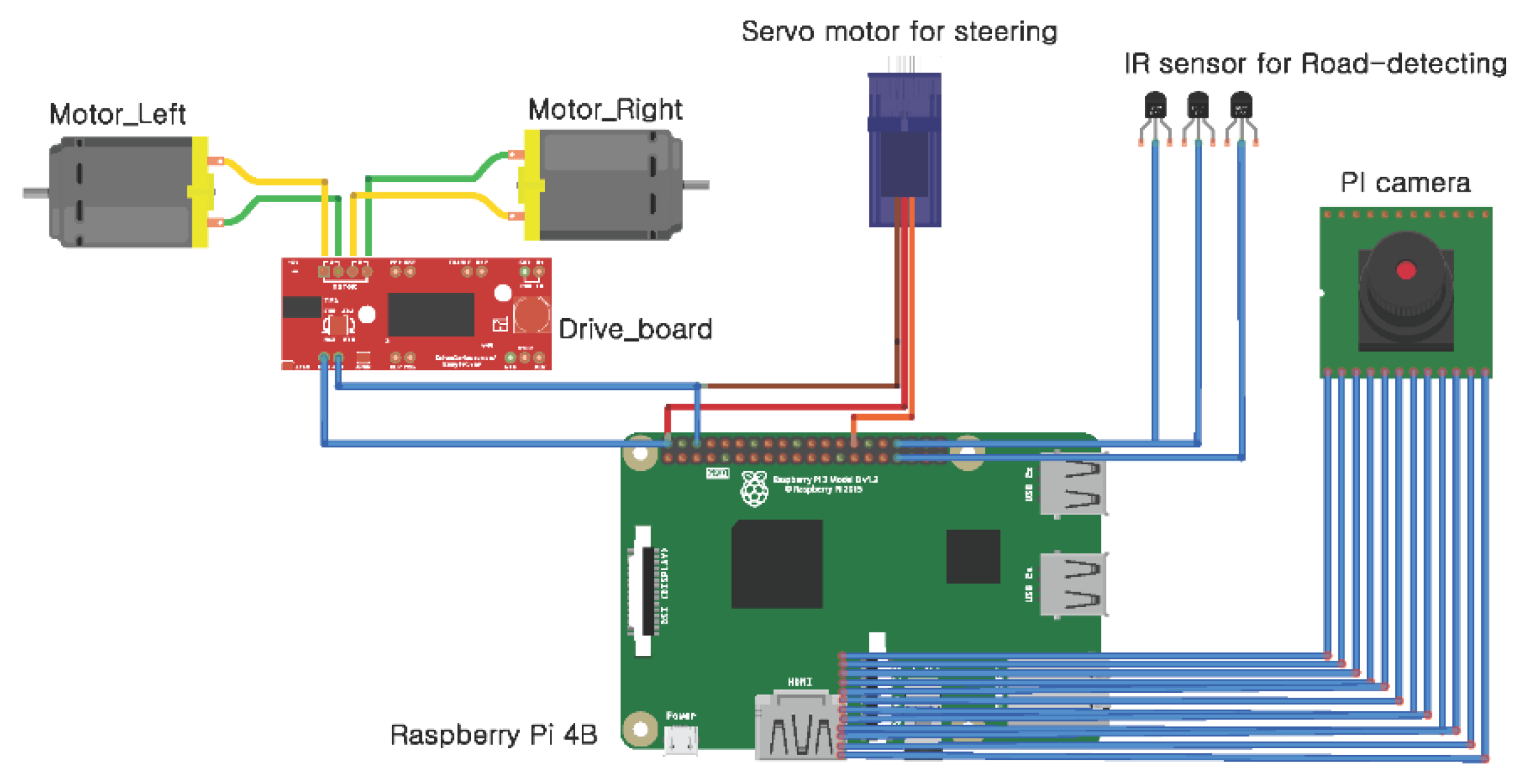

6.1. Experiment Equipment Description

The hardware circuit diagram of the automated ship spare-part management (ASSM) device is shown in Figure 11.

Adeept’s model ‘ADR019’ was used as the main frame of the experimental device. The hardware consists of four main parts: the central unit, the input unit, the output unit, and the drive unit. The input unit has three IR sensors (line-detecting sensors) to detect the black straight and horizontal lines, and a camera module to collect images of the spare parts.

The central unit receives the binary signals from the IR sensors and sends a signal to the steering servo motor in the output unit. It receives the stock image from the camera module and stores it on its storage disk. In addition, it decides whether to move forward or stop according to the internal variable ‘CAR STATE’ and sends a signal to the drive motor in the output unit.

Table 3 lists the hardware configurations of the ASSM device.

6.2. Algorithm Description

Figure 12 shows the flowchart for the ASSM device.

To start the experiment, a ‘GO’ signal is sent to the experimental device via the SSH protocol over a Wi-Fi module. When the device’s ‘CAR STATE’ changes to ‘GO’, it moves straight along the path marked with two black lines on the warehouse floor. When a horizontal line indicating a spare-part location is detected during movement, ‘CAR STATE’ becomes ‘Capture stop’ and the drive motor stops. The device turns the camera 90° towards the spare part, captures a photo, and saves it as a PNG file in the designated directory. If fewer than three spare-part photos have been stored, ‘CAR STATE’ returns to ‘GO’ and the process repeats. Once three spare-part photos have been collected, the drive motor stops and ‘CAR STATE’ changes to ‘STOP’.
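The ‘CAR STATE’ logic described above can be sketched as a small state machine. The plain-Python simulation below replaces the IR sensors with a hypothetical list of per-tick events ('line' when a horizontal marker line is detected) and records the actions the controller would take; the event format, action strings, and file names are illustrative assumptions, not the device's actual code.

```python
def run_assm(events, photos_needed=3):
    """Simulate the 'CAR STATE' transitions of the ASSM flowchart.

    events: per-tick sensor readings; 'line' marks a horizontal marker line.
    Returns (final state, number of photos, list of actions taken).
    """
    state, photos, actions = "GO", 0, []      # state after the SSH 'GO' signal
    for event in events:
        if state == "STOP":
            break                              # all photos collected
        if event != "line":
            continue                           # keep following the path
        state = "Capture stop"
        actions.append("stop drive motor")
        photos += 1
        actions.append(f"turn camera 90 deg, save spare_{photos}.png")
        if photos < photos_needed:
            state = "GO"
            actions.append("resume driving")
        else:
            state = "STOP"
            actions.append("classify photos and count spare parts")
    return state, photos, actions


state, photos, actions = run_assm(["path", "line", "path", "line", "path", "line", "line"])
print(state, photos)   # STOP 3
```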

When ‘CAR STATE’ is ‘STOP’, the class of each spare part is identified by inputting the saved photo into the pre-trained DCNN model. Based on the histogram data corresponding to the predicted class, the number of spare parts is then estimated through image processing using the contour function.

Finally, the predicted class and number of spare parts were displayed in a GUI built with Tkinter, a Python library.

6.3. Device Validation

The device verification process is illustrated in Figure 13.

Three black horizontal lines were marked in the middle of the path so that the device could identify the locations of the spare parts. In a representative experiment, spindles and springs were selected as the spare parts to be recognised.

By detecting the first and second objects, which were the same spare part (‘Spindle’), it was verified that the device could recognise a given object and count the number of identical objects. Then, by identifying the next object, a ‘Spring’, it was verified that the device could recognise up to three objects. The experiment was conducted using a laptop remotely connected to the Raspberry Pi via SSH. The device followed the path to the end while detecting the black lines with its three IR sensors. Whenever it encountered a horizontal black line in the middle of the path, it paused to collect an image. After collecting the image of the third spare part, the device stopped, and the spare-part stock result was displayed in the Python Tkinter GUI on the laptop, as shown in Figure 14.

The spare-part class was accurately predicted, and the number of spare parts of each class was accurately identified. The recognition accuracy for the type and number of spare parts over seven experiments is shown in Table 4.

7. Conclusions and Perspectives

In this study, a remote device is proposed for automating the management of ship spare parts. Six objects were selected as ship spare parts, and 110 images were used for each. The model for recognizing the spare-part class was selected by training and comparing four transfer-learning models with proven performance (ResNet-50, VGG-19, ShuffleNet, and SqueezeNet), and ShuffleNet was chosen as suitable for embedded devices based on accuracy and training speed.

The number of objects was identified using an algorithm that combines OpenCV’s back-projection and contour functions, and good results were obtained. In this study, the device was operated over a Wi-Fi network connected to the ship’s internal Ethernet in an actual ship environment; operation over an internet connection from an external network to the ship’s internal Ethernet network was also successful.

The proposed automated ship spare-part management device is expected to enable shipping companies to identify individual spare parts of a ship accurately, reduce unnecessary stock for economic benefit, and secure the ship’s safety by keeping the quantities demanded by law. In addition, by reducing the manpower needed on board, it can contribute significantly to the development of autonomous ships.

A relatively large spare part was used in this experiment. For tiny spare parts, such as O-rings, image recognition may be limited. Therefore, in future work, we plan to add weight-measurement-based spare-part management to the device so that such tiny spare parts can also be identified.