Underwater Image Restoration Method Based on Multi-Frame Image under Artificial Light Source

Abstract

1. Introduction

- (1) An improved underwater image restoration method is developed based on multiple neighboring frames captured under an artificial light source.

- (2) Traditional restoration methods require the normalized residual energy ratio to be known a priori, and their attenuation coefficient is difficult to adjust. To address these limitations, a transmittance estimation method based on multi-frame images is developed. Specifically, the attenuation coefficient is calculated from the light-intensity attenuation relationship between corresponding points in a sequence of frames.

- (3) Existing methods deviate significantly, or even fail, when estimating background light under artificial light sources in turbid water. To solve this problem, this paper presents a new background light estimation method. It segments the foreground and background regions of underwater images taken under artificial light and then locks onto the small area where the background light is located. This improves the accuracy of background light estimation in turbid water and, in turn, the accuracy of image restoration.

- (4) The developed method and several existing methods are used to restore underwater images. The comparative results demonstrate the effectiveness of the developed method under an artificial light source.
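The transmittance and background light targeted by contributions (2) and (3) are the two unknowns needed to invert an image formation model. The minimal sketch below assumes the widely used per-channel model $I_c = J_c t_c + B_c (1 - t_c)$. Here $I$ is the observed pixel, $J$ the scene radiance, $t$ the transmittance and $B$ the background light. The paper's own model under an artificial light source may include further terms. The function names and the lower bound `t_min` are hypothetical.

```python
def degrade_pixel(j, t, b):
    """Assumed forward model, per channel: I = J*t + B*(1 - t)."""
    return tuple(jc * tc + bc * (1.0 - tc) for jc, tc, bc in zip(j, t, b))


def restore_pixel(i, t, b, t_min=0.1):
    """Invert the assumed model: J = (I - B) / max(t, t_min) + B.

    i, t and b are (R, G, B) triples. t_min (a hypothetical default) keeps
    the division stable where the transmittance is close to zero.
    """
    return tuple(
        (ic - bc) / max(tc, t_min) + bc for ic, tc, bc in zip(i, t, b)
    )


if __name__ == "__main__":
    scene = (0.8, 0.5, 0.3)       # hypothetical true radiance J
    trans = (0.3, 0.6, 0.7)       # red is attenuated most strongly underwater
    backlight = (0.1, 0.4, 0.5)   # hypothetical background light B
    observed = degrade_pixel(scene, trans, backlight)
    print(restore_pixel(observed, trans, backlight))  # approximately recovers J
```

Clamping $t$ from below is a common safeguard in dark-channel-style restoration. Without it, noise in regions with near-zero transmittance would be amplified without bound.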

2. Transmittance Estimation under Artificial Light Source

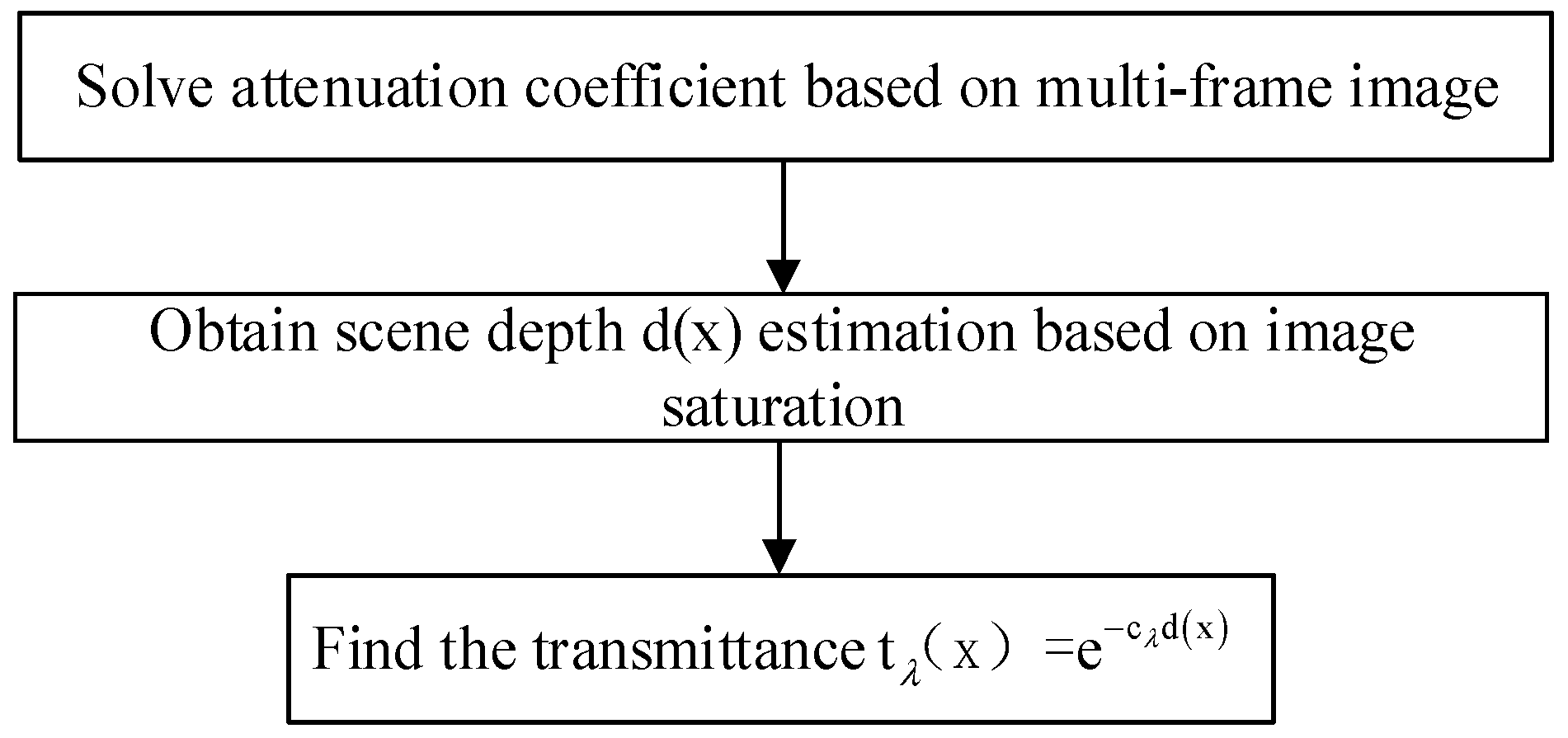

2.1. Ideas of the Improved Transmittance Estimation

2.2. Specific Implementation of the Improved Transmittance Estimation

- Step 1: Calculate attenuation coefficient

- Step 2: Scene depth estimation

- Step 3: Transmission estimation
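Steps 1 and 3 can be illustrated with a simplified model. This sketch assumes that a corresponding scene point is tracked across frames taken at known camera-to-object distances $d_k$, and that its intensity follows $I_k = J e^{-c d_k}$. The model ignores backscatter, the inverse-square falloff of the lamp and the return path of the light, any of which the paper's own attenuation relationship may include. Taking logarithms gives the straight line $\ln I_k = \ln J - c\, d_k$, so $c$ is minus the least-squares slope. Step 3 then applies the Beer–Lambert transmission $t = e^{-c d}$. The depth $d$ is supplied as an input because the Step 2 procedure is not reproduced here. All function names are hypothetical.

```python
import math


def fit_attenuation(distances, intensities):
    """Least-squares fit of ln(I) = ln(J) - c*d; returns (c, J).

    distances:   camera-to-point distance d_k of the same point in each frame
    intensities: observed intensity I_k of that point in each frame (> 0)
    """
    if len(distances) != len(intensities) or len(distances) < 2:
        raise ValueError("need at least two frames with matching lengths")
    logs = [math.log(v) for v in intensities]
    n = len(distances)
    mean_d = sum(distances) / n
    mean_l = sum(logs) / n
    sxx = sum((d - mean_d) ** 2 for d in distances)
    if sxx == 0:
        raise ValueError("distances must differ between frames")
    sxy = sum((d - mean_d) * (l - mean_l) for d, l in zip(distances, logs))
    slope = sxy / sxx
    intercept = mean_l - slope * mean_d
    return -slope, math.exp(intercept)


def transmission(c, depth):
    """Beer-Lambert transmission t = exp(-c * d)."""
    return math.exp(-c * depth)


if __name__ == "__main__":
    true_c, radiance = 0.5, 200.0            # synthetic ground truth
    d = [1.0, 1.5, 2.0, 2.5]                 # e.g. camera advancing between frames
    obs = [radiance * math.exp(-true_c * dk) for dk in d]
    c, j = fit_attenuation(d, obs)
    print(round(c, 4), round(j, 2))          # -> 0.5 200.0
    print(round(transmission(c, 2.0), 4))    # -> 0.3679
```

In practice, the fit would be run separately for each color channel. Pooling many tracked points would also make the estimate less sensitive to noise. Both are assumptions about how the idea would be applied, not details taken from the paper.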

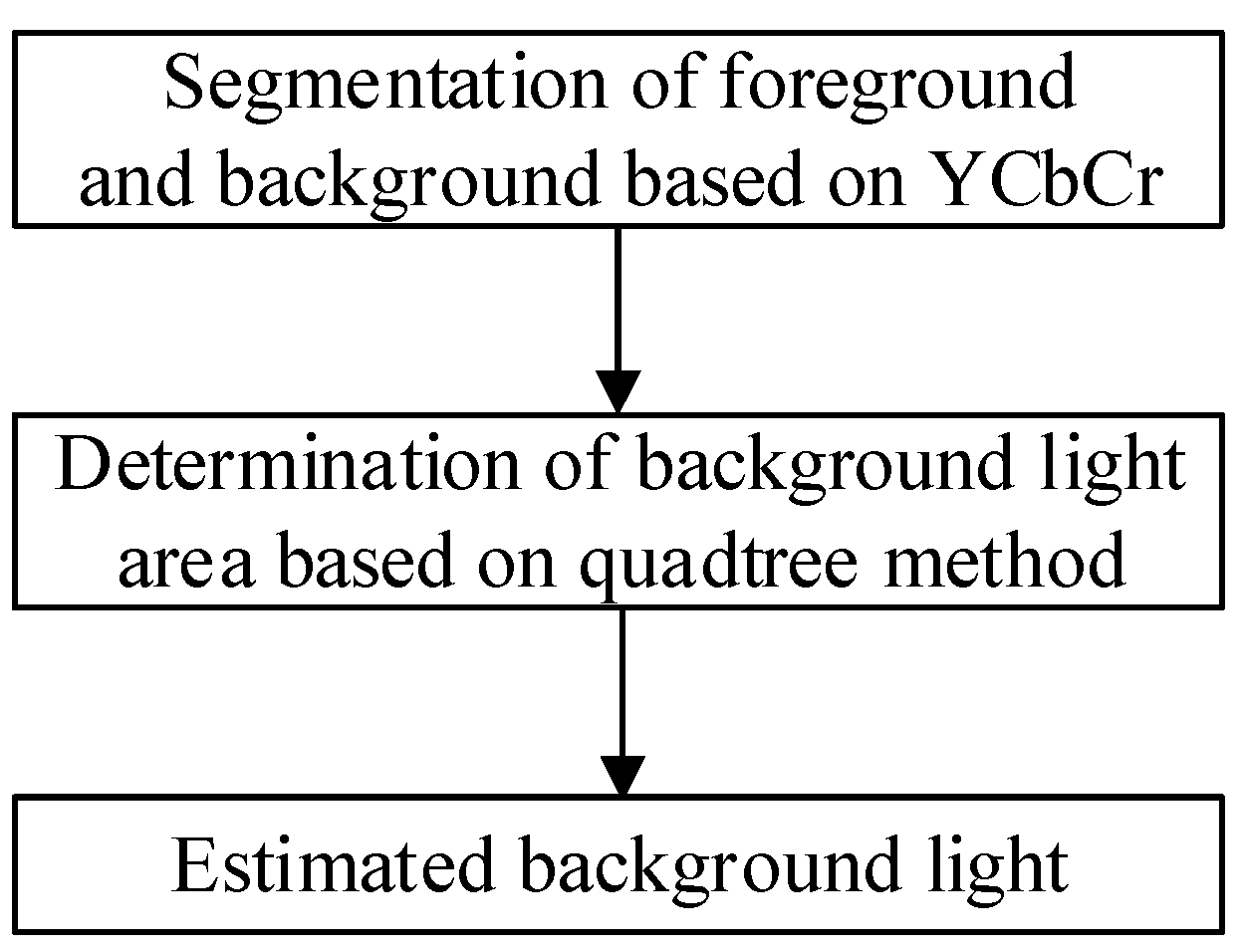

3. Background Light Estimation under Artificial Light Source

3.1. Ideas of Background Light Estimation

3.2. Specific Implementation of Background Light Estimation

- Step 1: Segmentation of foreground and background based on YCbCr

- Step 2: Determination of background light area based on quadtree method

- Step 3: Background light estimation
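The three steps above can be sketched as follows. This is not the paper's exact procedure, and it rests on several stated assumptions:

- RGB is converted to YCbCr with the full-range ITU-R BT.601 coefficients.
- Pixels whose luma $Y$ exceeds a threshold are treated as the foreground lit by the artificial source. This simple threshold is a stand-in for the paper's segmentation rule.
- The quadtree search repeatedly keeps the quadrant whose background pixels score highest on mean luma minus standard deviation, a criterion commonly used in quadtree background-light searches.
- The background light is taken as the mean color of the background pixels in the final block.

The threshold `y_thresh`, the minimum block size and all function names are hypothetical.

```python
import statistics


def rgb_to_ycbcr(r, g, b):
    """Full-range ITU-R BT.601 conversion of one 8-bit RGB pixel."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def background_mask(img, y_thresh=150):
    """Step 1 (simplified): True where luma Y <= y_thresh (background).

    Only Y is used by this stand-in rule; Cb and Cr are computed but ignored.
    """
    return [[rgb_to_ycbcr(*px)[0] <= y_thresh for px in row] for row in img]


def block_score(img, mask, top, left, h, w):
    """Mean minus standard deviation of luma over background pixels."""
    lum = [rgb_to_ycbcr(*img[i][j])[0]
           for i in range(top, top + h) for j in range(left, left + w)
           if mask[i][j]]
    if not lum:
        return float("-inf")  # block holds no background pixels
    return statistics.fmean(lum) - statistics.pstdev(lum)


def quadtree_search(img, mask, min_size=2):
    """Step 2: keep the best-scoring quadrant until the block is small."""
    top, left, h, w = 0, 0, len(img), len(img[0])
    while h > min_size and w > min_size:
        h2, w2 = h // 2, w // 2
        quads = [(top, left, h2, w2), (top, left + w2, h2, w - w2),
                 (top + h2, left, h - h2, w2),
                 (top + h2, left + w2, h - h2, w - w2)]
        top, left, h, w = max(quads, key=lambda q: block_score(img, mask, *q))
    return top, left, h, w


def estimate_background_light(img, y_thresh=150, min_size=2):
    """Step 3: mean RGB of the background pixels in the selected block."""
    mask = background_mask(img, y_thresh)
    top, left, h, w = quadtree_search(img, mask, min_size)
    pix = [img[i][j] for i in range(top, top + h)
           for j in range(left, left + w) if mask[i][j]]
    if not pix:
        raise ValueError("no background pixels in the selected block")
    return tuple(sum(p[k] for p in pix) / len(pix) for k in range(3))


if __name__ == "__main__":
    img = [[(10, 40, 50)] * 8 for _ in range(8)]    # dark surroundings
    for i in range(4):
        for j in range(4):
            img[i][j] = (240, 240, 230)             # lamp-lit foreground
            img[i + 4][j + 4] = (20, 90, 110)       # veiling background
    print(estimate_background_light(img))           # -> (20.0, 90.0, 110.0)
```

Masking the lit foreground first matters in this sketch. Without it, the mean-minus-deviation criterion would favor the bright, uniform area lit by the lamp, which is the failure mode described for existing methods.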

4. Experimental Verification

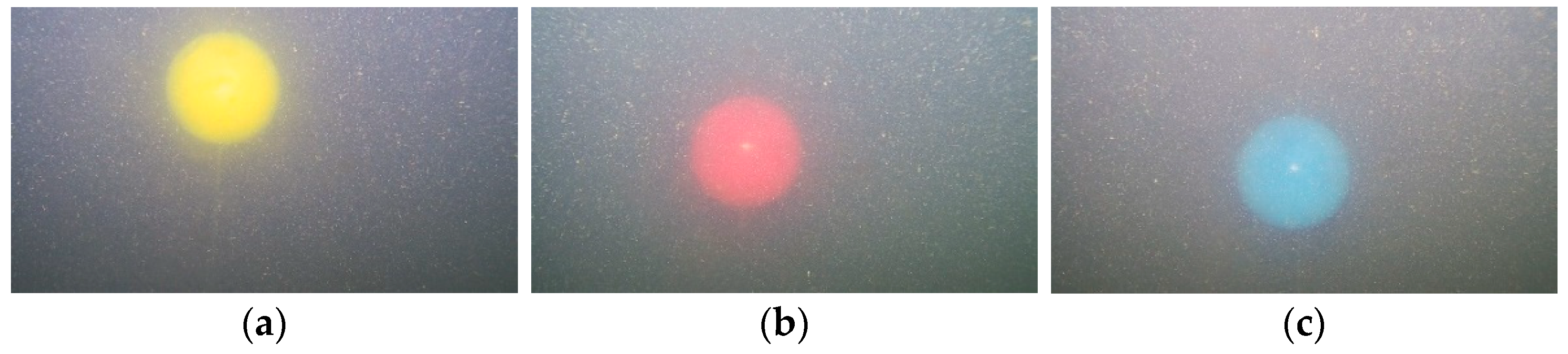

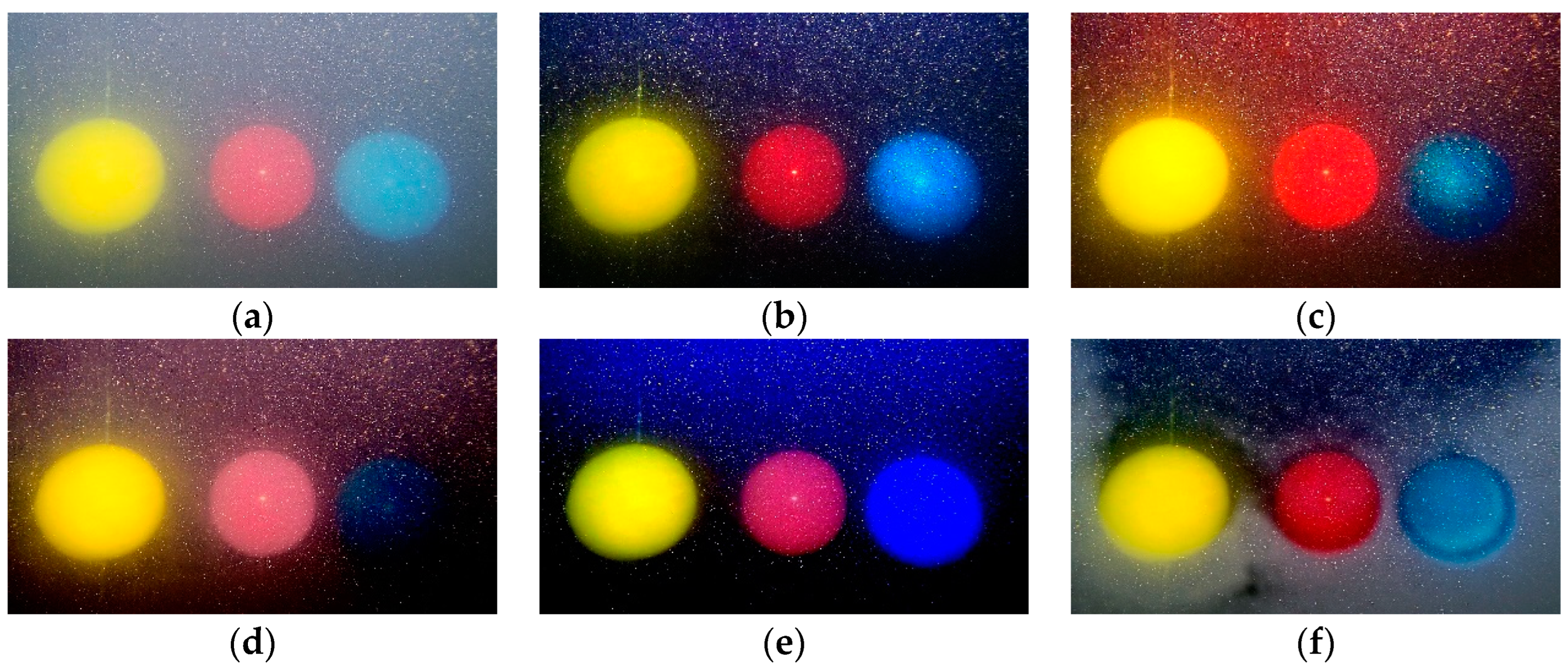

4.1. Generation of Original Images

4.2. Experimental Results

- (1)

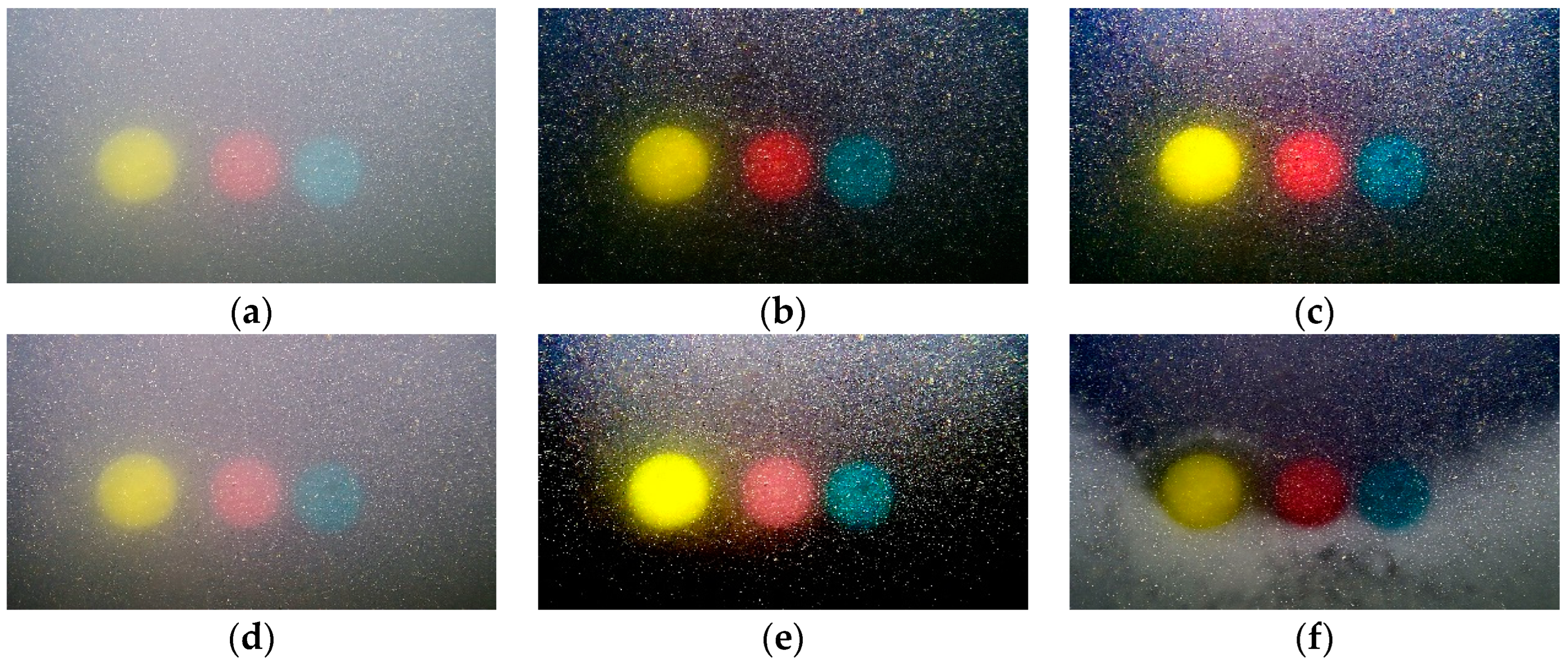

- Experimental verification of multi-balls

- (2)

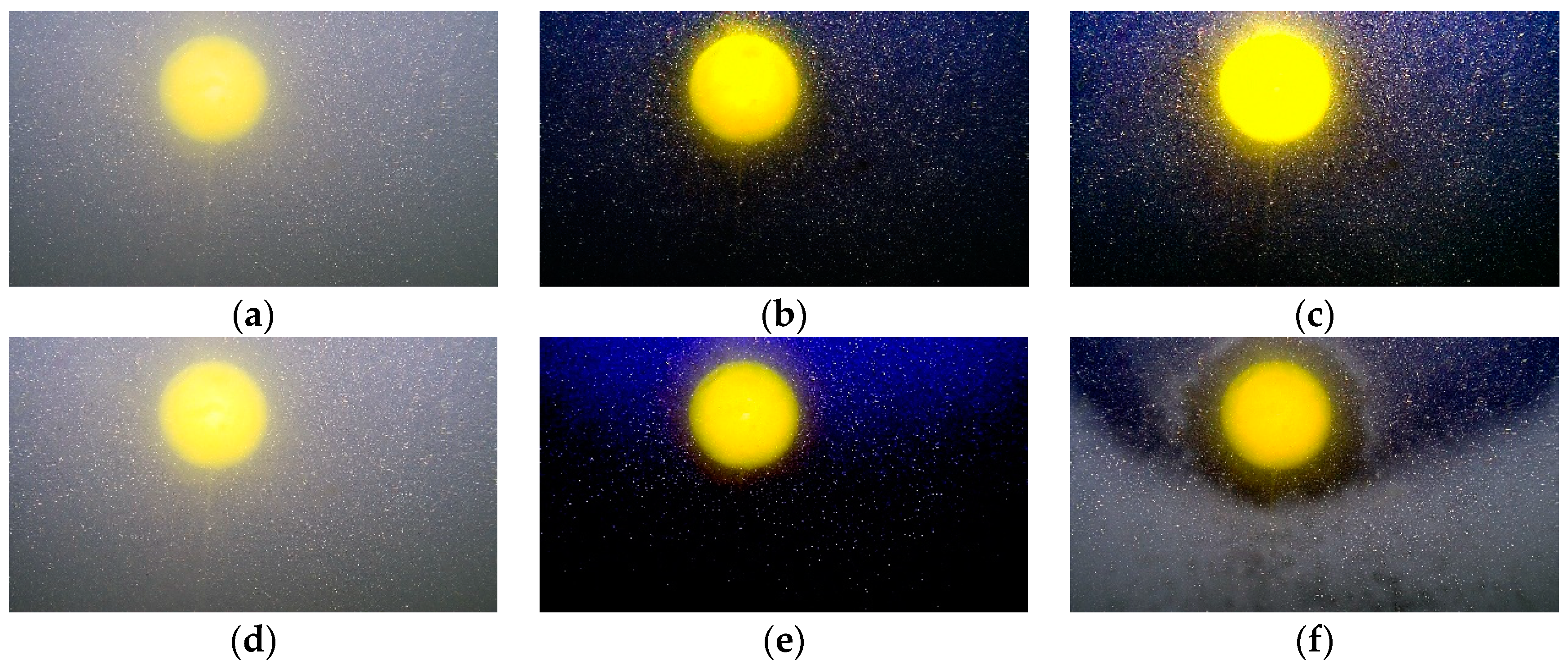

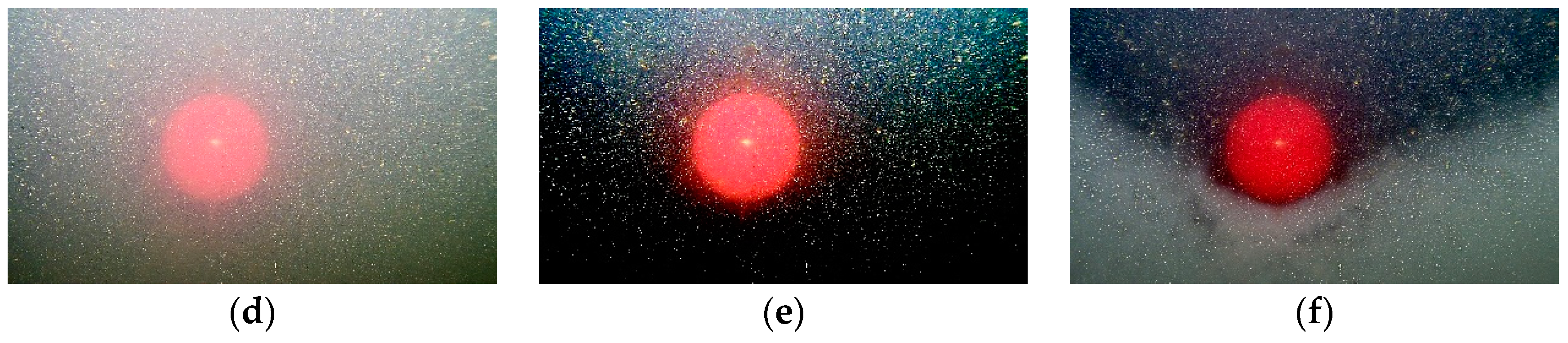

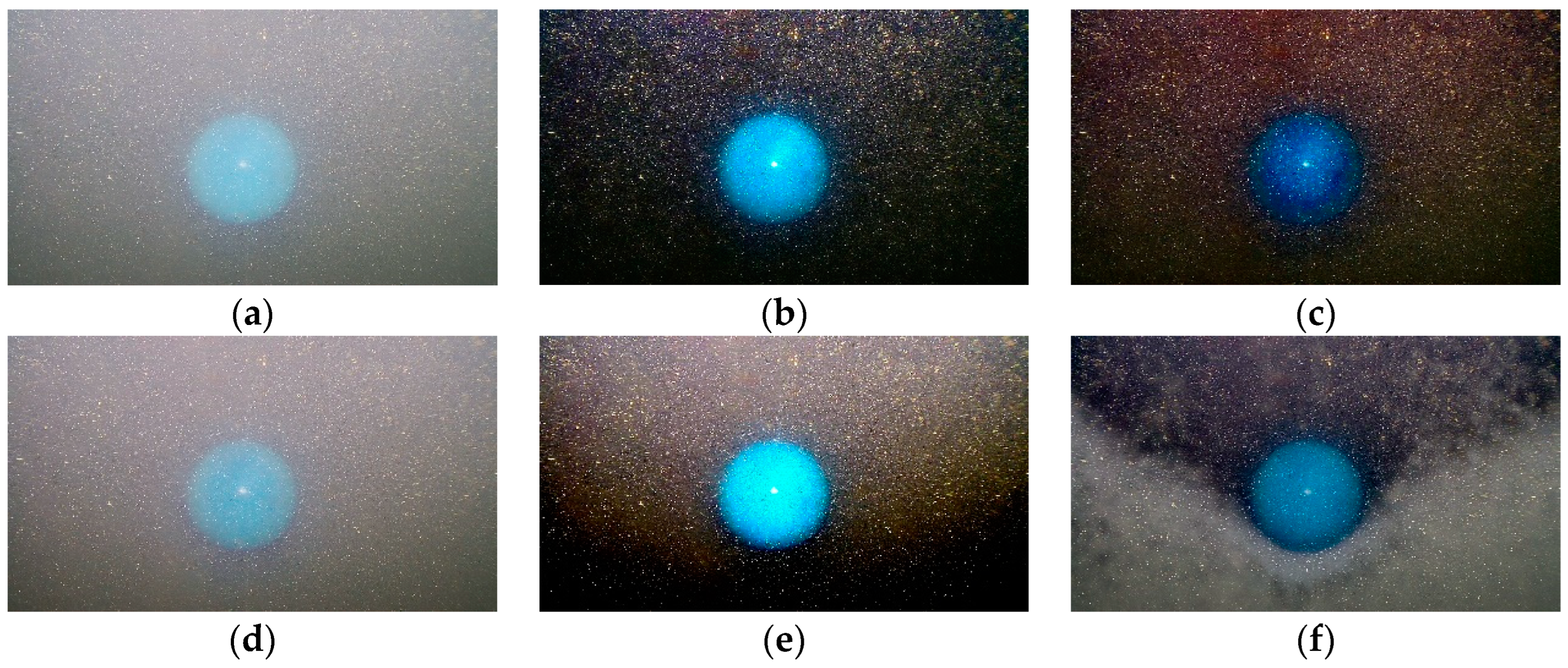

- Experimental verification of single ball with different color

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Liu, X.; Zhang, M.-J.; Chu, Z.-Z.; Rogers, E. A Sphere Region Tracking Control Scheme for Underwater Vehicles. IEEE Trans. Veh. Technol. 2023.

- Kim, L.; Sung, M.; Yu, S.-C. Development of simulator for autonomous underwater vehicles utilizing underwater acoustic and optical sensing emulators. In Proceedings of the 18th International Conference on Control, Automation and Systems, PyeongChang, Republic of Korea, 17–20 October 2018; pp. 416–419.

- Xu, S.B.; Zhang, M.H.; Song, W.; Mei, H.B.; He, Q.; Liotta, A. A systematic review and analysis of deep learning-based underwater object detection. Neurocomputing 2023, 527, 204–232.

- Zhang, T.C.; Li, Q.; Li, Y.S.; Liu, X. Underwater Optical Image Restoration Method for Natural/Artificial Light. J. Mar. Sci. Eng. 2023, 11, 470.

- Lodi Rizzini, D.; Kallasi, F.; Aleotti, J.; Oleari, F.; Caselli, S. Integration of a stereo vision system into an autonomous underwater vehicle for pipe manipulation tasks. Comput. Electr. Eng. 2017, 58, 560–571.

- Wang, Y.; Wang, S.; Wei, Q.; Tan, M.; Zhou, C.; Yu, J. Development of an underwater manipulator and its free-floating autonomous operation. IEEE/ASME Trans. Mechatron. 2016, 21, 815–824.

- Bobkov, V.A.; Kudryashov, A.P.; Mel’man, S.V.; Shcherbatyuk, A.F. Autonomous Underwater Navigation with 3D Environment Modeling Using Stereo Images. Gyroscopy Navig. 2018, 9, 67–75.

- Li, X.; Zhang, M. Underwater color image segmentation method via RGB channel fusion. Opt. Eng. 2017, 56, 023101.

- Manzanilla, A.; Reyes, S.; Garcia, M.; Mercado, D.; Lozano, R. Autonomous navigation for unmanned underwater vehicles: Real-time experiments using computer vision. IEEE Robot. Autom. Lett. 2019, 4, 1351–1356.

- Qin, J.; Li, M.; Li, D.; Zhong, J.; Yang, K. A Survey on Visual Navigation and Positioning for Autonomous UUVs. Remote Sens. 2022, 14, 3794.

- Raveendran, S.; Patil, M.D.; Birajdar, G.K. Underwater image enhancement: A comprehensive review, recent trends, challenges and applications. Artif. Intell. Rev. 2021, 54, 5413–5467.

- Liu, Y.; Rong, S.; Cao, X.; Li, T.; He, B. Underwater Single Image Dehazing Using the Color Space Dimensionality Reduction Prior. IEEE Access 2020, 8, 91116–91128.

- Chen, Z.; Wang, H.; Shen, J.; Li, X.; Xu, L. Region-specialized underwater image restoration in inhomogeneous optical environments. Int. J. Light Electron Opt. 2014, 125, 2090–2098.

- Berman, D.; Levy, D.; Avidan, S.; Treibitz, T. Underwater Single Image Color Restoration Using Haze-Lines and a New Quantitative Dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2822–2837.

- Zhao, X.; Tao, J.; Song, Q. Deriving inherent optical properties from background color and underwater image enhancement. Ocean. Eng. 2015, 94, 163–172.

- Han, P.; Liu, F.; Yang, K.; Ma, J.; Li, J.; Shao, X. Active underwater descattering and image recovery. Appl. Opt. 2017, 56, 6631–6638.

- Li, T.Y.; Rong, S.H.; Cao, X.T.; Liu, Y.B.; Chen, L.; He, B. Underwater image enhancement framework and its application on an autonomous underwater vehicle platform. Opt. Eng. 2020, 59, 083102.

- Peng, Y.T.; Zhao, X.; Cosman, P.C. Underwater Image Restoration Based on Image Blurriness and Light Absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.

- He, K.M.; Sun, J.; Tang, X.O. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic Red-Channel underwater image restoration. J. Vis. Commun. Image Represent. 2015, 26, 132–145.

- Wang, Y.; Liu, H.; Chau, L.-P. Single underwater image restoration using adaptive attenuation-curve prior. IEEE Trans. Circuits Syst. I Regul. Pap. 2018, 65, 992–1002.

- Nair, D.; Sankaran, P. Color image dehazing using surround filter and dark channel prior. J. Vis. Commun. Image Represent. 2018, 50, 9–15.

- Drews, P., Jr.; Nascimento, E.; Moraes, F.; Botelho, S.; Campos, M. Transmission Estimation in Underwater Single Images. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 1–8 December 2013; pp. 825–830.

- Carlevaris-Bianco, N.; Mohan, A.; Eustice, R.M. Initial results in underwater single image dehazing. In Proceedings of the IEEE Conference on OCEANS, Seattle, WA, USA, 20–23 September 2010; pp. 1–8.

- Deng, X.Y.; Wang, H.G.; Liu, X. Underwater Image Enhancement Based on Removing Light Source Color and Dehazing. IEEE Access 2019, 7, 114297–114309.

- Deng, X.; Wang, H.; Zhang, Y. Deep Sea Enhancement Method Based on the Active Illumination. Acta Photonica Sin. 2020, 49, 0310001.

- Hao, J.Y.; Yang, H.B.; Hou, X.; Zhang, Y. Two-Stage Underwater Image Restoration Algorithm Based on Physical Model and Causal Intervention. IEEE Signal Process. Lett. 2023, 30, 120–124.

- Guo, W.; Zhang, Y.B.; Zhou, Y.; Xu, G.F.; Li, G.W. Rapid Deep-Sea Image Restoration Algorithm Applied to Unmanned Underwater Vehicles. Acta Opt. Sin. 2022, 42, 0410002.

- Tan, Y.; Qin, J.; Xiang, X.; Ma, W.; Pan, W.; Xiong, N.N. A Robust Watermarking Scheme in YCbCr Color Space Based on Channel Coding. IEEE Access 2019, 7, 25026–25036.

- Wang, X.F.; Zhang, X.Y.; Gao, R. Research of Image Edge Detection Based on Mathematical Morphology. Int. J. Signal Process. Image Process. Pattern Recognit. 2013, 6, 227–236.

- Yang, M.; Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2016, 41, 541–551.

- Avcibas, I.; Sankur, B.; Sayood, K. Statistical evaluation of image quality measures. J. Electron. Imaging 2002, 11, 206.

|  | Original | Developed | UDCP | MIP | IBLA | WCID |
|---|---|---|---|---|---|---|
| UCIQE | 1.2319 | 3.1258 | 1.3846 | 1.8618 | 2.9908 | 1.2923 |
| UIQM | 0.8843 | 1.5567 | 1.1746 | 0.6831 | 0.3386 | 0.8956 |
| ENTROPY | 7.0131 | 7.4544 | 7.4282 | 6.9281 | 4.6765 | 7.1672 |

|  | Original | Developed | UDCP | MIP | IBLA | WCID |
|---|---|---|---|---|---|---|
| UCIQE | 0.6109 | 1.5192 | 1.7505 | 0.6552 | 0.9531 | 1.0329 |
| UIQM | 0.7237 | 1.6064 | 1.5813 | 0.7167 | 1.2899 | 1.1950 |
| ENTROPY | 6.8341 | 7.0880 | 7.1713 | 6.9140 | 6.3142 | 7.1595 |

|  | Original | Developed | UDCP | MIP | IBLA | WCID |
|---|---|---|---|---|---|---|
| UCIQE | 0.9956 | 2.4187 | 2.1073 | 1.0645 | 1.8124 | 1.1310 |
| UIQM | 1.0097 | 1.8819 | 1.0353 | 0.9464 | 1.6657 | 0.8202 |
| ENTROPY | 7.1095 | 6.5587 | 4.0751 | 7.0766 | 3.8817 | 7.1193 |

|  | Original | Developed | UDCP | MIP | IBLA | WCID |
|---|---|---|---|---|---|---|
| UCIQE | 0.5714 | 1.6970 | 1.8035 | 0.5849 | 1.1617 | 1.0105 |
| UIQM | 0.6996 | 1.6814 | 1.6756 | 0.7117 | 1.2721 | 0.8362 |
| ENTROPY | 5.6138 | 7.4733 | 6.0740 | 5.5967 | 6.0717 | 7.1237 |

|  | Original | Developed | UDCP | MIP | IBLA | WCID |
|---|---|---|---|---|---|---|
| UCIQE | 1.8544 | 3.1258 | 2.3846 | 1.8618 | 2.9908 | 2.5723 |
| UIQM | 1.0391 | 2.2560 | 1.9791 | 1.1080 | 2.8653 | 1.3046 |
| ENTROPY | 6.1132 | 7.1400 | 6.6855 | 6.1409 | 6.9177 | 6.9818 |
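The ENTROPY rows may denote the Shannon entropy of the 8-bit gray-level histogram, $H = -\sum_i p_i \log_2 p_i$. This reading is an assumption. That entropy is bounded by 8 bits, which matches the range of the reported values. The minimal sketch below computes it. The BT.601 gray conversion and the function names are illustrative choices, and UCIQE and UIQM are not reproduced here.

```python
import math
from collections import Counter


def gray_entropy(gray_pixels):
    """Shannon entropy (bits) of the histogram of 8-bit gray levels."""
    counts = Counter(gray_pixels)
    total = len(gray_pixels)
    # p * log2(1/p) is used so that a single-level image yields 0.0, not -0.0
    return sum((n / total) * math.log2(total / n) for n in counts.values())


def to_gray(rgb_pixels):
    """BT.601 luma rounded to integer gray levels 0..255 (assumed conversion)."""
    return [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in rgb_pixels]


if __name__ == "__main__":
    flat = [128] * 256          # a single gray level carries no information
    full = list(range(256))     # every level once gives the 8-bit maximum
    print(gray_entropy(flat), gray_entropy(full))  # -> 0.0 8.0
```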

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, T.; Gao, Y.; Wang, Z.; Zhang, M. Underwater Image Restoration Method Based on Multi-Frame Image under Artificial Light Source. J. Mar. Sci. Eng. 2023, 11, 1213. https://doi.org/10.3390/jmse11061213


Chicago/Turabian Style: Zhang, Tianchi, Yong Gao, Zhiyong Wang, and Mingjun Zhang. 2023. "Underwater Image Restoration Method Based on Multi-Frame Image under Artificial Light Source." Journal of Marine Science and Engineering 11, no. 6: 1213. https://doi.org/10.3390/jmse11061213