ABiLSTM Based Prediction Model for AUV Trajectory

Abstract

1. Introduction

- An ABiLSTM model for AUV trajectory prediction is proposed. This work treats trajectory prediction as a time-series prediction problem. To improve prediction accuracy, features are extracted from the data, and an attention mechanism increases the contribution of important features while reducing the effect of unimportant ones.

- Several factors that influence AUV trajectory prediction are considered, including historical AUV trajectory data and ocean currents. The historical track is a time series that contains not only the longitude, latitude, and altitude of each historical data point but also the AUV's course over ground and speed over ground, together with ocean current information at the position of the lost AUV, which increases data variety.

- A sliding-window training approach is used. Using the historical trajectory within the time window to forecast the position at the next time step preserves the continuity of the data, which benefits both model training and trajectory prediction.
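The sliding-window idea in the last contribution can be illustrated with a minimal sketch. The function name `make_windows`, the toy track values, and the three-feature point layout are assumptions made for illustration, not the authors' implementation; a window of 7 matches one of the reported experimental settings.

```python
from typing import List, Sequence, Tuple

# One trajectory sample; the field layout (lat, lon, alt) is an assumption.
Point = Tuple[float, ...]


def make_windows(
    track: Sequence[Point], window: int
) -> Tuple[List[List[Point]], List[Point]]:
    """Slice a time-ordered track into (input window, next point) pairs."""
    if window < 1:
        raise ValueError("window must be >= 1")
    inputs: List[List[Point]] = []
    targets: List[Point] = []
    # Each run of `window` consecutive points is paired with the point
    # that immediately follows it, so consecutive samples overlap.
    for start in range(len(track) - window):
        inputs.append(list(track[start:start + window]))
        targets.append(track[start + window])
    return inputs, targets


# Toy track with made-up values: (latitude, longitude, altitude).
track = [(30.0 + 0.01 * t, 120.0 + 0.02 * t, -50.0 - t) for t in range(10)]
X, y = make_windows(track, window=7)
print(len(X), "samples, each with", len(X[0]), "time steps")
```

Because adjacent windows share all but one point, every training sample stays contiguous in time, which is the continuity property the contribution refers to.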

2. Model Description

2.1. Recurrent Neural Network Model

2.2. LSTM Model

2.3. BiLSTM Model

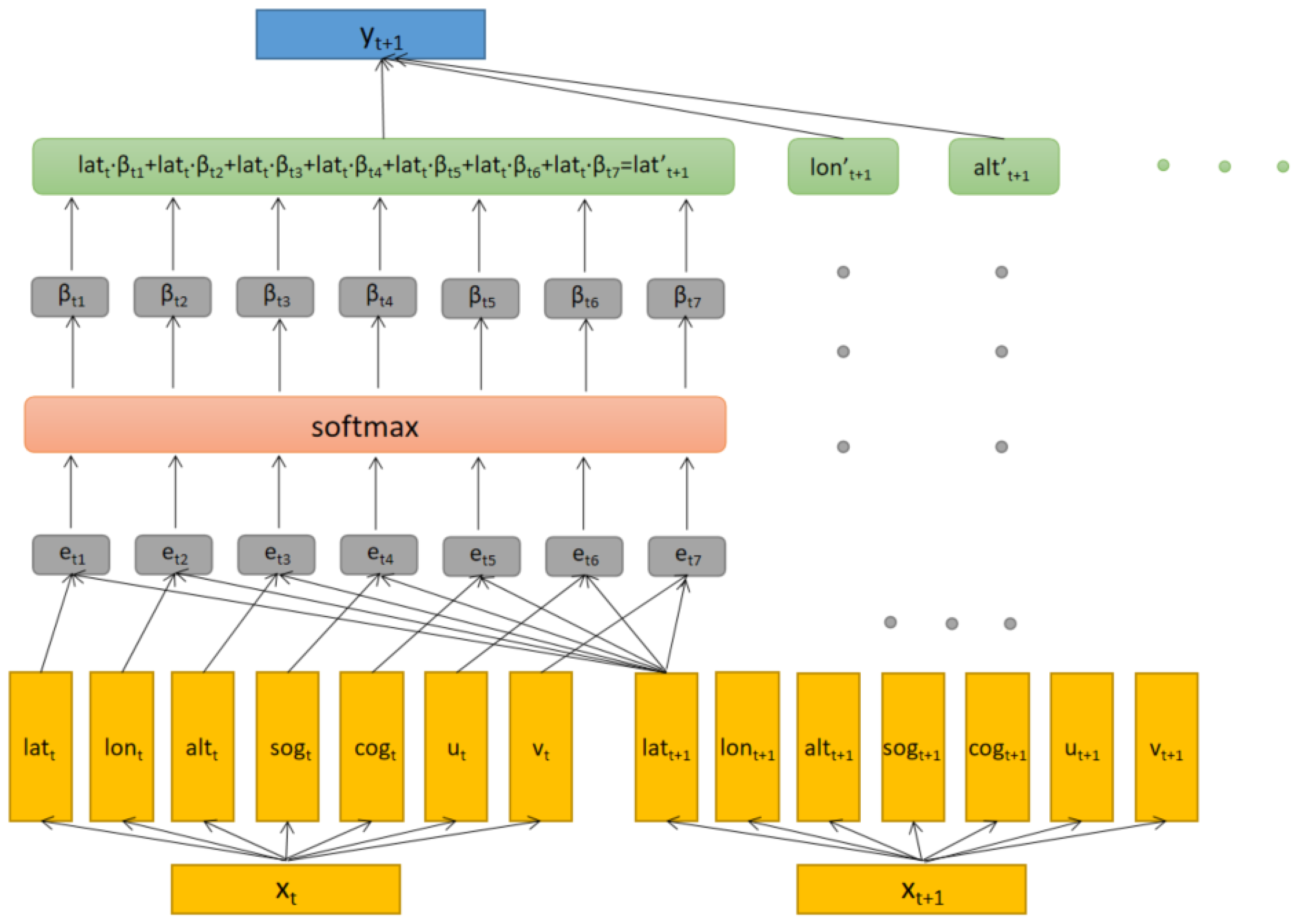

2.4. Attention-LSTM Model
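As a rough illustration of how an attention layer can reweight recurrent hidden states, the sketch below scores each time step, normalizes the scores with a softmax, and forms a weighted sum. The dot-product scoring against a `query` vector, the function names, and the toy numbers are assumptions; the scoring function used in the paper is not shown in this text.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(
    hidden_states: Sequence[Sequence[float]], query: Sequence[float]
) -> Tuple[List[float], List[float]]:
    """Return attention weights and the weighted sum of hidden states."""
    # Assumed scoring rule: dot product between each hidden state and a query.
    scores = [sum(h_i * q_i for h_i, q_i in zip(h, query)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    # Context vector: time steps with larger weights contribute more.
    context = [
        sum(w * h[d] for w, h in zip(weights, hidden_states)) for d in range(dim)
    ]
    return weights, context


# Toy hidden states for three time steps (made-up values).
hidden = [[0.1, 0.0], [0.9, 0.2], [0.2, 0.1]]
weights, context = attention_pool(hidden, query=[1.0, 0.5])
print(weights, context)
```

In a trained model the query (or an equivalent scoring network) would be learned, so the weights reflect which time steps matter most for the prediction.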

2.5. Trajectory Prediction Model Proposed Based on ABiLSTM

3. Experiments and Result Analysis

3.1. Experimental Environment and Experimental Settings

3.2. Evaluation Metrics

3.3. Model Implementation

3.4. Result Analysis

3.4.1. Analysis of AUV Trajectory Prediction Results

3.4.2. Results of Ship Trajectory Prediction Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Models | Sliding Window Size | Optimizer | Epoch | Learning Rate | Batch Size |
|---|---|---|---|---|---|
| LSTM | 7 | Adam | 200 | 0.001 | 64 |
| BiLSTM | 7 | Adam | 200 | 0.001 | 64 |
| Attention-LSTM | 7 | Adam | 200 | 0.001 | 64 |
| ABiLSTM | 7 | Adam | 200 | 0.001 | 64 |

| Models | Metrics | Latitude (°N) | Longitude (°E) | Altitude (m) |
|---|---|---|---|---|
| LSTM | MSE | 0.003778 | 0.002774 | 595.90824 |
| | RMSE | 0.061463 | 0.052671 | 24.411232 |
| | MAE | 0.053417 | 0.046633 | 17.732996 |
| BiLSTM | MSE | 0.000553 | 0.002584 | 176.978811 |
| | RMSE | 0.023516 | 0.050829 | 13.303338 |
| | MAE | 0.018266 | 0.036888 | 9.690001 |
| Attention-LSTM | MSE | 0.002663 | 0.001150 | 121.345507 |
| | RMSE | 0.051604 | 0.033917 | 11.015694 |
| | MAE | 0.042865 | 0.028207 | 8.181799 |
| ABiLSTM | MSE | 0.000233 | 0.000580 | 79.710532 |
| | RMSE | 0.015279 | 0.024088 | 8.928076 |
| | MAE | 0.012825 | 0.017669 | 5.989188 |
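For readers who want to reproduce the three error measures in the table, the following is a minimal sketch using their standard definitions. The function names and toy values are illustrative assumptions. The final line checks the relation RMSE = sqrt(MSE) against the LSTM altitude entries reported above.

```python
import math
from typing import Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error, in the same unit as the data."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy values (made up) to exercise the functions.
y_true = [1.0, 2.0, 3.0]
y_pred = [1.5, 2.0, 2.0]
print(mse(y_true, y_pred), rmse(y_true, y_pred), mae(y_true, y_pred))

# Consistency check on reported values: LSTM altitude MSE vs. RMSE.
print(math.sqrt(595.90824), "vs reported RMSE 24.411232")
```

Because squaring penalizes large deviations more heavily, MSE and RMSE are more sensitive to occasional large errors than MAE, which is why all three are usually reported together.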

| Models | ABiLSTM & Attention-LSTM | ABiLSTM & BiLSTM | ABiLSTM & LSTM |
|---|---|---|---|
| p | 0.008 | 0.008 | 0.005 |

| Models | Sliding Window Size | Optimizer | Epoch | Learning Rate | Batch Size |
|---|---|---|---|---|---|
| LSTM | 3 | Adam | 150 | 0.001 | 32 |
| BiLSTM | 3 | Adam | 150 | 0.001 | 32 |
| Attention-LSTM | 3 | Adam | 150 | 0.001 | 32 |
| ABiLSTM | 3 | Adam | 150 | 0.001 | 32 |

| Models | Metrics | Latitude (°N) | Longitude (°E) |
|---|---|---|---|
| LSTM | MSE | 0.00175 | 0.00069 |
| | RMSE | 0.04187 | 0.02629 |
| | MAE | 0.03569 | 0.02247 |
| BiLSTM | MSE | 0.00041 | 0.00009 |
| | RMSE | 0.02031 | 0.00943 |
| | MAE | 0.01436 | 0.00993 |
| Attention-LSTM | MSE | 0.000033 | 0.00007 |
| | RMSE | 0.00574 | 0.00842 |
| | MAE | 0.00395 | 0.00673 |
| ABiLSTM | MSE | 0.000019 | 0.00004 |
| | RMSE | 0.00436 | 0.00624 |
| | MAE | 0.00353 | 0.00461 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, J.; Zhang, J.; Billah, M.M.; Zhang, T. ABiLSTM Based Prediction Model for AUV Trajectory. J. Mar. Sci. Eng. 2023, 11, 1295. https://doi.org/10.3390/jmse11071295
