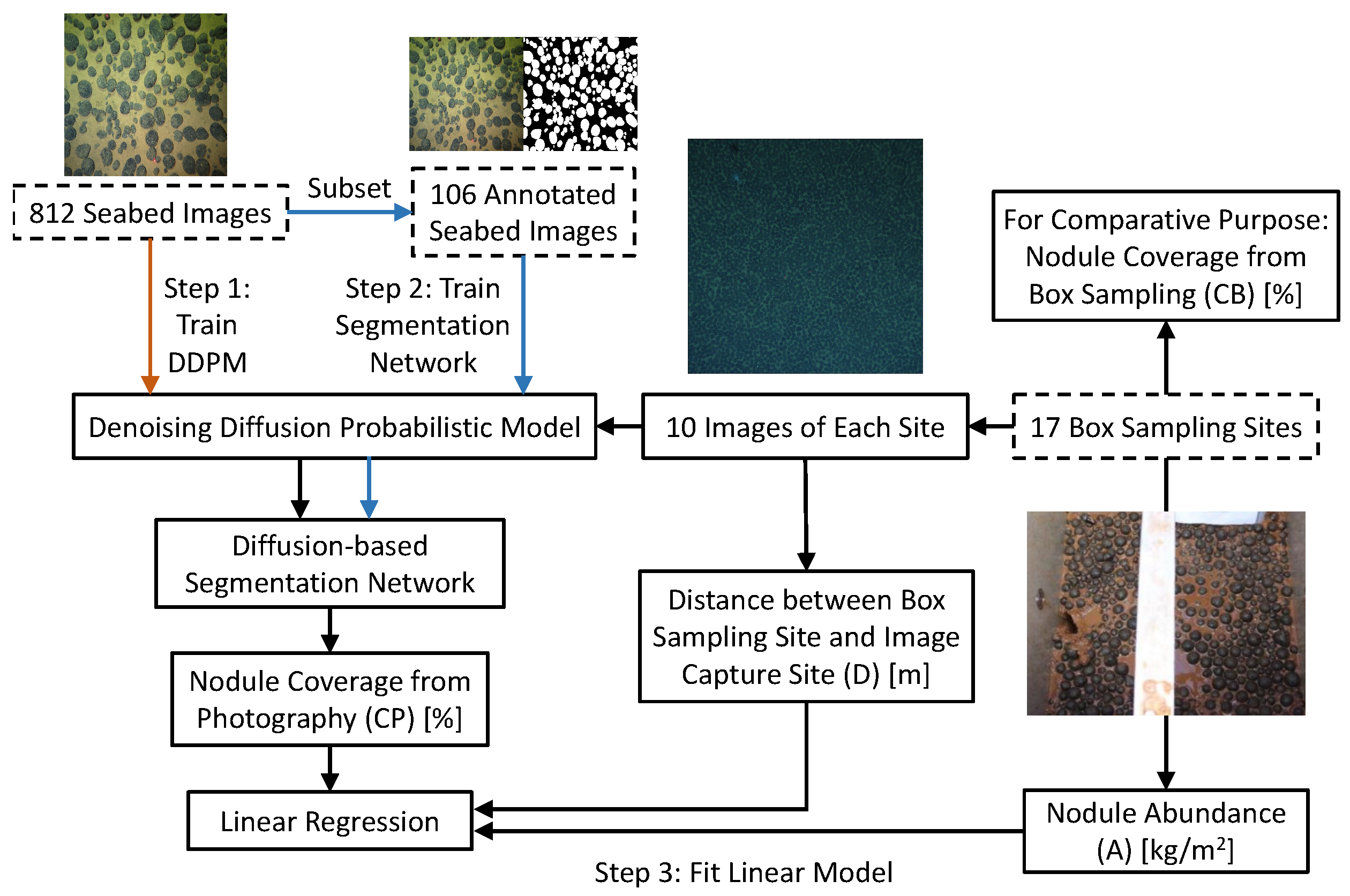

Polymetallic Nodule Resource Assessment of Seabed Photography Based on Denoising Diffusion Probabilistic Models

Abstract

1. Introduction

- This is the first use of a DDPM to extract features from seabed nodule images, and it demonstrates the model's effectiveness in capturing high-level semantic information for accurate segmentation;

- After training on a large set of unlabeled seabed nodule images, the DDPM can generate synthetic images that closely resemble real ones;

- We introduce an efficient semantic segmentation network that harnesses diffusion-based features and demonstrates strong generalization capabilities.
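As background for the contributions above, the following is a minimal sketch of the standard DDPM forward (noising) process described by Ho et al. in the reference list, in which an image is mixed with Gaussian noise according to a variance schedule. The linear schedule, its end points (1e-4 to 0.02), the 1000 steps, and all function names are assumptions taken from the common DDPM formulation, not settings confirmed for this paper.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng):
    """Draw x_t ~ q(x_t | x_0) for a flat list of pixel values in [-1, 1]."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * p + noise * rng.gauss(0.0, 1.0) for p in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

rng = random.Random(0)
pixels = [-1.0, -0.5, 0.0, 0.5, 1.0]  # toy stand-in for a seabed image
noisy = q_sample(pixels, 50, alpha_bars, rng)  # lightly noised version
```

At small `t` most of the image content survives, whereas at the last step `alpha_bars` is close to zero and the sample is almost pure noise.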

2. Related Works

2.1. Seabed Nodule Image Segmentation

2.2. Diffusion Models

3. Model

3.1. Denoising Diffusion Probabilistic Model

3.2. Diffusion-Based Segmentation Network

3.3. Linear Regression Model

4. Experiment

4.1. Dataset

4.2. Metrics

4.3. Implementation Details

4.4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hein, J.R.; Mizell, K.; Koschinsky, A.; Conrad, T.A. Deep-ocean mineral deposits as a source of critical metals for high- and green-technology applications: Comparison with land-based resources. Ore Geol. Rev. 2013, 51, 1–14.

- Ma, W.; Zhang, K.; Du, Y.; Liu, X.; Shen, Y. Status of Sustainability Development of Deep-Sea Mining Activities. J. Mar. Sci. Eng. 2022, 10, 1508.

- Kuhn, T.; Wegorzewski, A.; Rühlemann, C.; Vink, A. Composition, Formation, and Occurrence of Polymetallic Nodules. In Deep-Sea Mining: Resource Potential, Technical and Environmental Considerations; Sharma, R., Ed.; Springer International Publishing: Cham, Switzerland, 2017; pp. 23–63.

- Hein, J.R.; Koschinsky, A.; Bau, M.; Manheim, F.T.; Kang, J.K.; Roberts, L. Cobalt-rich ferromanganese crusts in the Pacific. In Handbook of Marine Mineral Deposits; Cronan, D.S., Ed.; CRC Press: London, UK, 1999; pp. 239–280.

- Hein, J.R.; Koschinsky, A.; Kuhn, T. Deep-ocean polymetallic nodules as a resource for critical materials. Nat. Rev. Earth Environ. 2020, 1, 158–169.

- Sharma, R.; Sankar, S.J.; Samanta, S.; Sardar, A.A.; Gracious, D. Image analysis of seafloor photographs for estimation of deep-sea minerals. Geo-Mar. Lett. 2010, 30, 617–626.

- Song, W.; Zheng, N.; Liu, X.; Qiu, L.; Zheng, R. An Improved U-Net Convolutional Networks for Seabed Mineral Image Segmentation. IEEE Access 2019, 7, 82744–82752.

- Dong, L.; Wang, H.; Song, W.; Xia, J.; Liu, T. Deep sea nodule mineral image segmentation algorithm based on Mask R-CNN. In Proceedings of the ACM Turing Award Celebration Conference—China (ACM TURC 2021), Hefei, China, 30 July–1 August 2021; pp. 278–284.

- Croitoru, F.A.; Hondru, V.; Ionescu, R.T.; Shah, M. Diffusion models in vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 1–20.

- Baranchuk, D.; Rubachev, I.; Voynov, A.; Khrulkov, V.; Babenko, A. Label-Efficient Semantic Segmentation with Diffusion Models. arXiv 2022, arXiv:2112.03126.

- Kuhn, T.; Rühlemann, C. Exploration of Polymetallic Nodules and Resource Assessment: A Case Study from the German Contract Area in the Clarion-Clipperton Zone of the Tropical Northeast Pacific. Minerals 2021, 11, 618.

- Mucha, J.; Wasilewska-Błaszczyk, M. Estimation Accuracy and Classification of Polymetallic Nodule Resources Based on Classical Sampling Supported by Seafloor Photography (Pacific Ocean, Clarion-Clipperton Fracture Zone, IOM Area). Minerals 2020, 10, 263.

- Wasilewska-Błaszczyk, M.; Mucha, J. Possibilities and Limitations of the Use of Seafloor Photographs for Estimating Polymetallic Nodule Resources—Case Study from IOM Area, Pacific Ocean. Minerals 2020, 10, 1123.

- Wasilewska-Błaszczyk, M.; Mucha, J. Application of General Linear Models (GLM) to Assess Nodule Abundance Based on a Photographic Survey (Case Study from IOM Area, Pacific Ocean). Minerals 2021, 11, 427.

- Glasby, G. Distribution of manganese nodules and lebensspuren in underwater photographs from the Carlsberg Ridge, Indian Ocean. N. Z. J. Geol. Geophys. 1973, 16, 1–17.

- Park, C.Y.; Park, S.H.; Kim, C.W.; Kang, J.K.; Kim, K.H. An Image Analysis Technique for Exploration of Manganese Nodules. Mar. Georesour. Geotechnol. 1999, 17, 371–386.

- Ma, X.; He, Z.; Huang, J.; Dong, Y.; You, C. An Automatic Analysis Method for Seabed Mineral Resources Based on Image Brightness Equalization. In Proceedings of the 2019 3rd International Conference on Digital Signal Processing, Jeju Island, Republic of Korea, 24 February 2019; pp. 32–37.

- Prabhakaran, K.; Ramesh, R.; Nidhi, V.; Rajesh, S.; Gopakumar, K.; Ramadass, G.A.; Atman, M.A. Underwater Image Processing to Detect Polymetallic Nodule Using Template Matching. In Proceedings of the Global Oceans 2020: Singapore–U.S. Gulf Coast, Biloxi, MS, USA, 5–30 October 2020; pp. 1–6.

- Mao, H.; Liu, Y.; Yan, H.; Qian, C.; Xue, J. Image Processing of Manganese Nodules Based on Background Gray Value Calculation. Comput. Mater. Contin. 2020, 65, 511–527.

- Vijayalakshmi, D.; Nath, M.K. A Novel Contrast Enhancement Technique using Gradient-Based Joint Histogram Equalization. Circuits Syst. Signal Process. 2021, 40, 3929–3967.

- Vijayalakshmi, D.; Nath, M.K. A strategic approach towards contrast enhancement by two-dimensional histogram equalization based on total variational decomposition. Multimed. Tools Appl. 2023, 82, 19247–19274.

- Schoening, T.; Jones, D.O.B.; Greinert, J. Compact-Morphology-based poly-metallic Nodule Delineation. Sci. Rep. 2017, 7, 13338.

- Ye, Z. Objective assessment of nonlinear segmentation approaches to gray level underwater images. Int. J. Graph. Vis. Image Process. (GVIP) 2009, 9, 39–46.

- Wang, Y.; Fu, L.; Liu, K.; Nian, R.; Yan, T.; Lendasse, A. Stable underwater image segmentation in high quality via MRF model. In Proceedings of the OCEANS 2015-MTS/IEEE Washington, Washington, DC, USA, 19–22 October 2015; pp. 1–4.

- Schoening, T.; Kuhn, T.; Nattkemper, T.W. Estimation of poly-metallic nodule coverage in benthic images. In Proceedings of the 41st Conference of the Underwater Mining Institute, Shanghai, China, 15–20 October 2012.

- Kuhn, T.; Rathke, M. Report on Visual Data Acquisition in the Field and Interpretation for SMnN; Blue Mining Project; Blue Mining Deliverable D1.31; European Commission Seventh Framework Programme; Blue Mining; European Commission: Brussels, Belgium, 2017; p. 34.

- Schoening, T.; Kuhn, T.; Jones, D.O.B.; Simon-Lledo, E.; Nattkemper, T.W. Fully automated image segmentation for benthic resource assessment of poly-metallic nodules. Methods Oceanogr. 2016, 15–16, 78–89.

- Cireşan, D.C.; Giusti, A.; Gambardella, L.M.; Schmidhuber, J. Deep neural networks segment neuronal membranes in electron microscopy images. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012; Volume 2, pp. 2843–2851.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 142–158.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Sohl-Dickstein, J.; Weiss, E.A.; Maheswaranathan, N.; Ganguli, S. Deep Unsupervised Learning using Nonequilibrium Thermodynamics. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; Volume 37, pp. 2256–2265.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 6 December 2020; pp. 6840–6851.

- Nichol, A.Q.; Dhariwal, P. Improved Denoising Diffusion Probabilistic Models. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18 July 2021; Volume 139, pp. 8162–8171.

- Freedman, D.A. Statistical Models: Theory and Practice, 2nd ed.; Cambridge University Press: Cambridge, UK, 2009.

| Statistics | Abundance (kg/m²) | Coverage (%) |
|---|---|---|
| Count | 17 | 17 |
| Average | 32.03 | 72.65 |
| Median | 31.4 | 74.4 |
| 20% Trimmed Mean | 31.92 | 72.85 |
| Standard Deviation | 5.97 | 7.96 |
| Coefficient of Variation | 18.65% | 10.95% |
| Minimum | 21.1 | 59 |
| Maximum | 42.8 | 88 |
| Range | 21.7 | 29 |
| Skewness | 0.04 | −0.12 |
| Kurtosis | −0.41 | −0.74 |
| p-value (Shapiro–Wilk test) | 0.495 | 0.616 |
| W (Shapiro–Wilk test) | 0.95 | 0.96 |
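Two rows of the statistics table are derived from the others: the coefficient of variation is the standard deviation divided by the average, and the range is the maximum minus the minimum. The short sketch below recomputes both from the tabulated values as a consistency check. The function names and the 0.02 tolerance are illustrative assumptions, and the small discrepancies arise because the published inputs are already rounded.

```python
def coefficient_of_variation(std_dev, mean):
    """Standard deviation expressed as a percentage of the mean."""
    return 100.0 * std_dev / mean


# Values copied from the statistics table above.
table = {
    "abundance": {"mean": 32.03, "std": 5.97, "min": 21.1, "max": 42.8,
                  "cv": 18.65, "range": 21.7},
    "coverage": {"mean": 72.65, "std": 7.96, "min": 59.0, "max": 88.0,
                 "cv": 10.95, "range": 29.0},
}


def check_row(row, tolerance=0.02):
    """True if the reported CV and range match the recomputed ones."""
    cv = coefficient_of_variation(row["std"], row["mean"])
    value_range = row["max"] - row["min"]
    return (abs(cv - row["cv"]) <= tolerance
            and abs(value_range - row["range"]) <= tolerance)


results = {name: check_row(row) for name, row in table.items()}
```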

| Method | Accuracy (%) | Precision (%) | Recall (%) | IoU (%) |
|---|---|---|---|---|
| U-Net [8] | 96.97 | 84.17 | 79.02 | 71.12 |
| Improved U-Net [8] | 96.80 | 82.94 | 79.97 | 71.38 |
| CGAN [8] | 95.91 | 79.28 | 80.10 | 67.51 |
| Mask R-CNN [8] | 97.24 | 83.51 | 85.58 | 74.73 |
| Ours | 96.94 | 87.30 | 86.49 | 76.67 |
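The four columns in the comparison table can all be derived from pixel-wise confusion counts between a predicted and a ground-truth nodule mask. The sketch below uses the standard definitions of these metrics. The surviving text does not state the paper's exact formulas, so treat the definitions, the function names, and the toy masks as assumptions.

```python
def confusion_counts(pred_mask, true_mask):
    """Count TP, FP, FN, TN over two equally sized binary masks (nodule = 1)."""
    tp = fp = fn = tn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def _percent(numerator, denominator):
    """Ratio as a percentage, returning 0.0 when the denominator is empty."""
    return 100.0 * numerator / denominator if denominator else 0.0


def segmentation_metrics(pred_mask, true_mask):
    """Accuracy, precision, recall and IoU (all in percent) for the nodule class."""
    tp, fp, fn, tn = confusion_counts(pred_mask, true_mask)
    return {
        "accuracy": _percent(tp + tn, tp + fp + fn + tn),
        "precision": _percent(tp, tp + fp),
        "recall": _percent(tp, tp + fn),
        "iou": _percent(tp, tp + fp + fn),
    }


# Hypothetical 2 x 4 masks, for illustration only.
pred = [[1, 1, 0, 0], [1, 0, 0, 0]]
true = [[1, 0, 0, 0], [1, 1, 0, 0]]
scores = segmentation_metrics(pred, true)
```

Accuracy counts correctly labeled background pixels while IoU does not, which is how a method can trail on accuracy yet lead on IoU, as the "Ours" row does relative to Mask R-CNN.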

| Coverage Type | Linear Regression Model | R | MAE | MAPE | RMSE |
|---|---|---|---|---|---|
| Coverage from photography | | 0.221 | 4.059 | 0.138 | 5.273 |
| Coverage from photography | | 0.220 | 4.062 | 0.138 | 5.277 |
| Coverage from photography | | 0.223 | 3.988 | 0.136 | 5.267 |
| Coverage from photography | | 0.222 | 3.981 | 0.135 | 5.269 |
| Coverage from box sampling | | 0.428 | 3.358 | 0.114 | 4.519 |
| Coverage from box sampling | | 0.423 | 3.425 | 0.116 | 4.539 |
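The error columns in the regression table (MAE, MAPE, RMSE) can be illustrated with a minimal sketch that fits a single-predictor least-squares line from coverage to abundance and scores its predictions. The model formulas themselves are not preserved in the table, so the one-variable form, the function names, and the sample values below are assumptions for illustration only. MAPE is returned as a fraction, matching the scale of the tabulated values.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def error_metrics(y_true, y_pred):
    """Mean absolute error, mean absolute percentage error (fraction), RMSE."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mape = sum(abs(e) / abs(t) for e, t in zip(errors, y_true)) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    return {"mae": mae, "mape": mape, "rmse": rmse}


# Hypothetical coverage (%) and abundance (kg/m^2) pairs, not the paper's data.
coverage = [60.0, 65.0, 70.0, 75.0, 80.0]
abundance = [25.0, 29.0, 30.0, 34.0, 37.0]

slope, intercept = fit_line(coverage, abundance)
predicted = [slope * c + intercept for c in coverage]
scores = error_metrics(abundance, predicted)
```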

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shao, M.; Song, W.; Zhao, X. Polymetallic Nodule Resource Assessment of Seabed Photography Based on Denoising Diffusion Probabilistic Models. J. Mar. Sci. Eng. 2023, 11, 1494. https://doi.org/10.3390/jmse11081494
